O'Reilly - Practical UNIX & Internet Sec... 7015KB

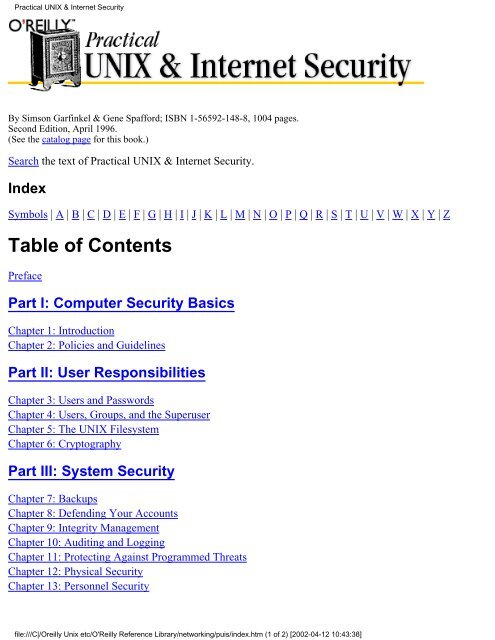

Practical UNIX & Internet Security<br />

By Simson Garfinkel & Gene Spafford; ISBN 1-56592-148-8, 1004 pages.<br />

Second Edition, April 1996.<br />

Index<br />

Symbols | A | B | C | D | E | F | G | H | I | J | K | L | M | N | O | P | Q | R | S | T | U | V | W | X | Y | Z<br />

Table of Contents<br />

Preface<br />

Part I: Computer Security Basics<br />

Chapter 1: Introduction<br />

Chapter 2: Policies and Guidelines<br />

Part II: User Responsibilities<br />

Chapter 3: Users and Passwords<br />

Chapter 4: Users, Groups, and the Superuser<br />

Chapter 5: The UNIX Filesystem<br />

Chapter 6: Cryptography<br />

Part III: System Security<br />

Chapter 7: Backups<br />

Chapter 8: Defending Your Accounts<br />

Chapter 9: Integrity Management<br />

Chapter 10: Auditing and Logging<br />

Chapter 11: Protecting Against Programmed Threats<br />

Chapter 12: Physical Security<br />

Chapter 13: Personnel Security<br />

Part IV: Network and Internet Security<br />

Chapter 14: Telephone Security<br />

Chapter 15: UUCP<br />

Chapter 16: TCP/IP Networks<br />

Chapter 17: TCP/IP Services<br />

Chapter 18: WWW Security<br />

Chapter 19: RPC, NIS, NIS+, and Kerberos<br />

Chapter 20: NFS<br />

Part V: Advanced Topics<br />

Chapter 21: Firewalls<br />

Chapter 22: Wrappers and Proxies<br />

Chapter 23: Writing Secure SUID and Network Programs<br />

Part VI: Handling Security Incidents<br />

Chapter 24: Discovering a Break-in<br />

Chapter 25: Denial of Service Attacks and Solutions<br />

Chapter 26: Computer Security and U.S. Law<br />

Chapter 27: Who Do You Trust?<br />

Part VII: Appendixes<br />

Appendix A: UNIX Security Checklist<br />

Appendix B: Important Files<br />

Appendix C: UNIX Processes<br />

Appendix D: Paper Sources<br />

Appendix E: Electronic Resources<br />

Appendix F: Organizations<br />

Appendix G: Table of IP Services<br />

Copyright © 1999 O'Reilly & Associates. All Rights Reserved.<br />

Index: Symbols and Numbers<br />

10BaseT networks<br />

12.3.1.2. Eavesdropping by Ethernet and 10Base-T<br />

16.1. Networking<br />

8mm video tape : 7.1.4. Guarding Against Media Failure<br />

@ (at sign)<br />

with chacl command : 5.2.5.2. HP-UX access control lists<br />

in xhost list : 17.3.21.3. The xhost facility<br />

! and mail command : 15.1.3. mail Command<br />

. (dot) directory : 5.1.1. Directories<br />

.. (dot-dot) directory : 5.1.1. Directories<br />

# (hash mark), disabling services with : 17.3. Primary UNIX Network Services<br />

+ (plus sign)<br />

in hosts.equiv file : 17.3.18.5. Searching for .rhosts files<br />

in NIS<br />

19.4. Sun's Network Information Service (NIS)<br />

19.4.4.6. NIS is confused about "+"<br />

/ (slash)<br />

IFS separator : 11.5.1.2. IFS attacks<br />

root directory<br />

5.1.1. Directories<br />

(see also root directory)<br />

~ (tilde)<br />

in automatic backups : 18.2.3.5. Beware stray CGI scripts<br />

for home directory : 11.5.1.3. $HOME attacks<br />

Index: A<br />

absolute pathnames : 5.1.3. Current Directory and Paths<br />

access<br />

/etc/exports file : 20.2.1.1. /etc/exports<br />

levels, NIS+ : 19.5.4. Using NIS+<br />

by non-citizens : 26.4.1. Munitions Export<br />

tradition of open : 1.4.1. Expectations<br />

via Web : 18.2.2.2. Additional configuration issues<br />

access control : 2.1. Planning Your Security Needs<br />

ACLs<br />

5.2.5. Access Control Lists<br />

5.2.5.2. HP-UX access control lists<br />

17.3.13. Network News Transport Protocol (NNTP) (TCP Port 119)<br />

anonymous FTP : 17.3.2.1. Using anonymous FTP<br />

Internet servers : 17.2. Controlling Access to Servers<br />

monitoring employee access : 13.2.4. Auditing Access<br />

physical : 12.2.3. Physical Access<br />

restricted filesystems<br />

8.1.5. Restricted Filesystem<br />

8.1.5.2. Checking new software<br />

restricting data availability : 2.1. Planning Your Security Needs<br />

USERFILE (UUCP)<br />

15.4.1. USERFILE: Providing Remote File Access<br />

15.4.2.1. Some bad examples<br />

Web server files<br />

18.3. Controlling Access to Files on Your Server<br />

18.3.3. Setting Up Web Users and Passwords<br />

X Window System<br />

17.3.21.2. X security<br />

17.3.21.3. The xhost facility<br />

access control lists : (see ACLs)<br />

access.conf file : 18.3.1. The access.conf and .htaccess Files<br />

access() : 23.2. Tips on Avoiding Security-related Bugs<br />

access_log file<br />

10.3.5. access_log Log File<br />

18.4.2. Eavesdropping Through Log Files<br />

with refer_log file : 18.4.2. Eavesdropping Through Log Files<br />

accidents<br />

12.2.2. Preventing Accidents<br />

(see also natural disasters)<br />

accounting process<br />

10.2. The acct/pacct Process Accounting File<br />

10.2.3. messages Log File<br />

(see also auditing)<br />

accounts : 3.1. Usernames<br />

aliases for : 8.8.9. Account Names Revisited: Using Aliases for Increased Security<br />

changing login shell<br />

8.4.2. Changing the Account's Login Shell<br />

8.7.1. Integrating One-time Passwords with UNIX<br />

created by intruders : 24.4.1. New Accounts<br />

default : 8.1.2. Default Accounts<br />

defense checklist : A.1.1.7. Chapter 8: Defending Your Accounts<br />

dormant<br />

8.4. Managing Dormant Accounts<br />

8.4.3. Finding Dormant Accounts<br />

expiring old : 8.4.3. Finding Dormant Accounts<br />

group : 8.1.6. Group Accounts<br />

importing to NIS server<br />

19.4.1. Including or excluding specific accounts:<br />

19.4.4.2. Using netgroups to limit the importing of accounts<br />

Joes<br />

3.6.2. Smoking Joes<br />

8.8.3.1. Joetest: a simple password cracker<br />

locking automatically : 3.3. Entering Your Password<br />

logging changes to : 10.7.2.1. Exception and activity reports<br />

multiple, same UID : 4.1.2. Multiple Accounts with the Same UID<br />

names for : (see usernames)<br />

restricted, with rsh : 8.1.4.5. How to set up a restricted account with rsh<br />

restricting FTP from : 17.3.2.5. Restricting FTP with the standard UNIX FTP server<br />

running single command : 8.1.3. Accounts That Run a Single Command<br />

without passwords : 8.1.1. Accounts Without Passwords<br />

acct file : 10.2. The acct/pacct Process Accounting File<br />

acctcom program<br />

10.2. The acct/pacct Process Accounting File<br />

10.2.2. Accounting with BSD<br />

ACEs : (see ACLs)<br />

ACK bit : 16.2.4.2. TCP<br />

acledit command : 5.2.5.1. AIX Access Control Lists<br />

aclget, aclput commands : 5.2.5.1. AIX Access Control Lists<br />

ACLs (access control lists)<br />

5.2.5. Access Control Lists<br />

5.2.5.2. HP-UX access control lists<br />

errors in : 5.2.5.1. AIX Access Control Lists<br />

NNTP with : 17.3.13. Network News Transport Protocol (NNTP) (TCP Port 119)<br />

ACM (Association for Computing Machinery) : F.1.1. Association for Computing Machinery (ACM)<br />

active FTP : 17.3.2.2. Passive vs. active FTP<br />

aculog file : 10.3.1. aculog File<br />

adaptive modems : (see modems)<br />

adb debugger<br />

19.3.1.3. Setting the window<br />

C.4. The kill Command<br />

add-on functionality : 1.4.3. Add-On Functionality Breeds Problems<br />

addresses<br />

CIDR : 16.2.1.3. CIDR addresses<br />

commands embedded in : 15.7. Early Security Problems with UUCP<br />

Internet<br />

16.2.1. Internet Addresses<br />

16.2.1.3. CIDR addresses<br />

IP : (see IP addresses)<br />

Adleman, Leonard<br />

6.4.2. Summary of Public Key Systems<br />

6.4.6. RSA and Public Key Cryptography<br />

.Admin directory : 10.3.4. uucp Log Files<br />

administration : (see system administration)<br />

adult material : 26.4.5. Pornography and Indecent Material<br />

Advanced Network & Services (ANS) : F.3.4.2. ANS customers<br />

AFCERT : F.3.4.41. U.S. Air Force<br />

aftpd server : 17.3.2.4. Setting up an FTP server<br />

agent (user) : 4.1. Users and Groups<br />

agent_log file : 18.4.2. Eavesdropping Through Log Files<br />

aging : (see expiring)<br />

air ducts : 12.2.3.2. Entrance through air ducts<br />

air filters : 12.2.1.3. Dust<br />

Air Force Computer Emergency Response Team (AFCERT) : F.3.4.41. U.S. Air Force<br />

AIX<br />

3.3. Entering Your Password<br />

8.7.1. Integrating One-time Passwords with UNIX<br />

access control lists : 5.2.5.1. AIX Access Control Lists<br />

tftp access : 17.3.7. Trivial File Transfer Protocol (TFTP) (UDP Port 69)<br />

trusted path : 8.5.3.1. Trusted path<br />

alarms : (see detectors)<br />

aliases<br />

8.8.9. Account Names Revisited: Using Aliases for Increased Security<br />

11.1.2. Back Doors and Trap Doors<br />

11.5.3.3. /usr/lib/aliases, /etc/aliases, /etc/sendmail/aliases, aliases.dir, or aliases.pag<br />

decode : 17.3.4.2. Using sendmail to receive email<br />

mail : 17.3.4. Simple Mail Transfer Protocol (SMTP) (TCP Port 25)<br />

aliases file : 11.5.3.3. /usr/lib/aliases, /etc/aliases, /etc/sendmail/aliases, aliases.dir, or aliases.pag<br />

AllowOverride option : 18.3.2. Commands Within the Block<br />

American Society for Industrial Security (ASIS) : F.1.2. American Society for Industrial Security (ASIS)<br />

ancestor directories : 9.2.2.2. Ancestor directories<br />

ANI schemes : 14.6. Additional Security for Modems<br />

animals : 12.2.1.7. Bugs (biological)<br />

anlpasswd package : 8.8.2. Constraining Passwords<br />

anon option for /etc/exports : 20.2.1.1. /etc/exports<br />

anonymous FTP<br />

4.1. Users and Groups<br />

17.3.2.1. Using anonymous FTP<br />

17.3.2.6. Setting up anonymous FTP with the standard UNIX FTP server<br />

and HTTP : 18.2.4.1. Beware mixing HTTP with anonymous FTP<br />

ANS (Advanced Network & Services, Inc.) : F.3.4.2. ANS customers<br />

ANSI C standards : 1.4.2. Software Quality<br />

answer mode : 14.3.1. Originate and Answer<br />

answer testing : 14.5.3.2. Answer testing<br />

answerback terminal mode : 11.1.4. Trojan Horses<br />

APOP option (POP) : 17.3.10. Post Office Protocol (POP) (TCP Ports 109 and 110)<br />

Apple CORES (Computer Response Squad) : F.3.4.3. Apple Computer worldwide R&D community<br />

Apple Macintosh, Web server on : 18.2. Running a Secure Server<br />

applets : 11.1.5. Viruses<br />

application-level encryption : 16.3.1. Link-level Security<br />

applications, CGI : (see CGI, scripts)<br />

ar program : 7.4.2. Simple Archives<br />

architecture, room : 12.2.3. Physical Access<br />

archiving information<br />

7.1.1.1. A taxonomy of computer failures<br />

(see also logging)<br />

arguments, checking : 23.2. Tips on Avoiding Security-related Bugs<br />

ARPA (Advanced Research Projects Agency)<br />

1.3. History of UNIX<br />

(see also UNIX, history of)<br />

ARPANET network : 16.1.1. The Internet<br />

ASIS (American Society for Industrial Security) : F.1.2. American Society for Industrial Security (ASIS)<br />

assert macro : 23.2. Tips on Avoiding Security-related Bugs<br />

assessing risks<br />

2.2. Risk Assessment<br />

2.2.2. Review Your Risks<br />

2.5.3. Final Words: Risk Management Means Common Sense<br />

assets, identifying : 2.2.1.1. Identifying assets<br />

ASSIST : F.3.4.42. U.S. Department of Defense<br />

Association for Computing Machinery (ACM) : F.1.1. Association for Computing Machinery (ACM)<br />

asymmetric key cryptography : 6.4. Common Cryptographic Algorithms<br />

asynchronous systems : 19.2. Sun's Remote Procedure Call (RPC)<br />

Asynchronous Transfer Mode (ATM) : 16.2. IPv4: The Internet Protocol Version 4<br />

at program<br />

11.5.3.4. The at program<br />

25.2.1.2. System overload attacks<br />

AT&T System V : (see System V UNIX)<br />

Athena : (see Kerberos system)<br />

atime<br />

5.1.2. Inodes<br />

5.1.5. File Times<br />

ATM (Asynchronous Transfer Mode) : 16.2. IPv4: The Internet Protocol Version 4<br />

attacks : (see threats)<br />

audio device : 23.8. Picking a Random Seed<br />

audit IDs<br />

4.3.3. Other IDs<br />

10.1. The Basic Log Files<br />

auditing<br />

10. Auditing and Logging<br />

(see also logging)<br />

C2 audit : 10.1. The Basic Log Files<br />

checklist for : A.1.1.9. Chapter 10: Auditing and Logging<br />

employee access : 13.2.4. Auditing Access<br />

login times : 10.1.1. lastlog File<br />

system activity : 2.1. Planning Your Security Needs<br />

user activity : 4.1.2. Multiple Accounts with the Same UID<br />

who is logged in<br />

10.1.2. utmp and wtmp Files<br />

10.1.2.1. su command and /etc/utmp and /var/adm/wtmp files<br />

AUTH_DES authentication : 19.2.2.3. AUTH_DES<br />

AUTH_KERB authentication : 19.2.2.4. AUTH_KERB<br />

AUTH_NONE authentication : 19.2.2.1. AUTH_NONE<br />

AUTH_UNIX authentication : 19.2.2.2. AUTH_UNIX<br />

authd service : 23.3. Tips on Writing Network Programs<br />

authdes_win variable : 19.3.1.3. Setting the window<br />

authentication : 3.2.3. Authentication<br />

ID services : 16.3.3. Authentication<br />

Kerberos<br />

19.6.1. Kerberos Authentication<br />

19.6.1.4. Kerberos 4 vs. Kerberos 5<br />

of logins : 17.3.5. TACACS (UDP Port 49)<br />

message digests<br />

6.5.2. Using Message Digests<br />

9.2.3. Checksums and Signatures<br />

23.5.1. Use Message Digests for Storing Passwords<br />

NIS+ : 19.5.4. Using NIS+<br />

RPCs<br />

19.2.2. RPC Authentication<br />

19.2.2.4. AUTH_KERB<br />

Secure RPC : 19.3.1. Secure RPC Authentication<br />

security standard for : 2.4.2. Standards<br />

for Web use : 18.3.3. Setting Up Web Users and Passwords<br />

xhost facility : 17.3.21.3. The xhost facility<br />

authenticators : 3.1. Usernames<br />

AuthGroupFile option : 18.3.2. Commands Within the Block<br />

authors of programmed threats : 11.3. Authors<br />

AuthRealm option : 18.3.2. Commands Within the Block<br />

AuthType option : 18.3.2. Commands Within the Block<br />

AuthUserFile option : 18.3.2. Commands Within the Block<br />

Auto_Mounter table (NIS+) : 19.5.3. NIS+ Tables<br />

autologout shell variable : 12.3.5.1. Built-in shell autologout<br />

Automated Systems Incident Response Capability (NASA) : F.3.4.24. NASA: NASA-wide<br />

automatic<br />

11.5.3. Abusing Automatic Mechanisms<br />

(see also at program; cron file)<br />

account lockout : 3.3. Entering Your Password<br />

backups system : 7.3.2. Building an Automatic Backup System<br />

cleanup scripts (UUCP) : 15.6.2. Automatic Execution of Cleanup Scripts<br />

directory listings (Web) : 18.2.2.2. Additional configuration issues<br />

disabling of dormant accounts : 8.4.3. Finding Dormant Accounts<br />

logging out : 12.3.5.1. Built-in shell autologout<br />

mechanisms, abusing<br />

11.5.3. Abusing Automatic Mechanisms<br />

11.5.3.6. Other files<br />

password generation : 8.8.4. Password Generators<br />

power cutoff : (see detectors)<br />

sprinkler systems : 12.2.1.1. Fire<br />

wtmp file pruning : 10.1.3.1. Pruning the wtmp file<br />

auxiliary (printer) ports : 12.3.1.4. Auxiliary ports on terminals<br />

awareness, security : (see security, user awareness of)<br />

awk scripts<br />

11.1.4. Trojan Horses<br />

11.5.1.2. IFS attacks<br />

Index: B<br />

back doors<br />

2.5. The Problem with Security Through Obscurity<br />

6.2.3. Cryptographic Strength<br />

11.1. Programmed Threats: Definitions<br />

11.1.2. Back Doors and Trap Doors<br />

11.5. Protecting Yourself<br />

27.1.2. Trusting Trust<br />

in MUDs and IRCs : 17.3.23. Other TCP Ports: MUDs and Internet Relay Chat (IRC)<br />

background checks, employee : 13.1. Background Checks<br />

backquotes in CGI input<br />

18.2.3.2. Testing is not enough!<br />

18.2.3.3. Sending mail<br />

BACKSPACE key : 3.4. Changing Your Password<br />

backup program : 7.4.3. Specialized Backup Programs<br />

backups<br />

7. Backups<br />

7.4.7. inode Modification Times<br />

9.1.2. Read-only Filesystems<br />

24.2.2. What to Do When You Catch Somebody<br />

across networks : 7.4.5. Backups Across the Net<br />

for archiving information : 7.1.1.1. A taxonomy of computer failures<br />

automatic<br />

7.3.2. Building an Automatic Backup System<br />

18.2.3.5. Beware stray CGI scripts<br />

checklist for : A.1.1.6. Chapter 7: Backups<br />

criminal investigations and : 26.2.4. Hazards of Criminal Prosecution<br />

of critical files<br />

7.3. Backing Up System Files<br />

7.3.2. Building an Automatic Backup System<br />

encrypting<br />

7.4.4. Encrypting Your Backups<br />

12.3.2.4. Backup encryption<br />

hardcopy : 24.5.1. Never Trust Anything Except Hardcopy<br />

keeping secure<br />

2.4.2. Standards<br />

2.4.3. Guidelines<br />

7.1.6. Security for Backups<br />

7.1.6.3. Data security for backups<br />

26.2.6. Other Tips<br />

laws concerning : 7.1.7. Legal Issues<br />

of log files : 10.2.2. Accounting with BSD<br />

retention of<br />

7.1.5. How Long Should You Keep a Backup?<br />

7.2. Sample Backup Strategies<br />

7.2.5. Deciding upon a Backup Strategy<br />

rotating media : 7.1.3. Types of Backups<br />

software for<br />

7.4. Software for Backups<br />

7.4.7. inode Modification Times<br />

commercial : 7.4.6. Commercial Offerings<br />

special programs for : 7.4.3. Specialized Backup Programs<br />

strategies for<br />

7.2. Sample Backup Strategies<br />

7.2.5. Deciding upon a Backup Strategy<br />

10.8. Managing Log Files<br />

theft of<br />

12.3.2. Protecting Backups<br />

12.3.2.4. Backup encryption<br />

verifying : 12.3.2.1. Verify your backups<br />

zero-filled bytes in : 7.4. Software for Backups<br />

bacteria<br />

11.1. Programmed Threats: Definitions<br />

11.1.7. Bacteria and Rabbits<br />

BADSU attempts : (see sulog file)<br />

Baldwin, Robert : 6.6.1.1. The crypt program<br />

bang (!) and mail command : 15.1.3. mail Command<br />

Bash shell (bsh) : 8.1.4.4. No restricted bash<br />

Basic Networking Utilities : (see BNU UUCP)<br />

bastion hosts : 21.1.3. Anatomy of a Firewall<br />

batch command : 25.2.1.2. System overload attacks<br />

batch jobs : (see cron file)<br />

baud : 14.1. Modems: Theory of Operation<br />

bell (in Swatch program) : 10.6.2. The Swatch Configuration File<br />

Bellcore : F.3.4.5. Bellcore<br />

Berkeley UNIX : (see BSD UNIX)<br />

Berkeley's sendmail : (see sendmail)<br />

bidirectionality<br />

14.1. Modems: Theory of Operation<br />

14.4.1. One-Way Phone Lines<br />

bigcrypt algorithm : 8.6.4. Crypt16() and Other Algorithms<br />

/bin directory<br />

11.1.5. Viruses<br />

11.5.1.1. PATH attacks<br />

backing up : 7.1.2. What Should You Back Up?<br />

/bin/csh : (see csh)<br />

/bin/ksh : (see ksh)<br />

/bin/login : (see login program)<br />

/bin/passwd : (see passwd command)<br />

/bin/sh : (see sh)<br />

in restricted filesystems : 8.1.5. Restricted Filesystem<br />

binary code : 11.1.5. Viruses<br />

bind system call<br />

16.2.6.1. DNS under UNIX<br />

17.1.3. The /etc/inetd Program<br />

biological threats : 12.2.1.7. Bugs (biological)<br />

block devices : 5.6. Device Files<br />

block send commands : 11.1.4. Trojan Horses<br />

blocking systems : 19.2. Sun's Remote Procedure Call (RPC)<br />

BNU UUCP<br />

15.5. Security in BNU UUCP<br />

15.5.3. uucheck: Checking Your Permissions File<br />

Boeing CERT : F.3.4.5. Bellcore<br />

bogusns directive : 17.3.6.2. DNS nameserver attacks<br />

boot viruses : 11.1.5. Viruses<br />

Bootparams table (NIS+) : 19.5.3. NIS+ Tables<br />

Bourne shell<br />

C.5.3. Running the User's Shell<br />

(see also sh program; shells)<br />

(see sh)<br />

Bourne shell (sh) : C.5.3. Running the User's Shell<br />

bps (bits per second) : 14.1. Modems: Theory of Operation<br />

BREAK key : 14.5.3.2. Answer testing<br />

breakins<br />

checklist for : A.1.1.23. Chapter 24: Discovering a Break-in<br />

legal options following : 26.1. Legal Options After a Break-in<br />

responding to<br />

24. Discovering a Break-in<br />

24.7. Damage Control<br />

resuming operation after : 24.6. Resuming Operation<br />

broadcast storm : 25.3.2. Message Flooding<br />

browsers : (see Web browsers)<br />

BSD UNIX<br />

Which UNIX System?<br />

1.3. History of UNIX<br />

accounting with : 10.2.2. Accounting with BSD<br />

Fast Filesystem (FFS) : 25.2.2.6. Reserved space<br />

groups and : 4.1.3.3. Groups and BSD or SVR4 UNIX<br />

immutable files : 9.1.1. Immutable and Append-Only Files<br />

modems and : 14.5.1. Hooking Up a Modem to Your Computer<br />

programming references : D.1.11. UNIX Programming and System Administration<br />

ps command with : C.1.2.2. Listing processes with Berkeley-derived versions of UNIX<br />

published resources for : D.1. UNIX Security References<br />

restricted shells : 8.1.4.2. Restricted shells under Berkeley versions<br />

SUID files, list of : B.3. SUID and SGID Files<br />

sulog log under : 4.3.7.1. The sulog under Berkeley UNIX<br />

utmp and wtmp files : 10.1.2. utmp and wtmp Files<br />

BSD/OS (operating system) : 1.3. History of UNIX<br />

bsh (Bash shell) : 8.1.4.4. No restricted bash<br />

BSI/GISA : F.3.4.15. Germany: government institutions<br />

buffers<br />

checking boundaries : 23.2. Tips on Avoiding Security-related Bugs<br />

for editors : 11.1.4. Trojan Horses<br />

bugs<br />

Preface<br />

1.1. What Is Computer Security?<br />

1.4.2. Software Quality<br />

23.1.2.1. What they found<br />

27.2.3. Buggy Software<br />

27.2.5. Security Bugs that Never Get Fixed<br />

(see also security holes)<br />

Bugtraq mailing list : E.1.3.3. Bugtraq<br />

hacker challenges : 27.2.4. Hacker Challenges<br />

hardware : 27.2.1. Hardware Bugs<br />

.htaccess file : 18.3.1. The access.conf and .htaccess Files<br />

keeping secret : 2.5. The Problem with Security Through Obscurity<br />

tips on avoiding : 23.2. Tips on Avoiding Security-related Bugs<br />

bugs (biological) : 12.2.1.7. Bugs (biological)<br />

bulk erasers : 12.3.2.3. Sanitize your media before disposal<br />

byte-by-byte comparisons<br />

9.2.1. Comparison Copies<br />

9.2.1.3. rdist<br />

bytes, zero-filled : 7.4. Software for Backups<br />

Index: C<br />

C programming language<br />

1.3. History of UNIX<br />

23.2. Tips on Avoiding Security-related Bugs<br />

-Wall compiler option : 23.2. Tips on Avoiding Security-related Bugs<br />

C shell : (see csh)<br />

C2 audit : 10.1. The Basic Log Files<br />

cables, network<br />

12.2.4.2. Network cables<br />

12.3.1.5. Fiber optic cable<br />

cutting : 25.1. Destructive Attacks<br />

tampering detectors for : 12.3.1.1. Wiretapping<br />

wiretapping : 12.3.1.1. Wiretapping<br />

cache, nameserver : 16.3.2. Security and Nameservice<br />

caching : 5.6. Device Files<br />

Caesar Cipher : 6.4.3. ROT13: Great for Encoding Offensive Jokes<br />

calculating costs of losses : 2.3.1. The Cost of Loss<br />

call forwarding : 14.5.4. Physical Protection of Modems<br />

Call Trace : 24.2.4. Tracing a Connection<br />

CALLBACK= command : 15.5.2. Permissions Commands<br />

callbacks<br />

14.4.2.<br />

14.6. Additional Security for Modems<br />

BNU UUCP : 15.5.2. Permissions Commands<br />

Version 2 UUCP : 15.4.1.5. Requiring callback<br />

Caller-ID (CNID)<br />

14.4.3. Caller-ID (CNID)<br />

14.6. Additional Security for Modems<br />

24.2.4. Tracing a Connection<br />

Canada, export control in : 6.7.2. Cryptography and Export Controls<br />

carbon monoxide : 12.2.1.2. Smoke<br />

caret (^) in encrypted messages : 6.2. What Is Encryption?<br />

case in usernames : 3.1. Usernames<br />

cat command<br />

3.2.2. The /etc/passwd File and Network Databases<br />

15.4.3. L.cmds: Providing Remote Command Execution<br />

-ve option : 5.5.4.1. The ncheck command<br />

-v option : 24.4.1.7. Hidden files and directories<br />

cat-passwd command : 3.2.2. The /etc/passwd File and Network Databases<br />

CBC (cipher block chaining)<br />

6.4.4.2. DES modes<br />

6.6.2. des: The Data Encryption Standard<br />

CBW (Crypt Breaker's Workbench) : 6.6.1.1. The crypt program<br />

CCTA IT Security & Infrastructure Group : F.3.4.39. UK: other government departments and agencies<br />

CD-ROM : 9.1.2. Read-only Filesystems<br />

CDFs (context-dependent files)<br />

5.9.2. Context-Dependent Files<br />

24.4.1.7. Hidden files and directories<br />

ceilings, dropped : 12.2.3.1. Raised floors and dropped ceilings<br />

cellular telephones : 12.2.1.8. Electrical noise<br />

CERCUS (Computer Emergency Response Committee for Unclassified Systems) : F.3.4.36. TRW network area and system administrators<br />

Cerf, Vint : 16.2. IPv4: The Internet Protocol Version 4<br />

CERN : E.4.1. CERN HTTP Daemon<br />

CERT (Computer Emergency Response Team)<br />

6.5.2. Using Message Digests<br />

27.3.5. Response Personnel?<br />

F.3.4.1. All Internet sites<br />

CERT-NL (Netherlands) : F.3.4.25. Netherlands: SURFnet-connected sites<br />

mailing list for : E.1.3.4. CERT-advisory<br />

CFB (cipher feedback) : 6.4.4.2. DES modes<br />

CGI (Common Gateway Interface) : 18.1. Security and the World Wide Web<br />

scripts<br />

18.2. Running a Secure Server<br />

18.2.3. Writing Secure CGI Scripts and Programs<br />

18.2.4.1. Beware mixing HTTP with anonymous FTP<br />

cgi-bin directory : 18.2.2. Understand Your Server's Directory Structure<br />

chacl command : 5.2.5.2. HP-UX access control lists<br />

-f option : 5.2.5.2. HP-UX access control lists<br />

-r option : 5.2.5.2. HP-UX access control lists<br />

change detection<br />

9.2. Detecting Change<br />

9.3. A Final Note<br />

character devices : 5.6. Device Files<br />

chat groups, harassment via : 26.4.7. Harassment, Threatening Communication, and Defamation<br />

chdir command<br />

23.2. Tips on Avoiding <strong>Sec</strong>urity-related Bugs<br />

25.2.2.8. Tree-structure attacks<br />

checklists for detecting changes<br />

9.2.2. Checklists and Metadata<br />

9.2.3. Checksums and Signatures<br />

checksums<br />

6.5.5.1. Checksums<br />

9.2.3. Checksums and Signatures<br />

Chesson, Greg : 15.2. Versions of UUCP<br />

chfn command : 8.2. Monitoring File Format<br />

chgrp command : 5.8. chgrp: Changing a File's Group<br />

child processes : C.2. Creating Processes<br />

chkey command : 19.3.1.1. Proving your identity<br />

chmod command<br />

5.2.1. chmod: Changing a File's Permissions<br />

5.2.4. Using Octal File Permissions<br />

8.3. Restricting Logins<br />

-A option : 5.2.5.2. HP-UX access control lists<br />

-f option : 5.2.1. chmod: Changing a File's Permissions<br />

-h option : 5.2.1. chmod: Changing a File's Permissions<br />

-R option : 5.2.1. chmod: Changing a File's Permissions<br />

chokes : (see firewalls)<br />

chown command<br />

5.7. chown: Changing a File's Owner<br />

23.2. Tips on Avoiding <strong>Sec</strong>urity-related Bugs<br />

chroot system call<br />

8.1.5. Restricted Filesystem<br />

8.1.5.2. Checking new software<br />

11.1.4. Trojan Horses<br />

23.4.1. Using chroot()<br />

with anonymous FTP : 17.3.2.6. Setting up anonymous FTP with the standard <strong>UNIX</strong> FTP server<br />

chrootuid daemon : E.4.2. chrootuid<br />

chsh command : 8.7.1. Integrating One-time Passwords with <strong>UNIX</strong><br />

CIAC (Computer Incident Advisory Capability) : F.3.4.43. U.S. Department of Energy sites, Energy Sciences Network (ESnet), and DOE contractors<br />

CIDR (Classless InterDomain Routing)<br />

16.2.1.1. IP networks<br />

16.2.1.3. CIDR addresses<br />

cigarettes : 12.2.1.2. Smoke<br />

cipher<br />

6.4.3. ROT13: Great for Encoding Offensive Jokes<br />

(see also cryptography; encryption)<br />

block chaining (CBC)<br />

6.4.4.2. DES modes<br />

6.6.2. des: The Data Encryption Standard<br />

ciphertext<br />

6.2. What Is Encryption?<br />

8.6.1. The crypt() Algorithm<br />

feedback (CFB) : 6.4.4.2. DES modes<br />

CISCO : F.3.4.8. CISCO Systems<br />

civil actions (lawsuits) : 26.3. Civil Actions<br />

classified data and breakins<br />

26.1. Legal Options After a Break-in<br />

26.2.2. Federal Jurisdiction<br />

Classless InterDomain Routing (CIDR)<br />

16.2.1.1. IP networks<br />

16.2.1.3. CIDR addresses<br />

clear text : 8.6.1. The crypt() Algorithm<br />

Clear to Send (CTS) : 14.3. The RS-232 Serial Protocol<br />

client flooding : 16.3.2. <strong>Sec</strong>urity and Nameservice<br />

client/server model : 16.2.5. Clients and Servers<br />

clients, NIS : (see NIS)<br />

clock, system<br />

5.1.5. File Times<br />

17.3.14. Network Time Protocol (NTP) (UDP Port 123)<br />

for random seeds : 23.8. Picking a Random Seed<br />

resetting : 9.2.3. Checksums and Signatures<br />

<strong>Sec</strong>ure RPC timestamp : 19.3.1.3. Setting the window<br />

clogging : 25.3.4. Clogging<br />

CMW (Compartmented-Mode Workstation) : "<strong>Sec</strong>ure" Versions of <strong>UNIX</strong><br />

CNID (Caller-ID)<br />

14.4.3. Caller-ID (CNID)<br />

14.6. Additional <strong>Sec</strong>urity for Modems<br />

24.2.4. Tracing a Connection<br />

CO2 system (for fires) : 12.2.1.1. Fire<br />

COAST (Computer Operations, Audit, and <strong>Sec</strong>urity Technology)<br />

E.3.2. COAST<br />

E.4. Software Resources<br />

code breaking : (see cryptography)<br />

codebooks : 8.7.3. Code Books<br />

CodeCenter : 23.2. Tips on Avoiding <strong>Sec</strong>urity-related Bugs<br />

cold, extreme : 12.2.1.6. Temperature extremes<br />

command shells : (see shells)<br />

commands<br />

8.1.3. Accounts That Run a Single Command<br />

(see also under specific command name)<br />

accounts running single : 8.1.3. Accounts That Run a Single Command<br />

in addresses : 15.7. Early <strong>Sec</strong>urity Problems with UUCP<br />

editor, embedded : 11.5.2.7. Other initializations<br />

remote execution of<br />

15.1.2. uux Command<br />

15.4.3. L.cmds: Providing Remote Command Execution<br />

17.3.17. rexec (TCP Port 512)<br />

running simultaneously<br />

23.2. Tips on Avoiding <strong>Sec</strong>urity-related Bugs<br />

(see also multitasking)<br />

commands in &lt;Directory&gt; blocks : 18.3.2. Commands Within the &lt;Directory&gt; Block<br />

COMMANDS= command : 15.5.2. Permissions Commands<br />

commenting out services : 17.3. Primary <strong>UNIX</strong> Network Services<br />

comments in BNU UUCP : 15.5.1.3. A Sample Permissions file<br />

Common Gateway Interface : (see CGI)<br />

communications<br />

modems : (see modems)<br />

national telecommunications : 26.2.2. Federal Jurisdiction<br />

threatening : 26.4.7. Harassment, Threatening Communication, and Defamation<br />

comparison copies<br />

9.2.1. Comparison Copies<br />

9.2.1.3. rdist<br />

compress program : 6.6.1.2. Ways of improving the security of crypt<br />

Compressed SLIP (CSLIP) : 16.2. IPv4: The <strong>Internet</strong> Protocol Version 4<br />

Computer Emergency Response Committee for Unclassified Systems (CERCUS) : F.3.4.36. TRW network area and system administrators<br />

Computer Emergency Response Team : (see CERT)<br />

Computer Incident Advisory Capability (CIAC) : F.3.4.43. U.S. Department of Energy sites, Energy Sciences Network (ESnet), and DOE contractors<br />

computer networks : 1.4.3. Add-On Functionality Breeds Problems<br />

Computer <strong>Sec</strong>urity Institute (CSI) : F.1.3. Computer <strong>Sec</strong>urity Institute (CSI)<br />

computers<br />

assigning UUCP name : 15.5.2. Permissions Commands<br />

auxiliary ports : 12.3.1.4. Auxiliary ports on terminals<br />

backing up individual : 7.2.1. Individual Workstation<br />

contacting administrator of : 24.2.4.2. How to contact the system administrator of a computer you don't know<br />

cutting cables to : 25.1. Destructive Attacks<br />

failure of : 7.1.1.1. A taxonomy of computer failures<br />

hostnames for<br />

16.2.3. Hostnames<br />

16.2.3.1. The /etc/hosts file<br />

modems : (see modems)<br />

multiple screens : 12.3.4.3. Multiple screens<br />

multiple suppliers of : 18.6. Dependence on Third Parties<br />

non-citizen access to : 26.4.1. Munitions Export<br />

operating after breakin : 24.6. Resuming Operation<br />

portable : 12.2.6.3. Portables<br />

remote command execution : 17.3.17. rexec (TCP Port 512)<br />

running NIS+ : 19.5.5. NIS+ Limitations<br />

screen savers : 12.3.5.2. X screen savers<br />

security<br />

culture of : D.1.10. Understanding the Computer <strong>Sec</strong>urity "Culture"<br />

four steps toward : 2.4.4.7. Defend in depth<br />

physical : 12.2.6.1. Physically secure your computer<br />

references for : D.1.7. General Computer <strong>Sec</strong>urity<br />

resources on : D.1.1. Other Computer References<br />

seized as evidence : 26.2.4. Hazards of Criminal Prosecution<br />

transferring files between : 15.1.1. uucp Command<br />

trusting<br />

27.1. Can you Trust Your Computer?<br />

27.1.3. What the Superuser Can and Cannot Do<br />

unattended<br />

12.3.5. Unattended Terminals<br />

12.3.5.2. X screen savers<br />

unplugging : 24.2.5. Getting Rid of the Intruder<br />

vacuums for : 12.2.1.3. Dust<br />

vandalism of : (see vandalism)<br />

virtual : (see Telnet utility)<br />

computing base (TCB) : 8.5.3.2. Trusted computing base<br />

conf directory : 18.2.2.1. Configuration files<br />

conf/access.conf : (see access.conf file)<br />

conf/srm.conf file : 18.3.1. The access.conf and .htaccess Files<br />

confidentiality : (see encryption; privacy)<br />

configuration<br />

errors : 9.1. Prevention<br />

files : 11.5.3. Abusing Automatic Mechanisms<br />

logging : 10.7.2.2. Informational material<br />

NCSA web server : 18.2.2.1. Configuration files<br />

UUCP version differences : 15.2. Versions of UUCP<br />

simplifying management of : 9.1.2. Read-only Filesystems<br />

connections<br />

hijacking : 16.3. IP <strong>Sec</strong>urity<br />

laundering : 16.1.1.1. Who is on the <strong>Internet</strong>?<br />

tracing<br />

24.2.4. Tracing a Connection<br />

24.2.4.2. How to contact the system administrator of a computer you don't know<br />

unplugging : 24.2.5. Getting Rid of the Intruder<br />

connectors, network : 12.2.4.3. Network connectors<br />

consistency of software : 2.1. Planning Your <strong>Sec</strong>urity Needs<br />

console device : 5.6. Device Files<br />

CONSOLE variable : 8.5.1. <strong>Sec</strong>ure Terminals<br />

constraining passwords : 8.8.2. Constraining Passwords<br />

consultants : 27.3.4. Your Consultants?<br />

context-dependent files (CDFs)<br />

5.9.2. Context-Dependent Files<br />

24.4.1.7. Hidden files and directories<br />

control characters in usernames : 3.1. Usernames<br />

cookies<br />

17.3.21.4. Using Xauthority magic cookies<br />

18.2.3.1. Do not trust the user's browser!<br />

COPS (Computer Oracle and Password System)<br />

19.5.5. NIS+ Limitations<br />

E.4.3. COPS (Computer Oracle and Password System)<br />

copyright<br />

9.2.1. Comparison Copies<br />

26.4.2. Copyright Infringement<br />

26.4.2.1. Software piracy and the SPA<br />

notices of : 26.2.6. Other Tips<br />

CORBA (Common Object Request Broker Architecture) : 19.2. Sun's Remote Procedure Call (RPC)<br />

core files<br />

23.2. Tips on Avoiding <strong>Sec</strong>urity-related Bugs<br />

C.4. The kill Command<br />

cost-benefit analysis<br />

2.3. Cost-Benefit Analysis<br />

2.3.4. Convincing Management<br />

costs of losses : 2.3.1. The Cost of Loss<br />

cp command : 7.4.1. Simple Local Copies<br />

cpio program<br />

7.3.2. Building an Automatic Backup System<br />

7.4.2. Simple Archives<br />

crack program<br />

8.8.3. Cracking Your Own Passwords<br />

18.3.3. Setting Up Web Users and Passwords<br />

cracking<br />

backing up because of : 7.1.1.1. A taxonomy of computer failures<br />

passwords<br />

3.6.1. Bad Passwords: Open Doors<br />

3.6.4. Passwords on Multiple Machines<br />

8.6.1. The crypt() Algorithm<br />

8.8.3. Cracking Your Own Passwords<br />

8.8.3.2. The dilemma of password crackers<br />

17.3.3. TELNET (TCP Port 23)<br />

logging failed attempts : 10.5.3. syslog Messages<br />

responding to<br />

24. Discovering a Break-in<br />

24.7. Damage Control<br />

using rexecd : 17.3.17. rexec (TCP Port 512)<br />

crashes, logging : 10.7.2.1. Exception and activity reports<br />

CRC checksums : (see checksums)<br />

Cred table (NIS+) : 19.5.3. NIS+ Tables<br />

criminal prosecution<br />

26.2. Criminal Prosecution<br />

26.2.7. A Final Note on Criminal Actions<br />

cron file<br />

9.2.2.1. Simple listing<br />

11.5.1.4. Filename attacks<br />

11.5.3.1. crontab entries<br />

automating backups : 7.3.2. Building an Automatic Backup System<br />

cleaning up /tmp directory : 25.2.4. /tmp Problems<br />

collecting login times : 10.1.1. lastlog File<br />

symbolic links in : 10.3.7. Other Logs<br />

system clock and : 17.3.14. Network Time Protocol (NTP) (UDP Port 123)<br />

uucp scripts in : 15.6.2. Automatic Execution of Cleanup Scripts<br />

crontab file : 15.6.2. Automatic Execution of Cleanup Scripts<br />

Crypt Breaker's Workbench (CBW) : 6.6.1.1. The crypt program<br />

crypt command/algorithm<br />

6.4.1. Summary of Private Key Systems<br />

6.6.1. <strong>UNIX</strong> crypt: The Original <strong>UNIX</strong> Encryption Command<br />

6.6.1.3. Example<br />

8.6. The <strong>UNIX</strong> Encrypted Password System<br />

18.3.3. Setting Up Web Users and Passwords<br />

crypt function<br />

8.6. The <strong>UNIX</strong> Encrypted Password System<br />

8.6.1. The crypt() Algorithm<br />

8.8.7. Algorithm and Library Changes<br />

23.5. Tips on Using Passwords<br />

crypt16 algorithm : 8.6.4. Crypt16() and Other Algorithms<br />

cryptography<br />

6. Cryptography<br />

6.7.2. Cryptography and Export Controls<br />

14.4.4.2. Protection against eavesdropping<br />

checklist for : A.1.1.5. Chapter 6: Cryptography<br />

checksums : 6.5.5.1. Checksums<br />

digital signatures : (see digital signatures)<br />

export laws concerning : 6.7.2. Cryptography and Export Controls<br />

Message Authentication Codes (MACs) : 6.5.5.2. Message authentication codes<br />

message digests : (see message digests)<br />

PGP : (see PGP)<br />

private-key<br />

6.4. Common Cryptographic Algorithms<br />

6.4.1. Summary of Private Key Systems<br />

public-key<br />

6.4. Common Cryptographic Algorithms<br />

6.4.2. Summary of Public Key Systems<br />

6.4.6. RSA and Public Key Cryptography<br />

6.4.6.3. Strength of RSA<br />

6.5.3. Digital Signatures<br />

18.3. Controlling Access to Files on Your Server<br />

18.6. Dependence on Third Parties<br />

references on : D.1.5. Cryptography Books<br />

and U.S. patents : 6.7.1. Cryptography and the U.S. Patent System<br />

csh (C shell)<br />

5.5.2. Problems with SUID<br />

11.5.1. Shell Features<br />

23.2. Tips on Avoiding <strong>Sec</strong>urity-related Bugs<br />

C.5.3. Running the User's Shell<br />

(see also shells)<br />

autologout variable : 12.3.5.1. Built-in shell autologout<br />

history file : 10.4.1. Shell History<br />

uucp command : 15.1.1.1. uucp with the C shell<br />

.cshrc file<br />

11.5.2.2. .cshrc, .kshrc<br />

12.3.5.1. Built-in shell autologout<br />

24.4.1.6. Changes to startup files<br />

CSI (Computer <strong>Sec</strong>urity Institute) : F.1.3. Computer <strong>Sec</strong>urity Institute (CSI)<br />

CSLIP (Compressed SLIP) : 16.2. IPv4: The <strong>Internet</strong> Protocol Version 4<br />

ctime<br />

5.1.2. Inodes<br />

5.1.5. File Times<br />

5.2.1. chmod: Changing a File's Permissions<br />

7.4.7. inode Modification Times<br />

9.2.3. Checksums and Signatures<br />

cu command<br />

14.5. Modems and <strong>UNIX</strong><br />

14.5.3.1. Originate testing<br />

14.5.3.3. Privilege testing<br />

-l option : 14.5.3.1. Originate testing<br />

culture, computer security : D.1.10. Understanding the Computer <strong>Sec</strong>urity "Culture"<br />

current directory : 5.1.3. Current Directory and Paths<br />

Customer Warning System (CWS) : F.3.4.34. Sun Microsystems customers<br />

Copyright © 1999 <strong>O'Reilly</strong> & Associates, Inc. All Rights Reserved.<br />

Index: D<br />

DAC (Discretionary Access Controls) : 4.1.3. Groups and Group Identifiers (GIDs)<br />

daemon (user) : 4.1. Users and Groups<br />

damage, liability for : 26.4.6. Liability for Damage<br />

DARPA : (see ARPA)<br />

DAT (Digital Audio Tape) : 7.1.4. Guarding Against Media Failure<br />

data<br />

assigning owners to : 2.4.4.1. Assign an owner<br />

availability of : 2.1. Planning Your <strong>Sec</strong>urity Needs<br />

communication equipment (DCE) : 14.3. The RS-232 Serial Protocol<br />

confidential<br />

2.1. Planning Your <strong>Sec</strong>urity Needs<br />

2.5.2. Confidential Information<br />

disclosure of : 11.2. Damage<br />

giving away with NIS : 19.4.5. Unintended Disclosure of Site Information with NIS<br />

identifying assets : 2.2.1.1. Identifying assets<br />

integrity of : (see integrity, data)<br />

spoofing : 16.3. IP <strong>Sec</strong>urity<br />

terminal equipment (DTE) : 14.3. The RS-232 Serial Protocol<br />

Data Carrier Detect (DCD) : 14.3. The RS-232 Serial Protocol<br />

Defense Data Network (DDN) : F.3.4.20. MILNET<br />

Data Encryption Standard : (see DES)<br />

Data Set Ready (DSR) : 14.3. The RS-232 Serial Protocol<br />

Data Terminal Ready (DTR) : 14.3. The RS-232 Serial Protocol<br />

database files : 1.2. What Is an Operating System?<br />

databases : (see network databases)<br />

date command<br />

8.1.3. Accounts That Run a Single Command<br />

24.5.1. Never Trust Anything Except Hardcopy<br />

day-zero backups : 7.1.3. Types of Backups<br />

dbx debugger : C.4. The kill Command<br />

DCE (data communication equipment) : 14.3. The RS-232 Serial Protocol<br />

DCE (Distributed Computing Environment)<br />

3.2.2. The /etc/passwd File and Network Databases<br />

8.7.3. Code Books<br />

16.2.6.2. Other naming services<br />

19.2. Sun's Remote Procedure Call (RPC)<br />

19.7.1. DCE<br />

dd command<br />

6.6.1.2. Ways of improving the security of crypt<br />

7.4.1. Simple Local Copies<br />

DDN (Defense Data Network) : F.3.4.20. MILNET<br />

deadlock : 23.2. Tips on Avoiding <strong>Sec</strong>urity-related Bugs<br />

debug command : 17.3.4.2. Using sendmail to receive email<br />

debugfs command : 25.2.2.8. Tree-structure attacks<br />

DEC (Digital Equipment Corporation) : F.3.4.9. Digital Equipment Corporation and customers<br />

DECnet protocol : 16.4.3. DECnet<br />

decode aliases : 17.3.4.2. Using sendmail to receive email<br />

decryption : (see encryption)<br />

defamation : 26.4.7. Harassment, Threatening Communication, and Defamation<br />

default<br />

accounts : 8.1.2. Default Accounts<br />

deny : 21.1.1. Default Permit vs. Default Deny<br />

domain : 16.2.3. Hostnames<br />

permit : 21.1.1. Default Permit vs. Default Deny<br />

defense in depth : (see multilevel security)<br />

DELETE key : 3.4. Changing Your Password<br />

deleting<br />

destructive attack via : 25.1. Destructive Attacks<br />

files : 5.4. Using Directory Permissions<br />

demo accounts : 8.1.2. Default Accounts<br />

denial-of-service attacks<br />

1.5. Role of This Book<br />

25. Denial of Service Attacks and Solutions<br />

25.3.4. Clogging<br />

accidental : 25.2.5. Soft Process Limits: Preventing Accidental Denial of Service<br />

automatic lockout : 3.3. Entering Your Password<br />

checklist for : A.1.1.24. Chapter 25: Denial of Service Attacks and Solutions<br />

inodes : 25.2.2.3. Inode problems<br />

internal inetd services : 17.1.3. The /etc/inetd Program<br />

on networks<br />

25.3. Network Denial of Service Attacks<br />

25.3.4. Clogging<br />

via syslog : 10.5.1. The syslog.conf Configuration File<br />

X Window System : 17.3.21.5. Denial of service attacks under X<br />

departure of employees : 13.2.6. Departure<br />

depository directories, FTP : 17.3.2.6. Setting up anonymous FTP with the standard <strong>UNIX</strong> FTP server<br />

DES (Data Encryption Standard)<br />

6.4.1. Summary of Private Key Systems<br />

6.4.4. DES<br />

6.4.5.2. Triple DES<br />

8.6.1. The crypt() Algorithm<br />

authentication (NIS+) : 19.5.4. Using NIS+<br />

improving security of<br />

6.4.5. Improving the <strong>Sec</strong>urity of DES<br />

des program<br />

6.4.4. DES<br />

6.4.5.2. Triple DES<br />

6.6.2. des: The Data Encryption Standard<br />

7.4.4. Encrypting Your Backups<br />

destroying media : 12.3.2.3. Sanitize your media before disposal<br />

destructive attacks : 25.1. Destructive Attacks<br />

detached signatures : 6.6.3.6. PGP detached signatures<br />

detectors<br />

cable tampering : 12.3.1.1. Wiretapping<br />

carbon-monoxide : 12.2.1.2. Smoke<br />

humidity : 12.2.1.11. Humidity<br />

logging alarm systems : 10.7.1.1. Exception and activity reports<br />

smoke : 12.2.1.2. Smoke<br />

temperature alarms : 12.2.1.6. Temperature extremes<br />

water sensors : 12.2.1.12. Water<br />

Deutsches Forschungsnetz : F.3.4.14. Germany: DFN-WiNet <strong>Internet</strong> sites<br />

/dev directory : 14.5.1. Hooking Up a Modem to Your Computer<br />

/dev/audio device : 23.8. Picking a Random Seed<br />

/dev/console device : 5.6. Device Files<br />

/dev/kmem device<br />

5.6. Device Files<br />

11.1.2. Back Doors and Trap Doors<br />

/dev/null device : 5.6. Device Files<br />

/dev/random device : 23.7.4. Other random number generators<br />

/dev/swap device : 5.5.1. SUID, SGID, and Sticky Bits<br />

/dev/urandom device : 23.7.4. Other random number generators<br />

device files : 5.6. Device Files<br />

devices<br />

managing with SNMP : 17.3.15. Simple Network Management Protocol (SNMP) (UDP Ports 161 and 162)<br />

modem control : 14.5.2. Setting Up the <strong>UNIX</strong> Device<br />

Devices file : 14.5.1. Hooking Up a Modem to Your Computer<br />

df -i command : 25.2.2.3. Inode problems<br />

dictionary attack : 8.6.1. The crypt() Algorithm<br />

Diffie-Hellman key exchange system<br />

6.4.2. Summary of Public Key Systems<br />

18.6. Dependence on Third Parties<br />

19.3. <strong>Sec</strong>ure RPC (AUTH_DES)<br />

breaking key : 19.3.4. Limitations of <strong>Sec</strong>ure RPC<br />

exponential key exchange : 19.3.1. <strong>Sec</strong>ure RPC Authentication<br />

Digital Audio Tape (DAT) : 7.1.4. Guarding Against Media Failure<br />

digital computers : 6.1.2. Cryptography and Digital Computers<br />

Digital Equipment Corporation (DEC) : F.3.4.9. Digital Equipment Corporation and customers<br />

Digital Signature Algorithm : (see DSA)<br />

digital signatures<br />

6.4. Common Cryptographic Algorithms<br />

6.5. Message Digests and Digital Signatures<br />

6.5.5.2. Message authentication codes<br />

9.2.3. Checksums and Signatures<br />

checksums : 6.5.5.1. Checksums<br />

detached signatures : 6.6.3.6. PGP detached signatures<br />

with PGP : 6.6.3.4. Adding a digital signature to an announcement<br />

Digital <strong>UNIX</strong><br />

1.3. History of <strong>UNIX</strong><br />

(see also Ultrix)<br />

directories<br />

5.1.1. Directories<br />

5.1.3. Current Directory and Paths<br />

ancestor : 9.2.2.2. Ancestor directories<br />

backing up by : 7.1.3. Types of Backups<br />

CDFs (context-dependent files) : 24.4.1.7. Hidden files and directories<br />

checklist for : A.1.1.4. Chapter 5: The <strong>UNIX</strong> Filesystem<br />

dot, dot-dot, and / : 5.1.1. Directories<br />

FTP depositories : 17.3.2.6. Setting up anonymous FTP with the standard <strong>UNIX</strong> FTP server<br />

immutable : 9.1.1. Immutable and Append-Only Files<br />

listing automatically (Web) : 18.2.2.2. Additional configuration issues<br />

mounted : 5.5.5. Turning Off SUID and SGID in Mounted Filesystems<br />

mounting secure : 19.3.2.5. Mounting a secure filesystem<br />

nested : 25.2.2.8. Tree-structure attacks<br />

NFS : (see NFS)<br />

permissions : 5.4. Using Directory Permissions<br />

read-only : 9.1.2. Read-only Filesystems<br />

restricted<br />

8.1.5. Restricted Filesystem<br />

8.1.5.2. Checking new software<br />

root : (see root directory)<br />

SGID and sticky bits on : 5.5.6. SGID and Sticky Bits on Directories<br />

Web server structure of<br />

18.2.2. Understand Your Server's Directory Structure<br />

18.2.2.2. Additional configuration issues<br />

world-writable : 11.6.1.1. World-writable user files and directories<br />

&lt;Directory&gt; blocks<br />

18.3.1. The access.conf and .htaccess Files<br />

18.3.2. Commands Within the &lt;Directory&gt; Block<br />

18.3.2.1. Examples<br />

disaster recovery : 12.2.6.4. Minimizing downtime<br />

disk attacks<br />

25.2.2. Disk Attacks<br />

25.2.2.8. Tree-structure attacks<br />

disk quotas : 25.2.2.5. Using quotas<br />

diskettes : (see backups; media)<br />

dismissed employees : 13.2.6. Departure<br />

disposing of materials : 12.3.3. Other Media<br />

Distributed Computing Environment : (see DCE)<br />

DNS (Domain Name System)<br />

16.2.6. Name Service<br />

16.2.6.2. Other naming services<br />

17.3.6. Domain Name System (DNS) (TCP and UDP Port 53)<br />

17.3.6.2. DNS nameserver attacks<br />

nameserver attacks : 17.3.6.2. DNS nameserver attacks<br />

rogue servers : 16.3.2. <strong>Sec</strong>urity and Nameservice<br />

security and : 16.3.2. <strong>Sec</strong>urity and Nameservice<br />

zone transfers<br />

17.3.6. Domain Name System (DNS) (TCP and UDP Port 53)<br />

17.3.6.1. DNS zone transfers<br />

documentation<br />

2.5. The Problem with <strong>Sec</strong>urity Through Obscurity<br />

23.2. Tips on Avoiding <strong>Sec</strong>urity-related Bugs<br />

domain name : 16.2.3. Hostnames<br />

Domain Name System : (see DNS)<br />

domainname command : 19.4.3. NIS Domains<br />

domains : 19.4.3. NIS Domains<br />

dormant accounts<br />

8.4. Managing Dormant Accounts<br />

8.4.3. Finding Dormant Accounts<br />

dot (.) directory : 5.1.1. Directories<br />

dot-dot (..) directory : 5.1.1. Directories<br />

Double DES : 6.4.5. Improving the <strong>Sec</strong>urity of DES<br />

double reverse lookup : 16.3.2. <strong>Sec</strong>urity and Nameservice<br />

DOW USA : F.3.4.10. DOW USA<br />

downloading files : 12.3.4. Protecting Local Storage<br />

logging<br />

10.3.3. xferlog Log File<br />

10.3.5. access_log Log File<br />

downtime : 12.2.6.4. Minimizing downtime<br />

due to criminal investigations : 26.2.4. Hazards of Criminal Prosecution<br />

logging : 10.7.2.1. Exception and activity reports<br />

drand48 function : 23.7.3. drand48(), lrand48(), and mrand48()<br />

drills, security : 24.1.3. Rule #3: PLAN AHEAD<br />

drink : 12.2.2.1. Food and drink<br />

DSA (Digital Signature Algorithm)<br />

6.4.2. Summary of Public Key Systems<br />

6.5.3. Digital Signatures<br />

DTE (data terminal equipment) : 14.3. The RS-232 Serial Protocol<br />

du command : 25.2.2.1. Disk-full attacks<br />

dual universes : 5.9.1. Dual Universes<br />

ducts, air : 12.2.3.2. Entrance through air ducts<br />

dump/restore program<br />

7.1.3. Types of Backups<br />

7.4.3. Specialized Backup Programs<br />

7.4.4. Encrypting Your Backups<br />

dumpster diving : 12.3.3. Other Media<br />

duress code : 8.7.2. Token Cards<br />

dust : 12.2.1.3. Dust<br />

Index: E<br />

earthquakes : 12.2.1.4. Earthquake<br />

eavesdropping<br />

12.3.1. Eavesdropping<br />

12.3.1.5. Fiber optic cable<br />

12.4.1.2. Potential for eavesdropping and data theft<br />

14.4.4. Protecting Against Eavesdropping<br />

14.4.4.2. Protection against eavesdropping<br />

16.3.1. Link-level <strong>Sec</strong>urity<br />

IP packets<br />

16.3.1. Link-level <strong>Sec</strong>urity<br />

17.3.3. TELNET (TCP Port 23)<br />

through log files : 18.4.2. Eavesdropping Through Log Files<br />

on the Web<br />

18.4. Avoiding the Risks of Eavesdropping<br />

18.4.2. Eavesdropping Through Log Files<br />

X clients : 17.3.21.2. X security<br />

ECB (electronic code book)<br />

6.4.4.2. DES modes<br />

6.6.2. des: The Data Encryption Standard<br />

echo command : 23.5. Tips on Using Passwords<br />

ECPA (Electronic Communications Privacy Act) : 26.2.3. Federal Computer Crime Laws<br />

editing wtmp file : 10.1.3.1. Pruning the wtmp file<br />

editors : 11.5.2.7. Other initializations<br />

buffers for : 11.1.4. Trojan Horses<br />

Emacs : 11.5.2.3. GNU .emacs<br />

ex<br />

5.5.3.2. Another SUID example: IFS and the /usr/lib/preserve hole<br />

11.5.2.4. .exrc<br />

11.5.2.7. Other initializations<br />

startup file attacks : 11.5.2.4. .exrc<br />

vi<br />

5.5.3.2. Another SUID example: IFS and the /usr/lib/preserve hole<br />

11.5.2.4. .exrc<br />

11.5.2.7. Other initializations<br />

edquota command : 25.2.2.5. Using quotas<br />

EDS : F.3.4.11. EDS and EDS customers worldwide<br />

education : (see security, user awareness of)<br />

effective UIDs/GIDs<br />

4.3.1. Real and Effective UIDs<br />

5.5. SUID<br />

10.1.2.1. su command and /etc/utmp and /var/adm/wtmp files<br />

C.1.3.2. Process real and effective UID<br />

8mm video tape : 7.1.4. Guarding Against Media Failure<br />

electrical fires<br />

12.2.1.2. Smoke<br />

(see also fires; smoke and smoking)<br />

electrical noise : 12.2.1.8. Electrical noise<br />

electronic<br />

breakins : (see breakins; cracking)<br />

code book (ECB)<br />

6.4.4.2. DES modes<br />

6.6.2. des: The Data Encryption Standard<br />

mail : (see mail)<br />

Electronic Communications Privacy Act (ECPA) : 26.2.3. Federal Computer Crime Laws<br />

ElGamal algorithm<br />

6.4.2. Summary of Public Key Systems<br />

6.5.3. Digital Signatures<br />

elm (mail system) : 11.5.2.5. .forward, .procmailrc<br />

emacs editor : 11.5.2.7. Other initializations<br />

.emacs file : 11.5.2.3. GNU .emacs<br />

email : (see mail)<br />

embedded commands : (see commands)<br />

embezzlers : 11.3. Authors<br />

emergency response organizations : (see response teams)<br />

employees<br />

11.3. Authors<br />

13. Personnel Security<br />

13.3. Outsiders<br />

departure of : 13.2.6. Departure<br />

phonebook of : 12.3.3. Other Media<br />

security checklist for : A.1.1.12. Chapter 13: Personnel Security<br />

targeted in legal investigation : 26.2.5. If You or One of Your Employees Is a Target of an Investigation...<br />

trusting : 27.3.1. Your Employees?<br />

written authorization for : 26.2.6. Other Tips<br />

encryption<br />

6.2. What Is Encryption?<br />

6.2.2. The Elements of Encryption<br />

12.2.6.2. Encryption<br />

(see also cryptography)<br />

algorithms : 2.5. The Problem with Security Through Obscurity<br />

crypt<br />

6.6.1. UNIX crypt: The Original UNIX Encryption Command<br />

6.6.1.3. Example<br />

Digital Signature Algorithm<br />

6.4.2. Summary of Public Key Systems<br />

6.5.3. Digital Signatures<br />

ElGamal : 6.4.2. Summary of Public Key Systems<br />

IDEA : 6.4.1. Summary of Private Key Systems<br />

RC2, RC4, and RC5<br />

6.4.1. Summary of Private Key Systems<br />

6.4.8. Proprietary Encryption Systems<br />

ROT13 : 6.4.3. ROT13: Great for Encoding Offensive Jokes<br />

RSA<br />

6.4.2. Summary of Public Key Systems<br />

6.4.6. RSA and Public Key Cryptography<br />

6.4.6.3. Strength of RSA<br />

application-level : 16.3.1. Link-level Security<br />

of backups<br />

7.1.6.3. Data security for backups<br />

7.4.4. Encrypting Your Backups<br />

12.3.2.4. Backup encryption<br />

checklist for : A.1.1.5. Chapter 6: Cryptography<br />

Data Encryption Standard (DES)<br />

6.4.1. Summary of Private Key Systems<br />

6.4.4. DES<br />

6.4.5.2. Triple DES<br />

6.6.2. des: The Data Encryption Standard<br />

DCE and : 3.2.2. The /etc/passwd File and Network Databases<br />

Diffie-Hellman : (see Diffie-Hellman key exchange system)<br />

end-to-end : 16.3.1. Link-level Security<br />

Enigma system<br />

6.3. The Enigma Encryption System<br />

6.6.1.1. The crypt program<br />

(see also crypt command/algorithm)<br />

escrowing keys<br />

6.1.3. Modern Controversy<br />

7.1.6.3. Data security for backups<br />

exporting software : 26.4.1. Munitions Export<br />

of hypertext links : 18.4.1. Eavesdropping Over the Wire<br />

laws about<br />

6.7. Encryption and U.S. Law<br />

6.7.2. Cryptography and Export Controls<br />

link-level : 16.3.1. Link-level Security<br />

of modems : 14.6. Additional Security for Modems<br />

Netscape Navigator system : 18.4.1. Eavesdropping Over the Wire<br />

with network services : 17.4. Security Implications of Network Services<br />

one-time pad mechanism : 6.4.7. An Unbreakable Encryption Algorithm<br />

of passwords<br />

8.6. The UNIX Encrypted Password System<br />

8.6.4. Crypt16() and Other Algorithms<br />

23.5. Tips on Using Passwords<br />

PGP : (see PGP)<br />

programs for UNIX<br />

6.6. Encryption Programs Available for UNIX<br />

6.6.3.6. PGP detached signatures<br />

proprietary algorithms : 6.4.8. Proprietary Encryption Systems<br />

RC4 and RC5 algorithms : 6.4.1. Summary of Private Key Systems<br />

references on : D.1.5. Cryptography Books<br />

Skipjack algorithm : 6.4.1. Summary of Private Key Systems<br />

superencryption : 6.4.5. Improving the Security of DES<br />

and superusers : 6.2.4. Why Use Encryption with UNIX?<br />

of Web information : 18.4.1. Eavesdropping Over the Wire<br />

end-to-end encryption : 16.3.1. Link-level Security<br />

Energy Sciences Network (ESnet) : F.3.4.43. U.S. Department of Energy sites, Energy Sciences Network (ESnet), and DOE contractors<br />

Enigma encryption system<br />

6.3. The Enigma Encryption System<br />

6.6.1.1. The crypt program<br />

Enterprise Networks : 16.1. Networking<br />

environment variables<br />

11.5.2.7. Other initializations<br />

23.2. Tips on Avoiding Security-related Bugs<br />

environment, physical<br />

12.2.1. The Environment<br />

12.2.1.13. Environmental monitoring<br />

erasing disks : 12.3.2.3. Sanitize your media before disposal<br />

erotica, laws governing : 26.4.5. Pornography and Indecent Material<br />

errno variable : 23.2. Tips on Avoiding Security-related Bugs<br />

errors : 7.1.1.1. A taxonomy of computer failures<br />

in ACLs : 5.2.5.1. AIX Access Control Lists<br />

configuration : 9.1. Prevention<br />

human : 7.1.4. Guarding Against Media Failure<br />

errors<br />

Preface<br />

(see also auditing, system activity)<br />

escape sequences, modems and : 14.5.3.1. Originate testing<br />

escrowing encryption keys<br />

6.1.3. Modern Controversy<br />

7.1.6.3. Data security for backups<br />

ESnet (Energy Sciences Network) : F.3.4.43. U.S. Department of Energy sites, Energy Sciences Network (ESnet), and DOE contractors<br />

espionage : 11.3. Authors<br />

/etc directory<br />

11.1.2. Back Doors and Trap Doors<br />

11.5.3.5. System initialization files<br />

backups of : 7.1.3. Types of Backups<br />

/etc/aliases file : 11.5.3.3. /usr/lib/aliases, /etc/aliases, /etc/sendmail/aliases, aliases.dir, or aliases.pag<br />

/etc/default/login file : 8.5.1. Secure Terminals<br />

/etc/exports file<br />

11.6.1.2. Writable system files and directories<br />

19.3.2.4. Using Secure NFS<br />

making changes to : 20.2.1.2. /usr/etc/exportfs<br />

/etc/fbtab file : 17.3.21.1. /etc/fbtab and /etc/logindevperm<br />

/etc/fingerd program : (see finger command)<br />

/etc/fsck program : 24.4.1.7. Hidden files and directories<br />

/etc/fstab file<br />

11.1.2. Back Doors and Trap Doors<br />

19.3.2.5. Mounting a secure filesystem<br />

/etc/ftpd : (see ftpd server)<br />

/etc/ftpusers file : 17.3.2.5. Restricting FTP with the standard UNIX FTP server<br />

/etc/group file<br />

1.2. What Is an Operating System?<br />

4.1.3.1. The /etc/group file<br />

4.2.3. Impact of the /etc/passwd and /etc/group Files on Security<br />

8.1.6. Group Accounts<br />

/etc/halt command : 24.2.6. Anatomy of a Break-in<br />

/etc/hosts file : 16.2.3.1. The /etc/hosts file<br />

/etc/hosts.equiv : (see hosts.equiv file)<br />

/etc/hosts.lpd file : 17.3.18.6. /etc/hosts.lpd file<br />

/etc/inetd : (see inetd daemon)<br />

/etc/inetd.conf file : 17.3. Primary UNIX Network Services<br />

/etc/init program : C.5.1. Process #1: /etc/init<br />

/etc/inittab : (see inittab program)<br />

/etc/keystore file : 19.3.1.1. Proving your identity<br />

/etc/logindevperm file : 17.3.21.1. /etc/fbtab and /etc/logindevperm<br />

/etc/motd file : 26.2.6. Other Tips<br />

/etc/named.boot file<br />

17.3.6.1. DNS zone transfers<br />