Discrete Mathematics University of Kentucky CS 275 Spring ... - MGNet


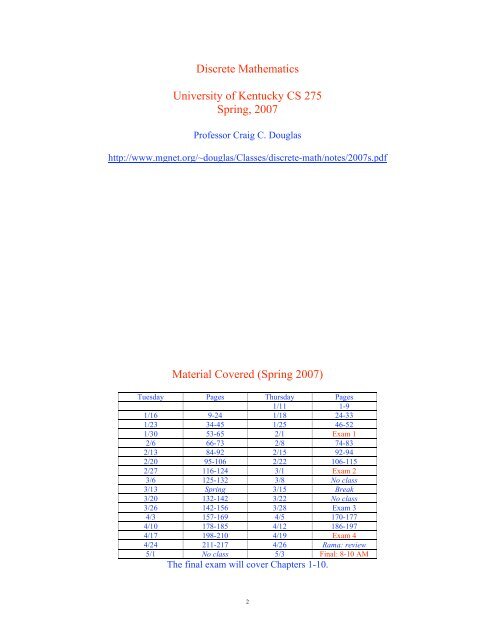

<strong>Discrete</strong> <strong>Mathematics</strong><br />

<strong>University</strong> <strong>of</strong> <strong>Kentucky</strong> <strong>CS</strong> <strong>275</strong><br />

<strong>Spring</strong>, 2007<br />

Professor Craig C. Douglas<br />

http://www.mgnet.org/~douglas/Classes/discrete-math/notes/2007s.pdf<br />

Material Covered (Spring 2007)<br />

| Tuesday | Pages | Thursday | Pages |
|---|---|---|---|
| | | 1/11 | 1-9 |
| 1/16 | 9-24 | 1/18 | 24-33 |
| 1/23 | 34-45 | 1/25 | 46-52 |
| 1/30 | 53-65 | 2/1 | Exam 1 |
| 2/6 | 66-73 | 2/8 | 74-83 |
| 2/13 | 84-92 | 2/15 | 92-94 |
| 2/20 | 95-106 | 2/22 | 106-115 |
| 2/27 | 116-124 | 3/1 | Exam 2 |
| 3/6 | 125-132 | 3/8 | No class |
| 3/13 | Spring Break | 3/15 | Spring Break |
| 3/20 | 132-142 | 3/22 | No class |
| 3/26 | 142-156 | 3/28 | Exam 3 |
| 4/3 | 157-169 | 4/5 | 170-177 |
| 4/10 | 178-185 | 4/12 | 186-197 |
| 4/17 | 198-210 | 4/19 | Exam 4 |
| 4/24 | 211-217 | 4/26 | Rama: review |
| 5/1 | No class | 5/3 | Final: 8-10 AM |

The final exam will cover Chapters 1-10.<br />

2

Course Outline<br />

1. Logic Principles<br />

2. Sets, Functions, Sequences, and Sums<br />

3. Algorithms, Integers, and Matrices<br />

4. Induction and Recursion<br />

5. Simple Counting Principles<br />

6. Discrete Probability<br />

7. Advanced Counting Principles<br />

8. Relations<br />

9. Graphs<br />

10. Trees<br />

11. Boolean Algebra<br />

12. Modeling Computation<br />

3<br />

Logic Principles<br />

Basic values: T or F representing true or false, respectively. In a computer, T and F may be represented by the bits 1 and 0.<br />

Basic items:<br />

• Propositions<br />

o Logic and Equivalences<br />

• Truth tables<br />

• Predicates<br />

• Quantifiers<br />

• Rules of Inference<br />

• Proofs<br />

o Concrete, outlines, hand waving, and false<br />

4

Definition: A proposition is a declarative statement that is either true or false, but not both.<br />

Examples:<br />

• 2+2 = 4 is a proposition because it has a definite truth value (true).<br />

• x+1 = 2 is not a proposition unless a specific value of x is stated.<br />

Definition: The negation of a proposition p, denoted by ¬p and pronounced not p, means that, “it is not the case that p.” The truth value of ¬p is the opposite of the truth value of p.<br />

Examples:<br />

• p: Today is Thursday. ¬p: Today is not Thursday.<br />

• p: At least a foot of snow falls in Boulder on Fridays. ¬p: Less than a foot of snow falls in Boulder on Fridays.<br />

5<br />

Definition: The conjunction of propositions p and q, denoted p ∧ q, is true if both p and q are true, otherwise false.<br />

Definition: The disjunction of propositions p and q, denoted p ∨ q, is true if at least one of p and q is true, otherwise false.<br />

Definition: The exclusive or of propositions p and q, denoted p ⊕ q, is true if exactly one of p and q is true, otherwise false.<br />

Truth tables:<br />

| p | ¬p | q | p ∧ q | p ∨ q | p ⊕ q |
|---|---|---|---|---|---|
| T | F | T | T | T | F |
| T* | F* | F | F | T | T |
| F* | T* | T | F | T | T |
| F | T | F | F | F | F |

* The truth table for p and ¬p is really a 2×2 table.<br />

6

Concepts so far can be extended to Boolean variables and Bit strings.<br />

Definition: A bit is a binary digit. Hence, it has two possible values: 0 and 1.<br />

Definition: A bit string is a sequence of zero or more bits. The length of a bit string is the number of bits.<br />

Definition: The bitwise operators OR, AND, and XOR are defined by applying ∨, ∧, and ⊕, respectively, bit by bit to two bit strings of the same length (with 1 as T and 0 as F).<br />

Examples:<br />

• 010111 is a bit string <strong>of</strong> length 6<br />

• 010111 OR 110000 = 110111<br />

• 010111 AND 110000 = 010000<br />

• 010111 XOR 110000 = 100111<br />
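The three bitwise examples can be checked mechanically. The following is a minimal Python sketch; the helper name `bitwise` and the lambda names are illustrative assumptions, not part of the notes. It applies a Boolean function position by position, treating 1 as T and 0 as F.

```python
def bitwise(op, a: str, b: str) -> str:
    """Apply a two-argument Boolean function bit by bit to equal-length bit strings."""
    if len(a) != len(b):
        raise ValueError("bit strings must have the same length")
    return "".join(str(int(op(x == "1", y == "1"))) for x, y in zip(a, b))

OR = lambda p, q: p or q      # disjunction
AND = lambda p, q: p and q    # conjunction
XOR = lambda p, q: p != q     # exclusive or

print(bitwise(OR, "010111", "110000"))   # 110111
print(bitwise(AND, "010111", "110000"))  # 010000
print(bitwise(XOR, "010111", "110000"))  # 100111
```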

7<br />

Definition: The conditional statement is an implication, denoted p → q, and is false when p is true and q is false, otherwise it is true. In this case p is known as a hypothesis (or antecedent or premise) and q is known as the conclusion (or consequence).<br />

Definition: The biconditional statement is a bi-implication, denoted p ↔ q, and is true if and only if p and q have the same truth value.<br />

Truth tables:<br />

| p | q | p → q | p ↔ q |
|---|---|---|---|
| T | T | T | T |
| T | F | F | F |
| F | T | T | F |
| F | F | T | T |

8

We can compound logical operators to make complicated propositions. In general, using parentheses makes the expressions clearer, even though more symbols are used. However, there is a well defined operator precedence accepted in the field. Lower numbered operators take precedence over higher numbered operators.<br />

Examples:<br />

• ¬p ∧ q = (¬p) ∧ q<br />

• p ∧ q ∨ r = (p ∧ q) ∨ r<br />

| Operator | Precedence |
|---|---|
| ¬ | 1 |
| ∧ | 2 |
| ∨ | 3 |
| → | 4 |
| ↔ | 5 |

9<br />

Definition: A compound proposition that is always true is a tautology. One that is always false is a contradiction. One that is neither is a contingency.<br />

Example:<br />

| p | ¬p | p ∧ ¬p | p ∨ ¬p |
|---|---|---|---|
| T | F | F | T |
| F | T | F | T |
| contingency | contingency | contradiction | tautology |

Definition: Compound propositions p and q are logically equivalent if p ↔ q is a tautology. This is denoted p ≡ q (sometimes written as p ⇔ q instead).<br />

10

Theorem: ¬(p ∨ q) ≡ ¬p ∧ ¬q.<br />

Proof: Construct a truth table.<br />

| p | q | ¬(p ∨ q) | ¬p | ¬q | ¬p ∧ ¬q |
|---|---|---|---|---|---|
| T | T | F | F | F | F |
| T | F | F | F | T | F |
| F | T | F | T | F | F |
| F | F | T | T | T | T |

qed<br />

Theorem: ¬(p ∧ q) ≡ ¬p ∨ ¬q.<br />

Proof: Construct a truth table similar to the previous theorem.<br />

These two theorems are known as DeMorgan’s laws and can be extended to any number of propositions:<br />

$\neg(p_1 \lor p_2 \lor \dots \lor p_k) \equiv \neg p_1 \land \neg p_2 \land \dots \land \neg p_k$<br />

$\neg(p_1 \land p_2 \land \dots \land p_k) \equiv \neg p_1 \lor \neg p_2 \lor \dots \lor \neg p_k$<br />

11<br />

Theorem: p → q ≡ ¬p ∨ q.<br />

Proof: Construct a truth table.<br />

| p | q | p → q | ¬p | ¬p ∨ q |
|---|---|---|---|---|
| T | T | T | F | T |
| T | F | F | F | F |
| F | T | T | T | T |
| F | F | T | T | T |

qed<br />

These proofs are concrete ones: each is established by an exhaustive search of all possibilities. As the number of propositions grows, the number of possibilities grows like $2^k$ for k propositions.<br />

The distributive laws are an example when k=3.<br />
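The exhaustive search behind these truth-table proofs can be sketched in a few lines of Python. The function name `equivalent` is an illustrative assumption. It enumerates all $2^k$ truth assignments and compares two compound propositions row by row.

```python
from itertools import product

def equivalent(f, g, k: int) -> bool:
    """Return True if f and g agree on all 2**k truth assignments."""
    return all(f(*row) == g(*row) for row in product([True, False], repeat=k))

def implies(a: bool, b: bool) -> bool:
    """Conditional: false only when a is true and b is false."""
    return (not a) or b

# De Morgan's law with k = 2
print(equivalent(lambda p, q: not (p or q),
                 lambda p, q: (not p) and (not q), 2))        # True

# Distributive law with k = 3
print(equivalent(lambda p, q, r: p or (q and r),
                 lambda p, q, r: (p or q) and (p or r), 3))   # True

# A non-equivalence for contrast: p -> q versus q -> p
print(equivalent(implies, lambda p, q: implies(q, p), 2))     # False
```

The loop visits 8 rows for k = 3 and doubles with every added variable, which is the growth the paragraph above describes.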

12

Theorem: p ∨ (q ∧ r) ≡ (p ∨ q) ∧ (p ∨ r).<br />

Proof: Construct a truth table.<br />

| p | q | r | p ∨ (q ∧ r) | p ∨ q | p ∨ r | (p ∨ q) ∧ (p ∨ r) |
|---|---|---|---|---|---|---|
| T | T | T | T | T | T | T |
| T | T | F | T | T | T | T |
| T | F | T | T | T | T | T |
| T | F | F | T | T | T | T |
| F | T | T | T | T | T | T |
| F | T | F | F | T | F | F |
| F | F | T | F | F | T | F |
| F | F | F | F | F | F | F |

qed<br />

Theorem: p ∧ (q ∨ r) ≡ (p ∧ q) ∨ (p ∧ r).<br />

Proof: Construct a truth table similar to the previous theorem.<br />

13<br />

Some well known logical equivalences include the following laws:<br />

| Equivalence | Law |
|---|---|
| p ∧ T ≡ p, p ∨ F ≡ p | Identity |
| p ∨ T ≡ T, p ∧ F ≡ F | Domination |
| p ∨ p ≡ p, p ∧ p ≡ p | Idempotent |
| ¬(¬p) ≡ p | Double negation |
| p ∨ ¬p ≡ T, p ∧ ¬p ≡ F | Negation |
| p ∨ q ≡ q ∨ p, p ∧ q ≡ q ∧ p | Commutative |
| (p ∨ q) ∨ r ≡ p ∨ (q ∨ r), (p ∧ q) ∧ r ≡ p ∧ (q ∧ r) | Associative |

14

| Equivalence | Law |
|---|---|
| p ∨ (q ∧ r) ≡ (p ∨ q) ∧ (p ∨ r), p ∧ (q ∨ r) ≡ (p ∧ q) ∨ (p ∧ r) | Distributive |
| ¬(p ∨ q) ≡ ¬p ∧ ¬q, ¬(p ∧ q) ≡ ¬p ∨ ¬q | DeMorgan |
| p ∨ (p ∧ q) ≡ p, p ∧ (p ∨ q) ≡ p | Absorption |

All of these laws can be proven concretely using truth tables. It is a good exercise to see if you can prove some.<br />

15<br />

Well known logical equivalences involving conditional statements:<br />

p → q ≡ ¬p ∨ q<br />

p → q ≡ ¬q → ¬p<br />

p ∨ q ≡ ¬p → q<br />

p ∧ q ≡ ¬(p → ¬q)<br />

¬(p → q) ≡ p ∧ ¬q<br />

(p → q) ∧ (p → r) ≡ p → (q ∧ r)<br />

(p → r) ∧ (q → r) ≡ (p ∨ q) → r<br />

(p → q) ∨ (p → r) ≡ p → (q ∨ r)<br />

(p → r) ∨ (q → r) ≡ (p ∧ q) → r<br />

Well known logical equivalences involving biconditional statements:<br />

p ↔ q ≡ (p → q) ∧ (q → p)<br />

p ↔ q ≡ ¬p ↔ ¬q<br />

p ↔ q ≡ (p ∧ q) ∨ (¬p ∧ ¬q)<br />

¬(p ↔ q) ≡ p ↔ ¬q<br />

16

Propositional logic is pretty limited. Almost anything you are really interested in requires a more sophisticated form of logic: predicate logic with quantifiers (or predicate calculus).<br />

Definition: P(x) is a propositional function if substituting a specific value for x in P(x) gives a proposition. The part of the expression that states a property of x is known as the predicate.<br />

Examples:<br />

• P(x): x > 24. P(2) = F, P(102) = T.<br />

• P(x): x = y + 1. For a fixed y, P(x) = T for one value of x only (y is an unbound variable).<br />

• P(x,y): x = y + 1. P(2,1) = T, P(102,-14) = F.<br />

Definition: A statement of the form $P(x_1, x_2, \dots, x_n)$ is the value of the propositional function P at the n-tuple $(x_1, x_2, \dots, x_n)$. P is also known as an n-place (or n-ary) predicate.<br />

17<br />

Definition: The universal quantification of P(x) is the statement that P(x) is true for all values of x in some domain, denoted by ∀x P(x).<br />

Definition: The existential quantification of P(x) is the statement that P(x) is true for at least one value of x in some domain, denoted by ∃x P(x).<br />

Definition: The uniqueness quantification of P(x) is the statement that P(x) is true for exactly one value of x in some domain, denoted by ∃!x P(x).<br />

There is an infinite number of quantifiers that can be constructed, but the three above are among the most important and common.<br />

Examples: Assume x belongs to the real numbers.<br />

• (x 0). The negative real numbers form the domain.<br />

• ∃!x ($x^{1223}$ = 0).<br />

18

∀ and ∃ have higher precedence than the logical operators.<br />

Example: ∀x P(x) ∧ Q(x) means (∀x P(x)) ∧ Q(x).<br />

Definition: When a variable is used in a quantification, it is said to be bound. Otherwise the variable is free.<br />

Example: ∃x (x = y + 1). Here x is bound and y is free.<br />

Definition: Statements involving predicates and quantifiers are logically equivalent if and only if they have the same truth value regardless of which predicates are substituted and which domains are used. Notation: S ≡ T.<br />

DeMorgan’s Laws for Negation:<br />

• ¬∃x P(x) ≡ ∀x ¬P(x).<br />

• ¬∀x P(x) ≡ ∃x ¬P(x).<br />

19<br />

A statement has nested quantifiers when it contains more than one quantifier, one inside the scope of another. The order of quantifiers is important.<br />

Examples: Assume x and y belong to the real numbers.<br />

• ∀x∃y (x + y = 0).<br />

• ∀x∀y ((x < 0) ∧ (y > 0) → xy < 0).<br />

Quantification of two variables:<br />

| Statement | When True? | When False? |
|---|---|---|
| ∀x∀y P(x,y) | For all x and y, P(x,y)=T. | There is a pair of x and y such that P(x,y)=F. |
| ∀x∃y P(x,y) | For all x there is a y such that P(x,y)=T. | There is an x such that for all y, P(x,y)=F. |
| ∃x∀y P(x,y) | There is an x such that for all y, P(x,y)=T. | For all x there is a y such that P(x,y)=F. |
| ∃x∃y P(x,y) | There is a pair x and y such that P(x,y)=T. | For all x and y, P(x,y)=F. |
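Over a finite domain, nested quantifiers map directly onto nested `all` and `any` calls, which makes the effect of quantifier order easy to observe. The sketch below is illustrative only: it assumes a small symmetric range of integers as a stand-in for the real numbers, and the names `domain` and `P` are hypothetical.

```python
# Finite stand-in for the domain (an assumption made for illustration).
domain = range(-3, 4)

def P(x: int, y: int) -> bool:
    """The predicate x + y = 0."""
    return x + y == 0

# For all x there is a y with x + y = 0: each x has its negative in the domain.
forall_exists = all(any(P(x, y) for y in domain) for x in domain)

# There is an x such that x + y = 0 for all y: no single x works for every y.
exists_forall = any(all(P(x, y) for y in domain) for x in domain)

print(forall_exists)  # True
print(exists_forall)  # False
```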

20

Rules of Inference are used instead of truth tables in many instances. For n variables, there are $2^n$ rows in a truth table, which gets out of hand quickly.<br />

Definition: A propositional logic argument is a sequence of propositions. The last proposition is the conclusion. The earlier ones are the premises. An argument is valid if the truth of the premises implies the truth of the conclusion.<br />

Definition: A propositional logic argument form is a sequence of compound propositions involving propositional variables. An argument form is valid if, no matter what particular propositions are substituted for the propositional variables in its premises, the conclusion is true whenever the premises are all true.<br />

Translation: An argument form with premises $p_1, p_2, \dots, p_n$ and conclusion q is valid when $(p_1 \land p_2 \land \dots \land p_n) \to q$ is a tautology.<br />
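The translation above gives a direct, if brute-force, validity test. The following Python sketch is a hedged illustration; the names `is_valid` and `implies` are assumptions. It checks whether the conjunction of the premises implies the conclusion on every truth assignment.

```python
from itertools import product

def implies(a: bool, b: bool) -> bool:
    """Conditional: false only when a is true and b is false."""
    return (not a) or b

def is_valid(premises, conclusion, k: int) -> bool:
    """True if (premise_1 and ... and premise_n) -> conclusion is a tautology."""
    return all(
        implies(all(prem(*row) for prem in premises), conclusion(*row))
        for row in product([True, False], repeat=k)
    )

# Valid form: p, p -> q, therefore q
print(is_valid([lambda p, q: p, lambda p, q: implies(p, q)],
               lambda p, q: q, 2))   # True

# Invalid form: q, p -> q, therefore p (fails when p is false and q is true)
print(is_valid([lambda p, q: q, lambda p, q: implies(p, q)],
               lambda p, q: p, 2))   # False
```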

21<br />

There are eight basic rules of inference.<br />

| Rule | Tautology | Name |
|---|---|---|
| p, p → q ∴ q | [p ∧ (p → q)] → q | Modus ponens |
| ¬q, p → q ∴ ¬p | [¬q ∧ (p → q)] → ¬p | Modus tollens |
| p → q, q → r ∴ p → r | [(p → q) ∧ (q → r)] → (p → r) | Hypothetical syllogism |
| p ∨ q, ¬p ∴ q | [(p ∨ q) ∧ ¬p] → q | Disjunctive syllogism |
| p ∴ p ∨ q | p → (p ∨ q) | Addition |

22

| Rule | Tautology | Name |
|---|---|---|
| p ∧ q ∴ p | (p ∧ q) → p | Simplification |
| p, q ∴ p ∧ q | [(p) ∧ (q)] → (p ∧ q) | Conjunction |
| p ∨ q, ¬p ∨ r ∴ q ∨ r | [(p ∨ q) ∧ (¬p ∨ r)] → (q ∨ r) | Resolution |

23<br />

Rules of Inference for Quantified Statements:<br />

| Rule of Inference | Name |
|---|---|
| ∀x P(x) ∴ P(c) | Universal instantiation |
| P(c) for an arbitrary c ∴ ∀x P(x) | Universal generalization |
| ∀x (P(x) → Q(x)); P(a), where a is a particular element in the domain ∴ Q(a) | Universal modus ponens |
| ∀x (P(x) → Q(x)); ¬Q(a), where a is a particular element in the domain ∴ ¬P(a) | Universal modus tollens |
| ∃x P(x) ∴ P(c) for some c | Existential instantiation |
| P(c) for some c ∴ ∃x P(x) | Existential generalization |

24

Sets, Functions, Sequences, and Sums<br />

Definition: A set is an unordered collection of elements.<br />

Examples:<br />

• Z = {…, -3, -2, -1, 0, 1, 2, 3, …}<br />

• N = {1, 2, 3, …} and $N_0$ = {0, 1, 2, 3, …} (slightly different from the text)<br />

• Q = {p/q | p, q ∈ Z, q ≠ 0}<br />

• R = {reals}<br />

Definition: The cardinality of a set S is denoted |S|. If |S| = n, where n ∈ Z, then the set S is a finite set. Otherwise it is an infinite set (|S| = ∞).<br />

Example: The cardinality of Z, N, $N_0$, Q, and R is infinite.<br />

25<br />

Definition: If |S| = |N|, then S is a countable set. Otherwise it is an uncountable<br />

set.<br />

Examples:<br />

• Q is countable.<br />

• R is uncountable.<br />

Definition: Two sets S and T are equal, denoted S = T, if and only if ∀x (x ∈ S ↔ x ∈ T).<br />

Examples:<br />

• Let S = {0, 1, 2} and T = {2, 0, 1}. Then S = T. Order does not count.<br />

• Let S = {0, 1, 2} and T = {0, 1, 3}. Then S ≠ T. Only the elements count.<br />

Definition: The empty set is denoted by ∅. Note that ∀S (∅ ⊆ S).<br />

26

Definition: A set S is a subset of a set T if ∀x∈S (x ∈ T), denoted S ⊆ T. S is a proper subset of T if S ⊆ T but S ≠ T, denoted S ⊂ T.<br />

Example: S = {1, 0} and T = {0, 1, 2}. Then S ⊂ T.<br />

Theorem: ∀S (S ⊆ S).<br />

Proof: By definition, ∀x∈S (x ∈ S).<br />

27<br />

Definition: The Power Set of a set S, denoted P(S), is the set of all possible subsets of S.<br />

Theorem: If |S| = n, then |P(S)| = $2^n$.<br />

Example: S = {0, 1}. Then P(S) = {∅, {0}, {1}, {0,1}}<br />
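To make the power set theorem concrete, here is a small Python sketch (the function name `power_set` is an illustrative assumption). It builds every subset by choosing r elements for r = 0, 1, …, n, and the count comes out as 2 to the power n.

```python
from itertools import chain, combinations

def power_set(s):
    """Return a list of all subsets of s, smallest subsets first."""
    items = sorted(s)
    all_combos = chain.from_iterable(
        combinations(items, r) for r in range(len(items) + 1)
    )
    return [set(c) for c in all_combos]

subsets = power_set({0, 1})
print(subsets)                       # the four subsets of {0, 1}
print(len(subsets) == 2 ** 2)        # True
print(len(power_set(range(5))))      # 32
```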

Definition: The Cartesian product of n sets $A_i$ is defined by ordered elements from the $A_i$ and is denoted $A_1 \times A_2 \times \dots \times A_n = \{(a_1, a_2, \dots, a_n) \mid a_i \in A_i\}$.<br />

Example: Let S = {0, 1} and T = {a, b}. Then S × T = {(0,a), (0,b), (1,a), (1,b)}.<br />

Definition: The union of n sets $A_i$ is defined by<br />

$$\bigcup_{i=1}^{n} A_i = A_1 \cup A_2 \cup \dots \cup A_n = \{x \mid \exists i\,(x \in A_i)\}.$$

Definition: The intersection of n sets $A_i$ is defined by<br />

$$\bigcap_{i=1}^{n} A_i = A_1 \cap A_2 \cap \dots \cap A_n = \{x \mid \forall i\,(x \in A_i)\}.$$

28

Definition: n sets $A_i$ are disjoint if $A_1 \cap A_2 \cap \dots \cap A_n = \emptyset$.<br />

Definition: The complement of set S with respect to T, denoted T \ S, is defined by T \ S = {x ∈ T | x ∉ S}. T \ S is also called the difference of T and S.<br />

Definitions: The universal set is denoted U. The universal complement of S is $\overline{S}$ = U \ S.<br />

29<br />

Examples:<br />

• Let S = {1, 0} and T = {0, 1, 2}. Then<br />

o S ⊂ T.<br />

o S ∩ T = S.<br />

o S ∪ T = T.<br />

o T \ S = {2}.<br />

o Let U = $N_0$. Then $\overline{S}$ = {2, 3, …}<br />

• Let S = {0, 1} and T = {2, 3}. Then<br />

o S ⊄ T.<br />

o S ∩ T = ∅.<br />

o S ∪ T = {0, 1, 2, 3}.<br />

o T \ S = {2, 3}.<br />

o Let U = R. Then $\overline{S}$ is the set of all reals except the integers 0 and 1, i.e., $\overline{S}$ = {x ∈ R | x ≠ 0 ∧ x ≠ 1}.<br />

30

The textbook has a large number of set identities in a table.<br />

| Identity | Law(s) |
|---|---|
| A ∪ ∅ = A, A ∩ U = A | Identity |
| A ∪ U = U, A ∩ ∅ = ∅ | Domination |
| A ∪ A = A, A ∩ A = A | Idempotent |
| $\overline{\overline{A}} = A$ | Complementation |
| A ∪ B = B ∪ A, A ∩ B = B ∩ A | Commutative |
| A ∪ (B ∪ C) = (A ∪ B) ∪ C, A ∩ (B ∩ C) = (A ∩ B) ∩ C | Associative |
| A ∩ (B ∪ C) = (A ∩ B) ∪ (A ∩ C), A ∪ (B ∩ C) = (A ∪ B) ∩ (A ∪ C) | Distributive |
| $\overline{A \cup B} = \overline{A} \cap \overline{B}$, $\overline{A \cap B} = \overline{A} \cup \overline{B}$ | DeMorgan |
| A ∪ (A ∩ B) = A, A ∩ (A ∪ B) = A | Absorption |
| $A \cup \overline{A} = U$, $A \cap \overline{A} = \emptyset$ | Complement |

Many of these are simple to prove from very basic laws.<br />

31<br />

Definition: A function f: A → B maps a set A to a set B, denoted f(a) = b for a ∈ A and b ∈ B, where the mapping (or transformation) is unique: each a ∈ A is assigned exactly one b ∈ B.<br />

Definition: If f: A → B, then<br />

• If ∀b∈B ∃a∈A (f(a) = b), then f is a surjective function or onto.<br />

• If f(a) = f(b) implies a = b for all a, b ∈ A, then f is one-to-one (1-1) or injective.<br />

• A function f is a bijection or a one-to-one correspondence if it is 1-1 and onto.<br />

Definition: Let f: A → B. A is the domain of f. The minimal set B such that f: A → B is onto is the image of f.<br />

Definitions: Some compound functions include<br />

• $\left(\sum_{i=1}^{n} f_i\right)(a) = \sum_{i=1}^{n} f_i(a)$. We can substitute + if we expand the summation.<br />

• $\left(\prod_{i=1}^{n} f_i\right)(a) = \prod_{i=1}^{n} f_i(a)$. We can substitute * if we expand the product.<br />

32

Definition: The composition of n functions $f_i: A_i \to A_{i+1}$ is defined by<br />

$(f_n \circ \dots \circ f_2 \circ f_1)(a) = f_n(\dots(f_2(f_1(a)))\dots)$,<br />

where $a \in A_1$.<br />

Definition: If f: A → B, then the inverse of f, denoted $f^{-1}$: B → A, exists if and only if ∀b∈B ∃a∈A (f(a) = b ∧ $f^{-1}$(b) = a).<br />

Examples:<br />

• Let A = [0,1] 1 R, B = [0,2] 1 R.<br />

o f(a) = a 2 and g(a) = a+1. Then f+g: A%B and f*g: A%B.<br />

o f(a) = 2*a and g(a) = a-1. Then neither f+g: A%B nor f*g: A%B.<br />

• Let B = A = [0,1] 1 R.<br />

o f(a) = a 2 and g(a) = 1-a. Then f+g: A%A and f*g: A%A. Both<br />

compound functions are bijections.<br />

o f(a) = a 3 and g(a) = a 1/3 . Then g°f(a): A%A is a bijection.<br />

• Let A = [-1, 1] and B=[0, 1]. Then<br />

o f(a) = a 3 and g(a) = {x>0 | x= a 1/3 }. Then g°f(a): A%B is onto.<br />

33<br />

Definition: The graph of a function f is {(a, f(a)) | a ∈ A}.<br />

Example: A = {0, 1, 2, 3, 4, 5} and f(a) = $a^2$. Then<br />

(a) graph(f,A)<br />

(b) an approximation to graph(f,[0,5])<br />

34

Definitions: The floor and ceiling functions are defined by<br />

• ⌊x⌋ = largest integer smaller or equal to x.<br />

• ⌈x⌉ = smallest integer larger or equal to x.<br />

Examples:<br />

• ⌊2.99⌋ = 2, ⌈2.99⌉ = 3<br />

• ⌊-2.99⌋ = -3, ⌈-2.99⌉ = -2<br />

Definition: A sequence is a function from either N or a subset of N to a set A whose elements $a_i$ are the terms of the sequence.<br />

Definitions: A geometric progression is a sequence of the form $\{ar^i,\ i = 0, 1, \dots\}$. An arithmetic progression is a sequence of the form $\{a + id,\ i = 0, 1, \dots\}$.<br />

Translation: $f(a,r,i) = ar^i$ and $f(a,d,i) = a + id$ are the corresponding functions.<br />

35<br />

There are a number of interesting summations that have closed form solutions.<br />

Theorem: If a, r ∈ R, then<br />

$$\sum_{i=0}^{n} ar^i = \begin{cases} (n+1)a, & \text{if } r = 1, \\ \dfrac{ar^{n+1} - a}{r - 1}, & \text{otherwise.} \end{cases}$$

Proof: If r = 1, then we are left summing a n+1 times. Hence, the r = 1 case is trivial. Suppose r ≠ 1. Let $S = \sum_{i=0}^{n} ar^i$. Then<br />

$$\begin{aligned}
rS &= r\sum_{i=0}^{n} ar^i && \text{Substituting the formula for } S. \\
   &= \sum_{i=1}^{n+1} ar^i && \text{Simplifying.} \\
   &= \left(\sum_{i=0}^{n} ar^i\right) + \left(ar^{n+1} - a\right) && \text{Removing the } n+1 \text{ term and adding the 0 term.} \\
   &= S + \left(ar^{n+1} - a\right) && \text{Substituting } S \text{ for the formula.}
\end{aligned}$$

Solve for S in $rS = S + (ar^{n+1} - a)$ to get the desired formula.<br />

qed<br />
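The closed form can be spot-checked numerically. The sketch below uses exact rational arithmetic from Python's `fractions` module so that no rounding error hides a mismatch. The function names and the sample values of a, r, and n are illustrative assumptions.

```python
from fractions import Fraction

def geometric_sum(a, r, n):
    """Sum a * r**i for i = 0..n term by term."""
    return sum(a * r**i for i in range(n + 1))

def closed_form(a, r, n):
    """Closed form from the theorem, with the r = 1 case handled separately."""
    if r == 1:
        return (n + 1) * a
    return (a * r**(n + 1) - a) / (r - 1)

a, n = Fraction(3), 10
for r in (Fraction(1, 2), Fraction(-2), Fraction(1)):
    print(r, geometric_sum(a, r, n) == closed_form(a, r, n))  # True for each r
```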

36

Some other common summations with closed form solutions are<br />

| Sum | Closed Form Solution |
|---|---|
| $\sum_{i=1}^{n} i$ | $\dfrac{n(n+1)}{2}$ |
| $\sum_{i=1}^{n} i^2$ | $\dfrac{n(n+1)(2n+1)}{6}$ |
| $\sum_{i=1}^{n} i^3$ | $\dfrac{n^2(n+1)^2}{4}$ |

!<br />

! x<br />

i=0<br />

i , |x|

Theorem: If $f_i(x)$ is $O(g_i(x))$, for 1 ≤ i ≤ n, then $\sum_{i=1}^{n} f_i(x)$ is $O(\max\{|g_1(x)|, |g_2(x)|, \dots, |g_n(x)|\})$.<br />

Proof: Let $g(x) = \max\{|g_1(x)|, |g_2(x)|, \dots, |g_n(x)|\}$ and $C_i$ the constants associated with $O(g_i(x))$. Then<br />

$$\left|\sum_{i=1}^{n} f_i(x)\right| \le \sum_{i=1}^{n} C_i\,|g_i(x)| \le \sum_{i=1}^{n} C_i\,|g(x)| = |g(x)| \sum_{i=1}^{n} C_i = C\,|g(x)|, \quad \text{where } C = \sum_{i=1}^{n} C_i.$$

Theorem: If $f_i(x)$ is $O(g_i(x))$, for 1 ≤ i ≤ n, then $\prod_{i=1}^{n} f_i(x)$ is $O\!\left(\prod_{i=1}^{n} g_i(x)\right)$.<br />

Proof: Let $g(x) = |g_1(x)| \times |g_2(x)| \times \dots \times |g_n(x)|$ and $C_i$ the constants associated with $O(g_i(x))$. Then<br />

$$\left|\prod_{i=1}^{n} f_i(x)\right| \le \prod_{i=1}^{n} C_i\,|g_i(x)| \le C \prod_{i=1}^{n} |g_i(x)|, \quad \text{where } C = \prod_{i=1}^{n} C_i.$$

39<br />

Definition: Let f and g be functions from either Z or R to R. Then f(x) is Ω(g(x)) if there are positive constants C and k such that |f(x)| ≥ C|g(x)| whenever x > k.<br />

Definition: Let f and g be functions from either Z or R to R. Then f(x) is Θ(g(x)) if f(x) = O(g(x)) and f(x) = Ω(g(x)). In this case, we say that f(x) is of order g(x).<br />

Comment: f(x) = O(g(x)) notation is great in the limit, but does not always provide the right bounds for all values of x. Ω, called Big Omega, is used to provide lower bounds. Θ, called Big Theta, is used to provide both lower and upper bounds.<br />

Example: $f(x) = \sum_{i=0}^{n} a_i x^i$ with $a_n \ne 0$ is of order $x^n$.<br />

40

Notation: Timing, as a function of the number of elements, falls into the field of Complexity.<br />

| Complexity | Terminology |
|---|---|
| Θ(1) | Constant |
| Θ(log(n)) | Logarithmic |
| Θ(n) | Linear |
| Θ(n log(n)) | n log(n) |
| Θ($n^k$) | Polynomial |
| Θ($n^k$ log(n)) | Polylog |
| Θ($k^n$), where k > 1 | Exponential |
| Θ(n!) | Factorial |

Notation: Problems are tractable if they can be solved in polynomial time and are intractable otherwise.<br />

41<br />

Algorithms, Integers, and Matrices<br />

Definition: An algorithm is a finite set of precise instructions for solving a problem.<br />

Computational algorithms should have these properties:<br />

• Input: Values from a specified set.<br />

• Output: Results, from a specified set, produced from the input.<br />

• Definiteness: The steps in the algorithm are precise.<br />

• Correctness: The output produced from the input is the right solution.<br />

• Finiteness: The results are produced using a finite number of steps.<br />

• Effectiveness: Each step must be performable in a finite amount of time.<br />

• Generality: The procedure should accept all input from the input set, not just special cases.<br />

42

Algorithm: Find the maximum value of $\{a_i\}_{i=1}^{n}$, where n is finite.<br />

procedure max($\{a_i\}_{i=1}^{n}$: integers)<br />
max := $a_1$<br />
for i := 2 to n<br />
  if max < $a_i$ then max := $a_i$<br />
{max is the largest element}<br />

Proof of correctness: We use induction.<br />

1. Suppose n = 1, then max := $a_1$, which is the correct result.<br />

2. Suppose the result is true for k = 1, 2, …, i-1. Then at step i, we know that max is the largest element in $a_1, a_2, \dots, a_{i-1}$. In the if statement, either max is already at least as large as $a_i$ or it is set to $a_i$. Hence, max is the largest element in $a_1, a_2, \dots, a_i$. Since i was arbitrary, we are done. qed<br />

This algorithm’s input and output are well defined and the overall algorithm can be performed in O(n) time since n is finite. There are no restrictions on the input set other than the elements are integers.<br />
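The pseudocode translates almost line for line into Python. This is a minimal sketch, with the function renamed to `find_max` (an assumption, chosen to avoid shadowing Python's built-in `max`) and with 0-based indexing in place of the 1-based subscripts.

```python
from typing import Sequence

def find_max(values: Sequence[int]) -> int:
    """Return the largest element using n - 1 comparisons."""
    if not values:
        raise ValueError("sequence must be non-empty")
    largest = values[0]          # max := a_1
    for v in values[1:]:         # for i := 2 to n
        if largest < v:          # if max < a_i then max := a_i
            largest = v
    return largest

print(find_max([3, -1, 7, 7, 2]))  # 7
print(find_max([-5]))              # -5
```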

43<br />

Algorithm: Find a value in a sorted, distinct valued $\{a_i\}_{i=1}^{n}$, where n is finite.<br />

There are many, many search algorithms.<br />

procedure linear_search(x, $\{a_i\}_{i=1}^{n}$: integers)<br />
i := 1<br />
while (i ≤ n and x ≠ $a_i$)<br />
  i := i + 1<br />
if i ≤ n then location := i else location := 0<br />
{location is the subscript of $\{a_i\}_{i=1}^{n}$ equal to x or 0 if x is not in $\{a_i\}_{i=1}^{n}$}<br />

We can prove that this algorithm is correct using an induction argument. This algorithm relies on neither distinctness nor sorted elements.<br />

Linear search works, but it is very slow in comparison to many other searching algorithms. It takes 2n+2 comparisons in the worst case, i.e., O(n) time.<br />
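A direct Python rendering of linear_search follows. It is a sketch that keeps the notes' 1-based convention for the returned location (0 meaning "not found") while indexing the Python list from 0 internally.

```python
from typing import Sequence

def linear_search(x: int, values: Sequence[int]) -> int:
    """Return the 1-based position of x in values, or 0 if x is absent."""
    n = len(values)
    i = 1
    while i <= n and x != values[i - 1]:   # values[i - 1] plays the role of a_i
        i += 1
    return i if i <= n else 0

print(linear_search(7, [4, 9, 7, 1]))  # 3
print(linear_search(5, [4, 9, 7, 1]))  # 0
```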

44

procedure binary_search(x, $\{a_i\}_{i=1}^{n}$: integers)<br />
i := 1<br />
j := n<br />
while ( i < j )<br />
  m := ⌊(i+j)/2⌋<br />
  if x > $a_m$ then i := m+1 else j := m<br />
if x = $a_i$ then location := i else location := 0<br />
{location is the subscript of $\{a_i\}_{i=1}^{n}$ equal to x or 0 if x is not in $\{a_i\}_{i=1}^{n}$}<br />

We can prove that this algorithm is correct using an induction argument.<br />

This algorithm is much, much faster than linear_search on average. It is O(log n) in time. The average time to find a member of $\{a_i\}_{i=1}^{n}$ can be proven to be of order log n.<br />
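The same procedure in Python, as a sketch: it assumes the input list is sorted in increasing order, returns a 1-based location (0 for "not found") as in the pseudocode, and adds an explicit guard for the empty list, a case the pseudocode does not address.

```python
from typing import Sequence

def binary_search(x: int, values: Sequence[int]) -> int:
    """Return the 1-based position of x in sorted values, or 0 if x is absent."""
    if not values:
        return 0
    i, j = 1, len(values)
    while i < j:
        m = (i + j) // 2           # floor of (i + j) / 2
        if x > values[m - 1]:      # values[m - 1] plays the role of a_m
            i = m + 1
        else:
            j = m
    return i if values[i - 1] == x else 0

print(binary_search(7, [1, 3, 5, 7, 9]))  # 4
print(binary_search(4, [1, 3, 5, 7, 9]))  # 0
```

Each pass through the loop halves the interval from i to j, which is where the logarithmic running time comes from.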

45<br />

Algorithm: Sort the distinct valued $\{a_i\}_{i=1}^{n}$ into increasing order, where n is finite.<br />

There are many, many sorting algorithms.<br />

procedure bubble_sort($\{a_i\}_{i=1}^{n}$: reals, n>1)<br />
for i := 1 to n-1<br />
  for j := 1 to n-i<br />
    if $a_j$ > $a_{j+1}$ then swap $a_j$ and $a_{j+1}$<br />
{$\{a_i\}_{i=1}^{n}$ is in increasing order}<br />

This is one of the simplest sorting algorithms. It is expensive, but quite easy to understand and implement. Only one temporary is needed for the swapping and two loop variables as extra storage. The worst case time is O($n^2$).<br />
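A Python sketch of bubble_sort, mirroring the two nested loops. It sorts a copy of the input rather than sorting in place, which is a choice made here for easy testing and not something the notes specify.

```python
from typing import List, Sequence

def bubble_sort(values: Sequence[float]) -> List[float]:
    """Return a new list with the elements of values in increasing order."""
    a = list(values)
    n = len(a)
    for i in range(1, n):              # i = 1 .. n-1
        for j in range(n - i):         # 0-based j = 0 .. n-i-1
            if a[j] > a[j + 1]:
                a[j], a[j + 1] = a[j + 1], a[j]   # swap neighbours
    return a

print(bubble_sort([3.5, 1.0, 4.0, 1.5, 2.0]))  # [1.0, 1.5, 2.0, 3.5, 4.0]
```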

46

procedure insertion_sort($\{a_i\}_{i=1}^{n}$: reals, n>1)<br />
for j := 2 to n<br />
  i := 1<br />
  while $a_j$ > $a_i$<br />
    i := i + 1<br />
  t := $a_j$<br />
  for k := 0 to j-i-1<br />
    $a_{j-k}$ := $a_{j-k-1}$<br />
  $a_i$ := t<br />
{$\{a_i\}_{i=1}^{n}$ is in increasing order}<br />

This is not a very efficient sorting algorithm either. However, it is easy to see that at the j th step the j th element is put into the correct spot. The worst case time is O($n^2$). In fact, insertion_sort is trivially slower than bubble_sort.<br />
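The insertion_sort pseudocode can be followed step by step in Python. This sketch uses 0-based indices and, as an implementation choice made here, works on a copy of the input.

```python
from typing import List, Sequence

def insertion_sort(values: Sequence[float]) -> List[float]:
    """Return a new list with the elements of values in increasing order."""
    a = list(values)
    for j in range(1, len(a)):         # 0-based counterpart of j = 2 .. n
        i = 0
        while a[j] > a[i]:             # find the insertion point; stops by i == j
            i += 1
        t = a[j]
        for k in range(j, i, -1):      # shift a[i .. j-1] one place to the right
            a[k] = a[k - 1]
        a[i] = t
    return a

print(insertion_sort([5, 2, 4, 6, 1, 3]))  # [1, 2, 3, 4, 5, 6]
```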

47<br />

Number theory is a rich field of mathematics. We will study four aspects briefly:<br />

1. Integers and division<br />

2. Primes and greatest common divisors<br />

3. Integers and algorithms<br />

4. Applications of number theory<br />

Most of the theorems quoted in this part of the textbook require knowledge of mathematical induction to rigorously prove, a topic covered in detail in the next chapter.<br />

48

Definition: If a, b ∈ Z and a ≠ 0, we say that a divides b if ∃c∈Z (b = ac), denoted by a | b. When a divides b, we call a a factor of b and b a multiple of a. When a does not divide b, we denote this as a ∤ b.<br />

Theorem: Let a, b, c ∈ Z. Then<br />

1. If a | b and a | c, then a | (b+c).<br />

2. If a | b, then a | (bc).<br />

3. If a | b and b | c, then a | c.<br />

Proof: Since a | b, ∃s∈Z (b = as).<br />

1. Since a | c it follows that ∃t∈Z (c = at). Hence, b+c = as + at = a(s+t). Therefore, a | (b+c).<br />

2. bc = (as)c = a(sc). Therefore, a | (bc).<br />

3. Since b | c it follows that ∃t∈Z (c = bt). Then c = bt = (as)t = a(st). Therefore, a | c.<br />

Corollary: Let a, b, c ∈ Z. If a | b and b | c, then a | (mb+nc) for all m, n ∈ Z.<br />

49<br />

Theorem (Division Algorithm): Let a, d ∈ Z (d > 0). Then ∃!q, r ∈ Z (a = dq + r and 0 ≤ r < d).<br />

Definition: In the division algorithm, a is the dividend, d is the divisor, q is the quotient, and r is the remainder. We write q = a div d and r = a mod d.<br />

Examples:<br />

• Consider 101 divided by 9: 101 = 11×9 + 2.<br />

• Consider -11 divided by 3: -11 = 3(-4) + 1.<br />
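Python's built-in `divmod` uses floor division, so for a positive divisor it returns exactly the quotient and non-negative remainder of the division algorithm, including for negative dividends. A short sketch (the wrapper name `division_algorithm` is an illustrative assumption):

```python
from typing import Tuple

def division_algorithm(a: int, d: int) -> Tuple[int, int]:
    """Return (q, r) with a = d*q + r and 0 <= r < d, for d > 0."""
    if d <= 0:
        raise ValueError("divisor d must be positive")
    q, r = divmod(a, d)    # floor division keeps r in the range 0 .. d-1
    return q, r

print(division_algorithm(101, 9))   # (11, 2)
print(division_algorithm(-11, 3))   # (-4, 1)
```

Languages whose integer division truncates toward zero would give quotient -3 and remainder -2 for the second example, which does not satisfy the condition on r.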

Definition: Let a, b, m ∈ Z (m > 0). Then a is congruent to b modulo m if m | (a-b), denoted a ≡ b (mod m). The set of integers congruent to an integer a modulo m is called the congruence class of a modulo m.<br />

Theorem: Let a, b, m ∈ Z (m > 0). Then a ≡ b (mod m) if and only if a mod m = b mod m.<br />

50

Examples:<br />

• Does 17 ≡ 5 (mod 6)? Yes, since 17 – 5 = 12 and 6 | 12.<br />

• Does 24 ≡ 14 (mod 6)? No, since 24 – 14 = 10, which is not divisible by 6.<br />

Theorem: Let a, b, m ∈ Z (m > 0). Then a ≡ b (mod m) if and only if ∃k∈Z (a = b+km).<br />

Proof: If a ≡ b (mod m), then m | (a-b). So, there is a k such that a-b = km, or a = b+km. Conversely, if there is a k such that a = b + km, then km = a-b. Hence, m | (a-b), or a ≡ b (mod m).<br />

Theorem: Let a, b, c, d, m ∈ Z (m > 0). If a ≡ b (mod m) and c ≡ d (mod m), then a+c ≡ b+d (mod m) and ac ≡ bd (mod m).<br />

Corollary: Let a, b, m ∈ Z (m > 0). Then (a+b) mod m = ((a mod m)+(b mod m)) mod m and (ab) mod m = ((a mod m)(b mod m)) mod m.<br />

51<br />

Some applications involving congruence include<br />

• Hashing functions: h(k) = k mod m.<br />

• Pseudorandom numbers: x_{n+1} = (a·x_n + c) mod m.<br />

o c = 0 is known as a pure multiplicative generator.<br />

o c ≠ 0 is known as a linear congruential generator.<br />

• Cryptography<br />
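The pseudorandom recurrence x_{n+1} = (a·x_n + c) mod m can be sketched in a few lines of Python. The parameter values (m = 9, a = 7, c = 4, seed 3) are small illustrative choices, not values from these notes; practical generators use a much larger m.<br />

```python
def lcg(seed: int, a: int, c: int, m: int, count: int) -> list[int]:
    """Return the first `count` terms x_1, x_2, ... of x_{n+1} = (a*x_n + c) mod m."""
    x = seed
    terms = []
    for _ in range(count):
        x = (a * x + c) % m   # one step of the recurrence
        terms.append(x)
    return terms


# Illustrative parameters only.
print(lcg(seed=3, a=7, c=4, m=9, count=9))
```

With these parameters the ninth term equals the seed, so the sequence repeats with period 9.<br />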

Definition: A positive integer a > 1 is a prime if it is divisible only by 1 and a. A positive integer greater than 1 that is not a prime is a composite.<br />

Fundamental Theorem <strong>of</strong> Arithmetic: Every positive integer greater than 1 can<br />

be written uniquely as a prime or the product <strong>of</strong> two or more primes where the<br />

prime factors are written in nondecreasing order.<br />

Theorem: If a is a composite number, then a has a prime divisor less than or equal to √a.<br />
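This theorem justifies trial division: to decide whether a is prime, it is enough to test divisors up to √a. A minimal Python sketch follows; the function name is_prime is an assumption made for illustration.<br />

```python
from math import isqrt


def is_prime(a: int) -> bool:
    """Trial division: a composite a has a divisor d with 2 <= d <= sqrt(a)."""
    if a < 2:
        return False
    for d in range(2, isqrt(a) + 1):
        if a % d == 0:
            return False   # found a nontrivial divisor, so a is composite
    return True


print([n for n in range(2, 30) if is_prime(n)])
```

The sketch tests every integer divisor up to √a rather than only primes, which is simpler and still correct.<br />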

52

Theorem: There are infinitely many primes.<br />

Prime Number Theorem: The ratio of the number of primes not exceeding a to a/ln(a) approaches 1 as a → ∞.<br />

Example: The probability that a randomly chosen positive integer not exceeding n is prime is approximately (n/ln(n))/n = 1/ln(n) for large n.<br />

There are still a number <strong>of</strong> open questions regarding the distribution <strong>of</strong> primes.<br />

Definition: Let a,b∈Z (a and b not both 0). The largest integer d such that d | a and d | b is the greatest common divisor of a and b, denoted by gcd(a,b).<br />

Example: gcd(24,36) = 12.<br />

Definition: The integers a and b are relatively prime if gcd(a,b) = 1.<br />

53<br />

Definition: The integers a_1, a_2, …, a_n are pairwise relatively prime if gcd(a_i, a_j) = 1 whenever 1 ≤ i < j ≤ n.<br />

Integers can be expressed uniquely in any base.<br />

Theorem: Let b∈Z (b > 1). If n∈N, then there is a unique expression n = a_k b^k + a_{k-1} b^{k-1} + … + a_1 b + a_0, where k∈N_0, each a_i∈N_0, a_k ≠ 0, and 0 ≤ a_i < b.<br />

• Base 2 to any base 2^k, k > 1, is really easy. Just group k bits together and convert to the base 2^k symbol.<br />

• Base 10 to any base 2^k is a pain.<br />

• Base 2^k to base 10 is also a pain.<br />

56

Algorithm: Addition <strong>of</strong> integers<br />

procedure add(a, b: integers)<br />
(a_{n-1} a_{n-2} … a_1 a_0)_2 := base_2_expansion(a)<br />
(b_{n-1} b_{n-2} … b_1 b_0)_2 := base_2_expansion(b)<br />
c := 0<br />
for j := 0 to n-1<br />
  d := ⌊(a_j + b_j + c)/2⌋<br />
  s_j := a_j + b_j + c – 2d<br />
  c := d<br />
s_n := c<br />
{the binary expansion of the sum is (s_n s_{n-1} … s_1 s_0)_2}<br />

Questions:<br />

• What is the complexity <strong>of</strong> this algorithm?<br />

• Is this the fastest way to compute the sum?<br />

57<br />

Algorithm: Multiplication of integers<br />

procedure multiply(a, b: integers)<br />
(a_{n-1} a_{n-2} … a_1 a_0)_2 := base_2_expansion(a)<br />
(b_{n-1} b_{n-2} … b_1 b_0)_2 := base_2_expansion(b)<br />
for j := 0 to n-1<br />
  if b_j = 1 then c_j := a shifted j places else c_j := 0<br />
{c_0, c_1, …, c_{n-1} are the partial products}<br />
p := 0<br />
for j := 0 to n-1<br />
  p := p + c_j<br />
{p is the value of ab}<br />

Examples:<br />

• (10)_2 × (11)_2 = (110)_2. Note that there are more bits than the original integers.<br />

• (11)_2 × (11)_2 = (1001)_2. Twice as many binary digits!<br />

58

Algorithm: Compute div and mod<br />

procedure division(a: integer, d: positive integer)<br />
q := 0<br />
r := |a|<br />
while r ≥ d<br />
  r := r – d<br />
  q := q + 1<br />
if a < 0 and r > 0 then<br />
  r := d – r<br />
  q := -(q + 1)<br />
else if a < 0 then<br />
  q := -q<br />
{q = a div d is the quotient and r = a mod d is the remainder}<br />

Notes:<br />

• The complexity of the multiplication algorithm is O(n^2). Much more efficient algorithms exist, including one that is O(n^1.585) using a divide and conquer technique we will see later in the course.<br />

• There are O(log(a)log(d)) complexity algorithms for division.<br />

59<br />

Modular exponentiation, b^k mod m, where b, k, and m are large integers, is important in cryptology and must be computed efficiently.<br />

Algorithm: Modular exponentiation<br />

procedure modular_exponentiation(b: integer, k,m: positive integers)<br />
(a_{n-1} a_{n-2} … a_1 a_0)_2 := base_2_expansion(k)<br />
y := 1<br />
power := b mod m<br />
for i := 0 to n-1<br />
  if a_i = 1 then y := (y × power) mod m<br />
  power := (power × power) mod m<br />
{y = b^k mod m}<br />

Note: The complexity is O((log(m))^2 log(k)) bit operations, which is fast.<br />
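The procedure translates almost line for line into Python. This sketch reads the bits of k from least significant to most significant with shifts instead of computing the base 2 expansion first, which is an implementation choice rather than something prescribed by the notes.<br />

```python
def modular_exponentiation(b: int, k: int, m: int) -> int:
    """Compute b**k mod m by repeated squaring, for positive integers k and m."""
    y = 1
    power = b % m                 # holds b**(2**i) mod m at step i
    while k > 0:
        if k & 1:                 # current binary digit a_i of k is 1
            y = (y * power) % m
        power = (power * power) % m
        k >>= 1                   # move on to the next binary digit
    return y


# Cross-check against Python's built-in three-argument pow.
print(modular_exponentiation(3, 644, 645) == pow(3, 644, 645))
```

Every intermediate product is reduced mod m, so no number larger than m^2 is ever formed.<br />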

60

Euclidean Algorithm: Compute gcd(a,b)<br />

procedure gcd(a,b: positive integers)<br />

x := a<br />

y := b<br />

while y ≠ 0<br />

r := x mod y<br />

x := y<br />

y := r<br />

{gcd(a,b) is x}<br />

Correctness <strong>of</strong> this algorithm is based on<br />

Lemma: Let a = bq+r, where a,b,q,r∈Z. Then gcd(a,b) = gcd(b,r).<br />

The complexity will be studied after we master mathematical induction.<br />
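A Python sketch of the Euclidean algorithm follows. Each loop iteration applies the lemma once, replacing the pair (x, y) by (y, x mod y).<br />

```python
def gcd(a: int, b: int) -> int:
    """Euclidean algorithm for positive integers a and b."""
    x, y = a, b
    while y != 0:
        x, y = y, x % y   # gcd(x, y) = gcd(y, x mod y) by the lemma
    return x


print(gcd(24, 36))
```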

61<br />

Number theory useful results<br />

Theorem: If a,b∈N, then ∃s,t∈Z (gcd(a,b) = sa+tb).<br />

Lemma: If a,b,c∈N, gcd(a,b) = 1, and a | bc, then a | c.<br />

Note: This lemma makes proving the prime factorization theorem doable.<br />

Lemma: If p is a prime and p | a_1 a_2 … a_n, where each a_i∈Z, then p | a_i for some i.<br />

Theorem: Let m∈N and let a,b,c∈Z. If ac ≡ bc (mod m) and gcd(c,m) = 1, then a ≡ b (mod m).<br />

Definition: A linear congruence is a congruence of the form ax ≡ b (mod m), where m∈N, a,b∈Z, and x is a variable.<br />

Definition: An inverse of a modulo m is an integer ā such that āa ≡ 1 (mod m).<br />

62

Theorem: If a and m are relatively prime integers and m > 1, then an inverse of a modulo m exists and is unique modulo m.<br />

Proof: Since gcd(a,m) = 1, ∃s,t∈Z (1 = sa+tm). Hence, sa+tm ≡ 1 (mod m). Since tm ≡ 0 (mod m), it follows that sa ≡ 1 (mod m). Thus, s is an inverse of a modulo m. The uniqueness argument is made by assuming there are two inverses that are not congruent modulo m and deriving a contradiction.<br />
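The existence proof is constructive once s and t are known. A common way to find them is the extended Euclidean algorithm, sketched below in Python. The function names extended_gcd and inverse_mod are illustrative assumptions; the notes do not prescribe this particular method.<br />

```python
def extended_gcd(a: int, b: int) -> tuple[int, int, int]:
    """Return (g, s, t) with g = gcd(a, b) and g == s*a + t*b."""
    old_r, r = a, b
    old_s, s = 1, 0
    old_t, t = 0, 1
    while r != 0:
        q = old_r // r
        old_r, r = r, old_r - q * r   # same remainders as the Euclidean algorithm
        old_s, s = s, old_s - q * s   # coefficients of a
        old_t, t = t, old_t - q * t   # coefficients of b
    return old_r, old_s, old_t


def inverse_mod(a: int, m: int) -> int:
    """Return the inverse of a modulo m in the range 0..m-1, if gcd(a, m) = 1."""
    g, s, _ = extended_gcd(a % m, m)
    if g != 1:
        raise ValueError("a and m are not relatively prime")
    return s % m


print(inverse_mod(3, 7))   # an integer s with 3*s ≡ 1 (mod 7)
```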

Systems <strong>of</strong> linear congruences are used in large integer arithmetic. The basis for<br />

the arithmetic goes back to China 1700 years ago.<br />

Puzzle Sun Tzu (or Sun Zi): There are certain things whose number is unknown.<br />

• When divided by 3, the remainder is 2.<br />

• When divided by 5, the remainder is 3, and<br />

• When divided by 7, the remainder is 2.<br />

What will be the number <strong>of</strong> things? (Answer: 23… stay tuned why).<br />

63<br />

Chinese Remainder Theorem: Let m_1, m_2, …, m_n∈N be pairwise relatively prime. Then the system x ≡ a_i (mod m_i), 1 ≤ i ≤ n, has a unique solution modulo \( m = \prod_{i=1}^{n} m_i \).<br />

Existence Proof: The proof is by construction. Let M_k = m / m_k, 1 ≤ k ≤ n. Then gcd(M_k, m_k) = 1 (from the pairwise relatively prime condition). By the previous theorem we know that there is a y_k which is an inverse of M_k modulo m_k, i.e., M_k y_k ≡ 1 (mod m_k). To construct the solution, form the sum<br />

x = a_1 M_1 y_1 + a_2 M_2 y_2 + … + a_n M_n y_n.<br />

Note that M_j ≡ 0 (mod m_k) whenever j ≠ k. Hence,<br />

x ≡ a_k M_k y_k ≡ a_k (mod m_k), 1 ≤ k ≤ n.<br />

We have shown that x is a simultaneous solution to the n congruences. qed<br />

64

Sun Tzu’s Puzzle: The remainders are (a_1, a_2, a_3) = (2, 3, 2) from 2 pages earlier. Next, (m_1, m_2, m_3) = (3, 5, 7), m = 3×5×7 = 105, and M_k = m/m_k gives (M_1, M_2, M_3) = (35, 21, 15).<br />

The inverses y_k are<br />

1. y_1 = 2 (M_1 = 35 ≡ 2 modulo 3, and 2×2 ≡ 1 modulo 3).<br />

2. y_2 = 1 (M_2 = 21 ≡ 1 modulo 5).<br />

3. y_3 = 1 (M_3 = 15 ≡ 1 modulo 7).<br />

The solutions to this system are those x such that<br />

x ≡ a_1 M_1 y_1 + a_2 M_2 y_2 + a_3 M_3 y_3 = 2×35×2 + 3×21×1 + 2×15×1 = 233 (mod 105).<br />

Finally, 233 ≡ 23 (mod 105).<br />
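The construction in the existence proof can be replayed in Python. This is a sketch that assumes the moduli are pairwise relatively prime and uses the built-in pow(M_k, -1, m_k), available in Python 3.8 and later, for the inverses; the function name chinese_remainder is illustrative.<br />

```python
from math import prod


def chinese_remainder(residues: list[int], moduli: list[int]) -> int:
    """Solve x ≡ a_k (mod m_k) for pairwise relatively prime moduli."""
    m = prod(moduli)
    x = 0
    for a_k, m_k in zip(residues, moduli):
        M_k = m // m_k
        y_k = pow(M_k, -1, m_k)   # inverse of M_k modulo m_k (Python 3.8+)
        x += a_k * M_k * y_k
    return x % m                  # reduce to the unique solution modulo m


# Sun Tzu's puzzle: remainders 2, 3, 2 for divisors 3, 5, 7.
print(chinese_remainder([2, 3, 2], [3, 5, 7]))
```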

65<br />

Definition: An m×n matrix is a rectangular array of numbers with m rows and n columns. The elements of a matrix A are denoted by A_ij or a_ij. A matrix with m = n is a square matrix. If two matrices A and B have the same number of rows and columns and all of the elements A_ij = B_ij, then A = B.<br />

Definition: The transpose of an m×n matrix A = [A_ij], denoted A^T, is A^T = [A_ji]. A matrix is symmetric if A = A^T and skew symmetric if A = -A^T.<br />

Definition: The i-th row of an m×n matrix A is [A_i1, A_i2, …, A_in]. The j-th column is [A_1j, A_2j, …, A_mj]^T.<br />

Definition: Matrix arithmetic is not exactly the same as scalar arithmetic:<br />

• C = A + B: c_ij = a_ij + b_ij, where A and B are m×n.<br />

• C = A – B: c_ij = a_ij - b_ij, where A and B are m×n.<br />

• C = AB: \( c_{ij} = \sum_{p=1}^{k} a_{ip} b_{pj} \), where A is m×k, B is k×n, and C is m×n.<br />

66

Theorem: A+B = B+A, but AB ≠ BA in general.<br />

Definition: The identity matrix I_n is n×n with I_ii = 1 and I_ij = 0 if i ≠ j.<br />

Theorem: If A is n×n, then A I_n = I_n A = A.<br />

Definition: A^r = AA…A (r times).<br />

Definition: Zero-One matrices are matrices A = [a_ij] such that all a_ij∈{0, 1}. Boolean operations are defined on m×n zero-one matrices A = [a_ij] and B = [b_ij] by<br />

• Meet of A and B: A∧B = [a_ij ∧ b_ij], 1 ≤ i ≤ m and 1 ≤ j ≤ n.<br />

• Join of A and B: A∨B = [a_ij ∨ b_ij], 1 ≤ i ≤ m and 1 ≤ j ≤ n.<br />

• The Boolean product of A and B is C = A⊙B, where A is m×k, B is k×n, and C is m×n, defined by c_ij = (a_i1 ∧ b_1j)∨(a_i2 ∧ b_2j)∨…∨(a_ik ∧ b_kj).<br />

Definition: The Boolean power of an n×n zero-one matrix A is defined by A^[r] = A⊙A⊙…⊙A (r times), where A^[0] = I_n.<br />
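The Boolean product is an ordinary matrix product with ∧ in place of multiplication and ∨ in place of addition. A small Python sketch using nested lists follows. The function name boolean_product and the two example matrices are illustrative choices, not taken from the notes.<br />

```python
def boolean_product(A: list[list[int]], B: list[list[int]]) -> list[list[int]]:
    """Boolean product of an m×k and a k×n zero-one matrix."""
    m, k, n = len(A), len(B), len(B[0])
    if any(len(row) != k for row in A):
        raise ValueError("inner dimensions do not match")
    # c_ij is 1 exactly when a_ip = b_pj = 1 for at least one p.
    return [
        [int(any(A[i][p] and B[p][j] for p in range(k))) for j in range(n)]
        for i in range(m)
    ]


A = [[1, 0], [0, 1], [1, 0]]   # 3×2
B = [[1, 1, 0], [0, 1, 1]]     # 2×3
print(boolean_product(A, B))   # a 3×3 zero-one matrix
```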

67<br />

Induction and Recursion<br />

Principle of Mathematical Induction: Given a propositional function P(n), n∈N, we prove that P(n) is true for all n∈N by verifying<br />

1. (Basis) P(1) is true<br />

2. (Induction) P(k) → P(k+1), ∀k∈N.<br />

Notes:<br />

• Equivalent to [P(1) ∧ ∀k∈N (P(k) → P(k+1))] → ∀n∈N P(n).<br />

• We do not actually assume P(k) is true. It is shown that if it is assumed that<br />

P(k) is true, then P(k+1) is also true. This is a subtle grammatical point with<br />

mathematical implications.<br />

• Mathematical induction is a form <strong>of</strong> deductive reasoning, not inductive<br />

reasoning. The latter tries to make conclusions based on observations and<br />

rules that may lead to false conclusions.<br />

• Sometimes P(1) is not the basis, but some other P(k), k∈Z.<br />

68

• Sometimes P(k) is for a (possibly infinite) subset <strong>of</strong> N or Z.<br />

• Sometimes P(k-1) → P(k) is easier to prove than P(k) → P(k+1).<br />

• Being flexible, but staying within the guiding principle usually works.<br />

• There are many ways <strong>of</strong> proving false results using subtly wrong induction<br />

arguments. Usually there is a disconnect between the basis and induction<br />

parts <strong>of</strong> the pro<strong>of</strong>.<br />

• Examples 10, 11, and 12 in your textbook are worth studying until you<br />

really understand each.<br />

Lemma: \( \sum_{i=1}^{n} (2i-1) = n^2 \) (sum of odd numbers).<br />

Proof: (Basis) Take k = 1, so 1 = 1^2.<br />

(Induction) Assume 1+3+5+…+(2k-1) = k^2 for an arbitrary k ≥ 1. Add 2k+1 to both sides. Then (1+3+5+…+(2k-1))+(2k+1) = k^2+(2k+1) = (k+1)^2.<br />

69<br />

Lemma: \( \sum_{i=0}^{n} 2^i = 2^{n+1} - 1 \).<br />

Proof: (Basis) Take k = 0, so 2^0 = 1 = 2^1 – 1.<br />

(Induction) Assume \( \sum_{i=0}^{k} 2^i = 2^{k+1} - 1 \) for an arbitrary k ≥ 0. Add 2^{k+1} to both sides. Then<br />

\( \sum_{i=0}^{k} 2^i + 2^{k+1} = 2^{k+1} - 1 + 2^{k+1}, \)<br />

which simplifies to<br />

\( \sum_{i=0}^{k+1} 2^i = 2^{k+2} - 1. \)<br />

Principle of Strong Induction: Given a propositional function P(n), n∈N, we prove that P(n) is true for all n∈N by verifying<br />

1. (Basis) P(1) is true<br />

2. (Induction) [P(1)∧P(2)∧…∧P(k)] → P(k+1) is true ∀k∈N.<br />

70

Example: Infinite ladder with reachable rungs. For mathematical or strong<br />

induction, we need to verify the following:<br />

| Step | Mathematical | Strong |
| --- | --- | --- |
| Basis | We can reach the first rung. | We can reach the first rung. |
| Induction | If we can reach an arbitrary rung k, then we can reach rung k+1. | ∀k∈N, if we can reach all k rungs, then we can reach rung k+1. |

We cannot prove that you can climb an infinite ladder using mathematical<br />

induction. Using strong induction, however, you can prove this result using a<br />

trick: since you can prove that you can climb to rungs 1, 2, …, k, it follows that<br />

you can climb 2 rungs arbitrarily, which gets you from rung k-1 to rung k+1.<br />

Rule of thumb: Always use mathematical induction if P(k) → P(k+1) can be proven directly ∀k∈N. Only resort to strong induction when that fails.<br />

71<br />

Fundamental Theorem of Arithmetic: Every n∈N (n > 1) is the product of primes.<br />

Proof: Let P(n) be the proposition that n can be written as the product of primes.<br />

(Basis) P(2) is true: 2 = 2, the product of 1 prime.<br />

(Induction) Assume P(j) is true ∀j with 2 ≤ j ≤ k. We must verify that P(k+1) is true.<br />

Case 1: k+1 is a prime. Hence, P(k+1) is true.<br />

Case 2: k+1 is a composite. Hence k+1 = a•b, where 2 ≤ a ≤ b < k+1. By the induction hypothesis, a and b are each products of primes, so k+1 = a•b is also a product of primes.<br />

Example: Every postage amount ≥ $.12 can be formed using $.04 and $.05 stamp combinations only. We can prove this using modified strong induction.<br />

(Basis) Consider 4 specific cases:<br />

| Postage | Number of $.04’s | Number of $.05’s |
| --- | --- | --- |
| $.12 | 3 | 0 |
| $.13 | 2 | 1 |
| $.14 | 1 | 2 |
| $.15 | 0 | 3 |

Hence, P(j) is true for 12 ≤ j ≤ 15.<br />

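(Induction) Assume P(j) is true for 12 ≤ j ≤ k and k ≥ 15. Then P(k-3) is true, and adding one $.04 stamp to that combination forms postage of k+1 cents, so P(k+1) is true.<br />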

• h(0) = 1, h(n) = n·h(n-1) = n!<br />

• Fibonacci numbers: f_0 = 0, f_1 = 1, f_n = f_{n-1} + f_{n-2}, n > 1.<br />

| n | 0 | 1 | 2 | 3 | 4 |
| --- | --- | --- | --- | --- | --- |
| f(n) | 1 | 6 | 16 | 36 | 76 |
| g(n) | 12 | 1 | -10 | -21 | -32 |
| h(n) | 1 | 1 | 2 | 6 | 24 |
| f_n | 0 | 1 | 1 | 2 | 3 |

74

Theorem: Whenever n ≥ 3, f_n > α^{n-2}, where α = (1+√5)/2.<br />

The pro<strong>of</strong> is by modified strong induction.<br />

Lamé’s Theorem: Let a,b,N (a

Definition: A recursive algorithm solves a problem by reducing it to an instance<br />

<strong>of</strong> the same problem with smaller input(s).<br />

Note: Recursive algorithms can be proven correct using mathematical induction<br />

or modified strong induction.<br />

Examples:<br />

• n! = n•(n-1)!<br />

• a^n = a•(a^{n-1})<br />

• gcd(a,b) with a,b,N (a

• Fibonacci numbers<br />

procedure fib(n: n∈N_0)<br />
if n = 0 then fib(0) := 0<br />
else if n = 1 then fib(1) := 1<br />
else fib(n) := fib(n-1) + fib(n-2)<br />

or it can be defined iteratively:<br />

procedure fib(n: n∈N_0)<br />
if n = 0 then y := 0<br />
else<br />
  x := 0, y := 1<br />
  for i := 1 to n-1<br />
    z := x+y<br />
    x := y<br />
    y := z<br />
{y is f_n}<br />

79<br />

Graphs and trees are important concepts that we will spend a lot <strong>of</strong> time<br />

considering later in the course.<br />

• A graph is made up <strong>of</strong> vertices and edges that connect some <strong>of</strong> the vertices.<br />

• A tree is a special form of a graph, namely it is a connected undirected graph with no simple circuits.<br />

• A rooted tree is a tree with one vertex that is the root and every edge is<br />

directed away from the root.<br />

• A m-ary tree is a rooted tree such that every internal vertex has no more<br />

than m children. If m = 2, it is a binary tree.<br />

• The height <strong>of</strong> a rooted tree T, denoted h(T), is the maximum number <strong>of</strong><br />

levels (or vertices).<br />

• A balanced rooted tree T has all <strong>of</strong> its leaves at h(T) or h(T)-1.<br />

Let T 1 , T 2 , …, T m be rooted trees with roots r 1 , r 2 , …, r m . Let r be another root.<br />

Connecting r to the roots r 1 , r 2 , …, r m constructs another rooted tree T. We can<br />

reformulate this concept using the recursive set methodology.<br />

80

Merge sort is a balanced binary tree method that first breaks a list up recursively into two lists until each sublist has only one element. Then the sublists are recombined, two at a time and in sorted order, until only one sorted list remains.<br />

Note: The height of the tree formed in merge sort is O(log_2 n) for n elements.<br />

Notes:<br />

10, 4, 7, 1<br />

10, 4 7, 1<br />

10 4 7 1<br />

4, 10 1, 7<br />

1, 4, 7, 10<br />

• First three rows do the sublist splitting.<br />

• Last two rows do the merging.<br />

• There are two distinct algorithms at work.<br />

81<br />

procedure merge_sort(L = {a_i}, 1 ≤ i ≤ n)<br />
if n > 1 then<br />
  m := ⌊n/2⌋<br />
  L_1 := {a_i}, 1 ≤ i ≤ m<br />
  L_2 := {a_i}, m+1 ≤ i ≤ n<br />
  L := merge(merge_sort(L_1), merge_sort(L_2))<br />
{L is now the sorted {a_i}, 1 ≤ i ≤ n}<br />

procedure merge(L_1, L_2: sorted lists)<br />
L := ∅<br />
while L_1 and L_2 are both nonempty<br />
  remove the smaller of the first elements of L_1 and L_2 and append it to the end of L<br />
  if either L_1 or L_2 is empty, append the other list to the end of L<br />

{L is the merged, sorted list}<br />
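The two procedures can be sketched in Python as follows. The sketch returns new lists rather than modifying its input and walks the sorted sublists with indices instead of removing elements, which is an implementation choice made here for simplicity.<br />

```python
def merge(left: list, right: list) -> list:
    """Merge two sorted lists into one sorted list."""
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:      # one comparison per appended element
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])          # at most one of these two is nonempty
    merged.extend(right[j:])
    return merged


def merge_sort(items: list) -> list:
    """Split in half, sort each half recursively, then merge."""
    n = len(items)
    if n <= 1:
        return list(items)
    m = n // 2                       # m = floor(n/2)
    return merge(merge_sort(items[:m]), merge_sort(items[m:]))


print(merge_sort([10, 4, 7, 1]))
```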

82

Theorem: If n_i = |L_i|, i = 1,2, then merge requires at most n_1+n_2-1 comparisons. If n = |L|, then merge_sort requires O(n log_2 n) comparisons.<br />

Quick sort is another sorting algorithm that breaks an initial list into many sublists, but using a different heuristic than merge sort. If L = {a_i}, 1 ≤ i ≤ n, with distinct elements, then quick sort recursively constructs two lists: L_1 for all a_i < a_1 and L_2 for all a_i > a_1, with a_1 appended to the end of L_1. This continues recursively until each sublist has only one element. Then the sublists are recombined in order to get a sorted list.<br />

Note: On average, the number of comparisons is O(n log_2 n) for n elements, but can be O(n^2) in the worst case. Quick sort is one of the most popular sorting algorithms used in academia.<br />

Exercise: Google “quick sort, C++” to see many implementations or look in<br />

many <strong>of</strong> the 200+ C++ primers. Defining quick sort is in Rosen’s exercises.<br />
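For comparison with the C++ versions, here is a minimal Python sketch of the quick sort idea described above. It assumes distinct elements and builds new lists instead of partitioning in place, so it is a teaching version, not the usual in-place implementation.<br />

```python
def quick_sort(items: list) -> list:
    """Quick sort on a list of distinct elements, using the first element as pivot."""
    if len(items) <= 1:
        return list(items)
    pivot = items[0]
    smaller = [x for x in items[1:] if x < pivot]   # L_1 without the pivot
    larger = [x for x in items[1:] if x > pivot]    # L_2
    # The pivot goes after everything smaller and before everything larger.
    return quick_sort(smaller) + [pivot] + quick_sort(larger)


print(quick_sort([3, 8, 1, 4, 7, 2]))
```

An already sorted input makes one sublist empty at every level, which is the O(n^2) worst case.<br />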


83<br />

Counting, Permutations, and Combinations<br />

Product Rule Principle: Suppose a procedure can be broken down into a sequence of k tasks. If there are n_i, 1 ≤ i ≤ k, ways to do the i-th task, then there are \( \prod_{i=1}^{k} n_i \) ways to do the procedure.<br />

Sum Rule Principle: Suppose a procedure can be done by performing exactly one of k tasks. If there are n_i, 1 ≤ i ≤ k, ways to do the i-th task, with each way unique, then there are \( \sum_{i=1}^{k} n_i \) ways to do the procedure.<br />

Exclusion (Inclusion) Principle: If the sum rule cannot be applied because the<br />

ways are not unique, we use the sum rule and subtract the number <strong>of</strong> duplicate<br />

ways.<br />

Note: Mapping the individual ways onto a rooted tree and counting the leaves is<br />

another method for summing. The trees are not unique, however.<br />

84

Examples:<br />

• Consider 3 students in a classroom with 10 seats. There are 10×9×8 = 720 ways to assign the students to the seats.<br />

• We want to appoint 1 person to fill out many, many forms that the administration wants filled in by today. There are 3 students and 2 faculty members who can fill out the forms. There are 3+2 = 5 ways to choose 1 person. (Duck fast.)<br />

• How many variables are legal in the original Dartmouth BASIC computer language? Variables are 1 or 2 alphanumeric characters long, begin with A-Z, are case independent, and are not one of the 5 two-character reserved words in BASIC. We use a combination of the three counting principles:<br />

o 1 character variables: V_1 = 26<br />

o 2 character variables: V_2 = 26×36 - 5 = 931<br />

o Total: V = V_1 + V_2 = 957<br />

85<br />

Pigeonhole Principle: If there are k∈N boxes and at least k+1 objects placed in the boxes, then there is at least one box with more than one object in it.<br />

Theorem: If f: D → E is a function such that |D| > k and |E| = k, then f is not 1-1.<br />

The proof is by the pigeonhole principle.<br />

Theorem (Generalized Pigeonhole Principle): If N objects are placed in k boxes, then at least one box contains at least ⌈N/k⌉ objects.<br />

Proof: First recall that ⌈N/k⌉ < (N/k)+1. Now suppose that none of the boxes contains more than ⌈N/k⌉ - 1 objects. Hence, the total number of objects is at most<br />

k(⌈N/k⌉ - 1) < k(((N/k)+1)-1) = N. →←<br />

Hence, the theorem must be true (proof by contradiction).<br />

Theorem: Every sequence of n^2+1 distinct real numbers contains a subsequence of length n+1 that is either strictly increasing or strictly decreasing.<br />

86

Examples: From a standard 52 card playing deck.<br />

• How many cards must be dealt to guarantee that 4 cards from the same suit are dealt?<br />

o The boxes are the k = 4 suits. The GPP Theorem requires ⌈N/4⌉ ≥ 4, which first holds at N = 13.<br />

o This is the real minimum: with N = 12 we could be dealt exactly 3 cards from each suit.<br />

• How many cards must be dealt to guarantee that 4 clubs are dealt?<br />

o GPP Theorem does not apply.<br />

o The product rule and inclusion principles apply: 3×13+4 = 43 since all of the hearts, spades, and diamonds could be dealt before any clubs.<br />

Definition: A permutation of a set of distinct objects is an ordered arrangement of these objects. An r-permutation is an ordered arrangement of r of these objects.<br />

Example: Given S = {0,1,2}, the arrangement 2,1,0 is a permutation and 0,2 is a 2-permutation of S.<br />

87<br />

Theorem: If n,r∈N with r ≤ n, then there are P(n,r) = n×(n-1)×(n-2)×…×(n-r+1) = n!/(n-r)! r-permutations of a set of n distinct elements. Further, P(n,0) = 1.<br />

The pro<strong>of</strong> is by the product rule for r

Theorem: The number of r-combinations of a set with n elements with n,r∈N_0 and r ≤ n is \( C(n,r) = \binom{n}{r} \).<br />

Proof: The r-permutations can be formed using C(n,r) r-combinations and then ordering each r-combination, which can be done in P(r,r) ways. So,<br />

P(n,r) = C(n,r)×P(r,r)<br />

or<br />

\( C(n,r) = \frac{P(n,r)}{P(r,r)} = \frac{n!/(n-r)!}{r!/(r-r)!} = \frac{n!}{r!\,(n-r)!}. \)<br />

Theorem: C(n,r) = C(n,n-r) for 0 ≤ r ≤ n.<br />
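The formulas for P(n,r) and C(n,r) are easy to evaluate directly. The Python sketch below mirrors the proof by computing C(n,r) as P(n,r)/P(r,r); the function names P and C follow the notation of the notes, and the sketch assumes 0 ≤ r ≤ n.<br />

```python
from math import factorial


def P(n: int, r: int) -> int:
    """Number of r-permutations of n distinct elements: n!/(n-r)!."""
    return factorial(n) // factorial(n - r)


def C(n: int, r: int) -> int:
    """Number of r-combinations of n elements: P(n,r)/P(r,r)."""
    return P(n, r) // P(r, r)


print(P(10, 3))                                        # seat 3 students in 10 seats
print(all(C(12, r) == C(12, 12 - r) for r in range(13)))  # symmetry C(n,r) = C(n,n-r)
```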

Definition: A combinatorial proof of an identity is a proof that uses counting arguments to prove that both sides of the identity count the same objects, but in different ways.<br />

89<br />

Binomial Theorem: Let x and y be variables. Then for n∈N,<br />

\( (x+y)^n = \sum_{j=0}^{n} \binom{n}{j} x^{n-j} y^j. \)<br />

Proof: Expanding the product, all terms are of the form x^{n-j} y^j for j = 0,1,…,n. To count the number of terms for x^{n-j} y^j, note that we have to choose n-j x’s from the n sums so that the other j terms in the product are y’s. Hence, the coefficient for x^{n-j} y^j is \( \binom{n}{n-j} = \binom{n}{j} \).<br />

Example: What is the coefficient of x^12 y^13 in (x+y)^25? \( \binom{25}{13} = 5{,}200{,}300 \).<br />

Corollary: Let n∈N_0. Then \( \sum_{k=0}^{n} \binom{n}{k} = 2^n \).<br />

Proof: \( 2^n = (1+1)^n = \sum_{k=0}^{n} \binom{n}{k} 1^k 1^{n-k} = \sum_{k=0}^{n} \binom{n}{k} \).<br />

90

Corollary: Let n∈N. Then \( \sum_{k=0}^{n} (-1)^k \binom{n}{k} = 0 \).<br />

Proof: \( 0 = 0^n = ((-1)+1)^n = \sum_{k=0}^{n} \binom{n}{k} (-1)^k 1^{n-k} = \sum_{k=0}^{n} (-1)^k \binom{n}{k} \).<br />

Corollary: \( \binom{n}{0} + \binom{n}{2} + \binom{n}{4} + \cdots = \binom{n}{1} + \binom{n}{3} + \binom{n}{5} + \cdots \)<br />

Corollary: Let n∈N_0. Then \( \sum_{k=0}^{n} 2^k \binom{n}{k} = 3^n \).<br />

Theorem (Pascal’s Identity): Let n,k∈N with n ≥ k. Then \( \binom{n+1}{k} = \binom{n}{k-1} + \binom{n}{k} \).<br />

If we allow repetitions in the permutations, then all of the previous theorems and corollaries no longer apply. We have to start over.<br />

Theorem: The number of r-permutations of a set with n objects and repetition is n^r.<br />

Proof: There are n ways to select an element of the set for each of the r positions in the r-permutation. Using the product principle completes the proof.<br />

Theorem: There are C(n+r-1,r) = C(n+r-1,n-1) r-combinations from a set with n elements when repetition is allowed.<br />

Example: How many solutions are there to x_1+x_2+x_3 = 9 for x_i∈N_0? C(3+9-1,9) = C(11,9) = C(11,2) = 55. Only when constraints are placed on the x_i can we possibly find a unique solution.<br />
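The count in the example can be confirmed by brute force. The Python sketch below enumerates every triple of nonnegative integers and compares the result with C(n+r-1, r). The function name count_solutions is illustrative, and the approach is only practical for small cases like this one.<br />

```python
from itertools import product
from math import comb


def count_solutions(total: int, variables: int) -> int:
    """Count nonnegative integer solutions of x_1 + ... + x_variables = total."""
    return sum(
        1
        for xs in product(range(total + 1), repeat=variables)
        if sum(xs) == total
    )


n, r = 3, 9   # 3 variables (element types), 9 units to distribute
print(count_solutions(r, n) == comb(n + r - 1, r))
```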

Definition: The multinomial coefficient is \( C(n; n_1, n_2, \ldots, n_k) = \frac{n!}{\prod_{i=1}^{k} n_i!} \).<br />

93<br />

Theorem: The number of different permutations of n objects, where there are n_i, 1 ≤ i ≤ k, indistinguishable objects of type i, is C(n; n_1, n_2, …, n_k).<br />

Theorem: The number of ways to distribute n distinguishable objects in k distinguishable boxes so that n_i objects are placed into box i, 1 ≤ i ≤ k, is C(n; n_1, n_2, …, n_k).<br />

Theorem: The number of ways to distribute n distinguishable objects in k indistinguishable boxes is<br />

\( \sum_{j=1}^{k} \frac{1}{j!} \sum_{i=0}^{j-1} (-1)^i \binom{j}{i} (j-i)^n. \)<br />

Multinomial Theorem: If n∈N, then<br />

\( \left( \sum_{i=1}^{k} x_i \right)^n = \sum_{n_1+n_2+\cdots+n_k=n} C(n; n_1, n_2, \ldots, n_k)\, x_1^{n_1} x_2^{n_2} \cdots x_k^{n_k}. \)<br />

94

Generating permutations and combinations is useful and sometimes important.<br />

Note: We can place any n-set into a 1-1 correspondence with the first n natural<br />

numbers. All permutations can be listed using {1, 2, …, n} instead <strong>of</strong> the actual<br />

set elements. There are n! possible permutations.<br />

Definition: In the lexicographic (or dictionary) ordering, the permutation a_1a_2…a_n of {1,2,…,n} precedes b_1b_2…b_n if and only if, for some k with 1 ≤ k ≤ n, a_1 = b_1, a_2 = b_2, …, a_{k-1} = b_{k-1}, and a_k < b_k.<br />

Examples:<br />

• 5 elements. The permutation 21435 precedes 21543.<br />

• Given 362541, then 364125 is the next permutation lexicographically.<br />

95<br />

Algorithm: Generate the next permutation in lexicographic order.<br />

procedure next_perm(a_1a_2…a_n: a_i∈{1,2,…,n} and distinct)<br />
j := n – 1<br />
while a_j > a_{j+1}<br />
  j := j – 1<br />
{j is the largest subscript with a_j < a_{j+1}}<br />
k := n<br />
while a_j > a_k<br />
  k := k – 1<br />
{a_k is the smallest integer greater than a_j to the right of a_j}<br />
Swap a_j and a_k<br />
r := n, s := j+1<br />
while r > s<br />
  Swap a_r and a_s<br />
  r := r – 1, s := s + 1<br />
{This puts the tail end of the permutation after the j-th position in increasing order}<br />
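A Python sketch of the same procedure follows, using 0-based indices. Unlike the pseudocode, it returns None when the input is already the last permutation (all entries decreasing); that guard is an addition made here so the loop cannot run off the front of the list.<br />

```python
def next_permutation(perm):
    """Return the next permutation in lexicographic order, or None if there is none."""
    a = list(perm)
    n = len(a)
    j = n - 2
    while j >= 0 and a[j] > a[j + 1]:
        j -= 1                      # find the largest j with a[j] < a[j+1]
    if j < 0:
        return None                 # perm is the last permutation
    k = n - 1
    while a[j] > a[k]:
        k -= 1                      # smallest entry right of j that exceeds a[j]
    a[j], a[k] = a[k], a[j]
    a[j + 1:] = reversed(a[j + 1:])  # tail was decreasing; reverse to increasing
    return a


print(next_permutation([3, 6, 2, 5, 4, 1]))   # the example 362541 from the notes
```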

96

Algorithm: Generating the next r-combination in lexicographic order.<br />

procedure next_r_combination(a_1a_2…a_r: subset of {1,2,…,n} not equal to {n-r+1,…,n}, with a_1 < a_2 < … < a_r)<br />
i := r<br />
while a_i = n-r+i<br />
  i := i – 1<br />
a_i := a_i + 1<br />
for j := i+1 to r<br />
  a_j := a_i + j - i<br />

Example: Let S = {1, 2, …, 6}. Given the 4-combination {1, 2, 5, 6}, the next 4-combination is {1, 3, 4, 5}.<br />
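The same steps in Python, with 0-based indices, so the test a_i = n-r+i becomes a[i] == n - r + i + 1. The sketch takes the combination as an increasing list and returns None for the last combination {n-r+1, …, n}; both conventions are assumptions made for this illustration.<br />

```python
def next_combination(combination, n):
    """Next r-combination of {1,...,n} in lexicographic order, or None if last."""
    a = list(combination)
    r = len(a)
    i = r - 1
    while i >= 0 and a[i] == n - r + i + 1:
        i -= 1                      # skip positions already at their maximum value
    if i < 0:
        return None                 # combination is {n-r+1, ..., n}
    a[i] += 1
    for j in range(i + 1, r):
        a[j] = a[i] + (j - i)       # reset the tail to the smallest legal values
    return a


print(next_combination([1, 2, 5, 6], 6))   # the example from the notes
```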

97<br />

<strong>Discrete</strong> Probability<br />

Definition: An experiment is a procedure that yields one <strong>of</strong> a given set <strong>of</strong><br />

possible outcomes.<br />

Definition: The sample space <strong>of</strong> the experiment is the set <strong>of</strong> (all) possible<br />

outcomes.<br />

Definition: An event is a subset <strong>of</strong> the sample space.<br />

First Assumption: We begin by only considering finitely many possible<br />

outcomes.<br />

Definition: If S is a finite sample space of equally likely outcomes and E⊆S is an event, then the probability of E is p(E) = |E| / |S|.<br />

98

Examples:<br />

• I randomly chose an exam1 to grade. What is the probability that it is one of the Davids? Thirty-one students took exam1, of which five were Davids. So, p(David) = 5 / 31 ≈ 0.16.<br />

• Suppose you are allowed to choose 6 numbers from the first 50 natural numbers. The probability of picking the correct 6 numbers in a lottery drawing is 1/C(50,6) = (44!×6!) / 50! ≈ 6.29×10^-8. This lottery is just a regressive tax designed for suckers and starry eyed dreamers.<br />

Definition: When sampling, there are two possible methods: with and without<br />

replacement. In the former, the full sample space is always available. In the<br />

latter, the sample space shrinks with each sampling.<br />

99<br />

Example: Let S = {1, 2, …, 50}. What is the probability <strong>of</strong> sampling {1, 14, 23,<br />

32, 49}?<br />

• Without replacement: p({1,14,23,32,49}) = 1 / (50$49$48$47$46) =<br />

3.93$10 -9 .<br />

• With replacement: p({1,14,23,32,49}) = 1 / (50$50$50$50$50) = 3.20$10 -9 .<br />

Definition: If E is an event, then \(\overline{E} = S - E\) is the complementary event.<br />

Theorem: \(p(\overline{E}) = 1 - p(E)\) for a sample space S.<br />

Proof: \(p(\overline{E}) = (|S| - |E|)/|S| = 1 - |E|/|S| = 1 - p(E)\).<br />

Example: Suppose we generate n random bits. What is the probability that at least one of the bits is 0? Let E be the event that a bit string has at least one 0 bit. Then \(\overline{E}\) is the event that all n bits are 1. \(p(E) = 1 - p(\overline{E}) = 1 - 2^{-n} = (2^n - 1)/2^n\).<br />

Note: Computing p(E) directly, by counting the strings with exactly one 0, exactly two 0's, and so on, is far more tedious.<br />
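A brute-force enumeration confirms the complement rule for small n. This is a sketch only; the function name is illustrative, and exact fractions are used to avoid rounding.

```python
from fractions import Fraction
from itertools import product

def p_at_least_one_zero(n: int) -> Fraction:
    """Count n-bit strings containing at least one 0 by enumeration."""
    strings = list(product("01", repeat=n))
    favorable = sum(1 for s in strings if "0" in s)
    return Fraction(favorable, len(strings))

# Compare the direct count with 1 - 2^(-n) from the complement rule.
for n in range(1, 6):
    print(n, p_at_least_one_zero(n), 1 - Fraction(1, 2 ** n))
```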

100

Theorem: Let E and F be events in a sample space S. Then<br />

\(p(E \cup F) = p(E) + p(F) - p(E \cap F)\).<br />

Proof: Recall that \(|E \cup F| = |E| + |F| - |E \cap F|\). Hence,<br />

\(p(E \cup F) = |E \cup F|/|S| = (|E| + |F| - |E \cap F|)/|S| = p(E) + p(F) - p(E \cap F)\).<br />

Example: What is the probability that an element of the set {1, 2, …, 100} is divisible by 2 or 3? Let E and F be the sets of elements divisible by 2 and 3, respectively. Then |E| = 50, |F| = 33, and \(|E \cap F| = 16\). Hence, \(p(E \cup F) = (50 + 33 - 16)/100 = 0.67\).<br />
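The counts in this example can be reproduced with Python sets. This is a minimal sketch, and the helper `p` is just shorthand for |A| / |S|.

```python
from fractions import Fraction

S = range(1, 101)
E = {x for x in S if x % 2 == 0}   # divisible by 2
F = {x for x in S if x % 3 == 0}   # divisible by 3

def p(A) -> Fraction:
    """Probability of event A under equally likely outcomes in S."""
    return Fraction(len(A), len(S))

print(len(E), len(F), len(E & F))
print(p(E | F), p(E) + p(F) - p(E & F))   # both sides of the theorem
```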

101<br />

Second Assumption: Now suppose that the outcomes are not equally likely, so the probability of an outcome need not be 1 / |S|. In this case we must assign a probability to each possible outcome, either by setting a specific value or defining a function.<br />

Definition: For a sample space S with a finite or countable number of outcomes, we assign a probability p(s) to each outcome \(s \in S\) such that<br />

(1) \(0 \le p(s) \le 1\) for all \(s \in S\), and<br />

(2) \(\sum_{s \in S} p(s) = 1\).<br />

Notes:<br />

1. When |S| = n, the formulas (1) and (2) can be rewritten using n.<br />

2. When \(|S| = \infty\) and is uncountable, integral calculus is required for (2).<br />

3. When \(|S| = \infty\) and is countable, the sum in (2) is true in the limit.<br />

102

Example: Coin flipping with events H and T.<br />

• S = {H, T} for a fair coin. Hence, p(H) = p(T) = 0.5.<br />

• S = {H, H, T} for a weighted coin. Then p(H) = 0.67 and p(T) = 0.33.<br />

Definition: Suppose that S is a set with n elements. The uniform distribution<br />

assigns the probability 1/n to each element in S.<br />

Definition: The probability of the event E is the sum of the probabilities of the outcomes in E, i.e., \(p(E) = \sum_{s \in E} p(s)\).<br />

Note: When \(|E| = \infty\), the sum \(\sum_{s \in E} p(s)\) must be convergent in the limit.<br />
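For a finite sample space, a probability assignment can be stored as a table and an event's probability found by summing. The loaded die below is a hypothetical example, not taken from the notes, and the helper names are illustrative.

```python
from fractions import Fraction

# Hypothetical loaded die: face 6 is three times as likely as any other face.
p = {face: Fraction(1, 8) for face in range(1, 6)}
p[6] = Fraction(3, 8)

def is_distribution(p) -> bool:
    """Check that every value lies in [0, 1] and that the values sum to 1."""
    return all(0 <= v <= 1 for v in p.values()) and sum(p.values()) == 1

def prob(event, p) -> Fraction:
    """p(E): the sum of p(s) over the outcomes s in E."""
    return sum((p[s] for s in event), Fraction(0))

print(is_distribution(p))
print(prob({2, 4, 6}, p))   # probability of rolling an even face
```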

Definition: The experiment of selecting an element from a sample space S with a uniform distribution is known as selecting an element from S at random.<br />

We can prove that (1) \(p(\overline{E}) = 1 - p(E)\) and (2) \(p(E \cup F) = p(E) + p(F) - p(E \cap F)\) using the more general probability definitions.<br />

103<br />

Definition: Let E and F be events with p(F) > 0. The conditional probability of E given F is defined by \(p(E|F) = p(E \cap F)/p(F)\).<br />

Example: A bit string of length 3 is generated at random. What is the probability that there are two 0 bits in a row given that the first bit is 0? Let F be the event that the first bit is 0. Let E be the event that there are two 0 bits in a row. Note that \(E \cap F\) = {000, 001}, so \(p(E \cap F) = 2/8 = 0.25\), and p(F) = 0.5. Hence, p(E|F) = 0.25 / 0.5 = 0.5.<br />
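The conditional probability in this example can be verified by listing all eight strings. This is a sketch only; `p` is shorthand for the uniform probability |A| / |S|.

```python
from fractions import Fraction
from itertools import product

S = ["".join(bits) for bits in product("01", repeat=3)]
F = {s for s in S if s[0] == "0"}   # first bit is 0
E = {s for s in S if "00" in s}     # two 0 bits in a row

def p(A) -> Fraction:
    return Fraction(len(A), len(S))

p_E_given_F = p(E & F) / p(F)
print(sorted(E & F), p_E_given_F)
```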

Definition: The events E and F are independent if \(p(E \cap F) = p(E)p(F)\).<br />

Note: When p(F) > 0, independence is equivalent to having p(E|F) = p(E).<br />

Example: Suppose E is the event that a bit string begins with a 1 and F is the event that there is an even number of 1’s. Suppose the bit strings are of length 3. There are 4 bit strings beginning with 1: {100, 101, 110, 111}. There are 4 strings with an even number of 1’s, since zero is even: {000, 011, 101, 110}. Hence, p(E) = 0.5 and p(F) = 0.5. \(E \cap F\) = {101, 110}, so \(p(E \cap F) = 0.25 = p(E)p(F)\). Hence, E and F are independent.<br />

104

Note: For bit strings of length 4, \(0.25 = p(E \cap F) = (0.5) \cdot (0.5) = p(E)p(F)\), so the events are again independent. The length does not matter: for any length n ≥ 2, flipping the last bit pairs each string having an even number of 1’s with one having an odd number, both within S and within E, so p(F) = 0.5 and \(p(E \cap F) = 0.25\).<br />
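Independence claims of this kind are easy to test by enumeration. The sketch below lists every bit string of length n, counting a string with zero 1's as having an even number of 1's; the function name `event_probabilities` is illustrative.

```python
from fractions import Fraction
from itertools import product

def event_probabilities(n: int):
    """Return (p(E), p(F), p(E and F)) for uniformly random n-bit strings."""
    S = ["".join(bits) for bits in product("01", repeat=n)]
    E = {s for s in S if s[0] == "1"}                 # begins with a 1
    F = {s for s in S if s.count("1") % 2 == 0}       # even number of 1's

    def p(A) -> Fraction:
        return Fraction(len(A), len(S))

    return p(E), p(F), p(E & F)

for n in (3, 4):
    pE, pF, pEF = event_probabilities(n)
    print(n, pE, pF, pEF, pEF == pE * pF)
```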

Definition: Each performance of an experiment with exactly two outcomes, denoted success (S) and failure (F), is a Bernoulli trial.<br />

Definition: The binomial distribution is denoted \(b(k; n,p) = C(n,k)\,p^k q^{n-k}\), where q = 1 – p.<br />

Theorem: The probability of exactly k successes in n independent Bernoulli trials, with probability of success p and failure q = 1 – p, is b(k; n,p).<br />

Proof: When n Bernoulli trials are carried out, the outcome is an n-tuple \((t_1, t_2, \ldots, t_n)\) with each \(t_i \in \{S, F\}\). Because the trials are independent, the probability of each outcome having k successes and n-k failures is \(p^k q^{n-k}\). There are C(n,k) possible tuples that contain exactly k successes and n-k failures.<br />

105<br />

Example: Suppose we generate bit strings of length 10 such that p(0) = 0.7 and p(1) = 0.3 and the bits are generated independently. Then the probabilities of exactly eight and exactly seven 0 bits are<br />

• \(b(8; 10, 0.7) = C(10,8)(0.7)^8(0.3)^2 = 45 \cdot 0.05764801 \cdot 0.09 \approx 0.2335\)<br />

• \(b(7; 10, 0.7) = C(10,7)(0.7)^7(0.3)^3 = 120 \cdot 0.0823543 \cdot 0.027 \approx 0.2668\)<br />
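The formula b(k; n,p) translates directly into code. This is a minimal sketch using floating point, so results are approximate; the function name `b` simply mirrors the notation in the notes.

```python
from math import comb

def b(k: int, n: int, p: float) -> float:
    """Probability of exactly k successes in n independent Bernoulli trials."""
    q = 1 - p
    return comb(n, k) * p ** k * q ** (n - k)

print(round(b(8, 10, 0.7), 4))   # exactly eight 0 bits
print(round(b(7, 10, 0.7), 4))   # exactly seven 0 bits

# Summing over every possible k should give 1, up to rounding error.
print(sum(b(k, 10, 0.7) for k in range(11)))
```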

Theorem: \(\sum_{k=0}^{n} b(k; n,p) = 1\).<br />

Proof: \(\sum_{k=0}^{n} b(k; n,p) = \sum_{k=0}^{n} C(n,k)\,p^k q^{n-k} = (p+q)^n = 1\) by the binomial theorem.<br />

Definition: A random variable is a function from the sample space of an experiment to the set of reals.<br />

Notes:<br />

• A random variable assigns a real number to each possible outcome.<br />

• A random variable is a function; it is neither a variable nor random.<br />

106

Example: Flip a fair coin twice. Let X(t) be the random variable that equals the number of tails that appear when t is the outcome. Then<br />

X(HH) = 0, X(HT) = X(TH) = 1, and X(TT) = 2.<br />

Definition: The distribution of a random variable X on a sample space S is the set of pairs (r, p(X=r)) for all \(r \in X(S)\), where p(X=r) is the probability that X takes the value r.<br />

Note: A distribution is usually described by specifying p(X=r) for all \(r \in X(S)\).<br />

Example: For our coin flip example above, each outcome has probability 0.25.<br />

Hence,<br />

p(X=0) = 0.25, p(X=1) = 0.5, and p(X=2) = 0.25.<br />
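The distribution of X can be tabulated by applying X to every outcome and grouping equal values. This sketch assumes a fair coin, so all four outcomes are equally likely; the variable names are illustrative.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

outcomes = ["".join(flips) for flips in product("HT", repeat=2)]

def X(t: str) -> int:
    """Random variable: the number of tails in outcome t."""
    return t.count("T")

# Count how many outcomes map to each value r, then divide by |S|.
counts = Counter(X(t) for t in outcomes)
distribution = {r: Fraction(c, len(outcomes)) for r, c in sorted(counts.items())}
print(distribution)
```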

107<br />

Definition: The expected value (or expectation) of the random variable X(s) in the sample space S is \(E(X) = \sum_{s \in S} p(s)X(s)\).<br />

n<br />

i=1<br />

Note: If \(S = \{x_i\}_{i=1}^{n}\)