CONTENTS - Department of Mathematics and Statistics - University ...

CONTENTS - Department of Mathematics and Statistics - University ...

CONTENTS - Department of Mathematics and Statistics - University ...

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

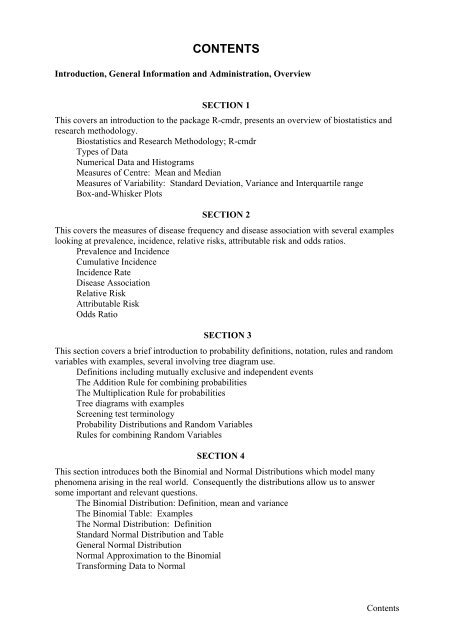

CONTENTS

Introduction, General Information and Administration, Overview

SECTION 1

This section introduces the package R-cmdr and presents an overview of biostatistics and research methodology.

Biostatistics and Research Methodology; R-cmdr

Types of Data

Numerical Data and Histograms

Measures of Centre: Mean and Median

Measures of Variability: Standard Deviation, Variance and Interquartile Range

Box-and-Whisker Plots

SECTION 2

This section covers the measures of disease frequency and disease association, with several examples looking at prevalence, incidence, relative risks, attributable risk and odds ratios.

Prevalence and Incidence

Cumulative Incidence

Incidence Rate

Disease Association

Relative Risk

Attributable Risk

Odds Ratio

SECTION 3

This section gives a brief introduction to probability definitions, notation, rules and random variables, with examples, several of which use tree diagrams.

Definitions, including mutually exclusive and independent events

The Addition Rule for combining probabilities

The Multiplication Rule for probabilities

Tree diagrams with examples

Screening test terminology

Probability Distributions and Random Variables

Rules for combining Random Variables

SECTION 4

This section introduces both the Binomial and Normal distributions, which model many phenomena arising in the real world. Consequently, these distributions allow us to answer some important and relevant questions.

The Binomial Distribution: Definition, mean and variance

The Binomial Table: Examples

The Normal Distribution: Definition

Standard Normal Distribution and Table

General Normal Distribution

Normal Approximation to the Binomial

Transforming Data to Normal

SECTION 5

This section defines sampling distributions, establishes the standard deviations of these distributions (called standard errors), and sets up confidence intervals for population means, differences between the means of two populations, proportions, and differences between proportions, based on random samples drawn from the populations.

An Outline of the Research Process

The Distribution of Sample Means

The Standard Error of the Mean

Confidence Interval for a Mean

The t-distribution and Its Use

Comparison of Two Independent Groups

The Standard Error of the Difference Between Two Means

Pooled Estimate for the Common Variance

Comparison of Two Dependent Groups (Paired Data)

Confidence Interval for a Proportion

Confidence Interval for Difference Between Two Proportions

Summary of Distributions and Confidence Intervals

SECTION 6

This section reviews hypothesis testing, type 1 and type 2 errors, conclusive and inconclusive results, and the power of a study.

Null and Alternative Hypotheses

Study-Based and Data-Driven Hypotheses

One- and Two-Sided Tests

Four Steps in the Hypothesis Testing Procedure

Examples

Pooled Proportion Estimate

Clinical and Ecological Importance

Conclusive and Inconclusive Results

Errors in Hypothesis Testing

Power of a Study

Examples

SECTION 7

One-factor analysis of variance

Tests on means following analysis of variance

Multiple comparison procedures

SECTION 8

This section covers the analysis of count data, including the chi-square test for contingency and the chi-square test for trend, as well as relative risks, attributable risks and odds ratios along with their confidence intervals. The analysis of a three-way table and Simpson’s paradox are investigated as a way of introducing the concept of a confounding variable in the lead-up to regression analyses.

Categorical Data Examples

Relative Risk and its Confidence Interval

Attributable Risk and its Confidence Interval

Odds Ratio and its Confidence Interval

Chi-square Test for Contingency

Chi-square Test for Trend

Interpretation of Confidence Intervals

Simpson’s Paradox and Confounder Control

SECTION 9

This section introduces simple linear regression, which fits a straight line through the points of a scatter diagram. One purpose of this analysis is to establish whether a predictor variable influences the outcomes of a response variable, and to measure the magnitude of the effect of this predictor variable on the outcome. The fitted straight line can also be used to make predictions.

Simple linear regression is also the first step in controlling for a confounding variable. This occurs with the extension to multiple regression, which will be considered in the next section.

Scatter Diagrams and Examples

Equation of Fitted Straight Line

Analysis of Variance for Regression Model

Confidence Interval for Slope

Confidence Interval for Prediction

Correlation as Measure of Linear Association

Review Exercises

SECTION 10

Multiple regression models and logistic regression models are introduced in this section. In ordinary multiple regression the response (outcome) variable is measured on a continuous scale, whereas in logistic regression the outcome is binary, taking only two possible values, interpreted as success versus failure.

The models allow us to identify which variables have an effect on the outcomes and which do not.

Adding further variables leads to adjusted values for the estimated parameters, and it is this adjustment that allows us to control for confounding.

The Multiple Regression Model

R-cmdr Printout for Multiple Regression

Dummy Variables

Checking Model Fit

Parallel Regression Lines and Analysis of Covariance

Binary Outcomes and Logistic Regression

Study Design Principles

Critical Appraisal

Confounding Analysis

Sources of Bias

SECTION 11

Appendix 1: The Basics – mathematical rules and statistical concepts

Appendix 2: Some summaries

Appendix 3: Formulae

STAT115 INTRODUCTION TO BIOSTATISTICS 2012

Advances in our understanding of factors which affect health and wellbeing come through research in the health sciences. Examples of such research include surveys to describe patterns of disease in a community or risk factors for disease such as diet and smoking; studies to find out whether a newly developed treatment works; studies of factors which may prevent disease, such as physical activity; and studies of barriers to improving health, such as the reasons for declining vaccination rates in children, or barriers to smoking prevention. Biostatistics (statistics applied in the health sciences) is a vital tool in our mission to improve health and wellbeing for all people.

STAT115 provides an introduction to the core principles and methods of biostatistics. In this course you will gain an understanding of how statistics is used to answer research questions: how to look for patterns in data, and how to test hypotheses about disease causation, disease prevention and improvement in wellbeing. The understanding and skills gained in STAT115 can be a starting point for a career in biostatistics, or can help you understand research in other disciplines including physiology, anatomy, human nutrition, sports science and psychology.

GENERAL INFORMATION AND ADMINISTRATION

Lecturers

Dr Katrina Sharples, Dept of Preventive and Social Medicine, Adams Building

Dr Janine Wright, Room 237, Science III building

Mr Daniel Turek, Room 231, Science III building

Dr David Bryant, Room 514, Science III building

Lectures

Lectures are held on Monday, Tuesday, Thursday and Friday at 11.00 am, commencing Monday 9 July. Although these notes are extensive, experience shows that students who miss lectures are at a severe disadvantage.

Help Sessions and Tutorials

These will be held in the 539 Castle St Laboratory, which has 36 computers. Tutorials are cafeteria style, which means that you can attend at any scheduled time when tutors are available to help with the weekly exercises. Times can be found on the STAT115 paper page on the Mathematics and Statistics Department website. In addition, you may use the computers to complete assignments outside scheduled sessions. Attend early in the week to avoid the inevitable rush before submission day.

STAT 115 Web Page and Resource Area

The STAT 115 web page, www.maths.otago.ac.nz/stat115, will contain course resource material. Answers to weekly exercises, notices, old exam papers with solutions and any other useful information will be posted here. You can access this information by clicking on the Resources button. You are strongly advised to read through the solutions to the weekly exercises, as students who fail to do this are at a severe disadvantage.

Introduction & overview

Support Classes

There is also a Wednesday evening support class for students who are worried about their mathematics background for this course. This class will be held in 539 Castle St at 6 pm on Wednesday evenings. If you wish to attend the support class you will need to register using the form which is available on the resource page or from the Maths and Statistics Reception, Science III, 2nd floor. Our experience is that only a small number of students will need to use the support class. Note that there is no mathematics prerequisite for this course. If you have difficulty carrying out the calculations in the Basics Booklet in Appendix 1 of these notes, you may find it helpful to attend the support class. In addition, you can access Mathercize by going to the web page mathercize.otago.ac.nz, log-in password line. The options “STAT115 Exercises for Biostatistics” and “STAT115 Revision mathematics” will take you through background material for this course in an easy-to-use self-testing environment.

Study Centre

A Study Centre will operate in a room at the back of 539 Castle St. This is an area where you can go to work with fellow students. Statistics help will also be available at the times shown on the door.

References

There is no set text for the course, as this course booklet contains all the necessary material. Multiple copies of the book Harraway, J. Introductory Statistical Methods for Biological, Health and Social Sciences (University of Otago Press) are on reserve at the Loans Desk in the Science Library; the first 17 chapters are relevant for this course. A second book is on close reserve: Clark, M.J. and Randal, J.A. A First Course in Applied Statistics (Pearson).

Computing

The R Commander (R-cmdr) package will be used in tutorials. No prior knowledge of the package is needed, as a handout and full instructions will be available in the tutorials. All students will have their own User Name and Password: the User Name is the name on your student ID card and the Password is your student ID number.

Time Commitment

STAT 115 is a one-semester course worth 18 points. Students are expected to spend an average of 12 hours per week on this course. After allowing four hours per week for attending lectures, this leaves eight hours for other course-related activities such as assignments, reading notes and revising.

Calculators

There is no restriction on the type of calculator that can be used, except that no device with communication capability will be accepted as a calculator.

Course content (in approximate lecture order)

Introduction (2 lectures): research methods and study design; designed experiments versus observational studies; case-control, cohort and intervention studies.

Data description and presentation (6 lectures): the use of R Commander; histograms, box-and-whisker plots, measures of centre and spread of data, measures of disease frequency and association.

Probability (8 lectures): the nature of random variation; diagnostic tests; probability distributions including the binomial and normal distributions.

Estimation (5 lectures): sampling distributions; confidence intervals for means, differences and proportions.

Hypothesis testing (3 lectures): classical procedures for means, proportions and differences; the p-value; statistical vs clinical significance; power and sample size.

Analysis of variance (3 lectures): completely randomised design; Bonferroni procedure for multiple comparisons.

Categorical data (4 lectures): tests for association; rates, relative risk and risk differences, odds ratios; confidence intervals for relative risk and odds ratio.

Regression and correlation (5 lectures): the simple linear regression model; tests on the slope; predictions; confidence intervals for predictions; correlation.

Multiple regression (4 lectures): tests on the estimated parameters; dummy variables for qualitative predictors; parallel regressions and control of confounding.

Ethics and study design (7 lectures): ethical issues, bias and confounding.

Internal Assessment

There will be eight assignments and three mastery tests. Each assessment will have a mark recorded out of 20. These assessments will be administered online. The assignments can be completed anywhere you have an internet connection. The mastery tests will be conducted in the Castle St Computer Laboratory, and a booking system will operate for half-hour slots in which to attempt the tests. Cutoff times for each assignment will be announced in lectures.

Exam format

A three-hour exam will produce a mark out of 100.

Final mark

In your overall mark we will count your exam mark for 2/3 of the total and the internal assessment for 1/3. However, if your final exam mark out of 100 is greater than this combined mark, we will use just the final exam mark. That is, the final mark F will be calculated as:

$$F = \max\left\{E,\ \frac{2E + A}{3}\right\}$$

where E (exam mark) is out of 100 and A (internal assessment) is out of 100. The internal assessment mark will be made up 1/3 from the eight assignments and 2/3 from the three mastery tests.
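
The final-mark rule can be checked with a few lines of code. The Python sketch below is illustrative only: the function names are hypothetical, and it assumes (the notes do not say this) that each mark out of 20 is converted to a percentage and that the marks within the assignment group and within the mastery-test group are averaged with equal weight before the 1/3 and 2/3 weighting is applied. The course itself uses R-cmdr; Python is used here only as a self-contained illustration.

```python
# Sketch of the STAT115 final-mark rule, F = max{E, (2E + A)/3}.
# ASSUMPTION (not stated in the notes): each mark out of 20 is turned into a
# percentage and marks within each group are averaged with equal weight.


def internal_assessment(assignments, mastery_tests):
    """Return A, the internal assessment mark out of 100 (hypothetical helper)."""
    if len(assignments) != 8 or len(mastery_tests) != 3:
        raise ValueError("expected 8 assignment marks and 3 mastery test marks")
    # Average each group as a percentage; every individual mark is out of 20.
    assignment_pct = sum(assignments) / (20 * len(assignments)) * 100
    mastery_pct = sum(mastery_tests) / (20 * len(mastery_tests)) * 100
    # 1/3 from the assignments, 2/3 from the mastery tests.
    return assignment_pct / 3 + 2 * mastery_pct / 3


def final_mark(exam, internal):
    """Return F = max(E, (2E + A)/3), with E and A both out of 100."""
    return max(exam, (2 * exam + internal) / 3)


A = internal_assessment([18] * 8, [15] * 3)
print(A)                   # prints 80.0
print(final_mark(62, A))   # prints 68.0
print(final_mark(85, A))   # prints 85
```

For example, a student averaging 18/20 on the assignments (90%) and 15/20 on the mastery tests (75%) has A = 90/3 + 2(75)/3 = 80. With an exam mark of 62, F = max(62, (124 + 80)/3) = 68; with an exam mark of 85 the weighted mark is about 83.3, so the exam mark alone is used and F = 85.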

Email Contact with Students

From time to time lecturers may wish to email students taking STAT 115. This will be done using your student email address, so you should check that address regularly. If you use another address, you might like to arrange for emails sent to your student address to be forwarded automatically.

Disability and Impairment Support

The Department of Mathematics and Statistics encourages students to seek support if they find they are having difficulty with their studies due to a disability, temporary or permanent impairment, injury, chronic illness or deafness.

Contact either the Course Convenor or Disability Information and Support:

Telephone 479 8235

Email: disabilities@otago.ac.nz

Website: http://www.otago.ac.nz/disabilities

Plagiarism

Students should make sure that all submitted work is their own. “Plagiarism is a form of dishonest practice. Plagiarism is defined as copying or paraphrasing another’s work and presenting it as one’s own” (University Council, December 2004). In practice this means that plagiarism includes any attempt in any piece of submitted work (e.g. an assignment or test) to present as one’s own work the work of another (whether of another student or a published authority). Any student found to be responsible for plagiarism in any piece of work submitted for assessment shall be subject to the University’s dishonest practice regulations, which may result in various penalties, including forfeiture of marks for the piece of work submitted, a zero grade for the paper or, in extreme cases, exclusion from the University.

SURV 102 Computational Methods for Surveyors

Students enrolled in SURV102 will attend lectures in STAT115 for four weeks beginning on Monday 23 July.

A separate notice about assessment in SURV102 will be made in the Surveying Department.

Biostatistics and Health Research - An Overview

1 Health Research

Billions of dollars are spent every year in a quest to improve human health and well-being. The broad goal of this quest is to acquire new knowledge to help prevent, detect, diagnose and treat disease.

What sort of knowledge do we look for?

What causes a disease?

Once you have a disease, what happens?

Who has the disease?

What is the best strategy for treatment or prevention?

How do societal factors affect health?

What causes a disease?

Understanding the factors which lead to the development of disease gives ideas about how to prevent disease. For example:

• Drinking water is treated to kill bacteria, viruses and other contaminants such as giardia.

• Our ability to prevent heart disease has improved with our understanding of specific dietary components which increase risk, and with our understanding of how exercise works to reduce risk.

• The realization that the cause of AIDS was a virus (HIV), which could be transmitted through sexual intercourse and blood transfusions, led to prevention strategies to reduce transmission. These included use of condoms, screening of blood products, and drugs to reduce transmission from mother to baby.

• Understanding how and when sports injuries occur helps to develop rules of play and training schedules which reduce the injury burden.

Once you have a disease, what happens?

Understanding how a disease progresses gives ideas about how to cure disease, prolong survival or improve quality of life. For example:

• Understanding how HIV affects the immune system has led to the development of drugs such as zidovudine, which prevent the virus from reproducing and seem to slow the destruction of the immune system.

• Understanding how bacteria work allowed the development of different types of antibiotics with different actions.

• Cancer develops when cells in a part of the body begin to grow out of control. Knowledge of the cell cycle was important in developing cancer drugs (chemotherapy) which act mainly on actively reproducing cells.

Who has the disease?

Detecting who has a disease and diagnosing disease are the first steps in delivering effective treatments. For example:

• Development of non-invasive technologies for looking inside the body (such as ultrasound, CT scans and MRI) provided techniques for making the initial diagnosis of cancer, or for identifying the form of damage to a knee following injury.

• Tests which look at cells from biopsies or blood can give a more accurate diagnosis of cancer than the non-invasive technologies.

• We identify people with HIV infection through a blood test which detects antibodies to the virus.

What is the best strategy for treatment or prevention?

Once we have developed a new treatment or approach to prevention, we need to evaluate its risks and benefits before it is made available for use. For example:

• Exercise and balance programmes have been demonstrated to reduce the risk of falling in the elderly.

• The statin family of drugs has been demonstrated to reduce the risk of death from cardiovascular disease.

• Evaluations of the use of beta-carotene (which the body converts to vitamin A) found that, contrary to expectations, it did not prevent lung cancer; in fact, it increased the risk of lung cancer.

How do societal factors affect health?

Working with individuals can lead to significant improvements in health, but societal factors can also have an impact.

• Societal attitudes to alcohol and smoking can make it difficult for individuals to change behaviour.

• Understanding how societal factors operate is important for developing systems of health care.

Where does knowledge come from?

During the last century we gained an enormous amount of knowledge, but there are still many gaps.

• Cancer and cardiovascular disease still end many people’s lives prematurely.

• Back pain is very common, and we are still not very good at treating or preventing it.

• Diabetes is becoming increasingly common, particularly among Maori and Pacific Island populations. It has many serious health consequences.

• New diseases provide additional challenges. HIV/AIDS, a disease thought to have jumped the species barrier into humans, has had an enormous impact. Avian influenza is common in birds in Asia and can cause severe disease in humans, but does not currently spread directly from human to human. However, it would take only a small change in the genome of the virus to make it highly infectious amongst humans.

Knowledge can come from ‘experience’ or ‘research’

Experience is a very unreliable way of obtaining knowledge. Humans are not objective; our recall is very selective. The history of medicine is littered with treatments that doctors, through their own experience, were convinced worked, but which time has shown to be ineffective or harmful in many of the settings where they were used: bloodletting, ground woodlice, mercury, arsenic and so on. These treatments were widely used centuries ago, but there are more modern examples.

• An early treatment for heart attack, where blood flow to part of the heart muscle is blocked, involved sprinkling powdered asbestos on to the heart to increase blood flow to the affected areas. It was never truly shown to work, but thousands of these operations were done.

• Hormone replacement therapy was widely used, initially for treatment of the symptoms of menopause, but it was also believed to reduce the risk of heart disease in postmenopausal women. However, a recently published study found that it in fact increased the risk of heart disease.

That leaves research.

2 The Research Process and Biostatistics

What is research?

Research is a systematic process for providing answers to questions.

Examples of research questions:

• What are the causes of meningococcal meningitis?

• What is the best treatment strategy for chronic back pain?

• What are the genetic events that lead to childhood cancer?

• Can this new drug improve survival in people with colon cancer?

• What is the role of selenium, as an antioxidant, in protection against risk factors for cardiovascular disease?

• To what extent do western diet and exercise habits need to change in order to reduce insulin resistance?

• Does this conditioning programme reduce serious knee injury in team sports?

Biostatistics is the field concerned with the development and application of statistical methods to research in health-related fields, including medicine, public health and biology. Since early in the twentieth century, biostatistics has become an indispensable tool for health research.

Statistics is often defined as the art and science of collecting, summarising, presenting and interpreting data. It is a set of techniques which formally implement the fundamental principles of the scientific method. The scientific method underlies the research process: observation and theories lead to the development of hypotheses. We work out the best test of the hypothesis, then collect data and determine to what extent the data are consistent with the hypothesis.

The research process

When we carry out research we often collect data on a sample, or subgroup, from a population. Our goal is to use the information collected on that sample to draw inferences about the larger population.

[Figure: Underlying populations, Sample, Statistics, Inference]

Examples

• We use the frequency with which diabetes occurs in a sample to estimate the frequency with which diabetes occurs in the population the sample came from.

• We study a new treatment in a subgroup of patients in order to be able to make claims about the effects of the treatment in all such patients.
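
The diabetes example describes using a sample to learn about a population. The short Python simulation below is a sketch of that idea under stated assumptions: it builds a hypothetical population of 100,000 people with an assumed diabetes prevalence of about 7%, draws simple random samples of different sizes, and compares each sample proportion with the population value. The population size, prevalence, seeds and function names are illustrative assumptions, not data or methods from these notes; in the course itself such work is done in R-cmdr.

```python
import random

# Sketch of inference from a sample to a population.
# HYPOTHETICAL set-up: a simulated population of 100,000 people in which the
# prevalence of diabetes is assumed to be about 7%. These are illustrative
# assumptions, not data from the course notes.


def make_population(size, prevalence, seed=1):
    """Return a list of 0/1 values (1 = has diabetes) for a simulated population."""
    rng = random.Random(seed)
    return [1 if rng.random() < prevalence else 0 for _ in range(size)]


def sample_proportion(population, n, seed=2):
    """Draw a simple random sample of size n without replacement and
    return the proportion of sampled people who have diabetes."""
    rng = random.Random(seed)
    sample = rng.sample(population, n)
    return sum(sample) / n


population = make_population(size=100_000, prevalence=0.07)
# In a real study this value is unknown; we can compute it only because we simulated it.
true_p = sum(population) / len(population)

for n in (50, 500, 5000):
    estimate = sample_proportion(population, n)
    print(f"n = {n:5d}: sample estimate = {estimate:.3f}, population value = {true_p:.3f}")
```

With one fixed seed the three estimates are not guaranteed to be ordered by accuracy, but repeating the sampling with many different seeds shows that estimates from larger samples tend to cluster more tightly around the population value.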

Steps in the research process<br />

Development <strong>of</strong> the research questions<br />

Design <strong>of</strong> the study<br />

Collection <strong>of</strong> information<br />

Data description <strong>and</strong> analysis<br />

Interpretation <strong>of</strong> results<br />

Ideas for research come from many places – from reading the literature, observation <strong>and</strong><br />

clinical experience, from talking to colleagues <strong>and</strong> from just sitting <strong>and</strong> thinking.<br />

The first step is to refine the idea into a question, or series of questions, which can be answered in a single study; that is, we need to be able to design a study to answer the question. The question may be framed as a hypothesis. For example, we might wish to answer the question “Does a low fat diet reduce the risk of diabetes?” The hypothesis would be “A low fat diet reduces the risk of diabetes”. We then need to work out how best to test the hypothesis.

The study design specifies the methods for selecting people (or other units) for the study and for collecting the information that will be used to answer the questions. It needs to be feasible and ethical. We need to identify which study designs can give us appropriate data, and how to maximize our chance of being able to distinguish a true relationship from random noise.

Once we have collected the data we use statistical methods to describe and analyse the data and interpret the results. The analysis and the interpretation of the results will depend on the study design.

Biostatisticians work with scientists to identify and implement the correct statistical methods for designing studies and analysing and interpreting the results.

3. Introduction to study design

Understanding where data come from is vital for making sensible choices about statistical analysis. At this stage in the course we will give an overview of some of the study designs that are commonly used in epidemiology and clinical research. We will return to this material in the second half of the course.

There are several different ways to classify study designs, and several specific ‘named’ study designs. It can be confusing since different epidemiology books use the terms differently. The classifications and definitions exist to help us think about the strengths and weaknesses of a particular study for addressing the research questions. The differences in the ways the definitions are used arise because textbooks emphasize the relative strengths and weaknesses a little differently.


3.1 Classifications of Study Designs

1. Descriptive versus analytic

This classification relates to the primary aims or objectives of the study. Where the study aims to test a hypothesis we say the study is analytic. For example, does this vaccine reduce the risk of meningococcal disease? Here we hypothesize a relationship between vaccine and risk of meningococcal disease (we hypothesize that vaccine reduces risk) and aim to test that hypothesis. Analytic studies are studies which test hypotheses.

Descriptive studies are used where the aims are simply to describe something, with no prespecified hypothesis. For example, if we wish to describe trends in incidence of meningococcal disease over time we carry out a descriptive study. Here there are no prespecified hypotheses about the reasons for a change over time.

Many descriptive studies in epidemiology describe patterns of disease in populations. This can provide clues about causes of disease and lead on to further studies. The standard approach is to examine the characteristics of disease according to time, place and person:

• TIME: a descriptive study can be repeated in order to examine trends over time. Examples: epidemics; seasonality, eg influenza.
• PLACE: many diseases vary according to country, or even within countries. Examples: breast cancer incidence by country; multiple sclerosis and latitude.
• PERSON: characteristics of people with the disease can be studied, for instance age, sex, ethnic group, socioeconomic group and occupation. Example: heart disease in New Zealand according to age, sex and ethnic group.

2. Experimental versus observational

In experimental studies the investigators intervene in the natural order (hence the alternative name intervention study). The investigator decides the exact nature of the intervention, chooses a control strategy, and decides who will receive the intervention under study and who will be part of the control group. The goal is to control the conditions so that the effect of interest can be isolated and studied. For example, if investigators want to know whether a drug (nevirapine) reduces maternal-infant transmission of HIV, they can construct an experiment which isolates the effect of the drug from any other factors which might affect the risk of transmission. The extent to which we can isolate the effect of the intervention (eg the drug) determines how good the experiment is. Of course, ethics are a fundamental consideration.

In observational studies we simply observe a naturally occurring process without intervening. It is much harder to test a hypothesis in an observational study, but for many research questions in the health sciences it is not ethical or feasible to conduct an experiment. We aim to design our observational studies to get as close as possible to the information we would have got if the experiment could have been done.

3. Randomised versus non-randomised (applies to experiments only)

Experiments should always have a control group as well as a group (or groups) which gets the intervention(s) under study. Randomisation is a process we can use to allocate people to either the intervention group or the control group. The simplest version of randomisation is like flipping a coin: each person has a 50% chance of being in the intervention group. Careful use of randomisation gives the best test of a hypothesis.
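Allocation by "flipping a coin" can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a trial-ready allocation system: the participant IDs and the baseline ages are invented. It also shows one reason randomisation helps: a characteristic recorded before allocation (here a hypothetical age variable) ends up with a similar average in both groups, because only chance decides who goes where.

```python
import random
from statistics import mean


def coin_flip_allocation(participant_ids, rng):
    """Simple randomisation: each participant independently has a 50% chance
    of being allocated to the intervention group."""
    return {pid: ("intervention" if rng.random() < 0.5 else "control")
            for pid in participant_ids}


rng = random.Random(2024)  # fixed seed so the allocation can be reproduced
ids = list(range(1, 10_001))
# Hypothetical baseline characteristic (age in years), recorded before allocation.
age = {pid: rng.gauss(45, 15) for pid in ids}

allocation = coin_flip_allocation(ids, rng)
groups = {"intervention": [], "control": []}
for pid, arm in allocation.items():
    groups[arm].append(age[pid])

for arm, ages in groups.items():
    print(f"{arm:12s} n = {len(ages):5d}  mean age = {mean(ages):.1f}")
```

Simple randomisation does not guarantee exactly equal group sizes: with 10,000 participants each group will be close to 5,000, but with small numbers the imbalance between groups can be noticeable.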


In some experiments the investigators use a method other than randomisation to decide who will be in the intervention group and who will be in the control group. For example, in a community intervention study the investigators might choose a set of communities to get the intervention (often those interested or with structures in place to take part), and then choose a matched set of control communities. Experiments like this which are non-randomised are sometimes referred to as quasi-experiments. Sometimes they are the only practical alternative, but they never provide the same strength of evidence as a randomised trial.

Note that the process of randomisation is not the same as random sampling. The purpose of random sampling is to select a single group which is representative of a population (see below); randomisation instead divides the people already in a study into groups that differ only by chance.

4. Cross-sectional versus longitudinal

This classification refers to the data themselves and the (calendar) time points or periods about which the information is collected. For example, we might do a study looking at the relationship between oral contraceptive use and coronary heart disease. Fully cross-sectional data would refer to one point in (calendar) time. For example, in a survey we might ask: do you have coronary heart disease today? Are you taking oral contraceptives today? Note that if we are collecting data on existing disease we are working with the prevalence of coronary heart disease rather than the incidence of coronary heart disease, and so cross-sectional data are not very good for testing hypotheses about the causes of disease. (The exposures may have changed after the disease was diagnosed.)

Longitudinal data have some time course present. The ideal for testing hypotheses about disease causation is to get information about things that occurred before the disease developed. Often the best we can do is collect information about exposures that occurred before diagnosis of disease, since the time between developing disease and diagnosis is often unclear. Longitudinal studies collect information over a period of time, eg exposures which occur before disease is diagnosed.

5. Study unit

The majority of studies in epidemiology collect data on individuals. However, there are some where the ‘unit’ under study is something bigger, such as a family, a community or a country. In some studies it is the group that is of interest, not the individual, and we might want to test a hypothesis relating to the group (an analytic study). For example, the COMMIT study asked: does a community prevention programme reduce the prevalence of smoking in the community? The intervention is carried out at the community level, and we can evaluate it by examining whether the prevalence of smoking in the community changes. Note that the outcome data are collected on individuals (whether someone smokes or not), to measure the effect of the intervention in a community.

3.2 Common study designs in epidemiology and clinical research

1. Case report

A case report usually describes the occurrence of disease in one person. The purpose is to alert others to the fact that this combination of factors can occur, and to encourage people to keep a look out for other similar cases. Such case reports (to a central registry) led to the initial recognition of AIDS. Case reports are always descriptive and observational. The cross-sectional versus longitudinal classification doesn’t really apply, but they could be considered ‘longitudinal’ in the sense that they may collect data on the person’s experience over time.

2. Case series

A case series takes a group of people with a recognised disease and describes patterns among them. A study of the initial case series of men diagnosed with AIDS recognised a common dysfunction of the immune system, and that the disease occurred in gay men, injecting drug users and blood product recipients. This led to the hypothesis that it was caused by a transmissible agent, and gave clues as to the modes of transmission. Case series are always descriptive and observational, and are generally cross-sectional, but could be longitudinal if they describe changes in individuals over time.

3. Descriptive study using population data

Many descriptive epidemiological studies make use of data that are collected routinely on a population. These include census data, death certificates, data reported to cancer registries, hospital morbidity and mortality data, and infectious disease data reported as ‘notifiable’ diseases. Provided the data sources are reliable, they can provide valuable descriptions of the disease (or risk factor) experience in a population. These studies are descriptive and observational.

4. Sample survey

Where data are collected specifically for a research study, we generally collect data for only a sample (subset) of the population of interest. This gives the opportunity to collect more information about each person, at the cost of the random variation that comes with sampling from a population. There are many ways to go about selecting a sample. In quantitative research we generally choose random samples. In a random sample everyone has a known chance of being selected for the study; this allows us to use statistical methods (such as confidence intervals) to quantify the influence of random error, and hence to make valid inferences about the population the sample came from. Random sampling gives us the best chance of getting a sample which is representative of the population.

The simplest type of random sample is a simple random sample, where everyone has the same chance of being chosen. We can also draw stratified samples or cluster samples. In stratified sampling we divide the population into groups (or strata), for example ethnic groups. We then choose to sample a fixed number from each stratum to ensure all groups are adequately represented in the study. For example, we might wish to choose the same number of people from each ethnic group to ensure we have enough data for reliable estimates in each group.
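The difference between a simple random sample and a stratified sample with a fixed number per stratum can be sketched in Python as follows. The sampling frame, the group labels A, B and C, and the group sizes are all hypothetical. The sketch also shows that in the stratified design each person's chance of selection is still known (the number sampled divided by the stratum size), but it differs between strata, so people in smaller groups are more likely to be selected.

```python
import random
from collections import Counter

# Hypothetical sampling frame: (person_id, group) pairs with unequal group sizes.
frame_sizes = {"A": 8000, "B": 1500, "C": 500}
frame = [(f"{g}{i}", g) for g, size in frame_sizes.items() for i in range(size)]


def simple_random_sample(frame, n, rng):
    """Every person has the same chance, n / len(frame), of selection."""
    return rng.sample(frame, n)


def stratified_sample(frame, n_per_stratum, rng):
    """Draw a fixed number of people at random from within each stratum.
    Returns the sample and each stratum's selection probability."""
    strata = {}
    for person in frame:
        strata.setdefault(person[1], []).append(person)
    sample = []
    for members in strata.values():
        sample.extend(rng.sample(members, n_per_stratum))
    probs = {g: n_per_stratum / len(members) for g, members in strata.items()}
    return sample, probs


rng = random.Random(1)  # fixed seed for reproducibility
srs = simple_random_sample(frame, 300, rng)
strat, probs = stratified_sample(frame, 100, rng)

print("simple random sample:", Counter(g for _, g in srs))
print("stratified sample:   ", Counter(g for _, g in strat))
print("selection probability in stratified design:", probs)
```

With this frame, a simple random sample of 300 would be expected to contain only about 15 people from group C (5% of the frame), whereas the stratified design guarantees 100 from each group.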

Cluster sampling is used where we can’t easily select a sample of individuals. For example, if we wish to study children, we can’t select a simple random sample because we have no list of children from which to select the sample. One approach commonly used is to select schools at random, classrooms within a school at random, and children from a class at random.
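The school, classroom and child approach can be sketched as a multistage sample in Python. The structure below (40 schools, each with 6 classrooms of 25 children) and the numbers selected at each stage are hypothetical. In practice a list of children is only needed for the classrooms actually selected; here the whole structure is simulated for convenience. Each child still has a known chance of selection, equal to the product of the chances at each stage.

```python
import random

# Hypothetical structure: 40 schools, each with 6 classrooms of 25 children.
schools = {
    f"school{s}": {f"class{c}": [f"s{s}c{c}child{k}" for k in range(25)]
                   for c in range(6)}
    for s in range(40)
}


def multistage_sample(schools, n_schools, n_classes, n_children, rng):
    """Select schools at random, then classrooms within each selected school,
    then children within each selected classroom."""
    selected = []
    for school in rng.sample(sorted(schools), n_schools):
        classrooms = schools[school]
        for room in rng.sample(sorted(classrooms), n_classes):
            selected.extend(rng.sample(classrooms[room], n_children))
    return selected


rng = random.Random(7)  # fixed seed for reproducibility
children = multistage_sample(schools, n_schools=10, n_classes=2, n_children=5, rng=rng)
print(len(children), "children sampled, eg", children[:3])

# Chance for any one child = P(school) * P(classroom | school) * P(child | classroom)
print("selection probability per child:", (10 / 40) * (2 / 6) * (5 / 25))
```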

A true survey generally means getting people to fill in a questionnaire. However, the idea has been extended to include other forms of data collection: we may take measurements of height and weight, carry out fitness tests and blood tests, and so on.


These studies are most often descriptive but can be analytic; they are observational, and can be cross-sectional or longitudinal.

5. Cross-sectional study

In epidemiology the term cross-sectional study often refers to a survey. The data are often not fully cross-sectional according to the definition above. For example, we might carry out a survey of use of hormone replacement therapy (HRT) among New Zealand women. Such a survey would generally ask about past life experiences and past use of HRT, rather than just current use, which gives a longitudinal element to the data. When the study collects information about disease status, it is generally prevalent disease. So while cross-sectional studies can be used to test hypotheses, they are not very good for testing hypotheses about disease causation.

6. Case-control study

Two groups:
• Group with disease (cases)
• Group free from disease (controls)

In a case-control study, people are selected for the study according to whether they have the disease of interest (cases) or not (controls). Generally case-control studies identify incident cases and collect information about the cases’ experiences before diagnosis of disease, and for an equivalent time period for the controls. Case-control studies are sometimes called retrospective studies because information is collected about exposures that occurred in the past. For example, a case-control study of cervical cancer selected a group of women with cervical cancer and a control group of women who did not have cervical cancer. Information was collected about past experiences which were hypothesised to be related to risk of cervical cancer, including number of sexual partners. Case-control studies are analytic, observational and longitudinal.
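Because a case-control study fixes the numbers of cases and controls, the natural first comparison is how common a past exposure was in each group (the proportion of cases exposed versus the proportion of controls exposed), rather than the risk of disease. The minimal Python sketch below uses invented counts; they are not taken from the cervical cancer study or any other real study.

```python
from dataclasses import dataclass


@dataclass
class Group:
    label: str
    exposed: int    # number reporting the past exposure of interest
    unexposed: int  # number not reporting it

    def proportion_exposed(self) -> float:
        """Fraction of the group who reported the exposure."""
        return self.exposed / (self.exposed + self.unexposed)


# Hypothetical counts for illustration only.
cases = Group("cases (with disease)", exposed=60, unexposed=40)
controls = Group("controls (without disease)", exposed=35, unexposed=65)

for g in (cases, controls):
    print(f"{g.label}: {g.proportion_exposed():.0%} exposed")
```

A higher proportion exposed among the cases than among the controls suggests an association between the exposure and the disease.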

7. Cohort study

A group of people is observed over a period of time in order to measure the frequency of the disease being investigated. A cohort study starts by documenting exposures and then measuring the subsequent risk of developing disease, according to exposure. Cohort studies aim to identify associations between exposure to suspected causal agents and the development of disease. The cohort may be selected by taking a random sample from a population (eg the Scottish Heart Study), by selecting some geographical areas (eg the Framingham study) or by taking a particular group (eg the British Doctors study, the Nurses’ Health Study). Researchers may also identify an exposed group of interest (eg people working in a particular industry) and find an appropriate control group who are not exposed to the substance under study. Exposure can be measured at the beginning of the study (baseline) and also periodically during the follow-up period. The entire cohort is followed up to determine if and when disease develops.
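Because a cohort study documents exposure first and then follows everyone up, the risk of disease can be calculated directly in each exposure group: the number who develop disease divided by the number followed up. The Python sketch below assumes everyone is followed for the same period with no losses to follow-up, and the counts are invented for illustration.

```python
# Hypothetical cohort follow-up counts, for illustration only.
cohort = {
    "exposed":   {"followed": 2000, "developed_disease": 90},
    "unexposed": {"followed": 8000, "developed_disease": 120},
}


def risk(group):
    """Proportion of the group who developed disease during follow-up."""
    return group["developed_disease"] / group["followed"]


for name, group in cohort.items():
    print(f"{name:9s} risk = {risk(group):.3f}")
```

Unlike the case-control design, the numbers with and without disease are not fixed by the investigator here, which is why the risk in each exposure group can be estimated directly.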

8. Randomised controlled trial (RCT)

In a randomised controlled trial a group of study participants is selected and then randomly allocated to one or more intervention groups (who get the intervention under study) and a control group. Since group allocation is entirely by chance, this is the best approach for getting groups which are comparable in all respects. This means that if there is a difference in outcome between the groups it can be attributed to the intervention (provided other aspects of the study are well carried out).

9. Clinical trial

This is the term used for an experiment which evaluates a treatment. Clinical trials are often, but not always, randomised controlled trials.

10. Prevention trial

This is the term used for an experiment used to evaluate a prevention strategy. Prevention trials can be randomised controlled trials.

11. Community intervention study

This is the term used for a study to evaluate a community intervention. Such studies are usually experiments, but are often not randomised, and may not involve a control group.

4. Content of STAT115

Learning aims and objectives

By the end of the course students should
• be aware of the appropriate use of common study designs and their strengths and weaknesses
• be able to describe the information contained in a data set
• be able to carry out common statistical data analyses
• be able to interpret the results of common statistical analyses in the context of the particular study design used
• be aware of ethical issues relating to research involving humans
• be able to critically evaluate selected research articles published in health sciences journals.

The material in this course will provide skills for interpreting research in your chosen field of study, as well as some basic skills for analysing data that you collect through course projects or labs using a computer and a statistical software package. If you have mathematical skills, and are stimulated by the idea of being involved in health research, you may wish to pursue a career in biostatistics. There are many jobs available for biostatisticians, in New Zealand and overseas. Most biostatisticians are employed in research groups at universities or in government, or in pharmaceutical or biotech companies.

Types of research questions covered in STAT 115

There are many types of research question in the health sciences:
• Laboratory studies: research involves understanding how cells and cell components work, identifying compounds which can be used to treat disease, and how these compounds affect cells.
• Animal studies: used as models for humans.
• Human studies:
  – anatomy and physiology consider the structure and function of the human body
  – clinical research asks questions relating to patient care, including evaluation of new treatments
  – epidemiology is the study of the distribution and causes of disease
• Studies of public health: the science and art of promoting health, preventing disease and prolonging life through organised efforts of society.
• Studies of society:
  – medical sociology examines topics such as the social aspects of physical and mental illness, physician-patient relationships, the organization and structure of health organizations and the socio-economic basis of the health care system.

In STAT 115 we will focus on research questions involving humans, mainly clinical research and epidemiology. There are many research questions in these areas which can be understood without specialised knowledge. In the other areas, particularly laboratory studies, an in-depth understanding of the field (eg biochemistry, molecular biology, anatomy or physiology) is needed to understand the research questions.

Studying humans brings particular challenges, and it is these challenges which have driven the specialised development of biostatistics from its statistical basis. The challenges arise from the more complex ethical issues in research involving humans, as well as the complexities of the biological system and the consequent research questions we wish to answer.


SECTION 1

This covers an introduction to the package R-cmdr, and presents an overview of biostatistics and research methodology.

Biostatistics and Research Methodology; R-cmdr
Types of Data
Numerical Data and Histograms
Measures of Centre: Mean and Median
Measures of Variability: Standard Deviation, Variance and Interquartile range
Box-and-Whisker Plots


Section 1

Biostatistics and research: an overview

Course aim:
An introduction to the core biostatistical methods essential to the health sciences
• scientific method
• design of research studies
• description and analysis of data

The scientific method underpins the design of research studies. Sound research design is vital for obtaining reliable information. A major part of this course is about techniques for describing data and understanding the principles of analysis. This enables us to make sense of the mass of information collected in a research study.


Learning aims and objectives

By the end of the course students should
• be aware of the appropriate use of common study designs and their strengths and weaknesses
• be able to describe the information contained in a data set
• be able to carry out common statistical data analyses
• be able to interpret the results of common statistical analyses in the context of the particular study design used
• be aware of ethical issues relating to research involving humans
• be able to critically evaluate selected research articles published in health sciences journals


Goal of health sciences professions

To improve the health and well-being of individuals and communities

This involves
• treatment of disease
• prevention of disease
• promotion of health

In order to do this we need knowledge about
• causes of disease
• diagnosis
• disease processes
• effectiveness of treatments
• societal factors which affect health


Examples of current gaps in knowledge
• causes of meningococcal meningitis: how to prevent? Vaccine?
• SARS, avian influenza: new diseases
• back pain: not good at treating
• cancer: nasty treatments for child cancer
• diabetes: common in Pacific communities
• cardiovascular disease: common cause of death
• prevention of overweight and obesity
• effective promotion of behaviour change: prevention of smoking

Knowledge may come from
• teaching
• experience
• research


Research

A process for providing answers to questions for which the answer is not immediately available

General research areas
• What are the causes of meningococcal meningitis?
• Can we develop a vaccine to prevent SARS?
• What are the genetic events which lead to childhood cancer?
• Can a new drug improve survival in people with colorectal cancer?
• How can we prevent childhood overweight and obesity?
• What are the main factors affecting quality of life of people with a chronic illness?

Research provides a systematic process for answering these questions.


Iron Deficiency – Should NZ Parents be Concerned?
[Dr Elaine Ferguson, Dept of Human Nutrition]

A survey randomly selecting 323 children aged 6-24 months in Dunedin, Christchurch and Invercargill.

Aims:
• To assess the prevalence of iron deficiency.
• To explore factors associated with low body iron stores.

Possible factors are:

Categorical:
• Sex
• Ethnicity
• Maternal education
• Household income
• Breast feeding

Continuous:
• Age
• Meat intake

Regression methods are used as well as procedures for summarising data.


Does early childhood circumcision reduce the risk of acquiring genital herpes?
[Dr Nigel Dickson, Dept of Preventive and Social Medicine]
• Cohort of over 1000 births in 1972 in Dunedin.
• Called the Dunedin Multidisciplinary Health and Development Study.
• Does early circumcision reduce the risk of genital herpes?
• Initially this appears to be the case, but it is an observational study.
• Number of sexual partners is a confounder.
• When the confounder is allowed for, early circumcision no longer appears to be protective.
• Designed experiments (clinical trials) have been set up in Africa to investigate the effect of circumcision on HIV.


The research process

The objective for most studies is to use data from a sample to draw inferences about a larger population:

[Figure: statistics calculated from a sample are used to make inferences about the underlying population.]

Examples:
• we use the frequency with which a disease occurs in a sample to estimate the frequency with which the disease occurs in the population
• we study a new treatment in a group of patients in order to be able to make claims about the effects of the treatment in all such patients


Steps in the research process:
1. Development of the research question
2. Design of the study
3. Collection of information
4. Data description and analysis
5. Interpretation of results

• the research question
  - needs to be framed very carefully
  - must be specific enough to be answerable by a research study
• the study design
  - is determined by the research question
  - describes the methods used to collect the information
• analysis and interpretation
  - depend on the study design


Research questions relevant to this course:

Epidemiology: the study of the distribution and determinants of disease frequency
Clinical research: the study of questions relating to the care of patients

Descriptive questions:
• What is the distribution of a disease?
• What is the natural history of a disease?

Analytic questions:
• What are the causes of a disease?
• Will this approach prevent disease?
• Does this treatment improve outcome?


Data Analysis and Computer Software

Easy-to-use software is essential for data management and data analysis. In this course R-cmdr (R Commander, a menu-driven graphical interface to the R statistical system) will be used. This package is widely available on campus, used in most Departments which specify first-year statistics as a prerequisite, and widely available internationally.

You may have used Excel at school, and possibly at University. Excel is excellent for data management and reporting, but it is poor for statistical analyses and clumsy for graphical procedures.

R-cmdr is easy to use, with good pull-down menu options. There are three windows in R-cmdr:
• Data Editor (opened with the "Edit data set" button; where the data being analysed are located)
• Output window (where results appear)
• R Script window (shows the R commands generated by the menus; not used in this course)


Introduction to study design

1. Descriptive studies
2. Analytic studies
   • Experimental studies
   • Observational studies
   • Examples of analytic study types
3. Summary
   • Classification of research designs
   • Classification of common study types

There are two types of research questions:
• Descriptive: describing things
• Analytic: testing hypotheses

The strengths and weaknesses of the different designs will be discussed.


1. Descriptive studies

Aim: to describe, for example:
• the characteristics of people with a disease (person, place, time)
• lifestyle patterns of a population
• attitudes to health care
• etc.

Descriptive studies are often called surveys or cross-sectional studies.

Descriptive studies generally use a sample from a population.


Example: What are the serum cholesterol levels of New Zealanders?

Method: select a subgroup (sample) of people and measure their serum cholesterol levels.

Random sampling
• choose the sample in such a way that every individual in the population has a known chance of being selected
• in a simple random sample, everyone has an equal chance of being chosen
• this method is the best way of obtaining a sample which is representative of the population
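As a sketch of simple random sampling, the Python snippet below draws a sample of 500 from a hypothetical sampling frame of 10,000 ID numbers using the standard-library `random.Random.sample`, which selects without replacement so that every individual has the same chance of being chosen. The frame, the sample size and the seed are illustrative assumptions, not values from the course.

```python
import random

def simple_random_sample(population, n, seed=None):
    """Draw a simple random sample of size n, without replacement.

    Every individual has the same chance (n / len(population)) of selection.
    """
    rng = random.Random(seed)  # seeded generator so the draw can be reproduced
    return rng.sample(population, n)

# Hypothetical sampling frame: ID numbers 1 to 10000 standing in for a population list.
frame = list(range(1, 10001))
sample_ids = simple_random_sample(frame, 500, seed=1)
print(len(sample_ids), len(set(sample_ids)))  # 500 500 (no one is chosen twice)
```

The cholesterol of the 500 selected people would then be measured, and their average used to estimate the population mean.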

Suppose we want to estimate mean cholesterol in the population:


Sample average = true mean (unknown) + error, and the error has two parts:

$$\bar{x} = \mu + \text{systematic error} + \text{random error}$$

where $\bar{x}$ is the sample average and $\mu$ is the unknown true mean.

Random error:

• due to natural biological variability
• increasing the sample size will reduce the random fluctuations in the sample mean

Systematic error (= bias):
• due to aspects of the design or conduct of the study which systematically distort the results
• occurs if a sample is not representative of the population
• cannot be reduced by increasing the sample size
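The difference between random and systematic error can be illustrated with a small simulation. The sketch below assumes a hypothetical population in which cholesterol is normally distributed with mean 5.0 mmol/L and standard deviation 1.0 mmol/L, and a hypothetical systematic error of +0.3 mmol/L added to every reading (for example, a miscalibrated analyser). These numbers are illustrative only. Repeating the sampling many times shows the spread of the sample means shrinking as the sample size grows, while the average error stays close to the bias.

```python
import random
import statistics

TRUE_MEAN = 5.0  # hypothetical population mean cholesterol (mmol/L)
SD = 1.0         # hypothetical between-person standard deviation (mmol/L)
BIAS = 0.3       # hypothetical systematic error added to every reading

def simulate(n, bias, reps=500, seed=0):
    """Draw `reps` samples of size n; return (average error, SD of sample means).

    average error      = mean of the sample means minus the true mean (estimates the bias)
    SD of sample means = size of the random fluctuations from sample to sample
    """
    rng = random.Random(seed)
    means = []
    for _ in range(reps):
        readings = [rng.gauss(TRUE_MEAN, SD) + bias for _ in range(n)]
        means.append(sum(readings) / n)
    return statistics.mean(means) - TRUE_MEAN, statistics.stdev(means)

for n in (10, 100, 1000):
    avg_err, spread = simulate(n, BIAS)
    print(f"n = {n:4d}: average error = {avg_err:+.3f}, spread of sample means = {spread:.3f}")
```

The spread should come out close to SD/√n (roughly 0.32, 0.10 and 0.03), whereas the average error stays near +0.3 at every sample size: a larger sample reduces random error but does nothing about bias.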


2. Analytic studies

Purpose: to test hypotheses about, for example:
• causes of disease
• methods for prevention of disease
• the effects of treatments

Experimental studies
• the researcher intervenes and records the result of their intervention
• the aim is to control all other factors to isolate the effects of the intervention
• best way to study causation

Observational studies
• the investigator does not intervene, but simply observes a naturally occurring process and collects information
• the ideal is to get as close as possible to the information that would have been obtained if the experimental study could have been done


Example: Options for studying the relationship between smoking and lung cancer

Experimental study
Randomly assign people to start smoking or to be non-smokers, follow them for 20 years, then check lung cancer rates in the two groups. Clearly unethical.

Observational studies
Cohort: follow known smokers and known non-smokers for 20 years and compare the % with lung cancer in each group.
Problem: the groups may differ in other ways that are related to cancer risk (confounding).

Case-control: compare the % who smoked 20 years ago among people with lung cancer now (cases) and among people without lung cancer now (controls).
No long-term follow-up is needed, and samples are smaller. There could be recall bias about smoking 20 years ago. Confounding is also a problem.


Examples of analytic study types

Randomised controlled trial (RCT)
• the “gold standard” (best) analytic study
• experimental

Characteristics of an RCT:
• select a group of people
• randomly allocate them to either an intervention or a control group
• follow participants up over time, and measure outcome

A control group is used to isolate the effects of the intervention.

Random allocation, or randomisation, means every person has the same chance of being in each group. This gives the best chance of getting two groups which are comparable in all respects.

RCTs are used to evaluate new treatments, but are often not ethical in studies of disease causation.
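Random allocation can be sketched in a few lines of Python. The version below is one simple scheme, assumed here for illustration: shuffle the list of participants and split it in half. Every person has the same chance of ending up in either group, and the two groups have equal size. The participant labels are hypothetical.

```python
import random

def randomise(participants, seed=None):
    """Randomly allocate participants to two equal-sized groups.

    Shuffling makes every ordering equally likely, so each person has the
    same chance of landing in the intervention half or the control half.
    """
    rng = random.Random(seed)
    shuffled = list(participants)  # copy, so the caller's list is left unchanged
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"intervention": shuffled[:half], "control": shuffled[half:]}

# Hypothetical participant IDs P001 ... P020
people = [f"P{i:03d}" for i in range(1, 21)]
groups = randomise(people, seed=42)
print(len(groups["intervention"]), len(groups["control"]))  # 10 10
```

With an odd number of participants, this sketch puts the extra person in the control group.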


Example RCT: LIPID study (NEJM, 1998)

Does treatment with pravastatin reduce the risk of death in patients with coronary heart disease?

Study participants: 9014 patients aged 31-75 with coronary heart disease and cholesterol 155-271 mg/decilitre.

The selected participants were randomly allocated to:
• control (n=4502)
• intervention: pravastatin (n=4512)
and followed for 6 years.

Mortality from coronary heart disease: 8.3% in the control group and 6.4% in the pravastatin group.
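The two mortality percentages can be compared directly. The sketch below computes the absolute difference and the ratio of the two risks from the rounded percentages above. It is a crude calculation for illustration only (relative risk is covered in Section 2), and the published trial analysis may report slightly different figures.

```python
def compare_risks(risk_control, risk_treated):
    """Return the risk difference and risk ratio (treated vs control)."""
    risk_difference = risk_control - risk_treated  # absolute reduction in risk
    risk_ratio = risk_treated / risk_control       # relative risk
    return risk_difference, risk_ratio

# Mortality percentages from the LIPID example, written as proportions
rd, rr = compare_risks(0.083, 0.064)
print(f"risk difference    = {rd:.3f}")     # 0.019, i.e. 1.9 percentage points
print(f"risk ratio         = {rr:.2f}")     # 0.77
print(f"relative reduction = {1 - rr:.0%}") # 23%
```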


Advantages of an RCT:
• experiment: the best way to test a hypothesis
• differences in outcome can be attributed to the exposure

Disadvantages of an RCT:
• may not be ethical

Cohort study

Observational study, generally carried out to test hypotheses.

Characteristics:
• participants are selected before disease has developed
• followed over time to determine development of disease
• information is collected about exposures at baseline and during follow-up
• longitudinal


Example of cohort study:

Study to investigate the relationship between smoking and lung cancer (e.g. the British Doctors Study)

A group of people without lung cancer is divided into smokers and non-smokers and followed for 20 years. The % who develop lung cancer is then compared between the two groups.
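In a cohort study the comparison runs from exposure to disease: the percentage who develop lung cancer is calculated separately for smokers and non-smokers. A minimal sketch follows, using made-up counts purely for illustration (they are not from the British Doctors Study).

```python
def percent_developing_disease(new_cases, group_size):
    """Percentage of a cohort group who develop the disease during follow-up."""
    return 100 * new_cases / group_size

# Hypothetical 20-year follow-up counts (illustrative only, not real data)
smokers = percent_developing_disease(new_cases=50, group_size=1000)
non_smokers = percent_developing_disease(new_cases=5, group_size=1000)
print(f"smokers: {smokers:.1f}%  non-smokers: {non_smokers:.1f}%")  # smokers: 5.0%  non-smokers: 0.5%
```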


Case-control study

Observational study, generally carried out to test hypotheses.

Characteristics:
• participants are chosen on the basis of their disease status: a group with disease (cases) and a group without (controls)
• information is collected from people with and without disease about exposures that occurred in the past
• longitudinal (retrospective)


Example of case-control study

Study to investigate the relationship between smoking and lung cancer

Known at start: a group of people with lung cancer (cases) and a group of people without lung cancer (controls). The smoking history of each group is documented, and the % who smoked in the past is compared between the two groups.
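In a case-control study the comparison runs the other way, from disease status back to exposure: the percentage who smoked in the past is compared between cases and controls. Because the investigator chooses how many cases and controls to recruit, these counts cannot directly give the percentage of smokers who develop lung cancer. The sketch below uses made-up counts for illustration only.

```python
def percent_exposed(exposed, group_size):
    """Percentage of a group (cases or controls) who report past exposure."""
    return 100 * exposed / group_size

# Hypothetical counts (illustrative only, not real data)
cases = percent_exposed(exposed=180, group_size=200)     # people with lung cancer
controls = percent_exposed(exposed=100, group_size=200)  # people without lung cancer
print(f"cases: {cases:.1f}% smoked  controls: {controls:.1f}% smoked")  # cases: 90.0% smoked  controls: 50.0% smoked
```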


Cohort vs case-control studies

Cohort study

Advantages:
• closest observational study to the randomised controlled trial
• good for examining common outcomes
• can evaluate the effect of exposure on multiple outcomes

Disadvantages: