

Example: Direct Mapping

° Cache size 8 Bytes = 2^3 Bytes, N = 3
° Cache block size = 1 Byte, M = 0
° I = N - M = 3; i.e., 2^3 = 8 blocks indexed 000 … 111
° Suppose a program execution refers to addresses 22, 26, 22, 18, 16, 3, 16, 26, …

| Address reference A (in decimal) | Address reference A (in binary) | Index | Tag |
|---|---|---|---|
| 22 | 10110 | 110 | 10 |
| 26 | 11010 | 010 | 11 |
| 30 | 11110 | 110 | 11 |
| 17 | 10001 | 001 | 10 |
| 16 | 10000 | 000 | 10 |
| 3 | 00011 | 011 | 00 |
| 16 | 10000 | 000 | 10 |
| 20 | 10100 | 100 | 10 |

PDE #1C_9

Cache reference

The cache contents just after the references to 22, 26, 22 were done:

| Cache Index | V | Cache Tag | Cache Data |
|---|---|---|---|
| 000 | N | | |
| 001 | N | | |
| 010 | Y | 11 | Mem(11010) |
| 011 | N | | |
| 100 | N | | |
| 101 | N | | |
| 110 | Y | 10 | Mem(10110) |
| 111 | N | | |

#1C_10
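The index/tag split and the first three references above can be replayed with a short sketch. It assumes the slide's parameters (N = 3, M = 0); the function names `split_address` and `simulate` are illustrative and not part of the lecture material.

```python
INDEX_BITS = 3  # I = N - M = 3, so 8 one-byte blocks


def split_address(addr):
    """Return (tag, index) for a direct-mapped cache with 1-byte blocks."""
    index = addr & ((1 << INDEX_BITS) - 1)  # low 3 bits select the block
    tag = addr >> INDEX_BITS                # remaining high bits form the tag
    return tag, index


def simulate(addresses):
    """Replay a reference stream; return per-reference outcomes and final tags."""
    cache = {}     # index -> tag of the byte currently stored there
    outcomes = []
    for addr in addresses:
        tag, index = split_address(addr)
        if cache.get(index) == tag:
            outcomes.append("hit")
        else:
            outcomes.append("miss")
            cache[index] = tag              # the new byte replaces the old one
    return outcomes, cache


outcomes, cache = simulate([22, 26, 22])
print(outcomes)
for index, tag in sorted(cache.items()):
    print(f"index {index:03b}: valid, tag {tag:02b}")
```

Only indices 010 and 110 end up valid, which agrees with the cache contents table for the references 22, 26, 22.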

Example: Cache with 2-Byte Blocks

° Say the cache size is 8 Bytes; 8 = 2^N, N = 3
° Block size = 2 Bytes, i.e., 2^M with M = 1
° So, I = N - M = 3 - 1 = 2
  • 2^2 = 4 cache blocks numbered 0..3 (00..11 in binary)

| Decimal address of reference | Binary address of reference | Index | Byte offset |
|---|---|---|---|
| 22 | 10110 | 11 | 0 |
| 26 | 11010 | 01 | 0 |
| 22 | 10110 | 11 | 0 |
| 18 | 10010 | 01 | 0 |
| 16 | 10000 | 00 | 0 |
| 3 | 00011 | 01 | 1 |
| 16 | 10000 | 00 | 0 |
| 26 | 11010 | 01 | 0 |

#1C_15

Cache reference

° A reference address has 3 parts:
  • Lower N bits: the last M bits are the byte offset, the rest are the index
  • The remaining bits, as before, are the tag
° A cache block contains the data for a reference address and is associated with:
  • Cache tag: the cache tag of the reference address
  • Valid bit: indicates whether the block has been initialised
° Cache contents after 22, 26, 22 (18, 16, 3, 16, 26 still to come):

| Cache Index | V | Cache Tag | Cache Data |
|---|---|---|---|
| 00 | N | | |
| 01 | Y | 11 | Memory(11011), Memory(11010) |
| 10 | N | | |
| 11 | Y | 10 | Memory(10111), Memory(10110) |

#1C_16

Cache reference

° Cache contents after 22, 26, 22, 18, 16 (3, 16, 26, … still to come):
° 22 and 26 are misses
° The second reference to 22 is a hit
° 18 in binary = 10010; it is a miss and replaces the block holding Mem(26)
° 16 in binary = 10000; it is a miss

| Cache Index | V | Cache Tag | Cache Data |
|---|---|---|---|
| 00 | Y | 10 | Memory(17), Memory(16) |
| 01 | Y | 10 | Memory(19), Memory(18) (replacing 27, 26) |
| 10 | N | | |
| 11 | Y | 10 | Memory(23), Memory(22) |

#1C_17

Cache reference

° Cache contents after 22, 26, 22, 18, 16, 3, 16 (26, … still to come):
° 3 in binary = 00011; it is a miss and replaces the block holding Memory(19)
° 16 is a hit

| Cache Index | V | Cache Tag | Cache Data |
|---|---|---|---|
| 00 | Y | 10 | Memory(17), Memory(16) |
| 01 | Y | 00 | Mem(3), Mem(2) (replacing 27, 26 and then 19, 18) |
| 10 | N | | |
| 11 | Y | 10 | Memory(23), Memory(22) |

#1C_18
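The hit/miss sequence traced so far can be checked with a small simulation of the 8-byte cache with 2-byte blocks. This is a sketch under the slide's parameters (N = 3, M = 1); the names `split_address` and `simulate` are illustrative.

```python
INDEX_BITS = 2   # I = N - M = 2, so 4 blocks
OFFSET_BITS = 1  # M = 1, so 2-byte blocks


def split_address(addr):
    """Return (tag, index, byte_offset) for the 8-byte cache with 2-byte blocks."""
    offset = addr & ((1 << OFFSET_BITS) - 1)
    index = (addr >> OFFSET_BITS) & ((1 << INDEX_BITS) - 1)
    tag = addr >> (OFFSET_BITS + INDEX_BITS)
    return tag, index, offset


def simulate(addresses):
    """Replay references; return (address, outcome) pairs and the final tags."""
    cache = {}     # index -> tag of the block currently resident
    outcomes = []
    for addr in addresses:
        tag, index, _ = split_address(addr)
        hit = cache.get(index) == tag
        outcomes.append((addr, "hit" if hit else "miss"))
        if not hit:
            cache[index] = tag   # fetch the whole 2-byte block, evicting the old one
    return outcomes, cache


outcomes, cache = simulate([22, 26, 22, 18, 16, 3, 16])
for addr, outcome in outcomes:
    print(f"{addr:2d} ({addr:05b}): {outcome}")
```

The final dictionary holds tag 10 at index 00, tag 00 at index 01 and tag 10 at index 11, with index 10 never filled, matching the table after the reference to 3.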

Cache reference

° Approximate cache contents after 22, 26, 22, 18, 16, 3, 16, 26 (…):
° 26 is a miss
  • 26 in binary = 11010, so it replaces the block holding Mem(2)

| Cache Index | V | Cache Tag | Cache Data |
|---|---|---|---|
| 00 | Y | 10 | Memory(17), Memory(16) |
| 01 | Y | 11 | Memory(27), Memory(26) (after 27, 26; 19, 18; 3, 2) |
| 10 | N | | |
| 11 | Y | 10 | Memory(23), Memory(22) |

#1C_19

Block Size Trade-off

° In general, a larger block size takes advantage of spatial locality BUT:
  • Larger block size means larger miss penalty:
    - It takes longer to fill up the block
  • If the block size is too big relative to the cache size, the miss rate will go up
    - Too few cache blocks
° In general, Average Access Time:
  • = Hit Time × (1 - Miss Rate) + Miss Penalty × Miss Rate

[Figure: three plots against Block Size. Miss Penalty rises with block size. Miss Rate first falls (exploits spatial locality), then rises (fewer blocks: compromises temporal locality). Average Access Time falls, then rises (increased miss penalty and miss rate).]

#1C_20

Fully Associative

° Fully Associative Cache
  • Store the data in any block (instead of using a specific, direct-mapped block) and
  • Do not bother with the cache index at all
  • An address reference, if in the cache, can be in any block
  • So an address reference needs to be compared with the tag of every cache block
  • When the block size is 1 byte, there are as many tags as there are blocks in the cache

#1C_21

Fully Associative Cache: example

° Fully Associative Cache
  • Store the data in any block (instead of using a specific, direct-mapped block) and do not bother with the cache index
  • Example: Cache Size = 32 Bytes, block size = 1; we need 32 comparators operating in parallel, each 32 bits wide
  • Example: Cache Size = 512 Bytes, block size = 1; we need 512 comparators operating in parallel, each 32 bits wide

[Figure: the 32-bit address (bits 31 to 0) is the Cache Tag and is compared against every entry; each entry holds Cache Tag, Valid Bit and Cache Data.]

#1C_22
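A fully associative lookup can be sketched as a search over every block. The sketch assumes 1-byte blocks, so the whole address acts as the tag; the `Block`, `lookup` and `fill` names are illustrative, and replacement on a full cache is deliberately left out.

```python
from dataclasses import dataclass


@dataclass
class Block:
    valid: bool = False
    tag: int = 0
    data: int = 0


def lookup(blocks, addr):
    """Compare addr with the tag of every valid block.

    Hardware performs these comparisons in parallel, one comparator per
    block; this loop performs them one after another.
    """
    for block in blocks:
        if block.valid and block.tag == addr:
            return block.data   # hit
    return None                 # miss


def fill(blocks, addr, data):
    """Store data in the first invalid block (any block is allowed)."""
    for block in blocks:
        if not block.valid:
            block.valid, block.tag, block.data = True, addr, data
            return True
    return False                # cache full; choosing a victim is not modelled


cache = [Block() for _ in range(32)]   # 32-byte cache, 1-byte blocks
fill(cache, 22, 0xAB)
print(lookup(cache, 22), lookup(cache, 26))
```

The 32 iterations of the loop correspond to the 32 parallel comparators in the first example on the slide.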

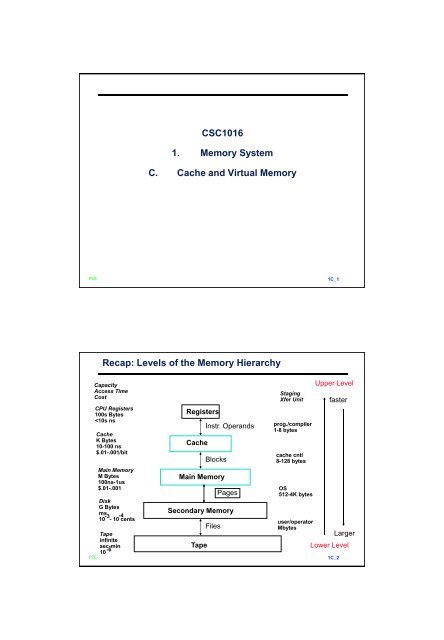

Q4: What happens on a write?

° Modifications of cache data must be passed on to main memory
Two approaches:
° Write through: the information is written both to the block in the cache and to the block in the lower-level memory.
° Write back: the information is written only to the block in the cache. The modified cache block is written to main memory only when it is replaced.
  • Is the block clean or dirty? Use a dirty bit per block: it is set if the cache block is modified.
° Pros and cons of each?
  • WT: replacement can be quicker, as writes are done in real time on main memory as well
  • WB: repeated writes to the same block do not each go to main memory; thanks to the dirty bit, the block is written once, on replacement

#1C_25

Recall: Levels of the Memory Hierarchy

[Figure: levels of the memory hierarchy, annotated with capacity, access time and cost; top level: CPU registers, 100s of bytes.]
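The difference between the write-through and write-back policies described above can be made concrete by counting how many writes reach main memory. This is a toy model of a single cache block; the class and function names are illustrative.

```python
class CacheBlock:
    def __init__(self, policy):
        self.policy = policy        # "write-through" or "write-back"
        self.dirty = False
        self.memory_writes = 0      # writes that reached main memory

    def write(self):
        if self.policy == "write-through":
            self.memory_writes += 1  # every write also goes to memory
        else:
            self.dirty = True        # only mark the block as modified

    def replace(self):
        if self.policy == "write-back" and self.dirty:
            self.memory_writes += 1  # flush the modified block once
        self.dirty = False


def count_memory_writes(policy, n_writes):
    """Write the block n_writes times, then replace it."""
    block = CacheBlock(policy)
    for _ in range(n_writes):
        block.write()
    block.replace()
    return block.memory_writes


for policy in ("write-through", "write-back"):
    print(policy, count_memory_writes(policy, 5))
```

Five writes to the same block cost five memory writes under write-through and one under write-back, while a clean block costs nothing to replace under either policy.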

Virtual Memory: Introduction

° Main memory (DRAM) acts as a 'cache' for the secondary store (disk)
  • The technique for doing this is called virtual memory
° Two main reasons
  • An illusion of a (mostly) fast main memory that is as large as the disk itself (as done in cache management)
  • To support efficient and safe sharing of main memory across multiple programs
    - Note the granularity change: a cache assumes a single program accessing data, while virtual memory considers many programs sharing main memory
  • The total memory required by all programs may be much larger than the actual size of the main memory itself
  • But each program actively uses only a fraction of its memory requirement at any given time
    - Just as a cache holds the active portion of a given program

#1C_27

Virtual Memory: Requirements & Approach

° The number of programs executing at the same time cannot be known a priori
  • It varies dynamically, and hence portions of main memory cannot simply be pre-allocated
° While sharing is dynamic, programs should also be protected from each other
  • If two programs share data (a shared variable), then that must be supported as well
° When a program is compiled, it is given its own virtual address space: a separate range of memory locations accessible only to this program
° Virtual memory translates this address space into physical addresses
  • This translation provides the necessary protection and the permitted sharing

#1C_28

Address Translation

$V = \{0, 1, \ldots, n-1\}$: virtual address space
$M = \{0, 1, \ldots, m-1\}$: physical address space, with $n \gg m$
$\mathrm{MAP}: V \to M \cup \{\emptyset\}$: address mapping function

$$\mathrm{MAP}(a) = \begin{cases} a' & \text{if data at virtual address } a \text{ is present at physical address } a' \in M \\ \emptyset & \text{if data at virtual address } a \text{ is not present in } M \end{cases}$$

[Figure: the processor issues address a in name space V to the address translation mechanism, which either produces physical address a' for main memory or raises a missing item fault; the fault handler brings the item from secondary memory into main memory. The operating system performs this transfer.]

#1C_31

Paging Organization

° Physical memory (8KB): frames 0 to 7 of 1K each, at physical addresses 0, 1024, …, 7168
° Virtual memory (32KB): pages 0 to 31 of 1K each, at virtual addresses 0, 1024, …, 31744
° The page is the unit of mapping and also the unit of transfer from virtual to physical memory

Address Translation (assuming a hit)

° The virtual address (VA) consists of a page number and an offset
° The page table register, together with the page number, gives the index into the page table
° Each page table entry holds V, Access Rights and PA; the table is located in physical memory
° The physical memory address is PA + offset (actually, concatenation)

#1C_32
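The mapping function and the 1K paging organization above can be sketched together. The page table contents here are made up for illustration, and `None` stands for the "not present" outcome of MAP (the missing item fault).

```python
PAGE_SIZE = 1024   # 1K pages, as in the paging organization
OFFSET_BITS = 10   # 2**10 == 1024


def translate(va, page_table):
    """MAP(va): return the physical address, or None on a missing item fault.

    page_table maps virtual page number -> physical frame number for the
    pages currently present in main memory.
    """
    page_no = va >> OFFSET_BITS
    offset = va & (PAGE_SIZE - 1)
    frame = page_table.get(page_no)
    if frame is None:
        return None                           # data not present in M
    return (frame << OFFSET_BITS) | offset    # concatenation, not addition


# Hypothetical mapping: virtual page 0 -> frame 7, virtual page 31 -> frame 0.
page_table = {0: 7, 31: 0}

print(translate(5, page_table))            # offset 5 within frame 7
print(translate(31744 + 100, page_table))  # offset 100 within frame 0
print(translate(1024, page_table))         # page 1 is not resident
```

Because the offset is always smaller than the page size, shifting the frame number and OR-ing in the offset gives the same result as adding them, which is why the slide notes that the "+" is really a concatenation.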

Page Table and Process state

° Each program, on compilation, is given its own page table
° The page table itself resides in main memory
° The address of the start of the page table is held in the page table register
° The page table register, together with the other registers, specifies the state of that program, referred to as the process state
  • If a program needs to relinquish the CPU for another program (pre-emption), the process state needs to be stored first by the Operating System
  • Restoring the program execution involves the Operating System reloading the state
° Program Counter (PC):
  • One of the other registers
  • Holds the address of the next instruction to be executed

#1C_33

Page Table (continued)

° Indexed by virtual page number
  • That is, the page table has an entry for each virtual page.
  • How many page table entries will the virtual memory in the figure on #1C_32 require?
° So, no index is needed, as in a fully associative (FA) cache
° But … there is a subtle difference with an FA cache
  • For a given memory address, we extract the tag and search the entire cache (see #1C_22, 23)
  • The outcome is that an entry for the reference address is either found (hit) or not found
° With a page table, an entry for a virtual page is always found (see above); this does not mean it is a hit
° Hit or miss (page fault) is decided by the V bit in the entry
° V bit = 1 means that the entry has the physical address where the desired virtual page resides (hit)
° V bit = 0 means the entry points to the disk address from which the desired page can be fetched (page fault)

#1C_34

Page fault

° If the V bit for a virtual page is 0, it is a page fault: the entry contains the secondary memory address (disk address) where the page can be found
  • V bit = 0 implies a page fault

[Figure: page table indexed by virtual page number (VP No.) with V bits 1, 0, 1, 1, 0, 1; entries with V = 1 point into main memory, entries with V = 0 point to disk.]

#1C_35

Page replacement

° If a page fault occurs and all pages in memory are in use, the OS chooses a page to replace
  • By the LRU scheme (seen earlier)
  • Suppose pages have been referred to in the order: 10, 12, 9, 7, 11, 10
  • If a reference to page 8 results in a page fault needing replacement
    - The LRU page is page 12, which is replaced by page 8
  • If another reference also results in a page fault, then the LRU page is ….
° It is difficult to implement the LRU scheme strictly
  • It is impractical to record every time a page is referred to
  • So, use a reference bit in the page table for each virtual page
  • A reference bit is set to 1 whenever that page is referred to
  • The OS periodically records which pages have been referred to in the past period, and also resets the ref bits to 0
  • Qn: will the ref bit of a page with V-bit = 0 ever be set to 1?

#1C_36
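The choice of page 12 in the LRU example above can be reproduced with a few lines. The sketch assumes every page in the reference list is resident in main memory when the fault occurs; `lru_victim` is an illustrative name.

```python
def lru_victim(reference_order):
    """Return the least recently used page, given references in time order."""
    last_use = {}
    for time, page in enumerate(reference_order):
        last_use[page] = time   # a later reference overwrites the earlier one
    return min(last_use, key=last_use.get)


references = [10, 12, 9, 7, 11, 10]
print(lru_victim(references))
```

Page 10 was referenced first, but its second reference makes it the most recently used page, so page 12 has the oldest last use. Recording `last_use` on every reference is exactly the bookkeeping the slide calls impractical, which motivates the reference-bit approximation.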

Writes

° A write-through scheme requires write buffers, which are useful only when a write is fast
° Writing a page to disk requires very many clock cycles
° So, only the write-back scheme is used
° A dirty bit is kept (in the page table) for each page
  • It is set to 1 when the page is written
° If the OS chooses to replace the page, the dirty bit indicates whether or not the page needs to be written to disk before being replaced

#1C_37

Virtual Address and a Cache

[Figure: the CPU issues a VA; translation produces a PA; the PA accesses the cache; a hit returns data, a miss goes to main memory.]

It takes an extra memory access to translate a VA to a PA. This makes cache access very expensive, and this is the "innermost loop" that you want to go as fast as possible.

ASIDE: Why access the cache with a PA at all? VA caches have a problem!
  • Synonym / alias problem: two different virtual addresses map to the same physical address => two different cache entries hold data for the same physical address!
  • For an update: all cache entries with the same physical address must be updated, or memory becomes inconsistent
  • Determining this requires significant hardware, essentially an associative lookup on the physical address tags to see if there are multiple hits

#1C_38

TLBs

A way to speed up translation is to use a special cache of recently used page table entries. This has many names, but the most frequently used is Translation Lookaside Buffer, or TLB.

| Virtual Address | Physical Address | Dirty | Ref | Valid | Access |
|---|---|---|---|---|---|

TLB access time is comparable to cache access time (much less than main memory access time).

#1C_39

Translation Look-Aside Buffers

Just like any other cache, the TLB can be organized as fully associative, set associative, or direct mapped.

TLBs are usually small, typically not more than 128 - 256 entries even on high-end machines. This permits fully associative lookup on these machines. Most mid-range machines use small n-way set associative organizations.

[Figure: translation with a TLB. The CPU issues a VA to the TLB lookup; on a TLB hit the PA goes to the cache, on a TLB miss the translation is performed first; a cache hit returns data, a cache miss goes to main memory. Approximate access times: TLB lookup 1/2 t, cache t, main memory 20t.]

#1C_40
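A TLB can be sketched as a small cache placed in front of the page table. The sketch below is fully associative with LRU eviction; the eviction policy, the class name `TLB` and the page table contents are assumptions for illustration, and page faults are not modelled (every page looked up is assumed to be resident).

```python
from collections import OrderedDict


class TLB:
    """Small fully associative cache of page table entries (LRU eviction assumed)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = OrderedDict()   # virtual page no. -> physical frame no.
        self.hits = 0
        self.misses = 0

    def lookup(self, page_no, page_table):
        if page_no in self.entries:
            self.hits += 1
            self.entries.move_to_end(page_no)   # mark as most recently used
            return self.entries[page_no]
        self.misses += 1
        frame = page_table[page_no]             # the extra memory access
        if len(self.entries) >= self.capacity:
            self.entries.popitem(last=False)    # evict the least recently used entry
        self.entries[page_no] = frame
        return frame


page_table = {0: 3, 1: 1, 2: 0}   # hypothetical resident pages
tlb = TLB(capacity=2)
for page_no in [0, 1, 0, 2, 0]:
    tlb.lookup(page_no, page_table)
print(tlb.hits, tlb.misses)
```

Only the misses pay for a page table access in main memory; the hits are served at roughly cache speed, which is the point of the access-time comparison on the slide.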

Virtual Memory Example

° A virtual memory system has a page size of 1024 bytes, eight virtual pages, and four physical pages. Based on the contents of the Page Table shown in #1C-42, answer each of the following three questions.
  • What is the main memory address for virtual address 4096?
  • What is the main memory address for virtual address 1024?
  • A fault occurs on page 0. Will any of the pages currently in main memory need to be replaced to handle this page fault? Explain your answer.
° Hints:
  • The virtual page i, 0 ≤ i ≤ 7, contains memory locations with addresses ranging from i × 1024 to (i+1) × 1024 - 1 (inclusive).
  • 2^10 = 1024.

#1C_41

The Page Table

| Virtual Page No. | V-Bit | Other Bits + Access Rights | Address |
|---|---|---|---|
| 000 | 0 | … | 01001011100 |
| 001 | 0 | … | 11101110010 |
| 010 | 1 | … | 00 |
| 011 | 0 | … | 00001001111 |
| 100 | 1 | … | 01 |
| 101 | 0 | … | 10100111001 |
| 110 | 1 | … | 10 |
| 111 | 0 | … | 01010001011 |

#1C_42
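One way to work through the three questions is to encode the page table and apply the translation rule. The sketch assumes a particular reading of the table: for entries with V = 1 the address field is a 2-bit physical page number, and for entries with V = 0 it is a disk address. The names `PAGE_TABLE` and `translate` are illustrative.

```python
PAGE_SIZE = 1024

# virtual page number -> (V bit, address field), as read from the table on #1C-42
PAGE_TABLE = {
    0b000: (0, 0b01001011100),
    0b001: (0, 0b11101110010),
    0b010: (1, 0b00),
    0b011: (0, 0b00001001111),
    0b100: (1, 0b01),
    0b101: (0, 0b10100111001),
    0b110: (1, 0b10),
    0b111: (0, 0b01010001011),
}


def translate(va):
    """Return the main memory address for va, or None on a page fault."""
    page_no, offset = divmod(va, PAGE_SIZE)
    valid, field = PAGE_TABLE[page_no]
    if not valid:
        return None                     # V = 0: the page is on disk
    return field * PAGE_SIZE + offset   # physical page number, then offset


frames_in_use = {field for valid, field in PAGE_TABLE.values() if valid}
free_frames = set(range(4)) - frames_in_use   # four physical pages in total

print(translate(4096))   # virtual page 4
print(translate(1024))   # virtual page 1
print(free_frames)
```

Under this reading, virtual address 4096 lies in virtual page 4, which is resident; virtual address 1024 lies in virtual page 1, whose V bit is 0; and only three of the four physical pages are occupied, so `free_frames` shows whether a fault on page 0 forces a replacement.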

Summary #1 / 4:

° The Principle of Locality:
  • A program is likely to access a relatively small portion of the address space at any instant of time.
    - Temporal Locality: locality in time
    - Spatial Locality: locality in space
° Cache Design Space
  • Total size, block size, associativity
  • Replacement policy
  • Write policy (write-through, write-back)

#1C_43

Summary #2 / 4: The Cache Design Space

° Several interacting dimensions
  • cache size
  • block size
  • associativity
  • write-through vs write-back
° The optimal choice is a compromise
  • depends on access characteristics
  • depends on technology / cost
° Simplicity often wins

[Figure: qualitative trade-off curves from Bad to Good as cache size, associativity and block size go from Less to More, shaped by two competing factors, Factor A and Factor B.]

#1C_44

Summary #3 / 4: TLB, Virtual Memory

° Caches, TLBs and virtual memory can all be understood by examining how they deal with 4 questions:
  1) Where can a block be placed?
  2) How is a block found?
  3) What block is replaced on a miss?
  4) How are writes handled?
° Page tables map virtual addresses to physical addresses
° TLBs are important for fast translation
° TLB misses are significant in processor performance

#1C_45

Summary #4 / 4: Memory Hierarchy

° Virtual memory was controversial at the time: can SW automatically manage 64KB across many programs?
  • 1000X DRAM growth removed the controversy
° Today VM allows many processes to share a single memory without having to swap all processes to disk; VM protection is more important than the memory hierarchy
° Today CPU time is a function of (operations, cache misses) vs. just f(operations):
  • What does this mean for compilers, data structures, algorithms?

#1C_46
