The Impact of Data Center and Server Relocation ... - Network World



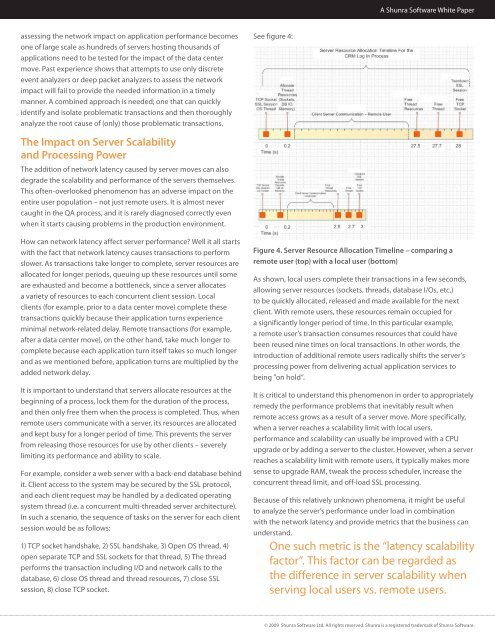

A Shunra Software White Paper

assessing the network impact on application performance becomes one of large scale, as hundreds of servers hosting thousands of applications need to be tested for the impact of the data center move. Past experience shows that attempts to use only discrete event analyzers or deep packet analyzers to assess the network impact fail to provide the needed information in a timely manner. A combined approach is needed: one that can quickly identify and isolate problematic transactions and then thoroughly analyze the root cause of (only) those problematic transactions. See Figure 4.

The Impact on Server Scalability and Processing Power

The addition of network latency caused by server moves can also degrade the scalability and performance of the servers themselves. This often-overlooked phenomenon has an adverse impact on the entire user population, not just remote users. It is almost never caught in the QA process, and it is rarely diagnosed correctly even when it starts causing problems in the production environment.

How can network latency affect server performance? It starts with the fact that network latency makes transactions slower. A server allocates a variety of resources to each concurrent client session, so as transactions take longer to complete, those resources stay allocated for longer periods. Demand for them queues up until some resource is exhausted and becomes a bottleneck. Local clients (for example, prior to a data center move) complete these transactions quickly because their application turns experience minimal network-related delay.
Remote transactions (for example, after a data center move), on the other hand, take much longer to complete because each application turn takes much longer and, as mentioned before, the added network delay is multiplied by the number of application turns.

It is important to understand that servers allocate resources at the beginning of a process, lock them for the duration of the process, and free them only when the process is completed. Thus, when remote users communicate with a server, its resources are allocated and kept busy for a longer period of time. This prevents the server from releasing those resources for use by other clients, severely limiting its performance and ability to scale.

For example, consider a web server with a back-end database behind it. Client access to the system may be secured by the SSL protocol, and each client request may be handled by a dedicated operating system thread (i.e., a concurrent multi-threaded server architecture). In such a scenario, the sequence of tasks on the server for each client session would be as follows:

1) TCP socket handshake
2) SSL handshake
3) Open OS thread
4) Open separate TCP and SSL sockets for that thread
5) The thread performs the transaction, including I/O and network calls to the database
6) Close OS thread and thread resources
7) Close SSL session
8) Close TCP socket

Figure 4. Server Resource Allocation Timeline: comparing a remote user (top) with a local user (bottom)

As shown, local users complete their transactions in a few seconds, allowing server resources (sockets, threads, database I/Os, etc.) to be quickly allocated, released, and made available for the next client.
With remote users, these resources remain occupied for a significantly longer period of time. In this particular example, a remote user's transaction consumes resources that could have been reused nine times on local transactions. In other words, the introduction of additional remote users radically shifts the server's processing power from delivering actual application services to being "on hold".

It is critical to understand this phenomenon in order to appropriately remedy the performance problems that inevitably result when remote access grows as a result of a server move. More specifically, when a server reaches a scalability limit with local users, performance and scalability can usually be improved with a CPU upgrade or by adding a server to the cluster. However, when a server reaches a scalability limit with remote users, it typically makes more sense to upgrade RAM, tweak the process scheduler, increase the concurrent thread limit, and off-load SSL processing.

Because this phenomenon is relatively unknown, it can be useful to analyze the server's performance under load in combination with the network latency and to provide metrics that the business can understand.

One such metric is the "latency scalability factor". This factor can be regarded as the difference in server scalability when serving local users vs. remote users.

© 2009 Shunra Software Ltd. All rights reserved. Shunra is a registered trademark of Shunra Software.
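
The relationship between latency, resource hold time, and the latency scalability factor can be illustrated with a rough back-of-the-envelope model. The sketch below is not taken from the white paper: it assumes that a session holds its thread and sockets for the server processing time plus one network round trip per application turn, that the server is limited only by a fixed number of concurrent sessions, and that the factor is expressed as a ratio of local to remote capacity. All names (`Workload`, `hold_time_s`, `max_throughput`, `latency_scalability_factor`) and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical description of one client transaction."""
    app_turns: int        # request/response round trips per transaction
    server_time_s: float  # pure server processing time per transaction


def hold_time_s(w: Workload, rtt_s: float) -> float:
    """Time one session keeps its thread and sockets allocated.

    Assumption: every application turn costs one full round trip.
    """
    return w.server_time_s + w.app_turns * rtt_s


def max_throughput(w: Workload, rtt_s: float, max_sessions: int) -> float:
    """Upper bound on transactions per second for a session-limited server.

    By Little's law, concurrency = throughput * hold time, so with at most
    max_sessions concurrent sessions throughput is max_sessions / hold time.
    """
    return max_sessions / hold_time_s(w, rtt_s)


def latency_scalability_factor(w: Workload, local_rtt_s: float,
                               remote_rtt_s: float) -> float:
    """Ratio of local to remote capacity (assumed definition).

    The session limit cancels out, leaving the ratio of hold times.
    """
    return hold_time_s(w, remote_rtt_s) / hold_time_s(w, local_rtt_s)


if __name__ == "__main__":
    # Illustrative numbers only: 100 turns, 1 s of server work,
    # 1 ms round trip locally and 90 ms after the move.
    w = Workload(app_turns=100, server_time_s=1.0)
    print(f"local  tx/s: {max_throughput(w, 0.001, 200):.1f}")
    print(f"remote tx/s: {max_throughput(w, 0.090, 200):.1f}")
    print(f"factor:      {latency_scalability_factor(w, 0.001, 0.090):.2f}")
```

With these made-up inputs, a remote session occupies its resources roughly nine times longer than a local one. Under this model, extra CPU does not shorten the hold time, whereas a higher session limit raises the throughput cap, which is consistent with the remedies suggested above for remote-user bottlenecks.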