Digital Technical Journal, Volume 5, Number 2 ... - 1000 BiT



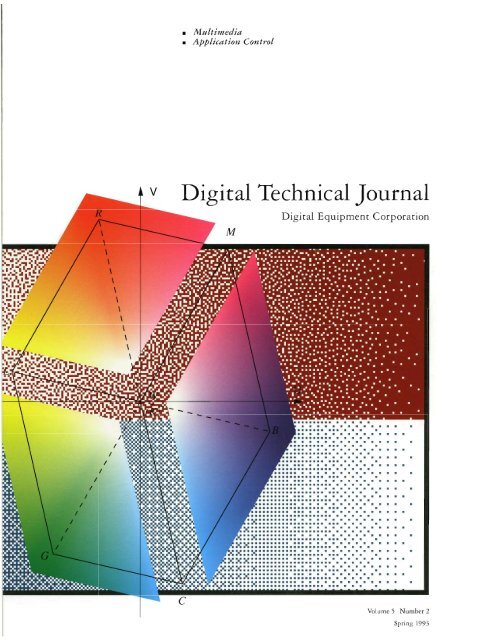

Multimedia, Application Control. Volume 5 Number 2

Editorial
Jane C. Blake, Managing Editor
Kathleen M. Stetson, Editor
Helen L. Patterson, Editor

Circulation
Catherine M. Phillips, Administrator
Dorothea B. Cassady, Secretary

Production
Terri Autieri, Production Editor
Anne S. Katzeff, Typographer
Peter R. Woodbury, Illustrator

Advisory Board
Samuel H. Fuller, Chairman
Richard W. Beane
Donald Z. Harbert
Richard J. Hollingsworth
Alan G. Nemeth
Jeffrey H. Rudy
Stan Smits
Michael C. Thurk
Gayn B. Winters

Cover Design
Dithering and color space conversion are two of the concepts discussed in "Video Rendering," which opens this issue's set of papers on multimedia technologies. On the cover, the band of blue across the bottom of the cover graphic shows the rectangular patterning created by an ordered dither process using a popular recursive tessellation array. The band of burgundy across the top shows the superior patterning of the same ordered dither process with a newly designed void-and-cluster array, which produces a higher quality image for display by eliminating the rectangular patterns and the textures of white noise. The line illustration overlaying these two arrays presents two color spaces, one within the other: RGB and YUV (luminance-chrominance space used by television systems; Y axis not shown). In the color conversion process, data transmitted in YUV space is converted to RGB space. The cover design shows three faces of the RGB space "lifted off" and infused with the colors noted at each corner of the parallelepiped.

The cover concept and illustrations are derived from the paper "Video Rendering" by Bob Ulichney. The design was implemented by Linda Falvella of Quantic Communications, Inc.

The Digital Technical Journal is a refereed journal published quarterly by Digital Equipment Corporation, 30 Porter Road LJ02/D10, Littleton, Massachusetts 01460. Subscriptions to the Journal are $40.00 (non-U.S. $60) for four issues and $75.00 (non-U.S. $115) for eight issues and must be prepaid in U.S. funds. University and college professors and Ph.D. students in the electrical engineering and computer science fields receive complimentary subscriptions upon request. Orders, inquiries, and address changes should be sent to the Digital Technical Journal at the published-by address. Inquiries can also be sent electronically to DTJ@CRL.DEC.COM. Single copies and back issues are available for $16.00 each from Digital Press of Digital Equipment Corporation, 129 Parker Street, Maynard, MA 01754. Recent back issues of the Journal are also available on the Internet at gatekeeper.dec.com in the directory /pub/DEC/DECinfo/DTJ.

Digital employees may send subscription orders on the ENET to RDVAX::JOURNAL. Orders should include badge number, site location code, and address.

Comments on the content of any paper are welcomed and may be sent to the managing editor at the published-by or network address.

Copyright © 1993 Digital Equipment Corporation. Copying without fee is permitted provided that such copies are made for use in educational institutions by faculty members and are not distributed for commercial advantage. Abstracting with credit of Digital Equipment Corporation's authorship is permitted. All rights reserved.

The information in the Journal is subject to change without notice and should not be construed as a commitment by Digital Equipment Corporation. Digital Equipment Corporation assumes no responsibility for any errors that may appear in the Journal.

ISSN 0898-901X
Documentation Number EY-P963E-DP

The following are trademarks of Digital Equipment Corporation: Alpha AXP, AXP, CDA, CDD/Repository, COHESION, CX, DDIF, DEC, DEC 3000 AXP, DEC @aGlance, DEC OSF/1 AXP, DECaudio, DECchip 21064, DECimage, DECnet, DECNIS, DECpc, DECspin, DECstation, DECvideo, DECwindows, Digital, the Digital logo, GIGAswitch, HSC50, Megadoc, OpenVMS, OpenVMS AXP, Q-bus, RA, RV20, SQL Multimedia, TURBOchannel, ULTRIX, UNIBUS, VAX, VAXstation, and VMS.

Apple, Macintosh, and QuickDraw are registered trademarks and QuickTime is a trademark of Apple Computer, Inc.
Display PostScript is a registered trademark of Adobe Systems Inc.
DVI and INDEO are registered trademarks and Intel is a trademark of Intel Corporation.
HP is a registered trademark of Hewlett-Packard Company.
IBM is a registered trademark of International Business Machines Corporation.
Kodak is a registered trademark of Eastman Kodak Company.
Lotus and 1-2-3 are registered trademarks of Lotus Development Corporation.
Microsoft, MS-DOS, and Excel are registered trademarks and Video for Windows, Windows, and Windows NT are trademarks of Microsoft Corporation.
MIPS is a trademark of MIPS Computer Systems.
Motif, OSF, and OSF/1 are registered trademarks and Open Software Foundation is a trademark of Open Software Foundation, Inc.
Perceptics is a registered trademark and LaserStar is a trademark of Perceptics Corporation.
SCO is a trademark of Santa Cruz Operations, Inc.
Solaris, Sun, and SunOS are registered trademarks and SPARCstation is a trademark of Sun Microsystems, Inc.
SPARC is a registered trademark of SPARC International, Inc.
System V is a trademark of American Telephone and Telegraph Company.
UNIX is a registered trademark of UNIX System Laboratories, Inc.
Xvideo is a trademark of Parallax Graphics, Inc.
X Window System is a trademark of the Massachusetts Institute of Technology.

Book production was done by Quantic Communications, Inc.

Contents

Foreword (John A. Morse)

Multimedia
Video Rendering (Robert Ulichney)
Software Motion Pictures (Burkhard K. Neidecker-Lutz and Robert Ulichney)
Digital Audio Compression (Davis Yen Pan)
The Megadoc Image Document Management System (Jan B. te Kiefte, Robert Hasenaar, Joop W. Mevius, and Theo H. van Hunnik)
The Design of Multimedia Object Support in DEC Rdb (Mark F. Riley, James J. Feenan, Jr., John L. Janosik, Jr., and T. K. Rengarajan)
DECspin: A Networked Desktop Videoconferencing Application (Lawrence G. Palmer and Ricky S. Palmer)
LAN Addressing for Digital Video Data (Peter C. Hayden)

Application Control
CASE Integration Using ACA Services (Paul B. Patrick, Sr.)
DEC @aGlance-Integration of Desktop Tools and Manufacturing Process Information Systems (David Ascher)

Editor's Introduction
Jane C. Blake, Managing Editor

This issue of the Digital Technical Journal features papers on multimedia technologies and applications, and on uses of the Application Control Architecture (ACA), Digital's implementation of the Object Management Group's CORBA specification.

The high quality of today's television, film, and sound recordings has set expectations for computer-based multimedia; we expect high-quality images, fast response times, good quality audio, availability (including network transmission), and all at "reasonable" cost. Bob Ulichney has written about video image-rendering methods that are in fact fast, simple, and inexpensive to implement. He reviews a color rendering system and compares techniques that address the problem of insufficient colors for displaying video images. Dithering is one of these techniques, and he describes a new algorithm which provides good quality color and high-speed image rendering.

The dithering algorithm is utilized in Software Motion Pictures. SMP is a method for generating digital video on desktop systems without the need for expensive decompression hardware. Burkhard Neidecker-Lutz and Bob Ulichney discuss issues encountered in designing portable video compression software to display digital video on a range of display types. SMP has been ported to Alpha AXP, Sun, IBM, Hewlett-Packard, and Microsoft platforms.

Digitized data, whether video or audio, must be compressed for efficient storage and transmission. Davis Pan surveys audio compression techniques, beginning with analog-to-digital conversion and data compression. He then discusses the Motion Picture Experts audio algorithm and the interesting problem of developing a real-time software implementation of this algorithm.

Even compressed, digitized data takes up tremendous amounts of storage space. A relational database can not only store this data but provide fast retrieval. Mark Riley, Jay Feenan, John Janosik, and T.K. Rengarajan describe DEC Rdb enhancements that support multimedia objects, i.e., text, still frame images, compound documents, and binary large objects.

Managing image documents is the subject of a paper by Jan te Kiefte, Bob Hasenaar, Joop Mevius, and Theo van Hunnik. Megadoc is a hardware and software framework for building customized image management applications quickly and at low cost. They describe the UNIX file system interface to WORM drives, a storage manager, and an image application framework.

Distributing multimedia over a network presents both engineering challenges and opportunities for applications. DE

Biographies

David Ascher
Dave Ascher joined Digital's Industrial Products Software Engineering Group in 1977 to work on the DECDataway industrial multidrop network. Since then, he has worked on distributed manufacturing systems as a developer, group leader, and technical consultant, and as an architect of the DEC @aGlance product. As a principal software engineer, Dave leads an effort to develop DEC @aGlance service offerings. He holds a B.S. in psychology from City College of New York and a Ph.D. in psychology from McMaster University, Hamilton, Ontario.

James J. Feenan, Jr.
Principal engineer Jay Feenan has been implementing application code on database systems since 1978. Presently a technical leader for the design and implementation of stored procedures in DEC Rdb version 6.0, he has contributed to various Rdb and DBMS projects. Prior to joining Digital in 1984, he implemented Manufacturing Resource Planning systems and received American Production and Inventory Control Society certification. Jay holds a B.S. from Worcester Polytechnic Institute and an M.B.A. from Anna Maria College. He is a member of the U.S. National Rowing Team.

Robert Hasenaar
Bob Hasenaar is an engineering manager for the Megadoc optical file system team, part of Digital's Workgroup Systems Software Engineering Group in Apeldoorn, Holland. He has seven years' software engineering experience in operating systems and image document management systems. Bob was responsible for the implementation of the first Megadoc optical disk file system in a UNIX context. He has an M.Sc. degree in theoretical physics from the University of Utrecht, Holland.

Peter C. Hayden
Peter Hayden is an engineer in the Windows NT Systems Group. He joined Digital in 1986 as a member of the FDDI team and led several efforts contributing to the development of the FDDI technology and product set. He then led the Personal Computing Systems Group's multimedia projects before joining the Windows NT Systems Group in 1992. Before coming to Digital, Peter worked on PBX development at AT&T Bell Laboratories. He holds a B.S. in electrical engineering and an M.S. in computer science from Union College in Schenectady, NY, and has several patent applications pending.

John L. Janosik, Jr.
A principal software engineer, John Janosik was the project leader for DEC Rdb version 5.0, the Alpha AXP port version. John has been a member of the Database Systems Group since joining Digital in 1988. Prior to this, he was a senior software engineer for Wang Laboratories Inc. and worked on PACE, Wang's relational database engine and application development environment. John received a B.S. in computer science from Worcester Polytechnic Institute in 1983.

Joop W. Mevius
A systems architect for the Megadoc system, Joop Mevius has over 25 years' experience in software engineering. He has made contributions in the areas of database management systems, operating systems, and image document management systems. Joop has held both engineering management positions and technical consultancy positions. He has an M.Sc. degree in mathematics from the Technical University of Delft, Holland.

Burkhard K. Neidecker-Lutz
Burkhard Neidecker-Lutz is a principal engineer in the Distributed Multimedia Group of Digital's Campus-based Engineering Center in Karlsruhe. He currently works on distributed multimedia services for broadband networks. Burkhard contributed to the XMedia layered product. Prior to that work he led the design of the NESTOR distributed learning system. He joined Digital in 1988 after working for PCS computer systems. Burkhard earned an M.S. in computer science from the University of Karlsruhe in 1987.

Lawrence G. Palmer
Larry Palmer is a principal engineer with the Networks Engineering Architecture Group. He currently leads the DECspin project for the PC and has been with Digital since 1984. Larry is one of three software developers who initiated the PMAX software project for the DECstation 3100 product by porting the ULTRIX operating system to the MIPS architecture. He has a B.S. (highest honors, 1982) in chemistry from the University of Oklahoma and is a member of Phi Beta Kappa. He is co-inventor for five patents pending on enabling software technology for audio-video teleconferencing.

Ricky S. Palmer
Ricky Palmer is a principal engineer with the Computer Systems Group. He joined Digital in 1984 and currently leads the DECspin project. Ricky is one of three software developers who initiated the PMAX software project for the DECstation 3100 product by porting the ULTRIX operating system to the MIPS architecture. He has a B.S. (high honors, 1980) in physics, a B.S. (1980) in mathematics, and an M.S. (1982) in physics, all from the University of Oklahoma. He is co-inventor for five patents pending on enabling software technology for audio-video teleconferencing.

Davis Yen Pan
Davis Pan joined Digital in 1986 after receiving a Ph.D. in electrical engineering from MIT. A principal engineer in the Alpha Personal Systems Group, he is responsible for the development of audio signal processing algorithms for multimedia products. He was project leader for the Alpha/OSF base audio driver. He is a participant in the Interactive Multimedia Association Digital Audio Technical Working Group, the ANSI X3L3.1 Technical Working Group on MPEG standards activities, and the ISO/MPEG standards committee. Davis is also chair of the ISO/MPEG ad hoc committee of MPEG/audio software verification.

Paul B. Patrick, Sr.
Paul Patrick is a principal software engineer in the A

Robert Ulichney
Robert Ulichney received his Ph.D. (1986) in electrical engineering and computer science from the Massachusetts Institute of Technology and his B.S. (1976) in physics and computer science from the University of Dayton, Ohio. He is a consulting engineer in Alpha Personal Systems, where he manages the Codecs and Algorithms Group. Bob has nine patents pending for his contributions to a variety of Digital products, is the author of Digital Halftoning, published by The MIT Press, and serves as a referee for several technical societies including IEEE.

Theo M. van Hunnik
Theo van Hunnik is an engineering project manager for Digital's Workgroup Systems Software Engineering Group in Apeldoorn, Holland. He has over 20 years' software engineering experience in compiler development and the development of office automation products. Theo has participated in several international systems architecture task forces. He managed the development team for RetrievAll, the Megadoc image application framework.

Foreword
John A. Morse, Sr. Engineering Manager, Corporate Research & Architecture

In the late '80s, "multimedia" was a magic word. It seduced us with glimpses of a brave new world where audio and video technology merged with computer technology. It promised us everything from instant high-impact business presentations to virtual reality. Words like "paradigm shift" and "multibillion-dollar industry" were enough to snare both the technophiles and the eager entrepreneurs into believing that the world had suddenly changed, and we were all going to get rich in the process.

Somewhere on the way to the bank, reality set in, and it wasn't virtual. The reality is that multimedia is a lot harder than it looks. Successful multimedia requires a marriage between analog TV technology and digital computer technology; it requires reconciliation between a technical/professional marketplace and a consumer marketplace. As in any marriage, a lot of hard work is required to make it succeed, and much of that work is yet to be done.

For certain segments of the computer industry, multimedia was relatively easy to implement and so caught on quickly. The first successes have been at the extremes of the cost spectrum: very low-end desktop multimedia on the one hand, and very high-end virtual reality systems on the other. This has left Digital, with its traditional focus on the middle, temporarily out of the game.

For desktop multimedia, all that is required is the ability to capture and display video and audio. Since machines like the Commodore Amiga were already based more on TV technology than on computer technology (for cost reasons), they could be quickly and cheaply adapted to handle audio and motion video. Thus desktop multimedia was born. The CD-ROM, adapted from audio CD technology, was the perfect storage medium for distribution of multimedia content; and so for this market segment, CD-ROM and multimedia became almost synonymous. There has emerged a whole industry based around the production of multimedia titles on CD-ROM.

At the high end, for purposes such as full-realism aircraft simulation or virtual reality applications, the solution was to use the highest performance hardware available, at whatever expense. Typically, high-end, three-dimensional graphics systems were coupled either to supercomputers or to massively parallel processor arrays. The result was, and still is, impressive. But the cost is still so high that such virtual reality systems are not yet commercially viable except in specialized low-volume markets.

The vast area in the middle, into which all of Digital's business falls, has developed very slowly. The problem is that our business is based on a model of enterprise-wide computation. The computer systems we design and sell not only include processors and displays but incorporate networks and servers as well. To introduce multimedia into such a model, one touches every aspect of the system, from the desktop, through the network, and back to the servers. At every turn, we have found that the technology that has evolved over 30 to 40 years for handling numbers, text, and (more recently) two-dimensional and three-dimensional graphics is not quite right for video and audio. Every component of the system, both hardware and software, needs to change in some way. We need to evolve to a model of networked client-server multimedia computing. Change of this magnitude is a slow process.

Two challenges are so pervasive that almost every paper in this issue addresses them, each from a different perspective. First of all, multimedia involves the handling of large quantities of data. Second, for many applications, that data must be handled under very tight time constraints. The resulting stress and strain on all components of the system translates into a set of technical challenges that has occupied us for the last four years and promises to keep us busy through at least the rest of this decade.

Depending on the picture quality chosen, it may require from one million to one hundred million bytes of storage to save each second of live video in digital form. Since many applications of multimedia, such as archiving television footage for research or historic preservation purposes, will need to save many hours of video, it is easy to see that multimedia quickly builds demand for many gigabytes (1,000,000,000 bytes) of magnetic or optical disk storage. But storage is only part of the problem. Once such enormous amounts of data are stored, the challenge becomes how to retrieve a particular item of interest. Standard database techniques are oriented toward retrieval of text and numbers. Retrieval of audio and video information will require new file and database techniques that are only beginning to be understood.

An obvious application of multimedia technology, once the networks are in place, is teleconferencing. We can envision a day when we can connect to anyone any place in the world via the network and carry on a conversation with them, while each of us sees the other in full-motion video, using the audio and video capabilities of our desktop workstations and PCs. But realizing this vision has proved surprisingly hard. People expect the images they see to be synchronized with the sounds they hear, and they expect delays to be no worse than those experienced on a long-distance telephone call. Unfortunately, data networks have been designed to maximize throughput and reliability. They do this at the expense of some delay in transmission, a delay that is annoying at best, and unacceptable at worst, for teleconferencing applications.

Successful infusion of multimedia technology into enterprise-wide computation is proving to require change on a scale that almost no one anticipated. We at Digital are in the midst of this process of change, and this issue of the Digital Technical Journal is a snapshot, taken at one point in time, of that process. Together, the papers describe some of the toughest technical challenges that we face and in many cases give glimpses into possible solutions.

Robert Ulichney

Video Rendering

Digital Technical Journal Vol. 5 No. 2 Spring 1993

Video rendering, the process of generating device-dependent pixel data from device-independent sampled image data, is key to image quality. System components include scaling, color adjustment, quantization, and color space conversion. This paper emphasizes methods that yield high image quality, are fast, and yet are simple and inexpensive to implement. Particular attention is placed on the derivation and analysis of new multilevel dithering schemes. While permitting smaller frame buffers, dithering also provides faster transport of the processed image to the display, a key benefit for the massive pixel rates associated with full-motion video.

Perhaps the most influential characteristic governing the perceived value of a system that displays images is the way the pictures look. Image appearance is largely dependent upon the quality of rendering, that is, the process of taking device-independent data and generating device-dependent data tailored for a particular target display.

The topic of this paper is the processing of sampled image data and not synthetic graphics. For graphics rendering, primitives such as specifications of triangles are converted to displayable picture elements or pixels. The atomic elements handled by a video rendering system are device-independent pixels. Whereas a prerendered graphics image can be compactly represented as a collection of triangle vertices, prerendered video achieves compaction by means of compression techniques.

Sampling broadcast video requires a data rate of more than 9 million color pixels per second; the need of some relief for storage and networks is clear. Video compression reduces redundancy in the source image and thereby reduces the amount of data to be transmitted. Dramatic reductions in data rate can be achieved with little degradation in image quality. The Joint Photographic Experts Group (JPEG) standard for still frame and the Motion Picture Experts Group (MPEG) and Px64 standards for motion video are current committee compression techniques. Several other nonstandard schemes exist, including a simple compression method conducive to software-only implementation.

Video rendering receives decompressed image data as input. Since every decompressed pixel must be processed, speed is essential. This paper focuses on rendering methods that are fast, simple, and inexpensive to implement. Performance at video rates can be achieved with minimal hardware or even software-only solutions.

The Rendering Architecture section reviews the components of a rendering system and examines design trade-offs. The paper then presents details of new and efficient dithering implementations. Finally, video color mapping is discussed.

Rendering Architecture

Figure 1 illustrates the major phases of a video rendering system: (1) filter and scale, (2) color adjust, (3) quantize, and (4) color space convert.

Figure 1: Image Rendering System (decompressed image data, filter and scale, color adjust, quantize, color space convert, rendered pixels)

In the first stage, the original image data must be resampled to match the target window size. A separate scaling system should be used for the horizontal and vertical directions to handle the case where the pixel aspect ratio must be changed. For example, such asymmetric scaling is needed when the target display expects square pixels and the original pixels are not square.

The best filters to use in combination with scaling have been determined from a perceptual point of view. When limiting the bandwidth to reduce the data rate, a Gaussian filter with a standard deviation $\sigma = 0.30 \times$ output period is recommended. For interpolation, the filter preferred (because the filtered results looked most like the original) was a cascade of two: first, sharpen with a Laplacian filter, and second, follow by convolution with a Gaussian filter with $\sigma = 0.375 \times$ input period.

A typical sharpening scheme can be expressed by the following equation:

$I_{\text{sharp}}[x,y] = I[x,y] - \beta\,\Psi[x,y] * I[x,y]$,  (1)

where $I[x,y]$ is the input image, $\Psi[x,y]$ is a digital Laplacian filter, and "$*$" is the convolution operator. The nonnegative parameter $\beta$ controls the degree of sharpness, with $\beta = 0$ indicating no change in sharpness. When enlarging, sharpening should occur before scaling, and when reducing, sharpening should take place after scaling. The filtering discussed here is assumed to be two-dimensional, which requires image line buffering. For economy, horizontal-only filtering is sometimes used.

The simplest means of scaling is known as nearest-neighbor scaling, and its simplest implementation is based on the Bresenham scan conversion algorithm for drawing straight lines. This algorithm can be applied to image scaling and performed with only three registers and one adder. Further optimizations make this algorithm especially suited for real-time use.

The second stage of rendering is color adjust, most easily achieved with a look-up table (LUT). Each color component uses a separate adjust LUT. In the case of a luminance-chrominance color, an adjust LUT for the luminance component controls contrast and brightness, and LUTs for the chrominance components control saturation.

For so-called true-color frame buffers with 24-bit depths, visual artifacts that can result from insufficient amplitude resolution do not occur. With smaller frame buffers, restricting the amplitude of the color components red, green, and blue (R
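
As a rough illustration of the filter-and-scale stage described above, the following Python sketch applies equation (1) with a 3-by-3 Laplacian kernel and then resamples a scan line using a Bresenham-style integer error term. The kernel coefficients, the edge replication, and the function names `sharpen` and `scale_line_nearest` are assumptions made for illustration only; the text does not give them, and this is not the three-register design it cites.

```python
# Assumed 3x3 digital Laplacian; the paper does not list its coefficients.
LAPLACIAN = [[0, 1, 0],
             [1, -4, 1],
             [0, 1, 0]]


def sharpen(image, beta):
    """Equation (1): I_sharp = I - beta * (Laplacian convolved with I).

    `image` is a list of rows of numbers. Edges are replicated (an assumption),
    and results are not clipped to the displayable range.
    """
    h, w = len(image), len(image[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            lap = 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    lap += LAPLACIAN[dy + 1][dx + 1] * image[yy][xx]
            row.append(image[y][x] - beta * lap)
        out.append(row)
    return out


def scale_line_nearest(src, dst_len):
    """Nearest-neighbor resampling of one scan line with integer arithmetic only.

    An error accumulator decides when to step the source index, in the spirit
    of Bresenham line drawing; output sample i reads src[(i * len(src)) // dst_len].
    """
    src_len = len(src)
    out = []
    src_idx = 0
    err = 0
    for _ in range(dst_len):
        out.append(src[src_idx])
        err += src_len
        while err >= dst_len:
            err -= dst_len
            src_idx += 1
    return out
```

Asymmetric scaling would call `scale_line_nearest` with one target length along rows and another along columns; following the guidance above, `sharpen` would run before enlarging and after reducing.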

of amplitude resolution per color component, the 2-by-2-pixel case results in an average of ((2 × 2 × 8 luminance bits) + (8 + 8 chrominance bits))/(2 × 2 pixels), or 12 bits per pixel; similarly, the 4-by-4-pixel case results in ((4 × 4 × 8) + (8 + 8))/(4 × 4), or 9 bits per pixel. This approach requires expensive hardware to up-sample the chrominance components and convert the color space at video rates. These nonstandard frame buffers can also cause severe incompatibility problems with most applications that expect RGB frame buffers. While chrominance-subsampled frame buffers can accommodate most sampled natural images, thin-line graphics can be annihilated.

The third alternative for quantization is to use a dithering method. Several methods exist that are designed primarily for binary output, but all are extendable to multilevel color. A "level" is a shade of gray, from black to white, or a shade of a color component, from black to the brightest value. The basic principle of dithering is to use the available subset of colors to produce, by judicious arrangement, the illusion of any color in between. Although neighborhood operations, most notably error diffusion, produce good-quality dithering, they are computationally complex and require additional storage. For video processing, where speed is essential, we turned our focus to those dithering methods that are point operations, that is, methods that operate on the current pixel only without considering its neighbors. Each color component of every pixel in the image has an associated "noise" or dither amplitude that is added to it before that component is passed to a uniform quantizer.

Historically, the first dithering method used for video processing was white noise dithering, where a pseudorandom number was added to each luminance value before quantization. This method was practiced soon after the dawn of television [16]. However, the low-frequency energy in white noise causes undesirable textures and graininess.

A preferred method is the point process of ordered dithering, where a deterministic noise array tiles the plane in a periodic manner. Dither arrays can be designed to minimize low-frequency texture. The most popular are the so-called recursive tessellation arrays [17, 18]. These arrays yield results superior to those of white noise dithering but suffer from structured rectangular patterns.

A new ordered dither array design, called the "void-and-cluster" method, eliminates both the low-frequency textures of white noise and the rectangular patterns of recursive tessellation arrays [19]. The name describes the dither array design process in which voids and clusters are located and mitigated.

For the high-speed case of motion video, an ordered dithering scheme has important advantages over chrominance-subsampled frame buffers and histogram-based approaches. Quantization by dithering allows the use of conventional frame buffers, does not require the time-consuming process of making two passes over each frame (or every Nth frame), does not cause other applications to change color maps with every Nth frame, and allows any number of colors to be selected at render time. Also, experiments have shown that the image quality achieved by dithering is very competitive with the other methods, when compared over a range of sample images. Even when 24-bit frame buffers are available, the increased speed of loading three or four 8-bit color pointers or index values in the time required to load a single 24-bit pixel makes dithering a viable alternative in the design of desktop video systems.

By way of comparison, Figure 2 illustrates some of the methods described in this section. A 240-by-560-pixel, 8-bit monochrome image was rendered to only two levels and displayed at 100 dots per inch (dpi).
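
To make the ordered dithering idea concrete, the sketch below builds a recursive tessellation template by one common 2-by-2 recursion and uses it as a periodic threshold array to render an 8-bit image to two levels. The function names, the base pattern orientation, and the threshold normalization are assumptions chosen for illustration; the void-and-cluster design process is not reproduced here.

```python
def recursive_tessellation(order):
    """Return a (2**order)-square template holding each value 0 .. 4**order - 1 once."""
    base = ((0, 2), (3, 1))  # assumed 2x2 seed pattern
    template = [[0]]
    for _ in range(order):
        n = len(template)
        # Each quadrant is the previous template scaled by 4 plus a quadrant offset.
        template = [
            [4 * template[y % n][x % n] + base[y // n][x // n] for x in range(2 * n)]
            for y in range(2 * n)
        ]
    return template


def ordered_dither_binary(image, template):
    """Render an 8-bit image (values 0..255) to output levels 0 and 1.

    The template tiles the image periodically; this is a point operation,
    so each pixel is processed without reference to its neighbors.
    """
    m, n = len(template), len(template[0])
    levels = m * n  # number of distinct template values
    out = []
    for y, row in enumerate(image):
        out_row = []
        for x, pixel in enumerate(row):
            t = template[y % m][x % n]
            # On when pixel/255 exceeds the normalized threshold (t + 1/2)/levels,
            # written with integers to avoid rounding issues.
            out_row.append(1 if 2 * levels * pixel > 255 * (2 * t + 1) else 0)
        out.append(out_row)
    return out
```

With a 4-by-4 template, a uniform mid-gray input turns on half of the pixels in each tile, which is how the arrangement of two levels gives the impression of an intermediate shade.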
Figure 2a depicts an image that was dithered with white noise; in Figure 2b, the same image was dithered using an 8-by-8 recursive tessellation dither array; and Figure 2c shows the image dithered with the new 32-by-32 void-and-cluster array. To illustrate the effect of sharpening, Figure 2d shows the image in Figure 2c presharpened using a digital Laplacian filter as in equation (1), with a sharpening factor of $\beta = 2.0$. The goal of this coarse example is to amplify the different effects. The same methods apply to multilevel and color output, where the resulting quality is much higher.

Figure 2 Examples of Rendering to Two Output Levels: (a) Dither with a White Noise Threshold; (b) Dither with an 8-by-8 Recursive Tessellation Threshold Array; (c) Dither with a 32-by-32 Void-and-cluster Threshold Array; (d) Same as (c) with Laplacian Sharpening, $\beta = 2.0$

Fast Multilevel Dithering

This section presents the details of simple, yet powerful new designs to perform multilevel ordered dithering. The simplicity of these methods allows for implementation with minimum hardware or software only, yet guarantees output that preserves the mean of the input. The designs are flexible in that they allow dithering from any number of input levels $N_i$ to any number of output levels $N_o$, provided $N_i \ge N_o$. Note that $N_i$ and $N_o$ are not restricted to be powers of two.

Each color component of a color image is treated as an independent image. The input image $L_i$ can have values

$$L_i \in \{0, 1, 2, \ldots, (N_i - 1)\},$$

and the output image $L_o$ can have values

$$L_o \in \{0, 1, 2, \ldots, (N_o - 1)\}.$$

A deterministic dither array of size $M \times N$ is used that is periodic and tiles the entire input image. To simplify addressing of this array, $M$ and $N$ should each be powers of two. A dither template defines the order in which dither values are arranged. The elements of the dither template $T$ have values

$$T \in \{0, 1, 2, \ldots, (N_t - 1)\},$$

where $N_t$ is the number of template levels, which represent the levels against which image input values are compared to determine their mapping to the output values. The dither template is thus central to determining the nature of the resulting dither patterns.

Figure 3 shows a dithering system that comprises two memories and an adder. The system takes an input level $L_i$ at image location $[x, y]$ and produces output level $L_o$ at the corresponding location in the dithered output image. The dither array is addressed by $x'$ and $y'$, which represent the low-order bits of

the image address. The selected dither value $d[x', y']$ is added to the input level to produce the sum $s$. This sum is then quantized by addressing a quantizer LUT to produce the output level $L_o$.

Figure 3 Dithering System with Two LUTs

The trick to achieving mean-preserving dithering is to properly generate the LUT values. The dither array is a normalized version of the dither template specified as follows:

$$d[x', y'] = \mathrm{int}\left(\Delta_d \left(T[x', y'] + \tfrac{1}{2}\right)\right), \qquad (2)$$

where $\Delta_d$, the step size between normalized dither values, is defined as

$$\Delta_d = \frac{\Delta_Q}{N_t}, \qquad (3)$$

and $\Delta_Q$ is the quantizer step size

$$\Delta_Q = \frac{N_i - 1}{N_o - 1}. \qquad (4)$$

Note that $\Delta_Q$ also defines the range of dither values. The quantizer LUT is a uniform quantizer with $N_o$ equal steps of size $\Delta_Q$.

The precise expressions in equations (2), (3), and (4) were arrived at through extensive analysis of the average output resulting from processing input images of a constant value, over a wide range of $N_i$, $N_o$, and $N_t$.

One-memory Dithering System

Using the above expressions, it is possible to simplify the system by exchanging one degree of freedom for another. A bit shifter can replace the quantizer LUT at the expense of forcing the number of input levels $N_i$ to be set by the system. For hardware implementations, this design affords a considerable cost reduction.

The system and method of Figure 3 assume that $N_i$ is given as a fixed parameter, as is usually the case with most imaging systems and file formats. However, for image sources such as hardware that generates synthetic graphics, arbitrarily setting $N_i$ often has no effect on the amount of computation involved. If an adjust LUT is used to modify the image data, including a gain makes a "modified adjust LUT." Figure 4 depicts such a system, where $L_r$ is the raw input level. The unadjusted or raw input image can have the values

$$L_r \in \{0, 1, 2, \ldots, (N_r - 1)\},$$

where $N_r$ is the number of raw input levels, typically 256. Therefore, the modified adjust LUT must impart a gain of

$$\frac{N_i - 1}{N_r - 1}.$$

Figure 4 One-memory Dithering System with an Adjust LUT and Bit Shifter

To solve for $N_i$, recall that in the method of Figure 3 the quantizer was defined to have equal steps of size $\Delta_Q$ as defined in equation (4). The quantizer LUT can be replaced by a simple R-bit shifter, if the variable $\Delta_Q$ can be forced to be an exact binary number,

$$\Delta_Q = 2^R. \qquad (5)$$

$N_i$ can then be set by the expression

$$N_i = (N_o - 1) 2^R + 1. \qquad (6)$$

The integer $R$ is the number of bits the R-bit shifter shifts to the right to achieve quantization. Specifying $R$ in terms of $N_i$, equation (6) becomes

$$R = \log_2\left(\frac{N_i - 1}{N_o - 1}\right). \qquad (7)$$

To completely specify this problem requires specifying the range for $N_i$. It is reasonable to do this by specifying the number of bits $b$ by which the image input values are to be represented. Specifying $b$ limits $N_i$ to the range

$$2^{b-1} < N_i \le 2^b. \qquad (8)$$

Parameter $b$ will be a key value in specifying the resulting system.

Given the two expressions, (7) and (8), and the two unknowns, $R$ and $N_i$, a unique solution exists because the range of $N_i$ is less than a factor of two, and $R$ and $N_i$ are integers. To solve for $R$, substitute equation (6) for $N_i$ in equation (8). The resulting inequality, $2^{b-1} < (N_o - 1) 2^R + 1 \le 2^b$, can be rearranged as

$$\log_2\left(\frac{2^{b-1} - 1}{N_o - 1}\right) < R \le \log_2\left(\frac{2^b - 1}{N_o - 1}\right). \qquad (10)$$

Since $2 \le N_o \le N_i$, the range of the expression in equation (10) must be less than one. Hence, given that $R$ is an integer,

$$R = \mathrm{int}\left[\log_2\left(\frac{2^b - 1}{N_o - 1}\right)\right]. \qquad (11)$$

$N_i$ in equation (6) is now specified.

As an example, consider the case where $N_o$ equals 87 (levels), $b$ equals 9 (bits), $N_t$ equals 1,024 (levels, for a 32-by-32 template), and $N_r$ equals 256 (levels). Thus, $R$ equals 2, and the R-bit shifter drops the least-significant 2 bits. $N_i$ equals 345 (levels); the dither array is normalized by equation (2) with $\Delta_d = 1/256$; and the gain factor to be included in the modified adjust LUT is 344/255. This data is loaded into the system represented by Figure 4 and uniformly maps input pixels across the 87 true output levels, giving the illusion of 256 levels.

The output image that results from either of the dithering systems illustrated in Figure 3 or Figure 4 appears to contain more effective levels than are actually displayed. An effective level is either a perceived average level that is dithered between two true output levels or shades or an actual true output level. A small number of template levels $N_t$ dictates the resulting number of effective levels. When $N_t$ is large, the number of effective levels is equal to the number of input levels $N_i$, because it is not possible to display more effective outputs than inputs.
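The parameter choices in the worked example can be reproduced with a few lines of integer arithmetic. The following is a minimal sketch, not part of the original design; the function name `one_memory_parameters` and its argument names are illustrative assumptions, and the formulas are equations (3), (6), and (11) together with the adjust-LUT gain.

```python
from fractions import Fraction


def one_memory_parameters(n_o, b, n_t, n_r):
    """Derive R, N_i, Delta_d and the adjust-LUT gain (hypothetical helper).

    n_o: output levels, b: input bit depth, n_t: template levels,
    n_r: raw input levels.
    """
    if n_o < 2 or n_o > 2 ** b:
        raise ValueError("need 2 <= N_o <= 2**b")
    # Equation (11): R = int(log2((2**b - 1) / (N_o - 1))).
    # floor(log2(z)) equals floor(log2(floor(z))) for z >= 1, so integer
    # division followed by bit_length() avoids floating-point rounding.
    q = (2 ** b - 1) // (n_o - 1)
    r = q.bit_length() - 1
    n_i = (n_o - 1) * 2 ** r + 1        # equation (6)
    delta_d = Fraction(2 ** r, n_t)     # equation (3) with Delta_Q = 2**R
    gain = Fraction(n_i - 1, n_r - 1)   # gain of the modified adjust LUT
    return r, n_i, delta_d, gain


r, n_i, delta_d, gain = one_memory_parameters(n_o=87, b=9, n_t=1024, n_r=256)
print(r, n_i, delta_d, gain)
```

Exact fractions are used so that the step size and gain can be compared directly with the values quoted in the text.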
More precisely,

$$\text{Effective Levels} = \begin{cases} N_t (N_o - 1) + 1, & \Delta_Q / N_t \ge 1, \\ N_i, & \Delta_Q / N_t < 1. \end{cases} \qquad (12)$$

Note that $\Delta_Q / N_t$ in equation (12) is equal to $\Delta_d$. When $\Delta_d < 1$, the normalization of the dither array, i.e., equation (2), results in integer truncated values that are not all unique. At this point, the number of effective levels saturates to $N_i$.

Data Width Analysis

The design of an efficient dithering system, particularly in hardware, depends on knowing the number of bits required for all data paths in the system. This section presents an analysis of the one-memory dithering system shown in Figure 4.

The system input $b$, i.e., the bit depth of the image input values, limits the data path for $L_i[x, y]$. The analysis shows the derivation of the precise bit depths for the other data paths. In summary, the derivation proves that the dither values in the dither matrix memory require $R$ bits, where $R_{max} = (b - 1)$, and that $s = L_i + d$ (and thus the R-bit shifter) requires only $b$ bits.

Bits Needed for Dither Matrix. The amount of memory needed to store the dither matrix is an important concern; $d_{max}$ denotes the maximum value. To determine $d_{max}$, substitute the maximum value of $T[x', y']$, which is $(N_t - 1)$, into equation (2). The resulting equation is

$$d_{max} = \mathrm{int}\left(2^R \left(1 - \frac{1}{2 N_t}\right)\right). \qquad (13)$$

$d_{max}$, which depends on $N_t$, thus has a value in the range

$$2^{R-1} \le d_{max} \le 2^R - 1. \qquad (14)$$

For the case of a dither matrix with one value, namely $N_t = 1$, $d_{max}$ equals the lower end of this range. $d_{max}$ equals the high end of the range for large dither matrices, where $2^{R-1} \le N_t$. An important observation is that for all values in the range of expression (14), the number of bits needed is exactly $R$.
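The mean-preservation and data-width claims can be checked numerically for the worked example ($N_o = 87$, $b = 9$, $N_t = 1{,}024$, hence $R = 2$ and $N_i = 345$). The sketch below is only an illustration under stated assumptions: the function names are hypothetical, and a simple ramp stands in for the 32-by-32 void-and-cluster template, which is sufficient here because the checks depend only on the set of template values, not on their spatial order.

```python
def dither_values(template, r, n_t):
    """Equation (2) with Delta_d = 2**R / N_t, in exact integer arithmetic.

    int(Delta_d * (T + 1/2)) == (2**R * (2*T + 1)) // (2 * N_t)
    """
    return [((2 * t + 1) << r) // (2 * n_t) for t in template]


def dither_pixel(l_i, d, r):
    """Adder followed by the R-bit shifter of the one-memory system."""
    return (l_i + d) >> r


# Worked example from the text.
N_O, B, N_T, R, N_I = 87, 9, 1024, 2, 345
template = list(range(N_T))   # stand-in template: every value 0..N_t-1 once
d = dither_values(template, R, N_T)

d_max = max(d)
s_max = (N_I - 1) + d_max

# For a constant input, the outputs summed over one period of the dither
# array and rescaled by the quantizer step 2**R reproduce the input level.
mean_preserved = all(
    sum(dither_pixel(l_i, dv, R) for dv in d) * 2 ** R == l_i * N_T
    for l_i in range(N_I)
)
print(d_max, s_max, mean_preserved)
```

In this configuration the dither values fit in $R$ bits and the adder output fits in $b$ bits, in line with the summary given above.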

From equation (11), the value of $R$ increases as $N_o$ decreases. The smallest possible value of $N_o$ is 2, which is for bitonal output. In this case, the maximum value of $R$ is

$$R_{max} = \mathrm{int}(\log_2(2^b - 1)) = b - 1. \qquad (15)$$

So, the number of bits needed for the dither values is $R$, which can be as large as $(b - 1)$.

Bit Width of Adder. Recall that $s[x, y] = L_i[x, y] + d[x', y']$. The number of bits needed for this sum determines the size of the adder and the size of the R-bit shifter. $L_i$ can be at most $(N_i - 1)$ and, as determined in the last section, $d_{max}$ can be at most $(2^R - 1)$. So,

$$s_{max} = (N_i - 1) + (2^R - 1). \qquad (16)$$

From equation (6),

$$N_i - 1 = 2^R (N_o - 1), \qquad (17)$$

which gives

$$s_{max} = 2^R (N_o - 1) + (2^R - 1) = 2^R N_o - 1. \qquad (18)$$

We can express $R$ in terms of $N_o$ by using equation (11):

$$R = \mathrm{int}(\log_2(2^b - 1) - \log_2(N_o - 1)). \qquad (19)$$

Each of the two terms in equation (19) can be expressed in terms of an integer part and a fractional part:

$$\log_2(2^b - 1) = (b - 1) + \epsilon_1, \qquad (20)$$

$$\log_2(N_o - 1) = K + \epsilon_2, \qquad (21)$$

where $K$ is an integer, and $0 \le \epsilon_2 < 1$.

Now equation (19) can be rewritten as

$$R = (b - 1) - K + \mathrm{int}(\epsilon_1 - \epsilon_2). \qquad (22)$$

$\epsilon_2$ is largest when $N_o$ (an integer) is a large power of 2. Because $N_o$ cannot be greater than $N_i$, $2^b \ge N_o$. This fact, combined with equations (20) and (21), yields the further condition $\epsilon_1 \ge \epsilon_2$.

Therefore, $\mathrm{int}(\epsilon_1 - \epsilon_2)$ in equation (22) must be equal to zero, and the value of $R$ becomes

$$R = b - 1 - K. \qquad (23)$$

We can express $N_o$ in equation (18) in terms of the same integer $K$ of equation (21) by noting that

$$\log_2 N_o = K + \epsilon_3, \qquad (24)$$

where 0

Prior to proceeding to the quantize subsystem shown in Figure 1, all color components must be at the same final spatial resolution for a dithering method to work correctly. Chrominance components, then, need to be up-sampled to the same rate as luminance components.

Although the chromaticities of the RGB primaries of the major television standards vary slightly, all television systems transmit and store the color data in YUV space. Y represents the achromatic component that is loosely called the luminance component. (The term luminance has a specific photometric definition that is not what is represented in a video Y component.) U and V are color difference components, where U is proportional to (Blue - Y) and V is proportional to (Red - Y).

Figure 5 is an orthographic projection of YUV space. Inside the YUV rectangular solid is the parallelepiped of "feasible" RGB space. Feasible RGB points are those that are nonnegative and are not greater than the maximum supported value. For reference, the corners of the RGB parallelepiped are labeled black (K), white (W), red (R), green (G), blue (B), cyan (C), magenta (M), and yellow (L). RGB and YUV values are related linearly and can be interconverted by means of a 3-by-3 matrix multiply.

Figure 5 Feasible RGB Values in the YUV Color Space (Y-axis out)

In the United States video broadcast system, the chrominance plane (i.e., the U-V plane in Figure 5) is rotated 33 degrees by introducing a phase in the quadrature modulation of the chrominance signal. The resulting rotated chrominance signals are renamed I and Q (for inphase and quadrature), but the unmodulated color space is still YUV.

Figure 6 shows the back end of a rendering system that uses dithering as a quantization step prior to color space conversion. A serendipitous consequence of dithering is that color space conversion can be achieved by means of table look-up. The collective address formed by the dithered Y, U, and V values is small enough to require a reasonably sized color mapping LUT. There are two advantages to this approach. First, a costly dematrixing operation is not required, and second, infeasible RGB values can be intelligently mapped back to feasible space off-line during the generation of the color mapping LUT.

Figure 6 System for Dithering Three-color Components and Color Mapping the Collective Result

This second advantage is an important one, because 77 percent of the valid YUV coordinates are in invalid RGB space, i.e., the space around the RGB parallelepiped in Figure 5. Color adjustments such as increasing the brightness or saturation can push otherwise valid RGB values into infeasible space. In alternative systems that perform color conversion by dematrixing, out-of-bounds RGB values are simply truncated.
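Off-line generation of such a color mapping LUT might look like the following sketch. Everything specific in it is an assumption made for illustration: the dematrixing coefficients and the U and V ranges are the commonly quoted analog YUV values, the function names are hypothetical, and plain clamping is used only as a placeholder where a real table generator would apply a more careful mapping back to feasible space.

```python
def yuv_to_rgb(y, u, v):
    """Dematrix one YUV triple (commonly quoted coefficients, an assumption)."""
    r = y + 1.140 * v
    g = y - 0.395 * u - 0.581 * v
    b = y + 2.032 * u
    return r, g, b


def clamp01(c):
    return min(1.0, max(0.0, c))


def build_color_lut(n_y, n_u, n_v, u_max=0.436, v_max=0.615):
    """Map every dithered (Y, U, V) index triple to an 8-bit RGB triple."""
    lut = {}
    infeasible = 0
    for iy in range(n_y):
        for iu in range(n_u):
            for iv in range(n_v):
                # Sample each axis at the center of its quantization cell.
                y = (iy + 0.5) / n_y
                u = ((iu + 0.5) / n_u * 2 - 1) * u_max
                v = ((iv + 0.5) / n_v * 2 - 1) * v_max
                rgb = yuv_to_rgb(y, u, v)
                if any(c < 0.0 or c > 1.0 for c in rgb):
                    infeasible += 1
                    # Placeholder policy: clamp each component. A smarter
                    # gamut mapping would replace this line.
                    rgb = tuple(clamp01(c) for c in rgb)
                lut[(iy, iu, iv)] = tuple(round(255 * c) for c in rgb)
    return lut, infeasible / (n_y * n_u * n_v)


lut, infeasible_fraction = build_color_lut(16, 16, 16)
print(len(lut), round(infeasible_fraction, 2))
```

Because the table is built once, the cost of the per-entry mapping policy does not affect rendering speed; at run time the dithered Y, U, and V indices are simply concatenated into an address.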
Truncation effectively maps colors back to feasible RGB space along lines perpendicular to a parallelepiped surface illustrated in Figure 5, which can change the color in an undesirable way. The use of a color mapping LUT avoids these problems.

Summary

Video is becoming an increasingly important data type for desktop systems. This is especially true as distinctions between computing, consumer electronics, and communications continue to blur. While many factors contribute to the impression one has of the value of a product that displays information, the way the images look can make the biggest difference. This paper focuses on rendering system designs that are fast and low cost, produce good-quality video, and are conducive to hardware or software implementation.

References

1. Special Issue on Digital Multimedia Systems, Communications of the ACM, vol. 34, no. 4 (April 1991).
2. B. Neidecker-Lutz and R. Ulichney, "Software Motion Pictures," Digital Technical Journal, vol. 5, no. 2 (Spring 1993, this issue): 19-27.
3. W. Schreiber and D. Troxel, "Transformation between

9. S. Wan, K. Wong, and P. Prusinkiewicz, "An Algorithm for Multidimensional Data Clustering," ACM Transactions on Mathematical Software, vol. 14, no. 2 (1988): 153-162.
10. C. Sigel, R. Abruzzi, and J. Munson, "Chromatic Subsampling for Display of Color Images," Optical Society of America Topical Meeting on Applied Vision, 1989 Technical Digest Series, vol. 16 (1989): 158-161.
11. A. Luther, Digital Video in the PC Environment (New York, NY: Intertext Publications, McGraw-Hill, 1989): 193-194.
12. L. Glass, "Digital Video Interactive," Byte (May 1989): 284.
13. P. Roetling, "Binary Approximation of Continuous-tone Images," Photographic Science and Engineering, vol. 21 (1977): 60-65.
14. J. Stoffel and J. Moreland, "A Survey of Electronic Techniques for Pictorial Reproduction," IEEE Transactions on Communications, vol. 29 (1981): 1898-1925.
15. J. Jarvis, C. Judice, and W. Ninke, "A Survey of Techniques for the Display of Continuous-tone Pictures on Bilevel Displays," Computer Graphics and Image Processing, vol. 5 (1976): 13-40.
16. W. Goodall, "Television by Pulse Code Modulation," Bell System Technical Journal, vol. 30 (1951): 33-49.
17. B. Bayer, "An Optimum Method for Two Level Rendition of Continuous-tone Pictures," Proceedings of the IEEE International Conference on Communications, Conference Record (1973): (26-11)-(26-15).
18. R. Ulichney, "Frequency Analysis of Ordered Dither," Proceedings of the Society of Photo-optical Instrumentation Engineers (SPIE), vol. 1079 (1989): 361-373.
19. R. Ulichney, "The Void-and-cluster Method for Dither Array Generation," The Society for Imaging Science and Technology/Symposium on Electronic Imaging Science and Technology (IS&T/SPIE) (February 1993).

Burkhard K. Neidecker-Lutz
Robert Ulichney

Software Motion Pictures

Software motion pictures is a method of generating digital video on general-purpose desktop computers without using special decompression hardware. The compression algorithm is designed for rapid decompression in software and generates deterministic data rates for use from CD-ROM and network connections. The decompression part offers device independence and integrates well with existing window systems and application programming interfaces. Software motion pictures features a portable, low-cost solution to digital video playback.

The necessary initial investment is one of the major obstacles in making video a generic data type, like graphics and text, in general-purpose computer systems. The ability to display video usually requires some combination of specialized frame buffer, decompression hardware, and a high-speed network.

A software-only method of generating a video display provides an attractive way of solving the problems of cost and general access but poses challenging questions in terms of efficiency. Although several digital video standards either exist or have been proposed, their computational complexity exceeds the power of most current desktop systems.[1] In addition, a compression algorithm alone does not address the integration with existing window system hardware and software.

Software motion pictures (SMP) is both a video compression algorithm and a complete software implementation of that algorithm. SMP was specifically designed to address all the issues concerning integration with desktop systems. A typical application of SMP on a low-end workstation is to play back color digital video at a resolution of 320 by 240 pixels with a coded data rate of 1.1 megabits per second.
On a DECstation 5000 Model 240 HX workstation, this task uses less than 25 percent of the overall machine resources.

Together with suitable audio support (audio support is beyond the scope of this paper), software motion pictures provides portable, low-cost digital video playback.

The SMP Product

Digital supplies SMP in several forms. The most complete version of SMP comes with the XMedia Toolkit. This toolkit is primarily designed for developers of multimedia applications who include the SMP functionality inside their own applications. Figure 1 shows the user controls as displayed on a workstation screen. SMP players are also available on Digital's freeware compact disc (CD) for use with Alpha AXP workstations running the DEC OSF/1 AXP operating system. In addition, SMP playback is included with several Digital products such as the video help utility on the SPIN (sound picture information networks) application, as well as other vendors' products, such as the MediaImpact multimedia authoring system.[2]

In the XMedia Toolkit, access to the SMP functions is possible through X applications, command line utilities, and C language libraries. The applications and utilities support simple editing operations, frame capture, compression, and other functions. Most of these features are intended for use by producers of simple file formats called SMP clips.

The decompression functionality is offered as an X toolkit widget that readily integrates into the Open Software Foundation's (OSF) Motif-based applications. Multiple SMP codecs (compressors/decompressors) on a given screen all share the same color resources with one another and with the Display PostScript X-server extension, which is offered by all major workstation vendors.
It also plays well with the standard color allocations used in the Macintosh QuickDraw rendering system and Microsoft Windows standard color allocations.

To facilitate flexible but simple access to entire films of SMP frames, SMP defines SMP clips. Rather than publishing that file format directly, all applications and widgets are accessed through an encapsulating library. This method allows future releases to have application-transparent changes to the underlying file structure and completely different ways to store and obtain SMP frames.

a coded data rate for this size and frame rate that would allow playback from a CD-ROM. To achieve this goal, we limited the coded data rate for the video component to 135 to 142 kilobytes per second, leaving 8 to 15 kilobytes per second for audio. In addition, we had to limit fluctuations of the coded data rate to allow sensible use of bandwidth reservation protocols for playback over a network without complex buffering schemes.

More interesting were the issues that became apparent when we attempted to use the prototype for real applications. The digital video material had to be usable on a wide range of display types, and due to its large volume, keeping specialized versions for different displays was prohibitive. We would have to adapt the rendition of the coded material to the device-dependent color capabilities of the target display at run time.

Our design center used 8-bit color-mapped displays. These were (and still are) the most common color displays, and the demonstrator was based on them. Integration of the video into applications in a multitasking environment necessitated that computational as well as color resources were available for use by other applications. The system would have to perform cooperative sharing of the scarce color resources on displays with limited color capabilities.

From the perspective of portability, we needed to conform to existing X11 interfaces, without any hidden back doors into the window system. The X Window System affords no direct way of writing into the frame buffer. Rather, the MIT-SHM extension is used to write an image into a shared memory segment, and then the X server must copy it into the frame buffer. This method would impact our already strained CPU budget for the codec operation. We would need to decompress video in our code and have the X server perform a copy operation of the decompressed video to the screen, again using the main CPU.
Quick measurements showed that the copy alone would use approximately 50 percent of the ... computationally very expensive and usually defeats real-time encoding, even for special-purpose hardware.

One of the earliest algorithms was digital video interactive (DVI) from Intel/IBM. It comes in two variations, real-time video (RTV) and production level video (PLV). RTV uses an unknown block encoding scheme and frame differencing. PLV adds motion estimation to this. RTV is comparable to SMP in compression efficiency, computationally more expensive, and much worse in image quality. PLV cannot be done in software and requires special-purpose supercomputers for compression. Compression efficiency of PLV is about twice as good as SMP, and image quality is somewhat better. The more recent INDEO video boards from Intel use RTV.

In 1992 Apple introduced QuickTime, which contains several video compression codecs. The initial Roadpizza video codec uses simple frame differencing and a block encoding similar to CCC, but without the color quantization step. (This is a guess based on the visual appearance and performance characteristics.) Compression efficiency of Roadpizza is three times worse than SMP, and image quality is comparable on 24-bit displays and much worse than SMP on 8-bit displays. Performance is

difficult to compare since SMP does not yet run on Macintosh computers.

The newer Compact Video (CV) codec introduced in QuickTime version 1.5 is similar to CCC with frame differencing and has compression efficiency much closer to SMP. Image quality on 8-bit displays is still lower than SMP, and compression times are almost unusable (i.e., long).

The newest entry into the market for software video codecs is the Video 1 codec in Microsoft's Video for Windows product. Very little is known about it, but it seems to be close to CCC with frame differencing. Finally, Sun Microsystems has included CCC with frame differencing in their upcoming version of the XIL imaging library.

Three well-known standards for image and video compression have been established by the Joint Photographic Experts Group (JPEG) and the Motion Picture Experts Group (MPEG) committees of the International Organization for Standardization (ISO) and by the Comité Consultatif Internationale de Télégraphique et Téléphonique (CCITT). These standards are computationally too expensive to be performed in software in all but the most powerful workstations today.

The Algorithm

The SMP algorithm is a pixel-based, lossy compression algorithm, designed for minimum CPU loading. It features acceptable image quality, medium compression ratios, and a totally predictable coded data rate. No entropy-based or computationally expensive transform-based coding techniques are used. The downside of this approach is a limited image quality and compression ratio; however, for a wide range of applications, SMP quality is sufficient.

Block Truncation Coding

In 1978, the method referred to as block truncation coding (BTC) was independently reported in the United States by Mitchell, Delp, and Carlton and in Japan by Kishimoto, Mitsuya, and Hoshida.[3, 6] BTC is a gray-scale image compression technique. The image is first segmented into 4 by 4 blocks. For each block, the 16-pixel average is found and used as a threshold.
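A minimal sketch of the per-block BTC step as this section describes it: threshold at the block mean, record a 1-bit mask, and represent each of the two groups by its own average. The function names, the row-major pixel order, and the bit order of the mask are assumptions made for illustration, as are the sample pixel values.

```python
def btc_encode(block):
    """Encode 16 gray values (a row-major 4 by 4 block) as (mask, low, high)."""
    assert len(block) == 16
    mean = sum(block) / 16
    mask = 0
    low, high = [], []
    for i, p in enumerate(block):
        if p > mean:
            mask |= 1 << i      # bit i set: pixel i belongs to the high group
            high.append(p)
        else:
            low.append(p)
    # The low group is never empty, because the minimum cannot exceed the mean.
    a = round(sum(low) / len(low))
    # A perfectly flat block has no pixel above the mean; reuse the low value.
    b = round(sum(high) / len(high)) if high else a
    return mask, a, b


def btc_decode(mask, a, b):
    """Fill the block with the two values under the mask."""
    return [b if (mask >> i) & 1 else a for i in range(16)]


block = [100] * 8 + [200] * 8           # illustrative values only
mask, a, b = btc_encode(block)
print(hex(mask), a, b)
print(btc_decode(mask, a, b) == block)
```

With 8-bit gray values, the encoded block is a 16-bit mask plus two bytes, that is, 32 bits for 16 pixels.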
Each pixel is then assigned to a high or a low group in relation to this threshold. An example of the first stage in the coding process is shown in Figure 2a, in which the sample mean is 101. Each pixel in the block is thus truncated to 1 bit, based on this threshold (see Figure 2b).

Figure 2 Block Truncation Coding of a 4 by 4 Block: (a) The average of these 16 pixels is 101. (b) The average of 101 is used as a threshold to segment the block.

For each of the two groups, the average is then calculated again, giving a low average, $a$, and a high average, $b$. Mathematically, the first and second statistical moments of the block are preserved. Therefore, for a block of $m$ pixels, with $q$ pixels greater than the sample mean $\bar{x}$, and sample variance $\bar{\sigma}^2$, it can be shown that

$$a = \bar{x} - \bar{\sigma} \sqrt{\frac{q}{m - q}}, \qquad b = \bar{x} + \bar{\sigma} \sqrt{\frac{m - q}{q}}.$$

More intuitively, the bit mask represents the shape of things in the block, and the average luminance and contrast of the block contents are preserved. With this coding method, for blocks of 4 by 4 pixels and 8-bit gray values, a 16-bit mask and two 8-bit values encode the 16 pixels in 32 bits for a rate of 2.0 bits per pixel.

Color Cell Compression

Lema and Mitchell first extended BTC

blue values. This allows each block to be represented by a 16-bit mask and two 24-bit color values, for a coding rate of 4 bits per pixel.

The 24-bit values are mapped to a set of 256 8-bit color index values by means of a histogram-based palette selection scheme known as the median cut algorithm. Thus every block can be represented by two 8-bit color indices and the 16-bit mask, yielding 2 bits per pixel; however, each image frame must also send the table of 256 24-bit color values.

Software Motion Pictures Compression

With our goal of 320 by 240 image resolution playback at 15 frames per second, straight CCC coding would have resulted in a data stream of more than 292 kilobytes per second, which is well beyond the capabilities of standard CD-ROM drives. Thus SMP needed to improve the compression ratio of CCC approximately twofold.

Given that we could not apply any of the more expensive compression techniques, we looked for computationally cheap data-reduction techniques. Since most of these techniques negatively impact image quality, we needed a visual test bed to judge the impact of each change.

We computed the images off-line for a short sequence, frame by frame, and then preloaded the images into the workstation memory. The player program then moved the images to the frame buffer in a loop, allowing us to view the results as they would be seen in the final version. The use of this technique provided two advantages. First, we could discover motion artifacts that were invisible in any individual frame. Second, we could judge the covering aspects of motion, which tends to brush over some defects that look objectionable in a still frame.

At first, interframe or frame difference coding looked like a reasonable technique for achieving better compression results without sacrificing image quality, but this was highly dependent on the nature of the input material.
Due to the low CPU budget, we could not use any of the more elaborate motion compensation algorithms, so even slight movements in the input video material largely defeated frame differencing. Typically, we achieved only 10 percent better compression with interframe coding, while introducing considerable complexity to the compression and decoding operations. As a result, we dropped interframe coding and made SMP a pure intraframe method, simplifying editing operations and random access to digitized material. At the same time, this opened up use of SMP for still image applications.

To reach our final compression ratio goal of approximately 1 bit per pixel, we settled for a combination of two subsampling techniques. Similar techniques have been independently described by Pins, who conducted an exhaustive search and evaluation of compression techniques.[10] His findings served as a check on our experiments.

Blocks with a low ratio of foreground-to-background luminance (a metric that can be interpreted as contrast) are represented in SMP by a single color and no mask. This reduces the coded representation to a single byte compared to 4 bytes in CC

fourfold, which makes the image look very blocky. If too many structured blocks are allocated, regions of the image that have little detail are encoded with unnecessary overhead. Over the wide range of images we tested, allocating between 30 percent and 50 percent of structured blocks worked best, yielding a bit rate of 0.9 to 1.0 bits per pixel. For color images, the overhead of the color table (768 bytes) must be added.

Decompression

The most challenging part of the design of the SMP system, given the performance requirements, is the decompression step. Efficient rendering techniques of block-truncation coding are well known for certain classes of output devices.[5] SMP improves on the implementations described in the literature by complementing the raw algorithm with efficient, device-independent rendering engines. To maximize code efficiency, a separate decompression routine is used for each display situation, rather than using conditionals in a more generic routine. The current implementation can render to 1-, 8-, and 24-bit displays.

Decompression of BTC involves filling 4 by 4 blocks of pixels with two colors under a mask. Because the size and alignment of the blocks is known, a very fast, fully unrolled code sequence can be used. Changes of brightness and contrast of the image can be rapidly adapted to different viewing conditions by manipulating the entries of the colormap of the SMP encoding. Most of the work lies in adaptation of the color content of the decompressed data to the device characteristics of the frame buffer.

For displays with full-color capabilities (24-bit true color), the process is straightforward. The main problem is performing the copy of the decompressed video to the screen. Since 24-bit data is usually allocated in 32-bit words, the amount of data to copy is four times the 8-bit case. Typically, SMP
"ryypically, SIMPspentls 90 percent of the c:I-'rl time in the screencol>y on 24-bit systems.The Inore common and interesting case is toclecompress to an 8-bit color representation. Giventliat SMP is an 8-bit, color-indexed format, it wouldseem straightforwarcl to tlownlo:itl the SMP framecolor table to the window system color t;tble andfill the image with the pixel indices directly. Thismethotl is impractical for two reasons. First, mostwintlow systems (including X11) tlo not allowreservation of all 256 colors in the 1i:irdware colortables. Typically, applications and window managersitse a few of the entries for system colors ant1cursors. Qu;~ntizing down to a smaller number ofcolors (such ;IS 240) could overcome this drawbackto a certain tlegree; however, it would make theSMP-cotled materi;il depenclent on the device cliaracteristicsof ;I p;irticitlar window system.The second and mucli more problematic aspectis that the SMP frames in a sequence usually havedifferent color tables. Consequently, each framerequires a change of color table that causes a kaleidoscopiceffect for the windows of other applicationson the screen. In fact, flashing cannot beeliminated within the sMp window itself.Neither XI1 nor other popular window sjlstenissuch as Microsoft Windows allow reload of thecolor table and the content of an image at the sametime. "Illerefore, regartlless of whether the colortable or image contents is modified first, a flashingcolor effect takes place in the SMP window. It mayseem that the update would have to be done in asingle screen refresh time as opposecl to simultaneously.This is true but irrelevant. Most windowsystems clo not allow for such fine-grain synclironization;and for performance reasons, it was unrealisticto expect to be able to update the image in asingle, vertical blanking periocl.Alternative suggestions to avoid this problemhave been proposed in the literature. 
One suggestion is to use a single color table for the entire sequence of frames [10]. This method is computationally expensive and fails for long sequences and editing operations. Another proposes quantization to less than half of the available colors or partial updates of the color map and use of plane masks. This alternative is not particularly portable between different window systems, and the use of plane masks can have a disastrous impact on performance for some frame-buffer implementations such as the CX adapter in the DECstation product line.

Neither of these methods addresses the issue of monochrome displays or the use of multiple simultaneous SMP movies on a single display. (This effect can be witnessed in Sun Microsystems' recent addition of C

A well-known technique for matching color images to devices with a limited color resolution is dithering. Dithering trades spatial resolution for an apparent increase in color and luminance resolution of the display device. The decrease in spatial resolution is less of an issue for SMP images because of their inherently limited spatial resolution capability. Thus the only challenge was the computational cost of performing dithering in real time.

Fortunately, we found a dithering algorithm that allowed both good quality and high speed [12]. It reduces quantization and mapping to a few table look-up operations, which have a trivial hardware implementation (random access memory) and a reasonable software implementation with a few adds, shifts, and loads.

The general software implementation of the dithering algorithm takes 12 instructions in the MIPS instruction set to map a single pixel to its output representation. For SMP decoding, at most two different colors are in each 4 by 4 block. With this distribution, the cost of dithering is spread over the 16 pixels in each block.

Another optimization used heavily in the 8-bit decoder is to manipulate 4 pixels simultaneously with a single machine instruction. This technique increases performance for decompressing and dithering to 3.2 instructions per pixel in the MIPS instruction set, including all loop overhead, decoding of the encoded data stream, and adjusting contrast and brightness of the image (2.7 instructions per pixel for gray-scale). This efficiency is achieved by careful merging of the decoding, decompression, and dithering phases into a single block of code and avoiding intermediate results written to memory.
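The following sketch illustrates, for gray-scale values only, why a block with at most two colors makes table-driven dithering cheap: each color needs one table look-up per block, and the mask then selects between the two precomputed patterns. It is not the SMP decoder and not the dithering algorithm cited above. The 4 by 4 Bayer threshold matrix, the per-pixel 16-bit mask layout, the number of output levels, and every function name are assumptions made for the example.

```python
# Hypothetical sketch: table-driven ordered dithering of a two-color 4x4 block.
# The Bayer matrix, the 16-bit per-pixel mask, and all names are illustrative
# assumptions; they are not taken from the SMP format.

BAYER_4X4 = [
    [0, 8, 2, 10],
    [12, 4, 14, 6],
    [3, 11, 1, 9],
    [15, 7, 13, 5],
]


def dither_level(value, x, y, levels):
    """Map an 8-bit value to one of `levels` output levels at block position (x, y)."""
    scaled = value * (levels - 1) * 16 // 255  # fixed point, 16 steps per level
    base, frac = divmod(scaled, 16)
    bump = 1 if frac > BAYER_4X4[y][x] else 0
    return min(base + bump, levels - 1)


def build_pattern_table(levels):
    """Precompute the dithered 4x4 pattern for every possible 8-bit value."""
    return [
        [[dither_level(v, x, y, levels) for x in range(4)] for y in range(4)]
        for v in range(256)
    ]


def decode_block(table, fg, bg, mask):
    """Fill a 4x4 block from two colors under a mask.

    Bit (4*y + x) of `mask` set means the pixel takes the foreground color.
    Only two table look-ups are needed for the 16 pixels of the block.
    """
    fg_pattern, bg_pattern = table[fg], table[bg]
    return [
        [
            (fg_pattern if (mask >> (4 * y + x)) & 1 else bg_pattern)[y][x]
            for x in range(4)
        ]
        for y in range(4)
    ]
```

A caller would build the table once per display configuration, for example `table = build_pattern_table(4)`, and then call `decode_block(table, fg, bg, mask)` for each structured block.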
The cost of the 1-bit and 24-bit decoders is the same as or lower than that of the 8-bit decoder (3.2 and 2.9 instructions per pixel, respectively).

Compression

The SMP compressor takes an input image, a desired coded image size, and an output buffer as arguments. It operates in five phases:

Input scaling (optional)
Block truncation (luminance)
Flat block selection
Color quantization (color SMP only)
Encoding and output writing

Although the initial scaling is not strictly part of the SMP algorithm, it is necessary for different input sources. Fast scaling is offered as part of both the library and the command-line SMP compressors. Instead of simple subsampling, true averaging is used to ensure maximum input image quality.

The block truncation phase makes two passes through each 4 by 4 block of the input. The first pass calculates the luminance of each individual pixel and sums them to find the average luminance of the entire block. The second pass partitions the pixel pairs into the foreground and background sets and calculates their respective luminance and chrominance averages.

The flat-block-selection phase uses the desired compression ratio to decide how many blocks can be kept as structured blocks and how many need to be converted to flat blocks. The luminance difference of the blocks is calculated, and blocks in the low-contrast range are marked for transition to flat blocks. Because the total average was calculated for each block in the preceding phase, no additional calculations are needed for the conversion of blocks, and the mask is thrown away. Colors are entered into a search structure during this phase.

The color quantization phase uses a median cut algorithm, biased to ensure good coverage of the color contents of the image rather than to minimize the overall quantization error. The bias method ensures that small, colored objects are not lost due to large, smoothly shaded areas getting the lion's share of the color allocations.
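The block truncation and flat-block-selection phases described above can be sketched for gray-scale input as follows. This is a simplified illustration under stated assumptions, not the SMP compressor: it partitions individual pixels with a 16-bit mask, whereas the text says SMP partitions pixel pairs, and the function names and the `max_structured` parameter are hypothetical.

```python
# Hypothetical sketch of block truncation and flat-block selection for
# gray-scale blocks. Per-pixel masks and all names are assumptions; the
# pixel-pair partitioning used by SMP is not modeled here.

def truncate_block(pixels):
    """Encode 16 luminance values (row-major 4x4) as (mean, fg, bg, mask)."""
    # Pass 1: average luminance of the whole block.
    mean = sum(pixels) // 16
    # Pass 2: partition around the mean and average each set.
    mask = 0
    fg, bg = [], []
    for i, p in enumerate(pixels):
        if p > mean:
            mask |= 1 << i
            fg.append(p)
        else:
            bg.append(p)
    fg_avg = sum(fg) // len(fg) if fg else mean
    bg_avg = sum(bg) // len(bg) if bg else mean
    return mean, fg_avg, bg_avg, mask


def select_flat_blocks(blocks, max_structured):
    """Keep the highest-contrast blocks structured and flatten the rest.

    Returns a list of ("structured", fg, bg, mask) or ("flat", mean) tuples,
    in the same order as the input blocks.
    """
    encoded = [truncate_block(b) for b in blocks]
    # Rank block indices by luminance difference, highest contrast first.
    ranked = sorted(
        range(len(encoded)),
        key=lambda i: encoded[i][1] - encoded[i][2],
        reverse=True,
    )
    keep = set(ranked[:max_structured])
    out = []
    for i, (mean, fg, bg, mask) in enumerate(encoded):
        if i in keep:
            out.append(("structured", fg, bg, mask))
        else:
            # The block mean from pass 1 is reused; the mask is discarded.
            out.append(("flat", mean))
    return out
```

In this sketch the budget of structured blocks stands in for the desired compression ratio; a real compressor would derive it from the requested coded image size.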
Small, colored objects are often the important features in motion sequences and are highly visible despite their size.

The final encoding phase builds the color table and matches the foreground/background colors of the blocks to the best matches in the chosen color table.

The gray-scale compression can be much faster because neither the quantization nor the matching step need be performed. Also, only one-third of the uncompressed video data is usually read in, making gray-scale compression fast enough to enable real-time compression on faster workstations and videoconferencing-type applications.

This speed is partly due to the 8-bit restriction in the mask of each structured block. This restriction permits the algorithm to store all intermediate results of the block truncation step in registers on typical reduced instruction set computer (RISC) machines with 32 registers. The entire gray-scale
compression algorithm can be done on a MIPS R3000 with 8 machine instructions per input pixel on average, all overhead (except input scaling) included.

Unfortunately, for color processing, SMP compression remains an off-line, non-real-time process, albeit a reasonably fast one at 220 instructions per pixel. A 25-MHz R3000 processor can process more than 40,000 frames in 24 hours (DECstation 5000 Model 200, 320 by 240 at 15 frames per second, TX/PIP as frame grabber), equivalent to 45 minutes of compressed video material per day. The more recent DEC 3000 AXP Model 500 workstation improves this number threefold, so special-purpose hardware for compression is unnecessary even for color SMP.

Portability

A crucial part of the SMP design for portability is the placement of the original SMP codec on the client side of the X Window System. This allows porting and use of SMP on other systems, without being at the mercy of a particular system vendor for integration of the codec into their X server or window system.

This placement is enabled by the efficiency of the SMP decompression engine, which allows many spare cycles for performing the copy of the decompressed, device-dependent video to the window system.

Currently, SMP is offered as a product only on the DECstation family of workstations, but it has been ported to a variety of platforms, including

DEC AXP workstations running the DEC OSF/1 AXP operating system
Alpha AXP systems running the OpenVMS operating system
DECpc AXP personal computers running the Windows NT AXP operating system
VAX systems running the VMS operating system
Sun SPARCstation
IBM RS/6000 system
HP/PA Precision system
Microsoft Windows version 3.1

Generally, porting the SMP system to another platform supporting the X Window System requires the selection of two parameters (host byte order and presence of the MIT-SHM extension) and then a compilation. The same codec source is used on all the above machines; no assembly language or machine-specific optimizations are used or
needed.

The port to Microsoft Windows shows that the same base technology can be used with other window systems, although parts specific to the window system had to be rewritten. The codec code is essentially identical, but the extreme shortage of registers in the 80x86 architecture and the lack of reasonable handling of 32-bit pointers in C language under Windows warrant a rewrite in assembly language on this platform. We do not expect this to be an issue on Windows version 3.2, due to be released later in 1993.

Conclusion

Software motion pictures offers a cost-effective, totally portable way of bringing digital video to the desktop without requiring special investments for add-on hardware. Combined with audio facilities, SMP can be used to bring a complete video playback to most desktop systems. The algorithm and implementation were designed to be used from CD-ROMs as well as network connections. SMP seamlessly integrates with the existing windowing system software. Because of its potentially universal availability, SMP can serve an important function as the lowest common denominator for digital video across multiple platforms.

Acknowledgments

We would like to thank all the people who have contributed to making software motion pictures a reality. Particular thanks go to Paul Tallett for writing the original demonstrator and insisting on the importance of a color version. He also implemented the VMS versions. Thanks also to European External Research for making the initial research and later product transition possible. Last but not least, thanks to Susan Angebranndt and her engineering team for their help and confidence in this work.

References

1. Special Issue on Digital Multimedia Systems, Communications of the ACM, vol. 34, no. 4 (April 1991).

2. L. Palmer and R. Palmer, "DE

Davis Yen Pan

Digital Audio Compression

Compared to most digital data types, with the exception of digital video, the data rates associated with uncompressed digital audio are substantial. Digital audio compression enables more efficient storage and transmission of audio data. The many forms of audio compression techniques offer a range of encoder and decoder complexity, compressed audio quality, and differing amounts of data compression. The μ-law transformation and ADPCM coder are simple approaches with low-complexity, low-compression, and medium audio quality algorithms. The MPEG/audio standard is a high-complexity, high-compression, and high audio quality algorithm. These techniques apply to general audio signals and are not specifically tuned for speech signals.

Digital audio compression allows the efficient storage and transmission of audio data. The various audio compression techniques offer different levels of complexity, compressed audio quality, and amount of data compression.

This paper is a survey of techniques used to compress digital audio signals. Its intent is to provide useful information for readers of all levels of experience with digital audio processing. The paper begins with a summary of the basic audio digitization process. The next two sections present detailed descriptions of two relatively simple approaches to audio compression: μ-law and adaptive differential pulse code modulation. In the following section, the paper gives an overview of a third, much more sophisticated, audio compression algorithm from the Motion Picture Experts Group. The topics covered in this section are quite complex and are intended for the reader who is familiar with digital signal processing.
The paper concludes with a discussion of software-only real-time implementations.

Digital Audio Data

The digital representation of audio data offers many advantages: high noise immunity, stability, and reproducibility. Audio in digital form also allows the efficient implementation of many audio processing functions (e.g., mixing, filtering, and equalization) through the digital computer.

The conversion from the analog to the digital domain begins by sampling the audio input in regular, discrete intervals of time and quantizing the sampled values into a discrete number of evenly spaced levels. The digital audio data consists of a sequence of binary values representing the number of quantizer levels for each audio sample. The method of representing each sample with an independent code word is called pulse code modulation (PCM). Figure 1 shows the digital audio process.

Figure 1 Digital Audio Process (analog audio input, analog-to-digital conversion, PCM values, digital signal processing, PCM values, digital-to-analog conversion, analog audio output)
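The sampling and uniform quantization steps described above can be sketched as follows. This is a minimal illustration, assuming input samples normalized to the range -1.0 to 1.0 and signed integer code words; the function names are hypothetical, and the sample rate, word length, and channel count in the usage note are example values rather than figures from this paper.

```python
import math


def sample_sine(freq_hz, rate_hz, count):
    """Sample a unit-amplitude sine wave at regular intervals of 1/rate_hz."""
    return [math.sin(2 * math.pi * freq_hz * n / rate_hz) for n in range(count)]


def quantize_pcm(samples, bits):
    """Quantize samples in [-1.0, 1.0] to evenly spaced signed PCM codes."""
    levels = 1 << bits
    max_code = levels // 2 - 1
    min_code = -(levels // 2)
    codes = []
    for s in samples:
        code = round(s * max_code)
        # Clamp so out-of-range input cannot overflow the code word.
        codes.append(max(min_code, min(max_code, code)))
    return codes


def pcm_data_rate(rate_hz, bits, channels):
    """Uncompressed PCM data rate in bits per second."""
    return rate_hz * bits * channels
```

For example, `quantize_pcm(sample_sine(1000, 8000, 8), 8)` yields eight 8-bit code words for a 1-kHz tone sampled at 8 kHz, and `pcm_data_rate(44100, 16, 2)` gives the rate for two 16-bit channels sampled at 44.1 kHz, which shows why uncompressed audio data rates are substantial.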