Bayesian Clustering with Regression - Facultad de Matemáticas ...

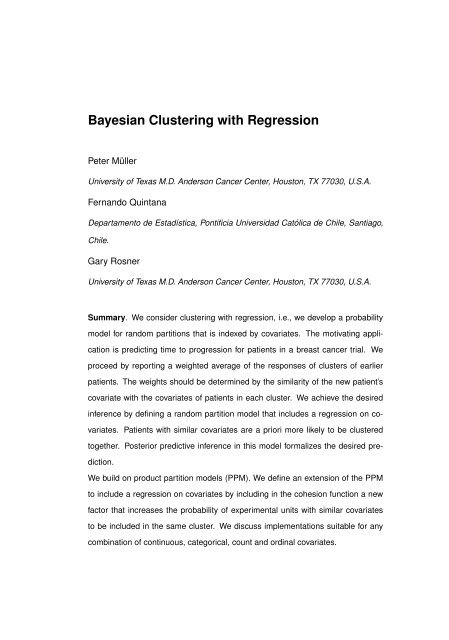

Bayesian Clustering with Regression

Peter Müller
University of Texas M.D. Anderson Cancer Center, Houston, TX 77030, U.S.A.

Fernando Quintana
Departamento de Estadística, Pontificia Universidad Católica de Chile, Santiago, Chile.

Gary Rosner
University of Texas M.D. Anderson Cancer Center, Houston, TX 77030, U.S.A.

Summary. We consider clustering with regression, i.e., we develop a probability model for random partitions that is indexed by covariates. The motivating application is predicting time to progression for patients in a breast cancer trial. We proceed by reporting a weighted average of the responses of clusters of earlier patients. The weights should be determined by the similarity of the new patient's covariate with the covariates of patients in each cluster. We achieve the desired inference by defining a random partition model that includes a regression on covariates. Patients with similar covariates are a priori more likely to be clustered together. Posterior predictive inference in this model formalizes the desired prediction.

We build on product partition models (PPM). We define an extension of the PPM to include a regression on covariates by including in the cohesion function a new factor that increases the probability of experimental units with similar covariates to be included in the same cluster. We discuss implementations suitable for any combination of continuous, categorical, count and ordinal covariates.

1. Introduction

We develop a probability model for clustering with covariates, that is, a probability model for partitioning a set of experimental units, where the probability of any particular partition is allowed to depend on covariates. The motivating application is inference in a clinical trial. The outcome is time to progression for breast cancer patients. The covariates include treatment dose, initial tumor burden, an indicator for menopause and more. We wish to define a probability model for clustering patients with the specific feature that patients with equal or similar covariates should be more likely to co-cluster than others.

Let $i = 1, \ldots, n$ index experimental units, and let $\rho_n = \{S_1, \ldots, S_k\}$ denote a partition of the $n$ experimental units into $k$ subsets $S_j$. When $n$ is obvious from the context we omit the subindex and write $\rho$. Let $x_i$ and $y_i$ denote the covariates and response reported for the $i$-th unit. Let $x^n = (x_1, \ldots, x_n)$ and $y^n = (y_1, \ldots, y_n)$ denote the entire set of recorded covariates and data, and let $x_j^\star = (x_i,\ i \in S_j)$ and $y_j^\star = (y_i,\ i \in S_j)$ denote covariates and data corresponding to units in the $j$-th cluster. Sometimes it is convenient to introduce indicators $e_i \in \{1, \ldots, k\}$ with $e_i = j$ if $i \in S_j$, and use $(k, e_1, \ldots, e_n)$ to describe the partition. We call a probability model $p(\rho)$ a clustering model, excluding in particular purely constructive definitions of clustering as a specific algorithm (without reference to probability models). Many clustering models include, implicitly or explicitly, a sampling model $p(y \mid \rho)$. Probability models for $p(\rho)$ and inference for clustering have been extensively discussed over the past few years. See Quintana (2006) for a recent review. In this paper we are interested in adding a regression to replace $p(\rho)$ with $p(\rho \mid x)$.

We focus on the product partition models (PPM). The PPM (Hartigan, 1990; Barry and Hartigan, 1993) constructs $p(\rho)$ by introducing cohesion functions $c(A) \ge 0$ for $A \subset \{1, \ldots, n\}$ that measure how tightly grouped the elements in $A$ are thought to be, and defines a probability model for a partition $\rho$ and data $y$ as

$$p(\rho) \propto \prod_{j=1}^{k} c(S_j) \quad \text{and} \quad p(y \mid \rho) = \prod_{j=1}^{k} p_j(y_{S_j}). \qquad (1)$$

Model (1) is conjugate. The posterior $p(\rho \mid y)$ is again in the same product form.

Alternatively, the species sampling model (SSM) (Pitman, 1996; Ishwaran and James, 2003) defines an exchangeable probability model $p(\rho)$ that depends on $\rho$ only indirectly through the cardinality of the partitioning subsets, $p(\rho) = p(|S_1|, \ldots, |S_k|)$. The SSM can be alternatively characterized by a sequence of predictive probability functions (PPF) that describe how individuals are sequentially assigned to either already formed clusters or to start new ones. The choice of the PPF is not arbitrary. One has to make sure that a sequence of random variables that are sampled by iteratively applying the PPF is exchangeable. The popular Dirichlet process (DP) model (Ferguson, 1973; Antoniak, 1974) is a special case of a SSM. Moreover, the marginal distribution that a DP induces on partitions is also a PPM with cohesions $c(A) = M \times (|A| - 1)!$ (Quintana and Iglesias, 2003; Dahl, 2003). Here $M$ denotes the total mass parameter of the DP prior.

Model based clustering (Banfield and Raftery, 1993; Dasgupta and Raftery, 1998; Fraley and Raftery, 2002) implicitly defines a probability model on clustering by assuming a mixture model

$$p(y_i \mid \eta, k) = \sum_{j=1}^{k} \tau_j\, p_j(y_i \mid \theta_j),$$

where $\eta = (\theta_1, \ldots, \theta_k, \tau_1, \ldots, \tau_k)$ are the parameters of a size $k$ mixture model. Together with a prior $p(k)$ on $k$ and $p(\theta, \tau \mid k)$, the mixture defines a probability model on clustering. Consider the equivalent hierarchical model

$$p(y_i \mid e_i = j, k, \eta) = p_j(y_i \mid \theta_j) \quad \text{and} \quad Pr(e_i = j \mid k, \eta) = \tau_j. \qquad (2)$$

The implied posterior distribution on $(e_1, \ldots, e_n)$ and $k$ defines a probability model on $\rho_n$. Richardson and Green (1997) develop posterior simulation strategies for mixture of normal models. Green and Richardson (1999) discuss the relationship to DP mixture models.

Especially in the context of spatial data, a popular clustering model is based on Voronoi tessellations (Green and Sibson, 1978; Okabe et al., 2000; Kim et al., 2005). For a review see, for example, Denison et al. (2002). Although not usually considered as a probability model on clustering, it can be defined as such by means of the following construction. We include it in this brief review of clustering models because of its similarity to the model proposed in this paper. Assume $x_i$ are spatial coordinates for observation $i$. Let $d(x_1, x_2)$ denote a distance measure, for example Euclidean distance in $\mathbb{R}^2$. Clusters are defined with latent cluster centers $T_j$, $j = 1, \ldots, k$, by setting $e_i = \arg\min_j d(x_i, T_j)$. That is, a partition is defined by allocating each observation to the cluster whose center $T_j$ is closest to $x_i$. Conditional on $T$, cluster allocation is deterministic. Marginalizing with respect to a prior on $T$ we could define a probability model $p(\rho \mid k)$.

In this paper we build on the PPM (1) to define a covariate-dependent random partition model by augmenting the PPM with an additional factor that induces the desired dependence on the covariates. We refer to the additional factor as the similarity function. Focusing on continuous covariates, a similar approach is being proposed in independent current work by Park and Dunson (2007). Shahbaba and Neal (2007) introduce a related model for categorical outcomes, i.e., classification. They use a logistic regression for the categorical outcome on covariates and a random partition of the samples, with cluster-specific regression parameters in each partitioning subset. The random partition is defined by a DP prior and includes the covariates as part of the response vector.
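To make the PPM prior (1) with DP cohesions $c(A) = M \times (|A| - 1)!$ concrete, the following minimal sketch enumerates all partitions of a small set and normalizes the product of cohesions. It is an illustration only: the function names (`set_partitions`, `dp_cohesion`, `ppm_prior`) are hypothetical and not part of the paper, and brute-force enumeration is feasible only for very small $n$.

```python
from math import factorial, prod

def set_partitions(items):
    """Yield every partition of `items` as a list of blocks (lists)."""
    if not items:
        yield []
        return
    first, rest = items[0], items[1:]
    for partition in set_partitions(rest):
        # Put `first` into each existing block in turn ...
        for idx in range(len(partition)):
            yield partition[:idx] + [[first] + partition[idx]] + partition[idx + 1:]
        # ... or open a new singleton block.
        yield [[first]] + partition

def dp_cohesion(block, mass):
    """Cohesion c(A) = M * (|A| - 1)! implied by a DP with total mass M."""
    return mass * factorial(len(block) - 1)

def ppm_prior(n, mass):
    """Return ({partition: probability}, normalizing constant) for p(rho) ∝ prod_j c(S_j)."""
    weights = {}
    for partition in set_partitions(list(range(1, n + 1))):
        key = tuple(sorted(tuple(sorted(block)) for block in partition))
        weights[key] = prod(dp_cohesion(block, mass) for block in partition)
    total = sum(weights.values())
    return {key: w / total for key, w in weights.items()}, total

# For M = 1 and n = 4 the normalizing constant is M(M+1)...(M+n-1) = 24.
prior, norm_const = ppm_prior(n=4, mass=1.0)
```

The normalizing constant equals the rising factorial $M(M+1)\cdots(M+n-1)$, which is one way to see that this PPM agrees with the partition distribution induced by the DP.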

In section 2 we state the proposed model and considerations in choosing the similarity function. In section 3 we show that the computational effort of posterior simulation remains essentially unchanged from PPM models without covariates. In section 4 we propose specific choices of the similarity function for common data formats. In section 5 we show a simulation study and a data analysis example. In the context of the simulation study we contrast the proposed approach to an often used ad-hoc implementation of including the covariates in an augmented response vector.

2. Clustering with Covariates

We build on the PPM (1), modifying the cohesion function $c(S_j)$ with an additional factor that achieves the desired regression. Recall that $x_j^\star = (x_i,\ i \in S_j)$ denotes all covariates for units in the $j$-th cluster. Let $g(x_j^\star)$ denote a nonnegative function of $x_j^\star$ that formalizes similarity of the $x_i$, with larger values $g(x_j^\star)$ for sets of covariates that are judged to be similar. We define the model

$$p(\rho \mid x) \propto \prod_{j=1}^{k} g(x_j^\star) \cdot c(S_j) \qquad (3)$$

with the normalization constant $g_n(x^n) = \sum_{\rho_n} \prod_{j=1}^{k} g(x_j^\star)\, c(S_j)$. By a slight abuse of notation we include $x$ behind the conditioning bar even when $x$ is not a random variable. We will later discuss specific choices for the similarity function $g$. As a default choice we propose to define $g(\cdot)$ as the marginal probability in an auxiliary probability model $q$, even if $x_i$ are not considered random,

$$g(x_j^\star) \equiv \int \prod_{i \in S_j} q(x_i \mid \xi_j)\, q(\xi_j)\, d\xi_j. \qquad (4)$$

The function (4) satisfies the following two properties that are desirable for a similarity function in (3). First, we require symmetry with respect to permutations of the sample indices $i$. The probability model must not depend on the order

$x^n, x_{n+1}$):

$$p(\rho_n \mid x^n) = \sum_{e_{n+1}} \int p(\rho_{n+1} \mid x^n, x_{n+1})\, q(x_{n+1} \mid x^n)\, dx_{n+1}, \qquad (5)$$

for the probability model $q(x_{n+1} \mid x^n) \propto g_{n+1}(x^{n+1}) / g_n(x^n)$.

We complete the random partition model (3) with a sampling model that defines independence across clusters and exchangeability within each cluster. We include cluster-specific parameters $\theta_j$ and common hyperparameters $\eta$:

$$p(y^n \mid \rho_n, \theta, \eta, x^n) = \prod_{j=1}^{k} \prod_{i \in S_j} p(y_i \mid x_i, \theta_j, \eta) \quad \text{and} \quad p(\theta \mid \eta) = \prod_{j} p(\theta_j \mid \eta). \qquad (6)$$

The resulting model extends PPMs of the type (1) while keeping the product structure.

3. Posterior Inference

A practical advantage of the proposed default choice for $g(x_j^\star)$ is that it greatly simplifies posterior simulation. In words, posterior inference in (3) and (6) is identical to the posterior inference that we would obtain if $x_i$ were part of the random response vector $y_i$. Formally, define an auxiliary model $q(\cdot)$ by replacing (6) with

$$q(y^n, x^n \mid \rho_n, \theta, \eta) = \prod_{j} \prod_{i \in S_j} p(y_i \mid x_i, \theta_j, \eta)\, q(x_i \mid \xi_j) \quad \text{and} \quad q(\theta, \xi \mid \eta) = \prod_{j} p(\theta_j \mid \eta)\, q(\xi_j) \qquad (7)$$

and replace the covariate-dependent prior $p(\rho_n \mid x^n)$ by the PPM $q(\rho_n) \propto \prod c(S_j)$. We continue to use $q(\cdot)$ for the auxiliary probability model that is introduced as a computational device only, and $p(\cdot)$ for the proposed inference model. The posterior distribution $q(\rho \mid y^n, x^n)$ under the auxiliary model is identical to $p(\rho \mid y^n, x^n)$ under the proposed model. An important caveat is that $\xi_j$ and $\theta_j$ must be a priori independent. In particular the prior on $\theta_j$ and $q(\xi_j)$ must not include common hyperparameters. If we had $p(\theta_j \mid \eta)$ and $q(\xi_j \mid \eta)$ depend on a common hyperparameter $\eta$, then the posterior distribution under the auxiliary model (7) would differ from the posterior under the original model. Let $q(x^n \mid \eta) = \sum_{\rho_n} \prod_j q(x_j^\star \mid \xi_j)\, q(\xi_j \mid \eta)$. The two posterior distributions would differ by a factor $1/q(x^n \mid \eta)$. The implication on the desired inference can be substantial. See the results in section 5 for an example and more discussion. However, the restriction that $\theta_j$ and $\xi_j$ be independent is natural. The function $g(x_j^\star)$ defines similarity of the experimental units in one cluster and is chosen by the user. There is no notion of learning about the similarity.

A minor complication arises with posterior predictive inference, i.e., reporting $p(y_{n+1} \mid x^n, x_{n+1})$. Using $\tilde{x} = x_{n+1}$, $\tilde{y} = y_{n+1}$ and $\tilde{e} = e_{n+1}$ to simplify notation, we find $p(\tilde{y} \mid \tilde{x}, x^n, y^n) = \int p(\tilde{y} \mid \tilde{x}, \rho_{n+1}, y^n)\, dp(\rho_{n+1} \mid \tilde{x}, y^n, x^n)$. The integral is simply a sum over all configurations $\rho_{n+1}$. But it is not immediately recognizable as a posterior integral with respect to $p(\rho_n \mid y^n)$. This can easily be overcome by an importance sampling reweighting step. The prior on $\rho_{n+1} = (\rho_n, \tilde{e})$ can be written as

$$p(\tilde{e} = l, \rho_n \mid \tilde{x}, x^n) \propto \prod_{j \ne l} c(S_j)\, g(x_j^\star)\; c(S_l \cup \{n+1\})\, g(x_l^\star, \tilde{x}) = p(\rho_n \mid x^n)\, \frac{g(x_l^\star, \tilde{x})}{g(x_l^\star)}\, \frac{c(S_l \cup \{n+1\})}{c(S_l)}.$$

Let $q_l(\tilde{x} \mid x_l^\star) \equiv g(x_l^\star, \tilde{x}) / g(x_l^\star)$. The posterior predictive distribution becomes

$$p(\tilde{y} \mid \tilde{x}, y^n, x^n) \propto \int \sum_{l=1}^{k_n + 1} p(\tilde{y} \mid y_l^\star, \tilde{e} = l)\, q_l(\tilde{x} \mid x_l^\star)\, \frac{c(S_l \cup \{n+1\})}{c(S_l)}\, p(\rho_n \mid y^n, x^n)\, d\rho_n. \qquad (8)$$

Therefore, sampling from (8) is implemented on top of posterior simulation for $\rho_n \sim p(\rho_n \mid y^n)$. For each imputed $\rho_n$, generate $\tilde{e} = l$ with probabilities proportional to

$$w_l = q_l(\tilde{x} \mid x_l^\star)\, \frac{c(S_l \cup \{n+1\})}{c(S_l)}$$

and generate $\tilde{y}$ from $p(\tilde{y} \mid y_l^\star, \tilde{e} = l)$, weighted with $w_l$, noting that in the special case $l = k_n + 1$ we get $w_{k_n+1} = g(\tilde{x})\, c(\{n+1\})$.

Reporting posterior inference for random partition models is complicated by problems related to the label switching problem. See Jasra et al. (2005) for a recent summary and review of the literature. Usually the focus of inference in semiparametric mixture models like (6) is on density estimation and prediction, rather than inference for specific clusters and parameter estimation. Predictive inference is not subject to the label switching problem, as the posterior predictive distribution marginalizes over all possible partitions. However, in some examples we want to highlight the choice of the clusters. We briefly comment on the implementation of such inference. We use an approach that we found particularly meaningful to report inference about the regression $p(\rho \mid x)$. We report inference stratified by $k$, the number of clusters. For given $k$ we first find a set of $k$ indices $I = (i_1, \ldots, i_k)$ with high posterior probability of the corresponding cluster indicators being distinct, i.e., $Pr(D_I \mid y)$ is high for $D_I = \{e_i \ne e_j \text{ for } i \ne j \text{ and } i, j \in I\}$. To avoid computational complications, we do not insist on finding the $k$-tuple with the highest posterior probability. We then use $i_1, \ldots, i_k$ as anchors to define cluster labels by restricting posterior simulations to $k$ clusters with the units $i_j$ in distinct clusters. Post-processing MCMC output, this is easily done by discarding all imputed parameter vectors that do not satisfy the constraint. We re-label clusters, indexing the cluster that contains unit $i_j$ as cluster $j$. Now it is meaningful to report posterior inference on specific clusters. The proposed post-processing is similar to the pivotal reordering suggested in Marin and Robert (2007, chapter 6.4). An alternative loss function based method to formalize inference on partitions is discussed in Lau and Green (2007).

4. Similarity Functions

For continuous covariates we suggest as a default choice for $g(x_j^\star)$ the marginal distribution of $x_j^\star$ under a normal sampling model. Let $N(x; m, V)$ denote a normal model for the random variable $x$, with moments $m$ and $V$, and let $Ga(x; a, b)$ denote a Gamma distributed random variable with mean $a/b$. We use $q(x_j^\star \mid \xi_j) = \prod_{i \in S_j} N(x_i; m_j, s_j)$, with a conjugate prior for $\xi_j = (m_j, s_j)$ as $p(\xi_j) = N(m_j; m, B) \cdot Ga(s_j^{-1}; \nu, S_0)$, with fixed $m, B, \nu, S_0$. The main reason for this choice is operational simplicity. A simplified version uses fixed $s_j \equiv s$. The resulting function $g(x_j^\star)$ is the joint density of a collection of correlated multivariate normal vectors, with mean $m$ and $Cor(x_i, x_{i'}) = B/(V + B)$. The fixed variance $s$ specifies how strongly we weigh similarity of the $x$. In implementations we used $s = c_1 \hat{S}$, where $\hat{S}$ is the empirical variance of the covariate, and $c_1 = 0.5$ is a scaling factor that specifies over what range we consider values of this covariate as similar. Analogously we set $B = c_2 \hat{S}$ with $c_2 = 10$. The choice between fixed $s$ versus variable $s_j$ should reflect prior judgement on the variability of clusters. Variable $s_j$ allows for some clusters to include a wider range of $x$ values than others.

When constructing a cohesion function for categorical covariates, a default choice is based on a Dirichlet prior. Assume $x_i$ is a categorical covariate, $x_i \in \{1, \ldots, C\}$. To define $g(x_j^\star)$, let $q(x_i = c) = \xi_{jc}$ denote the probability mass function. Together with a conjugate Dirichlet prior, $q(\xi_j) = Dir(\alpha_1, \ldots, \alpha_C)$, we define the similarity function as a Dirichlet-categorical probability

$$g(x_j^\star) = \int \prod_{i \in S_j} \xi_{j, x_i}\, dq(\xi_j) = \int \prod_{c=1}^{C} \xi_{jc}^{n_{jc}}\, dq(\xi_j), \qquad (9)$$

with $n_{jc} = \sum_{i \in S_j} I(x_i = c)$. This is a Dirichlet-multinomial model without the multinomial coefficient. For binary covariates the similarity function becomes a Beta-Binomial probability without the Binomial coefficient. The choice of the hyperparameters $\alpha$ needs some care. To facilitate the formation of clusters that are characterized by the categorical covariates we recommend Dirichlet hyperparameters $\alpha_c < 1$. For example, for $C = 2$, the bimodal nature of a Beta distribution with such parameters assigns high probability to binomial success probabilities $\xi_{j1}$ close to 0 or 1. Similarly, the Dirichlet distribution with parameters $\alpha_c < 1$ favors clusters corresponding to specific levels of the covariate.

A convenient specification of $g(\cdot)$ for ordinal covariates is an ordinal probit model. The model can be defined by means of latent variables and cutoffs. Assume an ordinal covariate $x$ with $C$ categories. Following Johnson and Albert (1999), consider a latent trait $Z$ and cutoffs $-\infty = \gamma_0 < \gamma_1 \le \gamma_2 \le \cdots \le \gamma_{C-1} < \gamma_C = \infty$, so that $x = c$ if and only if $\gamma_{c-1} < Z \le \gamma_c$. Consider fixed cutoff values $\gamma_c = c - 1$, $c = 1, \ldots, C - 1$, and a normally distributed latent trait, $Z_i \sim N(m_j, s_j)$. Let $\Phi_c = Pr(\gamma_{c-1} < Z \le \gamma_c \mid m_j, s_j)$ denote the corresponding normal interval probabilities. We define $q(x_i = c \mid e_i = j, m_j, s_j) = \Phi_c$. The definition of the similarity function is completed with $q(m_j, s_j)$ as a normal-inverse gamma distribution as for the continuous covariates. We have

$$q(x_j^\star) = \int \prod_{c=1}^{C} \Phi_c^{n_{cj}}\, N(m_j; m, B)\, Ga(s_j^{-1}; \nu, S_0)\, dm_j\, ds_j,$$

where $n_{cj} = \sum_{i \in S_j} I\{x_i = c\}$.

Finally, to define $g(\cdot)$ for count-type covariates, we use a mixture of Poisson distributions

$$g(x_j^\star) = \int \frac{1}{\prod_{i \in S_j} x_i!}\, \xi_j^{\sum_{i \in S_j} x_i} \exp(-\xi_j |S_j|)\, dq(\xi_j). \qquad (10)$$

With a conjugate gamma prior, $q(\xi_j) = Ga(\xi_j; a, b)$, the similarity function $g(x_j^\star)$ allows easy analytic evaluation.
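As an illustration of how the default similarity functions can be evaluated, the sketch below codes the closed forms on the log scale for three cases: the normal model with fixed variance $s$, the Dirichlet-categorical model (9), and the Poisson-gamma model (10). The closed forms are the standard conjugate marginals, written out here as an assumption rather than quoted from the paper; the function names are hypothetical, and the gamma prior is taken in its rate parameterization so that its mean is $a/b$.

```python
from math import lgamma, log, pi

def log_g_normal(x, m, B, s):
    """log g for x_i ~ N(m_j, s) with fixed s and m_j ~ N(m, B).
    The marginal is multivariate normal with covariance s*I + B*11'."""
    n = len(x)
    d = [xi - m for xi in x]
    sum_d = sum(d)
    sum_d2 = sum(di * di for di in d)
    quad = (sum_d2 - B * sum_d ** 2 / (s + n * B)) / s
    return (-0.5 * n * log(2 * pi) - 0.5 * (n - 1) * log(s)
            - 0.5 * log(s + n * B) - 0.5 * quad)

def log_g_categorical(x, alpha):
    """log g for categories coded 1..C with a Dir(alpha_1, ..., alpha_C) prior:
    a Dirichlet-multinomial probability without the multinomial coefficient."""
    counts = [0] * len(alpha)
    for xi in x:
        counts[xi - 1] += 1
    total_alpha = sum(alpha)
    return (lgamma(total_alpha) - lgamma(total_alpha + len(x))
            + sum(lgamma(a + c) - lgamma(a) for a, c in zip(alpha, counts)))

def log_g_count(x, a, b):
    """log g for Poisson counts with a Ga(a, b) prior (mean a/b) on the rate."""
    n, total = len(x), sum(x)
    return (-sum(lgamma(xi + 1) for xi in x)
            + a * log(b) - lgamma(a)
            + lgamma(a + total) - (a + total) * log(b + n))

# Tightly grouped covariates score higher than spread-out ones.
tight = log_g_normal([0.0, 0.1], m=0.0, B=10.0, s=0.5)
spread = log_g_normal([0.0, 3.0], m=0.0, B=10.0, s=0.5)
```

With $\alpha_c < 1$ the categorical version rewards homogeneous clusters: for $\alpha = (0.5, 0.5)$, two units in the same category give $g = 0.375$, while two units in different categories give $g = 0.125$.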
As a default for the gamma prior on count covariates, we suggest choosing $a = 1$ and $a/b = c\hat{S}$, where $\hat{S}$ is the empirical variance of the covariate, and $a/b$ is the expectation of the gamma prior.

The main advantage of the proposed similarity functions is computational simplicity of posterior inference. A minor limitation is the fact that the proposed default similarity functions, in addition to the desired dependence of the random partition model on covariates, also include a dependence on the cluster size $n_j = |S_j|$. From a modeling perspective this is undesirable. The mechanism to define size dependence should be the underlying PPM and the cohesion functions $c(S_j)$ in (3). However, we argue that the additional cluster size penalty that is introduced through $g(\cdot)$ can be ignored in comparison to, for example, the cohesion function $c(S_j) = M (n_j - 1)!$ that is implied by the popular DP prior.

Proposition 2: The similarity function introduces an additional cluster size penalty in model (3). Consider the case of constant covariates, $x_i \equiv x$, and let $n_j$ denote the size of cluster $j$. The default choices of $g(x_j^\star)$ for continuous, categorical and count covariates introduce a penalty for large $n_j$, with $\lim_{n_j \to \infty} g(x_j^\star) = 0$. But the rate of decrease is ignorable compared to the cohesion $c(S_j)$. Let $f(n_j)$ be such that $\lim_{n_j \to \infty} g(x_j^\star)/f(n_j) \ge M$ with $0 < M < \infty$. For continuous covariates the rate is $f(n_j) = (2\pi)^{-n_j/2}\, V^{-(n_j - 1)/2}\, (\rho + n_j)^{1/2}$ with $\rho = V/B$. For categorical covariates it is $f(n_j) = (A + n_j)^{A - \alpha_x}$, with $A = \sum_c \alpha_c$. For count covariates it is $f(n_j) = C^{-n_j/2}\, (\alpha + n_j x)^{1/2}$ with $C = 2\pi x \exp(1/6x)$.

Proof: see appendix.
$\Box$

Another important concern besides the dependence of $g(\cdot)$ on $n_j$ is the dependence on $q(\xi_j)$ in the auxiliary model in (4). In particular, we focus on the dependence on possible hyperparameters $\phi$ that index $q(\xi_j)$. We write $q(\xi_j \mid \phi)$ when we want to highlight the use of such (fixed) hyperparameters. The auxiliary models $q(\cdot)$ for all proposed default similarity functions include such hyperparameters. Model (3) and (4) implies conditional cluster membership probabilities

$$p(e_{n+1} = j \mid x_{n+1}, x^n, \rho_n) \propto \frac{c(S_j \cup \{n+1\})}{c(S_j)}\; \underbrace{\frac{g(x_j^\star, x_{n+1})}{g(x_j^\star)}}_{\equiv\, q_j(x_{n+1} \mid x_j^\star, \phi)} \qquad (11)$$

$j = 1, \ldots, k_n$, with the convention $g(\emptyset) = c(\emptyset) = 1$. The cluster membership probabilities (11) are asymptotically independent of $\phi$. Noting that $q_j(x_{n+1} \mid x_j^\star, \phi)$ can be written as a posterior predictive distribution in the auxiliary model, the statement follows from the asymptotic agreement of posterior predictive distributions.

Proposition 3: Consider any two similarity functions $g_h(x_j^\star)$, $h = 1, 2$, based on an auxiliary model (4) with different probability models $q_h(\xi_j)$, $h = 1, 2$, but the same model $q(x_i \mid \xi_j)$. For example, $q_h(\xi_j) = q(\xi_j \mid \phi_h)$. Assume that both auxiliary models satisfy the regularity conditions of Schervish (1995, Section 7.4.2) and assume $q(x_i \mid \xi) \le K$ is bounded. Let $q_j^h(x_{n+1} \mid x_j^\star)$ denote the un-normalized cluster membership probability in equation (11) under $q_h(\xi_j)$. Then

$$\lim_{n_j \to \infty} \left[ q_j^1(x_{n+1} \mid x_j^\star) - q_j^2(x_{n+1} \mid x_j^\star) \right] = 0. \qquad (12)$$

The limit is a.s. under i.i.d. sampling from $q(x_i \mid \xi_0)$.

See the appendix for a straightforward proof making use of asymptotic posterior normality only. The statement is about asymptotic cluster membership probabilities for a future unit only and should not be overinterpreted. The marginal probability for a set of elements forming a cluster does depend on hyperparameters. An example is given by the expressions for $g(x_j^\star)$ in the proof of Proposition 2.
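The membership probabilities (11) can be sketched for the special case of a single continuous covariate, the fixed-variance normal similarity function, and DP cohesions, for which the cohesion ratio $c(S_j \cup \{n+1\})/c(S_j)$ reduces to $|S_j|$ and a new cluster receives weight $M\, g(x_{n+1})$. This is a minimal illustration under those assumptions; the function names and the toy numbers are hypothetical.

```python
from math import exp, log, pi

def log_g_normal(x, m, B, s):
    """log g for x_i ~ N(m_j, s), m_j ~ N(m, B), with g(empty set) = 1."""
    n = len(x)
    if n == 0:
        return 0.0
    d = [xi - m for xi in x]
    sum_d = sum(d)
    sum_d2 = sum(di * di for di in d)
    quad = (sum_d2 - B * sum_d ** 2 / (s + n * B)) / s
    return (-0.5 * n * log(2 * pi) - 0.5 * (n - 1) * log(s)
            - 0.5 * log(s + n * B) - 0.5 * quad)

def q_predictive(x_new, xs, m, B, s):
    """q_j(x_new | x_j) = g(x_j, x_new) / g(x_j), the ratio in (11)."""
    xs = list(xs)
    return exp(log_g_normal(xs + [x_new], m, B, s) - log_g_normal(xs, m, B, s))

def membership_probs(x_new, clusters, mass, m, B, s):
    """Normalized weights for joining each existing cluster, then a new one.
    Under DP cohesions c(A) = M (|A| - 1)! the cohesion ratio equals |S_j|."""
    weights = [len(xs) * q_predictive(x_new, xs, m, B, s) for xs in clusters]
    weights.append(mass * q_predictive(x_new, [], m, B, s))
    total = sum(weights)
    return [w / total for w in weights]

# A new unit with covariate 3.0 is drawn towards the cluster centered near 3.
clusters = [[3.0, 3.1, 2.9], [7.0, 7.2, 6.8]]
probs = membership_probs(3.0, clusters, mass=1.0, m=5.0, B=10.0, s=0.5)
```

Calling `q_predictive` with two different values of `B` on clusters of increasing size gives a rough numerical feel for Proposition 3: the two predictive ratios move closer together as $n_j$ grows.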

[Figure 1: two panels, "data" and "true mean response", plotting Y against T, with curves labeled by the covariate values 3 to 7.]

Fig. 1. Simulation example. Data (left panel) and simulation truth for the mean response $E(y_i \mid x_i)$ as a function of the covariate $x_i$ (right panel).

5. Examples

5.1. Simulation Study

We set up a simulation study with a 6-dimensional response $y_i$, and a continuous covariate $x_i$, and for $n = 47$ data points $(y_i, x_i)$, $i = 1, \ldots, n$. The simulation is set up to mimic responses of blood counts over time for patients undergoing chemo-immunotherapy. Each data point corresponds to one patient. The six responses for each patient could be for six key time points over the course of one cycle of the therapy. The covariate is a treatment dose. In the simulation study we sampled $x_i$ from 5 possible values, $x_i \in \{3, 4, 5, 6, 7\}$. For each value of $x$ we defined a different mean vector. Figure 1b shows the 5 distinct mean vectors for the 6-dimensional response (plotted against days $T$). The bullets indicate the six responses. Adding a 6-dimensional normal residual we generated the data shown in Figure 1a.

We then implemented the proposed model for covariate-dependent clustering to define a random partition model with a regression on the covariate $x_i$, as proposed earlier. Let $N(x; m, s)$ indicate a normal distribution for the random variable $x$, with moments $(m, s)$, let $W(V; \nu, S)$ denote a Wishart prior for a random matrix $V$ with scalar parameter $\nu$ and matrix variate parameter $S$, and let $Ga(s; a, b)$ denote a gamma distribution parameterized to have mean $a/b$. Let $\theta_j = (\mu_j, V_j)$. We used a multivariate normal model $p(y_i \mid \theta_j) = N(\mu_j, V_j)$, with a conditionally conjugate prior for $\theta_j$, i.e., $p(\theta_j) = N(\mu_j; m_y, B_y)\, W(V_j^{-1}; s, S_y^{-1})$. The model is completed with conjugate hyperpriors for $m_y$, $B_y$ and $S_y$. The similarity function is a normal kernel. We use

$$g(x_j^\star) = \int \prod_{i \in S_j} N(x_i; m_j, s_j)\, N(m_j; m, B)\, Ga(s_j^{-1}; \nu, S_0)\, dm_j\, ds_j.$$

The hyperparameters $m$, $B$, $S_0$ are fixed.

Figure 2 summarizes posterior inference by the posterior predictive distribution for $y_{n+1}$ arranged by $x_{n+1}$. The marginal posterior distribution for the number of clusters assigns posterior probabilities $p(k = 3 \mid \text{data}) = 76\%$, $p(k = 4 \mid \text{data}) = 20\%$ and $p(k = 5 \mid \text{data}) = 3\%$. We used the procedure described at the end of section 3 to report cluster-specific summaries. Conditional on $k = 3$, the three clusters are characterized by $x_i = 3.4\ (0.2)$ for the first cluster, $x_i = 5\ (0)$ for the second cluster and $x_i = 7\ (0)$ for the third cluster (posterior means and standard deviations of $x_i$ assigned to each cluster). The average cluster sizes are 24, 11 and 11.

For comparison, the right panel of the same figure shows posterior predictive inference in a model using a PPM prior on clustering, without the use of covariates. In this case, the inference is by construction the same for all covariate values.

A technically convenient ad-hoc solution to include covariates in a sampling model is to proceed as if the covariates were part of the response vector. This approach is used, for example, in Mallet et al. (1988) or Müller et al. (2004).

Fig. 2. Simulation example. Estimated mean response E(Z | dta), plotted against TIME for x = 3, ..., 7, under the proposed covariate regression (left panel) and without (right panel).

Doing so introduces an additional factor in the likelihood. Let $y$ generically denote the response, $x$ the covariate, and $\theta$ the model parameters. Treating the covariate as part of the response vector is equivalent to replacing the sampling model $p(y \mid x, \theta)$ by $p(y \mid x, \theta) \cdot p(x \mid \theta)$. Equivalently, one can interpret the additional factor $p(x \mid \theta)$ as part of the prior. The reported posterior inference is as if we had changed the prior model from the original $p(\theta)$ to $\tilde{p}(\theta) \propto p(\theta)\, p(x \mid \theta)$. Wong et al. (2003) use this interpretation for a similar construction in the context of prior probability models for a positive definite matrix.

The implied modification of the prior probability model could be less innocuous than it seems. We implemented inference under the PPM prior model (1), without covariates, and a sampling model as before, but now for an extended response vector augmented by the covariate $x_i$. We assume a multivariate normal sampling model $p(y_i, x_i \mid \theta_j) = N(\mu_j, V_j)$, with a conditionally conjugate prior for $\theta_j$, i.e., $p(\theta_j) = N(\mu_j; m_y, B_y)\, W(V_j^{-1}; s, S_y^{-1})$. As before, the model is completed with conjugate hyperpriors for $m_y$, $B_y$ and $S_y$. The hyperparameters are chosen exactly as before. The additional 7-th row and column of the prior means for $B_y$ and $S_y$ are all zero except for the (7,7) diagonal element, which we fix at $E(m_j)$ and $E(s_j)$ to match the moments in the auxiliary model $q(\cdot)$ from before. We refer to the model as the covariate response model, in contrast to the proposed covariate regression model. Figure 3 shows inference under this modified model, which differs from the proposed model by the additional factors $p(x^{n_j} \mid \theta_j)$ in the prior. In this example, the covariate response model leads to a much reduced size of the partition. With posterior probability 97% the partition includes only one cluster. This in turn leads to essentially a simple linear regression implied by the dominating multivariate normal $p(x_i, y_i \mid \theta_1)$, as clearly seen in Figure 3. In contrast, under the proposed covariate regression model the size of the largest cluster is estimated between 16 and 26 (not shown). The covariate response model could be modified to better match the simulation truth. This could be achieved, for example, by fixing the hyperparameters in a way that avoids correlation of the first 6 and the last dimension of $\mu_j$. The resulting model would have exactly the format of (3).

Fig. 3. Prediction for $\tilde{y}$ arranged by $\tilde{x}$ for the covariate response model with the additional factors $p(x^{n_j} \mid \theta_j, \eta)$ in the prior (left panel). Posterior distribution on the size of the largest cluster under the covariate response model (right panel). The example has a total sample size of $n = 47$.

We thus do not consider the principled way of introducing the covariates in the PPM to be the main feature of the proposed model. Rather, we argue that the proposed model greatly simplifies the inclusion of covariates, including a variety of data formats. It would be unnecessarily difficult to attempt the construction of a joint model for continuous responses and categorical, binary and continuous covariates. We argue that it is far easier to focus on a modification of the cohesion function and that this does not imply restricting the scope of the proposed models. This is illustrated in the following data analysis example.

5.2. A Survival Model with Patient Baseline Covariates

We consider data from a high-dose chemotherapy treatment of women with breast cancer. The data for this particular study have been discussed in Rosner (2005) and come from Cancer and Leukemia Group B (CALGB) Study 9082. They consist of measurements taken from 763 randomized patients, available as of October 1998 (enrollment had occurred between January 1991 and May 1998). The response of interest is the survival time, defined as the time until death from any cause, relapse, or diagnosis with a second malignancy. There are two treatments, one involving a low dose of the anticancer drugs, and the other consisting of aggressively high dose chemotherapy.
The high-dose patients were given considerable regenerative blood-cell supportive care (including marrow transplantation) to help decrease the impact of opportunistic infections arising from the severely affected immune system. The number of observed failures was 361, with 176 under high dose and 185 under low dose chemotherapy.

The dataset also includes information on the following covariates for each patient: a treatment indicator defined as 1 if a high dose was administered and 0 otherwise (HI); age in years at baseline (AGE); the number of positive lymph nodes found at diagnosis (POS) (the more, the worse the prognosis, i.e., the more likely the cancer has spread); tumor size in millimeters (TS), a one-dimensional measurement; an indicator of whether the tumor is positive for the estrogen or progesterone receptor (ER+) (patients who were positive also received the drug tamoxifen and are expected to be at lower risk); and an indicator of the woman's menopausal status, defined as 1 if she is either perimenopausal or postmenopausal and 0 otherwise (MENO). Two of these six covariates are continuous (AGE, TS), three are binary (ER+, MENO, HI) and one is a count (POS).

First we carried out inference in a model using the indicator for high dose as the only covariate, i.e., $x_i = \mathrm{HI}$. We implemented model (6) with a similarity function for the binary covariate based on the beta-binomial model (9). We used $\alpha = (0.1, 0.1)$ to favor clusters with homogeneous dose assignment. Conditional on an assumed partition $\rho_n$ we use a normal sampling model $p(y_i \mid \theta_j) = N(\mu_j, V_j)$, with a conjugate normal-inverse gamma prior $p(\theta_j \mid \eta) = N(\mu_j \mid m_y, B_y)\, \mathrm{Ga}(V_j^{-1} \mid s/2, sS_y/2)$ and hyperprior $m_y \sim N(a_m, A_m)$, $S_y \sim \mathrm{Ga}(q, q/R)$. Here $\eta = (a_m, A_m, B_y, q, R)$ are fixed hyperparameters. We use $a_m = \hat{m}$, the sample average of $y_i$, $A_m = 100$, $B_y = 100^2$, $s = q = 4$, and $R = 100$. Figure 4a shows inference summaries. The posterior distribution $p(k \mid \text{data})$ for the number of clusters is shown in Figure 5. The three largest clusters contain 28%, 23%, and 14% of the experimental units.

Next we extended the covariate vector to include all six covariates. Denote by $x_i = (x_{i1}, \ldots, x_{i6})$ the 6-dimensional covariate vector. We implement random clustering with regression on covariates as in model (6). The similarity function is defined as

$$ g(x_j^\star) = \prod_{l=1}^{6} g_l(x_{jl}^\star). \qquad (13) $$
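To make the product form (13) concrete, the following Python sketch evaluates $\log g(x_j^\star)$ for a single cluster with mixed covariate types. It is only an illustration under stated assumptions: each factor $g_l$ is taken to be the marginal likelihood of a conjugate auxiliary model (Dirichlet-categorical, which reduces to beta-binomial for binary covariates; Poisson-gamma for counts; normal sampling with a normal prior on the mean for continuous covariates), which is the form suggested by the expressions in the proof of Proposition 2. The function names, the data layout and the hyperparameter values in the usage lines are hypothetical and are not taken from the authors' software.

```python
import math

def log_g_categorical(x, alpha):
    """Log marginal likelihood of category labels x (ints 0..C-1)
    under a Dirichlet(alpha) prior on the category probabilities."""
    counts = [0] * len(alpha)
    for xi in x:
        counts[xi] += 1
    A = sum(alpha)
    out = math.lgamma(A) - math.lgamma(A + len(x))
    for a_c, n_c in zip(alpha, counts):
        out += math.lgamma(a_c + n_c) - math.lgamma(a_c)
    return out

def log_g_count(x, a, b):
    """Log marginal likelihood of counts x under Poisson(xi) sampling
    with a Gamma prior on xi (shape a, rate b)."""
    n, s = len(x), sum(x)
    out = a * math.log(b) - math.lgamma(a)
    out += math.lgamma(a + s) - (a + s) * math.log(b + n)
    out -= sum(math.lgamma(xi + 1) for xi in x)
    return out

def log_g_normal(x, V, m, B):
    """Log marginal likelihood of x_i ~ N(mu, V) i.i.d. with mu ~ N(m, B).
    The joint covariance is V*I + B*J, with determinant V^(n-1) * (V + n*B)
    and inverse (I - B/(V + n*B) * J) / V."""
    n = len(x)
    d = [xi - m for xi in x]
    sum_sq = sum(di * di for di in d)
    sum_d = sum(d)
    quad = (sum_sq - B * sum_d * sum_d / (V + n * B)) / V
    logdet = (n - 1) * math.log(V) + math.log(V + n * B)
    return -0.5 * (n * math.log(2 * math.pi) + logdet + quad)

def log_similarity(cluster, factors):
    """Log of the product in (13): sum of log g_l over covariates.
    cluster: dict mapping covariate name -> values of the units in the cluster.
    factors: dict mapping covariate name -> function returning log g_l."""
    return sum(f(cluster[name]) for name, f in factors.items())

# Illustrative (assumed) hyperparameters for one binary, one continuous
# and one count covariate.
factors = {
    "HI": lambda x: log_g_categorical(x, (0.1, 0.1)),
    "AGE": lambda x: log_g_normal(x, 0.25, 0.0, 1.0),
    "POS": lambda x: log_g_count(x, 1.5, 0.1),
}
homogeneous = {"HI": [1, 1, 1, 1], "AGE": [0.1, 0.0, -0.1, 0.2], "POS": [10, 12, 11, 9]}
mixed = {"HI": [1, 0, 1, 0], "AGE": [1.5, -1.2, 0.3, -0.9], "POS": [1, 30, 4, 18]}
print(log_similarity(homogeneous, factors), log_similarity(mixed, factors))
```

Working on the log scale keeps the gamma functions numerically stable for larger clusters. In the usage lines the cluster with similar covariate values receives the larger log similarity, which is the behavior the similarity function is meant to induce in the prior on partitions.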

Fig. 4. Survival example: Estimated survival function $S(t) \equiv p(y_{n+1} \ge t \mid \text{data})$ (left panel) and hazard $h(t)$ (center panel), arranged by $x \in \{\mathrm{HI}, \mathrm{LO}\}$. The grey shades show pointwise one posterior predictive standard deviation uncertainty. The right panel shows the data for comparison (Kaplan-Meier curve by dose).

Fig. 5. Survival example: Posterior $p(k \mid \text{data})$ for the number of clusters $k$ (left panel), and proportion of patients with high dose (%HI) and average progression free survival (PFS) by cluster (right panel). The size (area) of the bullets is proportional to the average cluster size.

For each covariate we follow the suggestion in Section 4 to define a factor $g_l(\cdot)$ of the similarity function, using hyperparameters specified as follows. The similarity function for the three binary covariates is defined as in (9) with $\alpha_o = (0.1, 0.1)$ for HI, and $\alpha_o = (0.5, 0.5)$ for ER+ and MENO. The two continuous covariates AGE and TS were standardized to sample mean 0 and unit standard deviation. The similarity functions were specified as described in Section 4, with fixed $s = 0.25$, $m = 0$ and $B = 1$. Finally, for the count covariate POS we used the similarity function (10) with $(a, b) = (1.5, 0.1)$. The sampling model is unchanged from before.

We assume that censoring times are independent of the event times and all parameters in the model. Posterior predictive survival curves for various covariate combinations are shown in Figure 6. In the figure, “baseline” refers to HI = 0, tumor size 38mm (the empirical median), ER = 0, Meno = 0, average age (44 years), and POS = 15 (empirical mean). Other survival curves are labeled to indicate how the covariates change from baseline, with TS− indicating tumor size 26mm (the empirical first quartile), TS+ indicating tumor size 50mm (third quartile), HI referring to high-dose chemotherapy, and ER+ indicating positive estrogen or progesterone receptor status. The inference suggests that treated patients with tumor size below the empirical median who were positive for estrogen or progesterone receptor have almost uniformly higher predicted survival curves than any other combination of covariates.

Figure 7 summarizes features of the posterior clustering. Interestingly, clusters are typically highly correlated with the postmenopausal status, as seen in the right panel.
The high-dose indicator is also seen to be positively correlated with the progression-free survival (PFS).

Fig. 6. Survival example: Posterior predictive survival function $S(t \mid x) \equiv p(y_{n+1} \ge t \mid x_{n+1} = x, \text{data})$, arranged by $x$. The “baseline” case refers to all continuous and count covariates equal to the empirical mean, and all binary covariates equal to 0. The legend indicates TS− and TS+ for tumor size equal to 26mm and 50mm (first and third empirical quartile), HI for HI = 1 and ER+ for ER = 1. The legend is sorted by the survival probability at 5 years, indicated by a thin vertical line.

Fig. 7. Survival example: Posterior distribution $p(k \mid \text{data})$ for the number of clusters (left panel), mean PFS and % high dose patients per cluster (center panel), mean PFS and % postmenopausal patients per cluster (right panel).

The model used so far consisted of a mixture of normal sampling model for the event times $y_i$. A more parsimonious sampling model is proposed in Rosner (2005), who uses a piecewise exponential sampling model with survival function

$$ \Pr(y \ge t) = S(t \mid \lambda_1, \lambda_2, \lambda_3, \tau_1, \tau_2) = \begin{cases} e^{-\lambda_1 t} & \text{if } t \le \tau_1, \\ e^{-\lambda_1 \tau_1}\, e^{-\lambda_2 (t - \tau_1)} & \text{if } \tau_1 < t \le \tau_2, \\ e^{-\lambda_1 \tau_1}\, e^{-\lambda_2 (\tau_2 - \tau_1)}\, e^{-\lambda_3 (t - \tau_2)} & \text{if } \tau_2 < t, \end{cases} \qquad (14) $$

where $\lambda = (\lambda_1, \lambda_2, \lambda_3)$ defines the exponential rates for each piece, and the change-points $\tau_1$ and $\tau_2$ are fixed as 1 and 2 years, respectively.

Inference under sampling model (14) is summarized in Figure 8. The posterior distribution on the number of clusters (not shown) now shows a posterior mode for $k = 7$, and positive posterior probability for $4 \le k \le 14$. The average cluster sizes of the 3 largest clusters are 43%, 30% and 18% of all observations. Compare with Figure 7a. The simple normal sampling model underlying the inference in Figure 7 requires larger size mixtures than the more parsimonious model (14). On the other hand, the fixed change-points $\tau_1$ and $\tau_2$ lead to discontinuities in the densities that are visible as the corners in the estimated survival functions in Figure 8. The terms in the mixture are models of the form (14). Each mixture term is a distribution with support over the entire real line. This is in contrast to the mixture of normal kernels with more localized support.

Fig. 8. Survival example with the piecewise exponential sampling model: estimated survival $S(t \mid x)$ arranged by covariates $x$.

6. Conclusion

We have proposed a novel model for random partitions with a regression on covariates. The model builds on the popular PPM random partition models by introducing an additional factor to modify the cohesion function. We refer to the additional factor as the similarity function. It increases the prior probability that experimental units with similar covariates are co-clustered.
We provide default choices of the similarity function for popular data formats.

The main features of the model are the possibility to include additional prior information related to the covariates, the principled nature of the model construction, and a computationally efficient implementation. We compared the model with a popular ad-hoc implementation that includes the covariates as part of an augmented response vector. This approach is feasible in particular when both the response variable $y_i$ and the covariate $x_i$ are continuous. A joint multivariate normal model for the augmented response vector implicitly introduces the desired regression on covariates. Besides issues of appropriate likelihood specification, the main limitation of such an approach is the practical restriction to continuous responses and covariates. Building a joint model for other data formats is possible, but would be more complicated than a straightforward principled approach that treats $x_i$ as covariates.

Among the limitations of the proposed method is an implicit penalty for the cluster size that is implied by the similarity function. Consider all equal covariates $x_i \equiv x$. The value of the similarity functions proposed in Section 4 decreases with cluster size. This limitation could be mitigated by allowing an additional factor $c^\star(|S_j|)$ in (6) to compensate for the size penalty implicit in the similarity function.

The programs are available as a function in the R package clusterX at http://odin.mdacc.tmc.edu/~pm/prog.html. The function clusterx(.) implements the proposed covariate dependent random partition model for an arbitrary combination of continuous, categorical, binary and count covariates, using a mixture of normal sampling model for $y_i$.

Acknowledgment

Research was partially supported by NIH under grant 1R01CA75981, by FONDECYT under grant 1060729 and the Laboratorio de Análisis Estocástico PBCT-ACT13. Most of the research was done while the first author was visiting at Pontificia Universidad Católica de Chile.

Appendix

Proof of Proposition 2

For simplicity we drop the $j$ index in $n_j$, $x_j^\star$ etc., relying on the context to prevent ambiguity. Let $\mathbf{1}$ denote an $(n \times 1)$ vector of all ones. For continuous covariates, evaluation of $g(x^\star)$ gives

$$ g(x^\star) = (2\pi)^{-n/2} \left[ (V + nB) V^{n-1} \right]^{-1/2} \exp\left\{ -\frac{1}{2}\, \frac{(x - m)^2}{V}\, \mathbf{1}' \left( I - \frac{B}{V + nB}\, J \right) \mathbf{1} \right\}, $$

which after some further simplification becomes

$$ \left( 2\pi V^{(n-1)/n} \right)^{-n/2} (\rho + n)^{-1/2}\, M(n), $$

with $\lim_{n \to \infty} M(n) = M$, $0 < M < \infty$. For categorical covariates, Stirling's approximation and some further simplifications give

$$ g(x^\star) = \frac{\Gamma(A)}{\prod_c \Gamma(\alpha_c)} \cdot \frac{\prod_c \Gamma(\alpha_c)\, \Gamma(\alpha_x + n)}{\Gamma(\alpha_x)\, \Gamma(A + n)} \ge M(n) \left( \frac{1}{A + n} \right)^{A - \alpha_x}. $$

Similarly, for count covariates we find

$$ g(x^\star) = \left( \frac{1}{x!} \right)^{n} \frac{b^a}{\Gamma(a)}\, \frac{\Gamma(a + nx)}{(b + n)^{a + nx}} \ge (2\pi x)^{-n/2}\, e^{-n/(12x)}\, (a + nx)^{-1/2} \cdot M(n). $$

Proof of Proposition 3

The ratio $g^h(x_j^\star, x_{n+1}) / g^h(x_j^\star)$ defines the conditional probability $q_j^h(x_{n+1} \mid x_j^\star)$ under i.i.d. sampling in the auxiliary model, and thus $q_j^h(x_{n+1} \mid x_j^\star) = \int q(x_{n+1} \mid \xi_j)\, q^h(\xi_j \mid x_j^\star)\, d\xi_j$. The result follows from asymptotic normality of $q^h(\xi_j \mid x_j^\star)$, as $n_j \to \infty$.

Let $\hat{\xi}_j$ denote the m.l.e. for $\xi_j$ based on $n_j$ observations in cluster $j$. Let $LL' = [-I(\hat{\xi}_j)]^{-1}$ denote a Choleski decomposition of the negative inverse of the observed Fisher information matrix (Schervish, 1995, equation 7.88), and let $\psi_n = L(\xi_j - \hat{\xi}_j)$. Theorem 7.89 of Schervish (1995) implies for any $\epsilon > 0$ and any compact subset $B$ of the parameter space:

$$ \lim_{n_j \to \infty} \underbrace{P_{\xi_j^o}\left( \sup_{\psi_n \in B} \left| q^1(\psi_n \mid x_j^\star) - q^2(\psi_n \mid x_j^\star) \right| > \epsilon \right)}_{\pi_n} = 0. $$

The limit is in the cluster size $n_j$. The probability is under an assumed true sampling model $q(x_i \mid \xi_j^o)$, and the supremum is over $B$.

Consider a sequence of reparametrizations of $q(x_i \mid \xi_j)$ to $q(x_i \mid \psi_n)$. Let $B_M = \{\xi_j : |\xi_j^o - \xi_j| < M\}$ be an increasing sequence of compact sets, and recall the definition of $\pi_n = P_{\xi_j^o}(\sup_B \ldots > \epsilon)$. Then

$$ \lim_n \int q(x_{n+1} \mid \xi_j) \left( q^2(\xi_j \mid x_j^\star) - q^1(\xi_j \mid x_j^\star) \right) d\xi_j \le \lim_M \lim_n \left[ \epsilon K (1 - \pi_n) + \pi_n K \right] = \epsilon K, $$

for any $\epsilon > 0$.

References

Antoniak, C. E. (1974) Mixtures of Dirichlet processes with applications to Bayesian nonparametric problems. The Annals of Statistics, 2, 1152–1174.

Banfield, J. D. and Raftery, A. E. (1993) Model-based Gaussian and non-Gaussian clustering. Biometrics, 49, 803–821.

Barry, D. and Hartigan, J. A. (1993) A Bayesian analysis for change point problems. Journal of the American Statistical Association, 88, 309–319.

Bernardo, J.-M. and Smith, A. F. M. (1994) Bayesian theory. Wiley Series in Probability and Mathematical Statistics: Probability and Mathematical Statistics. Chichester: John Wiley & Sons Ltd.

Dahl, D. B. (2003) Modal clustering in a univariate class of product partition models. Tech. Rep. 1085, Department of Statistics, University of Wisconsin.

Dasgupta, A. and Raftery, A. E. (1998) Detecting Features in Spatial Point Processes With Clutter via Model-Based Clustering. Journal of the American Statistical Association, 93, 294–302.

Denison, D. G. T., Holmes, C. C., Mallick, B. K. and Smith, A. F. M. (2002) Bayesian methods for nonlinear classification and regression. Wiley Series in Probability and Statistics. Chichester: John Wiley & Sons Ltd.

Ferguson, T. S. (1973) A Bayesian analysis of some nonparametric problems. The Annals of Statistics, 1, 209–230.

Fraley, C. and Raftery, A. E. (2002) Model-based clustering, discriminant analysis, and density estimation. J. Amer. Statist. Assoc., 97, 611–631.

Green, P. J. and Richardson, S. (1999) Modelling Heterogeneity with and without the Dirichlet Process. Tech. rep., University of Bristol, Department of Mathematics.

Green, P. J. and Sibson, R. (1978) Computing Dirichlet tessellations in the plane. Comput. J., 21, 168–173.

Hartigan, J. A. (1990) Partition models. Communications in Statistics, Part A – Theory and Methods, 19, 2745–2756.

Ishwaran, H. and James, L. F. (2003) Generalized weighted Chinese restaurant processes for species sampling mixture models. Statist. Sinica, 13, 1211–1235.

Jasra, A., Holmes, C. C. and Stephens, D. A. (2005) Markov chain Monte Carlo methods and the label switching problem in Bayesian mixture modeling. Statist. Sci., 20, 50–67.

Johnson, V. E. and Albert, J. H. (1999) Ordinal data modeling. Statistics for Social Science and Public Policy. New York: Springer-Verlag.

Kim, H.-M., Mallick, B. K. and Holmes, C. C. (2005) Analyzing nonstationary spatial data using piecewise Gaussian processes. Journal of the American Statistical Association, 100, 653–668.

Lau, J. W. and Green, P. J. (2007) Bayesian Model-Based Clustering Procedures. Journal of Computational and Graphical Statistics, 16, 526–558.

Mallet, A., Mentré, F., Gilles, J., Kelman, A., Thomson, A., Bryson, S. M. and Whiting, B. (1988) Handling covariates in population pharmacokinetics with an application to gentamicin. Biomedical Measurement Informatics and Control, 2, 138–146.

Marin, J.-M. and Robert, C. P. (2007) Bayesian Core. A Practical Approach to Computational Bayesian Statistics. New York: Springer-Verlag.

Müller, P., Quintana, F. and Rosner, G. (2004) A method for combining inference across related nonparametric Bayesian models. J. R. Stat. Soc. Ser. B Stat. Methodol., 66, 735–749.

Okabe, A., Boots, B., Sugihara, K. and Chiu, S. N. (2000) Spatial tessellations: concepts and applications of Voronoi diagrams. Wiley Series in Probability and Statistics. Chichester: John Wiley & Sons Ltd., second edn. With a foreword by D. G. Kendall.

Park, J.-H. and Dunson, D. (2007) Bayesian generalized product partition models. Tech. rep., Duke University.

Pitman, J. (1996) Some Developments of the Blackwell-MacQueen Urn Scheme. In Statistics, Probability and Game Theory. Papers in Honor of David Blackwell (eds. T. S. Ferguson, L. S. Shapley and J. B. MacQueen), 245–268. Hayward, California: IMS Lecture Notes - Monograph Series.

Quintana, F. A. (2006) A predictive view of Bayesian clustering. Journal of Statistical Planning and Inference, 136, 2407–2429.

Quintana, F. A. and Iglesias, P. L. (2003) Bayesian Clustering and Product Partition Models. Journal of The Royal Statistical Society Series B, 65, 557–574.

Richardson, S. and Green, P. J. (1997) On Bayesian analysis of mixtures with an unknown number of components (with discussion). Journal of the Royal Statistical Society, Series B, 59, 731–792.

Rosner, G. L. (2005) Bayesian monitoring of clinical trials with failure-time endpoints. Biometrics, 61, 239–245.

Shahbaba, B. and Neal, R. M. (2007) Nonlinear models using Dirichlet process mixtures. Tech. rep., University of Toronto.

Wong, F., Carter, C. K. and Kohn, R. (2003) Efficient estimation of covariance selection models. Biometrika, 90, 809–830.