Smart Industry 1/2018

Smart Industry 1/2018 - The IoT Business Magazine - powered by Avnet Silica


<strong>Smart</strong> Business Title Story: Self-driving cars<br />

The company has invested heavily in<br />

research involving machine learning,<br />

which Huang says is the “bottom-up<br />

approach to artificial intelligence” –<br />

and probably the most promising<br />

technology today. Machine learning<br />

requires the processing of huge<br />

amounts of data, and as it turns out,<br />

the company’s graphics<br />

processing units (GPUs) can do the<br />

job both faster and using less energy<br />

than the traditional central processing<br />

units (CPUs) found at the heart of<br />

most mainframe, desktop, and laptop<br />

computers today.<br />

The computing power of GPUs has<br />

increased as computer images have<br />

become more complex and, in 2007,<br />

Nvidia pioneered a new generation<br />

of GPU/CPU chips that now power<br />

many energy-efficient data centers in<br />

government laboratories, universities,<br />

and enterprises.<br />

It was almost by accident that the<br />

company became a big player in the<br />

nascent autonomous car sector, but it<br />

now plans to release the Nvidia Drive<br />

PX2 platform next year, describing it<br />

as the first "AI brain" capable of full<br />

autonomy.<br />

Nvidia’s approach is so revolutionary<br />

that other chipmakers are scrambling<br />

to catch up. Intel and AMD, two of the<br />

largest manufacturers of computer<br />

chips, have teamed up to pool their<br />

resources in order to head off Nvidia<br />

by developing a GPU/CPU combo of<br />

their own. In addition, Intel made the<br />

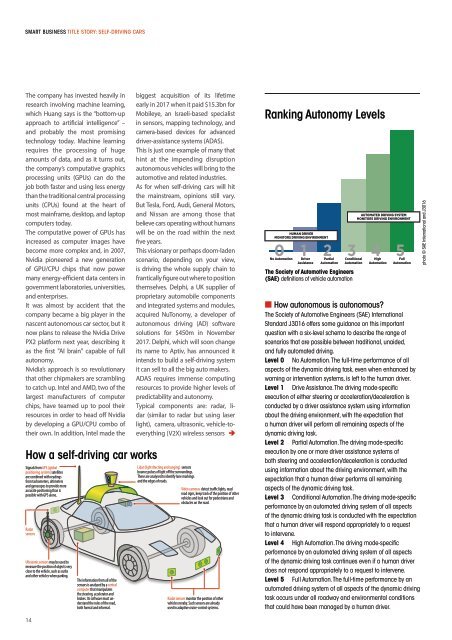

How a self-driving car works<br />

Signals from GPS (global<br />

positioning system) satellites<br />

are combined with readings<br />

from tachometers, altimeters<br />

and gyroscopes to provide more<br />

accurate positioning than is<br />

possible with GPS alone.<br />

Radar<br />

sensors<br />

Ultrasonic sensors may be used to<br />

measure the position of objects very<br />

close to the vehicle, such as curbs<br />

and other vehicles when parking.<br />

The information from all of the<br />

sensors is analysed by a central<br />

computer that manipulates<br />

the steering, accelerator and<br />

brakes. Its software must understand<br />

the rules of the road,<br />

both formal and informal.<br />

biggest acquisition of its lifetime<br />

early in 2017 when it paid $15.3bn for<br />

Mobileye, an Israel-based specialist<br />

in sensors, mapping technology, and<br />

camera-based devices for advanced<br />

driver-assistance systems (ADAS).<br />

This is just one example of many that<br />

hint at the impending disruption<br />

autonomous vehicles will bring to the<br />

automotive and related industries.<br />

As for when self-driving cars will hit<br />

the mainstream, opinions still vary.<br />

But Tesla, Ford, Audi, General Motors,<br />

and Nissan are among those that<br />

believe cars operating without humans<br />

will be on the road within the next<br />

five years.<br />

This visionary or perhaps doom-laden<br />

scenario, depending on your view,<br />

is driving companies across the supply<br />

chain to frantically figure out where to<br />

position themselves. Delphi, a UK supplier of<br />

proprietary automobile components<br />

and integrated systems and modules,<br />

acquired NuTonomy, a developer of<br />

autonomous driving (AD) software<br />

solutions, for $450m in November<br />

2017. Delphi, which will soon change<br />

its name to Aptiv, has announced it<br />

intends to build a self-driving system<br />

it can sell to all the big auto makers.<br />

ADAS requires immense computing<br />

resources to provide higher levels of<br />

predictability and autonomy.<br />

Typical components are: radar, lidar<br />

(similar to radar but using laser<br />

light), camera, ultrasonic, vehicle-to-everything<br />

(V2X) wireless sensors<br />

Lidar (light detection and ranging) sensors<br />

bounce pulses of light off the surroundings.<br />

These are analysed to identify lane markings<br />

and the edges of roads.<br />

Video cameras detect traffic lights, read<br />

road signs, keep track of the position of other<br />

vehicles and look out for pedestrians and<br />

obstacles on the road.<br />

Radar sensors monitor the position of other<br />

vehicles nearby. Such sensors are already<br />

used in adaptive cruise-control systems.<br />
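
The lidar caption above describes pulses of light bounced off the surroundings. As a rough illustration only, the distance to a reflecting surface can be estimated from how long a pulse takes to return: the pulse travels out and back, so the range is half the round-trip time multiplied by the speed of light. The function name and the 200-nanosecond echo delay below are assumptions made for the example, not figures from any particular sensor.

```python
# Minimal time-of-flight sketch; not taken from any real lidar product.

# Speed of light in a vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_round_trip(round_trip_seconds: float) -> float:
    """Return the one-way distance in metres for a measured round-trip time."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse covers the distance twice (out and back), hence the division by 2.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


# Hypothetical echo delay of 200 nanoseconds.
echo_delay_s = 200e-9
print(f"{range_from_round_trip(echo_delay_s):.2f} m")  # prints "29.98 m"
```

A real sensor repeats this measurement many thousands of times per second across different angles, and further processing is then needed to pick out lane markings and road edges.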

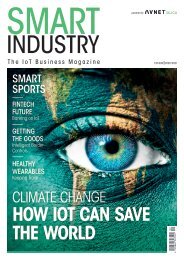

Ranking Autonomy Levels<br />

The Society of Automotive Engineers<br />

(SAE) definitions of vehicle automation<br />

HUMAN DRIVER MONITORS DRIVING ENVIRONMENT<br />

0 No Automation<br />

1 Driver Assistance<br />

2 Partial Automation<br />

AUTOMATED DRIVING SYSTEM MONITORS DRIVING ENVIRONMENT<br />

3 Conditional Automation<br />

4 High Automation<br />

5 Full Automation<br />

■ How autonomous is autonomous?<br />

The Society of Automotive Engineers (SAE) International<br />

Standard J3016 offers some guidance on this important<br />

question with a six-level schema to describe the range of<br />

scenarios that are possible between traditional, unaided,<br />

and fully automated driving.<br />

Level 0 No Automation. The full-time performance of all<br />

aspects of the dynamic driving task, even when enhanced by<br />

warning or intervention systems, is left to the human driver.<br />

Level 1 Driver Assistance. The driving mode-specific<br />

execution of either steering or acceleration/deceleration is<br />

conducted by a driver assistance system using information<br />

about the driving environment, with the expectation that<br />

a human driver will perform all remaining aspects of the<br />

dynamic driving task.<br />

Level 2 Partial Automation. The driving mode-specific<br />

execution by one or more driver assistance systems of<br />

both steering and acceleration/deceleration is conducted<br />

using information about the driving environment, with the<br />

expectation that a human driver performs all remaining<br />

aspects of the dynamic driving task.<br />

Level 3 Conditional Automation. The driving mode-specific<br />

performance by an automated driving system of all aspects<br />

of the dynamic driving task is conducted with the expectation<br />

that a human driver will respond appropriately to a request<br />

to intervene.<br />

Level 4 High Automation. The driving mode-specific<br />

performance by an automated driving system of all aspects<br />

of the dynamic driving task continues even if a human driver<br />

does not respond appropriately to a request to intervene.<br />

Level 5 Full Automation. The full-time performance by an<br />

automated driving system of all aspects of the dynamic driving<br />

task occurs under all roadway and environmental conditions<br />

that could have been managed by a human driver.<br />
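
For readers who think in code, the six levels above can be sketched as a small lookup. This is an illustrative reading of the definitions and the accompanying chart, not part of the SAE standard; the names `SaeLevel`, `system_monitors_environment` and `human_fallback_expected` are invented for the example.

```python
from enum import IntEnum


class SaeLevel(IntEnum):
    """The six automation levels as named in the text above."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def system_monitors_environment(level: SaeLevel) -> bool:
    # Per the chart: the human driver monitors the driving environment at
    # levels 0-2, the automated driving system at levels 3-5.
    return level >= SaeLevel.CONDITIONAL_AUTOMATION


def human_fallback_expected(level: SaeLevel) -> bool:
    # Up to level 3 a human either drives or is expected to respond to a
    # request to intervene; from level 4 the system carries on regardless.
    return level <= SaeLevel.CONDITIONAL_AUTOMATION


for level in SaeLevel:
    print(level.value, level.name,
          system_monitors_environment(level),
          human_fallback_expected(level))
```

Level 3 is the only level where both answers are "yes": the system watches the road, yet a human is still expected to take over on request.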

photo © SAE International and J3016<br />
