Residual Component Analysis: Generalising PCA for more flexible ...


Residual Component Analysis: Generalising PCA for more flexible inference in linear-Gaussian models<br />

Alfredo A. Kalaitzis<br />

Neil D. Lawrence<br />

Department of Computer Science<br />

Sheffield Institute for Translational Neuroscience<br />

University of Sheffield<br />

March 23, 2012

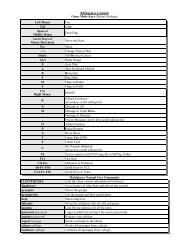

Outline<br />

Probabilistic Principal Component Analysis (PPCA)<br />

Residual Component Analysis (RCA)<br />

Optimising a Low-rank + Sparse-Inverse Covariance Model<br />

Experimental Results

Probabilistic PCA (sensible PCA)<br />

(Hotelling, 33) (Roweis, 98) (Tipping & Bishop, 99)<br />

◮ PPCA seeks a low-dimensional representation of a data set in the presence of independent spherical Gaussian noise $\sigma^2 I$.<br />

$Y \in \mathbb{R}^{N \times p}$, $X \in \mathbb{R}^{N \times q}$<br />

$p(x) = \mathcal{N}(0, I)$<br />

$p(y \mid x) = \mathcal{N}(Wx, \sigma^2 I)$<br />

◮ Marginalising the latent variable $X$ gives<br />

$\int p(Y, X \mid W)\, dX = p(Y \mid W) = \prod_{i=1}^{n} \mathcal{N}(y_{i,:} \mid 0, WW^\top + \sigma^2 I)$<br />

$W_{ML} = U_q L_q R^\top$, where $\frac{1}{N} Y^\top Y = U \Lambda U^\top$ and $L_q = (\Lambda_q - \sigma^2 I)^{1/2}$
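The closed-form PPCA solution above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' code: the function name `ppca_ml` and the toy data are made up for the example, the rotation R is set to the identity, and the noise variance is set to the mean of the discarded eigenvalues (the maximum likelihood value given by Tipping & Bishop).

```python
import numpy as np

def ppca_ml(Y, q):
    """Closed-form PPCA fit; returns (W, sigma2) with the rotation R taken as I."""
    N, p = Y.shape
    Yc = Y - Y.mean(axis=0)                  # centre the data (the model is zero-mean)
    C = Yc.T @ Yc / N                        # sample covariance (1/N) Y^T Y
    evals, evecs = np.linalg.eigh(C)         # eigh returns ascending eigenvalues
    evals, evecs = evals[::-1], evecs[:, ::-1]
    sigma2 = evals[q:].mean()                # ML noise variance: mean of discarded eigenvalues
    W = evecs[:, :q] * np.sqrt(evals[:q] - sigma2)   # U_q (Lambda_q - sigma2 I)^{1/2}
    return W, sigma2

# Toy data (hypothetical sizes): 2 latent dimensions observed in 8 features
rng = np.random.default_rng(0)
N, p, q = 500, 8, 2
W_true = rng.normal(size=(p, q))
Y = rng.normal(size=(N, q)) @ W_true.T + 0.1 * rng.normal(size=(N, p))

W_ml, sigma2_ml = ppca_ml(Y, q)
```

The fitted covariance `W_ml @ W_ml.T + sigma2_ml * np.eye(p)` shares its top q eigenvalues with the sample covariance, which is a quick sanity check on the fit.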

Dual PPCA (principal coordinate analysis)<br />

(Lawrence, 06)<br />

◮ Instead of the latent variable $X$, we can place a prior distribution on the mapping $W$. Marginalising $W$ instead of $X$, the dual PPCA solution can be obtained for likelihoods of the form<br />

$\int p(Y, W \mid X)\, dW = p(Y \mid X) = \prod_{j=1}^{p} \mathcal{N}(y_{:,j} \mid 0, XX^\top + \sigma^2 I)$<br />

◮ Note the conditional independence is across features. Now, dual PPCA solves for the latent coordinates,<br />

$X_{ML} = U'_q L_q R^\top$
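A rough sketch of the dual solution, assuming the eigendecomposition is taken on the N×N matrix (1/p) YY^⊤ and that σ² is known. The function name `dual_ppca`, the fixed `sigma2` and the toy sizes are illustrative assumptions, and R is again taken as the identity.

```python
import numpy as np

def dual_ppca(Y, q, sigma2):
    """Latent coordinates X = U'_q (Lambda_q - sigma2 I)^{1/2}, with R taken as I."""
    N, p = Y.shape
    K = Y @ Y.T / p                          # N x N inner-product matrix (1/p) Y Y^T
    evals, evecs = np.linalg.eigh(K)         # ascending order
    evals, evecs = evals[::-1], evecs[:, ::-1]
    L_q = np.sqrt(np.maximum(evals[:q] - sigma2, 0.0))   # clip guards against negatives
    return evecs[:, :q] * L_q

# Toy data (hypothetical sizes): few samples, many features, where the dual form is cheaper
rng = np.random.default_rng(1)
N, p, q, sigma2 = 30, 200, 2, 0.01
X_true = rng.normal(size=(N, q))
Y = X_true @ rng.normal(size=(q, p)) + np.sqrt(sigma2) * rng.normal(size=(N, p))

X_ml = dual_ppca(Y, q, sigma2)

# Primal comparison: projections of Y onto the top eigenvectors of Y^T Y
_, U = np.linalg.eigh(Y.T @ Y / p)
scores = Y @ U[:, ::-1][:, :q]
```

Each column of `X_ml` is parallel to the corresponding column of `scores`, which is the usual equivalence between principal coordinate analysis and PCA.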

From dual-PPCA to the GPLVM<br />

(Lawrence, 06) (Titsias & Lawrence, 10) (Damianou et al., 11)<br />

◮ Note the covariance is an inner-product term (of latent variables) plus spherical-Gaussian noise.<br />

$p(Y \mid X) = \prod_{j=1}^{p} \mathcal{N}(y_{:,j} \mid 0, XX^\top + \sigma^2 I)$<br />

◮ This likelihood is a product of independent Gaussian processes with linear kernels (or covariance functions).<br />

◮ A generalisation of the above to a non-linear kernel (but still an inner-product in some Hilbert space) is known as the Gaussian Process Latent Variable Model (GPLVM).
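To make the step from a linear to a non-linear kernel concrete, here is a small sketch that evaluates the same product-of-GPs likelihood under two covariance functions. The RBF kernel, its `lengthscale` parameter and all function names are illustrative choices; the slides do not name a specific non-linear kernel.

```python
import numpy as np

def linear_kernel(X, sigma2):
    """Dual-PPCA covariance X X^T + sigma2 I."""
    return X @ X.T + sigma2 * np.eye(X.shape[0])

def rbf_kernel(X, sigma2, lengthscale=1.0):
    """One possible non-linear kernel (an assumption), plus spherical noise."""
    sq = np.sum(X**2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2 * X @ X.T      # squared pairwise distances
    return np.exp(-0.5 * d2 / lengthscale**2) + sigma2 * np.eye(X.shape[0])

def log_likelihood(Y, K):
    """sum_j log N(y_{:,j} | 0, K), computed via a Cholesky factor of K."""
    N, p = Y.shape
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L, Y)                     # L^{-1} Y
    logdet = 2 * np.sum(np.log(np.diag(L)))
    return -0.5 * (p * (N * np.log(2 * np.pi) + logdet) + np.sum(alpha**2))

# Toy data (hypothetical sizes)
rng = np.random.default_rng(2)
N, p, q, sigma2 = 20, 5, 2, 0.1
X = rng.normal(size=(N, q))
Y = X @ rng.normal(size=(q, p)) + np.sqrt(sigma2) * rng.normal(size=(N, p))

ll_linear = log_likelihood(Y, linear_kernel(X, sigma2))   # dual-PPCA likelihood
ll_rbf = log_likelihood(Y, rbf_kernel(X, sigma2))         # GPLVM-style likelihood
```

In a GPLVM the latent coordinates `X` would be optimised against this likelihood; the sketch only evaluates it at fixed `X`.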

Residual Component Analysis<br />

◮ Discussed: low-rank + spherical noise term.<br />

◮ Next: $\underbrace{XX^\top}_{\text{low-rank}} + \underbrace{\Sigma}_{\text{arbitrary positive definite}}$<br />

◮ Instances of $\Sigma$:<br />

◮ $\Sigma_{PCA} = \sigma^2 I$<br />

◮ $\Sigma_{FA} = \mathrm{diag}(a)$<br />

◮ $\Sigma_{CCA} = \begin{pmatrix} Y_1 Y_1^\top & 0 \\ 0 & Y_2 Y_2^\top \end{pmatrix} + \sigma^2 I$, for $Y = \begin{pmatrix} Y_1 \\ Y_2 \end{pmatrix}$<br />

◮ $\Sigma_{LR} = ZZ^\top + \sigma^2 I$<br />

◮ $\Sigma_{GP} = K + \sigma^2 I$ s.t. $K_{ij} = k(z_i, z_j)$<br />

◮ $\Sigma_{Glasso} = \Lambda^{-1}$ s.t. $\sum_{i,j} (\Lambda_{ij} \neq 0) \ll p^2$<br />

◮ Given $\Sigma$, can we solve for $X$?<br />

◮ If so, more importantly, what forms of $\Sigma$ model important problems in machine learning?

Residual Component Analysis<br />

(Kalaitzis & Lawrence, 11)<br />

◮ RCA theorem (dual version). The maximum likelihood estimate of $X$, for positive-definite $\Sigma$, is<br />

$X_{ML} = \Sigma S (D - I)^{1/2}$, (1)<br />

where $S$ is the solution to the generalised eigenvalue problem (GEP)<br />

$\frac{1}{p} YY^\top S = \Sigma S D$.<br />

◮ Theorem extends to the primal version of RCA,<br />

$W_{ML} = \Sigma S (D - I)^{1/2}$, $\frac{1}{n} Y^\top Y S = \Sigma S D$.<br />

◮ Easier to see objective function by re-expressing into the equivalent regular symmetric eigenvalue problem.
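The theorem can be tried out numerically with SciPy's generalised symmetric eigensolver. This is a sketch under assumptions: the helper name `rca`, the choice to keep the top q generalised eigenvalues, and the clipping of `D - I` at zero are illustrative and not taken from the slides. `scipy.linalg.eigh(A, Sigma)` solves A S = Σ S D and normalises S so that S^⊤ Σ S = I.

```python
import numpy as np
from scipy.linalg import eigh

def rca(A, Sigma, q):
    """Top-q residual components Sigma S (D - I)^{1/2} of a covariance A, given Sigma."""
    d, S = eigh(A, Sigma)                    # generalised problem A S = Sigma S D (ascending d)
    d, S = d[::-1][:q], S[:, ::-1][:, :q]    # keep the q largest generalised eigenvalues
    return (Sigma @ S) * np.sqrt(np.maximum(d - 1.0, 0.0))

# Toy check (hypothetical sizes): build an exact low-rank + Sigma covariance
rng = np.random.default_rng(3)
p, q = 6, 2
W0 = rng.normal(size=(p, q))
B = rng.normal(size=(p, p))
Sigma = B @ B.T / p + np.eye(p)              # an arbitrary positive-definite Sigma
A = W0 @ W0.T + Sigma                        # plays the role of (1/n) Y^T Y

W_hat = rca(A, Sigma, q)                     # W_hat W_hat^T matches W0 W0^T
```

With `Sigma = sigma2 * np.eye(p)` the same function reduces to the PPCA solution, since the GEP then becomes an ordinary eigenvalue problem with eigenvalues scaled by 1/σ².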

Low-rank + Sparse-Inverse Covariance<br />

(Kalaitzis & Lawrence, 12)<br />

$p(\Lambda) \propto \exp(-\lambda \|\Lambda\|_1)$<br />

$p(z \mid \Lambda) = \mathcal{N}(0, \Lambda^{-1})$<br />

$p(x) = \mathcal{N}(0, I)$<br />

$p(y \mid x, z) = \mathcal{N}(Wx + z, \sigma^2 I)$<br />

◮ Marginalising x and z from the joint log-density gives the objective<br />

$\log\{p(Y \mid \Lambda)\, p(\Lambda)\} = \sum_{i=1}^{n} \log\{\mathcal{N}(y_{i,:} \mid 0, WW^\top + \Sigma_{Glasso})\, p(\Lambda)\}$<br />

(bounded below by) $\geq \int q(Z) \log \frac{p(Y, Z, \Lambda)}{q(Z)}\, dZ$<br />

◮ Lower bound is maximised by a hybrid EM/RCA algorithm.
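For reference, the lower bound follows from Jensen's inequality applied to the log of the marginal. The steps below are the standard variational argument written in the slide's notation, with the dependence of the posterior on Λ made explicit:

```latex
\begin{align}
\log\{p(Y \mid \Lambda)\, p(\Lambda)\}
  &= \log \int q(Z)\, \frac{p(Y, Z, \Lambda)}{q(Z)}\, dZ \\
  &\geq \int q(Z) \log \frac{p(Y, Z, \Lambda)}{q(Z)}\, dZ
     \qquad \text{(Jensen's inequality)} \\
  &= \log\{p(Y \mid \Lambda)\, p(\Lambda)\}
     - \mathrm{KL}\big(q(Z) \,\|\, p(Z \mid Y, \Lambda)\big)
\end{align}
```

The bound is tight when $q(Z)$ equals the posterior $p(Z \mid Y, \Lambda)$, which is why an E-step that updates the posterior is paired with an M-step on Λ.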

Optimising the LR + SI Covariance Model via EM/RCA<br />

◮ Initialise $\sigma^2$, $W$ and $\Lambda$.<br />

◮ REPEAT<br />

◮ E-step: Update posterior density $p(Z \mid Y)$ given current $\Lambda$.<br />

◮ M-step: Update $\Lambda$ by GLASSO optimisation on the expected complete-data log-density (expectation w.r.t. current posterior $p(Z \mid Y)$).<br />

◮ RCA-step: Update $W$ via RCA for $\Sigma_{Glasso} = \Lambda^{-1} + \sigma^2 I$:<br />

$W = \Sigma_{Glasso} S (D - I)^{1/2}$, $\frac{1}{n} Y^\top Y S = \Sigma_{Glasso} S D$<br />

◮ UNTIL the lower bound converges.
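The loop above might be sketched as follows. This is a simplified illustration under several assumptions, not the authors' implementation: the E-step formulas are derived here from the linear-Gaussian model (z given y is Gaussian with precision Λ + (WW^⊤ + σ²I)^{-1}), σ² is held fixed, a fixed number of iterations replaces the lower-bound convergence test, scikit-learn's `graphical_lasso` stands in for the GLASSO solver, and `alpha` plays the role of the penalty λ up to scaling. The name `em_rca` and the toy data are hypothetical.

```python
import numpy as np
from scipy.linalg import eigh
from sklearn.covariance import graphical_lasso

def em_rca(Y, q, alpha, sigma2=0.1, n_iter=10):
    """Simplified EM/RCA loop; returns (W, Lam) after a fixed number of iterations."""
    n, p = Y.shape
    S_y = Y.T @ Y / n                          # sample covariance (1/n) Y^T Y
    W, Lam = np.zeros((p, q)), np.eye(p)       # initialisation (sigma2 stays fixed)
    for _ in range(n_iter):
        # E-step: Gaussian posterior of each z_i given y_i under the current W and Lam
        C_inv = np.linalg.inv(W @ W.T + sigma2 * np.eye(p))
        V = np.linalg.inv(Lam + C_inv)         # posterior covariance, shared by all samples
        M = Y @ C_inv @ V                      # posterior means, one row per sample
        S_z = V + M.T @ M / n                  # (1/n) sum_i E[z_i z_i^T]
        # M-step: GLASSO on the expected second moment of Z
        _, Lam = graphical_lasso(S_z, alpha=alpha)
        Lam = (Lam + Lam.T) / 2                # symmetrise against round-off
        # RCA-step: generalised eigenproblem with Sigma = Lam^{-1} + sigma2 I
        Sigma = np.linalg.inv(Lam) + sigma2 * np.eye(p)
        d, S = eigh(S_y, Sigma)
        d, S = d[::-1][:q], S[:, ::-1][:, :q]
        W = (Sigma @ S) * np.sqrt(np.maximum(d - 1.0, 0.0))
    return W, Lam

# Toy data (hypothetical): chain-structured sparse precision plus 2 confounders
rng = np.random.default_rng(4)
n, p, q, sigma2 = 200, 10, 2, 0.1
Lam_true = 2 * np.eye(p) - 0.5 * (np.eye(p, k=1) + np.eye(p, k=-1))
Z = rng.multivariate_normal(np.zeros(p), np.linalg.inv(Lam_true), size=n)
Y = rng.normal(size=(n, q)) @ rng.normal(size=(q, p)) + Z
Y += np.sqrt(sigma2) * rng.normal(size=(n, p))

W_hat, Lam_hat = em_rca(Y, q, alpha=0.05, sigma2=sigma2)
```

The non-zero off-diagonal entries of `Lam_hat` give the estimated conditional-dependence structure once the low-rank confounding has been explained away by `W_hat`.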

Simulations<br />

[Figure: precision-recall curves (Recall on the horizontal axis, Precision on the vertical axis, both from 0 to 1) for EM-RCA, Glasso and Glasso (no confounders).]<br />

$Y = XW^\top + Z + E$<br />

$Y \in \mathbb{R}^{100 \times 50}$, $W \in \mathbb{R}^{50 \times 3}$, $X \in \mathbb{R}^{100 \times 3}$<br />

$x_{i,:} \overset{iid}{\sim} \mathcal{N}(0, I_3)$, $z_{i,:} \overset{iid}{\sim} \mathcal{N}(0, \Lambda^{-1})$<br />

$\Lambda$ sparsity 1%, with non-zero entries sampled from $\mathcal{N}(1, 2)$.<br />

Confounders and latent variables explain equal variance.<br />

Signal-to-noise ratio is 10.
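One way to generate data matching this description is sketched below. Several details are guesses that the slide does not specify: $\mathcal{N}(1, 2)$ is read as mean 1 and variance 2, the 1% sparsity is applied to off-diagonal pairs, positive definiteness of Λ is enforced by diagonal dominance, "equal variance" is taken to mean equal traces of $WW^\top$ and $\Lambda^{-1}$, and the signal-to-noise ratio is taken as total signal variance over total noise variance.

```python
import numpy as np

rng = np.random.default_rng(0)
n, p, q = 100, 50, 3

# Sparse symmetric precision: about 1% of off-diagonal pairs non-zero, values ~ N(1, 2)
mask = np.triu(rng.random((p, p)) < 0.01, k=1)
vals = rng.normal(1.0, np.sqrt(2.0), size=(p, p))
Lam = np.where(mask, vals, 0.0)
Lam = Lam + Lam.T
# Assumption: make Lam diagonally dominant so that it is positive definite
Lam += np.diag(np.abs(Lam).sum(axis=1) + 1.0)

Sigma_z = np.linalg.inv(Lam)
W = rng.normal(size=(p, q))
# Assumption: "equal variance" means trace(W W^T) == trace(Lam^{-1})
W *= np.sqrt(np.trace(Sigma_z) / np.trace(W @ W.T))
# Assumption: SNR of 10 as (signal variance) / (noise variance), summed over features
sigma2 = (np.trace(W @ W.T) + np.trace(Sigma_z)) / (10 * p)

X = rng.normal(size=(n, q))                                 # x_i ~ N(0, I_3)
Z = rng.multivariate_normal(np.zeros(p), Sigma_z, size=n)   # z_i ~ N(0, Lam^{-1})
E = np.sqrt(sigma2) * rng.normal(size=(n, p))               # spherical noise
Y = X @ W.T + Z + E
```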

Reconstructing the Human Form<br />

◮ 3-D point data from CMU motion-capture database.<br />

◮ Uniform mixture of frames from walking, jumping, running and dancing motions.<br />

◮ Similarity matrix computed from inter-point squared distances; can be interpreted as a stiffness matrix of a physical spring system.<br />

◮ Conditional independence across frames and across features (sensor coordinates), meaning each y contains only one of the x, y or z coordinates of 31 sensors of one frame.<br />

◮ ∼7000 frames × |{x, y, z}|<br />

$Y \in \mathbb{R}^{21000 \times 31}$

Reconstructing the Human Form<br />

[Figure: two 3-D stickman plots (axes x, y, z): EMRCA-inferred stickman (at recall: 0.76667) and GLASSO-inferred stickman (at recall: 0.76667).]

Reconstructing the Human Form<br />

[Figure: two 3-D stickman plots (axes x, y, z): EMRCA-inferred stickman (at recall: 1) and GLASSO-inferred stickman (at recall: 1).]

Reconstructing the Human Form<br />

Confounding regularities in the motions

Reconstructing the Human Form<br />

[Figure: precision-recall curves (Recall on the horizontal axis, Precision on the vertical axis, both from 0 to 1) for Glasso, inv.cov and EM/RCA.]

Reconstruction of a protein-signalling network from heterogeneous data<br />

(Protein-signalling data from Sachs et al., 08)<br />

(Kronecker-Glasso from Stegle et al., 11)<br />

[Figure: precision-recall curves (Recall on the horizontal axis, Precision on the vertical axis, both from 0 to 1) for EM-RCA, Kronecker-Glasso (reported) and Glasso (reported).]<br />

266 measurements of 11 protein signals under 3 different perturbations (uniform mix).<br />

$Y \in \mathbb{R}^{266 \times 11}$