Lecture 8 Objectives Physical Database Design


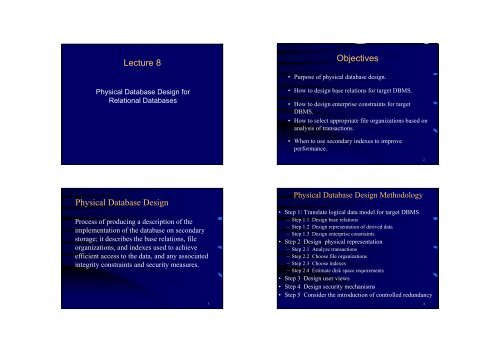

<strong>Lecture</strong> 8<br />

<strong>Physical</strong> <strong>Database</strong> <strong>Design</strong> for<br />

Relational <strong>Database</strong>s<br />

<strong>Physical</strong> <strong>Database</strong> <strong>Design</strong><br />

Process of producing a description of the<br />

implementation of the database on secondary<br />

storage; it describes the base relations, file<br />

organizations, and indexes used to achieve<br />

efficient access to the data, and any associated<br />

integrity constraints and security measures.<br />


<strong>Objectives</strong><br />

• Purpose of physical database design.<br />

• How to design base relations for target DBMS.<br />

• How to design enterprise constraints for target<br />

DBMS.<br />

• How to select appropriate file organizations based on<br />

analysis of transactions.<br />

• When to use secondary indexes to improve<br />

performance.<br />

<strong>Physical</strong> <strong>Database</strong> <strong>Design</strong> Methodology<br />

• Step 1: Translate logical data model for target DBMS<br />

– Step 1.1 <strong>Design</strong> base relations<br />

– Step 1.2 <strong>Design</strong> representation of derived data<br />

– Step 1.3 <strong>Design</strong> enterprise constraints<br />

• Step 2 <strong>Design</strong> physical representation<br />

– Step 2.1 Analyze transactions<br />

– Step 2.2 Choose file organizations<br />

– Step 2.3 Choose indexes<br />

– Step 2.4 Estimate disk space requirements<br />

• Step 3 <strong>Design</strong> user views<br />

• Step 4 <strong>Design</strong> security mechanisms<br />

• Step 5 Consider the introduction of controlled redundancy<br />


Step 1 Translate Logical Data Model for<br />

Target DBMS<br />

To produce a relational database schema that can be<br />

implemented in the target DBMS from the logical data<br />

model.<br />

• Need to know functionality of target DBMS such as<br />

how to create base relations and whether the system<br />

supports the definition of:<br />

– PKs, and FKs;<br />

– required data – i.e. whether system supports NOT NULL;<br />

– domains;<br />

– relational integrity constraints;<br />

– enterprise constraints.<br />

Example: PropertyForRent Relation<br />


Step 1.1 <strong>Design</strong> Base Relations<br />

To decide how to represent base relations in target DBMS.<br />

• For each relation, need to define:<br />

– the name of the relation;<br />

– a list of simple attributes in brackets;<br />

– the PK and FKs;<br />

– a list of any derived attributes and how they should be computed;<br />

– referential integrity constraints for any FKs identified.<br />

• For each attribute, need to define:<br />

– its domain, consisting of a data type, length, and any constraints on the<br />

domain;<br />

– an optional default value for the attribute;<br />

– whether the attribute can hold nulls.<br />
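As a rough illustration of the items listed above, the sketch below declares a cut-down PropertyForRent relation using Python's built-in `sqlite3` module. The column list, the default of 4 rooms, the 1 to 15 range, and the sample keys are assumptions made for the example, not the lecture's actual schema, and SQLite stands in for whatever the target DBMS is.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite only enforces FKs when enabled

conn.executescript("""
CREATE TABLE Staff (
    staffNo TEXT PRIMARY KEY
);
CREATE TABLE PropertyForRent (
    propertyNo TEXT    PRIMARY KEY,                      -- PK
    city       TEXT    NOT NULL,                         -- required data
    rooms      INTEGER NOT NULL DEFAULT 4                -- default value
                       CHECK (rooms BETWEEN 1 AND 15),   -- domain constraint
    staffNo    TEXT    REFERENCES Staff(staffNo)         -- FK, nulls allowed
                       ON DELETE SET NULL ON UPDATE CASCADE
);
""")

conn.execute("INSERT INTO Staff VALUES ('SG14')")
conn.execute(
    "INSERT INTO PropertyForRent (propertyNo, city, staffNo) "
    "VALUES ('PG4', 'Glasgow', 'SG14')"
)
default_rooms = conn.execute(
    "SELECT rooms FROM PropertyForRent WHERE propertyNo = 'PG4'"
).fetchone()[0]


def rejected(sql):
    """Return True if the DBMS refuses the statement on integrity grounds."""
    try:
        conn.execute(sql)
    except sqlite3.IntegrityError:
        return True
    return False
```

Calling `rejected(...)` with an insert that leaves `city` null, uses an out-of-range `rooms` value, or names a non-existent `staffNo` should return `True` in each case.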

Step 1.2 <strong>Design</strong> Representation of Derived Data<br />

To decide how to represent any derived data present in<br />

the logical data model in the target DBMS.<br />

• Produce list of all derived attributes.<br />

• Derived attribute can be stored in database or calculated<br />

every time it is needed. Option selected is based on:<br />

• additional cost to store the derived data and keep it<br />

consistent with operational data from which it is derived;<br />

• cost to calculate it each time it is required.<br />

• Less expensive option is chosen subject to performance<br />

constraints.<br />
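The two options above can be compared on a toy version of the noOfProperties attribute. This is a minimal sketch using `sqlite3`; the table contents and the helper names `computed`, `stored` and `refresh_stored` are invented for the illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE Staff (
    staffNo        TEXT PRIMARY KEY,
    noOfProperties INTEGER NOT NULL DEFAULT 0   -- stored derived attribute
);
CREATE TABLE PropertyForRent (propertyNo TEXT PRIMARY KEY, staffNo TEXT);
INSERT INTO Staff (staffNo) VALUES ('SG14'), ('SG37');
INSERT INTO PropertyForRent VALUES ('PG4', 'SG14'), ('PG16', 'SG14'), ('PG21', 'SG37');
""")


def computed(staff_no):
    """Option 1: calculate the derived value every time it is needed."""
    return conn.execute(
        "SELECT COUNT(*) FROM PropertyForRent WHERE staffNo = ?", (staff_no,)
    ).fetchone()[0]


def refresh_stored():
    """Option 2: keep a stored copy, which must be refreshed after base data changes."""
    conn.execute("""
        UPDATE Staff SET noOfProperties =
            (SELECT COUNT(*) FROM PropertyForRent p WHERE p.staffNo = Staff.staffNo)
    """)


def stored(staff_no):
    """Read the stored copy; cheap, but stale until refresh_stored() has run."""
    return conn.execute(
        "SELECT noOfProperties FROM Staff WHERE staffNo = ?", (staff_no,)
    ).fetchone()[0]
```

Before `refresh_stored()` runs, `stored('SG14')` still reports 0 while `computed('SG14')` reports 2, which is the consistency cost the slide refers to.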


PropertyforRent Relation and Staff Relation with<br />

Derived Attribute noOfProperties<br />

Step 2 <strong>Design</strong> <strong>Physical</strong> Representation<br />

To determine optimal file organizations to store<br />

the base relations and the indexes that are<br />

required to achieve acceptable performance; that<br />

is, the way in which relations and tuples will be<br />

held on secondary storage.<br />


Step 1.3 <strong>Design</strong> Enterprise Constraints<br />

To design the enterprise constraints for the<br />

target DBMS.<br />

• Some DBMS provide more facilities than others for<br />

defining enterprise constraints. Example:<br />

CREATE TABLE PropertyForRent (<br />

…<br />

CONSTRAINT StaffNotHandlingTooMuch<br />

CHECK (NOT EXISTS (SELECT staffNo<br />

FROM PropertyForRent<br />

GROUP BY staffNo<br />

HAVING COUNT(*) > 100))<br />

…);<br />
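Not every DBMS accepts a subquery inside a CHECK constraint; SQLite, for one, does not. Where that is the case, a trigger is one possible substitute. The sketch below is an assumption-laden alternative, not the lecture's method: it reuses the constraint name and the limit of 100 from the example, invents a two-column table, and only guards INSERT.

```python
import sqlite3

MAX_PROPERTIES = 100  # limit taken from the CHECK constraint in the example

conn = sqlite3.connect(":memory:")
conn.executescript(f"""
CREATE TABLE PropertyForRent (propertyNo INTEGER PRIMARY KEY, staffNo TEXT);

CREATE TRIGGER StaffNotHandlingTooMuch
BEFORE INSERT ON PropertyForRent
WHEN (SELECT COUNT(*) FROM PropertyForRent
      WHERE staffNo = NEW.staffNo) >= {MAX_PROPERTIES}
BEGIN
    SELECT RAISE(ABORT, 'staff member already manages the maximum number of properties');
END;
""")


def assign(staff_no):
    """Try to add one property for staff_no; return False if the trigger rejects it."""
    try:
        conn.execute("INSERT INTO PropertyForRent (staffNo) VALUES (?)", (staff_no,))
        return True
    except sqlite3.DatabaseError:
        return False


# The first 100 inserts succeed, the 101st is refused.
results = [assign("SG14") for _ in range(MAX_PROPERTIES + 1)]
```

A complete solution would also need a matching trigger for updates that change `staffNo`.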

Step 2 <strong>Design</strong> <strong>Physical</strong> Representation<br />

• A number of factors may be used to measure efficiency:<br />

- Transaction throughput: number of transactions<br />

processed in given time interval.<br />

- Response time: elapsed time for completion of a single<br />

transaction.<br />

- Disk storage: amount of disk space required to store<br />

database files.<br />

• Typically, have to trade one factor off against<br />

another to achieve a reasonable balance.<br />


Step 2 <strong>Design</strong> <strong>Physical</strong> Representation –<br />

Preliminaries<br />

• Data is stored on several types of media:<br />

– Primary storage: data is accessed directly by the CPU, in main memory or in faster but smaller-capacity cache memory<br />

• provides fast access but has limited capacity and is volatile (i.e., data is not stored permanently)<br />

– Secondary storage: data is not directly accessed by the CPU, so it must first be loaded into primary storage<br />

• several orders of magnitude slower than primary storage, but has much larger capacity and is non-volatile (stores data permanently)<br />

• Most databases are stored permanently on magnetic disk secondary storage<br />

Step 2 <strong>Design</strong> <strong>Physical</strong> Representation –<br />

Preliminaries<br />

• Data is organised on the disk as files.<br />

• File records are stored in disk blocks, because a block is the unit of data transfer between disk and memory.<br />

• The number of complete records that can fit into a<br />

block is the blocking factor (bfr).<br />

• If a block size is B bytes and the record size is R<br />

bytes, then<br />

bfr = Int(B/R)<br />
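The formula above translates directly into integer division. The sketch below is illustrative only: the function names are made up, and the figures (1024-byte blocks, 100-byte records, 30,000 records) are borrowed from the Cars example used elsewhere in this lecture.

```python
def blocking_factor(block_size: int, record_size: int) -> int:
    """bfr = Int(B / R): complete records that fit in one block."""
    return block_size // record_size


def blocks_needed(num_records: int, bfr: int) -> int:
    """Blocks required to hold num_records, rounding up to a whole block."""
    return -(-num_records // bfr)  # ceiling division without floats


def unused_bytes_per_block(block_size: int, record_size: int) -> int:
    """Space left over in each block when records may not span blocks."""
    return block_size - blocking_factor(block_size, record_size) * record_size


bfr = blocking_factor(1024, 100)
file_blocks = blocks_needed(30_000, bfr)
wasted = unused_bytes_per_block(1024, 100)
```

With these figures each block holds 10 records, the file occupies 3000 blocks, and 24 bytes per block go unused.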


Step 2 <strong>Design</strong> <strong>Physical</strong> Representation –<br />

Preliminaries<br />

[Figure: typical disk configuration, showing the operating system, DB files, index file, and recovery log file]<br />

Step 2.1 Analyze Transactions<br />

To understand the functionality of the<br />

transactions that will run on the database and to<br />

analyze the important transactions.<br />

• Attempt to identify performance criteria, such as:<br />

– transactions that run frequently and will have a significant<br />

impact on performance;<br />

– transactions that are critical to the business;<br />

– times during the day/week when there will be a high demand<br />

made on the database (called the peak load).<br />

– attributes that are updated in an update transaction;<br />

– criteria used to restrict tuples that are retrieved in a query.<br />


Cross-Referencing Transactions and Relations<br />

Example Transaction Analysis Form<br />


Transaction Usage Map for Some Sample<br />

Transactions Showing Expected Occurrences<br />

Step 2.2 Choose File Organisations<br />

• Objective: To determine an efficient file organisation for<br />

each relation, if the DBMS allows this.<br />

• File organisation is the physical arrangement of data in a<br />

file into records and pages (blocks) on secondary storage.<br />

• Existing types of file organisations:<br />

– Heap (unordered) files<br />

– Sequential (ordered) files<br />

– Hash files<br />

• E.g., if we want to retrieve staff tuples in alphabetical<br />

order of name, sorting the file by staff name is a good file<br />

organisation.<br />


Heap Files<br />

Here records are placed in the file in the same order as they are inserted.<br />

Insert<br />

Insert new record into last block, creating a new block if necessary.<br />

Very efficient: the last block address is readily available.<br />

Find<br />

Go through blocks one at a time until required record is found.<br />

Very inefficient: requires a linear search, which works out at an average of b/2 block accesses if the file occupies b blocks.<br />
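The insert and find behaviour of a heap file can be mimicked with a list of blocks. This is a toy in-memory sketch, not a real storage engine; the class name, the blocking factor of 4, and the key values are all arbitrary choices for the example.

```python
class HeapFile:
    """Unordered file: records are appended to the last block."""

    def __init__(self, bfr):
        self.bfr = bfr          # records per block
        self.blocks = [[]]      # start with one empty block

    def insert(self, record):
        # Only the last block is touched; add a new block when it is full.
        if len(self.blocks[-1]) == self.bfr:
            self.blocks.append([])
        self.blocks[-1].append(record)

    def find(self, key):
        """Linear search; return the number of blocks read, or None if absent."""
        for accesses, block in enumerate(self.blocks, start=1):
            if key in block:
                return accesses
        return None


heap = HeapFile(bfr=4)
keys = [17, 3, 42, 8, 23, 15, 4, 16, 99, 1, 56, 7]
for k in keys:
    heap.insert(k)

b = len(heap.blocks)
average_accesses = sum(heap.find(k) for k in keys) / len(keys)
```

Here the file occupies b = 3 blocks and a successful search reads 2 blocks on average, i.e. (b + 1)/2, which approaches the b/2 figure quoted above as the file grows.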

Ordered Files<br />

• Here records are physically ordered (sorted)<br />

on disk based on the values of one or more<br />

fields (a.k.a. ordering fields)<br />

– if the ordering field is guaranteed to have a<br />

unique value in each record then it is also a key<br />

field of the file and is a.k.a an ordering key<br />


Heap Files<br />

Delete<br />

First find, then mark for deletion, records that are no longer required. Very inefficient because records have to be found.<br />

block i before: record 1 record 2 record 3 record 4 …..<br />

Reorganise<br />

Repacking needed only occasionally to remove unused space. Reasonably efficient.<br />

block i after: record 1 record 3 …..<br />

Ordered Files<br />

Insert<br />

Insert new record into overflow file and merge periodically<br />

Main file (blocks 1 to 3): 1 2 3 4 5 7 8 9 10 11 13<br />

Overflow file (block 1): 6 12<br />

Delete<br />

Mark for deletion records no longer required<br />

Blocks 1 to 3: 1 2 (3) 4 5 (6) 7 (8) 9 10 11 12<br />

Reorganise<br />

Repack records to remove unused space<br />

Blocks 1 to 3: 1 2 4 5 7 9 10 11 12<br />
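The overflow-and-merge scheme shown above can be sketched with two lists and a set of deletion marks. The class and method names are hypothetical, blocks are ignored for brevity, and the key values reuse those from the insert example.

```python
class OrderedFile:
    """Sorted main file plus an unsorted overflow area and deletion marks."""

    def __init__(self, records):
        self.main = sorted(records)
        self.overflow = []      # new records wait here until the next merge
        self.deleted = set()    # records marked for deletion

    def insert(self, key):
        self.overflow.append(key)   # cheap: the main file is not shifted

    def delete(self, key):
        self.deleted.add(key)       # mark only; space is reclaimed later

    def reorganise(self):
        """Merge the overflow file into the main file and drop marked records."""
        live = [k for k in self.main + self.overflow if k not in self.deleted]
        self.main = sorted(live)
        self.overflow.clear()
        self.deleted.clear()


f = OrderedFile([1, 2, 3, 4, 5, 7, 8, 9, 10, 11, 13])
f.insert(6)
f.insert(12)
pending = list(f.overflow)   # records still outside the ordered main file
f.reorganise()
merged = list(f.main)
```

Until `reorganise()` runs, a search has to check the overflow area as well as the main file, which is one reason performance degrades between merges.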


Ordered Files<br />

Find<br />

Say we wanted to find record with ordering key value K then we require<br />

a binary search<br />

The binary search starts by reading the middle block, M, calculated as:<br />

M = (1 + B) div 2 where B is the number of blocks<br />

Suppose:<br />

the smallest value in block M is called S and<br />

the largest value in block M is called L<br />

There are 3 possibilities:<br />

1. K < S – this means record K is somewhere in blocks 1 to M-1<br />

2. K > L – this means record K is somewhere in blocks M+1 to B<br />

3. Neither 1 nor 2, in which case record K, if it exists, is in the current block, M<br />

In cases 1 and 2 the search continues at the mid block of the remaining range<br />

For ordered files the search requires approximately ⌈log₂ B⌉ block accesses<br />

Hash Files<br />

• Here one or more fields in a record are used to calculate the location of the record for storage and retrieval<br />

• Unit of storage is a bucket<br />

– these contain blocks which store up to bfr records<br />

• e.g if bfr = 4 then you can store 4 records max per block<br />

• A hash function is used to calculate location of<br />

bucket<br />

– Enter record into next free space in block<br />

– If all the blocks are used up then create an overflow bucket and place the record in there<br />


Ordered Files<br />

Find cont.<br />

Given the following blocks, find the record with key value K = 300<br />

block 1 block 2 block 3 block 4 block 5 block 6<br />

1 2 3 4 5 7 8 11 12 14 16 18 20 30 40 50 100 150 200 300 400<br />

B = 6, so read the mid-block, i.e. (1 + 6) div 2 = block 3<br />

300 is not in block 3 and K > 18, so look in blocks 4 to 6<br />

block 4 block 5 block 6<br />

20 30 40 50 100 150 200 300 400<br />

Read the new mid-block, (4 + 6) div 2 = block 5<br />

300 is not in block 5 and K > 200, so look at block 6: found<br />

Correct block found with 3 block accesses, i.e. ⌈log₂ 6⌉ = 3<br />

- quicker than a linear search, which may require up to 6 block accesses<br />
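The block-level binary search walked through above can be sketched as follows. The slide does not show where the block boundaries fall, so the split of the 21 key values into six blocks is an assumption, chosen so that block 3 ends at 18 and block 5 ends at 200 as the walkthrough states; the function name is also invented.

```python
def find_block(blocks, key):
    """Binary search over ordered blocks.

    Returns (block_number, block_accesses); block_number is None if the
    key is not in the file. Blocks are numbered from 1, as on the slides.
    """
    lo, hi = 1, len(blocks)
    accesses = 0
    while lo <= hi:
        mid = (lo + hi) // 2            # M = (lo + hi) div 2
        block = blocks[mid - 1]         # "read" the middle block
        accesses += 1
        smallest, largest = block[0], block[-1]
        if key < smallest:              # case 1: look in the lower half
            hi = mid - 1
        elif key > largest:             # case 2: look in the upper half
            lo = mid + 1
        else:                           # case 3: it can only be in this block
            return (mid if key in block else None), accesses
    return None, accesses


# Assumed block boundaries for the example data.
blocks = [
    [1, 2, 3, 4],
    [5, 7, 8, 11],
    [12, 14, 16, 18],
    [20, 30, 40, 50],
    [100, 150, 200],
    [300, 400],
]

result = find_block(blocks, 300)
```

With this layout the search for K = 300 reads blocks 3, 5 and 6 in turn, matching the three accesses counted in the example.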

Hash Files<br />

Insert<br />

Assume we have 2 blocks per bucket with bfr = 4<br />

Also assume we have a hash function on K mod 5 – so could have:<br />

Bucket 1: block 1 = 1 6 11 16; block 2 = 21 26 31 36<br />

Bucket 2: block 1 = 2 7 12 17; block 2 = 22<br />

Use overflow buckets when there is no room (collisions)<br />

e.g. insert 41 and 46: both hash to bucket 1, which is full, so they go to the overflow bucket<br />

Bucket 1: block 1 = 1 6 11 16; block 2 = 21 26 31 36<br />

Bucket 2: block 1 = 2 7 12 17; block 2 = 22<br />

Overflow bucket: 41 46<br />
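The hash file insert described above, together with the "replace from overflow" rule for deletes, can be sketched as follows. It assumes K mod 5 as the hash function, 2 blocks per bucket and bfr = 4 as in the example; the single shared overflow list and the class layout are simplifications made for the illustration.

```python
class HashFile:
    """Buckets of fixed capacity with one shared overflow bucket."""

    def __init__(self, num_buckets=5, blocks_per_bucket=2, bfr=4):
        self.num_buckets = num_buckets
        self.capacity = blocks_per_bucket * bfr   # records per bucket
        self.buckets = {i: [] for i in range(num_buckets)}
        self.overflow = []

    def bucket_of(self, key):
        return key % self.num_buckets             # hash function: K mod 5

    def insert(self, key):
        bucket = self.buckets[self.bucket_of(key)]
        if len(bucket) < self.capacity:
            bucket.append(key)                    # next free space in the bucket
        else:
            self.overflow.append(key)             # collision: bucket is full

    def delete(self, key):
        if key in self.overflow:
            self.overflow.remove(key)
            return
        bucket = self.buckets[self.bucket_of(key)]
        bucket.remove(key)                        # raises ValueError if absent
        # Refill the freed slot from the overflow bucket if a record fits here.
        for spare in self.overflow:
            if self.bucket_of(spare) == self.bucket_of(key):
                self.overflow.remove(spare)
                bucket.append(spare)
                break


hf = HashFile()
for k in [1, 6, 11, 16, 21, 26, 31, 36, 2, 7, 12, 17, 22]:
    hf.insert(k)
hf.insert(41)
hf.insert(46)
overflow_after_inserts = list(hf.overflow)
```

Bucket 1 already holds its full 8 records, so 41 and 46 both land in the overflow bucket; deleting a record from bucket 1 afterwards would pull 41 back in.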


Hash Files<br />

Find<br />

Use hash function to locate bucket then do a linear search of blocks<br />

- this is 1 block access or maybe more<br />

Delete and Reorganise<br />

Remove from bucket and replace from overflow bucket if necessary<br />

e.g delete 46<br />

Bucket 1: block 1 = 1 6 11 16; block 2 = 21 26 31 36<br />

Bucket 2: block 1 = 2 7 12 17; block 2 = 22<br />

Overflow bucket: 41 46<br />

Guidelines for selecting a file organisation<br />

• Ordered: Supports retrievals based on exact<br />

key match, pattern matching and range of<br />

values.<br />

• However its performance deteriorates as the<br />

relation is updated (loss of access key<br />

sequence).<br />


Guidelines for selecting a file organisation<br />

• Heap (unordered): is a good storage structure in<br />

the following situations:<br />

– When every tuple in the relation has to be retrieved<br />

(in any order) every time the relation is accessed<br />

– When the relation has an additional access<br />

structure, such as an index key.<br />

• Heap files are inappropriate when only selected tuples of a relation are to be accessed.<br />

Guidelines for selecting a file organisation<br />

• Hash: is a good storage structure when tuples<br />

are retrieved based on an exact match on the<br />

hash field value.<br />

• It is not so good in the following situations:<br />

– When tuples are retrieved based on a pattern match<br />

or range of the hash field value. E.g., staffno begins<br />

with “S1”, or salary in range 1000-2000<br />

– When tuples are retrieved based on a field other<br />

than the hash field.<br />

– When the hash field is frequently updated.<br />


Step 2.3 Choose Indexes<br />

To determine whether adding indexes will improve the<br />

performance of the system.<br />

• An ordering index may be seen as a data structure<br />

designed to speed up the access to records in a file using<br />

an indexing field. An index, being much smaller, will be<br />

quicker to search than the main file.<br />

• There are 3 different types of indexes:<br />

– Primary Index<br />

– Secondary Index<br />

– Clustering Index<br />

• An index can be Multilevel.<br />

Primary Index<br />

• A Primary index is an ordered file whose records have<br />

two fields. The first field is of the same type as the<br />

ordering key field (in the Data file). The second field is<br />

a pointer to a disk block.<br />

• There is one index entry (or index record) in the index<br />

file for each block in the data file.<br />

– This is an example of a non-dense (sparse) index.<br />

– The first record in each block of the data file is called the<br />

anchor record.<br />


Sparse or Dense Indexes<br />

• An index can be sparse or dense:<br />

– A sparse (non-dense) index has an index record for<br />

only some of the search key values in the file<br />

– A dense index has an index record for every search<br />

key value in the file<br />

• The search key for an index can consist of one<br />

or more fields.<br />

Primary Index Example<br />

Say we had the following relation:<br />

Cars(reg#, engine#, make, model, price)<br />

Assume:<br />

1. There are 30,000 records<br />

2. Block size is 1024 bytes<br />

3. reg# (primary key) is 7 bytes long, the block pointer is 6 bytes long<br />

4. Record length is 100 bytes<br />

Therefore, bfr = Int(B/R) = Int(1024/100) = 10, so we need 3000 blocks<br />

to store all records i.e 30,000/10<br />

- A binary search on the data file needs 12 block accesses, i.e. ⌈log₂ 3000⌉<br />

- A better option is to use the primary index: only 7 block accesses needed, i.e. ⌈log₂ 39⌉ + 1 = 6 + 1 (the 1 accounts for reading the data file block)<br />

The size of an index entry = 7 + 6 = 13 bytes. bfr_i = Int(1024/13) = 78. Number of index entries = 3000. Number of index blocks = ⌈3000/78⌉ = 39<br />
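The arithmetic in this example can be checked with a few lines of Python. The function name and return shape are made up for the sketch; the inputs are the figures given in the assumptions above. Note that ⌈log₂ 39⌉ evaluates to 6, so once the extra data-block read is added the total comes to 7 accesses.

```python
import math


def index_search_cost(num_entries, entry_size, block_size):
    """Return (index blocking factor, index blocks, block accesses).

    Accesses = binary search over the index blocks, plus one more
    access to read the data block the index entry points to.
    """
    bfr_i = block_size // entry_size
    index_blocks = math.ceil(num_entries / bfr_i)
    accesses = math.ceil(math.log2(index_blocks)) + 1
    return bfr_i, index_blocks, accesses


records, block_size, record_size = 30_000, 1024, 100

bfr = block_size // record_size                          # records per data block
data_blocks = math.ceil(records / bfr)                   # blocks in the data file
binary_search_cost = math.ceil(math.log2(data_blocks))   # no index at all

# Primary index: one 13-byte entry (7-byte key + 6-byte pointer) per data block.
bfr_i, index_blocks, primary_cost = index_search_cost(data_blocks, 7 + 6, block_size)
```

The same helper can be reused for any single-level index by changing the number of entries and the entry size.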


Primary Index Example cont.<br />

Primary Index File: 3000 records, 13 bytes each (7-byte key, 6-byte block pointer); 39 blocks, 78 records each<br />

E246WFC<br />

G123RMR<br />

G889VDU<br />

H203PBR<br />

H311MHG<br />

…<br />

Main Data File: 30,000 records, 100 bytes each; 3000 blocks, 10 records each<br />

E246WFC 7648378<br />

F651DEK 3096275<br />

G123RMR 7493874<br />

G551JBA 2098377<br />

G889VDU 6587969<br />

G994PBR 5675789<br />

H203PBR 4654786<br />

H266MHU 6345234<br />

H311MHG 5675489<br />

H626RPG 5673455<br />

Block accesses = ⌈log₂ 39⌉ + 1 = 7, where the 1 is to read the data block (compare with 12 for a binary search)<br />

Clustering Index Example<br />

Consider the same relation: Cars(reg#, engine#, make, model, price)<br />

Assume clustering field is: make<br />

Clustering Index File (clustering field value, block pointer):<br />

Fiat 1<br />

Ford 2<br />

Renault 3<br />

Rover 4<br />

Vauxhall 5<br />

Main Data File (1024-byte blocks, numbered 1 to 5):<br />

G551JBA 7648378 Fiat<br />

F651DEK 3096275 Ford<br />

G123RMR 7493874 Ford<br />

E246WFC 2098377 Ford<br />

G889VDU 6587969 Renault<br />

H266MHU 5675789 Renault<br />

H203PBR 4654786 Renault<br />

G994PBR 6345234 Rover<br />

H311MHG 5675489 Rover<br />

H626RPG 5673455 Vauxhall<br />


Clustering Index<br />

• If records of a file are physically ordered on a non-key field, that field is called the clustering field.<br />

• A clustering index differs from a primary index, which<br />

requires that the ordering field of the data file have a<br />

distinct value for each record.<br />

• There is one entry in the clustering index for each<br />

distinct value of the clustering field. This is an example<br />

of a dense index.<br />

• A data file can have at most one primary index or one<br />

clustering index.<br />

Secondary Index<br />

• A secondary index is also an ordered file with<br />

two fields. The first field is of the same type as<br />

some non-ordering field of the data file. The<br />

second field is a block pointer or a record<br />

pointer.<br />

• There can be many secondary indexes for the<br />

same data file.<br />


Example 1: Dense Secondary Index<br />

Say we had the same relation:<br />

Cars(reg#, engine#, make, model, price)<br />

Assume that engine# (secondary key) is 7 bytes long.<br />

A secondary key field has a distinct value for each data record.<br />

Hence, the secondary index will be dense.<br />

We want to know whether it would be better to use a secondary index file constructed with engine# as the secondary index key, or to perform a simple linear search on the data file (cost = 1500 accesses on average).<br />

Example 2: Non-dense Secondary Index<br />

• We can also create a secondary index on a non-key field<br />

of a file. In this case, many records in the data file can<br />

have the same value for the indexing field. There are<br />

several options for implementing such an index.<br />

• The more commonly used option is to have a single entry for each index field value, but to create an extra level of indirection. In this non-dense scheme, the secondary index pointer points to a block of record pointers; each record pointer points to one record in the data file.<br />


Example 1: Dense Secondary Index<br />

Secondary Index File: 30,000 records, 13 bytes each (7-byte engine#, 6-byte pointer); 385 blocks, 78 records each<br />

2098377<br />

3096275<br />

4654786<br />

5673455<br />

5675489<br />

5675789<br />

6345234<br />

6587969<br />

7493874<br />

7648378<br />

Main Data File: 30,000 records, 100 bytes each; 3000 blocks, 10 records each<br />

E246WFC 7648378<br />

F651DEK 3096275<br />

G123RMR 7493874<br />

G551JBA 2098377<br />

G889VDU 6587969<br />

G994PBR 5675789<br />

H203PBR 4654786<br />

H266MHU 6345234<br />

H311MHG 5675489<br />

H626RPG 5673455<br />

Block accesses = ⌈log₂ 385⌉ + 1 = 10, where the 1 is to read the data block<br />

(Better than a linear search on the data file, which needs 1500 accesses on average)<br />
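A dense secondary index can be mimicked in memory as a sorted list of (engine#, record position) pairs, searched with the standard `bisect` module. This is only a sketch: list positions stand in for the 6-byte pointers, the ten records are the sample rows from the figure, and the function name is invented.

```python
import bisect

# (reg#, engine#) pairs in data-file order, i.e. ordered by reg#.
data_file = [
    ("E246WFC", "7648378"), ("F651DEK", "3096275"), ("G123RMR", "7493874"),
    ("G551JBA", "2098377"), ("G889VDU", "6587969"), ("G994PBR", "5675789"),
    ("H203PBR", "4654786"), ("H266MHU", "6345234"), ("H311MHG", "5675489"),
    ("H626RPG", "5673455"),
]

# Dense index: one entry per record, kept sorted on the secondary key.
index = sorted((engine, pos) for pos, (_, engine) in enumerate(data_file))
index_keys = [engine for engine, _ in index]


def find_by_engine(engine_no):
    """Binary-search the index, then follow the pointer into the data file."""
    i = bisect.bisect_left(index_keys, engine_no)
    if i < len(index_keys) and index_keys[i] == engine_no:
        return data_file[index[i][1]]
    return None


car = find_by_engine("4654786")
```

The data file itself stays ordered by reg#; only the index is ordered by engine#, which is why every record needs its own index entry.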

Example 2: Non-dense Secondary Index<br />

Secondary Index File: 30 records, 26 bytes each (20-byte field value, 6-byte pointer); 1 block, 30 records in it<br />

Fiat<br />

Ford<br />

Renault<br />

Rover<br />

Vauxhall<br />

Each index entry points to a block of record pointers<br />

Main Data File: 30,000 records, 100 bytes each; 3000 blocks of 1024 bytes, 10 records each<br />

E246WFC 7648378 Fiat<br />

F651DEK 3096275 Rover<br />

G123RMR 7493874 Ford<br />

G551JBA 2098377 Rover<br />

G889VDU 6587969 Renault<br />

G994PBR 5675789 Vauxhall<br />

H203PBR 4654786 Rover<br />

H266MHU 6345234 Ford<br />

H311MHG 5675489 Renault<br />

H626RPG 5673455 Fiat<br />

Block accesses = ⌈log₂ 1⌉ + 1 + 2 = 3, where the extra accesses are to read block and pointer<br />

(Better than a linear search on the data file, which needs 1500 accesses on average)<br />
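The extra level of indirection used by a non-dense secondary index on a non-key field can be sketched with a dictionary of pointer lists. The sample rows are taken from the figure; representing pointers as list positions and the function name `find_by_make` are assumptions made for the example.

```python
from collections import defaultdict

# (reg#, engine#, make) rows in data-file order; make is not a key field.
data_file = [
    ("E246WFC", "7648378", "Fiat"),    ("F651DEK", "3096275", "Rover"),
    ("G123RMR", "7493874", "Ford"),    ("G551JBA", "2098377", "Rover"),
    ("G889VDU", "6587969", "Renault"), ("G994PBR", "5675789", "Vauxhall"),
    ("H203PBR", "4654786", "Rover"),   ("H266MHU", "6345234", "Ford"),
    ("H311MHG", "5675489", "Renault"), ("H626RPG", "5673455", "Fiat"),
]

# Level of indirection: one block of record pointers per distinct make.
pointer_blocks = defaultdict(list)
for pos, (_, _, make) in enumerate(data_file):
    pointer_blocks[make].append(pos)

# The index itself has a single entry per distinct field value.
index = sorted(pointer_blocks)


def find_by_make(make):
    """Index entry -> block of record pointers -> data records."""
    return [data_file[pos] for pos in pointer_blocks.get(make, [])]


rovers = find_by_make("Rover")
```

The index stays small because it grows with the number of distinct makes rather than with the number of records.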

Step 2.3 Choose Indexes<br />

• In most DBMSs an index can be created using the CREATE INDEX statement (it is not part of the ISO SQL standard, so the syntax varies between systems).<br />

• To create a primary index:<br />

CREATE UNIQUE INDEX indexname ON table(attribute);<br />

• To create a clustering index:<br />

CREATE INDEX indexname ON table(attribute) CLUSTER;<br />

• To create a secondary index:<br />

CREATE INDEX indexname ON table(attribute);<br />
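The statements above can be tried out with Python's built-in `sqlite3` module. This is a sketch against SQLite only: it has no CLUSTER option, so just the unique and secondary forms are shown, and the table layout and the index names `cars_reg_idx` and `cars_make_idx` are invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE Cars (reg TEXT, engine TEXT, make TEXT, model TEXT, price REAL);
CREATE UNIQUE INDEX cars_reg_idx ON Cars(reg);   -- unique index on the key
CREATE INDEX cars_make_idx ON Cars(make);        -- secondary index on a non-key attribute
""")
conn.execute("INSERT INTO Cars VALUES ('E246WFC', '7648378', 'Fiat', NULL, NULL)")

# The catalogue lists the indexes that now exist.
index_names = sorted(
    row[0] for row in conn.execute("SELECT name FROM sqlite_master WHERE type = 'index'")
)


def duplicate_reg_rejected():
    """A UNIQUE index also enforces uniqueness of the indexed attribute."""
    try:
        conn.execute("INSERT INTO Cars VALUES ('E246WFC', '0000000', 'Ford', NULL, NULL)")
    except sqlite3.IntegrityError:
        return True
    return False


# Ask the optimizer how it would run a query on the indexed attribute.
plan = conn.execute("EXPLAIN QUERY PLAN SELECT * FROM Cars WHERE make = 'Fiat'").fetchall()
```

Inspecting `plan` is a simple way to see whether the optimizer intends to use a given index; the exact wording of the plan differs between SQLite versions.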

Guidelines for selecting indexes: “wish-list”<br />

1. Do not index small relations (more efficient to search the<br />

relation in memory)<br />

2. Index the primary key of a relation if it is not a key of the file<br />

organisation<br />

3. Add a secondary index to a foreign key if it is frequently<br />

accessed<br />

4. Add a secondary index to any attribute that is heavily used as a<br />

secondary key<br />

5. Add a secondary index on attributes that are frequently involved<br />

in: selection (WHERE) or join criteria, ORDER BY, GROUP<br />

BY, sorting (e.g., DISTINCT…).<br />

6. Avoid indexing an attribute or relation that is frequently updated<br />

7. Avoid indexing attributes that consist of long character strings<br />

8. Avoid indexing an attribute if the query will retrieve a<br />

significant proportion (e.g., 25%) of the tuples in the relation<br />


Step 2.3 Choose Indexes<br />

• One approach is to keep tuples unordered and create as<br />

many secondary indexes as necessary.<br />

• Another approach is to order tuples in the relation by<br />

specifying a primary or clustering index.<br />

• In this case, choose the attribute for ordering or<br />

clustering the tuples as:<br />

– attribute that is used most often for join operations - this<br />

makes join operation more efficient, or<br />

– attribute that is used most often to access the tuples in a<br />

relation in order of that attribute.<br />

Removing indexes from the “wish-list”<br />

• Overhead involved in maintenance and use of secondary<br />

indexes:<br />

– adding an index record to every secondary index whenever tuple<br />

is inserted;<br />

– updating a secondary index when corresponding tuple is<br />

updated;<br />

– increase in disk space needed to store the secondary index;<br />

– possible performance degradation during query optimization to<br />

consider all secondary indexes.<br />

• It is a good idea to experiment to determine whether an index is improving performance, providing very little improvement, or adversely impacting performance.<br />

• Some DBMSs allow such experiments, e.g., MS Access<br />

has a Performance Analyser.<br />
