W10-09

W10-09

W10-09

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

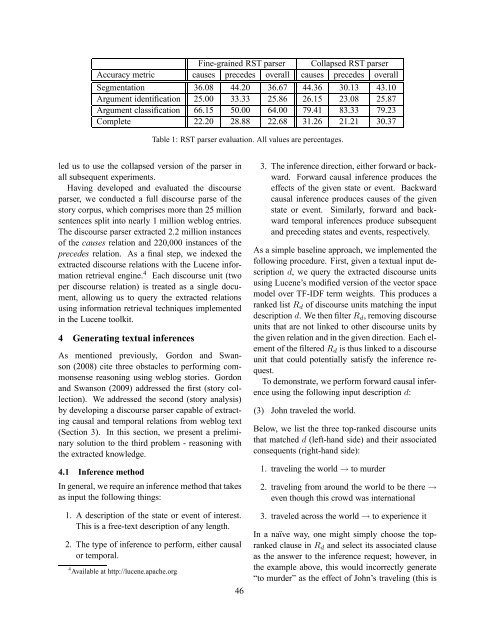

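| Accuracy metric | Fine-grained RST parser: causes | Fine-grained RST parser: precedes | Fine-grained RST parser: overall | Collapsed RST parser: causes | Collapsed RST parser: precedes | Collapsed RST parser: overall |
|---|---|---|---|---|---|---|
| Segmentation | 36.08 | 44.20 | 36.67 | 44.36 | 30.13 | 43.10 |
| Argument identification | 25.00 | 33.33 | 25.86 | 26.15 | 23.08 | 25.87 |
| Argument classification | 66.15 | 50.00 | 64.00 | 79.41 | 83.33 | 79.23 |
| Complete | 22.20 | 28.88 | 22.68 | 31.26 | 21.21 | 30.37 |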

led us to use the collapsed version of the parser in all subsequent experiments.

Having developed and evaluated the discourse parser, we conducted a full discourse parse of the story corpus, which comprises more than 25 million sentences split into nearly 1 million weblog entries. The discourse parser extracted 2.2 million instances of the causes relation and 220,000 instances of the precedes relation. As a final step, we indexed the extracted discourse relations with the Lucene information retrieval engine.<sup>4</sup> Each discourse unit (two per discourse relation) is treated as a single document, allowing us to query the extracted relations using information retrieval techniques implemented in the Lucene toolkit.
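The indexing scheme described above can be illustrated with a minimal sketch. It does not use Lucene; it is a toy in-memory inverted index written only to show the idea of storing each discourse unit as its own document while keeping a link to its partner. The class `DiscourseUnit`, its field names, and the function `build_index` are hypothetical and do not come from the paper.

```python
import re
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class DiscourseUnit:
    doc_id: int      # position of this unit in the index
    text: str        # clause text that gets indexed
    relation: str    # "causes" or "precedes"
    side: str        # "left" or "right" side of the relation
    partner_id: int  # doc_id of the other unit in the same relation


def build_index(relations):
    """Store two documents per relation and map each term to the units containing it."""
    units = []
    postings = defaultdict(set)
    for relation, left, right in relations:
        left_id = len(units)
        right_id = left_id + 1
        units.append(DiscourseUnit(left_id, left, relation, "left", right_id))
        units.append(DiscourseUnit(right_id, right, relation, "right", left_id))
    for unit in units:
        for term in re.findall(r"[a-z]+", unit.text.lower()):
            postings[term].add(unit.doc_id)
    return units, postings


# One relation taken from the example in Section 4.1.
units, postings = build_index(
    [("causes", "traveled across the world", "to experience it")]
)
hit = units[min(postings["world"])]   # unit retrieved by a term lookup
partner = units[hit.partner_id]       # the unit it is linked to
```

Because each unit carries its partner's identifier, a text match on one side of a relation leads directly to the clause on the other side.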

4 Generating textual inferences

As mentioned previously, Gordon and Swanson (2008) cite three obstacles to performing commonsense reasoning using weblog stories. Gordon and Swanson (2009) addressed the first (story collection). We addressed the second (story analysis) by developing a discourse parser capable of extracting causal and temporal relations from weblog text (Section 3). In this section, we present a preliminary solution to the third problem: reasoning with the extracted knowledge.

4.1 Inference method

In general, we require an inference method that takes as input the following things:

1. A description of the state or event of interest. This is a free-text description of any length.

2. The type of inference to perform, either causal or temporal.

3. The inference direction, either forward or backward. Forward causal inference produces the effects of the given state or event. Backward causal inference produces causes of the given state or event. Similarly, forward and backward temporal inferences produce subsequent and preceding states and events, respectively.

Table 1: RST parser evaluation. All values are percentages.

<sup>4</sup> Available at http://lucene.apache.org

As a simple baseline approach, we implemented the following procedure. First, given a textual input description d, we query the extracted discourse units using Lucene’s modified version of the vector space model over TF-IDF term weights. This produces a ranked list R<sub>d</sub> of discourse units matching the input description d. We then filter R<sub>d</sub>, removing discourse units that are not linked to other discourse units by the given relation and in the given direction. Each element of the filtered R<sub>d</sub> is thus linked to a discourse unit that could potentially satisfy the inference request. To demonstrate, we perform forward causal inference using the following input description d:

(3) John traveled the world.

Below, we list the three top-ranked discourse units that matched d (left-hand side) and their associated consequents (right-hand side):

1. traveling the world → to murder

2. traveling from around the world to be there → even though this crowd was international

3. traveled across the world → to experience it
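The baseline procedure (rank by similarity, then filter by relation and direction) can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: a plain TF-IDF cosine similarity stands in for Lucene's modified vector space scoring, so the ranking it produces may differ from the one listed above. The function names and the dictionary layout are hypothetical, and the toy data are the three pairs from the example.

```python
import math
import re
from collections import Counter

# Toy relations: (relation, left-hand unit, right-hand unit).
RELATIONS = [
    ("causes", "traveling the world", "to murder"),
    ("causes", "traveling from around the world to be there",
     "even though this crowd was international"),
    ("causes", "traveled across the world", "to experience it"),
]


def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())


def build_units(relations):
    """Store each discourse unit as its own document, linked to its partner."""
    units = []
    for relation, left, right in relations:
        i = len(units)
        units.append({"text": left, "relation": relation, "side": "left", "partner": i + 1})
        units.append({"text": right, "relation": relation, "side": "right", "partner": i})
    return units


def tfidf(tokens, idf):
    # Terms unseen in the index get weight 0 (assumption of this sketch).
    return {t: c * idf.get(t, 0.0) for t, c in Counter(tokens).items()}


def cosine(a, b):
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def infer(description, relation, direction, units):
    """Return (score, matched unit, linked unit) triples, best match first."""
    docs = [tokenize(u["text"]) for u in units]
    n = len(docs)
    df = Counter(t for d in docs for t in set(d))
    idf = {t: math.log(1 + n / count) for t, count in df.items()}
    query = tfidf(tokenize(description), idf)
    # Forward inference matches the left-hand unit and returns the right-hand
    # one; backward inference does the opposite.
    wanted_side = "left" if direction == "forward" else "right"
    ranked = []
    for unit, doc in zip(units, docs):
        score = cosine(query, tfidf(doc, idf))
        if score > 0 and unit["relation"] == relation and unit["side"] == wanted_side:
            ranked.append((score, unit["text"], units[unit["partner"]]["text"]))
    ranked.sort(key=lambda r: r[0], reverse=True)
    return ranked


UNITS = build_units(RELATIONS)
results = infer("John traveled the world.", "causes", "forward", UNITS)
for score, matched, linked in results:
    print(f"{score:.3f}  {matched} -> {linked}")
```

Filtering on the side of the relation is what encodes direction here: a forward request only accepts matches on the left-hand unit, so every returned triple carries a candidate effect or successor.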

In a naïve way, one might simply choose the top-ranked clause in R<sub>d</sub> and select its associated clause as the answer to the inference request; however, in the example above, this would incorrectly generate “to murder” as the effect of John’s traveling (this is