Anonymous (XXXX) Rubric scoring and item writing.pdf





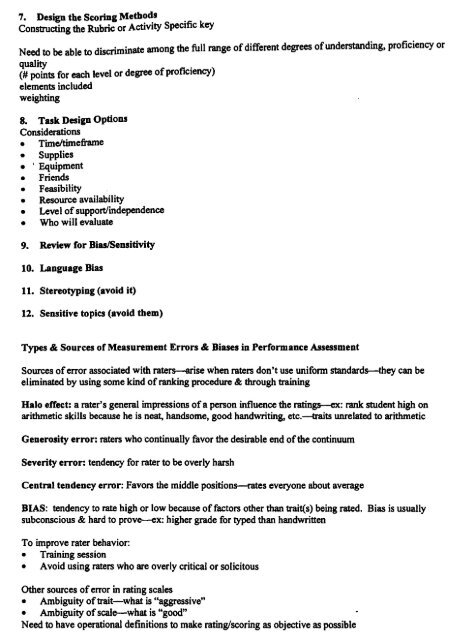

7. Design the Scoring Methods

Constructing the Rubric or Activity-Specific Key

The rubric needs to discriminate among the full range of degrees of understanding, proficiency, or quality. Specify:

• Number of points for each level or degree of proficiency

• Elements included

• Weighting
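Points per level, the elements included, and their weights combine into a single score. The following is a minimal Python sketch under assumed values: a hypothetical rubric with three elements ("content", "organization", "mechanics"), a 1 to 4 level scale, and integer weights chosen only for illustration (none of these come from the notes).

```python
# Hypothetical rubric (illustrative values, not from the source notes):
# element name -> weight. Integer weights keep the arithmetic exact.
WEIGHTS = {"content": 5, "organization": 3, "mechanics": 2}
MAX_LEVEL = 4  # assumed 4-level scale: 1 = beginning ... 4 = exemplary


def weighted_score(levels: dict[str, int]) -> float:
    """Return the weighted rubric score as a percentage of the maximum."""
    missing = WEIGHTS.keys() - levels.keys()
    if missing:
        raise ValueError(f"missing elements: {sorted(missing)}")
    for element, level in levels.items():
        if element not in WEIGHTS:
            raise ValueError(f"unknown element: {element}")
        if not 1 <= level <= MAX_LEVEL:
            raise ValueError(f"level out of range for {element}: {level}")
    # Each element contributes weight * level points.
    earned = sum(WEIGHTS[e] * levels[e] for e in WEIGHTS)
    possible = sum(WEIGHTS.values()) * MAX_LEVEL
    return 100 * earned / possible


print(weighted_score({"content": 4, "organization": 3, "mechanics": 2}))  # 82.5
```

With these weights, reaching the top level on content adds 20 points while the top level on mechanics adds only 8. This is how weighting makes the more important elements count for more in the total.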

8. Task Design Options

Considerations

• Time/timeframe

• Supplies

• Equipment

• Friends

• Feasibility

• Resource availability

• Level of support/independence

• Who will evaluate

9. Review for Bias/Sensitivity

• Language bias

• Stereotyping (avoid it)

• Sensitive topics (avoid them)

Types & Sources of Measurement Errors & Biases in Performance Assessment

Sources of error associated with raters: these arise when raters don't use uniform standards. They can be minimized by using some kind of ranking procedure and through training.

Halo effect: a rater's general impression of a person influences the ratings, e.g., rating a student high on arithmetic skills because he is neat, handsome, or has good handwriting, traits unrelated to arithmetic.
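One rough screen for a halo effect is to check whether a single rater's scores on two traits that should be unrelated rise and fall together across students. The following is a minimal Python sketch that assumes hypothetical ratings from one rater and an arbitrary flag threshold of 0.8, neither of which comes from the notes. A high correlation is only a warning sign, because the two traits may in fact be related.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation between two equally long lists of ratings."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equally long lists with at least 2 ratings")
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("a trait with no variation has no defined correlation")
    return sxy / sqrt(sxx * syy)


def possible_halo(trait_a: list[float], trait_b: list[float],
                  threshold: float = 0.8) -> bool:
    """Flag a possible halo effect (threshold is an assumed cut-off)."""
    return pearson(trait_a, trait_b) >= threshold


# Hypothetical ratings by ONE rater for the same five students.
arithmetic = [1, 2, 3, 4, 5]
neatness = [3, 1, 5, 2, 4]
print(round(pearson(arithmetic, neatness), 2))  # 0.3
print(possible_halo(arithmetic, neatness))      # False
```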

Generosity error: tendency of raters to consistently favor the desirable end of the continuum

Severity error: tendency of a rater to be overly harsh

Central tendency error: tendency to favor the middle positions, rating everyone about average
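When several raters score the same performances, these three errors can be screened by comparing each rater with the pooled ratings. A rater whose mean is well above the pooled mean may be showing generosity error, and one whose mean is well below it may be showing severity error. A rater whose spread is much narrower than the pooled spread may be showing central tendency error. The following is a minimal Python sketch. The thresholds (a 0.5-point mean gap and an SD ratio of 0.5) and the sample data are assumptions for illustration only. A flag marks a rater for review; it does not prove the error.

```python
from statistics import mean, pstdev


def screen_raters(ratings: dict[str, list[int]],
                  mean_gap: float = 0.5,
                  sd_ratio: float = 0.5) -> dict[str, list[str]]:
    """ratings maps rater -> scores on the SAME set of performances.

    mean_gap and sd_ratio are assumed, illustrative thresholds.
    """
    pooled = [s for scores in ratings.values() for s in scores]
    pooled_mean = mean(pooled)
    pooled_sd = pstdev(pooled)
    flags: dict[str, list[str]] = {}
    for rater, scores in ratings.items():
        found = []
        gap = mean(scores) - pooled_mean
        if gap >= mean_gap:
            found.append("possible generosity error")
        elif gap <= -mean_gap:
            found.append("possible severity error")
        # A much narrower spread than the group suggests everyone rated "average".
        if pooled_sd > 0 and pstdev(scores) <= sd_ratio * pooled_sd:
            found.append("possible central tendency error")
        flags[rater] = found
    return flags


# Hypothetical 1-5 ratings of the same five performances by four raters.
example = {
    "A": [2, 3, 4, 5, 1],
    "B": [4, 5, 5, 5, 3],
    "C": [3, 3, 3, 3, 3],
    "D": [1, 2, 2, 3, 1],
}
for rater, found in screen_raters(example).items():
    print(rater, found)
```

With this data, rater B is flagged for possible generosity error, C for possible central tendency error, and D for possible severity error. Rater A is not flagged.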

BIAS: tendency to rate high or low because of factors other than the trait(s) being rated. Bias is usually subconscious and hard to prove, e.g., giving a higher grade to typed work than to handwritten work.

To improve rater behavior:

• Hold a training session

• Avoid using raters who are overly critical or solicitous

Other sources of error in rating scales:

• Ambiguity of trait: what counts as "aggressive"?

• Ambiguity of scale: what counts as "good"?

Operational definitions are needed to make rating/scoring as objective as possible.