CUDA Accelerated Linpack on Clusters - Nvidia

CUDA Accelerated Linpack on Clusters - Nvidia

CUDA Accelerated Linpack on Clusters - Nvidia

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

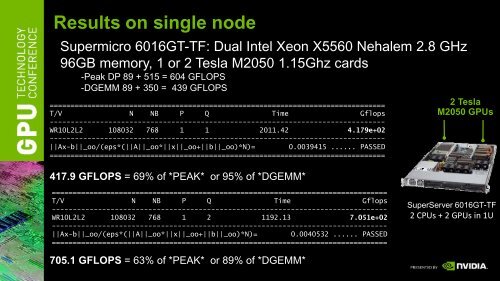

Results <strong>on</strong> single node<br />

Supermicro 6016GT-TF: Dual Intel Xe<strong>on</strong> X5560 Nehalem 2.8 GHz<br />

96GB memory, 1 or 2 Tesla M2050 1.15Ghz cards<br />

-Peak DP 89 + 515 = 604 GFLOPS<br />

-DGEMM 89 + 350 = 439 GFLOPS<br />

================================================================================<br />

T/V N NB P Q Time Gflops<br />

--------------------------------------------------------------------------------<br />

WR10L2L2 108032 768 1 1 2011.42 4.179e+02<br />

--------------------------------------------------------------------------------<br />

||Ax-b||_oo/(eps*(||A||_oo*||x||_oo+||b||_oo)*N)=<br />

0.0039415 ...... PASSED<br />

================================================================================<br />

417.9 GFLOPS = 69% of *PEAK* or 95% of *DGEMM*<br />

2 Tesla<br />

M2050 GPUs<br />

================================================================================<br />

T/V N NB P Q Time Gflops<br />

--------------------------------------------------------------------------------<br />

WR10L2L2 108032 768 1 2 1192.13 7.051e+02<br />

--------------------------------------------------------------------------------<br />

||Ax-b||_oo/(eps*(||A||_oo*||x||_oo+||b||_oo)*N)=<br />

0.0040532 ...... PASSED<br />

================================================================================<br />

SuperServer 6016GT-TF<br />

2 CPUs + 2 GPUs in 1U<br />

705.1 GFLOPS = 63% of *PEAK* or 89% of *DGEMM*