The Epoch-Greedy Algorithm for Contextual Multi-armed Bandits

The Epoch-Greedy Algorithm for Contextual Multi-armed Bandits

The Epoch-Greedy Algorithm for Contextual Multi-armed Bandits

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

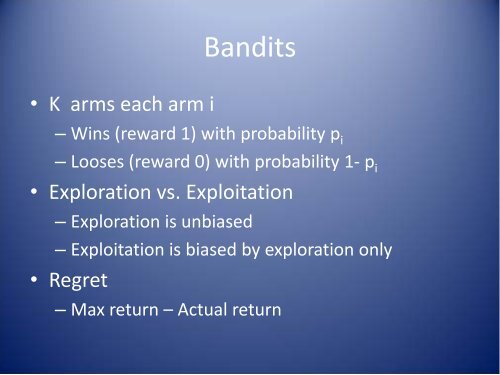

<strong>Bandits</strong><br />

• K arms each arm i<br />

– Wins (reward 1) with probability p i<br />

– Looses (reward 0) with probability 1- p i<br />

• Exploration vs. Exploitation<br />

– Exploration is unbiased<br />

– Exploitation is biased by exploration only<br />

• Regret<br />

– Max return – Actual return