Distributed Data Mining in Credit Card Fraud Detection

Distributed Data Mining in Credit Card Fraud Detection

Distributed Data Mining in Credit Card Fraud Detection

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

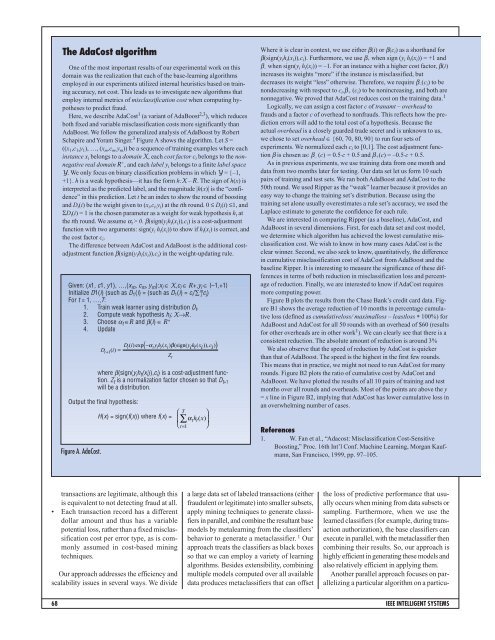

The AdaCost algorithmOne of the most important results of our experimental work on thisdoma<strong>in</strong> was the realization that each of the base-learn<strong>in</strong>g algorithmsemployed <strong>in</strong> our experiments utilized <strong>in</strong>ternal heuristics based on tra<strong>in</strong><strong>in</strong>gaccuracy, not cost. This leads us to <strong>in</strong>vestigate new algorithms thatemploy <strong>in</strong>ternal metrics of misclassification cost when comput<strong>in</strong>g hypothesesto predict fraud.Here, we describe AdaCost 1 (a variant of AdaBoost 2,3 ), which reducesboth fixed and variable misclassification costs more significantly thanAdaBoost. We follow the generalized analysis of AdaBoost by RobertSchapire and Yoram S<strong>in</strong>ger. 3 Figure A shows the algorithm. Let S =((x 1 ,c 1 ,y 1 ), …, (x m ,c m ,y m )) be a sequence of tra<strong>in</strong><strong>in</strong>g examples where each<strong>in</strong>stance x i belongs to a doma<strong>in</strong> X, each cost factor c i belongs to the nonnegativereal doma<strong>in</strong> R + , and each label y i belongs to a f<strong>in</strong>ite label spaceY. We only focus on b<strong>in</strong>ary classification problems <strong>in</strong> which Y = {–1,+1}. h is a weak hypothesis—it has the form h:X – R. The sign of h(x) is<strong>in</strong>terpreted as the predicted label, and the magnitude |h(x)| is the “confidence”<strong>in</strong> this prediction. Let t be an <strong>in</strong>dex to show the round of boost<strong>in</strong>gand D t (i) be the weight given to (x i ,c i ,y i ) at the tth round. 0 ≤ D t (i) ≤1, andΣD t (i) = 1 is the chosen parameter as a weight for weak hypothesis h t atthe tth round. We assume α t > 0. β(sign(y i h t (x i )),c i ) is a cost-adjustmentfunction with two arguments: sign(y i h t (x i )) to show if h t (x i ) is correct, andthe cost factor c i .The difference between AdaCost and AdaBoost is the additional costadjustmentfunction β(sign(y i h t (x i )),c i ) <strong>in</strong> the weight-updat<strong>in</strong>g rule.Given: (x1, c1, y1), …,(x m , c m , y m ):x i ∈ X,c i ∈ R+,y i ∈ {–1,+1}Initialize D1(i) (such as D 1 (i) = (such as D 1 (i) = c i /∑ m j c j )For t = 1, …,T:1. Tra<strong>in</strong> weak learner us<strong>in</strong>g distribution D t .2. Compute weak hypothesis h t : X→R.3. Choose α t ∈R and β(i) ∈ R +4. UpdateFigure A. AdaCost.Dt i − tyh i t xiy h x cDtii t i i+ 1 () ( ) exp α ( ) β( sign( ( )), )=Ztwhere β(sign(y i h t (x i )),c i ) is a cost-adjustment function.Z t is a normalization factor chosen so that D t+1will be a distribution.Output the f<strong>in</strong>al hypothesis:H(x) = sign(f(x)) where f(x) =( )⎛ T ⎞⎜∑α t h t ( x)⎟⎝ t=1 ⎠Where it is clear <strong>in</strong> context, we use either β(i) or β(c i ) as a shorthand forβ(sign(y i h t (x i )),c i ). Furthermore, we use β + when sign (y i h t (x i )) = +1 andβ – when sign(y i h t (x i )) = –1. For an <strong>in</strong>stance with a higher cost factor, β(i)<strong>in</strong>creases its weights “more” if the <strong>in</strong>stance is misclassified, butdecreases its weight “less” otherwise. Therefore, we require β – (c i ) to benondecreas<strong>in</strong>g with respect to c i ,β + (c i ) to be non<strong>in</strong>creas<strong>in</strong>g, and both arenonnegative. We proved that AdaCost reduces cost on the tra<strong>in</strong><strong>in</strong>g data. 1Logically, we can assign a cost factor c of tranamt – overhead tofrauds and a factor c of overhead to nonfrauds. This reflects how the predictionerrors will add to the total cost of a hypothesis. Because theactual overhead is a closely guarded trade secret and is unknown to us,we chose to set overhead ∈ {60, 70, 80, 90} to run four sets ofexperiments. We normalized each c i to [0,1]. The cost adjustment functionβ is chosen as: β – (c) = 0.5⋅c + 0.5 and β + (c) = –0.5⋅c + 0.5.As <strong>in</strong> previous experiments, we use tra<strong>in</strong><strong>in</strong>g data from one month anddata from two months later for test<strong>in</strong>g. Our data set let us form 10 suchpairs of tra<strong>in</strong><strong>in</strong>g and test sets. We ran both AdaBoost and AdaCost to the50th round. We used Ripper as the “weak” learner because it provides aneasy way to change the tra<strong>in</strong><strong>in</strong>g set’s distribution. Because us<strong>in</strong>g thetra<strong>in</strong><strong>in</strong>g set alone usually overestimates a rule set’s accuracy, we used theLaplace estimate to generate the confidence for each rule.We are <strong>in</strong>terested <strong>in</strong> compar<strong>in</strong>g Ripper (as a basel<strong>in</strong>e), AdaCost, andAdaBoost <strong>in</strong> several dimensions. First, for each data set and cost model,we determ<strong>in</strong>e which algorithm has achieved the lowest cumulative misclassificationcost. We wish to know <strong>in</strong> how many cases AdaCost is theclear w<strong>in</strong>ner. Second, we also seek to know, quantitatively, the difference<strong>in</strong> cumulative misclassification cost of AdaCost from AdaBoost and thebasel<strong>in</strong>e Ripper. It is <strong>in</strong>terest<strong>in</strong>g to measure the significance of these differences<strong>in</strong> terms of both reduction <strong>in</strong> misclassification loss and percentageof reduction. F<strong>in</strong>ally, we are <strong>in</strong>terested to know if AdaCost requiresmore comput<strong>in</strong>g power.Figure B plots the results from the Chase Bank’s credit card data. FigureB1 shows the average reduction of 10 months <strong>in</strong> percentage cumulativeloss (def<strong>in</strong>ed as cumulativeloss/ maximalloss – leastloss * 100%) forAdaBoost and AdaCost for all 50 rounds with an overhead of $60 (resultsfor other overheads are <strong>in</strong> other work 1 ). We can clearly see that there is aconsistent reduction. The absolute amount of reduction is around 3%We also observe that the speed of reduction by AdaCost is quickerthan that of AdaBoost. The speed is the highest <strong>in</strong> the first few rounds.This means that <strong>in</strong> practice, we might not need to run AdaCost for manyrounds. Figure B2 plots the ratio of cumulative cost by AdaCost andAdaBoost. We have plotted the results of all 10 pairs of tra<strong>in</strong><strong>in</strong>g and testmonths over all rounds and overheads. Most of the po<strong>in</strong>ts are above the y= x l<strong>in</strong>e <strong>in</strong> Figure B2, imply<strong>in</strong>g that AdaCost has lower cumulative loss <strong>in</strong>an overwhelm<strong>in</strong>g number of cases.References1. W. Fan et al., “Adacost: Misclassification Cost-SensitiveBoost<strong>in</strong>g,” Proc. 16th Int’l Conf. Mach<strong>in</strong>e Learn<strong>in</strong>g, Morgan Kaufmann,San Francisco, 1999, pp. 97–105.transactions are legitimate, although thisis equivalent to not detect<strong>in</strong>g fraud at all.• Each transaction record has a differentdollar amount and thus has a variablepotential loss, rather than a fixed misclassificationcost per error type, as is commonlyassumed <strong>in</strong> cost-based m<strong>in</strong><strong>in</strong>gtechniques.Our approach addresses the efficiency andscalability issues <strong>in</strong> several ways. We dividea large data set of labeled transactions (eitherfraudulent or legitimate) <strong>in</strong>to smaller subsets,apply m<strong>in</strong><strong>in</strong>g techniques to generate classifiers<strong>in</strong> parallel, and comb<strong>in</strong>e the resultant basemodels by metalearn<strong>in</strong>g from the classifiers’behavior to generate a metaclassifier. 1 Ourapproach treats the classifiers as black boxesso that we can employ a variety of learn<strong>in</strong>galgorithms. Besides extensibility, comb<strong>in</strong><strong>in</strong>gmultiple models computed over all availabledata produces metaclassifiers that can offsetthe loss of predictive performance that usuallyoccurs when m<strong>in</strong><strong>in</strong>g from data subsets orsampl<strong>in</strong>g. Furthermore, when we use thelearned classifiers (for example, dur<strong>in</strong>g transactionauthorization), the base classifiers canexecute <strong>in</strong> parallel, with the metaclassifier thencomb<strong>in</strong><strong>in</strong>g their results. So, our approach ishighly efficient <strong>in</strong> generat<strong>in</strong>g these models andalso relatively efficient <strong>in</strong> apply<strong>in</strong>g them.Another parallel approach focuses on paralleliz<strong>in</strong>ga particular algorithm on a particu-68 IEEE INTELLIGENT SYSTEMS

![{ public static void main (String[] args) { System.out.println (](https://img.yumpu.com/49719541/1/190x143/-public-static-void-main-string-args-systemoutprintln-hello-.jpg?quality=85)