Copyright © Congress of Neurological Surgeons. Unauthorized reproduction of this article is prohibited.

EXPERTISE IN NEUROSURGERY

A DEFINITION OF EXPERTISE?

Beginning in the late 19th century, surgeons were trained and assessed in a prolonged and organized fashion under the supervision of senior doctors [2]. This novice/expert apprenticeship program is the dominant method of training surgeons [3]. New technologies, society’s pressure, and a host of external constraints such as lawsuits and reduced residents’ working hours have resulted in a review of this model of teaching [2,4]. It is now becoming increasingly difficult to practice one’s surgical skills on patients without having reached a certain level of “expertise.” Most authors agree that new methods of training are required, but a fundamental (unanswered) question remains: Are the current training programs supposed to train surgeons to an “expert” or to only a competent level of performance?

In 1990, a report discussing the perception of expertise in medicine used indicators of expertise such as years of experience, specialty board certification, and/or academic rank or responsibility [5]. It is evident that these criteria are poorly correlated with superior clinical performance. An example of the failure of these indicators to capture expertise involved peer-nominated diagnostic experts who did not objectively perform better than novices on standard cases [6]. A systematic review that analyzed the number of years in practice along with clinical performance found a negative correlation, suggesting that more experience can be paradoxically associated with lower clinical performance [7]. These findings outline the lack of consensus on what constitutes an “expert” physician or surgeon and the difficult task of objectively evaluating performance.

A precise definition of expertise in neurosurgery is needed. The current literature predominantly focuses on the theme of surgical “competency” rather than surgical “expertise” [8]. This preference stems from the growing importance of competency-based assessment, which has become the favored method of evaluation by training programs. Definitions of surgical competence usually involve 2 aspects: the technical skills and the “other skills.” The latter skills have been outlined by 2 major groups in North America: the Royal College of Physicians and Surgeons of Canada and the Accreditation Council for Graduate Medical Education in the United States. These 2 organizations have highlighted aspects such as professionalism, communication skills, medical expertise, and collaboration skills as important “other skills” [9,10]. A recent and very comprehensive definition of competence states that surgical competence encompasses knowledge and technical and social skills to solve familiar and novel situations to provide adequate patient care [8]. This definition focuses, interestingly, on “adequate” rather than “excellent” patient care as a goal.

To evaluate competency, 2 general approaches can be used: the behaviorist approach, in which specific behaviors are rated, and the holistic approach, in which many aspects are combined and evaluated. Bhatti and Cummings [8] have concluded that the holistic approach is probably the best way to assess competency, but also the most difficult because of the lack of defined methodologies to measure “other skills.” This review concentrates on a behavioristic approach because technical skills are measurable behaviors.

Confusion is apparent when patients and doctors define surgical competency. For a researcher and a neurosurgical training program, competency is more than just passing an examination [8]. For the patient and family, competency is the complex set of “skills” that contribute to an excellent outcome. The definition of expert and expertise can vary from specialty to specialty and even within 1 surgical specialty. Is a consensus definition of expertise therefore possible and to what extent would such a definition affect the training of neurosurgeons and, ultimately, patient care?

With regard to patient care, the report “To Err Is Human” [11] concluded that as many as 98,000 deaths are caused by medical errors each year in US hospitals and that the majority of these errors were preventable. After the introduction of laparoscopic cholecystectomy, studies showed that surgeons who had performed fewer than 30 laparoscopic cholecystectomies had a fivefold increase in bile duct injury [12]. This prompted the general surgical community to reevaluate training methods and to develop box-trainer simulations and virtual reality (VR) simulators [2]. In most general surgery studies, technical competency was usually defined as the number of procedures performed. Many neurosurgical procedures are not as stereotypical and as easily classified as those of general surgery. In neurosurgery, there may be significant variability in the surgical approach used to deal with a specific operative lesion. This inherent variability may be the result of not having the necessary phase III data that can be used to evaluate the short- and long-term patient outcomes of different operative approaches. This leads to a major problem when one tries to define technical competency in a specific operative intervention. To remedy this problem, a group of authors state that clear benchmarks for every operation should be developed and made available to minimize complications and improve results [2]. This process is inherently difficult and needs to be implemented for all neurosurgical procedures. No clear operational definition of technical competency/expertise in neurosurgery can, at present, be outlined.

A major assumption in most studies regarding expertise in medicine is that novices will inevitably become experts with enough practice. Mylopoulos and Regehr [13] argue that routine expertise can be achieved this way, but a more accurate representation of expert performance probably relates to adaptive expertise.
Routine experts are akin to skilled technicians in their specific domain, but they lack the training to face novel problems. Adaptive experts stretch the boundaries of their own limits by using flexible and creative means to solve complex and unexpected situations. A qualitative study on this topic involving undergraduate medical students found that students believed that it was beyond the scope of their training to acquire innovative thought processes [14]. The reasons for these beliefs are unclear, but this issue needs to be addressed if adaptive expertise is to be a reasonable goal of neurosurgical training. Surgical expertise is best described as an adaptive expertise because, like

NEUROSURGERY | VOLUME 73 | NUMBER 4 | OCTOBER 2013 SUPPLEMENT | S31

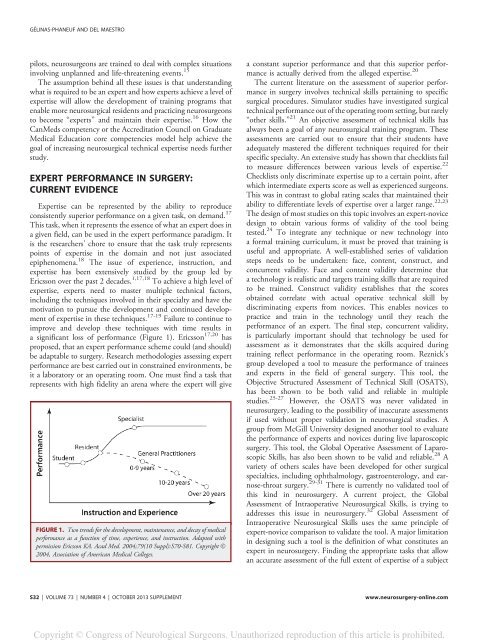

GÉLINAS-PHANEUF AND DEL MAESTRO

pilots, neurosurgeons are trained to deal with complex situations involving unplanned and life-threatening events [15].

The assumption behind all these issues is that understanding what is required to be an expert and how experts achieve a level of expertise will allow the development of training programs that enable more neurosurgical residents and practicing neurosurgeons to become “experts” and maintain their expertise [16]. How the CanMEDS or the Accreditation Council for Graduate Medical Education core competency models help achieve the goal of increasing neurosurgical technical expertise needs further study.

EXPERT PERFORMANCE IN SURGERY: CURRENT EVIDENCE

Expertise can be represented by the ability to reproduce consistently superior performance on a given task, on demand [17]. This task, when it represents the essence of what an expert does in a given field, can be used in the expert performance paradigm. It is the researchers’ responsibility to ensure that the task truly represents points of expertise in the domain and not just associated epiphenomena [18]. The issue of experience, instruction, and expertise has been extensively studied by the group led by Ericsson over the past 2 decades [1,17,18]. To achieve a high level of expertise, experts need to master multiple technical factors, including the techniques involved in their specialty, and have the motivation to pursue the initial and continued development of expertise in these techniques [17-19]. Failure to continue to improve and develop these techniques with time results in a significant loss of performance (Figure 1). Ericsson [17,20] has proposed that an expert performance scheme could (and should) be adaptable to surgery. Research methodologies assessing expert performance are best carried out in constrained environments, be it a laboratory or an operating room. One must find a task that represents with high fidelity an arena where the expert will give a constant superior performance, and must confirm that this superior performance is actually derived from the alleged expertise [20].

FIGURE 1. Two trends for the development, maintenance, and decay of medical performance as a function of time, experience, and instruction. Adapted with permission from Ericsson KA. Acad Med. 2004;79(10 Suppl):S70-S81. Copyright © 2004, Association of American Medical Colleges.

The current literature on the assessment of superior performance in surgery involves technical skills pertaining to specific surgical procedures. Simulator studies have investigated surgical technical performance out of the operating room setting, but rarely “other skills” [21]. An objective assessment of technical skills has always been a goal of any neurosurgical training program.
These assessments are carried out to ensure that trainees have adequately mastered the different techniques required for their specific specialty. An extensive study has shown that checklists fail to measure differences between various levels of expertise [22]. Checklists only discriminate expertise up to a certain point, after which intermediate experts score as well as experienced surgeons. This was in contrast to global rating scales, which maintained their ability to differentiate levels of expertise over a larger range [22,23]. Most studies on this topic use an expert-novice design to establish various forms of validity for the tool being tested [24]. To integrate any technique or new technology into a formal training curriculum, it must be shown that training with it is useful and appropriate. A well-established series of validation steps needs to be undertaken: face, content, construct, and concurrent validity. Face and content validity determine that a technology is realistic and targets the skills that need to be trained. Construct validity establishes that the scores obtained correlate with actual operative technical skill by discriminating experts from novices. This enables novices to practice and train with the technology until they reach the performance of an expert.
The final step, concurrent validity, is particularly important should the technology be used for assessment because it demonstrates that the skills acquired during training reflect performance in the operating room. Reznick’s group developed a tool to measure the performance of trainees and experts in the field of general surgery. This tool, the Objective Structured Assessment of Technical Skill (OSATS), has been shown to be both valid and reliable in multiple studies [25-27]. However, the OSATS has never been validated in neurosurgery, leading to the possibility of inaccurate assessments if it is used without proper validation in neurosurgical studies. A group from McGill University designed another tool to evaluate the performance of experts and novices during live laparoscopic surgery. This tool, the Global Operative Assessment of Laparoscopic Skills, has also been shown to be valid and reliable [28]. A variety of other scales have been developed for other surgical specialties, including ophthalmology, gastroenterology, and ear-nose-throat surgery [29-31]. There is currently no validated tool of this kind in neurosurgery. A current project, the Global Assessment of Intraoperative Neurosurgical Skills, is trying to address this issue [32]. The Global Assessment of Intraoperative Neurosurgical Skills uses the same principle of expert-novice comparison to validate the tool. A major limitation in designing such a tool is the definition of what constitutes an expert in neurosurgery.
Finding the appropriate tasks that allow an accurate assessment of the full extent of expertise of a subject

www.neurosurgery-online.com