
Proceedings of the 34th Chinese Control Conference

July 28-30, 2015, Hangzhou, China

A Novel Fast Haze Removal Technique for Single Image Using Image Pyramid

ZHAO Dong 1,2, BAI Yong-qiang 1,2

1. Department of Automation, Beijing Institute of Technology, Beijing 100081

2. Key Laboratory of Complex System Intelligent Control and Decision, Ministry of Education, Beijing 100081

E-mail: zhaodong biti@163.com

Abstract: Fast single image dehazing has been a challenging problem in many fields, such as computer vision and real-time applications. Recently, many dehazing algorithms have been proposed based on the dark channel prior (DCP). However, these algorithms aim to improve the refinement of the raw transmission map while ignoring the computational complexity of DCP itself. Therefore, this paper proposes a new technique for fast single image haze removal, which achieves a good tradeoff between dehazing performance and computational complexity. We first decompose the observed hazy image into a coarse image and a detail image using a Gaussian-Laplacian pyramid. Then, the coarse image is dehazed by the dark channel prior combined with a guided filter (GDCP). Since the coarse image has only 1/4 as many pixels as the original image, the computational complexity is reduced substantially. However, the recomposed image is blurred, since the detail image still contains haze. We therefore employ an unsharp filter to sharpen the blurred recomposed image. Experimental results show that the proposed technique effectively removes haze and, compared with the traditional GDCP algorithm, reduces the running time by 69.59% on average.

Key Words: single image dehazing, image restoration, dark channel prior, image pyramid, unsharp masking

1 Introduction

Images of outdoor scenes are usually degraded by bad weather conditions such as haze and fog. Because the suspended particles absorb and scatter light, distant objects and scenes become less visible. As a result, the captured images lose color fidelity and contrast. Removal of weather effects from images has attracted increasing attention, including the removal of haze [1–3, 5–7, 9, 10, 12, 13], fog [4], rain [18, 19], and snow [20]. Recently, single image dehazing algorithms have been developed to overcome the limitations of multiple-image dehazing approaches. These dehazing algorithms can be divided into two major categories: physically based and contrast-based algorithms [1].

Physically based algorithms recover hazy images based on an estimated transmission map. Fattal [2] decomposed the scene radiance of an image into the albedo and the shading, and then estimated the scene radiance by independent component analysis, assuming that the shading and the scene transmission are locally uncorrelated. He et al. [3] estimated object depths in a hazy image based on the dark channel prior (DCP); in their work, the depth estimate was finally refined by a computationally expensive alpha-matting strategy. The key idea of Nishino et al. [4] is to model the image with a factorial Markov random field in which the scene albedo and depth are two statistically independent latent layers, and to estimate them jointly.

Contrast-based algorithms, on the other hand, aim to restore<br />

the visibility of a hazy image by properly enhancing<br />

the contrast of an input degraded image. Tan[5] maximized<br />

the contrast of a hazy image, assuming that a haze-free image<br />

has a higher contrast ratio than the hazy image. Tarel<br />

and Hautiere[6] attempted to the atmospheric veil(the map<br />

of blended atmospheric light) through the median filter. Ancuti<br />

and Ancuti[7] introduced a fusion-based strategy that<br />

This work is supported by National Natural Science Foundation (NNS-<br />

F) of China under Grant 61304254.<br />

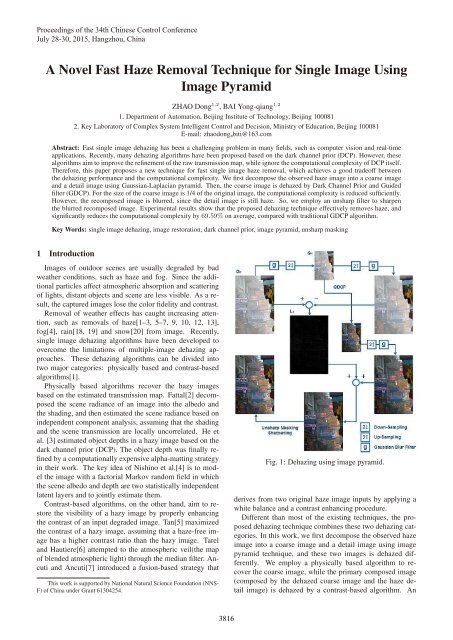

Fig. 1: Dehazing using image pyramid.<br />

derives from two original haze image inputs by applying a<br />

white balance and a contrast enhancing procedure.<br />

Unlike most existing techniques, the proposed dehazing technique combines these two categories. In this work, we first decompose the observed hazy image into a coarse image and a detail image using the image pyramid technique, and the two images are dehazed differently. We employ a physically based algorithm to recover the coarse image, while the primary recomposed image (composed of the dehazed coarse image and the hazy detail image) is dehazed by a contrast-based algorithm. An overview diagram of the proposed approach is illustrated in Fig. 1. Here, we choose an improved dark channel prior method called GDCP and an unsharp filter as the physically based and contrast-based algorithms, respectively. One advantage of the proposed technique is its speed: since the coarse image has only 1/4 as many pixels as the original captured image, the computational complexity is reduced significantly. Another advantage is that, because it employs both physically based and contrast-based algorithms, the proposed technique can recover a high-quality, high-contrast image.

The remainder of this paper is organized as follows. Section 2 briefly reviews the related techniques and presents our observations. Section 3 introduces our fast haze removal technique for single images in detail. Section 4 reports and discusses the experimental results. Finally, Section 5 concludes the paper.

2 Related Work and Observations

The dark channel prior proposed in [3] can directly estimate the thickness of the haze. The proposed fast haze removal approach is therefore built on the dark channel prior in order to recover a high-quality haze-free image, and it employs additional techniques to improve the computational speed. In this section, we first review the haze image model and then describe dehazing with the dark channel prior in detail.

2.1 Haze Image Model

Based on atmospheric optics and considering only single scattering [8], the hazy image formation model can be represented [2, 3, 9] as follows:

$$I(x) = J(x)\,t(x) + A\,\bigl(1 - t(x)\bigr) \tag{1}$$

where I(x) is the observed hazy image at pixel x, J(x) denotes the haze-free image, A is the global atmospheric light, and t(x) ∈ [0, 1] is the medium transmission, describing the portion of the light that is not scattered and reaches the camera. When the atmosphere is homogeneous, the transmission t(x) can be expressed as:

$$t(x) = e^{-\beta d(x)} \tag{2}$$

where d(x) is the scene depth and β is the attenuation coefficient due to scattering in the medium. This equation indicates that the scene radiance is attenuated exponentially with depth. Haze removal therefore amounts to restoring J by estimating A and t at each pixel x.
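The following Python sketch, which is not part of the original method description, applies equations (1) and (2) to a single RGB pixel and then inverts equation (1) using the true t and A. The haze-free pixel J, the depth and β are hypothetical illustration values; A is taken from the G0 column of Table 2 for Fig. 1. The inversion divides by t, so the sketch assumes t > 0.

```python
import math

def transmission(depth, beta):
    """Eq. (2): t(x) = exp(-beta * d(x)) for a homogeneous atmosphere."""
    return math.exp(-beta * depth)

def add_haze(J, t, A):
    """Eq. (1), per color channel: I = J * t + A * (1 - t)."""
    return [j * t + a * (1.0 - t) for j, a in zip(J, A)]

def remove_haze(I, t, A):
    """Solve Eq. (1) for J when t (> 0) and A are known: J = (I - A) / t + A."""
    return [(i - a) / t + a for i, a in zip(I, A)]

# Hypothetical scene point; A from Table 2 (Fig. 1, G0 column).
A = [0.808, 0.830, 0.841]
J = [0.20, 0.50, 0.30]
t = transmission(depth=2.0, beta=0.5)   # exp(-1), roughly 0.37
I = add_haze(J, t, A)                   # the observed pixel is pulled toward A
print([round(v, 3) for v in I])
print([round(v, 3) for v in remove_haze(I, t, A)])  # recovers J
```

In real dehazing, neither t nor A is known, which is why both must be estimated, for example with the dark channel prior described next.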

2.2 Dehazing Using the Dark Channel Prior

In order to estimate A and t, He et al. [3] proposed the dark channel prior, based on the observation that in most local patches of a haze-free image (excluding the sky), at least one color channel (R, G, or B) has values close to zero. That is, for an arbitrary image J, its dark channel $J^{dark}$ can be formally defined as [3]:

$$J^{dark}(x) = \min_{y \in \Omega(x)} \Bigl[ \min_{c \in \{R,G,B\}} J^{c}(y) \Bigr] \tag{3}$$

where c denotes one of the three color channels in the RGB color space, $J^{c}$ is a color channel of J, Ω(x) is a local patch centered at x, and y denotes the index of a pixel in Ω(x). This statistical observation is called the dark channel prior (DCP).

Fig. 2: Transmission maps and recovered images. (a)-(c) are the transmission maps estimated using DCP (patch size 15 × 15), GDCP, and GDCP performed on G1 (the first level of the Gaussian pyramid). (d)-(f) are the images recovered using (a)-(c), respectively.

The color of the sky in a hazy image I is usually very similar to the global atmospheric light, so the brightest pixels in the veiling luminance are considered to be the atmospheric light. To estimate the atmospheric light, the authors of [3] pick the top 0.1% brightest pixels in the dark channel; then, among these pixels, the pixel with the highest intensity in the input image is selected as the global atmospheric light A. After estimating A, the raw transmission map t can be derived from the haze imaging model in equation (1) and the dark channel prior as:

$$t(x) = 1 - w \min_{y \in \Omega(x)} \Bigl[ \min_{c} \frac{I^{c}(y)}{A^{c}} \Bigr] \tag{4}$$

where w is a constant parameter used to keep a very small amount of haze for distant objects, 0
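A minimal pure-Python sketch of equations (3) and (4) and of the atmospheric light rule of [3] described above. Several details are assumptions of the sketch rather than statements of the paper: images are H × W lists of (R, G, B) tuples in [0, 1], patches are clipped at the image borders, "highest intensity" is taken as the largest R + G + B, and w defaults to 0.95 purely as an illustrative value.

```python
def dark_channel(img, patch=15):
    """Eq. (3): patch minimum of the per-pixel minimum over R, G, B.

    img is an H x W list of rows of (R, G, B) tuples; the square patch
    Omega(x) is clipped at the borders (a border-handling assumption).
    """
    h, w, r = len(img), len(img[0]), patch // 2
    min_rgb = [[min(px) for px in row] for row in img]  # inner min over c
    # Outer min over Omega(x): a square min filter is separable (rows, then columns).
    rows = [[min(row[max(0, x - r):x + r + 1]) for x in range(w)] for row in min_rgb]
    return [[min(rows[yy][x] for yy in range(max(0, y - r), min(h, y + r + 1)))
             for x in range(w)] for y in range(h)]


def atmospheric_light(img, dark, top_fraction=0.001):
    """Rule of [3]: among the top 0.1% dark-channel pixels, return the input
    pixel with the highest intensity (here assumed to mean R + G + B)."""
    h, w = len(img), len(img[0])
    count = max(1, round(top_fraction * h * w))
    coords = sorted(((y, x) for y in range(h) for x in range(w)),
                    key=lambda p: dark[p[0]][p[1]], reverse=True)[:count]
    y, x = max(coords, key=lambda p: sum(img[p[0]][p[1]]))
    return list(img[y][x])


def raw_transmission(img, A, w=0.95, patch=15):
    """Eq. (4): t(x) = 1 - w * min over Omega(x) of min_c I^c(y) / A^c."""
    normalized = [[tuple(v / a for v, a in zip(px, A)) for px in row] for row in img]
    return [[1.0 - w * d for d in row] for row in dark_channel(normalized, patch)]


# Toy usage: a dim 6 x 6 image with a bright, sky-like 3 x 3 block.
img = [[(0.3, 0.4, 0.5)] * 6 for _ in range(6)]
for y in range(1, 4):
    for x in range(1, 4):
        img[y][x] = (0.9, 0.92, 0.95)
dark = dark_channel(img, patch=3)
A = atmospheric_light(img, dark)
t = raw_transmission(img, A, patch=3)
```

Even this naive version makes the cost visible: every output pixel scans its whole patch, which is the step whose runtime the paper singles out.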

2.3 Observations

Although the dark channel prior (DCP) can directly estimate the thickness of the haze and recover a high-quality haze-free image, it has some limitations when dealing with natural images. The main defect of DCP addressed in this paper is its computational complexity. As discussed above, since the transmission is not always constant within a patch, the raw transmission map needs to be refined by a soft matting method. However, this matting method is computationally expensive. Therefore, many improvements [10–13] to DCP-based dehazing have been proposed in recent years. These works focus on simplifying the refinement of the raw transmission map; however, they ignore the cost of computing the dark channel itself.

We examined an improved dehazing algorithm based on the dark channel prior, in which the raw transmission map is refined by a guided image filter [14] (in this paper, we call this algorithm GDCP). Note that in our observations of GDCP, the guidance image of the guided filter is the grayscale version of the input hazy image. Compared with results guided by the color hazy image, this simplified guided filter may lose some edge information, but its result is effective enough for our application and it is faster to compute.
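The GDCP refinement step can be sketched with the guided filter of He et al. [14], using the grayscale hazy image as guidance as described above. This is an unoptimised pure-Python version written for illustration; the radius r, the regulariser eps and the BT.601 luma weights in to_gray are assumptions, not values taken from this paper.

```python
def to_gray(img):
    """Grayscale guidance from RGB tuples (BT.601 luma weights, an assumption)."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for r, g, b in row] for row in img]

def box_mean(img, r):
    """Mean over a (2r+1) x (2r+1) window, clipped at the image borders."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        ys = range(max(0, y - r), min(h, y + r + 1))
        row = []
        for x in range(w):
            xs = range(max(0, x - r), min(w, x + r + 1))
            vals = [img[yy][xx] for yy in ys for xx in xs]
            row.append(sum(vals) / len(vals))
        out.append(row)
    return out

def _pixelwise(f, *imgs):
    """Apply f to corresponding pixels of equally sized 2-D lists."""
    return [[f(*px) for px in zip(*rows)] for rows in zip(*imgs)]

def guided_filter(guide, src, r=8, eps=1e-3):
    """Filter src (e.g. a raw transmission map) using a grayscale guide image."""
    mean_i, mean_p = box_mean(guide, r), box_mean(src, r)
    corr_ip = box_mean(_pixelwise(lambda i, p: i * p, guide, src), r)
    corr_ii = box_mean(_pixelwise(lambda i: i * i, guide), r)
    # Local linear model q = a * I + b in every window (eps regularises a).
    a = _pixelwise(lambda cip, cii, mi, mp: (cip - mi * mp) / (cii - mi * mi + eps),
                   corr_ip, corr_ii, mean_i, mean_p)
    b = _pixelwise(lambda mp, ai, mi: mp - ai * mi, mean_p, a, mean_i)
    # Average the coefficients of all windows that cover each pixel.
    mean_a, mean_b = box_mean(a, r), box_mean(b, r)
    return _pixelwise(lambda ma, mb, i: ma * i + mb, mean_a, mean_b, guide)
```

For example, given an RGB image `img` and its raw transmission map `t_raw` (hypothetical names), the refined map would be `guided_filter(to_gray(img), t_raw)`. Using one gray channel as guidance keeps every statistic scalar, which is the simplification the text trades against some lost edge information.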

Table 1 shows our observations on the computational time of the guided filter and the dark channel. We find that 93.4% to 95.8% of the runtime is spent computing the dark channel. This indicates that, although GDCP effectively reduces the cost of refining the transmission map, reducing the computational complexity of DCP itself becomes a prominent issue.

Table 1: Computational Time

| Images | Total time (s) | Guided filtering (s) | DCP (s) | DCP / total time (%) |
|---|---|---|---|---|
| Fig. 1 | 9.538 | 0.079 | 9.136 | 95.785 |
| Fig. 2 | 11.004 | 0.091 | 10.534 | 95.729 |
| Fig. 5 | 8.933 | 0.089 | 8.386 | 93.877 |
| Fig. 6(a) | 13.519 | 0.113 | 12.884 | 95.303 |
| Fig. 7(b) | 11.247 | 0.092 | 10.507 | 93.420 |
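As an arithmetic check on Table 1, the short Python snippet below recomputes, from the table's own values, the share of the total GDCP runtime spent on the dark channel for each image, and averages the shares.

```python
# (image, total time in s, guided filtering in s, DCP in s), copied from Table 1.
TABLE_1 = [
    ("Fig. 1", 9.538, 0.079, 9.136),
    ("Fig. 2", 11.004, 0.091, 10.534),
    ("Fig. 5", 8.933, 0.089, 8.386),
    ("Fig. 6(a)", 13.519, 0.113, 12.884),
    ("Fig. 7(b)", 11.247, 0.092, 10.507),
]

def dcp_share(total, dcp):
    """Percentage of the total runtime spent computing the dark channel."""
    return 100.0 * dcp / total

shares = {name: dcp_share(total, dcp) for name, total, _, dcp in TABLE_1}
mean_share = sum(shares.values()) / len(shares)
for name, share in shares.items():
    print(f"{name}: {share:.3f}%")
print(f"mean: {mean_share:.1f}%")
```

The per-image shares match the last column and range from about 93.4% to 95.8%, with a mean of roughly 94.8%.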

The other defect is that the image contrast becomes lower after applying the DCP method [10]. Considering the second term of equation (1), 1 − t(x) represents the density of the haze. When foreground radiances of different intensities pass through haze of the same thickness (i.e., within the same patch of the dark channel), they undergo the same degree of attenuation. Thus, the haze-free image J recovered by DCP has low contrast (see Fig. 2(e)).
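A one-line consequence of equation (1), added here only to make the shared-attenuation argument concrete: if two pixels x1 and x2 lie in the same patch and share the transmission t, subtracting their instances of equation (1) cancels the airlight term A(1 − t),

$$I(x_1) - I(x_2) = t\,\bigl[J(x_1) - J(x_2)\bigr],$$

so within a patch the haze scales every intensity difference (the local contrast) by the same factor t ≤ 1.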

3 Proposed Algorithm

In this work, we assume that an arbitrary observed image is composed of a coarse component and a detail component. The detail component generally exhibits higher gradients in the observed image. We therefore also assume that, in a hazy image, haze should be removed more strongly from the coarse component than from the detail component; that is, the proposed algorithm applies different haze removal operators to the coarse and detail components. Based on these assumptions, and on our observation in subsection 2.3 that the contrast of images recovered by DCP becomes lower, it is reasonable to decompose a hazy image into coarse and detail images before haze removal. In this way, the detail information can be preserved and the two components can be dehazed differently.

Fig. 3: Decomposition using the Gaussian-Laplacian pyramid.

In practice, an image can be decomposed into a coarse image and a detail image with the image pyramid technique. Here, we choose the Gaussian-Laplacian pyramid proposed by Burt and Adelson [15], which is an efficient non-orthogonal pyramid representation of images. The coarse image is thus the first level of the Gaussian pyramid, G1, and the detail image is the zeroth level of the Laplacian pyramid, L0. We employ the GDCP algorithm to remove haze from the coarse image, while the haze in the detail image is removed by an unsharp masking technique applied to the primary recomposed image.

3.1 Haze Removal for the Coarse Image

As discussed above, we first decompose the hazy image into a coarse image and a detail image using the Gaussian-Laplacian pyramid. The first problem is to determine the number of pyramid levels N (N = 0, 1, 2, ...). Note that only the highest-frequency information exhibits higher contrast in hazy areas, and the more levels are chosen, the more complex the algorithm becomes. Therefore, N = 1 is the more reasonable choice.

The decomposition of the observed hazy image is illustrated in Fig. 3. In the proposed strategy, the observed hazy image (G0) is first decomposed by the Gaussian-Laplacian pyramid into a coarse image (G1, the first level of the Gaussian pyramid) and a detail image (L0, the zeroth level of the Laplacian pyramid). Here, the original image G0 is first blurred by a Gaussian filter, and the coarse image G1 is then obtained by down-sampling this blurred image. The detail image L0 is the difference between blurred G0 and the prediction of G0, which is obtained by up-sampling G1 and applying Gaussian blur filtering.
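The one-level decomposition and its inverse can be sketched in pure Python as follows. The sketch follows the standard Burt-Adelson construction [15], in which L0 = G0 − EXPAND(G1), so that G0 is recovered exactly as EXPAND(G1) + L0. The 5-tap binomial kernel (a common approximation of a Gaussian), replicated borders and zero-insertion up-sampling are assumptions rather than details given in the text.

```python
KERNEL = [1 / 16, 4 / 16, 6 / 16, 4 / 16, 1 / 16]  # 5-tap binomial, roughly Gaussian

def blur(img, gain=1.0):
    """Separable 5 x 5 blur of a 2-D list, replicated borders; gain scales each pass."""
    h, w = len(img), len(img[0])
    taps = list(zip(range(-2, 3), KERNEL))
    horiz = [[gain * sum(k * row[min(max(x + d, 0), w - 1)] for d, k in taps)
              for x in range(w)] for row in img]
    return [[gain * sum(k * horiz[min(max(y + d, 0), h - 1)][x] for d, k in taps)
             for x in range(w)] for y in range(h)]

def reduce_level(img):
    """G1: blur, then keep every second row and column (about 1/4 of the pixels)."""
    return [row[::2] for row in blur(img)[::2]]

def expand_level(img, h, w):
    """Prediction of the finer level: insert zeros, then blur with gain 2 per axis."""
    up = [[0.0] * w for _ in range(h)]
    for y in range(0, h, 2):
        for x in range(0, w, 2):
            up[y][x] = img[y // 2][x // 2]
    return blur(up, gain=2.0)

def decompose(g0):
    """Return the coarse image G1 and the detail image L0 = G0 - EXPAND(G1)."""
    g1 = reduce_level(g0)
    pred = expand_level(g1, len(g0), len(g0[0]))
    l0 = [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(g0, pred)]
    return g1, l0

def recompose(g1, l0):
    """Inverse of decompose: G0 = EXPAND(G1) + L0 (G1 may have been dehazed)."""
    pred = expand_level(g1, len(l0), len(l0[0]))
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(pred, l0)]
```

In the proposed pipeline, GDCP would be applied to `g1` before `recompose` is called, which is why the recomposed image still carries the hazy detail layer `l0`.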

After this decomposition, we perform the GDCP haze removal algorithm on the coarse image G1. Note that the coarse image has only 1/4 as many pixels as the original image, so the dehazing runtime is greatly reduced.

However, because the dark channel prior is a statistical prior, the pixels from which it is computed have a strong effect on the estimation accuracy. That is, performing GDCP at the original size and at the down-sampled size gives different results, mainly in the estimation of the atmospheric light A. From Table 2 (the G0 and G1 columns are the results estimated from the original image G0 and the coarse image G1, respectively), we find that the value estimated from G1 is generally lower than that estimated from G0, which is caused by the Gaussian blurring applied when the original image is decomposed. Therefore, the estimation of the atmospheric light needs to be improved.

3.2 Estimating Atmospheric Light A

In subsection 2.2, we introduced the estimation method proposed in [3]. If we pick the top 0.1% brightest pixels from the dark channel of the coarse image G1, the atmospheric light would be estimated from the pixels in G1 with the highest intensity. However, since G1 is down-sampled from the Gaussian-blurred original image, the highest intensity selected in the coarse image G1 is not equal to the intensity at the corresponding coordinates in the original image G0. Therefore, instead of using G1, we select the corresponding pixel with the highest intensity in the original image G0 as the atmospheric light.

For example, suppose the top 0.1% brightest pixels selected in the dark channel of the coarse image G1 are $p^{dark}_{g_1}(i, j)$, where (i, j) are the positions of the selected pixels. Then, among these pixels, we select the pixel $p_{g_0}(2i, 2j)$ with the highest intensity in the input hazy image G0 as the atmospheric light. We find that this improvement gives more accurate estimates; see Tables 2 and 3.
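A sketch of the modified selection rule, assuming (as in a pyramid where G1 keeps every second row and column) that pixel (i, j) of G1 corresponds to pixel (2i, 2j) of G0. The dark channel of G1 is taken as an input, "highest intensity" is again interpreted as the largest R + G + B, and the function name is hypothetical.

```python
def estimate_A_from_pyramid(g0, dark_g1, top_fraction=0.001):
    """Section 3.2 sketch: rank G1 pixels by their dark channel, keep the top 0.1%,
    map each (i, j) to (2i, 2j) in G0 and return the brightest such G0 pixel."""
    h1, w1 = len(dark_g1), len(dark_g1[0])
    count = max(1, round(top_fraction * h1 * w1))
    ranked = sorted(((i, j) for i in range(h1) for j in range(w1)),
                    key=lambda p: dark_g1[p[0]][p[1]], reverse=True)[:count]
    # Compare intensities in the original (unblurred) image G0, not in G1.
    i, j = max(ranked, key=lambda p: sum(g0[2 * p[0]][2 * p[1]]))
    return list(g0[2 * i][2 * j])

# Toy usage: 4 x 4 original image, 2 x 2 coarse dark channel (hypothetical values).
g0 = [[(0.4, 0.4, 0.4)] * 4 for _ in range(4)]
g0[0][2] = (0.5, 0.5, 0.5)
g0[2][2] = (0.80, 0.82, 0.84)
dark_g1 = [[0.1, 0.8], [0.2, 0.7]]
A = estimate_A_from_pyramid(g0, dark_g1, top_fraction=0.5)  # keeps the top 2 of 4
```

The dark channel, and hence the candidate ranking, still comes from the small image G1; only the final intensity comparison touches G0, so the extra cost is one lookup per candidate.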

Fig. 4: An example of the unsharp filter (panels (a)-(c)).

Table 2: The Estimation of A (I)

| Images | A estimated from G0 | A estimated from G1 |
|---|---|---|
| Fig. 1 | [0.808, 0.830, 0.841] | [0.793, 0.807, 0.808] |
| Fig. 2 | [0.694, 0.713, 0.716] | [0.678, 0.648, 0.641] |
| Fig. 5 | [0.836, 0.836, 0.844] | [0.837, 0.836, 0.844] |
| Fig. 6(a) | [0.773, 0.769, 0.772] | [0.773, 0.772, 0.773] |
| Fig. 7(b) | [0.836, 0.836, 0.844] | [0.778, 0.766, 0.780] |

Table 3: The Estimation of A (II)

| Images | Improved estimation |
|---|---|
| Fig. 1 | [0.801, 0.822, 0.825] |
| Fig. 2 | [0.703, 0.694, 0.699] |
| Fig. 5 | [0.836, 0.832, 0.843] |
| Fig. 6(a) | [0.774, 0.770, 0.769] |
| Fig. 7(b) | [0.812, 0.813, 0.849] |

3.3 Haze Removal for the Detail Image

The primary dehazed image is recomposed from the dehazed coarse image and the detail image. However, this recomposed image is blurred. To address this issue, we tested several more recent edge-preserving techniques (e.g., Self-Adapted Sharpening [16] and WLS [17]), but did not obtain significant improvement. The reason is that the blurring is caused by the detail component, which still contains haze while the coarse component has been dehazed; that is, the haze in the primary recomposed image is uneven. Thus, the uneven haze in the detail component should first be 'dispersed' by a suitable technique, and then the image sharpness should be enhanced. Through these operations, the slight haze is distributed across the whole image and the edges are sharpened.

To achieve these two operations, we employ an unsharp filter and apply it to the L* channel of the Lab color space of the primary recomposed image. This unsharp masking process is equivalent to first dispersing the haze at detail edges over the whole image and then enhancing the sharpness.

Fig. 5: Detail image haze removal using the unsharp sharpening filter. In the left column, the upper image is the primary recomposed image, and the lower image is the result obtained by unsharp sharpening of the upper one. The images in the right column are close-ups of the regions marked by yellow rectangles in the left column.

Let $F^{sharp}_{n\times n}$ denote the sharpening filter. Our sharpening filter is constructed as a linear combination of a high-pass filter and a Gaussian blur filter:

$$F^{sharp}_{n\times n} = \mu F^{blur}_{n\times n} + \nu F^{high}_{n\times n} \tag{6}$$

where $F^{blur}_{n\times n}$ represents an n × n Gaussian blur filter (with n = 5 and δ = 1 in this paper), and $F^{high}_{n\times n}$ represents an n × n filter whose center value is 1 and whose other values are 0 (an impulse, so that $F^{high}_{n\times n} - F^{blur}_{n\times n}$ acts as a high-pass filter). In effect, $F^{blur}_{n\times n}$ blurs the primary recomposed image, so that the haze in the detail image is distributed across the whole image.

To express the sharpening filter as an unsharp filter, we set μ = −α and ν = α + β, so that equation (6) becomes:

$$F^{sharp}_{n\times n} = \alpha\bigl(F^{high}_{n\times n} - F^{blur}_{n\times n}\bigr) + \beta F^{high}_{n\times n} \tag{7}$$

where $F^{sharp}_{n\times n}$ is an unsharp filter. Parameter α specifies the linear strength of the sharpening effect: a higher α leads to a larger increase in the contrast of the sharpened pixels. Parameter β adjusts the center value of $F^{sharp}_{n\times n}$, which affects the overall L* channel. Normally, α = 0.9 and β = 1.

Fig. 6: Comparisons of recovered images containing sky (panels (a) and (b)). The first row shows the input hazy images, the second row shows the results of GDCP performed on the original images, and the last row shows our results.

Fig. 7: Comparisons of recovered images without sky regions (panels (a) and (b)). The first row shows the input hazy images, the second row shows the results of GDCP performed on the original images, and the last row shows our results.

Fig. 4 illustrates the unsharp filter in detail. In this figure, (a)-(c) show the local L* values of the primary recomposed image, the Gaussian-blurred image, and the sharpened image, respectively. The values marked by the red and yellow boxes are the maximum and minimum values of each local L* patch. We find that as the Gaussian blur filter slides over the L* channel, the values tend to flatten, which means that the haze in the detail image is dispersed, while the unsharp filter increases the contrast of the L* channel. Fig. 5 shows the dehazing result obtained with the unsharp filter in detail.
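Equation (7) and its application to the L* channel can be sketched as follows, using the stated n = 5, δ = 1 (treated here as the Gaussian standard deviation), α = 0.9 and β = 1. The Lab conversion is left out (the L* channel is assumed to be given as a 2-D list), border pixels are replicated, and no clipping to the valid L* range is applied; these are assumptions of the sketch.

```python
import math

def gaussian_kernel(n=5, sigma=1.0):
    """n x n Gaussian blur kernel F^blur, normalised to sum to 1."""
    c = n // 2
    k = [[math.exp(-((x - c) ** 2 + (y - c) ** 2) / (2 * sigma ** 2)) for x in range(n)]
         for y in range(n)]
    s = sum(map(sum, k))
    return [[v / s for v in row] for row in k]

def unsharp_kernel(n=5, sigma=1.0, alpha=0.9, beta=1.0):
    """Eq. (7): F^sharp = alpha * (F^high - F^blur) + beta * F^high,
    where F^high has 1 at the centre and 0 elsewhere."""
    blur = gaussian_kernel(n, sigma)
    c = n // 2
    high = [[1.0 if (x == c and y == c) else 0.0 for x in range(n)] for y in range(n)]
    return [[alpha * (h - b) + beta * h for h, b in zip(hr, br)]
            for hr, br in zip(high, blur)]

def filter2d(channel, kernel):
    """Apply a symmetric kernel to a 2-D channel (e.g. L*), replicating borders."""
    h, w, n = len(channel), len(channel[0]), len(kernel)
    c = n // 2
    return [[sum(kernel[dy][dx]
                 * channel[min(max(y + dy - c, 0), h - 1)][min(max(x + dx - c, 0), w - 1)]
                 for dy in range(n) for dx in range(n))
             for x in range(w)] for y in range(h)]

# Toy usage on a hypothetical L* edge.
step = [[20.0] * 3 + [70.0] * 3 for _ in range(6)]
sharpened = filter2d(step, unsharp_kernel())
```

Because the kernel sums to β, flat regions keep their L* value when β = 1, while values on either side of an edge are pushed apart (overshoot), which corresponds to the contrast increase described for Fig. 4.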

4 Experimental Results

In our experiments, we evaluate the dehazing effect and the computational complexity of the proposed algorithm by comparing it with GDCP performed at the original image size.

4.1 Dehazing Effect Comparisons

Figs. 6 and 7 compare our experimental results with the traditional GDCP dehazing algorithm. To verify the robustness of our improvement, we have tested the new operator on a large dataset of different hazy images.

Fig. 6 shows images containing sky, while Fig. 7 presents the results for images without sky regions. Compared with the traditional GDCP, performing GDCP on the first level of the Gaussian pyramid G1 has no obvious impact on the haze removal effect. Because we employ an unsharp sharpening filter to refine the blurred recomposed image, our method achieves better perceptual quality than the traditional GDCP.

4.2 Computational Complexity Evaluations

The proposed improvement combines GDCP with the Gaussian-Laplacian pyramid. In our computational complexity evaluation, we therefore assess the algorithm objectively by its running time. The minimum running time for each image is recorded in Table 4. The reported times are from a MATLAB implementation on a PC with an Intel(R) Core(TM) i5-3230M CPU @ 2.60 GHz and 8 GB RAM. Compared with the traditional GDCP algorithm, the proposed algorithm reduces the running time by 69.59% on average.

Table 4: Computational Complexity

| Images | Traditional GDCP (s) | Our algorithm: time (s) | Our algorithm: change vs. traditional GDCP (%) |
|---|---|---|---|
| Fig. 1 | 9.54 | 2.95 | -69.08 |
| Fig. 2 | 11.01 | 3.36 | -69.48 |
| Fig. 5 | 8.93 | 2.76 | -69.09 |
| Fig. 6(a) | 13.52 | 3.95 | -70.78 |
| Fig. 7(a) | 14.48 | 4.41 | -69.54 |
| Average | 11.50 | 3.49 | -69.59 |
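The 69.59% figure can be reproduced from Table 4 with the small Python check below. It suggests that the reported average is the mean of the per-image percentages; dividing the summed times instead gives a slightly larger reduction (about 69.68%). Both numbers are computed only from the values in the table.

```python
# (image, traditional GDCP time in s, our time in s), copied from Table 4.
TABLE_4 = [
    ("Fig. 1", 9.54, 2.95),
    ("Fig. 2", 11.01, 3.36),
    ("Fig. 5", 8.93, 2.76),
    ("Fig. 6(a)", 13.52, 3.95),
    ("Fig. 7(a)", 14.48, 4.41),
]

def change_percent(before, after):
    """Relative change in running time, in percent (negative means faster)."""
    return 100.0 * (after - before) / before

per_image = [round(change_percent(b, a), 2) for _, b, a in TABLE_4]
mean_of_percentages = sum(per_image) / len(per_image)
pooled = change_percent(sum(b for _, b, _ in TABLE_4), sum(a for _, _, a in TABLE_4))
print(per_image)
print(round(mean_of_percentages, 2), round(pooled, 2))
```

Either way, the pyramid-based variant runs in roughly 30% of the original GDCP time on these five images.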

5 Conclusions

In this work, we propose a novel fast single image haze removal technique that dehazes the coarse and detail images differently, using physically based and contrast-based algorithms, respectively. The proposed technique successfully performs haze removal at a coarser level of the image pyramid, with substantially lower computational complexity and no obvious influence on the dehazing effect. Another contribution of this work is that almost any other existing dehazing algorithm can be employed to recover the coarse image. In future work, we would like to test our method on videos.

References

[1] C. Ancuti and C. O. Ancuti, Effective Contrast-Based Dehazing for Robust Image Matching, IEEE Geoscience and Remote Sensing Letters, 11(11): 1871–1875, 2014.

[2] R. Fattal, Single image dehazing, ACM Transactions on Graphics, 27(3): 1–9, 2008.

[3] K. M. He, J. Sun, and X. O. Tang, Single image haze removal using dark channel prior, IEEE Transactions on Pattern Analysis and Machine Intelligence, 33(12): 1956–1963, 2011.

[4] K. Nishino, L. Kratz, and S. Lombardi, Bayesian defogging, International Journal of Computer Vision, 98(3): 263–270, 2012.

[5] R. T. Tan, Visibility in bad weather from a single image, Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2008: 1–8.

[6] J. P. Tarel and N. Hautière, Fast visibility restoration from a single color or gray level image, Proceedings of the International Conference on Computer Vision, 2009: 2201–2208.

[7] C. O. Ancuti and C. Ancuti, Single Image Dehazing by Multi-Scale Fusion, IEEE Transactions on Image Processing, 22(8): 3271–3282, 2013.

[8] S. G. Narasimhan and S. K. Nayar, Vision and the Atmosphere, International Journal of Computer Vision, 48(3): 233–254, 2002.

[9] J. H. Kim, W. D. Jang, J. Y. Sim, and C. S. Kim, Optimized Contrast Enhancement for Real-time Image and Video Dehazing, Journal of Visual Communication and Image Representation, 24(3): 410–425, 2013.

[10] Z. Wang and Y. Feng, Fast Single Haze Image Enhancement, Computers and Electrical Engineering, 40(3): 758–795, 2013.

[11] C. X. Xiao and J. J. Gan, Fast image dehazing using guided joint bilateral filter, The Visual Computer, 28(6-8): 713–721, 2012.

[12] J. Yu and Q. Liao, Fast single image fog removal using edge-preserving smoothing, IEEE International Conference on Acoustics, Speech, and Signal Processing, 2011: 1245–1248.

[13] H. R. Xu, J. M. Guo, Q. Liu, and L. L. Ye, Fast Image Dehazing Using Improved Dark Channel Prior, Information Science and Technology, 2012: 663–667.

[14] K. M. He, J. Sun, and X. O. Tang, Guided image filtering, IEEE Transactions on Pattern Analysis and Machine Intelligence, 35(6): 1397–1409, 2013.

[15] P. J. Burt and E. H. Adelson, The Laplacian pyramid as a compact image code, IEEE Transactions on Communications, 31(4): 532–540, 1983.

[16] L. J. Kau and T. L. Lee, An Efficient and Self-Adapted Approach to the Sharpening of Color Images, Scientific World Journal, 2013: 105945.

[17] Z. Farbman, R. Fattal, D. Lischinski, and R. Szeliski, Edge-preserving decompositions for multi-scale tone and detail manipulation, ACM Transactions on Graphics, 27(3): 67, 2008.

[18] L. W. Kang, C. W. Lin, and Y. H. Fu, Automatic single-image-based rain streaks removal via image decomposition, IEEE Transactions on Image Processing, 21(4): 1742–1755, 2012.

[19] L. W. Kang, C. W. Lin, C. T. Lin, and Y. C. Lin, Self-learning-based rain streak removal for image/video, IEEE International Symposium on Circuits and Systems (ISCAS), 2012: 1871–1874.

[20] P. C. Barnum, S. Narasimhan, and T. Kanade, Analysis of rain and snow in frequency space, International Journal of Computer Vision, 86(2-3): 256–274, 2010.
