Model-Based Testing - Testing Experience


different protocol simulators, system configuration interfaces and automatic log analyzers. This convinced us of the possible benefits, and we decided to continue with the next goal: gathering enough information for a business case calculation.

To calculate return on investment, we started to model an existing and already tested functionality in the selected domain. The aim was to calculate the savings both for an initial model creation and for a model update when new functionality is added to the existing model. We were also interested in the fault-finding capability of this new technology. As a result of this exercise, we showed that model-based testing is less time-consuming. We achieved a 15% improvement (650 hours reduced to 550 hours) during initial modelling (creating reusable assets) and a 38% improvement (278 hours reduced to 172 hours) during an incremental add-on built on top of the existing assets. We also found three additional minor faults compared to the previous manual tests of the same functionality. The hours compared cover all testing-related activities, such as documentation, execution and test result reporting.
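The percentages quoted above follow directly from the reported hours. A minimal sketch of the arithmetic, assuming the larger figure in each pair is the manual baseline and the smaller one the model-based effort (the function name `relative_saving` is ours, used for illustration only):

```python
def relative_saving(baseline_hours: float, mbt_hours: float) -> float:
    """Fraction of effort saved relative to the baseline."""
    return (baseline_hours - mbt_hours) / baseline_hours


# Hours reported for the pilot; the baseline/MBT assignment is an assumption.
initial = relative_saving(650, 550)      # initial modelling
incremental = relative_saving(278, 172)  # incremental add-on

print(f"initial modelling:  {initial:.1%}")      # 15.4%
print(f"incremental add-on: {incremental:.1%}")  # 38.1%
```

Rounded down to whole percentages, these are the 15% and 38% reported for the pilot.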

Based on these results we were able to prove a positive business case with break-even in 1.5 years, and we received approval to deploy the technology on a smaller scale to enable additional studies.

Deployment scope and goals

Based on our experiences in deploying new technology, we decided to deploy MBT in a smaller project. Three different areas were selected for the deployment: Functional Testing, Call State Machine Component Testing and Database Component Testing. The goals of the deployment were to confirm the pilot results in a real project environment, to confirm the business case and ROI calculation, and to show that the technology can be adapted to other development projects. Furthermore, we also wanted to create a new mode of operation and collect engineer feedback.

Results

During Call State Machine Component Testing, manual and model-based testing activities were run in parallel in order to obtain comparable results for both technologies. The results showed that in the first phase component testing with MBT was 25% faster than the corresponding manual test, while in the second phase of the implementation an additional gain of 5 percentage points was measured in terms of work hours. In code coverage analysis MBT again outperformed manual testing, resulting in 87% coverage compared to the 80.7% measured for the manual test design in the first phase. In the second phase of implementation, this was further increased to an excellent coverage level of 92%. These higher coverage levels also resulted in an improvement in fault finding: on top of the 12 faults found with manual design, MBT discovered 9 additional software defects. According to our defect escape analysis, three of these would probably have been caught only during a very late testing phase.

During Database Component Testing, the same measures resulted in similarly attractive numbers: component testing was performed in 22% less time than previously planned, with outstanding code coverage of 93% for the database and 97% for the database interface controller.


In the Functional Testing area, our focus was on how to handle the complexity of the system under test. This was successfully resolved by our modellers. To have comparable results, part of the testing team performed manual test planning and design, while the modellers worked in parallel on their system models. The final result was in accordance with the gain in other deployment areas: a 20% gain in the speed of the test planning and design phase. Analysis showed that 80% of the faults were found by both methods, but the remaining 20% of the faults were discovered by only one of the test design methods (10% only with MBT, 10% only with manual design). This also shows that the scope of manual and model-based test design is different in nature, so combining these two approaches is highly recommended for optimal results.
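The case for combining the two approaches can be read off the overlap figures. A small sketch, using only the percentages given above (shares of all faults found in the Functional Testing area; the variable names are ours):

```python
# Shares of all faults found, in percent, as reported above.
found_by_both = 80
only_mbt = 10
only_manual = 10

# What each design method would have found if used on its own.
mbt_alone = found_by_both + only_mbt        # 90
manual_alone = found_by_both + only_manual  # 90
combined = found_by_both + only_mbt + only_manual  # 100

print(f"MBT alone:    {mbt_alone}%")
print(f"manual alone: {manual_alone}%")
print(f"combined:     {combined}%")
```

On these figures, either method used alone would have missed one fault in ten of those that the two methods found together.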

Effect on everyday work

We also had to focus on integrating modelling activities into our existing software lifecycle model and processes.

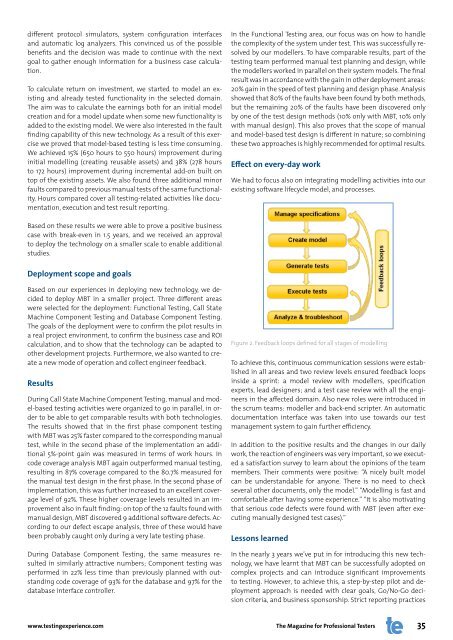

Figure 2. Feedback loops defined for all stages of modelling

To achieve this, continuous communication sessions were established in all areas, and two review levels ensured feedback loops inside a sprint: a model review with modellers, specification experts and lead designers; and a test case review with all the engineers in the affected domain. New roles were also introduced in the scrum teams: modeller and back-end scripter. An automatic documentation interface to our test management system was taken into use to gain further efficiency.

In addition to the positive results and the changes in our daily work, the reaction of engineers was very important, so we conducted a satisfaction survey to learn about the opinions of the team members. Their comments were positive: “A nicely built model can be understandable for anyone. There is no need to check several other documents, only the model.” “Modelling is fast and comfortable after having some experience.” “It is also motivating that serious code defects were found with MBT (even after executing manually designed test cases).”

Lessons learned

In the nearly three years we have spent introducing this new technology, we have learnt that MBT can be successfully adopted on complex projects and can introduce significant improvements to testing. However, to achieve this, a step-by-step pilot and deployment approach is needed with clear goals, Go/No-Go decision criteria, and business sponsorship. Strict reporting practices

The Magazine for Professional Testers<br />
