Solution : Problem Set 2 - Sung Y. Park

CUHK Dept. of Economics<br />

Fall 2012 ECON 4120 <strong>Sung</strong> Y. <strong>Park</strong><br />

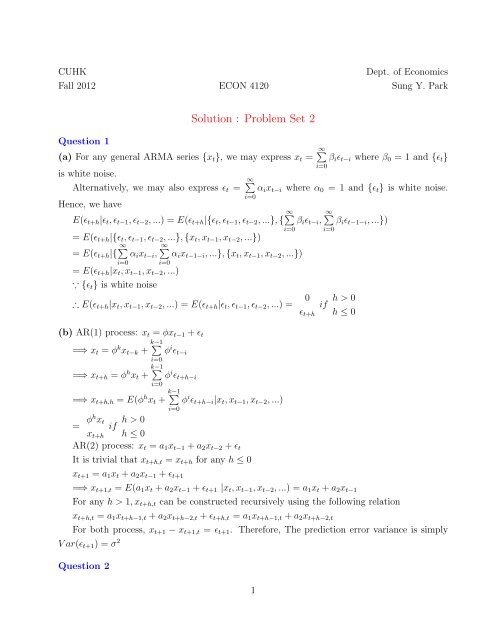

Question 1<br />

<strong>Solution</strong> : <strong>Problem</strong> <strong>Set</strong> 2<br />

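(a) For any general ARMA series $\{x_t\}$, we may express $x_t = \sum_{i=0}^{\infty} \beta_i \epsilon_{t-i}$, where $\beta_0 = 1$ and $\{\epsilon_t\}$ is white noise.

Alternatively, we may also express $\epsilon_t = \sum_{i=0}^{\infty} \alpha_i x_{t-i}$, where $\alpha_0 = 1$ and $\{\epsilon_t\}$ is white noise.

Hence, we have

$$
\begin{aligned}
E(\epsilon_{t+h} \mid \epsilon_t, \epsilon_{t-1}, \epsilon_{t-2}, \ldots)
&= E\Big(\epsilon_{t+h} \,\Big|\, \{\epsilon_t, \epsilon_{t-1}, \epsilon_{t-2}, \ldots\}, \Big\{\sum_{i=0}^{\infty} \beta_i \epsilon_{t-i}, \sum_{i=0}^{\infty} \beta_i \epsilon_{t-1-i}, \ldots\Big\}\Big) \\
&= E(\epsilon_{t+h} \mid \{\epsilon_t, \epsilon_{t-1}, \epsilon_{t-2}, \ldots\}, \{x_t, x_{t-1}, x_{t-2}, \ldots\}) \\
&= E\Big(\epsilon_{t+h} \,\Big|\, \Big\{\sum_{i=0}^{\infty} \alpha_i x_{t-i}, \sum_{i=0}^{\infty} \alpha_i x_{t-1-i}, \ldots\Big\}, \{x_t, x_{t-1}, x_{t-2}, \ldots\}\Big) \\
&= E(\epsilon_{t+h} \mid x_t, x_{t-1}, x_{t-2}, \ldots)
\end{aligned}
$$

$\because \{\epsilon_t\}$ is white noise,

$$
\therefore\; E(\epsilon_{t+h} \mid x_t, x_{t-1}, x_{t-2}, \ldots) = E(\epsilon_{t+h} \mid \epsilon_t, \epsilon_{t-1}, \epsilon_{t-2}, \ldots) =
\begin{cases}
0 & \text{if } h > 0 \\
\epsilon_{t+h} & \text{if } h \le 0
\end{cases}
$$

(b) AR(1) process: $x_t = \phi x_{t-1} + \epsilon_t$

Iterating backwards $k$ times gives

$$x_t = \phi^k x_{t-k} + \sum_{i=0}^{k-1} \phi^i \epsilon_{t-i},$$

so that, shifting to $t+h$ and setting $k = h$ for $h > 0$,

$$x_{t+h} = \phi^h x_t + \sum_{i=0}^{h-1} \phi^i \epsilon_{t+h-i}.$$

Taking conditional expectations,

$$
x_{t+h,t} = E\Big(\phi^h x_t + \sum_{i=0}^{h-1} \phi^i \epsilon_{t+h-i} \,\Big|\, x_t, x_{t-1}, x_{t-2}, \ldots\Big) =
\begin{cases}
\phi^h x_t & \text{if } h > 0 \\
x_{t+h} & \text{if } h \le 0
\end{cases}
$$

AR(2) process: $x_t = a_1 x_{t-1} + a_2 x_{t-2} + \epsilon_t$

It is trivial that $x_{t+h,t} = x_{t+h}$ for any $h \le 0$.

$$x_{t+1} = a_1 x_t + a_2 x_{t-1} + \epsilon_{t+1}$$

$$\implies x_{t+1,t} = E(a_1 x_t + a_2 x_{t-1} + \epsilon_{t+1} \mid x_t, x_{t-1}, x_{t-2}, \ldots) = a_1 x_t + a_2 x_{t-1}$$

For any $h > 1$, $x_{t+h,t}$ can be constructed recursively using the following relation:

$$x_{t+h,t} = a_1 x_{t+h-1,t} + a_2 x_{t+h-2,t} + \epsilon_{t+h,t} = a_1 x_{t+h-1,t} + a_2 x_{t+h-2,t}$$

For both processes, $x_{t+1} - x_{t+1,t} = \epsilon_{t+1}$. Therefore, the one-step-ahead prediction error variance is simply

$$Var(\epsilon_{t+1}) = \sigma^2$$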
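The forecast recursion above can be checked numerically. What follows is a minimal Python sketch, not the author's code: the function name `forecast_ar2` and the sample coefficients are illustrative assumptions. Setting `a2 = 0` reduces it to the AR(1) forecast $\phi^h x_t$.

```python
def forecast_ar2(a1, a2, x_t, x_tm1, h):
    """h-step-ahead forecast x_{t+h,t} for x_t = a1*x_{t-1} + a2*x_{t-2} + eps_t.

    Applies x_{t+h,t} = a1*x_{t+h-1,t} + a2*x_{t+h-2,t}, where future shocks
    are replaced by their conditional mean of zero. Requires h >= 1.
    """
    if h < 1:
        raise ValueError("h must be a positive integer")
    prev, curr = x_tm1, x_t  # x_{t-1} and x_t are observed, so they forecast themselves
    for _ in range(h):
        prev, curr = curr, a1 * curr + a2 * prev
    return curr


# Illustrative values only (not taken from the problem set).
print(forecast_ar2(0.5, 0.2, x_t=1.0, x_tm1=2.0, h=1))  # a1*x_t + a2*x_{t-1}
print(forecast_ar2(0.5, 0.0, x_t=2.0, x_tm1=0.0, h=3))  # AR(1) case: 0.5**3 * 2.0
```

Keeping only the last two forecasts in `prev` and `curr` mirrors the fact that an AR(2) forecast depends on just the two most recent values.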

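Question 2

$$x_t = \epsilon_t + 0.2\epsilon_{t-1}$$

$$x_{101} = \epsilon_{101} + 0.2\epsilon_{100} \implies x_{101,100} = 0.2\epsilon_{100} = 0.002$$

$$x_{102} = \epsilon_{102} + 0.2\epsilon_{101} \implies x_{102,100} = 0$$

$$x_{101} - x_{101,100} = \epsilon_{101} \implies \sigma_1 = \sigma_\epsilon = \sqrt{0.025} = 0.158$$

$$x_{102} - x_{102,100} = \epsilon_{102} + 0.2\epsilon_{101} \implies \sigma_2 = \sqrt{1 + 0.2^2}\,\sigma_\epsilon = 0.161$$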
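The forecasts and their standard errors can be reproduced with a few lines of Python. This is only a sketch: the variable names are illustrative, and `eps_100 = 0.01` is the value implied by $0.2\epsilon_{100} = 0.002$ rather than a number quoted in the solution.

```python
import math

theta = 0.2      # MA(1) coefficient
var_eps = 0.025  # innovation variance used in the solution
eps_100 = 0.01   # implied by 0.2 * eps_100 = 0.002 (an inference)

forecast_1 = theta * eps_100  # x_{101,100}: only eps_100 is known at time 100
forecast_2 = 0.0              # x_{102,100}: both shocks lie in the future

se_1 = math.sqrt(var_eps)                     # sd of eps_101
se_2 = math.sqrt((1 + theta ** 2) * var_eps)  # sd of eps_102 + 0.2*eps_101

print(round(forecast_1, 3), forecast_2)  # 0.002 0.0
print(round(se_1, 3), round(se_2, 3))    # 0.158 0.161
```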

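$$Var(x_t) = (1 + 0.2^2)\sigma_\epsilon^2$$

$$Cov(x_t, x_{t-1}) = Cov(\epsilon_t + 0.2\epsilon_{t-1},\; \epsilon_{t-1} + 0.2\epsilon_{t-2}) = 0.2\sigma_\epsilon^2$$

$$Cov(x_t, x_{t-2}) = Cov(\epsilon_t + 0.2\epsilon_{t-1},\; \epsilon_{t-2} + 0.2\epsilon_{t-3}) = 0$$

$$\implies \rho(1) = \frac{0.2}{1 + 0.2^2} = 0.192 \quad \text{and} \quad \rho(2) = 0$$

Question 3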

$$x_t = 0.01 + 0.2x_{t-2} + \epsilon_t$$

$$E(x_t) = 0.01 + 0.2E(x_{t-2}) \implies E(x_t) = \frac{0.01}{0.8} = 0.0125$$

$$Var(x_t) = 0.2^2\,Var(x_{t-2}) + Var(\epsilon_t) \implies Var(x_t) = \frac{0.02}{0.96} = 0.0208$$

$$Cov(x_t, x_{t-1}) = 0 \quad \text{and} \quad Cov(x_t, x_{t-2}) = Cov(0.01 + 0.2x_{t-2} + \epsilon_t,\; x_{t-2}) = 0.2\,Var(x_t)$$

$$\implies \rho(1) = 0 \quad \text{and} \quad \rho(2) = 0.2$$

$$x_{101} = 0.01 + 0.2x_{99} + \epsilon_{101} \implies x_{101,100} = 0.01 + 0.2x_{99} = 0.014$$

$$x_{102} = 0.01 + 0.2x_{100} + \epsilon_{102} \implies x_{102,100} = 0.01 + 0.2x_{100} = 0.008$$

$$x_{101} - x_{101,100} = \epsilon_{101} \quad \text{and} \quad x_{102} - x_{102,100} = \epsilon_{102} \implies \sigma_1 = \sigma_2 = \sigma_\epsilon = \sqrt{0.02} = 0.141$$
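The moments and forecasts above can be verified with a short Python sketch. The variable names are illustrative, and `x_99 = 0.02` and `x_100 = -0.01` are the values implied by the stated forecasts (0.014 and 0.008), not numbers quoted in the solution.

```python
import math

c, phi2, var_eps = 0.01, 0.2, 0.02  # intercept, lag-2 coefficient, innovation variance

mean = c / (1 - phi2)                 # E(x_t) = 0.01 / 0.8
variance = var_eps / (1 - phi2 ** 2)  # Var(x_t) = 0.02 / 0.96
rho_1, rho_2 = 0.0, phi2              # only the lag-2 term links x_t to its past

x_99, x_100 = 0.02, -0.01             # implied by the stated forecasts (an inference)
forecast_101 = c + phi2 * x_99        # x_{101,100}
forecast_102 = c + phi2 * x_100       # x_{102,100}
se = math.sqrt(var_eps)               # both forecast errors are a single shock

print(round(mean, 4), round(variance, 4))               # 0.0125 0.0208
print(round(forecast_101, 3), round(forecast_102, 3))   # 0.014 0.008
print(round(se, 3))                                     # 0.141
```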

Question 4

To generate 10 ARIMA(1,0,1) series, we provide two methods. The first uses a random number generator directly, and the second uses the command “arima.sim”. See the Code in the Appendix for details.

See Table 1 for the required statistics, which are the output of the code. Note that the average estimates are quite close to the true values, as each difference is smaller than two estimated standard errors.
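The first method (drawing the shocks and building the series recursively) can be sketched as follows. This is a Python illustration rather than the Appendix code, which is not reproduced here; the function name `simulate_arma11`, the burn-in length, and the parameter values `phi=0.5`, `theta=0.3`, `n=200` are all placeholder assumptions.

```python
import random


def simulate_arma11(phi, theta, n, sigma=1.0, burn_in=200, seed=None):
    """Simulate x_t = phi*x_{t-1} + eps_t + theta*eps_{t-1} with Gaussian shocks."""
    rng = random.Random(seed)
    x_prev, eps_prev = 0.0, 0.0
    path = []
    for _ in range(n + burn_in):
        eps = rng.gauss(0.0, sigma)
        x = phi * x_prev + eps + theta * eps_prev
        path.append(x)
        x_prev, eps_prev = x, eps
    return path[burn_in:]  # drop the start-up period so the zero start values wash out


# Ten independent series; phi, theta and n are placeholder values.
series = [simulate_arma11(phi=0.5, theta=0.3, n=200, seed=k) for k in range(10)]
print(len(series), len(series[0]))  # 10 200
```

The burn-in is a design choice: starting the recursion at zero and discarding the early observations makes the retained sample approximately a draw from the stationary process.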

Question 5

(a) See Figure 1 and Figure 2 for the scatter diagrams. As the question suggests, the scatter plots show that the particulate level appears to be linearly related to mortality, while temperature is likely to be either linearly or quadratically related to mortality. To show the relationships more clearly, we also superimposed fitted lines on the scatter plots (see Code for details).

(b) See Table 2 for the regression results.

(c) See Figure 3 and Figure 4 for the residual plot and correlogram, respectively.

The residual plot clearly exhibits persistence and reveals no evidence against stationarity.

The correlogram shows that the sample ACFs are significant at the first five lags and the sample PACFs are significant at the first two lags.

We also computed the Q-statistic, which rejects the null hypothesis of no serial correlation at the 1% level. See Table 3.

All this evidence indicates serial correlation in the residuals.

As suggested by the sample correlogram, we fit the residuals using ARMA(2,q), where q = 0, 1, ..., 4, and perform model selection using AIC.

Since ARMA(2,1) yields the smallest AIC among all the candidates, we proceed with ARMA(2,1). To check whether it adequately captures the dynamics in the regression residuals, we inspect the correlogram and Q-statistic of its residuals.

See Figure 4 and Table 4.

Fortunately, the correlogram and the Box test indicate the absence of serial correlation. Therefore, we suggest that the regression residuals follow ARIMA(2,0,1).
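For readers who want to see what the Q-statistic measures, here is a minimal Python sketch of the sample autocorrelations and the two common portmanteau statistics (Box-Pierce and Ljung-Box). It is not the code behind Tables 3 and 4, and the solution does not say which variant those tables report; the function names are illustrative.

```python
def sample_acf(x, max_lag):
    """Sample autocorrelations rho(1), ..., rho(max_lag) of the series x."""
    n = len(x)
    mean = sum(x) / n
    dev = [v - mean for v in x]
    c0 = sum(d * d for d in dev)
    return [sum(dev[t] * dev[t - k] for t in range(k, n)) / c0
            for k in range(1, max_lag + 1)]


def q_statistics(x, max_lag):
    """Return (Box-Pierce Q, Ljung-Box Q) using the first max_lag autocorrelations."""
    n = len(x)
    rho = sample_acf(x, max_lag)
    box_pierce = n * sum(r * r for r in rho)
    ljung_box = n * (n + 2) * sum(r * r / (n - k)
                                  for k, r in enumerate(rho, start=1))
    return box_pierce, ljung_box


# Tiny hand-checkable example: rho(1) = 4/10 for the series 1, 2, 3, 4, 5.
print(sample_acf([1, 2, 3, 4, 5], 1))
```

Under the null of no serial correlation, either statistic is compared with a chi-square critical value whose degrees of freedom equal the number of lags used; when the series consists of residuals from a fitted ARMA(p,q), the degrees of freedom are usually reduced by p + q.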

(d) Since we need to transform the entire dataset, we proceed with ARIMA(2,0,0) for simplicity. To illustrate how we transform all the input variables, we provide the following derivation.

Let $\phi(L) = 1 - \theta_1 L - \theta_2 L^2$. If $\varepsilon_t = \theta_1 \varepsilon_{t-1} + \theta_2 \varepsilon_{t-2} + v_t$, where $v_t$ is white noise (so that $\phi(L)\varepsilon_t = v_t$), and

$$M_t = \beta_{00} + \beta_0 t + \beta_1 (T_t - T) + \beta_2 (T_t - T)^2 + \beta_3 P_t + \varepsilon_t,$$

then we have

$$\phi(L) M_t = \phi(L)\beta_{00} + \beta_0 \phi(L) t + \beta_1 \phi(L)(T_t - T) + \beta_2 \phi(L)(T_t - T)^2 + \beta_3 \phi(L) P_t + v_t.$$

Therefore, we apply the transformation $\phi(L) = 1 - \theta_1 L - \theta_2 L^2$ to all the input variables.

See Code for the data transformation.

See Table 5 for the regression output with the transformed data.

Note that the regression on the transformed variables has a smaller residual standard error than the 6.385 obtained in part (b).
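The transformation $\phi(L)$ amounts to subtracting weighted first and second lags from every series. A minimal Python sketch, assuming the series are plain lists and using the hypothetical name `apply_ar2_filter` (the actual transformation is in the Code referred to above):

```python
def apply_ar2_filter(series, theta1, theta2):
    """Return y_t - theta1*y_{t-1} - theta2*y_{t-2} for t = 2, ..., n-1.

    The first two observations are lost because their lags are unavailable.
    """
    return [series[t] - theta1 * series[t - 1] - theta2 * series[t - 2]
            for t in range(2, len(series))]


# Illustrative coefficients only; in practice theta1 and theta2 would be the
# AR(2) estimates obtained from the regression residuals.
print(apply_ar2_filter([1.0, 2.0, 3.0, 4.0], 0.5, 0.25))  # [1.75, 2.0]
print(apply_ar2_filter([1.0, 1.0, 1.0], 0.5, 0.25))       # constant becomes 1 - 0.5 - 0.25
```

The same filter would be applied to the dependent variable and to every regressor, including the constant and the trend, so that the transformed regression has the white-noise error $v_t$.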

Question 6

We first plot the two input series. From Figure 6, we can see that both series exhibit a stochastic trend, which is further confirmed by the correlograms in Figure 7 and Figure 8. Hence, we take first differences of the input variables. Then we regress the first-differenced log(future) on the first-differenced log(spot).
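The differencing and regression steps can be sketched in Python as follows. This is an illustration under assumptions, not the author's code: the function names are hypothetical, the price series are taken to be lists of positive numbers, and the regression is assumed to include an intercept.

```python
import math


def log_diff(prices):
    """First difference of the log series: log(p_t) - log(p_{t-1})."""
    logs = [math.log(p) for p in prices]
    return [logs[t] - logs[t - 1] for t in range(1, len(logs))]


def ols_line(x, y):
    """Intercept and slope from regressing y on x by ordinary least squares."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope
```

With two price lists `future` and `spot` of equal length, `ols_line(log_diff(spot), log_diff(future))` would give the intercept and slope of the differenced regression.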

From Figure 9, the correlogram suggests a serial correlation problem. To tackle this problem, we add ARMA dynamics to the model. As we can see from the correlogram, both the sample ACFs and PACFs exhibit exponential decline after lag 4. Therefore, we fit the model with ARMA(p,q), where (p,q) ∈ {0, 1, ..., 4} × {0, 1, ..., 4}, and perform model selection using AIC.
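The selection step can be sketched as a ranking of the 25 candidate orders by AIC. This Python sketch assumes the maximized log-likelihood of each fitted model is already available from some estimation routine (the fitting itself is not shown); `rank_by_aic` and `extra_params` are hypothetical names.

```python
from itertools import product


def aic(log_likelihood, n_params):
    """Akaike information criterion: 2k - 2 ln L."""
    return 2 * n_params - 2 * log_likelihood


def rank_by_aic(log_likelihoods, extra_params=1):
    """Order (p, q) pairs from lowest to highest AIC.

    log_likelihoods maps (p, q) to the maximized log-likelihood of that model.
    extra_params counts parameters shared by every candidate (for example the
    innovation variance); it shifts all AIC values equally, so it does not
    change the ranking.
    """
    scores = {order: aic(ll, order[0] + order[1] + extra_params)
              for order, ll in log_likelihoods.items()}
    return sorted(scores, key=scores.get)


candidates = list(product(range(5), repeat=2))  # all (p, q) with p, q in 0..4
print(len(candidates))  # 25
```

Returning the full ranking rather than only the minimizer makes it easy to move on to the next candidate when the diagnostics of the lowest-AIC model are unsatisfactory.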

Table 6 suggests MA(4), as it yields the lowest AIC value. We proceed with MA(4) first. Again, we plot the correlogram to see whether the serial correlation problem is eliminated this time. However, Figure 10 clearly indicates the inadequacy of MA(4), as the sample ACFs and PACFs remain significant after the first few lags.

Hence, we try ARMA(2,1), the model with the second-lowest AIC. Its correlogram looks fine, as all the sample ACFs and PACFs are individually insignificant. We perform the Box-Pierce test to seek stronger support.

From Table 8, the Q-statistic does not reject the null hypothesis that the residuals are white noise. Moreover, the histogram and density plot suggest that the residuals are normally distributed. Therefore, we select this as our proposed model.

The estimation results are in Table 9.
