- Page 1 and 2:

Performance and Evaluation of Lisp

- Page 3 and 4:

ii When I began the adventure on wh

- Page 5 and 6:

Acknowledgements This book is reall

- Page 7 and 8:

Contents Chapter 1 Introduction . .

- Page 9 and 10:

Performance and Evaluation of Lisp

- Page 11 and 12:

2 to be a plethora of facts, only t

- Page 13 and 14:

4 An instruction pipeline is used t

- Page 15 and 16:

6 to assign any location the name o

- Page 17 and 18:

8 voking a closure) can be accompli

- Page 19 and 20:

10 (Lisp machine, SEUS [Weyhrauch 1

- Page 21 and 22:

12 which takes a function with some

- Page 23 and 24:

14 free objects or until a threshol

- Page 25 and 26:

16 1.1.2.6 Arithmetic Arithmetic is

- Page 27 and 28:

18 Different rounding modes in the

- Page 29 and 30:

20 1.3 Major Lisp Facilities There

- Page 31 and 32:

22 by studying the size of the tabl

- Page 33 and 34:

24 1.4.1 Know What is Being Measure

- Page 35 and 36:

26 With 100 such functions and no l

- Page 37 and 38:

28 the MacLisp compiler generates f

- Page 39 and 40:

30 machine will perform background

- Page 41 and 42:

32 2.1.2 Function Call MacLisp func

- Page 43 and 44:

34 2.2 MIT CADR Symbolics Inc. and

- Page 45 and 46:

36 2.3 Symbolics 2.3.1 3600 The 360

- Page 47 and 48:

38 Most of the 3600’s architectur

- Page 49 and 50:

40 Like the CADR, the compiler for

- Page 51 and 52:

42 2.4 LMI Lambda LISP Machine, Inc

- Page 53 and 54:

44 bits in the tag field of every o

- Page 55 and 56:

46 2.5 S-1 Lisp S-1 Lisp runs on th

- Page 57 and 58:

48 7 Program Counter 8 GC Pointer (

- Page 59 and 60:

50 2.5.4 Systemic Quantities Vector

- Page 61 and 62:

52 The next time FOO is invoked, th

- Page 63 and 64:

54 2.7 NIL NIL (New Implementation

- Page 65 and 66:

56 The arguments to the function ar

- Page 67 and 68:

58 2.8 Spice Lisp Spice Lisp is an

- Page 69 and 70:

60 3. Activating the frame by makin

- Page 71 and 72:

62 The benchmarks were run on a Per

- Page 73 and 74:

64 than there is for one without, a

- Page 75 and 76:

66 2.10 Portable Standard Lisp Port

- Page 77 and 78:

68 7. Finishing up and tuning 2.10.

- Page 79 and 80:

70 Apollo Dn300 This is an MC68010-

- Page 81 and 82:

72 2.10.5 Function Call Compiled-to

- Page 83 and 84:

74 array utility package. Additiona

- Page 85 and 86:

76 2.12 Data General Common Lisp Da

- Page 87 and 88:

78 To determine the type, the high

- Page 89 and 90:

80 2. The benchmark results contain

- Page 91 and 92:

82 little else but function calls (

- Page 93 and 94:

84 ;PUSH, ADJSP both do bounds ;che

- Page 95 and 96:

86 Raw Time Tak Implementation CPU

- Page 97 and 98:

88 Tak Times On Symbolics LM-2 4.44

- Page 99 and 100:

90 Tak Times On SAIL (KLB) in MacLi

- Page 101 and 102:

92 In TAKF, changing the variable F

- Page 103 and 104:

94 In Vax NIL, there appears to be

- Page 105 and 106:

96 3.2.4 Raw Data Raw Time Stak Imp

- Page 107 and 108:

98 As Peter Deutsch has pointed out

- Page 109 and 110:

100 that THROW uses is linear in th

- Page 111 and 112:

102 3.3.4 Raw Data Raw Time Ctak Im

- Page 113 and 114:

104 It measures EBOX milliseconds.

- Page 115 and 116:

106 Because of the average size of

- Page 117 and 118:

108 Raw Time Takl Implementation CP

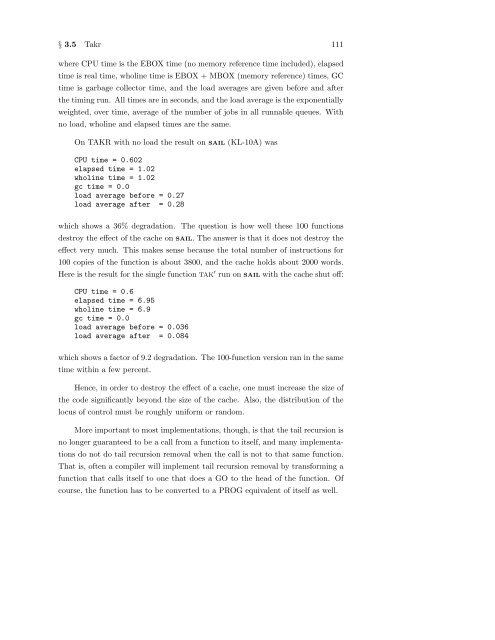

- Page 119:

110 3.5 Takr 3.5.1 The Program (def

- Page 123 and 124:

114 Raw Time Takr Implementation CP

- Page 125 and 126:

116 3.6 Boyer Here is what Bob Boye

- Page 127 and 128:

118 (defun rewrite-with-lemmas (ter

- Page 129 and 130:

120 (equal (equal (plus a b) (zero)

- Page 131 and 132:

122 (equal (power-eval (big-plus x

- Page 133 and 134:

124 (equal (numberp (greatest-facto

- Page 135 and 136:

126 (equal (times x (add1 y)) (if (

- Page 137 and 138:

128 (defun test () (prog (ans term)

- Page 139 and 140:

130 the program uses the axioms sto

- Page 141 and 142:

132 Meter for Add-Lemma during TEST

- Page 143 and 144:

134 Raw Time Boyer Implementation C

- Page 145 and 146:

136 3.7 Browse 3.7.1 The Program ;;

- Page 147 and 148:

138 ((eq (char1 (car pat)) #\*) (le

- Page 149 and 150:

140 The symbol names are GENSYMed;

- Page 151 and 152:

142 Meter for Match Car’s 1319800

- Page 153 and 154:

144 Raw Time Browse Implementation

- Page 155 and 156:

146 3.8 Destructive 3.8.1 The Progr

- Page 157 and 158:

148 3.8.3 Translation Notes Meter f

- Page 159 and 160:

150 3.8.4 Raw Data Raw Time Destruc

- Page 161 and 162:

152 Very few programs that I have b

- Page 163 and 164:

154 (defun traverse-remove (n q) (c

- Page 165 and 166:

156 (defmacro init-traverse() (prog

- Page 167 and 168:

158 node. The number of nodes visit

- Page 169 and 170:

160 3.9.3 Translation Notes In INTE

- Page 171 and 172:

162 (TSELECT (LAMBDA (N Q) (for N (

- Page 173 and 174:

164 (TIMIT (LAMBDA NIL (TIMEALL (SE

- Page 175 and 176:

166 Raw Time Traverse Initializatio

- Page 177 and 178:

168 Raw Time Traverse Implementatio

- Page 179 and 180:

170 3.10 Derivative 3.10.1 The Prog

- Page 181 and 182:

172 3.10.4 Raw Data Raw Time Deriv

- Page 183 and 184:

174 The benchmark should solve a pr

- Page 185 and 186:

176 (defun dderiv (a) (cond ((atom

- Page 187 and 188:

178 3.11.4 Raw Data Raw Time Dderiv

- Page 189 and 190:

180 I find it rather startling that

- Page 191 and 192:

182 3.12.2 Analysis This benchmark

- Page 193 and 194:

184 Raw Time Fdderiv Implementation

- Page 195 and 196:

186 3.13 Division by 2 3.13.1 The P

- Page 197 and 198:

188 3.13.4 Raw Data Raw Time Iterat

- Page 199 and 200:

190 Raw Time Recursive Div2 Impleme

- Page 201 and 202:

192 If naive users write cruddy cod

- Page 203 and 204:

194 l6 (cond ((< k j) (setq j (- j

- Page 205 and 206:

196 3.14.3 Translation Notes The IN

- Page 207 and 208:

198 (for J to LE1 do (* Loop thru b

- Page 209 and 210:

200 3.14.4 Raw Data Raw Time FFT Im

- Page 211 and 212:

202 This is not to say Franz’s co

- Page 213 and 214:

204 (defun place (i j) (let ((end (

- Page 215 and 216:

206 (definePiece 2 1 0 1) (definePi

- Page 217 and 218:

208 During the search, the first ei

- Page 219 and 220:

210 (CONSTANTS SIZE TYPEMAX D CLASS

- Page 221 and 222:

212 (START (LAMBDA NIL (for M from

- Page 223 and 224:

214 3.15.4 Raw Data Raw Time Puzzle

- Page 225 and 226:

216 Presumably this is because 2-di

- Page 227 and 228:

218 (defun try (i depth) (cond ((=

- Page 229 and 230:

220 Meter for Try =’s 19587224 Re

- Page 231 and 232:

222 (LAST-POSITION (LAMBDA NIL (OR

- Page 233 and 234:

224 3.16.4 Raw Data Raw Time Triang

- Page 235 and 236:

226 I also tried TRIANG, but gave u

- Page 237 and 238:

228 The test pattern is a tree that

- Page 239 and 240:

230 Raw Time Fprint Implementation

- Page 241 and 242:

232 3.18 File Read 3.18.1 The Progr

- Page 243 and 244:

234 Raw Time Fread Implementation C

- Page 245 and 246:

236 3.19 Terminal Print 3.19.1 The

- Page 247 and 248:

238 Raw Time Tprint Implementation

- Page 249 and 250:

240 3.20 Polynomial Manipulation 3.

- Page 251 and 252:

242 (defun pplus (x y) (cond ((pcoe

- Page 253 and 254:

244 (defun ptimes3 (y) (prog (e u c

- Page 255 and 256:

246 The variables in the polynomial

- Page 257 and 258:

248 Meter for (Bench 2) Signp’s 3

- Page 259 and 260:

250 3.20.3 Raw Data Raw Time Frpoly

- Page 261 and 262:

252 Raw Time Frpoly Power = 2 r2 =

- Page 263 and 264:

254 Raw Time Frpoly Power = 2 r3 =

- Page 265 and 266:

256 Raw Time Frpoly Power = 5 r = x

- Page 267 and 268:

258 Raw Time Frpoly Power = 5 r2 =

- Page 269 and 270:

260 Raw Time Frpoly Power = 5 r3 =

- Page 271 and 272:

262 Raw Time Frpoly Power = 10 r =

- Page 273 and 274:

264 Raw Time Frpoly Power = 10 r2 =

- Page 275 and 276:

266 Raw Time Frpoly Power = 10 r3 =

- Page 277 and 278:

268 Raw Time Frpoly Power = 15 r =

- Page 279 and 280:

270 Raw Time Frpoly Power = 15 r2 =

- Page 281 and 282:

272 Raw Time Frpoly Power = 15 r3 =

- Page 283 and 284:

274 Well, it certainly is hard to e

- Page 285 and 286:

276 sophisticated Lisp implementati

- Page 287 and 288:

278 [Foderaro 1982] Foderaro, J. K.

- Page 289 and 290:

280

- Page 291 and 292:

282 context-switching, 7. Cray-1, 4

- Page 293 and 294:

284 multi-processing Lisp, 7. MV400