computing lives - FTP Directory Listing

http://www.computer.org<br />

UTTER CHAOS, P. 6<br />

ANALYZING ANCIENT SCRIPT, P. 76<br />

QUESTIONING INVISIBILITY, P. 84<br />

Editor in Chief<br />

Carl K. Chang<br />

Iowa State University<br />

chang@cs.iastate.edu<br />

Associate Editor<br />

in Chief<br />

Sumi Helal<br />

University of Florida<br />

helal@cise.ufl.edu<br />

Area Editors<br />

Computer Architectures<br />

Steven K. Reinhardt<br />

AMD<br />

Databases and Information<br />

Retrieval<br />

Erich Neuhold<br />

University of Vienna<br />

Distributed Systems<br />

Jean Bacon<br />

University of Cambridge<br />

Graphics and Multimedia<br />

Oliver Bimber<br />

Johannes Kepler University Linz<br />

High-Performance Computing<br />

Vladimir Getov<br />

University of Westminster<br />

Information and<br />

Data Management<br />

Naren Ramakrishnan<br />

Virginia Tech<br />

Multimedia<br />

Savitha Srinivasan<br />

IBM Almaden Research Center<br />

Networking<br />

Ahmed Helmy<br />

University of Florida<br />

Software<br />

Robert B. France<br />

Colorado State University<br />

David M. Weiss<br />

Iowa State University<br />

Editorial Staff<br />

Scott Hamilton<br />

Senior Acquisitions Editor<br />

shamilton@computer.org<br />

Judith Prow<br />

Managing Editor<br />

jprow@computer.org<br />

Chris Nelson<br />

Senior Editor<br />

James Sanders<br />

Senior Editor<br />

COMPUTER<br />

Associate Editor in Chief,<br />

Research Features<br />

Kathleen Swigger<br />

University of North Texas<br />

kathy@cs.unt.edu<br />

Associate Editor in Chief,<br />

Special Issues<br />

Bill N. Schilit<br />

Google<br />

schilit@computer.org<br />

Column Editors<br />

AI Redux<br />

Naren Ramakrishnan<br />

Virginia Tech<br />

Education<br />

Ann E.K. Sobel<br />

Miami University<br />

Embedded Computing<br />

Tom Conte<br />

Georgia Tech<br />

Green IT<br />

Kirk W. Cameron<br />

Virginia Tech<br />

Industry Perspective<br />

Sumi Helal<br />

University of Florida<br />

IT Systems Perspectives<br />

Richard G. Mathieu<br />

James Madison University<br />

Invisible Computing<br />

Albrecht Schmidt<br />

University of Duisburg-Essen<br />

The Known World<br />

David A. Grier<br />

George Washington University<br />

The Profession<br />

Neville Holmes<br />

University of Tasmania<br />

Security<br />

Jeffrey M. Voas<br />

NIST<br />

Contributing Editors<br />

Lee Garber<br />

Bob Ward<br />

Design and Production<br />

Larry Bauer<br />

Design<br />

Olga D’Astoli<br />

Cover Design<br />

Kate Wojogbe<br />

Computing Practices<br />

Rohit Kapur<br />

rohit.kapur@synopsys.com<br />

Perspectives<br />

Bob Colwell<br />

bob.colwell@comcast.net<br />

Web Editor<br />

Ron Vetter<br />

vetterr@uncw.edu<br />

Software Technologies<br />

Mike Hinchey<br />

Lero—the Irish Software<br />

Engineering Research Centre<br />

Web Technologies<br />

Simon S.Y. Shim<br />

San Jose State University<br />

Advisory Panel<br />

Thomas Cain<br />

University of Pittsburgh<br />

Doris L. Carver<br />

Louisiana State University<br />

Ralph Cavin<br />

Semiconductor Research Corp.<br />

Dan Cooke<br />

Texas Tech University<br />

Ron Hoelzeman<br />

University of Pittsburgh<br />

Naren Ramakrishnan<br />

Virginia Tech<br />

Ron Vetter<br />

University of North Carolina,<br />

Wilmington<br />

Alf Weaver<br />

University of Virginia<br />

Administrative<br />

Staff<br />

Products and<br />

Services Director<br />

Evan Butterfield<br />

Manager,<br />

Editorial Services<br />

Jennifer Stout<br />

2010 IEEE Computer<br />

Society President<br />

James D. Isaak<br />

cspresident2010@jimisaak.com<br />

CS Publications Board<br />

David A. Grier (chair), David<br />

Bader, Angela R. Burgess, Jean-<br />

Luc Gaudiot, Phillip Laplante,<br />

Dejan Milojičić, Linda I. Shafer,<br />

Dorée Duncan Seligmann, Don<br />

Shafer, Steve Tanimoto, and<br />

Roy Want<br />

CS Magazine<br />

Operations Committee<br />

Dorée Duncan Seligmann<br />

(chair), David Albonesi, Isabel<br />

Beichl, Carl Chang, Krish<br />

Chakrabarty, Nigel Davies, Fred<br />

Douglis, Hakan Erdogmus, Carl<br />

E. Landwehr, Simon Liu, Dejan<br />

Milojičić, John Smith, Gabriel<br />

Taubin, Fei-Yue Wang, and<br />

Jeffrey R. Yost<br />

Senior Business<br />

Development Manager<br />

Sandy Brown<br />

Senior Advertising<br />

Coordinator<br />

Marian Anderson<br />

Circulation: Computer (ISSN 0018-9162) is published monthly by the IEEE Computer Society. IEEE Headquarters, Three Park Avenue, 17th Floor, New York, NY<br />

10016-5997; IEEE Computer Society Publications Office, 10662 Los Vaqueros Circle, PO Box 3014, Los Alamitos, CA 90720-1314; voice +1 714 821 8380; fax +1<br />

714 821 4010; IEEE Computer Society Headquarters, 2001 L Street NW, Suite 700, Washington, DC 20036. IEEE Computer Society membership includes $19 for<br />

a subscription to Computer magazine. Nonmember subscription rate available upon request. Single-copy prices: members $20.00; nonmembers $99.00.<br />

Postmaster: Send undelivered copies and address changes to Computer, IEEE Membership Processing Dept., 445 Hoes Lane, Piscataway, NJ 08855. Periodicals<br />

Postage Paid at New York, New York, and at additional mailing offices. Canadian GST #125634188. Canada Post Corporation (Canadian distribution) publications<br />

mail agreement number 40013885. Return undeliverable Canadian addresses to PO Box 122, Niagara Falls, ON L2E 6S8 Canada. Printed in USA.<br />

Editorial: Unless otherwise stated, bylined articles, as well as product and service descriptions, reflect the author’s or firm’s opinion. Inclusion in Computer<br />

does not necessarily constitute endorsement by the IEEE or the Computer Society. All submissions are subject to editing for style, clarity, and space.<br />

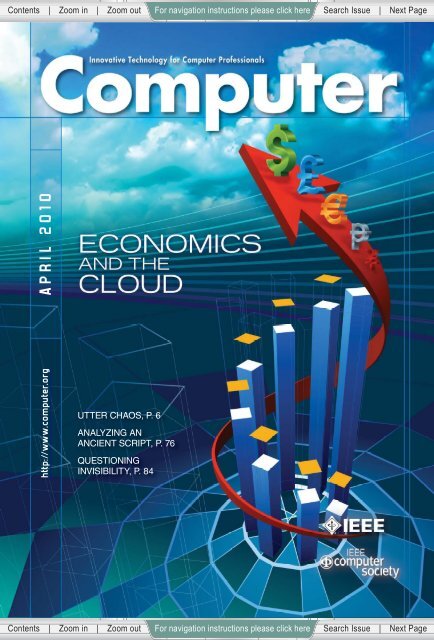

CONTENTS<br />

ABOUT THIS ISSUE<br />

Growing interest in cloud computing also raises the<br />

question of cost/benefit. In this issue, we feature an<br />

article describing a federated, open-source cloud platform<br />

that lowers the entry barriers to systems and<br />

applications research communities, an economic model<br />

for determining whether to lease or buy storage, and an<br />

analysis of energy consumption and battery life in<br />

mobile devices offloading computation to the cloud. We<br />

also look at puzzle-based learning, ethical codes of conduct<br />

in the legal realm, and the linkage of software<br />

development and business strategy.<br />

COMPUTING PRACTICES<br />

20 Puzzle-Based Learning for<br />

Engineering and Computer<br />

Science<br />

Nickolas Falkner, Raja Sooriamurthi,<br />

and Zbigniew Michalewicz<br />

To attract, motivate, and retain students and<br />

increase their mathematical awareness and<br />

problem-solving skills, universities are<br />

introducing courses or seminars that explore<br />

puzzle-based learning. The authors introduce<br />

and define this learning approach, describe<br />

course variations, and highlight early student<br />

feedback.<br />

PERSPECTIVES<br />

29 Using Codes of Conduct<br />

to Resolve Legal Disputes<br />

Peter Aiken, Robert M. Stanley, Juanita<br />

Billings, and Luke Anderson<br />

In the absence of other published standards of<br />

care, it is reasonable for contractual parties to<br />

rely on an applicable, widely available code of<br />

conduct to guide expectations.<br />

COVER FEATURES<br />

35 Open Cirrus: A Global<br />

Cloud Computing Testbed<br />

Arutyun I. Avetisyan, Roy Campbell, Indranil<br />

Gupta, Michael T. Heath, Steven Y. Ko,<br />

Gregory R. Ganger, Michael A. Kozuch,<br />

David O’Hallaron, Marcel Kunze, Thomas T.<br />

Kwan, Kevin Lai, Martha Lyons, Dejan S.<br />

Milojicic, Hing Yan Lee, Yeng Chai Soh,<br />

Ng Kwang Ming, Jing-Yuan Luke,<br />

and Han Namgoong<br />

Open Cirrus is a cloud computing testbed<br />

that, unlike existing alternatives, federates<br />

distributed data centers. It aims to spur<br />

innovation in systems and applications<br />

research and catalyze development of an<br />

open source service stack for the cloud.<br />

http://computer.org/computer<br />

For more information on computing topics, visit the Computer Society Digital Library at www.computer.org/csdl.<br />

44 To Lease or Not to Lease<br />

from Storage Clouds<br />

Edward Walker, Walter Brisken,<br />

and Jonathan Romney<br />

Storage clouds are online services for leasing<br />

disk storage. A new modeling tool,<br />

formulated from empirical data spanning<br />

many years, lets organizations rationally<br />

evaluate the benefit of using storage clouds<br />

versus purchasing hard disk drives.<br />

51 Cloud Computing for Mobile<br />

Users: Can Offloading<br />

Computation Save Energy?<br />

Karthik Kumar and Yung-Hsiang Lu<br />

The cloud heralds a new era of computing<br />

where application services are provided<br />

through the Internet. Cloud computing can<br />

enhance the computing capability of mobile<br />

systems, but is it the ultimate solution for<br />

extending such systems’ battery lifetimes?<br />

RESEARCH FEATURE<br />

57 Linking Software<br />

Development and<br />

Business Strategy<br />

through Measurement<br />

Victor R. Basili, Mikael Lindvall, Myrna<br />

Regardie, Carolyn Seaman, Jens Heidrich,<br />

Jürgen Münch, Dieter Rombach,<br />

and Adam Trendowicz<br />

The GQM+Strategies approach extends the<br />

goal/question/metric paradigm for<br />

measuring the success or failure of goals and<br />

strategies, adding enterprise-wide support<br />

for determining action on the basis of<br />

measurement results. An organization can<br />

thus integrate its measurement program<br />

across all levels.<br />

IEEE Computer Society: http://computer.org<br />

Computer: http://computer.org/computer<br />

computer@computer.org<br />

IEEE Computer Society Publications Office: +1 714 821 8380<br />

Cover image © Lolaferari Dreamstime.com<br />

6 The Known World<br />

Utter Chaos<br />

David Alan Grier<br />

10 32 & 16 Years Ago<br />

Computer, April 1978 and 1994<br />

Neville Holmes<br />

NEWS<br />

13 Technology News<br />

Will HTML 5 Restandardize the Web?<br />

Steven J. Vaughan-Nichols<br />

16 News Briefs<br />

Linda Dailey Paulson<br />

MEMBERSHIP NEWS<br />

66 IEEE Computer Society<br />

Connection<br />

69 Call and Calendar<br />

COLUMNS<br />

76 AI Redux<br />

Probabilistic Analysis of an Ancient<br />

Undeciphered Script<br />

Rajesh P.N. Rao<br />

81 Software Technologies<br />

When TV Dies, Will It Go to the Cloud?<br />

Karin Breitman, Markus Endler, Rafael<br />

Pereira, and Marcello Azambuja<br />

Flagship Publication of the IEEE<br />

Computer Society<br />

April 2010, Volume 43, Number 4<br />

84 Invisible Computing<br />

Questioning Invisibility<br />

Leah Buechley<br />

87 Education<br />

Cutting Across the Disciplines<br />

Jim Vallino<br />

90 Security<br />

Integrating Legal and Policy Factors in<br />

Cyberpreparedness<br />

James Bret Michael, John F. Sarkesain,<br />

Thomas C. Wingfield, Georgios Dementis,<br />

and Gonçalo Nuno Baptista de Sousa<br />

96 The Profession<br />

Computing a Better World<br />

Kai A. Olsen<br />

DEPARTMENTS<br />

4 Elsewhere in the CS<br />

71 Computer Society Information<br />

72 Career Opportunities<br />

74 Advertiser/Product Index<br />

93 Bookshelf<br />

Reuse Rights and Reprint Permissions: Educational or personal use of this<br />

material is permitted without fee, provided such use: 1) is not made for profit;<br />

2) includes this notice and a full citation to the original work on the first page of<br />

the copy; and 3) does not imply IEEE endorsement of any third-party products<br />

or services. Authors and their companies are permitted to post their IEEE-copyrighted material on<br />

their own Web servers without permission, provided that the IEEE copyright notice and a full citation<br />

to the original work appear on the first screen of the posted copy.<br />

Permission to reprint/republish this material for commercial, advertising, or promotional purposes<br />

or for creating new collective works for resale or redistribution must be obtained from the IEEE by<br />

writing to the IEEE Intellectual Property Rights Office, 445 Hoes Lane, Piscataway, NJ 08854-4141<br />

or pubs-permissions@ieee.org. Copyright © 2010 IEEE. All rights reserved.<br />

Abstracting and Library Use: Abstracting is permitted with credit to the source. Libraries are permitted<br />

to photocopy for private use of patrons, provided the per-copy fee indicated in the code at<br />

the bottom of the first page is paid through the Copyright Clearance Center, 222 Rosewood Drive,<br />

Danvers, MA 01923.<br />

IEEE prohibits discrimination, harassment, and bullying. For more information, visit www.ieee.org/web/aboutus/whatis/policies/p9-26.html.<br />

ELSEWHERE IN THE CS<br />

Computer Highlights Society Magazines<br />

The IEEE Computer Society offers a lineup<br />

of 13 peer-reviewed technical magazines<br />

that cover cutting-edge topics in computing<br />

including scientific applications, design and<br />

test, security, Internet computing, machine intelligence,<br />

digital graphics, and computer history. Select articles from<br />

recent issues of Computer Society magazines are highlighted<br />

below.<br />

The March/April issue of Software features an overview<br />

of tools and technologies for improved collaboration titled<br />

“Collaboration Tools for Global Software Engineering,” by<br />

Filippo Lanubile, Christof Ebert, Rafael Prikladnicki, and<br />

Aurora Vizcaíno. Effective tool support for collaboration<br />

is a strategic initiative for any company with distributed<br />

resources, no matter whether the strategy involves offshore<br />

development, outsourcing, or supplier networks. Software<br />

needs to be shared, and appropriate tool support is the only<br />

way to do this efficiently, consistently, and securely.<br />

No single solution has yet emerged to unify communications<br />

among the various and proliferating messaging<br />

systems available today. In “Understanding Unified Messaging,”<br />

in the January/February issue of IT Pro, Clinton M.<br />

Banner of Alcatel-Lucent surveys the issues and solutions—<br />

both proprietary and open—that are in development.<br />

“Integrating Visualization and Interaction Research to<br />

Improve Scientific Workflows,” by the University of Minnesota’s<br />

Daniel F. Keefe, identifies three goals—improving<br />

accuracy, linking multiple visualization strategies, and<br />

making data analysis more fluid—that serve as a guide<br />

for future interactive-visualization research targeted<br />

at improving visualization tools’ impact on scientific<br />

workflows.<br />

The article, which appears in the March/April issue of<br />

CG&A, also offers four examples that illustrate the potential<br />

and challenges of integrating visualization research and<br />

interaction research: 3D selection techniques in brain visualizations,<br />

interactive multiview scientific visualizations,<br />

fluid pen- and touch-based interfaces for visualization, and<br />

modeling human performance in interactive visualization-related<br />

tasks.<br />

The Cell Broadband Engine is a heterogeneous chip<br />

multiprocessor that combines a PowerPC processor core<br />

with eight single-instruction, multiple-data accelerator<br />

cores and delivers high performance on many computationally<br />

intensive codes. “Application Acceleration with the<br />

Cell Broadband Engine” by Guochun Shi, Volodymyr Kindratenko,<br />

Frederico Pratas, Pedro Trancoso, and Michael<br />

Gschwind appears in the January/February issue of CiSE.<br />

Demand-response systems provide detailed power-consumption<br />

data to utilities and those angling to assist<br />

consumers in understanding and managing energy use.<br />

Such data reveals information about in-home activities that<br />

can be mined and combined with other readily available<br />

information to discover more about occupants’ activities.<br />

“Inferring Personal Information from Demand-Response<br />

Systems” by Mikhail Lisovich, Deirdre Mulligan, and Stephen<br />

Wicker appears in the January/February issue of S&P.<br />

The March/April issue of Intelligent Systems focuses<br />

on new paradigms in business and market intelligence.<br />

The emergence of user-generated Web 2.0 content offers<br />

opportunities to listen to the voice of the market as articulated<br />

by a vast number of business constituents. An<br />

overview in this issue of IS includes five short articles by<br />

distinguished experts on today’s trends in business and<br />

market intelligence. Each article presents a unique, innovative<br />

research framework, computational methods, and<br />

selected results and examples.<br />

Published by the IEEE Computer Society 0018-9162/10/$26.00 © 2010 IEEE<br />

In the world of Web 2.0, Internet 2, and open systems,<br />

most learning is still done in traditional classrooms. As<br />

education costs continue to far outpace inflation, what is<br />

e-learning’s role? In the March/April issue of Internet Computing,<br />

Stephen Ruth of George Mason University addresses<br />

these questions in “Is E-Learning Really Working? The<br />

Trillion Dollar Question.”<br />

Spectacles is a hardware/software platform developed<br />

from off-the-shelf components and ready for market. It<br />

includes local computation and communication facilities,<br />

an integrated power supply, and modular system building<br />

blocks such as sensors, voice-to-text and text-to-speech<br />

components, localization and positioning units, and microdisplay<br />

units. Its see-through display components are<br />

integrated into eyeglass frames. “Wearable Displays—for<br />

Everyone!” by Alois Ferscha and Simon Vogl appears in the<br />

January-March issue of PvC.<br />

The January/February issue of Micro continues a<br />

seven-year tradition of featuring top picks from computer<br />

architecture conferences. Guest editor Trevor Mudge of<br />

the University of Michigan participated in a program committee<br />

of 31 computer architects from both industry and<br />

academia. The committee reviewed 91 submissions, selecting<br />

13 papers for abridgment in the magazine. Topics range<br />

from practical prefetching to application-domain-specific<br />

computing and nonvolatile memory.<br />

Current media-synchronization techniques range from<br />

the very theoretical to the very practical. In “Modeling<br />

Media Synchronization with Semiotic Agents,” researchers<br />

from the City University of Hong Kong and the University of<br />

Reading describe a modeling technique for mapping theoretical<br />

models to system implementations. The technique<br />

combines agent technology with semiotics to offer a sound<br />

theoretical framework for expressing and manipulating<br />

media-synchronization attributes in real-world applications.<br />

Read more in the January-March issue of Multimedia.<br />

As CMOS technology scales down to the nanometer<br />

range, variation control in semiconductor manufacturing<br />

becomes ever more challenging. Introducing D&T’s March/<br />

April special issue in “Compact Variability Modeling in<br />

Scaled CMOS Design,” guest editors Yu Cao of Arizona State<br />

University and Frank Liu of IBM Austin Research Laboratory<br />

preview five articles that address these challenges.<br />

An article by Fred Brooks, renowned computer scientist<br />

and author of The Mythical Man-Month, opens the first issue<br />

of Annals in 2010. In “Stretch-ing Is Great Exercise—It Gets<br />

You in Shape to Win,” Brooks recounts the history of the<br />

IBM Stretch Project (1955-1961). Although the company lost<br />

$35 million on the project in 1960, Brooks describes how<br />

Stretch drove technologies that enabled the rapid development<br />

of IBM’s successful 7000-series computers and the<br />

architectural innovations of its System/360 product family.<br />

Editor: Bob Ward, Computer; bnward@computer.org<br />

http://computer.org/cn/ELSEWHERE<br />

April Theme:<br />

AGILITY AND<br />

ARCHITECTURE<br />

APRIL 2010<br />

THE KNOWN WORLD<br />

Utter Chaos<br />

David Alan Grier, George Washington University<br />

No matter what we may believe, scientific practice often begins with<br />

a season when we don’t know what we know.<br />

As far as I can tell, they live<br />

lives of utter chaos. The<br />

three of them, all young<br />

physicians who seem<br />

no older than 16, seize<br />

control of the locker room every<br />

morning. They apparently finish a<br />

rather raucous game just as I arrive<br />

to begin my swim.<br />

They typically appear with an<br />

explosion of noise, a clash of locker<br />

doors, and a litter of sweaty athletic<br />

clothes that bear the seals of expensive<br />

and exclusive institutions of<br />

higher education. They talk as if no<br />

one else is listening, as if they were<br />

standing in the most private seminar<br />

room of the local hospital, albeit<br />

standing buck naked, dripping wet,<br />

and yelling for no good reason.<br />

Surprisingly, they talk not of<br />

women or sports or one of the few<br />

topics of male discourse but of medicine.<br />

They pay inordinate attention<br />

to every corner and fold of their<br />

own bodies and speculate on what<br />

signs of good or ill they find. If you<br />

believe their words, these young doctors<br />

are the most medicated human<br />

beings on the planet. They spend an<br />

unusual amount of time talking about<br />

the medicines that they prescribe<br />

for themselves in much the same<br />

way that a small child will discuss<br />

the color of a recently encountered<br />

puppy. One can be grateful that these<br />

physicians know the effects of their<br />

own medicine but simultaneously<br />

worried that these pharmaceuticals<br />

will impair their judgment when they<br />

recommend some treatment.<br />

For the most part, these young<br />

men talk about their cases and the<br />

treatments that they recommend.<br />

Although most of the medical terms<br />

are a complete mystery to the rest of<br />

us, we can all tell that these are young<br />

doctors who don’t know yet how to<br />

be professional physicians. They’re<br />

a little too offended when someone<br />

questions their judgment. They give<br />

far too many details about their<br />

patients and make it far too easy for<br />

the rest of us to identify, or at least<br />

imagine, the individual in question.<br />

These young physicians, in spite<br />

of their enthusiasm, their elite educations,<br />

and their practical experience<br />

with drugs, are still learning how to<br />

organize and discipline their practice.<br />

They’re living a medicine of<br />

utter chaos. Their conversation cycles<br />

around the various bodily systems,<br />

maps every symptom to every possible<br />

cause imaginable, and identifies<br />

extraordinary lists of information that<br />

they would like to extract from their<br />

patients.<br />

Even with this chaos, they’re still<br />

in love with their profession and still<br />

excited to encounter new problems.<br />

Yet they don’t realize that they’re<br />

only now learning to discipline their<br />

professional activity, to build a framework<br />

that will guide their work. Well<br />

before they reach the age of 30, they<br />

will learn to organize their professional<br />

knowledge into a structure that<br />

will guide the rest of their lives.<br />

UNINHIBITED SPECULATION<br />

We usually live so completely<br />

within our professional knowledge<br />

that we remember the effort required<br />

to discipline our thoughts only when<br />

we approach a topic that is new to us.<br />

New ideas can dislocate that mental<br />

structure, that vague semantic network,<br />

which gives us a foundation in<br />

the field.<br />

Over the past three months, I have<br />

had many opportunities to demonstrate<br />

that a career in computer<br />

science can still leave you grossly<br />

ignorant of many subjects and completely<br />

disoriented as you try to learn<br />

the basics of a new topic. The search<br />

tools of modern digital libraries give<br />

you little guidance in this work. You<br />

can retrieve hundreds of articles that<br />

seem relevant, spend hours reading<br />

material about some peripheral bit<br />

of research, and never find that key<br />

document describing the basic concepts<br />

of the field.<br />

After years of doing this kind of<br />

research, I’ve learned that all new<br />

fields of scientific endeavor will go<br />

through periods of utter chaos before<br />

they settle into a regular discipline<br />

with well-defined terms, stable categories,<br />

and general methods. The task<br />

of any researcher is to work through<br />

the early literature and find that key<br />

moment when ideas begin to solidify.<br />

THE CHAOS OF<br />

RÖNTGEN’S X-RAYS<br />

The early days of physics produced<br />

many fields that began in chaos and<br />

moved quickly into order. Few present<br />

a more dramatic story than the discovery<br />

of X-rays by Wilhelm Röntgen<br />

in late 1895. To both scientists and the<br />

public, X-rays seemed to be an entirely<br />

new phenomenon that didn’t exactly<br />

fit into the theories of electricity and<br />

magnetism that had been maturing<br />

over the prior 50 years.<br />

Röntgen discovered X-rays in<br />

early November and was mystified<br />

by them. Being a careful scientist, he<br />

attempted to identify the basic nature<br />

of this radiation. He assembled his<br />

notes from this work into an article<br />

that is little more than a list of observations.<br />

“A Discharge from a large<br />

induction coil is passed through a<br />

Hittorf’s vacuum tube,” he begins.<br />

This apparatus will cause a piece of<br />

paper covered with barium platinocyanide<br />

to glow. “The fluorescence is<br />

still visible at two meters distance,”<br />

he wrote. “It is easy to show that the<br />

origin of the fluorescence lies within<br />

the vacuum tube.”<br />

In the last paragraphs of the article,<br />

Röntgen admitted that he didn’t have<br />

a theory that explained the properties<br />

of X-rays. He rejected as “unlikely” the<br />

idea that they were a form of electromagnetic<br />

radiation and hence related<br />

to visible light. Instead, he speculated<br />

that his discovery was an entirely new<br />

form of energy. “Should not the new<br />

rays,” he asked, “be ascribed to longitudinal<br />

waves in the ether?”<br />

Had X-rays been less novel, had<br />

they not been able to pass through<br />

soft human tissue and reveal the<br />

hidden bones, then Röntgen’s speculations<br />

about the luminiferous ether<br />

and longitudinal waves would have<br />

percolated through the community<br />

of physicists and would have been<br />

tested in a relatively systematic way.<br />

However, X-rays were indeed novel,<br />

and they could reveal the inner parts<br />

of the human body, so Röntgen’s<br />

ideas quickly moved into the laboratory<br />

and living room.<br />

THE EXCITEMENT<br />

OF THE POPULAR PRESS<br />

Barely four weeks after the original<br />

article appeared, news of this<br />

strange new ray reached all the<br />

capitals of Europe. “The invention of<br />

neither the telephone nor the phonograph<br />

has stirred up such scientific<br />

excitement as this Röntgen discovery<br />

in photography,” reported the London<br />

correspondent for The New York<br />

Times. “The papers everywhere are<br />

full of reports of experiments, and of<br />

reproductions of more or less ghastly<br />

anatomical pictures.”<br />

In general, the Times and other<br />

newspapers offered accurate descriptions<br />

of the experiments and the<br />

phenomena that had been observed.<br />

Yet, the papers weren’t scholarly<br />

journals and couldn’t distinguish<br />

well-conceived theory from rampant<br />

assumption. They speculated about<br />

what Röntgen meant when he mentioned<br />

“longitudinal waves in the<br />

ether” and listened to everyone who<br />

claimed credit for the discovery. “A<br />

French savant is said to have been<br />

securing similar results for several<br />

years by the use of an ordinary kerosene<br />

lamp,” offered the Times, which<br />

also claimed that it had found “that<br />

this discovery was not only made by<br />

a Prague professor in 1885” and that<br />

“a full report of the achievement was<br />

made to the Austrian Academy of Sciences<br />

in 1885.”<br />

ORGANIZING IGNORANCE<br />

Surprisingly, the popular press<br />

wasn’t alone in publishing speculation.<br />

The discovery of X-rays<br />

created a massive wave of papers<br />

that crashed on the scholarly community<br />

that winter. More than 125<br />

appeared before the first anniversary<br />

of the discovery. Some were good.<br />

Some were poorly conceived and ill<br />

written. Some identified the major<br />

applications for the waves. Some<br />

wandered into strange and unsupported<br />

speculations.<br />

The articles appeared so quickly<br />

that most could have been reviewed<br />

only by an editor and not an outside<br />

referee. Researchers would read a<br />

description of X-rays in a newspaper,<br />

conduct an experiment, and see their<br />

results published in fewer than six<br />

weeks. Many of these articles were<br />

part of a growing chain of work, in<br />

which one article spawns new experiments,<br />

which in turn spawn new<br />

work. Unfortunately, most of these<br />

researchers were engaged in speculation<br />

that was no better informed<br />

than the newspapers. On 14 February<br />

1896, Columbia physicist Michael<br />

Pupin published a simple framework<br />

for studying these waves, but his<br />

ideas had limited influence in those<br />

first months.<br />

Less than two weeks after Pupin’s<br />

paper appeared, a pair of researchers<br />

built a simple X-ray apparatus and<br />

started applying it to everything that<br />

they could find. A colleague asked<br />

them to “undertake the location of a<br />

bullet in the head of a child that had<br />

been accidentally shot.” They agreed<br />

to do it but had enough doubts about<br />

the process to test the safety of their<br />

machine. “Accordingly, Dr. Dudley,<br />

with his characteristic devotion to<br />

the cause of science,” explained the<br />

report, “lent himself to the experiment.”<br />

They taped a coin to one side<br />

of his head, placed a photographic<br />

plate on the other, and exposed him<br />

to the X-ray machine for an hour.<br />

The experiment produced no<br />

useful outcome, but it may have<br />

spared the child. “The plate developed<br />

nothing,” they reported. But<br />

three weeks after the experiment,<br />

Dudley lost all of his hair in the area<br />

where the X-rays had been directed.<br />

“The spot is perfectly bald, being two<br />

inches in diameter,” they noted. “We,<br />

and especially Dr. Dudley, shall watch<br />

with interest the ultimate effect.”<br />

THE ELECTRICAL ENGINEERS<br />

START ORGANIZING<br />

Twelve full months passed before<br />

the scientific community began to<br />

develop a coherent approach to the<br />

study of X-rays. The key article is a<br />

report from the American Institute<br />

of Electrical Engineers (AIEE) that<br />

appeared in December 1896. “We<br />

were very much surprised, something<br />

like a year ago, by this very great discovery,”<br />

the report begins, “but we<br />

cannot say that we know very much<br />

more about it now than we did then.<br />

The whole world seems to have been<br />

working on it for all this time without<br />

having discovered a great deal with<br />

respect to it.”<br />

This report also reveals much<br />

about the nature of scientific practice<br />

at the time. It was a record of a discussion<br />

among a half-dozen senior<br />

scientists, all of whom lived within<br />

easy travel distance to New York and<br />

could easily congregate there for a<br />

meeting. Most important, they represented<br />

most of the major research<br />

universities and, among them, knew<br />

most of the scientists who could<br />

contribute to the field. When they discussed<br />

and debated ideas, they were<br />

speaking for an entire community.<br />

The report is strong and detailed<br />

but a little cautious as none of the participants<br />

wished to offend the others<br />

or give a conclusion that might be<br />

disproved later. The majority of the<br />

group concluded that X-rays were a<br />

form of electromagnetic radiation<br />

and that they “most likely consist of<br />

vibrations of such small wavelengths<br />

as to be comparable with the distances<br />

between molecules of the most<br />

dense substances.” That hypothesis<br />

would be the basis for the serious<br />

study of X-rays.<br />

THE POWER OF PUBLICATION<br />

Scientific periodicals have always<br />

had a central role in the process that<br />

turns chaos into order, although that<br />

role has often changed. At the time<br />

of Röntgen’s discovery, the editors<br />

of the oldest and most prestigious<br />

scientific journal in the English language,<br />

the Philosophical Transactions,<br />

stated that they selected articles for<br />

publication because of “the importance<br />

and singularity of the subjects,<br />

or the advantageous manner of<br />

treating them.” At the same time,<br />

they rejected the idea that they<br />

were validating or proving the science<br />

of any article that appeared in<br />

the Transactions. They didn’t pretend<br />

“to answer for the certainty of the<br />

facts, or propriety of the reasonings<br />

contained in the several papers so<br />

published,” they explained, “which<br />

must still rest on the credit or judgment<br />

of their respective authors.”<br />

The editors could take such a stand<br />

because the scientific community<br />

was still relatively small. Readers had<br />

other information to help them assess<br />

the articles. Moreover, they actually<br />

knew most of the people and laboratories<br />

engaged in active research and<br />

contributing to the field.<br />

The role of periodicals changed<br />

substantially over the next 50 years.<br />

By the middle of the 20th century,<br />

scientific periodicals were acting as<br />

validators—institutions that verified<br />

scientific research. “Science is<br />

moving rapidly,” noted the editor of<br />

the journal Science. He argued that scientific<br />

periodicals “in every discipline<br />

should take their responsibilities with<br />

respect to critical reviews” of every<br />

paper submitted to them. As an editor<br />

of a leading journal, he felt that Science<br />

needed to set the standard for<br />

scientific publication and regularly<br />

published articles that described<br />

the process of peer review, the rules<br />

that should guide reviewers, and the<br />

kinds of judgments that editors should<br />

make.<br />

At the same time that he was promoting<br />

peer review, Science’s editor acknowledged<br />

that the expansion of scientific<br />

research was making it difficult for<br />

editors to validate every article that<br />

reached their desks. “With the great<br />

flood of manuscripts that today’s<br />

editors receive, in every scientific<br />

discipline, it is not possible to spend<br />

so much time and effort debating<br />

details,” he wrote. And as the century<br />

progressed, the flood of manuscripts<br />

only increased.<br />

THE ORIGINAL COLD FUSION<br />

As the waters rose, so rose the calls<br />

for scientific periodicals to test and<br />

validate the papers that they published.<br />

These calls reached a high<br />

point in 1989, when a pair of researchers<br />

announced to the public that they<br />

had made a discovery every bit as dramatic<br />

as the story of X-rays a century<br />

before. Two chemists “started a scientific<br />

uproar last month by asserting<br />

that they had achieved nuclear fusion<br />

at room temperature,” reported The<br />

New York Times. The writer added,<br />

“Many scientists remain skeptical.”<br />

The story of cold fusion lived<br />

for two years as laboratories<br />

tried to make sense of the report. As the<br />

narrative unfolded, it quickly turned into a<br />

debate over the process of validating scientific<br />

ideas. Many claimed that the scientists who had<br />

performed the original experiments had taken<br />

their ideas too quickly to the public press. Their<br />

supporters argued that traditional laboratories<br />

were too skeptical of new ideas and that the<br />

scientific journals wouldn’t protect the patent<br />

rights of the discoverers. As the excitement of<br />

the moment faded and the realities of the story<br />

became clear, most commentators concluded<br />

that the public had not been well served. “Some<br />

scholars lunge too hastily for glory,” editorialized<br />

Times science writer Nicholas Wade. He<br />

told scientists to never announce their claims<br />

“until your manuscript has at least been<br />

accepted for publication in a reputable journal.”<br />

Nothing has become easier since the<br />

cold fusion controversy or those<br />

first exciting days of X-rays. Science<br />

has expanded. The number of<br />

subdisciplines has increased. The<br />

flood of papers has continued to rise, as scientists<br />

from laboratories near and far try to<br />

make a name for themselves. In that flood are<br />

ideas good and bad, breathtakingly original<br />

and utterly derivative. They’re the gift of painstaking<br />

research, the theft by 15 minutes of edits<br />

with a word processor, an instantaneous forgery<br />

by a clever bit of code that mocks the way<br />

that scientists write.<br />

Scientific journals aren’t the<br />

only institutions that attempt to organize the<br />

utter chaos of scientific research. They’re part<br />

of an infrastructure that includes laboratories,<br />

funding agencies, professional societies, review<br />

boards, and other institutions. These organizations<br />

will have to continue to grow and evolve as<br />

editors and publishers grapple with the forces<br />

acting on the scientific community, just as my<br />

young physician friends will have to mature<br />

into wise doctors who know how to draw disciplined<br />

judgment from the anarchy of energetic<br />

speculation.<br />

David Alan Grier is an associate professor of<br />

international science and technology policy at<br />

George Washington University and is the author<br />

of When Computers Were Human (Princeton,<br />

2005) and Too Soon to Tell (Wiley, 2009). Contact<br />

him at grier@computer.org.<br />

32 & 16 YEARS AGO<br />

APRIL 1978<br />

INTERNATIONAL DATA (p. 6) “AFIPS has named a panel<br />

of US experts to study foreign countries’ activities which<br />

restrict the international exchange of information. The<br />

panel is also expected to respond to the State Department’s<br />

position paper, Protecting Privacy in International<br />

Data Processing.”<br />

SOFTWARE QUALITY (p. 10) “For computer software<br />

systems, quality appears to be a characteristic that can<br />

be neither built in with assurance at system creation<br />

time nor retrofitted with certainty after a product is<br />

in use. Apart from the issues of logistics (copying and<br />

distributing software) there appears to be a continuing<br />

need to demonstrate some minimum level of quality in<br />

typical wide-use software systems. At the same time<br />

this appears to be impossible to accomplish: the best<br />

designed systems have had errors revealed many years<br />

after introduction, and several programs that had been<br />

publicly ‘proved correct’ contained outright mistakes!”<br />

SOFTWARE REVALIDATION (p. 14) “The problems of revalidating<br />

software following maintenance have received<br />

little attention, yet typically 70 percent of the effort<br />

expended on software occurs as program maintenance.<br />

There are two reasons to perform maintenance on a<br />

software system: to correct a problem or to modify the<br />

capabilities of the system. Revalidation must verify the<br />

corrected or new capability, and also verify that no other<br />

capability of the system has been adversely affected<br />

by the modification. Revalidation is facilitated by good<br />

documentation and a system structure in which functions<br />

are localized to well-defined modules.”<br />

ERROR DETECTION (p. 25) “It is well known that one<br />

cannot find all the errors in a program simply by testing<br />

it for a set of input data. Nevertheless, program testing is<br />

the most commonly used technique for error detection<br />

in today’s software industry. Consequently, the problem<br />

of finding a program test method with increased error-detection<br />

capability has received considerable attention<br />

in the field of software research.<br />

“There appear to be two major approaches to this problem.<br />

One is to find better criteria for test-case selection. The<br />

other is to find a way to obtain additional information (i.e.,<br />

information other than that provided by the output of the<br />

program) that can be used to detect errors.”<br />

PROGRAM TESTING (p. 41) “So, there is certainly no need<br />

to apologize for applying ad hoc strategies in program<br />

testing. A programmer who considers his problems well<br />

and skillfully applies appropriate techniques—regardless<br />

of where the techniques arise—will succeed.”<br />

SYMBOLIC PROGRAM EXECUTION (p. 51) “The notion of symbolically<br />

executing a program follows quite naturally from normal<br />

program execution. First assume that there is a given programming<br />

language (say, Algol-60) and the normal definition of program execution for<br />

that language. One can extend that definition to provide<br />

a symbolic execution in the same manner as one extends<br />

arithmetic over numbers to symbolic algebraic operations<br />

over symbols and numbers. The definition of the symbolic<br />

execution is such that trivial cases involving no symbols<br />

are equivalent to normal executions, and any information<br />

learned in a symbolic execution applies to the corresponding<br />

normal executions as well.”<br />
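
The idea in this excerpt can be illustrated with a minimal sketch. It is not taken from the 1978 article: the `Sym` class and the two-line `program` function are hypothetical names chosen for illustration, and the sketch covers only straight-line arithmetic, with no branches or path conditions. It runs the same function once on numbers and once on symbols, so the symbolic result describes every corresponding normal execution.

```python
def _text(value):
    """Render a symbolic or concrete operand as text."""
    return value.expr if isinstance(value, Sym) else str(value)


class Sym:
    """Hypothetical symbolic value that records the expression that produced it."""

    def __init__(self, expr):
        self.expr = expr

    def __add__(self, other):
        return Sym(f"({_text(self)} + {_text(other)})")

    def __radd__(self, other):
        return Sym(f"({_text(other)} + {_text(self)})")

    def __mul__(self, other):
        return Sym(f"({_text(self)} * {_text(other)})")

    def __rmul__(self, other):
        return Sym(f"({_text(other)} * {_text(self)})")


def program(x, y):
    """An ordinary program: it works unchanged on numbers or on symbols."""
    total = x + y
    return total * 2


concrete = program(3, 4)                 # normal execution on numbers
symbolic = program(Sym("a"), Sym("b"))   # symbolic execution on symbols
print(concrete)
print(symbolic.expr)
```

Substituting a = 3 and b = 4 into the symbolic expression gives the value of the normal execution, which is the correspondence the excerpt describes.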

COMPONENT PROGRESS (p. 64) “It is clear, looking back,<br />

that progress in component performance, size, cost, and<br />

reliability has been reflected in corresponding improvements<br />

in systems. Looking forward then, an analysis of<br />

coming developments in logic and memory components<br />

appears to be one approach to forecasting future system<br />

characteristics. Prediction at the system level, however,<br />

is complicated by various options in machine organization,<br />

such as parallel processing and pipelining, which<br />

interact with component features to establish the performance<br />

limits of the system.”<br />

HEMISPHERICAL KEYBOARD (p. 99) “A new hemispherical-shaped<br />

typing keyboard, called the Writehander, permits<br />

typing all 128 characters of the ASCII code with<br />

one hand.<br />

“To use the keyboard, the typist places his four fingers<br />

on four press-switches and his thumb on one of eight<br />

press-switches. The four finger-switches operate as the<br />

lower four bits of the 7-bit ASCII code, selecting one of<br />

16 character groups which contain the desired character.<br />

Each group contains eight letters, numerals, or symbols.<br />

The thumb then presses one switch to select the desired<br />

character from the group of eight. The shape and switch<br />

locations have been designed so that the fingers naturally<br />

locate themselves on the switches, according to the<br />

designer, Sid Owen.”<br />
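
The arithmetic behind this scheme can be sketched briefly. The excerpt says the finger switches supply the lower four bits of the 7-bit code and the thumb picks one of eight characters in the group. The sketch below assumes, without confirmation from the source, that the thumb switch index maps directly onto the upper three bits; the function names are hypothetical.

```python
def writehander_code(finger_bits, thumb_index):
    """Combine four finger bits and a thumb choice into a 7-bit ASCII code.

    Assumption: thumb switch n (0-7) supplies the upper three bits directly.
    """
    if not 0 <= finger_bits <= 0b1111:
        raise ValueError("finger_bits must fit in four bits")
    if not 0 <= thumb_index <= 7:
        raise ValueError("thumb_index must be between 0 and 7")
    return (thumb_index << 4) | finger_bits


def group_members(finger_bits):
    """List the eight codes in the group selected by one finger chord."""
    return [writehander_code(finger_bits, thumb) for thumb in range(8)]


# 16 finger chords x 8 thumb switches cover all 128 ASCII codes exactly once.
all_codes = {writehander_code(f, t) for f in range(16) for t in range(8)}
print(len(all_codes))
print(chr(writehander_code(0b0001, 4)))
```

Under this assumed mapping, each group mixes control characters, punctuation, numerals, and letters, because its eight members are spaced 16 code points apart.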

PDFs of the articles and departments of the April issues of<br />

Computer for 1978 and 1994 are available through the IEEE<br />

Computer Society’s website: www.computer.org/computer.<br />

APRIL 1994<br />

ATM (p. 8) “This year is an important one for asynchronous<br />

transfer mode. The technology now has the backing<br />

of vendors.<br />

“Membership of the ATM Forum, a consortium formed<br />

in October 1991 to accelerate the development and implementation<br />

of ATM products and services, has risen to 465<br />

with new faces from the likes of Microsoft and Novell.<br />

According to Fred Sammartino, forum president, 1994<br />

will be the year many companies set up serious trial<br />

implementations with intent to later incorporate the<br />

technology.”<br />

MBONE (p. 30) “Short for Multicast Backbone, MBone is<br />

a virtual network that has been in existence since early<br />

1992. It was named by Steve Casner of the University of<br />

Southern California Information Sciences Institute and<br />

originated from an effort to multicast audio and video<br />

from meetings of the Internet Engineering Task Force.<br />

Today, hundreds of researchers use MBone to develop<br />

protocols and applications for group communication.<br />

Multicast provides one-to-many and many-to-many<br />

network delivery services for applications such as<br />

videoconferencing and audio where several hosts need<br />

to communicate simultaneously.”<br />

MOBILE COMPUTING (p. 38) “Recent advances in technology<br />

have provided portable computers with wireless<br />

interfaces that allow networked communication even<br />

while a user is mobile. Whereas today’s first-generation<br />

notebook computers and personal digital assistants<br />

(PDAs) are self-contained, networked mobile computers<br />

are part of a greater computing infrastructure. Mobile<br />

computing—the use of a portable computer capable of<br />

wireless networking—will very likely revolutionize the<br />

way we use computers.”<br />

TELEROBOTICS (p. 49) “The significant communication<br />

latency between an earth-based local site and an<br />

on-orbit remote site drove much of the Steler [Supervisory<br />

Telerobotics Laboratory (at the Jet Propulsion<br />

Laboratory)] design. Such a large latency precludes<br />

direct real-time control of the robot in what nonetheless<br />

remains a real-time operation. To meet these<br />

real-time control requirements, our group devised<br />

a control scheme similar to the one used to control<br />

spacecraft.… Instead of transmitting programs to the<br />

remote site, we transmit command blocks (a set of data<br />

parameters) that control the execution of the remote<br />

site software that provides task-level control. This<br />

allows the remote site to handle a variety of control<br />

modes without requiring changes in the remote site<br />

software.”<br />

DIGITAL SOUPS (p. 65) “Last August, Apple Computer<br />

released a much-talked-about new computer called the<br />

Newton MessagePad. It’s the first platform in a series of<br />

Newton products that fall under the term personal digital<br />

assistant (Sharp’s ExpertPad is another). Although Apple<br />

doesn’t describe ‘the Newton’ as a computer, it’s actually<br />

an advanced system that includes handwriting recognition,<br />

full memory management and protection, and<br />

preemptive multitasking. But one feature that the MessagePad<br />

doesn’t have is files. The Newton stores similar<br />

data objects in formats called ‘soups.’ These soups are<br />

available to all applications.”<br />

SOFTWARE STANDARDS (p. 68) “The International<br />

Organization for Standardization is developing a suite<br />

of standards on software process under the rubric of<br />

Spice, an abbreviation for Software Process Improvement<br />

and Capability Determination. Spice was inspired<br />

by numerous efforts on software process around the<br />

world, including the Software Engineering Institute’s<br />

work with the Capability Maturity Model (CMM), Bell<br />

Canada’s Trillium, and ESPRIT’s Bootstrap.”<br />

VIDEOCONFERENCING (p. 96) “For the past two years,<br />

United Technologies Corp. (UTC) has been experimenting<br />

with desktop video communications in its Topdesc (Total<br />

Personal Desktop Communications) program, which has<br />

the goals of completeness and convenience: allowing<br />

separated personnel to communicate as completely and<br />

easily as if they were at the same location, and doing<br />

so whenever and from wherever it is convenient. This<br />

means that parties should be able to see objects and<br />

documents and collaborate easily using computer data.<br />

In addition, the communications unit must be sized and<br />

priced to fit into an office or lab.”<br />

THE SOFTWARE CRISIS (p. 104) “There’s been so much<br />

talk about a ‘software crisis’ over the past decade or two<br />

that you’d think software practitioners were the original<br />

Mr. Bumble, barely able to program their way out of a<br />

simple application problem. But when I look around, I see<br />

a world in which computers and the software that drives<br />

them are dependable and indispensable. They make my<br />

plane reservations, control my banking transactions, and<br />

send people into space. They even wage war—in entirely<br />

new and apparently successful ways.”<br />

Editor: Neville Holmes; neville.holmes@utas.edu.au<br />

TECHNOLOGY NEWS<br />

Will HTML 5<br />

Restandardize<br />

the Web?<br />

Steven J. Vaughan-Nichols<br />

The World Wide Web Consortium is developing HTML 5 as a standard<br />

that provides Web users and developers with enhanced functionality<br />

without using the proprietary technologies that have<br />

become popular in recent years.<br />

In theory, the Web is a resource<br />

that is widely and uniformly<br />

usable across platforms. As<br />

such, many of the Web’s<br />

key technologies and architectural<br />

elements are open and<br />

platform-independent.<br />

However, some vendors have<br />

developed their own technologies<br />

that provide more functionality than<br />

Web standards—such as the ability to<br />

build rich Internet applications.<br />

Adobe Systems’ Flash, Apple’s<br />

QuickTime, and Microsoft’s Silverlight<br />

are examples of such proprietary<br />

formats.<br />

In addition, Google’s Gears and<br />

Oracle’s JavaFX—which the company<br />

acquired along with Sun Microsystems—have<br />

technologies that enable<br />

creation of offline and client-side Web<br />

applications.<br />

Although these approaches provide<br />

additional capabilities, they have<br />

also reduced the Web’s openness and<br />

platform independence, and tend to<br />

lock in users to specific technologies<br />

and vendors.<br />

In response, the World Wide Web<br />

Consortium (W3C) is developing<br />

HTML 5 as a single standard that provides<br />

Web users and developers with<br />

enhanced functionality without using<br />

proprietary technologies.<br />

Indeed, pointed out Google<br />

researcher Ian Hickson, one of the<br />

W3C’s HTML 5 editors, “One of our<br />

goals is to move the Web away from<br />

proprietary technologies.”<br />

The as-yet-unapproved standard<br />

takes HTML from simply describing<br />

the basics of a text-based Web to creating<br />

and presenting animations, audio,<br />

mathematical equations, typefaces,<br />

and video, as well as providing offline<br />

functionality. It also enables geolocation,<br />

a rich text-editing model, and<br />

local storage in client-side databases.<br />

The Web isn’t just about reading<br />

the text on the page and clicking<br />

on the links anymore, noted Bruce<br />

Lawson, standards evangelist at<br />

browser developer Opera Software.<br />

Added W3C director Tim Berners-<br />

Lee, “HTML 5 is still a markup language<br />

for webpages, but the really big<br />

shift that’s happening here—and, you<br />

could argue, what’s actually driving<br />

the fancy features—is the shift to the<br />

Web [supporting applications].”<br />

“HTML 5 tries to bring HTML into<br />

the world of application development,”<br />

explained Microsoft senior<br />

principal architect Vlad Vinogradsky.<br />

“Microsoft is investing heavily in<br />

the W3C HTML 5 effort, working with<br />

our competitors and the Web community<br />

at large. We want to implement<br />

ratified, thoroughly tested, and stable<br />

standards that can help Web interoperability,”<br />

said Paul Cotton, cochair of<br />

the W3C HTML Working Group and<br />

Microsoft’s group manager for Web<br />

services standards and partners in<br />

the company’s Interoperability Strategy<br />

Team.<br />

At the same time though, Web<br />

companies say their proprietary technologies<br />

are already up and running,<br />

unlike HTML 5.<br />

Adobe vice president of developer<br />

tools Dave Story said, “The HTML 5<br />

timeline states that it will be at least a<br />

decade before the evolving efforts are<br />

finalized, and it remains to be seen<br />

what parts will be implemented consistently<br />

across all browsers.”<br />

In fact, while HTML 5 recently<br />

became a working draft, it’s not<br />

expected to become even a W3C candidate<br />

recommendation until 2012 or<br />

a final W3C standard until 2022.<br />

Nonetheless, some browser<br />

designers, Web authors, and websites—<br />

such as YouTube—are already adopting<br />

HTML 5 elements. For more information, see the “Playing with<br />

HTML 5” sidebar.<br />

PLAYING WITH HTML 5

Several websites offer a taste of what HTML 5 will bring. Some of the applications work

with only certain browsers.<br />

YouTube’s beta HTML 5 video project (www.youtube.com/html5) works with the standard’s

video tag. Browsers must support the video tag and have a player that uses the<br />

H.264 codec. Otherwise, YouTube will use Flash to play video.<br />

Mozilla Labs’ BeSpin (https://bespin.mozillalabs.com) is an experimental programmer’s

editor that uses a variety of HTML 5 elements.<br />

FreeCiv.net (www.freeciv.net) is an online game by the FreeCiv.net open source project

that supports HTML 5’s Canvas element. HTML 5-compatible browsers display map<br />

changes faster than those that aren’t compatible.<br />

Google Wave (https://wave.google.com/wave), a cross between social networking

and groupware, uses several HTML 5 elements.<br />

Merge Web Design’s HTML 5 Geolocation (http://merged.ca/iphone/html5geolocation)

is, as the name indicates, a demo of HTML 5-based geolocation.<br />

Sticky Notes (http://webkit.org/demos/sticky-notes/index.html) is the WebKit Open

Source Project’s demo of HTML 5’s client-side database storage API. WebKit is an open<br />

source Web browser engine now used by, for example, Apple’s Safari browser.<br />

BACKGROUNDER<br />

HTML is the predominant markup<br />

language for webpages. It uses tags<br />

to create structured documents via<br />

semantics for text—such as headings,<br />

paragraphs, and lists—as well<br />

as for links and other elements. HTML<br />

also lets authors embed images and<br />

objects in pages and can create interactive<br />

forms.<br />

HTML, which stemmed from the<br />

mid-1980s Standard Generalized<br />

Markup Language, first appeared as<br />

about a dozen tags in 1991.<br />

The Internet Engineering Task<br />

Force began the first organized effort<br />

to standardize HTML in 1995 with<br />

HTML 2.0.<br />

The IETF’s efforts to maintain<br />

HTML stalled, so the W3C took over<br />

standardization.<br />

In 1996, the W3C released HTML<br />

3.2, which removed the various proprietary<br />

elements introduced over<br />

time by Microsoft and Netscape.<br />

HTML 4.0, which became a W3C<br />

recommendation in December 1997 and<br />

was revised in April 1998, followed. It<br />

provides mechanisms for style<br />

sheets, scripting, embedded objects,<br />

richer tables, enhanced forms, and<br />

improved accessibility for people<br />

with disabilities.<br />


However, HTML was still primarily<br />

focused on delivering text, not multimedia<br />

or client-based applications.<br />

Because of this, proprietary technologies<br />

such as Apple’s QuickTime<br />

and Microsoft’s multimedia players,<br />

both first released in 1991, and Adobe<br />

Flash, which debuted in 1996, have<br />

been used for video.<br />

Technologies such as Google<br />

Gears and Oracle’s JavaFX, both<br />

first released in 2007, make creating<br />

Web-based desktop-style applications<br />

easier for developers.<br />

HTML 5<br />

The W3C is designing HTML 5 to create<br />

a standard with a feature set that<br />

handles all the jobs that the proprietary<br />

technologies currently perform,<br />

said specification editor Michael<br />

Smith, the consortium’s special-missions-subsection<br />

junior interim floor<br />

manager.<br />

In addition, HTML 5 will support<br />

newer mobile technologies such as<br />

geolocation and location-based services<br />

(LBS), as well as newer open<br />

formats such as scalable vector<br />

graphics. SVG, an open XML-based<br />

file format, produces compact and<br />

high-quality graphics.<br />

Developers would thus be able<br />

to develop rich webpages and Web-based<br />

applications without needing to<br />

master or license multiple proprietary<br />

technologies.<br />

And browsers would be able to do<br />

more without plug-ins.<br />

Canvas. One of HTML 5’s key<br />

new features is Canvas, which lets<br />

developers create and incorporate<br />

graphics, video, and animations, usually<br />

via JavaScript, on webpages.<br />

HTML Canvas 2D Context is an<br />

Apple-originated technology for rendering<br />

2D graphics and animations on<br />

the client rather than on Web servers.<br />

Rendering graphics locally avoids<br />

the bottlenecks of server and bandwidth<br />

restrictions, so<br />

graphics-heavy pages render faster.<br />
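
As a minimal sketch of client-side rendering with Canvas, the TypeScript below draws a simple bar chart using only rectangle calls. The names `RectPainter` and `drawBars` are assumptions made for this illustration; `clearRect` and `fillRect` are the only methods taken from the real 2D context.<br />

```typescript
// Minimal subset of the browser's 2D canvas context used by this sketch.
// The interface name is hypothetical; the two methods exist on the real context.
interface RectPainter {
  clearRect(x: number, y: number, w: number, h: number): void;
  fillRect(x: number, y: number, w: number, h: number): void;
}

// Draws one bar per value, scaled so the largest value fills the full height.
function drawBars(ctx: RectPainter, values: number[], width: number, height: number): void {
  ctx.clearRect(0, 0, width, height);
  const max = Math.max(...values, 1); // avoid dividing by zero for empty or all-zero data
  const barWidth = width / values.length;
  values.forEach((value, i) => {
    const barHeight = (value / max) * height;
    // Canvas y coordinates grow downward, so a bar starts at height - barHeight.
    ctx.fillRect(i * barWidth, height - barHeight, barWidth, barHeight);
  });
}
```

In a browser, the object returned by a canvas element’s `getContext("2d")` call could be passed as `ctx`; all drawing then happens on the client, with no image fetched from the server.<br />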

Video tags. HTML 5’s codec-neutral<br />

video tags provide a way to<br />

include nonproprietary video formats,<br />

such as Ogg Theora and H.264,<br />

in a page.<br />

The tag and underlying code<br />

tell the browser that the associated<br />

information is to be handled as an<br />

HTML 5-compatible video stream.<br />

They would also let users view<br />

video embedded on a webpage without<br />

a specific video player.<br />
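
Because the video tag is codec-neutral, a page typically has to find out which format a given browser can play. The sketch below shows one way to do that, assuming a predicate that behaves like the media element’s `canPlayType` method, which returns an empty string when a type is unsupported. `VideoSource`, `pickSource`, and the file names in the note after it are hypothetical.<br />

```typescript
// One candidate encoding of the same clip (shape assumed for this sketch).
interface VideoSource {
  url: string;
  type: string; // MIME type, optionally with a codecs parameter
}

// Returns the first source the browser reports it can play, or null if none.
// `canPlayType` is expected to mirror the media element method of the same
// name: "" means unsupported, "maybe" or "probably" means worth trying.
function pickSource(
  canPlayType: (type: string) => string,
  sources: VideoSource[]
): VideoSource | null {
  for (const source of sources) {
    if (canPlayType(source.type) !== "") {
      return source;
    }
  }
  return null; // caller falls back to a plug-in player
}
```

A page might list an Ogg Theora file (`clip.ogv`) and an H.264 file (`clip.mp4`) and, when `pickSource` returns null, fall back to a plug-in such as Flash.<br />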

Location-based services. A location<br />

API offers support for mobile<br />

browsers and LBS applications by<br />

enabling interaction with, for example,<br />

GPS technology and data.<br />
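
A location-based service usually combines the device’s position with its own data. The sketch below assumes a provider modelled on the browser’s `navigator.geolocation.getCurrentPosition` callback style and uses the haversine formula to find the closest of several places. The interface names, `findNearest`, and the 6,371-km Earth radius are assumptions made for this illustration.<br />

```typescript
interface Coords { latitude: number; longitude: number; }
interface Place extends Coords { name: string; }

// Modelled on the callback style of the browser geolocation API (assumed shape).
interface LocationProvider {
  getCurrentPosition(onSuccess: (position: { coords: Coords }) => void): void;
}

// Great-circle distance in kilometers, using the haversine formula.
function haversineKm(a: Coords, b: Coords): number {
  const toRad = (deg: number) => (deg * Math.PI) / 180;
  const dLat = toRad(b.latitude - a.latitude);
  const dLon = toRad(b.longitude - a.longitude);
  const h =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(toRad(a.latitude)) * Math.cos(toRad(b.latitude)) * Math.sin(dLon / 2) ** 2;
  return 2 * 6371 * Math.asin(Math.sqrt(h)); // 6371 km: mean Earth radius
}

// Asks the provider for the current position, then reports the closest place.
function findNearest(
  provider: LocationProvider,
  places: Place[],
  done: (nearest: Place | null) => void
): void {
  provider.getCurrentPosition((position) => {
    let best: Place | null = null;
    let bestKm = Infinity;
    for (const place of places) {
      const km = haversineKm(position.coords, place);
      if (km < bestKm) {
        best = place;
        bestKm = km;
      }
    }
    done(best);
  });
}
```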

Working offline. AppCache lets<br />

online applications store data and<br />

programming code locally so that<br />

Web-based programs can work as<br />

desktop applications, even without<br />

an Internet connection.<br />

HTML 5 has several other features<br />

that address building Web applications<br />

that work offline. These include<br />

support for a client-side SQL database<br />

and for offline application and data<br />

caching.<br />

Web applications thus can have<br />

their code, graphics, and data stored<br />

locally.<br />
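
To illustrate how an application declares what should be stored locally, the sketch below generates a cache manifest in the text format that the AppCache draft of this period used: a `CACHE MANIFEST` first line followed by `CACHE:` and `NETWORK:` sections. The function name `buildManifest`, the version comment, and the file names in the note after it are assumptions made for this example.<br />

```typescript
// Builds the text of an AppCache-style manifest.
// `cached` lists resources to store locally; `network` lists resources that
// always require a connection ("*" means everything not explicitly cached).
function buildManifest(version: string, cached: string[], network: string[] = ["*"]): string {
  return [
    "CACHE MANIFEST",
    // Changing this comment changes the file, which prompts browsers to refetch.
    "# version " + version,
    "",
    "CACHE:",
    ...cached,
    "",
    "NETWORK:",
    ...network,
    "",
  ].join("\n");
}
```

For instance, `buildManifest("v1", ["index.html", "app.js"])` yields a manifest that caches the two files and sends every other request to the network.<br />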

Web Workers. The Web Workers<br />

API runs scripts in the background,<br />

where they can’t be interrupted by<br />

other scripts or user interactions.<br />

This speeds up background tasks.<br />
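
The sketch below shows the kind of work a background script might take on, written so that the message handling can be read and tested on its own. The `Job` and `Result` message shapes, the function names, and the prime-counting task are all assumptions chosen for illustration.<br />

```typescript
// Message shapes exchanged between page and worker (assumed for this sketch).
interface Job { id: number; upTo: number; }
interface Result { id: number; primeCount: number; }

// A deliberately CPU-heavy task: count the primes up to a limit by trial division.
function countPrimes(upTo: number): number {
  let count = 0;
  for (let n = 2; n <= upTo; n++) {
    let isPrime = true;
    for (let d = 2; d * d <= n; d++) {
      if (n % d === 0) {
        isPrime = false;
        break;
      }
    }
    if (isPrime) count++;
  }
  return count;
}

// Worker-side handler: do the heavy work, then report back through `post`.
function handleJob(job: Job, post: (result: Result) => void): void {
  post({ id: job.id, primeCount: countPrimes(job.upTo) });
}

// Inside a worker script, this would be wired up roughly as:
//   onmessage = (event) => handleJob(event.data, postMessage);
// and the page would start it with new Worker("primes.js") (file name hypothetical).
```

Because the computation runs in the worker, the page’s own scripts stay free to respond to the user while the result is being prepared.<br />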


Syntax and semantics. HTML 5<br />

makes some changes to the syntax<br />

and the semantics of the language’s<br />

elements and attributes.<br />

For example, as Figure 1 shows,<br />

HTML can be written in two syntaxes:<br />

HTML and XML.<br />

Using XML will enable more complex<br />

webpages that will run faster on<br />

Web browsers.<br />

XML requires a stricter, more accurate<br />

grammar than HTML and thus<br />

necessitates less work by the local<br />

computer to run quickly and correctly.<br />

However, XML pages require more<br />

work by the developer to achieve the<br />

higher accuracy level.<br />

HTML+RDFa. The W3C recently<br />

began dividing HTML 5 into subsections<br />

for easier development.<br />

For example, HTML+RDFa<br />