Outline Proposal - Oxford Brookes University

Outline Proposal - Oxford Brookes University

Outline Proposal - Oxford Brookes University

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

Request 69009 Page 4 of 11<br />

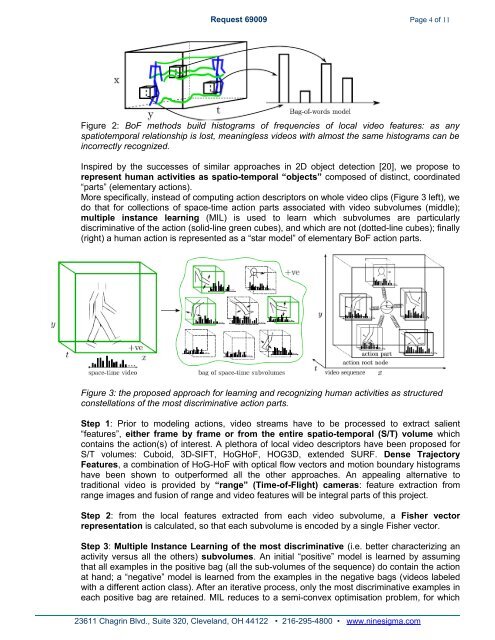

Figure 2: BoF methods build histograms of frequencies of local video features: as any<br />

spatiotemporal relationship is lost, meaningless videos with almost the same histograms can be<br />

incorrectly recognized.<br />

Inspired by the successes of similar approaches in 2D object detection [20], we propose to<br />

represent human activities as spatio-temporal “objects” composed of distinct, coordinated<br />

“parts” (elementary actions).<br />

More specifically, instead of computing action descriptors on whole video clips (Figure 3 left), we<br />

do that for collections of space-time action parts associated with video subvolumes (middle);<br />

multiple instance learning (MIL) is used to learn which subvolumes are particularly<br />

discriminative of the action (solid-line green cubes), and which are not (dotted-line cubes); finally<br />

(right) a human action is represented as a “star model” of elementary BoF action parts.<br />

Figure 3: the proposed approach for learning and recognizing human activities as structured<br />

constellations of the most discriminative action parts.<br />

Step 1: Prior to modeling actions, video streams have to be processed to extract salient<br />

“features”, either frame by frame or from the entire spatio-temporal (S/T) volume which<br />

contains the action(s) of interest. A plethora of local video descriptors have been proposed for<br />

S/T volumes: Cuboid, 3D-SIFT, HoGHoF, HOG3D, extended SURF. Dense Trajectory<br />

Features, a combination of HoG-HoF with optical flow vectors and motion boundary histograms<br />

have been shown to outperformed all the other approaches. An appealing alternative to<br />

traditional video is provided by “range” (Time-of-Flight) cameras: feature extraction from<br />

range images and fusion of range and video features will be integral parts of this project.<br />

Step 2: from the local features extracted from each video subvolume, a Fisher vector<br />

representation is calculated, so that each subvolume is encoded by a single Fisher vector.<br />

Step 3: Multiple Instance Learning of the most discriminative (i.e. better characterizing an<br />

activity versus all the others) subvolumes. An initial “positive” model is learned by assuming<br />

that all examples in the positive bag (all the sub-volumes of the sequence) do contain the action<br />

at hand; a “negative” model is learned from the examples in the negative bags (videos labeled<br />

with a different action class). After an iterative process, only the most discriminative examples in<br />

each positive bag are retained. MIL reduces to a semi-convex optimisation problem, for which<br />

23611 Chagrin Blvd., Suite 320, Cleveland, OH 44122 • 216-295-4800 • www.ninesigma.com