Learning hybrid neuro-fuzzy classifier models from data: to combine ...


46 B. Gabrys / Fuzzy Sets and Systems 147 (2004) 39–56

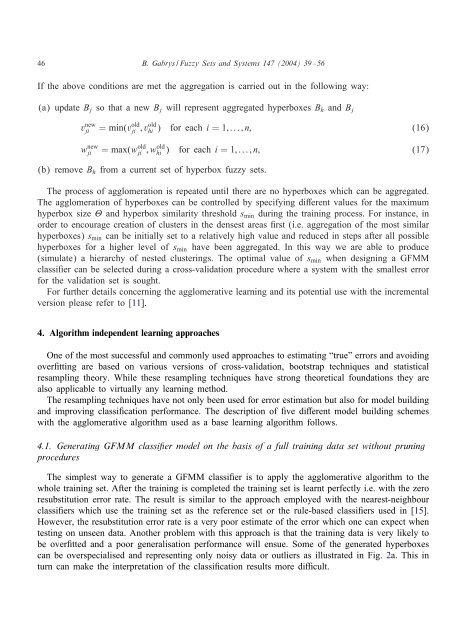

If the above conditions are met, the aggregation is carried out in the following way:

(a) update $B_j$ so that the new $B_j$ represents the aggregated hyperboxes $B_h$ and $B_j$:

$$v_{ji}^{\mathrm{new}} = \min\left(v_{ji}^{\mathrm{old}},\, v_{hi}^{\mathrm{old}}\right) \quad \text{for each } i = 1, \ldots, n, \tag{16}$$

$$w_{ji}^{\mathrm{new}} = \max\left(w_{ji}^{\mathrm{old}},\, w_{hi}^{\mathrm{old}}\right) \quad \text{for each } i = 1, \ldots, n; \tag{17}$$

(b) remove $B_h$ from the current set of hyperbox fuzzy sets.

The process of agglomeration is repeated until there are no hyperboxes which can be aggregated.
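The update in Eqs. (16) and (17) and the repeat-until-no-merge loop can be sketched as follows. This is a minimal illustration, not the paper's implementation: the aggregation conditions are not reproduced in this excerpt, so they are passed in as a hypothetical predicate `can_aggregate`, and the order in which candidate pairs are examined (first admissible pair found) is a simplifying assumption.

```python
from typing import Callable, List, Tuple

# A hyperbox is stored as (v, w): its min point and its max point.
Hyperbox = Tuple[List[float], List[float]]


def merge(b_j: Hyperbox, b_h: Hyperbox) -> Hyperbox:
    """Eqs. (16)-(17): per-dimension min of the min points, max of the max points."""
    (v_j, w_j), (v_h, w_h) = b_j, b_h
    v_new = [min(a, b) for a, b in zip(v_j, v_h)]
    w_new = [max(a, b) for a, b in zip(w_j, w_h)]
    return v_new, w_new


def agglomerate(
    boxes: List[Hyperbox],
    can_aggregate: Callable[[Hyperbox, Hyperbox], bool],  # hypothetical stand-in
) -> List[Hyperbox]:
    """Merge admissible pairs until no pair can be aggregated any more."""
    boxes = list(boxes)  # work on a copy
    merged = True
    while merged:
        merged = False
        for j in range(len(boxes)):
            for h in range(len(boxes)):
                if j != h and can_aggregate(boxes[j], boxes[h]):
                    boxes[j] = merge(boxes[j], boxes[h])  # step (a)
                    del boxes[h]                          # step (b)
                    merged = True
                    break
            if merged:
                break  # restart the scan on the updated set
    return boxes
```

A predicate that, for example, rejects any merge whose resulting hyperbox would exceed a maximum side length can be supplied as `can_aggregate`; the loop then terminates because every merge removes one hyperbox from the set.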

The agglomeration of hyperboxes can be controlled by specifying different values for the maximum hyperbox size and the hyperbox similarity threshold $s_{\min}$ during the training process. For instance, in order to encourage the creation of clusters in the densest areas first (i.e. aggregation of the most similar hyperboxes), $s_{\min}$ can initially be set to a relatively high value and reduced in steps after all possible hyperboxes for a higher level of $s_{\min}$ have been aggregated. In this way we are able to produce (simulate) a hierarchy of nested clusterings. The optimal value of $s_{\min}$ when designing a GFMM classifier can be selected during a cross-validation procedure where a system with the smallest error for the validation set is sought.
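The stepwise reduction of the similarity threshold and the validation-based choice of its value can be sketched as below. Both callables are hypothetical placeholders that do not appear in the source: `aggregate_at` stands for one full agglomeration pass at a given threshold, and `validation_error` for whatever error measure is computed on the validation set.

```python
from typing import Callable, Iterable, List, Tuple, TypeVar

Model = TypeVar("Model")


def nested_clusterings(
    model: Model,
    s_levels: Iterable[float],
    aggregate_at: Callable[[Model, float], Model],  # hypothetical: one pass at s_min
) -> List[Tuple[float, Model]]:
    """Run aggregation at successively lower thresholds, keeping each level."""
    hierarchy = []
    for s_min in sorted(s_levels, reverse=True):  # highest similarity first
        model = aggregate_at(model, s_min)        # continue from the previous level
        hierarchy.append((s_min, model))
    return hierarchy


def select_s_min(
    hierarchy: List[Tuple[float, Model]],
    validation_error: Callable[[Model], float],  # hypothetical error measure
) -> Tuple[float, Model]:
    """Pick the level whose model has the smallest validation error."""
    return min(hierarchy, key=lambda level: validation_error(level[1]))
```

Because each level starts from the result of the previous one, the clusterings are nested by construction, provided `aggregate_at` only ever merges existing clusters.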

For further details concerning agglomerative learning and its potential use with the incremental version, please refer to [11].

4. Algorithm independent learning approaches<br />

Some of the most successful and commonly used approaches to estimating “true” errors and avoiding overfitting are based on various versions of cross-validation, bootstrap techniques and statistical resampling theory. These resampling techniques have strong theoretical foundations and are also applicable to virtually any learning method.
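As an illustration of why such resampling schemes apply to virtually any learning method, the following sketch estimates the error rate by k-fold cross-validation while treating the learner as a black box. The interface is an assumption made for this example: `fit` is a hypothetical callable that takes training inputs and labels and returns a prediction function.

```python
import random
from typing import Any, Callable, Sequence


def k_fold_error(
    xs: Sequence[Any],
    ys: Sequence[Any],
    fit: Callable[[list, list], Callable[[Any], Any]],  # hypothetical learner interface
    k: int = 5,
    seed: int = 0,
) -> float:
    """Fraction of samples misclassified when each is predicted by a model
    trained on the folds that do not contain it."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)       # fixed seed for repeatability
    folds = [idx[i::k] for i in range(k)]  # k disjoint folds covering all samples
    errors = 0
    for fold in folds:
        held_out = set(fold)
        train = [i for i in idx if i not in held_out]
        predict = fit([xs[i] for i in train], [ys[i] for i in train])
        errors += sum(predict(xs[i]) != ys[i] for i in fold)
    return errors / len(xs)
```

Nothing in the function depends on how `fit` builds its model, which is the sense in which the estimate is algorithm independent.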

The resampling techniques have been used not only for error estimation but also for model building and improving classification performance. A description of five different model building schemes, with the agglomerative algorithm used as the base learning algorithm, follows.

4.1. Generating a GFMM classifier model on the basis of a full training data set without pruning procedures

The simplest way to generate a GFMM classifier is to apply the agglomerative algorithm to the whole training set. After the training is completed, the training set is learnt perfectly, i.e. with a zero resubstitution error rate. The result is similar to the approach employed with nearest-neighbour classifiers, which use the training set as the reference set, or the rule-based classifiers used in [15]. However, the resubstitution error rate is a very poor estimate of the error which one can expect when testing on unseen data. Another problem with this approach is that the training data is very likely to be overfitted, and poor generalisation performance will ensue. Some of the generated hyperboxes can be overspecialised, representing only noisy data or outliers, as illustrated in Fig. 2a. This in turn can make the interpretation of the classification results more difficult.
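The gap between resubstitution error and error on unseen data can be illustrated with the nearest-neighbour analogy drawn above. The sketch uses a 1-nearest-neighbour classifier as a stand-in rather than a GFMM classifier, and the one-dimensional data are invented for the example: one training point (at 0.25) carries a noisy label inside the region of the other class.

```python
from typing import Callable, List


def nearest_neighbour(train_x: List[float], train_y: List[int]) -> Callable[[float], int]:
    """1-NN classifier that uses the training set itself as the reference set."""
    def predict(x: float) -> int:
        i = min(range(len(train_x)), key=lambda k: abs(train_x[k] - x))
        return train_y[i]
    return predict


def error_rate(predict: Callable[[float], int], xs: List[float], ys: List[int]) -> float:
    """Fraction of samples whose predicted label differs from the true label."""
    return sum(predict(x) != y for x, y in zip(xs, ys)) / len(xs)


# Toy data (illustrative only): the point at 0.25 is a mislabelled outlier.
train_x = [0.10, 0.20, 0.25, 0.30, 0.70, 0.80, 0.90]
train_y = [0, 0, 1, 0, 1, 1, 1]
test_x = [0.12, 0.24, 0.26, 0.72, 0.88]
test_y = [0, 0, 0, 1, 1]

model = nearest_neighbour(train_x, train_y)
resubstitution_error = error_rate(model, train_x, train_y)
test_error = error_rate(model, test_x, test_y)
```

Every training point is its own nearest neighbour, so the resubstitution error is zero even though the noisy point has been memorised; the two test points closest to it are then misclassified, which is the kind of overspecialisation described above.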