MiniTasking: Improving Cache Performance for Multiple ... - CiteSeerX

MiniTasking: Improving Cache Performance for Multiple ... - CiteSeerX

MiniTasking: Improving Cache Performance for Multiple ... - CiteSeerX

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

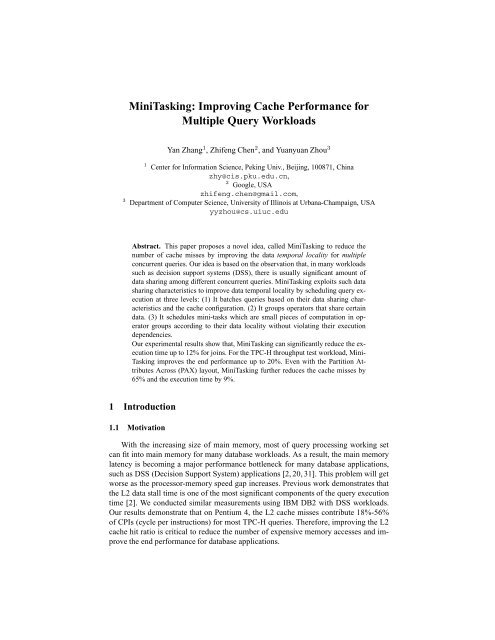

MiniTasking: Improving Cache Performance for Multiple Query Workloads<br />

Yan Zhang<sup>1</sup>, Zhifeng Chen<sup>2</sup>, and Yuanyuan Zhou<sup>3</sup><br />

<sup>1</sup> Center for Information Science, Peking Univ., Beijing, 100871, China, zhy@cis.pku.edu.cn<br />

<sup>2</sup> Google, USA, zhifeng.chen@gmail.com<br />

<sup>3</sup> Department of Computer Science, University of Illinois at Urbana-Champaign, USA, yyzhou@cs.uiuc.edu<br />

Abstract. This paper proposes a novel idea, called MiniTasking, to reduce the number of cache misses by improving the data temporal locality for multiple concurrent queries. Our idea is based on the observation that, in many workloads such as decision support systems (DSS), there is usually a significant amount of data sharing among different concurrent queries. MiniTasking exploits such data sharing characteristics to improve data temporal locality by scheduling query execution at three levels: (1) It batches queries based on their data sharing characteristics and the cache configuration. (2) It groups operators that share certain data. (3) It schedules mini-tasks, which are small pieces of computation in operator groups, according to their data locality without violating their execution dependencies.<br />

Our experimental results show that MiniTasking can reduce the execution time by up to 12% for joins. For the TPC-H throughput test workload, MiniTasking improves the end performance by up to 20%. Even with the Partition Attributes Across (PAX) layout, MiniTasking further reduces the cache misses by 65% and the execution time by 9%.<br />

1 Introduction<br />

1.1 Motivation<br />

With the increasing size of main memory, most of the query processing working set can fit into main memory for many database workloads. As a result, main memory latency is becoming a major performance bottleneck for many database applications, such as DSS (Decision Support System) applications [2, 20, 31]. This problem will get worse as the processor-memory speed gap increases. Previous work demonstrates that the L2 data stall time is one of the most significant components of the query execution time [2]. We conducted similar measurements using IBM DB2 with DSS workloads. Our results demonstrate that, on a Pentium 4, L2 cache misses contribute 18%-56% of the CPI (cycles per instruction) for most TPC-H queries. Therefore, improving the L2 cache hit ratio is critical to reducing the number of expensive memory accesses and improving the end performance of database applications.

Fig. 1. CPI breakdown of some TPC-H queries on Shore using PAX.<br />

An effective method for improving the L2 data cache hit ratio is to increase data locality, which includes spatial locality and temporal locality. Many previous studies have proposed clever ideas to improve the data spatial locality of a single query by using cache-conscious data layouts. Examples include PAX (Partition Attributes Across) by Ailamaki et al. [1], data morphing by Hankins and Patel [14] and wider B+-tree nodes by Chen et al. [8]. These layout schemes place data that are likely to be accessed together consecutively so that servicing one cache miss can “prefetch” other data into the cache to avoid subsequent cache misses.<br />

While the above techniques are very effective in reducing the number of cache misses, memory latency remains a significant contributor to the query execution time, even though its contribution is not as high as before. For example, as shown in Figure 1, with the PAX layout, the L1 and L2 cache misses still contribute around 20% of the CPI for TPC-H queries. Therefore, it is still necessary to seek other complementary techniques to further reduce the number of cache misses.<br />

Improving temporal locality is a potential complementary technique that reduces the cache miss ratio by improving data temporal reuse. This approach has been widely studied for scientific applications. Most previous work in this category maximizes data temporal locality by reordering computation, e.g., compiler-directed tiling or loop transformations [32, 18, 11, 3] and fine-grained thread scheduling [23, 34]. While these techniques are very useful for regular, array-based applications, it is difficult to apply them to database applications, which usually have complex pointer-based data structures and whose structure information is known only at run-time, after the database schema is loaded into main memory. So far, few studies have been conducted to improve temporal cache reuse for database applications.<br />

1.2 Our Contributions<br />

In this paper, we propose a technique called MiniTasking to improve data temporal locality for concurrent query execution. Our idea is based on the observation that, in a large scale decision support system, it is very common for multiple users with complex queries to hit the same data set concurrently [16], even though these queries may not be identical. MiniTasking exploits such data sharing characteristics to improve temporal locality by scheduling query execution at three levels: (1) It batches queries based on their data sharing characteristics and the cache configuration. (2) It groups operators that share certain data. (3) It schedules mini-tasks, which are small fractions of operator groups, according to their data locality without violating their execution dependencies.<br />

MiniTasking is complementary to previously proposed solutions such as PAX [1] and data morphing [14], because MiniTasking improves temporal locality while cache-conscious layouts improve spatial locality. MiniTasking is also complementary to multiple query optimization (MQO) techniques that produce a global query plan for multiple queries [13, 28, 27].<br />

We implemented MiniTasking in the Shore storage manager [6]. Our experimental results with various DSS workloads using the TPC-H benchmark suite show that MiniTasking improves the end performance by up to 20% on a real compound workload running TPC-H throughput testing streams. Even with the Partition Attributes Across (PAX) layout, MiniTasking reduces the L2 cache misses by 65% and the execution time of concurrent queries by 9%.<br />

The remainder of this paper is organized as follows. Section 2 presents the related work. Section 3 introduces data temporal locality. Section 4 describes MiniTasking in detail. Section 5 presents the experimental evaluation. Finally, Section 6 concludes.<br />

2 Related Work<br />

Multiple Query Optimization endeavors to reduce the execution time of multiple queries by reducing duplicated computation and reusing computation results. Previous work proposes to extract common sub-expressions from the plans of multiple queries and reuse their intermediate results in all queries [10, 13, 27, 28]. Early work shows that multiple query optimization is an NP-hard problem and proposes heuristics for query ordering and for the detection and selection of common sub-expressions [13, 27]. Roy et al. propose to materialize certain common sub-expressions into transient tables so that later queries can reuse the results [26]. Instead of materializing the results of common sub-expressions, Dalvi et al. focus on pipelining the intermediate tuples simultaneously to several queries so as to avoid the prohibitive cost of materializing and reading the intermediate results [10]. Harizopoulos et al. propose an operator-centric engine, QPipe, to support on-demand simultaneous pipelining [15]. O’Gorman et al. propose to reduce disk I/O by scheduling queries with the same table scans at the same time, and thereby achieve significant speedups [22]. However, reusing intermediate results requires exactly the same common sub-expressions. For example, a small change in the selection predicate of one query renders previous results unusable.<br />

Improving Data Locality is another important technique to improve the performance of multiple queries, especially when memory latency becomes a new bottleneck for DSS workloads on modern processors. Ailamaki et al. show that the primary memory-related bottleneck is mainly contributed by L1 instruction and L2 data cache misses [2]. Many recent studies have focused on improving data spatial locality to reduce cache misses in database systems [1, 9, 19, 33, 25]. Cache-conscious algorithms change the data access pattern of table scans [4] and index scans [33] so that consecutive data accesses hit in the same cache lines. Shatdal et al. demonstrate that several basic database operator algorithms can be redesigned to make better use of the cache [29]. Cache-conscious index structures pack more keys into one cache line to reduce cache misses during lookup in an index tree [9, 19, 25]. Cache-conscious data storage models partition tables vertically so that one cache line can store the same fields from several records [1, 24]. Although these techniques effectively reduce cache misses within a single query, data fetched into processor caches are not reused across multiple queries.<br />

Much previous work studies improving data temporal locality for general programs [7, 5, 12]. For example, based on a temporal relationship graph between objects generated via profiling, Calder et al. present a compiler-directed approach for cache-conscious data placement [5]. Carr and Tseng propose a model that computes temporal reuse of cache lines to find desirable loop organizations for better data locality [7, 21]. Although these methods are effective in increasing cache reuse, it is difficult to apply them directly to DSS workloads because it is hard to profile ad hoc DSS queries.<br />

3 Feasibility Analysis: Improving Temporal Locality<br />

Processor caches are used in modern architectures to reduce the average latency of memory accesses. Every memory load or store instruction is first checked against the processor cache (L1 and L2). If the data is in the cache, which is called a cache hit, the access is satisfied by the cache directly. Otherwise, it is a cache miss. Upon a cache miss, the accessed data is fetched into the cache from main memory. Because accessing main memory is 10–30 times slower than accessing the processor cache, high cache hit ratios are critical to performance, since they avoid the large penalty of accessing main memory.<br />

There are two kinds of locality: spatial locality and temporal locality. Our work focuses on improving temporal locality via locality-based scheduling. Temporal locality is the tendency of individual locations, once referenced, to be referenced again in the near future. Good temporal locality allows data in processor caches to be reused multiple times before being replaced (called temporal reuse), and thereby improves cache effectiveness.<br />

In most real-world workloads, database servers serve multiple queries concurrently. Usually, there is a significant amount of data sharing among many of these concurrent queries. For example, Query 1 (Q1) and Query 6 (Q6) from the TPC-H benchmark [30] share the same table, Lineitem, the largest one in the TPC-H database.<br />

However, because of the locality-oblivious multi-query scheduling that is commonly used in modern database servers, such data sharing is not fully exploited to improve the level of temporal reuse in processor caches and reduce the number of processor cache misses. As a result, before a piece of data can be reused by another query, it has already been replaced and must be fetched again from main memory when that query needs it.<br />

Let us look at an example using Q1 and Q6 from the TPC-H benchmark. Suppose Lineitem has 1M tuples, with each tuple occupying one cache line of 64 bytes (for simplicity of description), and the L2 cache holds only 64K cache lines (a total of 4 MBytes). Suppose that the scheduler decides to execute Q1 first, concurrently with some other queries that do not share any data with Q1 and Q6. After Q1 accesses the 128K-th tuple, Q6 is scheduled to start from the 1st tuple. Since the L2 cache can hold only 64K tuples, the first tuple of Lineitem has already been evicted from L2. Therefore, the database needs to fetch this tuple again from main memory to execute Q6.<br />

Fig. 2. Comparison between locality-oblivious and locality-aware multi-query scheduling.<br />

In contrast, if we use locality-aware multi-query scheduling and execution, we can schedule Q1 and Q6 together in an interleaved fashion so that, after a query fetches a tuple from main memory into L2, this tuple can be accessed by both queries before being replaced from L2.<br />
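The effect described in this example can be reproduced with a toy model. The sketch below is only an illustration under simplifying assumptions that are not taken from the paper: a fully associative LRU cache, one tuple per cache line, scaled-down sizes (1,024 tuples, a 64-line cache), and a second query that starts only after the first one has finished its scan. The function names and the block size are hypothetical.

```python
from collections import OrderedDict

def count_misses(accesses, cache_lines):
    """Count misses for a sequence of tuple ids in a fully associative LRU cache."""
    cache = OrderedDict()
    misses = 0
    for tuple_id in accesses:
        if tuple_id in cache:
            cache.move_to_end(tuple_id)      # hit: mark as most recently used
        else:
            misses += 1                      # miss: fetch from main memory
            cache[tuple_id] = True
            if len(cache) > cache_lines:
                cache.popitem(last=False)    # evict the least recently used line
    return misses

def oblivious(num_tuples):
    """The first query scans the whole table, then the second scans it again."""
    return list(range(num_tuples)) + list(range(num_tuples))

def interleaved(num_tuples, block):
    """Both queries process each block of tuples before moving to the next block."""
    order = []
    for start in range(0, num_tuples, block):
        chunk = list(range(start, min(start + block, num_tuples)))
        order += chunk + chunk               # first query, then second query
    return order

# Scaled-down, assumed sizes: the table is 16 times larger than the cache.
NUM_TUPLES, CACHE_LINES, BLOCK = 1024, 64, 32
misses_oblivious = count_misses(oblivious(NUM_TUPLES), CACHE_LINES)
misses_aware = count_misses(interleaved(NUM_TUPLES, BLOCK), CACHE_LINES)
print(misses_oblivious, misses_aware)
```

In this idealized model the interleaved order halves the number of misses, because the second query always finds its block in the cache. Real hardware has limited associativity and other competing accesses, so measured savings will differ.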

Figure 2 shows that, for multiple queries of different types (Q1+Q6), locality-aware scheduling reduces the number of cache misses by 41.7% and the execution time by 9.7%. For multiple queries of the same type but with different arguments (Q6+Q6’), locality-aware scheduling reduces the number of cache misses by 42.4% and the execution time by 9.9%. These results indicate that locality awareness in multi-query scheduling is very helpful in reducing the number of cache misses and improving database performance, which is the major focus of our work.<br />

4 MiniTasking<br />

4.1 Overview<br />

To exploit data sharing among concurrent queries for improving temporal locality, MiniTasking schedules and executes concurrent queries based on data sharing characteristics at three levels: query level batching, operator level grouping and mini-task level scheduling. While each level is different, all levels share the same goal: improving temporal data locality. Therefore, at each level, all decisions are made based on data sharing characteristics, with consideration of other factors that are specific to that level.<br />

At the query level, because of the limited processor cache capacity, it is not beneficial to execute all concurrent queries together (concurrent queries are those that have already arrived at the database management server and are waiting to be processed). Therefore, MiniTasking carefully selects a batch of queries based on their data sharing characteristics and the processor cache configuration to maximize the level of temporal locality in the processor cache. Queries in the same batch are then processed together in the next two levels.<br />

At the second level, MiniTasking produces a locality-aware query plan tree for each batch of queries. MiniTasking does this by starting from the query plan trees produced by the optimizer and grouping together those operators that share a significant amount of data. Operators that do not share data with others remain untouched.<br />

At the third level, MiniTasking further breaks each operator into mini-tasks, with each mini-task operating on a fine-grained data block. Then all mini-tasks from the same query plan tree are executed one after another, in an order that maximizes temporal data reuse in the processor cache.<br />

Algorithm Greedy-Selecting:<br />
;; Given n queries Q<sub>1</sub>, ..., Q<sub>n</sub>, return a batch of<br />
;; queries that will be processed as a whole.<br />
S = {Q<sub>a</sub>, Q<sub>b</sub> | max<sub>i,j</sub> AmountDataSharing(Q<sub>i</sub>, Q<sub>j</sub>)}<br />
while |S| < MaxBatchSize<br />
do<br />
&nbsp;&nbsp;Find Q ∉ S s.t. ∃Q′ ∈ S<br />
&nbsp;&nbsp;&nbsp;&nbsp;AmountDataSharing(Q, Q′) is maximized<br />
&nbsp;&nbsp;if AmountDataSharing(Q, Q′) ≠ 0<br />
&nbsp;&nbsp;&nbsp;&nbsp;S = S ∪ {Q}<br />
&nbsp;&nbsp;else<br />
&nbsp;&nbsp;&nbsp;&nbsp;exit the loop ;; No more queries sharing with S<br />
return S<br />

Fig. 3. Greedy batch selecting algorithm.<br />

4.2 Query Level Batching<br />

The first criterion for query batching should be data sharing. If two queries access totally different data, there is no chance of reusing each other’s data from the processor cache. This can happen even when two queries access the same table but access different fields that do not share the same cache line. In this case, we say that these two queries do not have overlapping working sets, where a working set is defined as the set of data (cache lines) accessed by a query.<br />

Therefore, to batch queries based on data sharing characteristics, MiniTasking needs to estimate the amount of sharing between any two concurrent queries. A metric called AmountDataSharing is introduced to measure the estimated amount of data sharing, i.e., the amount of overlap in working sets, between two given concurrent queries. Since only coarse-grained data access characteristics are known at the query level, we estimate a query’s working set based on the tables and the fields accessed by this query.<br />
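One way such an estimate could be computed is sketched below. The paper does not give a formula, so everything here is an assumption for illustration: the function signature, the per-field byte widths, the field lists of the two example queries, and the simplification that only fields accessed by both queries count as shared (which ignores different fields that happen to fall on the same cache line).

```python
def amount_data_sharing(q_a, q_b, table_stats):
    """Estimate the bytes shared by two queries from the fields both access.

    q_a, q_b: dict mapping table name -> set of field names the query reads.
    table_stats: dict mapping table name -> (tuple_count, {field: width_in_bytes}).
    """
    shared_bytes = 0
    for table in q_a.keys() & q_b.keys():          # tables read by both queries
        tuple_count, widths = table_stats[table]
        for field in q_a[table] & q_b[table]:      # fields read by both queries
            shared_bytes += tuple_count * widths[field]
    return shared_bytes

# Hypothetical statistics and field lists, for illustration only.
stats = {"lineitem": (600572, {"l_quantity": 8, "l_discount": 8, "l_shipdate": 4})}
query_a = {"lineitem": {"l_quantity", "l_discount", "l_shipdate"}}
query_b = {"lineitem": {"l_quantity", "l_discount"}}
query_c = {"part": {"p_size"}}

print(amount_data_sharing(query_a, query_b, stats))
print(amount_data_sharing(query_a, query_c, stats))
```

Queries that touch no common table score zero, which matches the case in which batching them together brings no cache reuse.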

MiniTasking schedules queries in batches and processes these batches one by one. Given a large number of concurrent queries that share data with each other, it seems intuitively beneficial to execute as many queries concurrently as possible so that the amount of data reuse is maximized.<br />

In reality, however, because of the limited L2 cache capacity, scheduling too many concurrent queries can result in even poorer temporal locality, because data from different queries can replace each other in the cache before being reused. Therefore, we should carefully decide how many and which concurrent queries to batch together. To address this problem, we use a threshold parameter, MaxBatchSize, to limit the number of concurrent queries in a batch.<br />

Based on the above analysis, we use a heuristic greedy algorithm to select batches of queries, as shown in Figure 3. It works similarly to a clustering algorithm: it divides all concurrent queries into clusters of at most MaxBatchSize queries so as to maximize the total amount of data sharing.<br />
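The greedy selection of Figure 3 can be sketched in a few lines. This is an illustrative reading of the pseudocode, not the authors' implementation: the function and parameter names are hypothetical, `sharing` is assumed to be a symmetric estimate such as AmountDataSharing, `max_batch_size` is assumed to be at least 2, and the guards for short inputs and exhausted candidates are additions that the figure does not spell out.

```python
from itertools import combinations

def greedy_select(queries, sharing, max_batch_size):
    """Pick one batch of queries following the greedy scheme of Figure 3.

    queries: list of hashable query ids.
    sharing: function (q1, q2) -> estimated amount of shared data.
    """
    if len(queries) < 2:
        return list(queries)
    # Seed the batch with the pair of queries that shares the most data.
    seed = max(combinations(queries, 2), key=lambda pair: sharing(*pair))
    batch = list(seed)
    while len(batch) < max_batch_size:
        candidates = [q for q in queries if q not in batch]
        if not candidates:
            break
        # Score each outside query by its best sharing with any batch member.
        best = max(candidates, key=lambda q: max(sharing(q, m) for m in batch))
        if max(sharing(best, m) for m in batch) == 0:
            break                      # no remaining query shares data with the batch
        batch.append(best)
    return batch

# Hypothetical pairwise sharing estimates; query D shares nothing with the others.
SHARING = {
    frozenset({"A", "B"}): 90,
    frozenset({"A", "C"}): 40,
    frozenset({"B", "C"}): 30,
}

def sharing(x, y):
    return SHARING.get(frozenset({x, y}), 0)

batch = greedy_select(["A", "B", "C", "D"], sharing, max_batch_size=4)
print(batch)
```

To process all waiting queries, the selection would be repeated on the queries that remain after each batch is removed.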

Fig. 4. An example of the operator grouping process, showing the original plans and the enhanced plans over the table scans of T1, T2 and T3. The output of Op′<sub>3</sub> to Op′<sub>4</sub> and the output of Op<sub>4</sub> to Op<sub>5</sub> are materialized.<br />

4.3 Operator Level Grouping<br />

Since queries consist of operators, MiniTasking goes one step further and groups together operators from the same batch of queries according to their data sharing characteristics. MiniTasking scans every physical operator tree produced by the query optimizer for each query in a batch and groups operators that share certain data. The evaluation process is similar to the one used at the query level. If the results of an operator are pipelined to other operators, MiniTasking also puts these related operators into the same group. Each group of operators is then passed to the mini-task level. Operators that do not share data with others are all put into the last group and are executed last using the original scheduling algorithm.<br />

MiniTasking supports operator dependencies by maintaining a pool of ready operators. An operator is ready and joins the ready pool when it does not depend on any unexecuted operator. MiniTasking selects a group of operators from the ready pool using an algorithm similar to the one used in query batching (Figure 3). After this group of operators finishes execution via mini-tasking (described in the next subsection), operators that depend on the ones just executed are “released” and join the ready pool if they have no other dependencies. MiniTasking then selects the next group of operators, and so on until all operators are executed.<br />
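The ready-pool mechanism can be illustrated with a small sketch. It is a simplification under stated assumptions rather than the paper's algorithm: operators are grouped when they read the same labeled data (a stand-in for the Figure 3 style selection), pipelining between operators is ignored, and the names `schedule_groups`, `deps` and `data_of` are hypothetical.

```python
def schedule_groups(deps, data_of, max_group_size):
    """Return operator groups in an execution order that respects dependencies.

    deps: dict operator -> set of operators it depends on.
    data_of: dict operator -> label of the data it reads (assumed sharing key).
    """
    done = set()
    remaining = set(deps)
    order = []
    while remaining:
        # Ready pool: operators whose dependencies have all been executed.
        ready = sorted(op for op in remaining if deps[op] <= done)
        if not ready:
            raise ValueError("cyclic dependencies")
        # Group the ready operators that read the same data as the first one.
        key = data_of[ready[0]]
        group = [op for op in ready if data_of[op] == key][:max_group_size]
        order.append(group)
        done.update(group)             # finishing the group may release dependents
        remaining.difference_update(group)
    return order

# Hypothetical plan: two scans of T1, one scan of T2, and a join over two of them.
deps = {
    "scan_a1": set(),
    "scan_a2": set(),
    "scan_b": set(),
    "join": {"scan_a1", "scan_b"},
}
data_of = {"scan_a1": "T1", "scan_a2": "T1", "scan_b": "T2", "join": "tmp"}

groups = schedule_groups(deps, data_of, max_group_size=4)
print(groups)
```

The join is released only after both of its inputs have run, while the two scans of T1 are executed in the same group so that they can share cached data.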

Figure 4 uses an example to demonstrate how MiniTasking works at the operator level. Suppose there are two queries, namely Q and Q’. Op<sub>1</sub> to Op<sub>5</sub> are operators of query Q, and Op′<sub>1</sub> to Op′<sub>4</sub> are operators of query Q’. As both Op<sub>1</sub> and Op′<sub>1</sub> access table T<sub>1</sub>, they are grouped together. Suppose Op′<sub>3</sub> and Op<sub>4</sub> are implemented using pipelining. MiniTasking also puts them into the same group as Op<sub>1</sub>, Op′<sub>1</sub>, Op<sub>2</sub> and Op′<sub>2</sub>. This group does not contain Op′<sub>4</sub> or Op<sub>5</sub>, because the results of Op′<sub>3</sub> and Op<sub>4</sub> are materialized.<br />

4.4 Mini-task Level Scheduling<br />

At the mini-task level, the challenge is how MiniTasking breaks various query operators into mini-tasks and benefits from rescheduling them. We show our method by illustrating a data-centric approach applied to a table scan. The idea can be extended to handle other query operators.<br />

Fig. 5. MiniTasking breaks the operators into mini-tasks and schedules them.<br />

Fig. 6. The layouts for the two join relations and the join query.<br />

The goal of MiniTasking is to have the data loaded into the cache reused by queries as much as possible before it is evicted. Therefore, MiniTasking carefully chooses an appropriate value for the working set size, which is the total size of all the data blocks that can reside in the cache. This parameter has a big impact on query performance. If it is too large, some data may be evicted from the cache before being reused. However, decreasing it results in more mini-tasks and thereby heavier switching overhead.<br />

Generally, this parameter is related to the target architecture (especially the L2 cache size, the L2 cache line size and the associativity), the data layouts and the queries. According to our experiments, it is not very sensitive to the type of queries. Once the target architecture and the data layouts are specified, it is feasible to run some calibration experiments in advance to determine the best value for this parameter.<br />

Therefore, for a table scan, MiniTasking divides the table into n fine-grained data blocks, with each block sized to fit the working set. Correspondingly, MiniTasking breaks the execution of each scan operator into n mini-tasks, according to the data blocks they use. Thereafter, when a data block is loaded by the first mini-task, MiniTasking schedules the other mini-tasks that share this data block to execute one by one. When no more mini-tasks use this data block, it is replaced by the next data block. Thus the data residing in the cache can be maximally reused before being evicted.<br />

The following example illustrates this data-centric scheduling method. Suppose there are three table scan operators Op<sub>1</sub>, Op<sub>2</sub>, Op<sub>3</sub> and they share the table T, as shown in Figure 5. Table T is divided into three data blocks. According to the data blocks they access, the three operators are broken into (Op<sub>1,1</sub>, Op<sub>1,2</sub>, Op<sub>1,3</sub>), (Op<sub>2,1</sub>, Op<sub>2,2</sub>, Op<sub>2,3</sub>), and (Op<sub>3,1</sub>, Op<sub>3,2</sub>, Op<sub>3,3</sub>), respectively. MiniTasking schedules them in the following order: Op<sub>1,1</sub>, Op<sub>2,1</sub>, Op<sub>3,1</sub>, Op<sub>1,2</sub>, Op<sub>2,2</sub>, Op<sub>3,2</sub>, Op<sub>1,3</sub>, Op<sub>2,3</sub>, and Op<sub>3,3</sub>. In this way, the data block (DT<sub>j</sub>) loaded into the cache by Op<sub>1,j</sub> (j = 1, 2, 3) can be reused by the subsequent mini-tasks Op<sub>2,j</sub> and Op<sub>3,j</sub>.<br />
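The ordering in this example can be generated mechanically. The sketch below assumes that the table is split into equal-sized blocks of `block_tuples` tuples; that parameter, the function name and the tuple counts are hypothetical and only stand in for the calibrated working set size discussed above.

```python
def minitask_schedule(operators, num_tuples, block_tuples):
    """Return (operator, block_number, tuple_range) triples in data-centric order."""
    num_blocks = -(-num_tuples // block_tuples)      # ceiling division
    schedule = []
    for j in range(num_blocks):
        lo = j * block_tuples
        hi = min(lo + block_tuples, num_tuples)
        for op in operators:                         # every operator reuses block j
            schedule.append((op, j + 1, range(lo, hi)))
    return schedule

# Three scan operators over a table split into three blocks, as in Figure 5.
schedule = minitask_schedule(["Op1", "Op2", "Op3"], num_tuples=9, block_tuples=3)
order = [(op, block) for op, block, _ in schedule]
print(order)
```

Iterating over blocks in the outer loop and operators in the inner loop is what keeps a block in the cache until every operator that shares it has run.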

| Tuples | Hash-1 | Hash-2 | Index-1 | Index-2 |
|---|---|---|---|---|
| Outer | 10<sup>6</sup> | 10<sup>6</sup> | 10<sup>6</sup> | 5,000 |
| Inner | 5,000 | 100 | 500,000 | 10<sup>6</sup> |

Table 1. The sizes of the outer and inner relations used by Micro-join. Hash-1 and Hash-2 are hash joins; Index-1 and Index-2 are index joins.<br />

| Parameters | L1 D cache | L2 cache |
|---|---|---|
| Size | 8KB | 512KB |
| Associativity | 4-way | 8-way |
| Cache line | 64B | 64B |
| Cache miss latency | 7 cycles | 350 cycles |

Table 2. Processor cache parameters of the evaluation platform.<br />

5 Experimental Evaluation<br />

5.1 Evaluation Methodology<br />

We implement MiniTasking in the Shore database storage manager [6], which provides most of the popular storage features used in a modern commercial DBMS. Previous work shows that Shore exhibits memory access behaviors similar to several commercial DBMSes [1]. Since Shore’s original query scheduler is fairly serialized (executing one query after another), we have extended Shore to use a slightly more sophisticated scheduler, which switches from one query to another after a certain time quantum or when the running query yields voluntarily for other reasons (e.g., I/Os). This scheduler emulates what would really happen with a multi-threaded or multi-process commercial database server. Our results also show that this scheduler performs slightly better than the original scheduler in Shore. Therefore, we use this time quantum-based scheduler as our baseline to compare with MiniTasking.<br />

Experimental Workloads. For DSS workloads, we use a TPC-H-like benchmark, which represents the activities of a complex business that manages, sells and distributes a large number of products [30]. The table sizes in our TPC-H-like database are as follows: 600572 tuples in Lineitem, 150000 tuples in Orders, and 20000 tuples in Part.<br />

Experimental Platform. Our evaluation is conducted on a machine with a 2.4GHz Intel Pentium 4 processor and 2.5GB of main memory. The processor includes two levels of caches, L1 and L2, whose characteristics are shown in Table 2. The operating system is Linux kernel 2.4.20. For measurements, we use a commercial tool, the Intel VTune performance tool [17], which collects performance statistics with negligible overhead.<br />

5.2 Results For Micro-Join<br />

We use a two-relation join query to examine MiniTasking, as shown in Figure 6. We vary the number of tuples in the two relations and examine four representative combinations, as shown in Table 1.<br />

Our experiments show that MiniTasking improves the performance of join operations by 4%–12%. When a hash join is used, if the hash table on the inner relation is small enough to fit into the cache, MiniTasking can be effectively applied to the outer relation. For example, when two instances of the join query are running, MiniTasking improves the query performance by 9% in the case of Hash-1 and 12% in the case of Hash-2, as shown in Figure 7. MiniTasking achieves a similar speedup for the index-based join, since it can break the index probing into mini-tasks. As a result, MiniTasking reduces the query execution time by up to 8.2% for two concurrently running instances of the join query.<br />

Fig. 7. Performance of join operations. MiniTasking reduces execution time by up to 12.1% for hash joins and 8.2% for index nested-loops joins. MT stands for MiniTasking.<br />

Fig. 8. Performance of throughput-real tests: (a) normalized execution time, (b) CPI breakdown. Each test runs several concurrent streams. The execution time of each test is normalized to the baseline without MiniTasking.<br />

5.3 Results For Throughput-Real<br />

We validate our MiniTasking strategy using a real workload, modeled after the throughput test of the TPC-H benchmark. The standard TPC-H throughput test is composed of multiple concurrent streams. Each stream contains a sequence of TPC-H queries in an order that the TPC-H benchmark specifies. Accordingly, our experiment follows these sequences and lets each stream execute the six TPC-H queries we implemented.<br />

Our experimental results show that MiniTasking is effective for this workload.<br />

Figure 8(a) shows that the execution time of each test is reduced by 11%-20% across different<br />

numbers of concurrent query streams. As shown in Figure 8(b), the performance<br />

gain comes from the reduction in L2 cache misses: MiniTasking reduces<br />

the number of L2 cache misses by 41%-79%. This is because MiniTasking’s locality-aware<br />

query scheduling and execution effectively improve the temporal locality of data accesses.<br />

Meanwhile, MiniTasking does not affect other processor events much, since it adds<br />

little overhead. Therefore, the improved L2 cache hit ratio is reflected proportionally<br />

in the end performance.
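
The link between fewer L2 misses and shorter execution time can be made concrete with a first-order model. This is a sketch under assumptions that are not from the paper: if a fraction f of baseline cycles is spent stalled on L2 misses, the per-miss penalty is constant, and all other CPI components stay unchanged, then removing a fraction r of the misses gives a normalized execution time of 1 - f*r. The function name and the value f = 0.25 are hypothetical; only the 41% and 79% miss reductions come from the text.

```python
def normalized_time(l2_stall_fraction, miss_reduction):
    """Normalized execution time after removing a fraction of L2 misses.

    Assumes L2-miss stalls make up `l2_stall_fraction` of baseline cycles
    and that nothing else changes (a deliberate simplification).
    """
    if not 0.0 <= l2_stall_fraction <= 1.0:
        raise ValueError("l2_stall_fraction must be in [0, 1]")
    if not 0.0 <= miss_reduction <= 1.0:
        raise ValueError("miss_reduction must be in [0, 1]")
    return 1.0 - l2_stall_fraction * miss_reduction


ASSUMED_STALL_FRACTION = 0.25  # hypothetical value, not measured in the paper
for reduction in (0.41, 0.79):
    t = normalized_time(ASSUMED_STALL_FRACTION, reduction)
    print(f"miss reduction {reduction:.0%}: normalized time {t:.3f}")
```

Under this model the execution-time gain scales linearly with the miss reduction, and its size is bounded by the share of time originally spent on L2 misses.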

5.4 Improvement Upon PAX Layout<br />

Figure 9 shows the effects of MiniTasking on a cache-conscious data layout such<br />

as PAX [1]. MiniTasking can still effectively reduce the number of L2 cache misses<br />

by 65% and the execution time by 9%. The performance speedup is less pronounced<br />

with PAX than with the default NSM layout because PAX significantly<br />

improves spatial locality in accesses, so the L2 cache miss time contributes less to the<br />

execution time than with NSM.

Fig. 9. The execution time and the CPI breakdown of four concurrent queries (TPCH-Q1): (a) normalized execution time; (b) CPI breakdown. The<br />

PAX data layout is used in Shore and MT.

6 Conclusion<br />

In this paper, we propose a technique called MiniTasking to improve database performance<br />

for concurrent query execution by reducing the number of processor cache<br />

misses via three levels of locality-based scheduling. Through query-level batching, operator-level<br />

grouping, and mini-task-level scheduling, MiniTasking can significantly reduce<br />

L2 cache misses and execution time. Our experimental results show that MiniTasking<br />

reduces the execution time by up to 12% for joins. For the TPC-H<br />

throughput test workload, MiniTasking reduces the number of L2 cache misses by up<br />

to 79% and improves the end performance by up to 20%. With the Partition Attributes<br />

Across (PAX) layout, MiniTasking further reduces the cache misses by 65% and the<br />

execution time by 9%, which indicates that our technique complements previous<br />

cache-conscious layouts well.
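
The three scheduling levels can be sketched in a few lines. This is a simplified illustration, not the paper's algorithm: the function names, the greedy batching rule, the `max_batch_size` limit (a stand-in for a cache-derived bound), and the example workload are all hypothetical, and execution dependencies between operators are not modeled.

```python
from collections import defaultdict


def batch_queries(query_tables, max_batch_size):
    """Level 1: greedily place queries that share a table in the same batch.

    `max_batch_size` is a hypothetical stand-in for a cache-derived limit.
    """
    batches = []
    for query, tables in query_tables.items():
        for batch in batches:
            shares_data = any(tables & query_tables[other] for other in batch)
            if shares_data and len(batch) < max_batch_size:
                batch.append(query)
                break
        else:  # no existing batch fits: start a new one
            batches.append([query])
    return batches


def group_operators(batch, query_tables):
    """Level 2: one group per table, listing the batch's queries that read it."""
    groups = defaultdict(list)
    for query in batch:
        for table in sorted(query_tables[query]):
            groups[table].append(query)
    return dict(groups)


def schedule_mini_tasks(groups, chunks_per_table):
    """Level 3: run every query of a group on a chunk before the next chunk."""
    schedule = []
    for table, queries in groups.items():
        for chunk in range(chunks_per_table[table]):
            for query in queries:
                schedule.append((query, table, chunk))
    return schedule


# Hypothetical workload: query name -> set of tables it reads.
query_tables = {"qa": {"fact"}, "qb": {"fact", "dim"}, "qc": {"other"}}
batches = batch_queries(query_tables, max_batch_size=2)
groups = group_operators(batches[0], query_tables)
schedule = schedule_mini_tasks(groups, {"fact": 2, "dim": 1})
print(schedule)
```

In the resulting schedule, `qa` and `qb` visit each chunk of the shared table back to back, which is the access pattern that keeps the chunk cache-resident for the second query.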

References<br />

1. A. Ailamaki, D. J. DeWitt, and M. D. Hill. Data page layouts for relational databases on<br />

deep memory hierarchies. The VLDB Journal, 11(3):198–215, 2002.

2. A. Ailamaki, D. J. DeWitt, M. D. Hill, and D. A. Wood. DBMSs on a modern processor:<br />

Where does time go? In VLDB ’99, pages 266–277, 1999.

3. A.-H. A. Badawy, A. Aggarwal, D. Yeung, and C.-W. Tseng. Evaluating the impact of memory<br />

system performance on software prefetching and locality optimizations. In International<br />

Conference on Supercomputing, pages 486–500, 2001.

4. P. A. Boncz, S. Manegold, and M. L. Kersten. Database architecture optimized for the new<br />

bottleneck: Memory access. In VLDB ’99, pages 54–65, 1999.

5. B. Calder, C. Krintz, S. John, and T. Austin. Cache-conscious data placement. In ASPLOS<br />

’98, pages 139–149, 1998.

6. M. J. Carey, D. J. DeWitt, M. J. Franklin, N. E. Hall, M. L. McAuliffe, J. F. Naughton, D. T.<br />

Schuh, M. H. Solomon, C. K. Tan, O. G. Tsatalos, S. J. White, and M. J. Zwilling. Shoring<br />

up persistent applications. In SIGMOD ’94, pages 383–394, 1994.

7. S. Carr, K. S. McKinley, and C.-W. Tseng. Compiler optimizations for improving data locality.<br />

In ASPLOS ’94, pages 252–262, 1994.

8. S. Chen, P. B. Gibbons, and T. C. Mowry. Improving index performance through prefetching.<br />

In SIGMOD ’01, pages 235–246, 2001.

9. S. Chen, P. B. Gibbons, T. C. Mowry, and G. Valentin. Fractal prefetching B+-trees: Optimizing<br />

both cache and disk performance. In SIGMOD ’02, pages 157–168, 2002.

10. N. N. Dalvi, S. K. Sanghai, P. Roy, and S. Sudarshan. Pipelining in multi-query optimization.<br />

In PODS ’01, pages 59–70, 2001.

11. C. Ding and K. Kennedy. Inter-array data regrouping. In Languages and Compilers for<br />

Parallel Computing, pages 149–163, 1999.

12. C. Ding and M. Orlovich. The potential of computation regrouping for improving locality.<br />

In ACM/IEEE SC2004, Nov. 6-12, 2004.

13. S. Finkelstein. Common expression analysis in database applications. In SIGMOD ’82,<br />

pages 235–245, 1982.

14. R. A. Hankins and J. M. Patel. Data morphing: An adaptive, cache-conscious storage technique.<br />

In VLDB ’03. Morgan Kaufmann, 2003.

15. S. Harizopoulos, V. Shkapenyuk, and A. Ailamaki. QPipe: A simultaneously pipelined relational<br />

query engine. In SIGMOD ’05, pages 383–394, 2005.

16. IBM. Personal communication with IBM, Jan. 2005.

17. Intel Corporation. Intel VTune Performance Analyzer.<br />

http://www.intel.com/software/products/vtune/, 2004.

18. K. Kennedy and K. S. McKinley. Maximizing loop parallelism and improving data locality<br />

via loop fusion and distribution. In Proceedings of the 6th International Workshop on<br />

Languages and Compilers for Parallel Computing, pages 301–320. Springer-Verlag, 1994.

19. K. Kim, S. K. Cha, and K. Kwon. Optimizing multidimensional index trees for main memory<br />

access. In SIGMOD ’01, pages 139–150. ACM Press, 2001.

20. J. L. Lo, L. A. Barroso, S. J. Eggers, K. Gharachorloo, H. M. Levy, and S. S. Parekh. An<br />

analysis of database workload performance on simultaneous multithreaded processors. In<br />

ISCA ’98, pages 39–50. IEEE Computer Society, 1998.

21. K. S. McKinley, S. Carr, and C.-W. Tseng. Improving data locality with loop transformations.<br />

ACM Transactions on Programming Languages and Systems, 18(4):424–453, July 1996.

22. K. O’Gorman, D. Agrawal, and A. E. Abbadi. Multiple query optimization by cache-aware<br />

middleware using query teamwork. In ICDE ’02, page 274. IEEE Computer Society, 2002.

23. J. Philbin, J. Edler, O. J. Anshus, C. C. Douglas, and K. Li. Thread scheduling for cache<br />

locality. In ASPLOS ’96, pages 60–71. ACM Press, 1996.

24. R. Ramamurthy, D. J. DeWitt, and Q. Su. A case for fractured mirrors. In VLDB ’02, pages<br />

430–441, 2002.

25. J. Rao and K. A. Ross. Making B+-trees cache conscious in main memory. In SIGMOD ’00,<br />

pages 475–486, New York, NY, USA, 2000. ACM Press.

26. P. Roy, S. Seshadri, S. Sudarshan, and S. Bhobe. Efficient and extensible algorithms for<br />

multi query optimization. In SIGMOD ’00, pages 249–260. ACM Press, 2000.

27. T. Sellis and S. Ghosh. On the multiple-query optimization problem. IEEE Transactions on<br />

Knowledge and Data Engineering, 2(2):262–266, 1990.

28. T. K. Sellis. Multiple-query optimization. ACM Trans. Database Syst., 13(1):23–52, 1988.

29. A. Shatdal, C. Kant, and J. F. Naughton. Cache conscious algorithms for relational query<br />

processing. In VLDB ’94, pages 510–521, 1994.

30. Transaction Processing Performance Council. http://www.tpc.org.

31. P. Trancoso, J.-L. Larriba-Pey, Z. Zhang, and J. Torrellas. The memory performance of DSS<br />

commercial workloads in shared-memory multiprocessors. In HPCA ’97, 1997.

32. M. E. Wolf and M. S. Lam. A data locality optimizing algorithm. In PLDI ’91, 1991.

33. J. Zhou and K. A. Ross. Buffering accesses to memory-resident index structures. In VLDB<br />

’03, pages 405–416, 2003.

34. Y. Zhou, L. Wang, D. W. Clark, and K. Li. Thread scheduling for out-of-core applications<br />

with memory server on multicomputers. In IOPADS ’99, pages 57–67. ACM Press, 1999.
