Search Web Page Classification Using Form Structural Characteristics


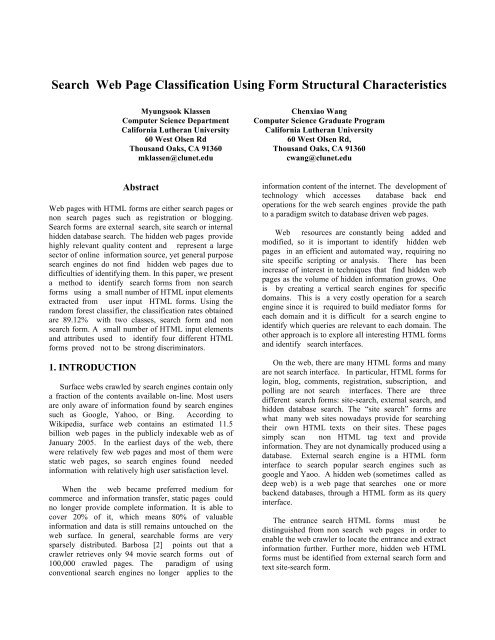

<strong>Search</strong> <strong>Web</strong> <strong>Page</strong> <strong>Classification</strong> <strong>Using</strong> <strong>Form</strong> <strong>Structural</strong> <strong>Characteristics</strong><br />

Myungsook Klassen, Computer Science Department, California Lutheran University, 60 West Olsen Rd, Thousand Oaks, CA 91360, mklassen@clunet.edu

Chenxiao Wang, Computer Science Graduate Program, California Lutheran University, 60 West Olsen Rd, Thousand Oaks, CA 91360, cwang@clunet.edu

Abstract

Web pages with HTML forms are either search pages or non-search pages, such as registration or blogging pages. Search forms are external search, site search, or internal hidden-database search forms. Hidden web pages provide highly relevant, high-quality content and represent a large share of online information sources, yet general-purpose search engines do not find them because they are difficult to identify. In this paper, we present a method to distinguish search forms from non-search forms using a small number of HTML input elements extracted from HTML forms that accept user input. Using the random forest classifier, we obtain a classification rate of 89.12% with two classes, search form and non-search form. The small number of HTML input elements and attributes used proved not to be strong discriminators for identifying four different types of HTML forms.

1. INTRODUCTION

The surface web crawled by search engines contains only a fraction of the content available online. Most users are aware only of information found by search engines such as Google, Yahoo, or Bing. According to Wikipedia, the surface web contained an estimated 11.5 billion web pages in the publicly indexable web as of January 2005. In the earliest days of the web, there were relatively few web pages and most of them were static, so search engines found needed information with a relatively high level of user satisfaction. When the web became a preferred medium for commerce and information transfer, static pages could no longer provide complete information. Search engines are able to cover only about 20% of online information, which means that 80% of valuable information and data remains untouched by surface-web search. In general, searchable forms are very sparsely distributed: Barbosa [2] points out that a crawler retrieves only 94 movie search forms out of 100,000 crawled pages. The paradigm of using conventional search engines no longer applies to the information content of the internet. The development of technology that accesses database back-end operations for web search engines provides the path to a paradigm switch toward database-driven web pages.

Web resources are constantly being added and modified, so it is important to identify hidden web pages in an efficient and automated way that requires no site-specific scripting or analysis. Interest in techniques that find hidden web pages has increased as the volume of hidden information grows. One approach is to create vertical search engines for specific domains. This is a very costly operation for a search engine, since it requires building mediator forms for each domain, and it is difficult for a search engine to identify which queries are relevant to each domain. The other approach is to explore all interesting HTML forms and identify search interfaces.

On the web, there are many HTML forms, and many are not search interfaces. In particular, HTML forms for login, blogging, comments, registration, subscription, and polling are not search interfaces. There are three different kinds of search forms: site search, external search, and hidden database search. "Site search" forms are what many web sites nowadays provide for searching the HTML text on their own sites. These pages simply scan non-tag text and provide information; they are not dynamically produced using a database. An external search form is an HTML form interface for searching popular search engines such as Google and Yahoo. A hidden web page (sometimes called a deep web page) is a web page that searches one or more backend databases through an HTML form serving as its query interface.

Entrance search HTML forms must be distinguished from non-search web pages to enable a web crawler to locate the entrance and extract further information. Furthermore, hidden web HTML forms must be distinguished from external search forms and text site-search forms.

In this paper, we attempt first to distinguish search forms from non-search forms, and second, to distinguish hidden web search forms from the two other types of search forms, using a small number of HTML input element attributes. We avoid a sparse-matrix representation because such data are often very large and are overfitted by classifiers if care is not taken. Besides, HTML input element attributes such as names, values, labels and URLs in a sparse matrix contain many different and variant string values, which are hard to use without a daunting string/text processing task.

Another contribution of our work is that we conducted our experiments on a real data set collected by crawling web pages, to capture the newest web page trends instead of using dated archived data from data repositories such as UIUC. Lastly, the performance of a random forest classifier is explored to evaluate its suitability for identifying different types of forms.

The rest of the paper is organized as follows: Section 2 presents related work in the field, and Section 3 describes the input values created from web pages. In Section 4, the Random Forest classifier is briefly discussed. Numerical results are presented in Section 5, followed by discussions and conclusions in Section 6.

2. RELATED WORK

In what follows, we give an overview of previous work on hidden web classification and a brief review of approaches that address different aspects of hidden web data retrieval.

In 2000, He et al. [6] surveyed the deep web, studying its scale, subject distribution, and search engine coverage, and reported their findings in 2007. They report that 72% of interfaces were found within depth 3 and 94% of web databases appeared within depth 3. They classified web databases into two types: unstructured databases, such as images, audio and video, and structured databases, which provide data objects as structured "relational" records with attribute-value pairs. Hidden web databases are dominated by structured databases, at 77.2%. The top three subjects of hidden web pages are business & economy, computers & Internet, and education. The last question presented in their paper was "How do search engines cover the deep web?" They report that Google and Yahoo indexed 32% of hidden web pages while MSN indexed 11%.

Automatic hidden web database classification: post-query techniques

There are two methods to classify hidden web page forms. One is pre-query, which classifies a web database according to features of its query forms, such as HTML input tag types and labels. The other is post-query, which identifies the search interfaces, submits probe queries and analyzes the results.

Hidden web database classification can be performed based on probing [4][5]. Gong et al. [4] created a prototype for classifying hidden web databases into a predefined category hierarchy using query probing and link evaluation. Features for each class are extracted from randomly selected web pages. The hidden web database is probed by analyzing the results of class-specific queries sent to it. The classification method used is the k-means nearest neighbor method. In [5], the authors did similar work but used an extensive number of rules or queries, probing multiple times, while Gong et al. used only one query for probing each category. In [11], the authors used semantic information in the feature vectors of forms and centroid vectors to classify hidden web databases. The support vector machine classification method was used with data obtained from UIUC databases to obtain high classification rates, between 90.87% and 98.37%, in five categories. In [5], the authors trained a rule-based document classifier and then used the classifier's rules to generate probing queries. The queries are sent to the databases, which are then classified based on the number of matches that they produce for each query. The UCI KDD archive database was used for their work.

Identifying hidden web pages: pre-query techniques

Pre-query techniques use visible features of forms; form attribute labels, for example, are used as classifier features. In [2], the authors proposed adaptive crawling strategies to efficiently locate the entry points to hidden web databases. The strategies use both links and forms to improve identification of hidden databases. For link classification, features present in the anchor, URL, and text around links are extracted, and from them the terms with the highest document frequency are selected.

Hess and Kushmerick [7] used input tag labels, input names and input types from 129 collected forms. For instance, from an HTML fragment consisting of the label "Enter name:" followed by a text input named "user", the sequence ["enter", "name", "user", "text"] is gathered for use by a Naïve Bayes classifier. They reported a classification rate of 82%.

Barbosa and Freire [1] used 216 searchable forms from the UIUC repository and gathered 259 non-searchable forms as negative examples. HTML form structure attributes such as the number of hidden tags, radio tags, file inputs, submit tags, image inputs, buttons, resets, password tags and textboxes, the number of items in selection lists, the sum of text sizes in textboxes, and the submission method (post versus get) were used as attributes for several classifiers. Two thirds of the data were used as a training data set and one third as a test data set for a multilayer perceptron, Naïve Bayes, C4.5 and support vector machines. They reported error rates of 9.05% with C4.5 and 24% with the Naïve Bayes classifier.

Ye et al. [10] used a sparse matrix of HTML structure features. Features are the value and name attributes of "input", "select", "textarea", and "label" elements, used to create a sequence such as [input1, input1-name, input1-value] for each HTML input type. The numbers of "input", "select", "label", and "textarea" elements in each form are computed and used as features. In addition, the "name" and "method" attributes of the HTML form element and the "src" and "alt" values of "input-image" elements are used. A data set made up from the MetaQuerier project [10] and websites crawled from Search Engine Guide was used for their experiments. Bayes, C4.5 decision tree, support vector machine and random forest classifiers produced classification rates from a lowest of 79.78% to a highest of 93.88%.

3. FORM ENTRY DATA

3.1 Form collections

There are many different HTML forms, and many of them are not search interfaces. The forms are categorized into the following four groups:

• External search: forms that give access to external web search sites, such as Google, for the convenience of users. They are not considered an entry to an internal database.

• No search: forms for login, subscription, registration, polling, or blogging. They are not considered an entry to an internal database.

• Site search: forms that many web sites now provide for searching their own HTML pages. They are not considered an entry to an internal database.

• Internal database search: forms that provide an entry to the site's own backend databases. These are considered true hidden web pages.

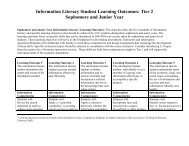

(a) External search form

(b) Non search form

(c) Site search form

(d) Internal database search form

Figure 1: Different HTML forms

The data used for our experiments were created by crawling the web site www.searchengineguide.com with the web crawler WebLech to a depth of 3, and each form was manually categorized into one of the four types. Examples of each type are shown in Figure 1. Initially, 792 HTML forms were collected by the crawler, and only 9.8% of all forms were internal database forms. The other categories collected were 12.86%, 46.36%, and 30.96% for external search, site search, and no search, respectively. To increase the number of internal database search forms, 89 forms were manually collected in 10 different subject areas and added to the sample set, which now contains 874 samples.

3.2 Form input elements

A home-grown parser was written in Java to scan web pages and break them into HTML elements and their attributes. Inside HTML forms, the following elements (often called tags) were considered important for users to enter information for internal database search. Initially based on the work of Ye et al. [10], but with changes based on our own ideas, the following elements and their attributes were gathered:

• Form element: attributes action and name. For action, only the file name from the URL was used. The "method" attribute was not used.

• Input element type="text": name and value attributes. These form a pair for each text input.

• Input element type="checkbox": data are collected the same way as for input type text.

• Input element type="radio": data are collected the same way as for input type text.

• Select element: name and value attributes. These form a set of triplets for each select element.

• Textarea element: name and value attributes. These form a set of triplets for each textarea element.

• Labels from all elements and types inside HTML forms were gathered as one attribute field containing comma-delimited text values.
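The authors' parser was written in Java and is not shown in the paper. As an illustration only, the collection step listed above can be sketched with Python's standard `html.parser`. The class name `FormElementExtractor` and helper `labels_field` are hypothetical. Because the third member of the select/textarea triplets is not recoverable from the text, this sketch stores only (name, value) pairs for those elements.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse
import posixpath


class FormElementExtractor(HTMLParser):
    """Hypothetical sketch: collect per-form attributes like those in Section 3.2."""

    def __init__(self):
        super().__init__()
        self.forms = []          # one dict per <form> element
        self._in_form = False
        self._in_label = False

    def handle_starttag(self, tag, attrs):
        a = {k: (v or "") for k, v in attrs}  # valueless attributes become ""
        if tag == "form":
            self._in_form = True
            self.forms.append({
                # keep only the file name of the action URL; "method" is ignored
                "action": posixpath.basename(urlparse(a.get("action", "")).path),
                "name": a.get("name", ""),
                "text": [], "checkbox": [], "radio": [],
                "select": [], "textarea": [], "labels": [],
            })
        elif not self._in_form:
            return               # ignore elements outside any form
        elif tag == "input":
            kind = a.get("type", "text").lower() or "text"
            if kind in ("text", "checkbox", "radio"):
                self.forms[-1][kind].append((a.get("name", ""), a.get("value", "")))
        elif tag in ("select", "textarea"):
            # only (name, value) is stored; the paper's third triplet member is unknown
            self.forms[-1][tag].append((a.get("name", ""), a.get("value", "")))
        elif tag == "label":
            self._in_label = True

    def handle_endtag(self, tag):
        if tag == "form":
            self._in_form = False
        elif tag == "label":
            self._in_label = False

    def handle_data(self, data):
        if self._in_form and self._in_label and data.strip():
            self.forms[-1]["labels"].append(data.strip())


def labels_field(form):
    """All label texts of one form as a single comma-delimited field."""
    return ",".join(form["labels"])
```

Each call to `feed` may add several forms; in a real crawl, flattening these per-element lists into fixed columns is what produces the wide, sparse matrix described next.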

The numbers of elements in forms vary greatly. Some forms contain a large number of form elements, while some contain none at all. Table 1 shows the maximum number of each HTML form element found in one HTML form. As a result, data created in this fashion form a large sparse matrix with 144 attributes and 877 samples.

| HTML form element | Maximum number |
|---|---|
| Input type text | 14 |
| Select | 6 |
| Input type checkbox | 16 |
| Input type radio | 18 |
| Textarea | 2 |

Table 1: Maximum number of form input elements found in one form

There are two main problems with using this data set as features for a classification method. Not only is the data set very sparse, but the names and values gathered also have many variations (name, nam, user name, last name, lname, for instance) when meaningful names are used at all, and many names and values are surprisingly meaningless, such as "tinyturing" and "lang-de". Frequently, an element's name and value are missing, since they are not required in HTML. Laborious preprocessing of the name, value and label text strings is required for them to be useful as classification features.

4. RANDOM FOREST CLASSIFIER

Random forest [3] is a meta-learner that consists of many individual decision trees. Each tree votes on an overall classification for a given input, and the random forest algorithm chooses the classification with the most votes. Each decision tree is built from a random sample of the training dataset drawn with replacement (a bootstrap sample); that is, some entities are included more than once in the sample, and others do not appear at all. In building each decision tree, a random subset of the available variables is considered at each node to choose how best to partition the data at that node. Each decision tree is grown to its maximum size, with no pruning performed. Together, the resulting decision trees form the final ensemble model, in which each tree votes for the result and the majority wins.
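The bootstrap sampling and majority voting described above can be sketched in a few lines. This is a minimal illustration, not WEKA's implementation; the function names `bootstrap_sample` and `majority_vote` are hypothetical.

```python
import random
from collections import Counter


def bootstrap_sample(n, rng):
    """Draw n training indices with replacement (a bootstrap sample).

    Returns (in_bag, out_of_bag): in_bag may repeat indices; out_of_bag
    lists the indices never drawn (on average about 1/e, or ~36.8%, of n
    for large n).
    """
    in_bag = [rng.randrange(n) for _ in range(n)]
    out_of_bag = sorted(set(range(n)) - set(in_bag))
    return in_bag, out_of_bag


def majority_vote(votes):
    """Return the class predicted by the most trees (ties go to the
    label that appears first in votes)."""
    return Counter(votes).most_common(1)[0][0]
```

For example, `majority_vote(["search", "non search", "search"])` returns `"search"`. Passing an explicit `random.Random` instance keeps each tree's sample reproducible.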

There are two different sources of randomness in random forest: the random training set (bootstrap) and the random selection of attributes. Using a random selection of attributes to split each node yields favorable error rates and is more robust with respect to noise. The selected attributes form nodes using standard tree-building methods: diversity is obtained by randomly choosing candidate attributes at each node of a tree and then using the candidate that provides the best split. Each tree is grown as fully as possible, without pruning, until no further split provides additional information. In Breiman's early work [3], each individual tree is given an equal vote; later versions of random forest allow both weighted and unweighted voting.
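The per-node random attribute selection can be illustrated as follows: sample `m` candidate attributes (the role played by numFeatures/Mtry in Section 5), then pick the best threshold split among them only. Gini impurity is used here as the split measure; that is an assumption, since the paper does not name the criterion WEKA applies. The function names are hypothetical.

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_random_split(rows, labels, m, rng):
    """Pick the best (impurity, feature, threshold) among m random features.

    rows: list of numeric feature vectors; labels: class of each row.
    A split sends rows with x[f] <= t to the left child.
    Returns None if no candidate feature separates the rows.
    """
    candidates = rng.sample(range(len(rows[0])), m)  # random attribute subset
    best = None
    for f in candidates:
        for t in sorted({r[f] for r in rows}):
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            if not left or not right:
                continue  # split does not partition the node
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(rows)
            if best is None or score < best[0]:
                best = (score, f, t)
    return best
```

With a smaller `m`, each node sees fewer candidate attributes, which is the source of the lower tree-to-tree correlation (and lower individual strength) discussed next.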

The random forest algorithm computes the out-of-bag (OOB) error. Each training sample is classified using only the trees whose bootstrap samples did not include it, and the resulting misclassification rate over the entire forest is called the out-of-bag error; it is useful for estimating the performance of the classifier without a separate test set or cross-validation. The out-of-bag error of a random forest depends on the strength of the individual trees in the forest and the correlation between them. With fewer attributes used for each split, the correlation between any two trees decreases, and the strength of each tree also decreases. These two effects act on the error rate of the random forest in opposite directions: lower correlation decreases the error rate, while lower strength increases it.
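A minimal sketch of the out-of-bag computation, assuming each tree's predictions for all training samples and each tree's in-bag index set are already available (the function and argument names are hypothetical):

```python
from collections import Counter


def oob_error(labels, in_bag_sets, tree_predictions):
    """Out-of-bag error rate of a forest.

    labels[i]: true class of sample i
    in_bag_sets[k]: set of sample indices used to train tree k
    tree_predictions[k][i]: class that tree k predicts for sample i
    Each sample is classified by a vote of only the trees that did not
    see it in training; samples that were in-bag for every tree are skipped.
    """
    wrong = counted = 0
    for i, y in enumerate(labels):
        votes = [preds[i] for bag, preds in zip(in_bag_sets, tree_predictions)
                 if i not in bag]
        if not votes:
            continue
        counted += 1
        if Counter(votes).most_common(1)[0][0] != y:
            wrong += 1
    return wrong / counted if counted else float("nan")
```

Because every vote comes from a tree that never trained on that sample, the result behaves like a held-out estimate without setting aside a test set.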

5. EXPERIMENTS

In our work, to eliminate the sparse-matrix problem and to avoid preprocessing of strings, numeric data were gathered instead: the numbers of input elements of type text, checkbox and radio, and the numbers of select and textarea elements in a form.

In addition, all strings from label elements, and from the name and value attributes of input elements of type text, checkbox and radio and of select and textarea elements, are scanned for the word "search" and its synonyms. Proximity of a string to a particular input element or type is not considered. Some synonyms of the word "search" in the context of our research work are "inquiry", "examine", "inspect", "investigate", "look", "query" and "find". However, the word "search" accounted for over 99% of occurrences; "query" was found 4 times and "find" 2 times. The other words were not used at all in our data samples.
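The input attributes described above (five element counts plus a search-word indicator) can be sketched for a single form's HTML as follows. Several details are assumptions not stated in the paper: the indicator is a 0/1 flag, matching is a case-insensitive substring test (so "find" would also match "findings"), and the attribute order is arbitrary. `FormFeatureParser` and `feature_vector` are hypothetical names.

```python
from html.parser import HTMLParser

# "search" plus the synonyms listed in Section 5
SEARCH_WORDS = ("search", "inquiry", "examine", "inspect",
                "investigate", "look", "query", "find")


class FormFeatureParser(HTMLParser):
    """Counts input elements in one form and keeps the strings to scan."""

    def __init__(self):
        super().__init__()
        self.counts = {"text": 0, "checkbox": 0, "radio": 0,
                       "select": 0, "textarea": 0}
        self.strings = []        # label text plus name/value attributes
        self._in_label = False

    def handle_starttag(self, tag, attrs):
        a = {k: (v or "") for k, v in attrs}
        if tag == "label":
            self._in_label = True
            return
        if tag == "input":
            kind = a.get("type", "text").lower() or "text"
            if kind not in ("text", "checkbox", "radio"):
                return           # submit, hidden, password, ... are not counted
        elif tag in ("select", "textarea"):
            kind = tag
        else:
            return
        self.counts[kind] += 1
        self.strings += [a.get("name", ""), a.get("value", "")]

    def handle_endtag(self, tag):
        if tag == "label":
            self._in_label = False

    def handle_data(self, data):
        if self._in_label:
            self.strings.append(data)


def feature_vector(form_html):
    """Five element counts plus a 0/1 'search word present' flag."""
    parser = FormFeatureParser()
    parser.feed(form_html)
    parser.close()
    text = " ".join(parser.strings).lower()
    has_word = int(any(word in text for word in SEARCH_WORDS))  # substring match
    c = parser.counts
    return [c["text"], c["checkbox"], c["radio"],
            c["select"], c["textarea"], has_word]
```

Since proximity is ignored, any occurrence of a search word anywhere in the scanned strings sets the flag, which is consistent with the false matches discussed in Section 6.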

In our analysis, the Random Forest implementation in WEKA 3.5.6, developed by the University of Waikato, was used for the experiments. The data file has 6 input attributes and 1 target class attribute. The target class attribute values are external search, non search, site search, and internal database search. Table 2 shows the number of samples in each class.

| External search | Non search | Site search | Internal db search |
|---|---|---|---|
| 104 | 288 | 344 | 151 |

Table 2: Number of samples in each class

Two parameters were controlled in our work for performance evaluation: the number of attributes used in random selection, "numFeatures" in WEKA (referred to below as Mtry), and the number of trees to be generated, "numTrees". The maximum tree depth was set to 0 to build trees of unlimited depth. For all data sets, numTrees was varied up to a maximum of 10. Figure 2 shows the classification rates for Mtry values 3, 4 and 5 at each numTrees setting.

5.1 Four-class classification

Two test methods were used: 10-fold cross-validation and a separate test sample set. With 10-fold cross-validation as the test option, the results for various numbers of trees and attributes are shown in Figure 2. The highest rate, 66.93%, was obtained when all 5 attributes were used with one tree. Next, testing was done with a test sample set: the entire file was divided into two files, 70% as a training data set and 30% as a test data set. The classification rates obtained range between 62.63% and 62.89%, about 4% lower than those obtained by 10-fold cross-validation.
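The two test options can be sketched as index splits. This is an illustration under assumptions: the paper does not say how its 70/30 split was drawn, and this plain sketch shuffles without stratifying by class (WEKA's own procedures may differ). The function names are hypothetical.

```python
import random


def train_test_split(n, train_fraction, rng):
    """Shuffle indices 0..n-1 and cut them into a training and a test part."""
    idx = list(range(n))
    rng.shuffle(idx)
    cut = round(n * train_fraction)
    return idx[:cut], idx[cut:]


def k_fold_indices(n, k, rng):
    """Yield (train, test) index lists for k-fold cross-validation.

    Every sample lands in exactly one test fold; this sketch does not
    stratify the folds by class.
    """
    idx = list(range(n))
    rng.shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i, test in enumerate(folds):
        train = [j for m, fold in enumerate(folds) if m != i for j in fold]
        yield train, test
```

With 10 folds, every sample is tested exactly once by a model trained on the other 90% of the data, whereas a single 70/30 split tests only 30% of the samples once; the two estimates can therefore differ, as they do here.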

The confusion matrix is shown in Table 3. The site search result is surprisingly low, with a zero classification rate, while internal database search shows a 0.99 classification rate. However, there are many false positives (FP) for internal database search from the external search, non search and site search classes, with values 0.42, 0.18 and 0.50 respectively.
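Each row of Table 3 sums to 1.0, which suggests the matrix is normalized per actual class. The raw counts are not reported, so the sketch below only shows how such a row-normalized matrix is obtained from counts; `row_normalize` is a hypothetical name and the example counts are made up.

```python
def row_normalize(counts):
    """Convert a confusion matrix of counts into per-row fractions.

    Rows are actual classes and columns are predicted classes, so the
    diagonal entry of each row is that class's TP rate, and an
    off-diagonal entry is the fraction of that actual class assigned
    to the column's class.
    """
    result = []
    for row in counts:
        total = sum(row)
        result.append([c / total if total else 0.0 for c in row])
    return result
```

For example, `row_normalize([[1, 3], [0, 4]])` gives `[[0.25, 0.75], [0.0, 1.0]]`. Read this way, the 0.42, 0.18 and 0.50 entries in the last column of Table 3 are the fractions of each other class predicted as internal database search.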

Figure 2: Classification rates by 10-fold cross-validation

| Actual / predicted | External search | Non search | Site search | Internal db search |
|---|---|---|---|---|
| External search | 0.02 | 0.56 | 0.0 | 0.42 |
| Non search | 0.02 | 0.80 | 0.0 | 0.18 |
| Site search | 0.09 | 0.41 | 0.0 | 0.50 |
| Internal db search | 0.0 | 0.0 | 0.01 | 0.99 |

Table 3: Test sample classification confusion matrix

5.2 Two-class classification

The second experiment was to separate all search forms (external search, site search and internal db search) from non-search forms, such as password entry and registration. The classification rate with the test sample set is consistently 89.12% for numbers of attributes from 1 to 5 and numbers of trees from 1 to 10. Table 4 shows statistics from the test data set classification. With only two classes, the classification rate increases from 66.93% to 89.12%.

| Statistic | Value |
|---|---|
| Classification rate | 0.8912 |
| Out-of-bag error | 0.1705 |
| Mean absolute error | 0.1667 |
| RMSE | 0.2872 |
| Search class TP rate | 0.944 |
| Search class FP rate | 0.279 |
| Non search class TP rate | 0.721 |
| Non search class FP rate | 0.056 |

Table 4: Statistics of the 2-class test sample data set classification
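The per-class rates in Table 4 follow the usual two-class definitions, under which one class's FP rate equals 1 minus the other class's TP rate; Table 4 is consistent with this (0.279 = 1 - 0.721 and 0.056 = 1 - 0.944). The sketch below computes these rates from confusion counts, treating "search" as the positive class. The function name is hypothetical, and the counts in the usage example are made up because the paper does not report them.

```python
def two_class_rates(tp, fn, fp, tn):
    """Rates for a search / non-search problem with 'search' as positive.

    tp: search forms predicted search      fn: search forms predicted non search
    fp: non-search forms predicted search  tn: non-search forms predicted non search
    """
    return {
        "classification_rate": (tp + tn) / (tp + fn + fp + tn),
        "search_tp_rate": tp / (tp + fn),
        "search_fp_rate": fp / (fp + tn),
        "non_search_tp_rate": tn / (fp + tn),
        "non_search_fp_rate": fn / (tp + fn),
    }
```

With hypothetical counts `two_class_rates(17, 1, 2, 5)`, the classification rate is 22/25 = 0.88, and the search FP rate (2/7) and non-search TP rate (5/7) sum to 1.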

6. CONCLUSIONS AND FUTURE WORK

It is apparent from our results that the numbers of input type text, input type checkbox, input type radio, select elements and textarea elements, along with the word "search", are not enough to distinguish four different classes: site search, external search, internal database search and non search (login, registration, discussion and blogging forms are considered non search). The three classes external search, internal database search and site (text) search are very similar in their HTML and PHP code and have very similar structure in appearance and layout. In recent years, many web pages have provided external search and site search facilities, which have almost become a standard feature of any web page.

There is not enough statistical difference among the classes in the number of input type text elements, as shown in the scatter diagram (Figure 3). Other HTML elements and types used in other work show similar results. When we combined the three search types into one and treated our data as a two-class problem, similar results were obtained.

Figure 3: Scatter diagram of the numbers of input type text (y-axis) for the 4 classes (x-axis)

In contrast, the word "search" is a good discriminator for distinguishing the non-search class from the three search classes. Over 99.9% of search-class forms contain the word "search", while approximately 20% of non-search forms contain it. This is because, in our research work, we did not consider the proximity of the word "search" or similar words to the location of an HTML input form element or type. In one case, an irrelevant URL in a text input of a non-search page contained the word "search".

Our two-class classification rate is close to 90%, which suggests that a simple set of input attributes can be used to distinguish search forms from login or registration pages. As future work, we propose an incremental learning machine architecture that consists of two stages:

Stage One: non-search forms are separated from all search forms.

Stage Two: the three types of search forms (site search, external search and internal database search) are separated.
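The proposed two-stage architecture can be sketched as a cascade in which only forms that stage one labels as search reach stage two. This is a hypothetical illustration: the paper does not specify the stage classifiers, so `stage_one` and `stage_two` are placeholder callables (for instance, two separately trained random forests), and `two_stage_classifier` is an assumed name.

```python
from typing import Callable, Sequence

Features = Sequence[float]
Classifier = Callable[[Features], str]


def two_stage_classifier(stage_one: Classifier, stage_two: Classifier) -> Classifier:
    """Compose the proposed cascade.

    stage_one returns "search" or "non search"; only forms it labels
    "search" are passed on to stage_two, which returns one of
    "site search", "external search" or "internal db search".
    """
    def classify(x: Features) -> str:
        if stage_one(x) == "non search":
            return "non search"
        return stage_two(x)
    return classify
```

As a toy usage example, assuming a hypothetical feature vector whose last entry is a 0/1 search-word flag and whose fourth entry is a select-element count, `stage_one = lambda x: "search" if x[5] else "non search"` and a placeholder rule `stage_two = lambda x: "internal db search" if x[3] > 0 else "site search"` can be combined with `two_stage_classifier(stage_one, stage_two)`. These rules are illustrative only and are not derived from the results above. Separating the stages lets each classifier be trained and tuned on its own, easier sub-problem.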

7. REFERENCES

[1] L. Barbosa and J. Freire. "Combining classifiers to identify online databases." WWW 2007, Banff, Canada, 2007.

[2] L. Barbosa and J. Freire. "An adaptive crawler for locating hidden web entry points." WWW 2007, Banff, Canada, 2007.

[3] L. Breiman. "Random forests." Machine Learning, Vol. 45, No. 1, pp. 5-32, 2001.

[4] Z. Gong, J. Zhang, and Q. Liu. "Automatic hidden web database classification." PKDD 2007, Springer-Verlag Berlin Heidelberg, pp. 454-461, 2007.

[5] L. Gravano, P. Ipeirotis, and M. Sahami. "QProber: a system for automatic classification of hidden web databases." ACM Transactions on Information Systems, Vol. 21, No. 1, 2003.

[6] B. He, M. Patel, Z. Zhang, and K. Chang. "Accessing the deep web." Communications of the ACM, Vol. 50, No. 5, pp. 95-101, May 2007.

[7] A. Hess and N. Kushmerick. "Automatically attaching semantic metadata to web services." Proceedings of IIWeb, pp. 111-116, 2003.

[8] J. Madhavan et al. "Google's deep web crawl." VLDB '08, Auckland, New Zealand, 2008.

[9] A. Statnikov, L. Wang, and C. Aliferis. "A comprehensive comparison of random forests and support vector machines for microarray-based cancer classification." BMC Bioinformatics, 9:319, 2008.

[10] Y. Ye, H. Li, X. Deng, and J. Huang. "Feature weighting random forest for detection of hidden web search interfaces." Computational Linguistics and Chinese Language Processing, Vol. 13, No. 4, Feb. 2009.

[11] W. Zuo, Y. Wang, X. Wang, D. Zhang, and T. Peng. "Automatic classification of deep web database based on centroid and WordNet." Journal of Computational Information Systems, Vol. 6, No. 1, pp. 63-70, 2010.