Run-Time Parallelization of Large FEM Analyses with PERMAS - intes

Run-Time Parallelization of Large FEM Analyses with PERMAS - intes

Run-Time Parallelization of Large FEM Analyses with PERMAS - intes

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

[NASA’97] 4th National Symposium on <strong>Large</strong>-Scale Analysis and Design on High-Performance<br />

Computers and Workstations, October 14-17, 1997 in Williamsburg, VA<br />

<strong>Run</strong>-<strong>Time</strong> <strong>Parallelization</strong> <strong>of</strong><br />

<strong>Large</strong> <strong>FEM</strong> <strong>Analyses</strong> <strong>with</strong> <strong>PERMAS</strong><br />

1 INTRODUCTION<br />

M. Ast, ∗ R. Fischer, ∗ J. Labarta, ∗∗ H. Manz ∗<br />

∗ INTES Ingenieurgesellschaft für technische S<strong>of</strong>tware mbH, Stuttgart, Germany<br />

∗∗ Universitat Politécnica de Catalunya, Barcelona, Spain<br />

The general purpose Finite Element system <strong>PERMAS</strong> 1 has been extended to<br />

support shared and distributed parallel computer architectures as well as workstation<br />

clusters. The methods used to parallelize this large application s<strong>of</strong>tware<br />

package are <strong>of</strong> high generality and have the capability to parallelize all<br />

mathematical operations in a <strong>FEM</strong> analysis – not only the solver. Utilizing<br />

the existing hyper-matrix data structure for large, sparsely populated matrices,<br />

a programming tool called PTM was introduced that automatically parallelizes<br />

block matrix operations on-the-fly. PTM totally hides parallelization<br />

from higher order algorithms, thus giving the physically oriented expert a virtually<br />

sequential programming environment. An operation graph <strong>of</strong> sub-matrix<br />

operations is asynchronously build and executed. A clustering algorithm distributes<br />

the work, performing a dynamic load balancing and exploiting data<br />

locality. Furthermore a distributed data management system allows free data<br />

access from each node. The generality <strong>of</strong> the approach is demonstrated by<br />

some benchmark examples dealing <strong>with</strong> different types <strong>of</strong> <strong>FEM</strong> analyses.<br />

Two major characteristics have been persistent ever<br />

since the FE-Method is being used. Firstly, FE simulations<br />

are CPU time consuming, and secondly, they<br />

are I/O intensive !<br />

The ongoing evolution in the size and complexity <strong>of</strong><br />

finite element models shows that the actual available<br />

computer resources are still a limiting factor. The tendency<br />

to produce finer meshes <strong>with</strong> a higher number <strong>of</strong><br />

unknowns is still very strong. The users enlarge their<br />

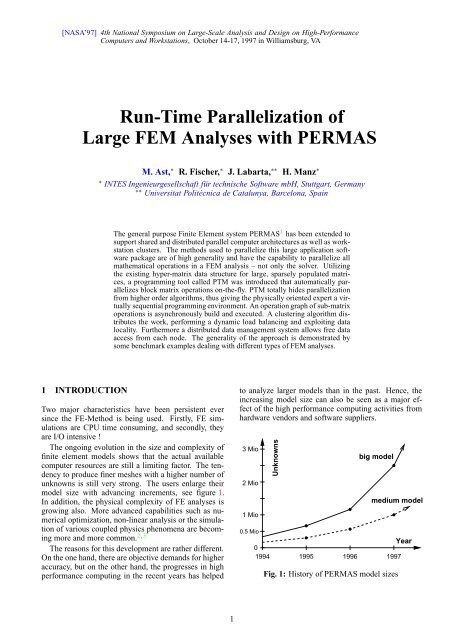

model size <strong>with</strong> advancing increments, see figure 1.<br />

In addition, the physical complexity <strong>of</strong> FE analyses is<br />

growing also. More advanced capabilities such as numerical<br />

optimization, non-linear analysis or the simulation<br />

<strong>of</strong> various coupled physics phenomena are becoming<br />

more and more common. 2, 3<br />

The reasons for this development are rather different.<br />

On the one hand, there are objective demands for higher<br />

accuracy, but on the other hand, the progresses in high<br />

performance computing in the recent years has helped<br />

1<br />

to analyze larger models than in the past. Hence, the<br />

increasing model size can also be seen as a major effect<br />

<strong>of</strong> the high performance computing activities from<br />

hardware vendors and s<strong>of</strong>tware suppliers.<br />

3 Mio<br />

2 Mio<br />

1 Mio<br />

Unknowns<br />

big model<br />

medium model<br />

0.5 Mio<br />

Year<br />

0<br />

1994 1995 1996 1997<br />

Fig. 1: History <strong>of</strong> <strong>PERMAS</strong> model sizes

Y Z<br />

Since many years the time consuming pre-processing<br />

phase is the most costly part in analysis projects. Therefore<br />

automatic meshing tools were developed in order<br />

to reduce the turnaround times <strong>of</strong> this phase. Figure 2<br />

shows a typical example for a shell element model<br />

which can be generated from existing geometry in reasonable<br />

time only <strong>with</strong> at least half-automatic mesh<br />

generators. Automatic mesh generation does, however,<br />

almost inevitable lead to fine meshes <strong>with</strong> a large number<br />

<strong>of</strong> unknowns.<br />

Another trend is the increasing number <strong>of</strong> <strong>FEM</strong> computations.<br />

For example, the application <strong>of</strong> automatic<br />

mesh generators also allows even less experienced engineers<br />

to use such methods more and more.<br />

X<br />

Fig. 2: Car body <strong>with</strong> 130,000 shell elements<br />

(<strong>PERMAS</strong> at Karmann GmbH, Osnabrück)<br />

Fig. 3: Transmission housing <strong>with</strong> 311,000 nodes and<br />

963 contacts (<strong>PERMAS</strong> at ZF Friedrichshafen AG)<br />

2<br />

An impressive example for a today’s nonlinear analysis<br />

can be seen from figure 3. All interior shafts, gears,<br />

and bearings <strong>of</strong> the transmission housing are modeled<br />

together <strong>with</strong> excessive contact definitions. Figure 4<br />

shows a coupled simulation. Based on natural modes<br />

and frequencies, a response analysis has to be performed<br />

in the time and frequency domain subsequently.<br />

Fig. 4: Floating ship <strong>with</strong> fluid-structure interaction<br />

(<strong>PERMAS</strong> at IRCN, Nantes)<br />

Although computers became much faster in recent<br />

years, it is obvious that hardware speed alone is not<br />

the answer to the demands for high performance computing.<br />

Since single CPU speed enhancements become<br />

more and more difficult, parallelization seems to be the<br />

only solution for further performance speedups. 7<br />

The various forms <strong>of</strong> parallel algorithms for computational<br />

mechanics are as numerous as the number <strong>of</strong><br />

people working on the problem. 4, 5, 6 One obvious approach<br />

is the usage <strong>of</strong> data parallel programming languages<br />

such as parallel C or HPF. 8, 9 This may be a solution<br />

for new applications designed for these kind <strong>of</strong><br />

tools. However for a huge program system <strong>with</strong> a long<br />

sequential history, rewriting the whole s<strong>of</strong>tware (or significant<br />

parts <strong>of</strong> it) is not very practicable.<br />

The most popular way to perform an explicit parallelization<br />

<strong>of</strong> FE packages is the domain decomposition<br />

approach. 10, 11, 12, 13, 14, 15 In <strong>PERMAS</strong>, a completely alternative<br />

strategy has been selected. 16<br />

2 PARALLELIZATION STRATEGY<br />

A number <strong>of</strong> important requirements had to be taken<br />

into account:<br />

• Generality: A general purpose package like PER-<br />

MAS not only has one but several solvers and many<br />

different matrix operations. Hence, a parallelization<br />

<strong>of</strong> only one solver does not really help. Instead,<br />

a method is required which is able to parallelize<br />

all solvers and matrix operations in a uniform<br />

manner.

• Extendability: The approach must be open to new<br />

developments and has to ensure an easy upgrade <strong>of</strong><br />

already existent program parts, i.e. the top solution<br />

procedures shall stay unchanged.<br />

• Maintainability: For both the sequential and parallel<br />

execution, the algorithms and the program versions<br />

have to be the same in order to have a consistent<br />

development <strong>of</strong> functionalities. An approach is<br />

needed who is as machine independent as possible.<br />

• Portability: Only standard message passing must<br />

be used in order to be able to work on several<br />

machine architectures (like distributed memory,<br />

shared memory or workstation clusters).<br />

• Ease <strong>of</strong> use: The users should not need additional<br />

knowledge or training to operate <strong>with</strong> the parallel<br />

code.<br />

Based on the existing program and data structure, not<br />

the well-known domain decomposition but a strategy<br />

based on a mathematical operation graph has been chosen.<br />

Figure 5 gives a basic understanding <strong>of</strong> the approach<br />

based on a schematic comparison <strong>of</strong> the two parallelization<br />

methods.<br />

Whereas the parallelism <strong>of</strong> the domain decomposition<br />

method is achieved through the computation <strong>of</strong> partial<br />

(sub)models, the parallelism <strong>of</strong> the <strong>PERMAS</strong> approach<br />

stems from the computation <strong>of</strong> Tasks, which are<br />

basic mathematical operations on sub-matrices.<br />

Fig. 5: Different parallelization strategies<br />

3<br />

3 HYPER-MATRIX DATA STRUCTURE<br />

In a Finite Element system, one major objective is the<br />

efficient storage and handling <strong>of</strong> extremely large but<br />

sparsely populated matrices. In <strong>PERMAS</strong> this problem<br />

is solved by dividing the matrix in sub-matrices <strong>of</strong> variable<br />

block size (typically 30∗30 to 128∗128). The complete<br />

matrix, called hyper-matrix, is organized in three<br />

hierarchical levels where levels 2 and 3 contain nothing<br />

but references to the next lower level and level-1<br />

contains the actual data (see figure 6). 17, 18 Of course,<br />

at each level, only sub-matrices containing at least one<br />

non-zero element are stored and processed. Moreover,<br />

on level-1 only the rectangular area <strong>of</strong> real non-zeros<br />

<strong>with</strong>in that sub-matrix is actually stored.<br />

sym.<br />

n<br />

3<br />

Numeric<br />

Array<br />

(window)<br />

Level 2<br />

Submatrix<br />

n<br />

1<br />

m<br />

Level 3 Matrix<br />

Highest Level<br />

m<br />

m<br />

1<br />

3<br />

2<br />

n<br />

2<br />

Level 1 Submatrix<br />

Lowest Level (paragraph)<br />

Fig. 6: The hyper-matrix data structure<br />

A hyper-matrix operation can be viewed as a stream<br />

<strong>of</strong> basic block operations on sub-matrices, e.g. a<br />

multiply-add <strong>of</strong> two level-1 matrices. One major advantage<br />

<strong>of</strong> this scheme is the high granularity <strong>of</strong> operations<br />

and the fact that a basic operation typically requires<br />

2∗n 3 floating point operations but only 3∗n 2 memory<br />

accesses. The data structure is simple and hence easily<br />

understood by the programmers. The overhead for<br />

administration <strong>of</strong> the sub-matrices is small compared to<br />

the basic operations. The typical block size can easily<br />

be adapted to the actual matrix sparsity and hardware<br />

environment, e.g. to optimize vector length or<br />

I/O record size. With respect to parallelization it is<br />

worthwhile to mention that all basic operations on submatrices<br />

are <strong>of</strong> similar length, i.e. need the same order<br />

<strong>of</strong> time magnitude for execution.

4 PARALLEL TASK MANAGER<br />

Corresponding to the 3-level data structure all hypermatrix<br />

algorithms are organized in 3 well separated program<br />

layers: Two logical layers working only on addresses<br />

and one numerical layer – usually just a cap to<br />

a standard BLAS routine.<br />

Fig. 7: Parallel Task Manager<br />

For parallelization, a new s<strong>of</strong>tware layer called the<br />

Parallel Task Manager (PTM) 19 was introduced, see figure<br />

7. PTM <strong>of</strong>fers a complete set <strong>of</strong> functions for the<br />

traversal <strong>of</strong> hyper-matrices. These new functions replace<br />

all calls previously made to the sequential data<br />

base manager. A <strong>PERMAS</strong> programmer implementing<br />

a hyper-matrix algorithm will call PTM to ask for<br />

the existence and properties <strong>of</strong> particular sub-matrices.<br />

Based on this information he can organize the level 3<br />

and 2 loops just as before – in fact the level 3 and 2<br />

program structure stays unchanged and the call replacements<br />

are quite easy, because the new functions have<br />

similar names and arguments as the old data base calls.<br />

Finally, instead <strong>of</strong> directly calling a numerical level-1<br />

routine, the programmer passes a task request to PTM.<br />

Each task is defined by a certain opcode (i.e. an identifier<br />

for the level-1 routine to be called) and a reference<br />

to the level-1 sub-matrices to be used. After copying<br />

the task request in an internal buffer PTM immediately<br />

returns control to the calling layer. The task execution<br />

will then be done asynchronously on a node and in a<br />

sequence controlled by PTM.<br />

Inside PTM the graph <strong>of</strong> sub-matrix operations is<br />

asynchronously build and executed, see figure 8. The<br />

data dependencies are resolved by a graph generator,<br />

which works in a dynamic way according to the actual<br />

loops on the sparse matrix structure. A separate clustering<br />

algorithm collects basic mathematical operations<br />

into packages to be sent. Subsequently, the scheduler is<br />

4<br />

responsible to distribute the tasks to the different nodes<br />

for execution (using MPI). 20 Taking into account the<br />

execution times <strong>of</strong> completed tasks the scheduler also<br />

ensures a dynamic load balancing – thus adapting itself<br />

to the current load <strong>of</strong> each node and avoiding bottlenecks<br />

in data distribution.<br />

The problem <strong>of</strong> finding the optimal schedule <strong>of</strong> a<br />

number <strong>of</strong> interdependent tasks is NP complete like the<br />

ordering problem <strong>of</strong> a sequential graph. Therefore a<br />

number <strong>of</strong> heuristics have been developed to find reasonable<br />

schedules in affordable computing times. 21, 22<br />

Level-2<br />

address<br />

matrices<br />

Graph<br />

Clusterer<br />

Scheduler<br />

MPI<br />

Executor<br />

Level-1<br />

matrices<br />

*<br />

-<br />

+<br />

node 1<br />

hyper-matrix algorithm<br />

task (opcode + operand address)<br />

node 2<br />

data dependency<br />

node 3 node 4<br />

Fig. 8: Asynchronous generation<br />

and execution <strong>of</strong> tasks<br />

<strong>Run</strong>ning <strong>PERMAS</strong> on a sequential machine, PTM<br />

executes these basic operations in the same control flow<br />

as if the original functions had been called directly. This<br />

means that we can use the same program version for<br />

both sequential and parallel runs.<br />

The most important aspect <strong>of</strong> this approach is the<br />

fact that all details <strong>of</strong> parallel computing are completely<br />

handled inside the tools and hidden from the developer<br />

implementing a new hyper-matrix operation. This allows<br />

the adoption <strong>of</strong> the <strong>PERMAS</strong> program to future<br />

hardware architectures <strong>with</strong>out having to change large<br />

parts <strong>of</strong> the code. The effort in such developments is<br />

hence protected against hardware changes. Moreover,<br />

it is possible to improve the performance-critical parts<br />

like the clustering and scheduling mechanism <strong>with</strong>out<br />

changing any higher order algorithms.

In a parallel environment, the task execution is performed<br />

on the basis <strong>of</strong> a host-node concept. The host<br />

has only control functionality and executes all <strong>of</strong> the<br />

sequential program parts, whereas the nodes are executing<br />

all basic numerical operations <strong>of</strong> a parallel hypermatrix<br />

algorithm, figure 9. With respect to communication<br />

bandwidth it is important to note that a typical task<br />

definition requires less than 70 bytes and that several<br />

task clusters are sent at once in order to minimize the<br />

overall time needed for message startup.<br />

<strong>PERMAS</strong> <strong>PERMAS</strong><br />

Node<br />

Node<br />

<strong>PERMAS</strong><br />

<strong>PERMAS</strong><br />

Node Node<br />

<strong>PERMAS</strong><br />

Node<br />

<strong>PERMAS</strong><br />

Disk Disk Disk<br />

Disk Disk<br />

Host<br />

<strong>PERMAS</strong><br />

Node<br />

Disk Disk<br />

Disk<br />

Disk<br />

Fig. 9: Centralized Task Control<br />

5 DISTRIBUTED DATA BASE<br />

<strong>PERMAS</strong><br />

Node<br />

According to the new needs, the central data base system<br />

had to be upgraded too. A new, Distributed Data<br />

base Management System (DDMS) was introduced,<br />

that controls all data traffic from, and to, the direct access<br />

files <strong>of</strong> the physically distributed data base (above<br />

DDMS the data base is a logical unity <strong>with</strong> global references),<br />

see figure 10. One instance <strong>of</strong> DDMS runs on<br />

each node including the host – regardless <strong>of</strong> whether a<br />

node has own disks or not. For node-local I/Os DDMS<br />

handles the direct I/O to the local files. In addition it<br />

manages the network traffic due to remote data requests.<br />

Thus DDMS allows free data access from each node,<br />

exploiting the local cache and I/O channels <strong>of</strong> all nodes.<br />

Unlike the short task messages, the level-1 operands<br />

handled by DDMS have typical sizes <strong>of</strong> about 128<br />

kbytes. Therefore the distributed characteristic and the<br />

ability to handle node to node communication also ensures<br />

that the host will not become the communication<br />

or administration bottleneck (as it would be <strong>with</strong> a centralized<br />

data base).<br />

Disk<br />

Disk<br />

5<br />

Due to the asynchronity, the integrity <strong>of</strong> data has to be<br />

guaranteed. This is realized by a monotonously increasing<br />

version number for task operands, the producer task<br />

identifier (PTI). Each level-1 matrix owes its own PTI,<br />

which is also stored in the referring level-2 matrices.<br />

Each modification changes the PTI and a change is possible<br />

only for one task at a time. With this paradigm the<br />

data traffic can be minimized and possible deadlocks<br />

are avoided.<br />

Node<br />

DDMS<br />

Node<br />

DDMS<br />

Node<br />

DDMS<br />

Host<br />

DDMS<br />

Node<br />

DDMS<br />

Node<br />

DDMS<br />

Node<br />

DDMS<br />

Node<br />

DDMS<br />

Disk Disk Disk<br />

Disk Disk<br />

6 IN PRACTICE<br />

Disk Disk<br />

Disk<br />

Disk<br />

Fig. 10: Distributed Data Base<br />

Management System<br />

Above PTM the parallel and sequential code is identical.<br />

There is only one program version for sequential<br />

and parallel machine architectures. The basic program<br />

structure and programming style <strong>of</strong> the s<strong>of</strong>tware development<br />

team can stay unchanged – an essential item<br />

for the code owner’s investments in past and future.<br />

Another advantage is the separation <strong>of</strong> work fields between<br />

algorithmic oriented specialists and parallelization<br />

experts. The experience <strong>of</strong> the s<strong>of</strong>tware developers<br />

show that a functional splitting <strong>of</strong> work areas always<br />

pays <strong>of</strong>f in terms <strong>of</strong> productivity, code efficiency and<br />

last but not least stability. In <strong>PERMAS</strong> this separation<br />

is clearly reflected by different programming levels for<br />

machine, parallelization, algorithmic and physical experts,<br />

see figure 11. Furthermore a single program version<br />

keeps the maintenance simple. There is only one<br />

source code to handle and the quality assurance <strong>of</strong> the<br />

sequential version is applicable to parallel runs <strong>with</strong>out<br />

change. Again, this is an important point for an industrial<br />

s<strong>of</strong>tware vendor (who <strong>of</strong>ten spends more money on<br />

maintaining than developing a program).<br />

Disk<br />

Disk

Also the end user benefits from this run-time parallelization.<br />

Apart from faster execution times (and<br />

maybe access to more memory and disks) the user’s<br />

daily working environment does not change. No additional<br />

knowledge is necessary and no extra work is<br />

required (e.g. no special mesh partitioning). The program<br />

releases are consistent in the way that the parallel<br />

’version’ <strong>of</strong>fers identical functionality as the sequential<br />

one (indeed it’s the same binary). Moreover,<br />

because a sequential and a parallel run use the same<br />

algorithms, the sequence <strong>of</strong> operations remains also unchanged.<br />

This gives identical results independent <strong>of</strong> the<br />

number <strong>of</strong> computing nodes actually used. This even<br />

<strong>of</strong>fers the possibility to choose the number <strong>of</strong> nodes by<br />

the system administrator according to a global view <strong>of</strong><br />

available computing resources. The user does not have<br />

to care about parallelization – for him there is no difference<br />

between one faster or just more CPUs.<br />

7 BENCHMARKS<br />

All <strong>of</strong> the following benchmarks have been performed<br />

on an IBM SP-2 (except one specially mentioned), thus<br />

reflecting the behavior <strong>of</strong> a distributed memory architecture.<br />

All models have been calculated using the direct<br />

solver, since this is still the fastest solver for the<br />

sequential case. This ensures a realistic comparison <strong>of</strong><br />

run times.<br />

Compared to present-day mesh sizes the benchmark<br />

models are <strong>of</strong> medium size only. This is due to various<br />

reasons: First the benchmarks were selected at start<br />

<strong>of</strong> the parallelization project, thus reflecting the problem<br />

situation in 1994. Second, getting real upto-date<br />

industrial benchmarks for publications is not easy. On<br />

the other hand parallelization rates on larger problems<br />

are usually better, so smaller models are more appropriate<br />

to show possible limitations <strong>of</strong> the underlying parallelization<br />

strategy.<br />

✏✏<br />

Sequential or parallel hardware (distributed/shared)<br />

✏<br />

✏<br />

✘ Program interface for automatic run-time parallelization<br />

✘ ✘<br />

✥<br />

✥<br />

✥ Basic hyper-matrix algorithms<br />

✭✭ ✭✭<br />

<strong>PERMAS</strong> <strong>FEM</strong> system<br />

Fig. 11: <strong>PERMAS</strong> Program layers<br />

6<br />

Fig. 12: Crank Housing<br />

Fig. 12 (a): Crank Housing, Static Analysis

X<br />

Z<br />

Y<br />

Fig. 13: Methane Carrier<br />

Fig. 13 (a): Methane Carrier, Static Analysis<br />

Fig. 13 (b): Methane Carrier, Dynamic Analysis<br />

7<br />

Z<br />

Y<br />

X<br />

Fig. 14: Artificial Cube (scalable test case)<br />

Fig. 14 (a): Artificial Cube, Cholesky Decomposition<br />

Fig. 15: Contact Analysis <strong>of</strong> a Motor Piston

Fig. 16: Electromagnetic Wave Propagation <strong>of</strong> a Box<br />

Fig. 17: Casting Carrier, Static Analysis on SGI<br />

A static analysis <strong>of</strong> the crank housing shows a reasonable<br />

scalability up to 16 nodes, figure 12 (a). Figure<br />

13 (a) and 13 (b) display two different type <strong>of</strong> analysis<br />

for the methane carrier. For a static run <strong>of</strong> this small<br />

model, the solver part itself is less than 50 % <strong>of</strong> the total<br />

elapsed time already. For the dynamic job the cholesky<br />

decomposition is almost negligible. This demonstrates<br />

that it is not sufficient to parallelize just the solver part.<br />

Also from figure 13 (b) it can be seen that the run-time<br />

parallelization <strong>of</strong> PTM works for the whole application.<br />

In order to investigate the influence <strong>of</strong> the model size<br />

an artificial test case was created, figure 14 and 14 (a).<br />

Apart from minor improvements for the larger model,<br />

it can be seen that the parallelism exploited by PTM is<br />

basically independent <strong>of</strong> the problem size.<br />

Figure 15 present the elapsed times for a non-linear<br />

analysis, whilst figure 16 show the numbers achieved<br />

for the simulation <strong>of</strong> electromagnetic phenomena. Finally<br />

figure 17 gives an instance for a parallel job on a<br />

shared memory machine.<br />

8<br />

8 CONCLUSIONS<br />

The generality <strong>of</strong> the parallelization approach has been<br />

presented not only from conceptual but also from the<br />

application point <strong>of</strong> view. As shown by the examples,<br />

parallelization is also beneficial for medium size models.<br />

The PTM programming interface <strong>of</strong>fers a general<br />

toolbox for automatic parallelization <strong>of</strong> initially sequential<br />

hyper-matrix algorithms, enabling also unexperienced<br />

programmers to write parallel algorithms.<br />

With this approach, the number <strong>of</strong> CPUs used for<br />

an analysis becomes a mere performance parameter as<br />

it should be the case. The parallel <strong>PERMAS</strong> version<br />

may be used <strong>with</strong>out additional know-how and there is<br />

a guarantee <strong>of</strong> a consistent program evolution for both<br />

the sequential and the parallel version. This is a prerequisite<br />

for a protection <strong>of</strong> the investments made by the<br />

s<strong>of</strong>tware supplier. Moreover this is a basic requirement<br />

for a reliable usage on the customer’s side.<br />

Up to now only basic work was done <strong>with</strong> emphasis<br />

on a clean structure <strong>of</strong> the s<strong>of</strong>tware. In order to improve<br />

the scalability <strong>of</strong> the code and to fully exploit the potential<br />

<strong>of</strong> the parallelization approach, further development<br />

is needed. However, global tuning can be performed<br />

<strong>with</strong>out changing the PTM interface. This means that<br />

current and future <strong>PERMAS</strong> procedures will automatically<br />

benefit from any improvement made in this field.<br />

ACKNOWLEDGEMENT<br />

The work reported in this paper is supported by<br />

the European Comission under the ESPRIT projects<br />

PERMPAR 23 and PARMAT. 24<br />

REFERENCES<br />

1. <strong>PERMAS</strong>: User’s Reference Manual, INTES Publication<br />

No. 450, Rev. D, Stuttgart, 1997. 1<br />

2. CISPAR: Open interface for coupling <strong>of</strong> industrial simulation<br />

codes on parallel systems, Esprit project 20161,<br />

World Wide Web address: http://www.pallas.de/cispar/. 1<br />

3. Löhner, R., Yang, C., Cebral, J., Baum, J.D., Luo, H.,<br />

Pelessone, D. and Charman, C., Fluid Structure Interaction<br />

Using a Loose Coupling Algorithm and Adaptive Unstructured<br />

Grids, AIAA Paper 95-2259, 1995. 1<br />

4. Noor, A.K., Parallel processing in finite element structural<br />

analysis, Parallel Computations and Their Impact on Mechanics,<br />

ASME, 1987, pp.253-277. 2<br />

5. Ortega, J., Voigt, R., Romine, C., A bibliography on parallel<br />

and vector numerical algorithms, NASA Contractor<br />

Report 181764, ICASE Interim Report 6, 1988. 2<br />

6. White, D.W., Abel, J.F., Bibliography on finite elements<br />

and supercomputing, Commun. Applied Numeric Methods,<br />

4, 1988, pp.279-294. 2<br />

7. Topping, B.H.V., Khan, A.I., Parallel Finite Element<br />

Computations, Saxe-Coburg Publications, Edinburgh,<br />

UK, 1996. 2

8. Wilson, G.V., Lu, P., Stroustrup, B., Parallel Programming<br />

Using C++, MIT Press, Cambridge, MA, 1996. 2<br />

9. Perrin, G.-R., Darte, E., The Data Parallel Programming<br />

Model, Lecture Notes in Computer Science, Vol. 1132,<br />

Springer, Berlin, 1996. 2<br />

10. Farhat, C., Roux, F.-X., Implicit parallel processing<br />

in structural mechanics, Computational Mechanics Advances,<br />

1994, 2(1), pp.1-124. 2<br />

11. Topping, B.H.V., Sziveri, J., Parallel Sub-domain Generation<br />

Method, Developments in Computational Techniques<br />

for Structural Engineering, Civil-Comp Press, Edinburgh,<br />

UK, 1995, pp.449-457. 2<br />

12. Walshaw, C., Cross, M., Everett, M., Mesh partitioning<br />

and load-balancing for distributed memory parallel systems,<br />

Proc. Parallel & Distributed Computing for Computational<br />

Mechanics, Lochinver, Scotland, 1997. 2<br />

13. Liu, J.W.H., The Multifrontal Method for Sparse Matrix<br />

Solution: Theory and Practice, SIAM Review, 34, 1992,<br />

pp.82-109. 2<br />

14. PARASOL: An Integrated Programming Environment for<br />

Parallel Sparse Matrix Solvers, Esprit 4, World Wide Web<br />

address: http://www.genias.de/parasol/. 2<br />

15. Pothen, A., Rothberg, E., Simon, H.D., Wang, L., Parallel<br />

Sparse Cholesky Factorization <strong>with</strong> Spectral Nested Dissection<br />

Ordering, NAS Technical Report RNR-94-011,<br />

1994. 2<br />

16. Ast, M., Labarta, J., Manz, H., Pérez, A., Schulz and<br />

U., Solé, J., A General Approach for an Automatic <strong>Parallelization</strong><br />

Applied to the Finite Element Code <strong>PERMAS</strong>,<br />

Proceedings <strong>of</strong> the HPCN Conference, Springer, 1995. 2<br />

9<br />

17. Braun, K.A., et. al., Some Hypermatrix algorithms in Linear<br />

Algebra, Lecture Notes in Economics and Mathematical<br />

Systems, Vol. 134, Springer, Berlin, 1981. 3<br />

18. Rothberg, E., Gupta, A., An Efficient Block-Oriented Approach<br />

to Parallel Sparse Cholesky Factorization, SIAM<br />

Journal <strong>of</strong> Scientific Computing, 15, 1994, pp.1413-1439.<br />

3<br />

19. Ast, M., Labarta, J., Manz, H., Pérez, A., Schulz, U. and<br />

Solé, J., The <strong>Parallelization</strong> <strong>of</strong> <strong>PERMAS</strong>, Conference on<br />

Spacecraft Structures Materials & Mechanical Testing,<br />

ESA, 1996. 4<br />

20. Snir, M., Otto, S.W., Dongarra, J., MPI: The Complete<br />

Reference, MIT Press, Cambridge, MA, 1996. 4<br />

21. Ast, M., Jerez, T., Labarta, J., Manz, H., Pérez, A.,<br />

Schulz, U. and Solé, J., <strong>Run</strong>time <strong>Parallelization</strong> <strong>of</strong> the<br />

Finite Element Code <strong>PERMAS</strong>, International Journal <strong>of</strong><br />

Supercomputer Applications and High Performance Computing,<br />

1997, 11(4), pp.328-335. 4<br />

22. El-Rewini, H., Lewis, T., Ali, H.H., Task Scheduling in<br />

Parallel and Distributed Systems, Prentice-Hall, 1994. 4<br />

23. PERMPAR: Implementation <strong>of</strong> the General Purpose Finite<br />

Element Code <strong>PERMAS</strong> on High Parallel Computer<br />

Systems, EUROPORT-1, World Wide Web address:<br />

http://www.gmd.de/SCAI/europort-1/C2.HTM. 8<br />

24. PARMAT: Efficient Handling <strong>of</strong> <strong>Large</strong> Matrices on<br />

High Parallel Computer Systems in the <strong>PERMAS</strong><br />

Code, Esprit project 22740, World Wide Web address:<br />

http://www.<strong>intes</strong>.de/parmat/. 8