Statistics 300A Syllabus Theoretical Statistics Fall 2005 Lectures ...

Statistics 300A Syllabus Theoretical Statistics Fall 2005 Lectures ...

Statistics 300A Syllabus Theoretical Statistics Fall 2005 Lectures ...

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

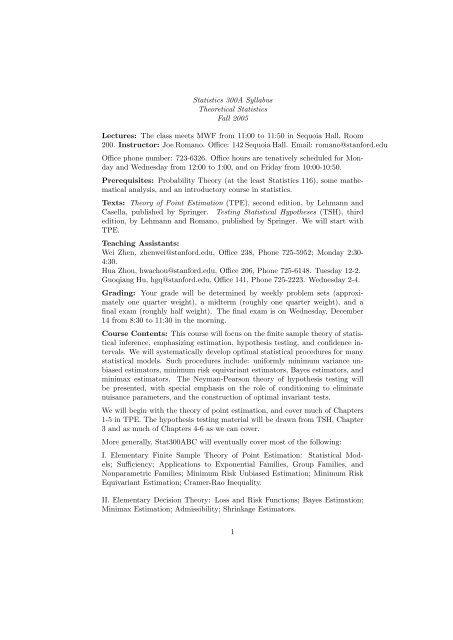

Statistics 300A Syllabus

Theoretical Statistics

Fall 2005

Lectures: The class meets MWF from 11:00 to 11:50 in Sequoia Hall, Room 200.

Instructor: Joe Romano. Office: 142 Sequoia Hall. Email: romano@stanford.edu. Office phone number: 723-6326. Office hours are tentatively scheduled for Monday and Wednesday from 12:00 to 1:00, and on Friday from 10:00 to 10:50.

Prerequisites: Probability Theory (at least Statistics 116), some mathematical analysis, and an introductory course in statistics.

Texts: Theory of Point Estimation (TPE), second edition, by Lehmann and Casella, published by Springer. Testing Statistical Hypotheses (TSH), third edition, by Lehmann and Romano, published by Springer. We will start with TPE.

Teaching Assistants:

Wei Zhen, zhenwei@stanford.edu, Office 238, Phone 725-5952; Monday 2:30-4:30.

Hua Zhou, hwachou@stanford.edu, Office 206, Phone 725-6148; Tuesday 12-2.

Guoqiang Hu, hgq@stanford.edu, Office 141, Phone 725-2223; Wednesday 2-4.

Grading: Your grade will be determined by weekly problem sets (approximately one quarter of the grade), a midterm (roughly one quarter), and a final exam (roughly one half). The final exam is on Wednesday, December 14, from 8:30 to 11:30 a.m.
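
As a rough illustration of how these approximate weights combine, here is a minimal sketch. It assumes each component is scored on a 0-100 scale and uses exactly 1/4, 1/4 and 1/2. The syllabus calls these weights approximate, so this is only arithmetic and not an official grading formula. The name `course_score` is hypothetical.

```python
# Hypothetical sketch: the syllabus gives only approximate weights
# (problem sets ~1/4, midterm ~1/4, final ~1/2); exact values are assumed here.
WEIGHTS = {"problem_sets": 0.25, "midterm": 0.25, "final": 0.50}


def course_score(scores: dict[str, float]) -> float:
    """Weighted average of component scores, each assumed to be on a 0-100 scale."""
    if set(scores) != set(WEIGHTS):
        raise ValueError(f"expected components {sorted(WEIGHTS)}")
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS)


print(course_score({"problem_sets": 90, "midterm": 80, "final": 70}))  # prints 77.5
```

Because the final carries about half the weight, a ten-point change on the final moves the course score by about five points. The same change on either of the other components moves it by about two and a half.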

Course Contents: This course will focus on the finite sample theory of statistical inference, emphasizing estimation, hypothesis testing, and confidence intervals. We will systematically develop optimal statistical procedures for many statistical models. Such procedures include uniformly minimum variance unbiased estimators, minimum risk equivariant estimators, Bayes estimators, and minimax estimators. The Neyman-Pearson theory of hypothesis testing will be presented, with special emphasis on the role of conditioning in eliminating nuisance parameters and on the construction of optimal invariant tests.
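
The binomial model under squared-error loss makes the difference between UMVU, Bayes, and minimax estimators concrete. Here is a minimal sketch of that standard example, which is an illustration chosen here and not taken from the course materials. Let X ~ Binomial(n, p). The sample proportion X/n is UMVU. The estimator (X + √n/2)/(n + √n) is the Bayes estimator for a Beta(√n/2, √n/2) prior. Its risk is constant in p, which makes it minimax. The function names are illustrative. Risks are computed exactly by summing over the binomial distribution rather than by simulation.

```python
import math


def risk(estimator, n: int, p: float) -> float:
    """Exact mean squared error E_p[(delta(X) - p)^2] for X ~ Binomial(n, p)."""
    return sum(
        math.comb(n, x) * p**x * (1 - p) ** (n - x) * (estimator(x, n) - p) ** 2
        for x in range(n + 1)
    )


def umvu(x: int, n: int) -> float:
    # Sample proportion X/n: unbiased, and a function of the complete sufficient statistic X.
    return x / n


def minimax(x: int, n: int) -> float:
    # Bayes estimator (X + a)/(n + a + b) with a = b = sqrt(n)/2; its risk does not depend on p.
    root = math.sqrt(n)
    return (x + root / 2) / (n + root)


n = 16
for p in (0.1, 0.3, 0.5, 0.7, 0.9):
    print(f"p={p:.1f}  UMVU={risk(umvu, n, p):.5f}  minimax={risk(minimax, n, p):.5f}")
```

With n = 16, the minimax risk is flat at 1/(4(1 + √n)²) = 0.01. At p = 0.5 it is below the UMVU risk p(1 - p)/n = 0.015625. Near the endpoints it is above it, for example 0.005625 at p = 0.1. Neither estimator dominates the other.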

We will begin with the theory of point estimation and cover much of Chapters 1-5 in TPE. The hypothesis testing material will be drawn from TSH, Chapter 3, and as much of Chapters 4-6 as we can cover.
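
Here is a small illustration of the Neyman-Pearson theory described above, in a standard textbook setting assumed here rather than taken from the course. Let X1, ..., Xn be iid N(μ, σ²) with σ known. The sketch computes the most powerful level-α test of H0: μ = 0 against a simple alternative μ = μ1 > 0. The rejection region is x̄ > z_{1-α} σ/√n, which does not depend on μ1. So the same test is uniformly most powerful against μ > 0, the monotone likelihood ratio argument. The sketch uses only the standard-library `statistics.NormalDist`. The helper names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal N(0, 1)


def np_critical_value(n: int, alpha: float, sigma: float = 1.0) -> float:
    """Cutoff c: reject H0: mu = 0 when the sample mean exceeds c.

    For any alternative mu1 > 0 the likelihood ratio is increasing in the sample
    mean, so the Neyman-Pearson most powerful level-alpha test rejects for large
    sample means; c is chosen so that P_0(sample mean > c) = alpha.
    """
    return STD_NORMAL.inv_cdf(1 - alpha) * sigma / sqrt(n)


def power(mu1: float, n: int, alpha: float, sigma: float = 1.0) -> float:
    """Probability of rejecting H0 when the true mean is mu1."""
    c = np_critical_value(n, alpha, sigma)
    # Under mu1 the sample mean is N(mu1, sigma^2 / n).
    return 1 - STD_NORMAL.cdf((c - mu1) * sqrt(n) / sigma)


n, alpha = 25, 0.05
print(f"critical value: {np_critical_value(n, alpha):.4f}")
for mu1 in (0.0, 0.2, 0.5):
    print(f"mu1={mu1}: power={power(mu1, n, alpha):.4f}")
```

At μ1 = 0 the power equals the level α, and it increases with μ1. This is the usual picture of a power function for a one-sided test.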

More generally, Stat 300ABC will eventually cover most of the following:

I. Elementary Finite Sample Theory of Point Estimation: Statistical Models; Sufficiency; Applications to Exponential Families, Group Families, and Nonparametric Families; Minimum Risk Unbiased Estimation; Minimum Risk Equivariant Estimation; Cramer-Rao Inequality.

II. Elementary Decision Theory: Loss and Risk Functions; Bayes Estimation; Minimax Estimation; Admissibility; Shrinkage Estimators.

III. Finite Sample Theory of Hypothesis Testing and Confidence Intervals: Neyman-Pearson Theory; Uniformly Most Powerful Tests and Uniformly Most Accurate Confidence Intervals for Distributions with Monotone Likelihood Ratio; Systematic Use of Sufficiency and Conditioning to Eliminate Nuisance Parameters in Exponential Families; Use of Invariance to Eliminate Nuisance Parameters in Group Families; Maximin Tests and the Hunt-Stein Theorem; Permutation Tests.

IV. Elementary Large Sample Estimation Theory: Asymptotic Relative Efficiency; Maximum Likelihood Estimation; Chi-squared Tests; Rao, Wald, and Likelihood Ratio Tests; Delta Method; Asymptotic Distribution of Quantiles and Trimmed Means; Differentiability of Statistical Functionals; Robustness and Influence; Rank, Permutation, and Randomization Tests; Jackknife, Bootstrap, and Sample Reuse Methods.

V. Asymptotic Optimality Theory of Estimators, Tests, and Confidence Intervals: Contiguity; Quadratic Mean Differentiability; Expansions of the Log Likelihood Ratio; Convergence to a Gaussian Experiment; Asymptotic Minimax Theorem; Convolution Theorem; Asymptotically Uniformly Most Powerful Tests; etc.

VI. Density Estimation: Kernel Density Estimation; Bias versus Variance Tradeoff; Choice of Bandwidth and Kernel.

VII. Time Series: First and Perhaps Second Order Autoregressive Processes; Conditions for Stationarity; Use of Maximum Likelihood in Time Series with Asymptotic Theory.

VIII. Other Possible Topics (Covered Briefly): Sequential Analysis; Optimal Experimental Design; Empirical Processes with Applications to Statistics; Edgeworth Expansions with Applications to Statistics.
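
The James-Stein estimator illustrates the shrinkage estimators listed under Elementary Decision Theory. Here is a minimal Monte Carlo sketch of it, an illustration assumed here rather than taken from the course, for X ~ N_p(θ, I) under squared-error loss. For p ≥ 3, the estimator (1 - (p - 2)/‖X‖²)X has smaller risk than X itself at every θ. At θ = 0 its exact risk is 2, while the risk of X is p. The function names, the dimension p = 10, the seed, and the replication count are arbitrary choices.

```python
import random


def james_stein(x: list[float]) -> list[float]:
    """James-Stein estimator (1 - (p - 2)/||x||^2) x of a p-dimensional normal mean, p >= 3."""
    p = len(x)
    norm_sq = sum(xi * xi for xi in x)  # positive with probability one for continuous data
    shrink = 1 - (p - 2) / norm_sq
    return [shrink * xi for xi in x]


def mc_risk(estimator, theta: list[float], reps: int, rng: random.Random) -> float:
    """Monte Carlo estimate of E||estimator(X) - theta||^2 with X ~ N_p(theta, I)."""
    total = 0.0
    for _ in range(reps):
        x = [rng.gauss(t, 1.0) for t in theta]
        est = estimator(x)
        total += sum((e - t) ** 2 for e, t in zip(est, theta))
    return total / reps


rng = random.Random(0)
theta = [0.0] * 10
print("MLE risk:", mc_risk(lambda x: x, theta, 20_000, rng))  # exact value is p = 10
print("James-Stein risk:", mc_risk(james_stein, theta, 20_000, rng))  # exact value is 2 at theta = 0
```

With 20,000 replications the two estimates should land close to the exact values 10 and 2. A common refinement is the positive-part version, which replaces a negative shrinkage factor by 0. It improves further on the estimator above.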

Homework 1, tentatively due Monday, October 3. From TPE, Chapter 1: 1.8, 1.11, 4.13, 4.16, 5.10, 6.2, 6.3.

Homework 2, tentatively due Monday, October 10. From TPE, Chapter 1: 6.9, 6.32, 6.33, 6.35, 7.23. From TPE, Chapter 2: 1.15, 1.17.
