GigabitEthernet Testbed over Dark Fiber


sl. dr. ing. Emil CEBUC *

prof. dr. ing. PUSZTAI Kalman

ing. Otto KREITER

ing. Florin FLORIAN

RoEduNet Cluj

Emil.Cebuc@cs.utcluj.ro

Pusztai.Kalman@cs.utcluj.ro

ottok@cluj.roedu.net

florin@cluj.roedu.net

Abstract

This paper presents the testing of a 120 km fiber-optic link between RoEduNet NOC Cluj and RoEduNet NOC Tg-Mures using long-reach Gigabit Ethernet technology.

1. Introduction

First we present the hardware environment used for the testing, then the software used for traffic generation and for monitoring the test. Next we describe the design of the tests and the preliminary results, and finally the conclusions drawn from the performed tests.

2. Hardware Environment

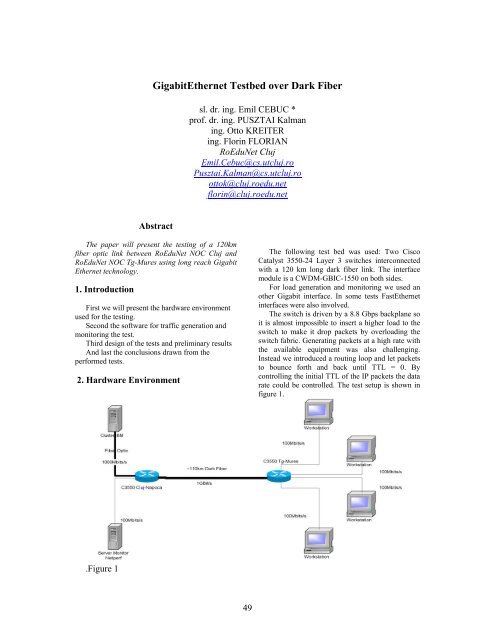

The following test bed was used: two Cisco Catalyst 3550-24 Layer 3 switches interconnected by a 120 km long dark fiber link. The interface module is a CWDM-GBIC-1550 on both sides. For load generation and monitoring we used another Gigabit interface. In some tests FastEthernet interfaces were also involved.

The switch is driven by an 8.8 Gbps backplane, so it is almost impossible to offer a load high enough to make it drop packets by overloading the switch fabric. Generating packets at a high rate with the available equipment was also challenging. Instead we introduced a routing loop and let packets bounce back and forth until their TTL reached 0. By controlling the initial TTL of the IP packets, the data rate could be controlled. The test setup is shown in Figure 1.

Figure 1
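The effect of the initial TTL on the link load can be estimated with a short calculation. The sketch below assumes that a packet injected with initial TTL t crosses the looped link about t times before it is discarded, and it ignores protocol headers and fragmentation. The function name `link_load_bps` is illustrative; the sample values are those of the first MGEN pattern in section 4.3.

```shell
#!/bin/bash
# Rough estimate of the load a routing loop puts on the link.
# Assumption: a packet with initial TTL t crosses the link about t times;
# IP/UDP/Ethernet headers and fragmentation are ignored.

# link_load_bps SIZE_BYTES PACKETS_PER_SEC TTL -> estimated bits per second
link_load_bps() {
    local size=$1 rate=$2 ttl=$3
    echo $(( size * 8 * rate * ttl ))
}

# 1200-byte messages, 1024 messages/s, TTL 100
link_load_bps 1200 1024 100   # prints 983040000
```

Under these assumptions a generator sending under 10 Mbit/s is enough to bring the looped link close to 1 Gbit/s.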


3. Monitoring Gigabit Interfaces

When monitoring a highly loaded gigabit interface of a Cisco Catalyst 3550 with SNMP, it is necessary to take into consideration that the interface has only 32-bit SNMP counters. If the interface is fully loaded with 1 Gbit/s of traffic, the octet counter wraps around about every 34 seconds:

(2^32 × 8) / 10^9 ≈ 34.36 seconds

Consequently, the interface must be polled at least every 30 seconds. Because a heavily loaded switch may not respond to the SNMP request in time, we decided to poll the interface every 20 seconds.
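The wrap-around arithmetic, and the correction needed when a counter wraps between two polls, can be sketched in bash as follows. The function names are illustrative, and the delta calculation assumes at most one wrap between two readings, which is exactly what the 20-second polling interval is meant to guarantee.

```shell
#!/bin/bash
# wrap_seconds BITS_PER_SEC -> whole seconds until a 32-bit octet counter wraps
wrap_seconds() {
    local bps=$1
    echo $(( (1 << 32) * 8 / bps ))
}

# counter_delta PREV CURR -> octets counted between two readings,
# assuming at most one wrap of the 32-bit counter in between
counter_delta() {
    local prev=$1 curr=$2
    if (( curr >= prev )); then
        echo $(( curr - prev ))
    else
        echo $(( curr + (1 << 32) - prev ))
    fi
}

wrap_seconds 1000000000        # full 1 Gbit/s load, prints 34
counter_delta 4294967000 704   # reading taken just after a wrap, prints 1000
```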

The monitoring machine ran Red Hat Linux (kernel 2.4.19), using UCD-SNMP version 4.2.4 to fetch the data and RRDtool 1.0.40 to store and graph the bit rates and packet rates. Because cron cannot schedule a job more often than once per minute, we ran the data-fetching script every 20 seconds with the following wrapper script:

    #!/bin/bash
    while true; do
        /var/local/flows/giga-test/scripts/snmp-datastore
        sleep 20
    done
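With a fixed `sleep 20`, the real polling interval is 20 seconds plus the run time of the fetch script. One possible refinement, which is not part of the original setup, is to sleep until the next multiple of 20 seconds instead. The sketch below uses two hypothetical helper functions, `seconds_to_next_slot` and `poll_loop`.

```shell
#!/bin/bash
# seconds_to_next_slot NOW STEP -> seconds until the next multiple of STEP
seconds_to_next_slot() {
    local now=$1 step=$2
    echo $(( step - now % step ))
}

# poll_loop COMMAND STEP: run COMMAND once per STEP-second slot.
# Only defined here; call it explicitly to start polling.
poll_loop() {
    local cmd=$1 step=$2
    while true; do
        "$cmd"
        sleep "$(seconds_to_next_slot "$(date +%s)" "$step")"
    done
}

seconds_to_next_slot 1000000007 20   # 7 s into a slot, prints 13
```

Polling would then be started with `poll_loop /var/local/flows/giga-test/scripts/snmp-datastore 20`.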

The snmp-datastore script polls the gigabit interface and stores the data in RRD files:

    # Octet counters of interface index 26 (ifInOctets, ifOutOctets)
    IN=$(snmpget 217.73.171.3 public 2.2.1.10.26 | awk "{print \$4 ;}")
    OUT=$(snmpget 217.73.171.3 public 2.2.1.16.26 | awk "{print \$4 ;}")
    # Unicast packet counters of the same interface
    INpk=$(snmpget 217.73.171.3 public interfaces.ifTable.ifEntry.ifInUcastPkts.26 | awk "{print \$4 ;}")
    OUTpk=$(snmpget 217.73.171.3 public interfaces.ifTable.ifEntry.ifOutUcastPkts.26 | awk "{print \$4 ;}")
    # Timestamp the sample and store it in the RRD
    DATA=`date +%s`
    rrdtool update /var/local/flows/gigatest/rrds/c3550.rrd \
        $DATA:$IN:$OUT:$INpk:$OUTpk
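Since a heavily loaded switch may fail to answer in time, the value extracted by awk can be empty or non-numeric. A minimal sketch of a guard that could sit between snmpget and rrdtool is shown below. The helper `extract_counter` is hypothetical, and the sample line only assumes the "name = Type: value" output layout implied by the `$4` field index in the script.

```shell
#!/bin/bash
# extract_counter: read one snmpget output line on stdin, print the counter
# value (4th field), and fail if that field is not a plain integer.
extract_counter() {
    local value
    value=$(awk '{print $4}')
    [[ $value =~ ^[0-9]+$ ]] || return 1
    echo "$value"
}

# Hypothetical sample line, not captured from the real switch
sample='interfaces.ifTable.ifEntry.ifInOctets.26 = Counter32: 123456789'
echo "$sample" | extract_counter   # prints 123456789
```

A caller could skip the `rrdtool update` for that cycle when `extract_counter` fails, leaving a gap in the RRD instead of storing a malformed sample.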

Every 5 minutes a script generates the graph as a GIF image. To visualize the bit rates we used the following rrdtool command:

    rrdtool graph gigabits20.gif \
        --start -86400 -t "Giga 20 sec" \
        -w 600 -h 400 --base 1000 \
        DEF:i=c3550.rrd:in:AVERAGE \
        CDEF:inb=i,8,* \
        DEF:o=c3550.rrd:out:AVERAGE \
        CDEF:outb=o,8,* \
        CDEF:outbn=outb,-1,* \
        AREA:inb#00ff88:"IN traffic" \
        COMMENT:Min GPRINT:inb:MIN:%lf%s COMMENT:bps \
        COMMENT:Average GPRINT:inb:AVERAGE:%lf%S COMMENT:bps \
        COMMENT:Max GPRINT:inb:MAX:%lf%s COMMENT:bps \
        AREA:outbn#ff8800:"OUT traffic" \
        COMMENT:Min GPRINT:outb:MIN:%lf%s COMMENT:bps \
        COMMENT:Average GPRINT:outb:AVERAGE:%lf%S COMMENT:bps \
        COMMENT:Max GPRINT:outb:MAX:%lf%s COMMENT:bps \
        HRULE:0#000000

4. Tests

4.1 Connectivity test

Before any exhaustive test could begin, we had to test connectivity between the two switches.

The estimated distance of 110 km is slightly over the 100 km range of the product, but fortunately the link LED lit up as soon as the optical fiber was connected. CDP also showed the switch at the other end.

4.2 Layer 3 connectivity test

After some basic configuration, an IP connectivity test could be performed, and ping reported a round-trip time of 2 ms.

After configuring a routing loop and injecting some traffic, interface utilization was brought up to over 95%. Ping then reported an average of 15 ms, still with no packet drops. The switch and interfaces perform quite well.
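The 2 ms round-trip time on the idle link can be compared with the propagation delay of the fiber alone. The sketch below assumes a delay of about 5 µs per km, a typical figure for light in glass fiber and not a value measured on this link. The function name `fiber_rtt_us` is illustrative.

```shell
#!/bin/bash
# fiber_rtt_us LENGTH_KM -> round-trip propagation delay in microseconds,
# assuming about 5 us per km of fiber (typical value, not measured here)
fiber_rtt_us() {
    local km=$1 us_per_km=5
    echo $(( 2 * km * us_per_km ))
}

fiber_rtt_us 110   # prints 1100
fiber_rtt_us 120   # prints 1200
```

Under this assumption, propagation accounts for a little over 1 ms of the reported 2 ms. The remainder is presumably processing in the switches and hosts and rounding in the ping output.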

4.3 Monitored heavy load test


The Multi-Generator (MGEN) is open source software that provides the ability to perform IP network performance tests and measurements using UDP/IP traffic (TCP support is currently being developed). The toolset generates real-time traffic patterns so that the network can be loaded in a variety of ways.

Script files are used to drive the generated loading patterns over time. These script files can be used to emulate the traffic patterns of unicast and/or multicast UDP/IP applications.

The main objective of this test is to exercise the CWDM fiber link and the Catalyst 3550 under heavy load. We put one static route on each device, each pointing to the other device for the same prefix: a typical routing loop.

Sending packets with a large TTL results in heavy load and a full 1 Gbit/s of traffic between Cluj and Tg-Mures.

Figure 2 illustrates the test bed.

Figure 2<br />

We made two heavy load tests using MGEN 4.0. MGEN generates one UDP stream with TTL 100, using a pattern type that produces messages of a fixed size (in bytes) at a very regular rate (in messages/second). The size field must be greater than or equal to the minimum MGEN message size and less than or equal to the maximum UDP message size of 8192 bytes.

The first pattern generates messages with a fixed size of 1200 bytes at a regular rate of 1024 messages per second. After 7200 seconds (2 hours) the stream is modified to a higher packet rate, 1500, with the same packet size. Packet size and rate are shown in the following table for each time span:

| Hour | Packet size (bytes) | Packet rate (messages/s) |
|---|---|---|
| 0 – 2 | 1200 | 1024 |
| 2 – 4 | 1200 | 1500 |
| 4 – 6 | 1200 | 2000 |
| 6 – 8 | 1200 | 4096 |
| 8 – 10 | 1600 | 1024 |
| 10 – 12 | 1600 | 1500 |
| 12 – 14 | 1600 | 2000 |
| 14 – 16 | 1600 | 4096 |
| 16 – 18 | 1900 | 1024 |
| 18 – 20 | 1900 | 1500 |
| 20 – 22 | 1900 | 2000 |
| 22 – 24 | 1900 | 4096 |


The following results were obtained for a test started at 20:00 hours:

Bytes:

Packets:


A second test with an even heavier load was performed the next day. The results were similar and revealed nothing new.

5. Conclusion

The Catalyst 3550 switch was tested over a dark fiber link exceeding the manufacturer's specification by 10%. Wire-speed IP switching was obtained in all tests, and we could not detect any loss of performance in either switching or transmitting the test packets.

6. References

http://www.cisco.com/en/US/customer/products/hw/switches/ps646/index.html

http://manimac.itd.nrl.navy.mil/MGEN/

http://people.ee.ethz.ch/~oetiker/webtools/rrdtool/

http://www.netperf.org/netperf/NetperfPage.html

http://www.cis.ohio-state.edu/~jain/cis788-97/gigabit_ethernet/
