Sufficiency Fisher-Neyman's factorization theorem

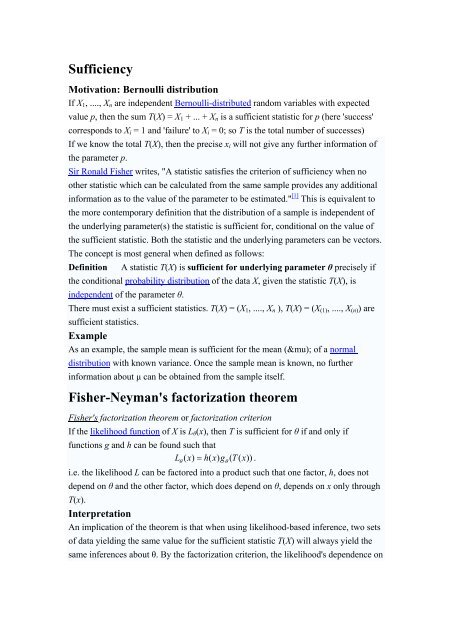

<strong>Sufficiency</strong><br />

Motivation: Bernoulli distribution<br />

If X_1, ..., X_n are independent Bernoulli-distributed random variables with expected<br />

value p, then the sum T(X) = X_1 + ... + X_n is a sufficient statistic for p (here 'success'<br />

corresponds to X_i = 1 and 'failure' to X_i = 0, so T is the total number of successes).<br />

If we know the total T(X), then the individual values x_i give no further information about<br />

the parameter p.<br />
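The claim above can be checked numerically. The following is a minimal sketch, assuming n = 4 trials, a total of t = 2 and two values of p chosen only for illustration; the helper name `conditional_given_total` is hypothetical. It enumerates every 0/1 sequence with the given total and compares the conditional probabilities under both values of p.<br />

```python
from itertools import product
from math import comb, isclose


def conditional_given_total(n, t, p):
    """Return P(X = x | T = t) for every 0/1 sequence x of length n with sum t."""
    # Joint probability of each sequence whose total equals t.
    joint = {
        x: p**t * (1 - p) ** (n - t)
        for x in product((0, 1), repeat=n)
        if sum(x) == t
    }
    total = sum(joint.values())  # this is P(T = t)
    return {x: prob / total for x, prob in joint.items()}


n, t = 4, 2  # illustrative values, not from the notes
cond_a = conditional_given_total(n, t, p=0.2)
cond_b = conditional_given_total(n, t, p=0.7)
expected = 1 / comb(n, t)  # uniform over the C(n, t) arrangements

# Every arrangement has the same conditional probability under both values of p.
same = all(
    isclose(cond_a[x], expected) and isclose(cond_b[x], expected) for x in cond_a
)
print(len(cond_a), same)
```

Because p cancels in the ratio, the conditional distribution is uniform over the arrangements of the t successes whatever p is, which is what sufficiency of T requires.<br />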

Sir Ronald <strong>Fisher</strong> writes, "A statistic satisfies the criterion of sufficiency when no<br />

other statistic which can be calculated from the same sample provides any additional<br />

information as to the value of the parameter to be estimated." [1] This is equivalent to<br />

the more contemporary definition: conditional on the value of the sufficient statistic,<br />

the distribution of the sample does not depend on the underlying parameter(s).<br />

Both the statistic and the underlying parameters can be vectors.<br />

The concept is most general when defined as follows:<br />

Definition<br />

A statistic T(X) is sufficient for underlying parameter θ precisely if<br />

the conditional probability distribution of the data X, given the statistic T(X), is<br />

independent of the parameter θ.<br />

A sufficient statistic always exists: for a random sample, both the full sample<br />

T(X) = (X_1, ..., X_n) and the order statistics T(X) = (X_(1), ..., X_(n)) are<br />

sufficient statistics.<br />

Example<br />

As an example, the sample mean is sufficient for the mean (μ) of a normal<br />

distribution with known variance. Once the sample mean is known, no further<br />

information about µ can be obtained from the sample itself.<br />

<strong>Fisher</strong>-<strong>Neyman's</strong> <strong>factorization</strong> <strong>theorem</strong><br />

<strong>Fisher</strong>'s <strong>factorization</strong> <strong>theorem</strong> or <strong>factorization</strong> criterion<br />

If the likelihood function of X is L_θ(x), then T is sufficient for θ if and only if<br />

functions g and h can be found such that<br />

$$L_\theta(x) = h(x)\, g_\theta(T(x)),$$

i.e. the likelihood L can be factored into a product such that one factor, h, does not<br />

depend on θ and the other factor, which does depend on θ, depends on x only through<br />

T(x).<br />
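As a worked illustration of the criterion (a sketch for the Bernoulli case from the motivation, with h and g chosen here for illustration), the likelihood of independent Bernoulli observations with success probability p factors as<br />

$$L_p(x) = \prod_{i=1}^{n} p^{x_i}(1-p)^{1-x_i} = \underbrace{1}_{h(x)} \cdot \underbrace{p^{T(x)}(1-p)^{\,n-T(x)}}_{g_p(T(x))}, \qquad T(x) = \sum_{i=1}^{n} x_i .$$

The factor that depends on p involves the data only through the total T(x), so the total number of successes is sufficient for p.<br />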

Interpretation<br />

An implication of the <strong>theorem</strong> is that when using likelihood-based inference, two sets<br />

of data yielding the same value for the sufficient statistic T(X) will always yield the<br />

same inferences about θ. By the <strong>factorization</strong> criterion, the likelihood's dependence on<br />

θ is only in conjunction with T(X). As this is the same in both cases, the dependence<br />

on θ will be the same as well, leading to identical inferences.<br />
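A small numerical sketch of this point, assuming a Poisson model and two made-up samples that share the same total (the samples, the grid of λ values and the function name `poisson_log_likelihood` are illustrative assumptions, not taken from the notes): the two log-likelihoods differ only by a constant that does not involve λ, so they are maximized at the same value.<br />

```python
from math import isclose, lgamma, log


def poisson_log_likelihood(sample, lam):
    """Log-likelihood of an i.i.d. Poisson(lam) sample."""
    n = len(sample)
    # log of exp(-n*lam) * lam**sum(x) / prod(x_i!), using lgamma(x + 1) = log(x!)
    return -n * lam + sum(sample) * log(lam) - sum(lgamma(x + 1) for x in sample)


sample_a = [0, 4, 2]  # T = 6
sample_b = [2, 2, 2]  # T = 6 as well

grid = [0.5, 1.0, 2.0, 3.5]  # candidate values of lambda
differences = [
    poisson_log_likelihood(sample_a, lam) - poisson_log_likelihood(sample_b, lam)
    for lam in grid
]
# The difference is the same at every lambda: only the h(x) factors differ.
constant = all(isclose(d, differences[0]) for d in differences)

# Maximizing over the grid therefore picks the same lambda for both samples.
best_a = max(grid, key=lambda lam: poisson_log_likelihood(sample_a, lam))
best_b = max(grid, key=lambda lam: poisson_log_likelihood(sample_b, lam))
print(constant, best_a, best_b)
```

The constant offset comes from the h(x) factor alone, which is why likelihood-based conclusions about λ coincide for the two samples.<br />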

Example<br />

Binomial distribution p371 Ex2.7<br />

Exponential distribution p371 Ex2.8<br />

Normal distribution p372 Ex2.10<br />

Uniform distribution<br />

If X_1, ..., X_n are independent and uniformly distributed on the interval [0,θ], then T(X)<br />

= max(X_1, ..., X_n) is sufficient for θ.<br />

If X_1, ..., X_n are independent and uniformly distributed on the interval [a,b], then T(X)<br />

= (min(X_1, ..., X_n), max(X_1, ..., X_n)) is sufficient for (a, b) (p373 Ex2.11).<br />
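For the one-parameter case [0,θ], the factorization can be written out as follows (a sketch; the particular split into h and g is one possible choice):<br />

$$L_\theta(x) = \prod_{i=1}^{n} \frac{1}{\theta}\,\mathbf{1}\{0 \le x_i \le \theta\} = \underbrace{\mathbf{1}\{\min_i x_i \ge 0\}}_{h(x)} \cdot \underbrace{\theta^{-n}\,\mathbf{1}\{\max_i x_i \le \theta\}}_{g_\theta(T(x))}, \qquad T(x) = \max_i x_i .$$

The same argument with the indicator of a ≤ min x_i and max x_i ≤ b gives the two-dimensional statistic for the interval [a,b].<br />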

Poisson distribution<br />

If X_1, ..., X_n are independent and have a Poisson distribution with parameter λ, then<br />

the sum T(X) = X_1 + ... + X_n is a sufficient statistic for λ.<br />
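Written out with the factorization criterion (a sketch; the split into h and g is one possible choice):<br />

$$L_\lambda(x) = \prod_{i=1}^{n} \frac{e^{-\lambda}\lambda^{x_i}}{x_i!} = \underbrace{\frac{1}{\prod_{i=1}^{n} x_i!}}_{h(x)} \cdot \underbrace{e^{-n\lambda}\,\lambda^{T(x)}}_{g_\lambda(T(x))}, \qquad T(x) = \sum_{i=1}^{n} x_i .$$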

Theorem<br />

Let X_1, ..., X_n be a random sample with pdf f(x|θ). If<br />

$$f(x \mid \theta) = h(x)\, c(\theta) \exp\Big\{ \sum_{i=1}^{k} w_i(\theta)\, t_i(x) \Big\}\, I_A(x),$$

where h(x) ≥ 0 and c(θ) ≥ 0, then<br />

$$T(X) = \Big( \sum_{i=1}^{n} t_1(X_i), \dots, \sum_{i=1}^{n} t_k(X_i) \Big)$$

is a sufficient statistic for θ.<br />

Example p376 ex2.14<br />
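As an illustration of this theorem (a sketch, not necessarily the example from the cited page), take the normal distribution with both mean μ and variance σ² unknown, so θ = (μ, σ²) and k = 2:<br />

$$f(x \mid \mu, \sigma^2) = \underbrace{\frac{1}{\sqrt{2\pi\sigma^2}} \exp\Big\{-\frac{\mu^2}{2\sigma^2}\Big\}}_{c(\theta)} \exp\Big\{ \underbrace{\frac{\mu}{\sigma^2}}_{w_1(\theta)} \underbrace{x}_{t_1(x)} + \underbrace{\Big(-\frac{1}{2\sigma^2}\Big)}_{w_2(\theta)} \underbrace{x^2}_{t_2(x)} \Big\},$$

with h(x) = 1 and A the whole real line. The theorem then gives the sufficient statistic<br />

$$T(X) = \Big( \sum_{i=1}^{n} X_i,\ \sum_{i=1}^{n} X_i^2 \Big).$$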

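The order statistics T(X) = (X_(1), ..., X_(n)) reduce the data further than the full sample T(X) = (X_1, ..., X_n), and in that sense are the better sufficient statistic.<br />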

Minimal sufficiency<br />

A sufficient statistic is minimal sufficient if it can be represented as a function of any<br />

other sufficient statistic. In other words, S(X) is minimal sufficient if and only if<br />

1. S(X) is sufficient, and<br />

2. if T(X) is sufficient, then there exists a function f such that S(X) = f(T(X)).<br />

Intuitively, a minimal sufficient statistic retains all the information about the<br />

parameter θ while reducing the data as far as possible.<br />

A useful characterization of minimal sufficiency:<br />

Theorem<br />

Suppose that the density f(x|θ) exists. T(X) is minimal sufficient if and only if,<br />

for any sample points x and y, the ratio f(x|θ) / f(y|θ) is<br />

independent of θ if and only if T(x) = T(y).<br />

This follows as a direct consequence of <strong>Fisher</strong>'s <strong>factorization</strong> <strong>theorem</strong> stated<br />

above.<br />
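As a worked illustration of the ratio criterion (a sketch for independent Bernoulli observations with 0 < p < 1, chosen here for illustration), for two sample points x and y of length n,<br />

$$\frac{f(x \mid p)}{f(y \mid p)} = \frac{p^{\sum x_i}(1-p)^{\,n-\sum x_i}}{p^{\sum y_i}(1-p)^{\,n-\sum y_i}} = \Big(\frac{p}{1-p}\Big)^{\sum x_i - \sum y_i}.$$

This is free of p exactly when the two totals agree, so T(X) = X_1 + ... + X_n is minimal sufficient for p.<br />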

Ex p377 ex2.16 ex2.17<br />

A complete sufficient statistic is necessarily minimal sufficient.<br />

Rao-Blackwell <strong>theorem</strong><br />

<strong>Sufficiency</strong> finds a useful application in the Rao-Blackwell <strong>theorem</strong>. It states that if<br />

g(X) is any estimator of θ and T(X) is a sufficient statistic, then the conditional expectation of g(X)<br />

given T(X) is typically a better estimator of θ (for example in mean squared error), and is never worse. Sometimes one can very<br />

easily construct a very crude estimator g(X), and then evaluate that conditional<br />

expected value to get an estimator that is in various senses optimal.
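
The improvement can be seen exactly in a small case. The sketch below assumes a Bernoulli sample with n = 5 and p = 0.3, and uses the deliberately crude estimator g(X) = X_1; these choices and the function names `rao_blackwell_table` and `exact_mse` are illustrative assumptions. It computes the conditional expectation of X_1 given the total T by enumeration and compares exact mean squared errors.<br />

```python
from itertools import product
from math import isclose


def rao_blackwell_table(n, p):
    """E[X_1 | T = t] for t = 0..n, computed by enumerating all 0/1 samples."""
    numer = [0.0] * (n + 1)  # accumulates E[X_1 * 1{T = t}]
    denom = [0.0] * (n + 1)  # accumulates P(T = t)
    for x in product((0, 1), repeat=n):
        t = sum(x)
        prob = p**t * (1 - p) ** (n - t)
        numer[t] += x[0] * prob
        denom[t] += prob
    return [numer[t] / denom[t] for t in range(n + 1)]


def exact_mse(estimator, n, p):
    """Exact mean squared error of estimator(x) as an estimator of p."""
    mse = 0.0
    for x in product((0, 1), repeat=n):
        t = sum(x)
        prob = p**t * (1 - p) ** (n - t)
        mse += prob * (estimator(x) - p) ** 2
    return mse


n, p = 5, 0.3  # illustrative values, not from the notes
table = rao_blackwell_table(n, p)  # conditional expectation, indexed by t
crude_mse = exact_mse(lambda x: x[0], n, p)  # g(X) = X_1
improved_mse = exact_mse(lambda x: table[sum(x)], n, p)  # E[g(X) | T(X)]
print(table)
print(crude_mse, improved_mse)
```

In this case the conditional expectation works out to T/n, the sample proportion, and its mean squared error is p(1-p)/n compared with p(1-p) for X_1 alone.<br />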