Nonnegativity Constraints in Numerical Analysis - CiteSeer

Nonnegativity Constraints in Numerical Analysis

Donghui Chen ∗ and Robert J. Plemmons †

Abstract

A survey of the development of algorithms for enforcing nonnegativity constraints in scientific computation is given. Special emphasis is placed on such constraints in least squares computations in numerical linear algebra and in nonlinear optimization. Techniques involving nonnegative low-rank matrix and tensor factorizations are also emphasized. Details are provided for some important classical and modern applications in science and engineering. For completeness, this report also includes an effort toward a literature survey of the various algorithms and applications of nonnegativity constraints in numerical analysis.

Key Words: nonnegativity constraints, nonnegative least squares, matrix and tensor factorizations, image processing, optimization.

1 Historical Comments on Enforcing Nonnegativity

Nonnegativity constraints on solutions, or approximate solutions, to numerical problems are pervasive throughout science, engineering and business. In order to preserve inherent characteristics of solutions corresponding to amounts and measurements, associated with, for instance, frequency counts, pixel intensities and chemical concentrations, it makes sense to respect the nonnegativity so as to avoid physically absurd and unpredictable results. This viewpoint has both computational and philosophical underpinnings. For example, for the sake of interpretation one might prefer to determine solutions from the same space, or a subspace thereof, as that of the input data.

In numerical linear algebra, nonnegativity constraints very often arise in least squares problems, which we denote as nonnegative least squares (NNLS). The design and implementation of NNLS algorithms has been the subject of considerable work since the seminal book of Lawson and Hanson [49]. This book seems to contain the first widely used method for solving NNLS. A variation of their algorithm is available as lsqnonneg in Matlab. (For a history of NNLS computations in Matlab see [83].)

∗ Department of Mathematics, Wake Forest University, Winston-Salem, NC 27109. Research supported in part by the Air Force Office of Scientific Research under grant F49620-02-1-0107.

† Departments of Computer Science and Mathematics, Wake Forest University, Winston-Salem, NC 27109. Research supported by the Air Force Office of Scientific Research under grant F49620-02-1-0107, by the Army Research Office under grants DAAD19-00-1-0540 and W911NF-05-1-0402.

Next we move to the topic of least squares computations with nonnegativity constraints, NNLS. Both old and new algorithms are outlined. We will see that NNLS leads in a natural way to the topics of approximate low-rank nonnegative matrix and tensor factorizations, NMF and NTF.

3 Nonnegative Least Squares

3.1 Introduction

A fundamental problem in data modeling is the estimation of a parameterized model for describing the data. For example, imagine that several experimental observations that are linear functions of the underlying parameters have been made. Given a sufficiently large number of such observations, one can reliably estimate the true underlying parameters. Let the unknown model parameters be denoted by the vector $x = (x_1, \cdots, x_n)^T$, the different experiments relating $x$ be encoded by the measurement matrix $A \in \mathbb{R}^{m \times n}$, and the set of observed values be given by $b$. The aim is to reconstruct a vector $x$ that explains the observed values as well as possible. This requirement may be fulfilled by considering the linear system

$$Ax = b, \tag{4}$$

where the system may be either under-determined ($m < n$) or over-determined ($m \ge n$). In the latter case, the technique of least squares proposes to compute $x$ so that the reconstruction error

$$f(x) = \tfrac{1}{2}\|Ax - b\|^2 \tag{5}$$

is minimized, where $\|\cdot\|$ denotes the $L_2$ norm. However, the estimation is not always that straightforward because in many real-world problems the underlying parameters represent quantities that can take on only nonnegative values, e.g., amounts of materials, chemical concentrations, pixel intensities, to name a few. In such a case, problem (5) must be modified to include nonnegativity constraints on the model parameters $x$. The resulting problem is called Nonnegative Least Squares (NNLS), and is formulated as follows:

NNLS Problem: Given a matrix $A \in \mathbb{R}^{m \times n}$ and the set of observed values given by $b \in \mathbb{R}^m$, find a nonnegative vector $x \in \mathbb{R}^n$ to minimize the functional $f(x) = \tfrac{1}{2}\|Ax - b\|^2$, i.e.

$$\min_x f(x) = \tfrac{1}{2}\|Ax - b\|^2, \quad \text{subject to } x \ge 0. \tag{6}$$

The gradient of $f(x)$ is $\nabla f(x) = A^T(Ax - b)$ and the KKT optimality conditions for NNLS problem (6) are

$$x \ge 0, \qquad \nabla f(x) \ge 0, \qquad \nabla f(x)^T x = 0. \tag{7}$$

Some of the iterative methods for solving (6) are based on the solution of the corresponding linear complementarity problem (LCP).

Linear Complementarity Problem: Given a matrix $A \in \mathbb{R}^{m \times n}$ and the set of observed values given by $b \in \mathbb{R}^m$, find a vector $x \in \mathbb{R}^n$ such that

$$\lambda = \nabla f(x) = A^TAx - A^Tb \ge 0, \qquad x \ge 0, \qquad \lambda^T x = 0. \tag{8}$$

Problem (8) is essentially the set of KKT optimality conditions (7) for quadratic programming. The problem reduces to finding a nonnegative $x$ which satisfies $(Ax - b)^TAx = 0$. Handling nonnegative constraints is computationally nontrivial because we are dealing with expansive nonlinear equations. An equivalent but sometimes more tractable formulation of NNLS using the residual vector variable $p = b - Ax$ is as follows:

$$\min_{x,p} \tfrac{1}{2}p^Tp \quad \text{s.t. } Ax + p = b, \; x \ge 0. \tag{9}$$

The advantage of this formulation is that we have a simple and separable objective function with linear and nonnegativity constraints.

The NNLS problem is fairly old. The algorithm of Lawson and Hanson [49] seems to be the first method to solve it. (This algorithm is available as lsqnonneg in Matlab, see [83].) An interesting thing about NNLS is that it is solved iteratively, but as Lawson and Hanson show, the iteration always converges and terminates. There is no cutoff in iteration required. Sometimes it might run too long and have to be terminated, but the solution will still be "fairly good", since the solution improves smoothly with iteration. Noise, as expected, increases the number of iterations required to reach the solution.
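Conditions (7) are straightforward to test numerically for a candidate solution. The following is a minimal, dependency-free Python sketch of such a check; the function names `nnls_gradient` and `satisfies_kkt`, the tolerance `tol`, and the toy data are illustrative assumptions and do not come from the paper.

```python
def matvec(A, x):
    """Return the product A x for a dense matrix stored as a list of rows."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def nnls_gradient(A, b, x):
    """Gradient of f(x) = 0.5 * ||A x - b||^2, i.e. A^T (A x - b)."""
    r = [ax - bi for ax, bi in zip(matvec(A, x), b)]
    return [sum(A[i][j] * r[i] for i in range(len(A))) for j in range(len(x))]


def satisfies_kkt(A, b, x, tol=1e-10):
    """Check conditions (7) up to tol: x >= 0, grad >= 0, grad^T x = 0."""
    g = nnls_gradient(A, b, x)
    primal = all(xi >= -tol for xi in x)
    dual = all(gi >= -tol for gi in g)
    complementary = abs(sum(gi * xi for gi, xi in zip(g, x))) <= tol
    return primal and dual and complementary


# Toy data (hypothetical): the unconstrained minimizer is (1, -1), so the
# constraint on the second component is active at the NNLS solution (1, 0).
A = [[1.0, 0.0], [0.0, 1.0]]
b = [1.0, -1.0]
print(satisfies_kkt(A, b, [1.0, 0.0]))   # candidate satisfying (7)
print(satisfies_kkt(A, b, [1.0, -1.0]))  # unconstrained minimizer, infeasible
```

A check of this kind is also a natural stopping test for the iterative methods discussed in this section, since every one of them aims at a point satisfying (7).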

Lawson and Hanson's Algorithm:

In their landmark text [49], Lawson and Hanson give the standard algorithm for NNLS, which is an active set method [30]. Mathworks [83] modified the algorithm NNLS, which ultimately was renamed "lsqnonneg".

Notation: The matrix $A^P$ is the matrix associated with only the variables currently in the passive set $P$.

Algorithm lsqnonneg:
Input: $A \in \mathbb{R}^{m \times n}$, $b \in \mathbb{R}^m$
Output: $x^* \ge 0$ such that $x^* = \arg\min \|Ax - b\|^2$.
Initialization: $P = \emptyset$, $R = \{1, 2, \cdots, n\}$, $x = 0$, $w = A^T(b - Ax)$
repeat
  1. Proceed if $R \ne \emptyset \wedge [\max_{i \in R}(w_i) > \text{tolerance}]$
  2. $j = \arg\max_{i \in R}(w_i)$
  3. Include the index $j$ in $P$ and remove it from $R$
  4. $s^P = [(A^P)^TA^P]^{-1}(A^P)^Tb$
    4.1. Proceed if $\min(s^P) \le 0$
    4.2. $\alpha = \min_{i \in P,\, s_i \le 0}[x_i/(x_i - s_i)]$
    4.3. $x := x + \alpha(s - x)$
    4.4. Update $R$ and $P$
    4.5. $s^P = [(A^P)^TA^P]^{-1}(A^P)^Tb$
    4.6. $s^R = 0$
  5. $x = s$
  6. $w = A^T(b - Ax)$

It is proved by Lawson and Hanson that the iteration of the NNLS algorithm is finite. Given sufficient time, the algorithm will reach a point where the Kuhn-Tucker conditions are satisfied, and it will terminate. There is no arbitrary cutoff in iteration required; in that sense it is a direct algorithm. It is not direct in the sense that the upper limit on the possible number of iterations that the algorithm might need to reach the optimum is impossibly large. There is no good way of telling exactly how many iterations it will require in a practical sense. The solution does improve smoothly as the iteration continues. If it is terminated early, one will obtain a sub-optimal but likely still fairly good solution.
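The active set iteration above can be sketched in a few dozen lines. The version below is a dependency-free Python illustration, not the lsqnonneg code: it forms $A^TA$ and $A^Tb$ once and solves each passive-set subproblem through the normal equations with a small Gaussian elimination helper, which is adequate only for small, well-conditioned problems. The names `solve` and `nnls_active_set`, the tolerance, and the iteration cap `max_iter` are assumptions made for this example.

```python
def solve(M, v):
    """Solve the square system M z = v by Gaussian elimination with partial pivoting."""
    n = len(v)
    aug = [list(row) + [vi] for row, vi in zip(M, v)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[piv] = aug[piv], aug[c]
        for r in range(c + 1, n):
            factor = aug[r][c] / aug[c][c]
            for k in range(c, n + 1):
                aug[r][k] -= factor * aug[c][k]
    z = [0.0] * n
    for r in reversed(range(n)):
        tail = sum(aug[r][k] * z[k] for k in range(r + 1, n))
        z[r] = (aug[r][n] - tail) / aug[r][r]
    return z


def nnls_active_set(A, b, tol=1e-12, max_iter=100):
    """Active set NNLS sketch; returns x >= 0 approximately minimizing ||Ax - b||."""
    m, n = len(A), len(A[0])
    AtA = [[sum(A[i][p] * A[i][q] for i in range(m)) for q in range(n)] for p in range(n)]
    Atb = [sum(A[i][p] * b[i] for i in range(m)) for p in range(n)]
    x = [0.0] * n
    passive = []  # the set P; every other index belongs to R
    for _ in range(max_iter):
        w = [Atb[p] - sum(AtA[p][q] * x[q] for q in range(n)) for p in range(n)]
        candidates = [j for j in range(n) if j not in passive and w[j] > tol]
        if not candidates:
            break  # the KKT conditions hold up to tol
        passive.append(max(candidates, key=lambda j: w[j]))
        while True:
            sub = [[AtA[p][q] for q in passive] for p in passive]
            s_passive = solve(sub, [Atb[p] for p in passive])
            s = [0.0] * n
            for p, value in zip(passive, s_passive):
                s[p] = value
            if not passive or min(s_passive) > 0:
                break
            # Largest step from x toward s that keeps x nonnegative.
            alpha = min(x[p] / (x[p] - s[p]) if x[p] > s[p] else 0.0
                        for p in passive if s[p] <= 0)
            x = [xi + alpha * (si - xi) for xi, si in zip(x, s)]
            passive = [p for p in passive if x[p] > tol]
        x = s
    return x


# Hypothetical 2 x 2 example whose unconstrained solution (-1, 4) is infeasible.
print(nnls_active_set([[3.0, 1.0], [1.0, 0.0]], [1.0, -1.0]))
```

In this small example the first variable enters the passive set, is driven to zero when the second variable is added, and is then returned to the active set, so the inner loop (steps 4.1 to 4.6) is exercised once.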

However, when applied in a straightforward manner to large scale NNLS problems, this algorithm's performance is found to be unacceptably slow owing to the need to perform the equivalent of a full pseudo-inverse calculation for each observation vector. More recently, Bro and de Jong [15] have made a substantial speed improvement to Lawson and Hanson's algorithm for the case of a large number of observation vectors, by developing a modified NNLS algorithm.

Fast NNLS fnnls:

In the paper [15], Bro and de Jong give a modification of the standard algorithm for NNLS by Lawson and Hanson. Their algorithm, called Fast Nonnegative Least Squares, fnnls, is specifically designed for use in multiway decomposition methods for tensor arrays such as PARAFAC and N-mode PCA. (See the material on tensors given later in this paper.) They realized that large parts of the pseudoinverse could be computed once but used repeatedly. Specifically, their algorithm precomputes the cross-product matrices that appear in the normal equation formulation of the least squares solution. They also observed that, during alternating least squares (ALS) procedures (to be discussed later), solutions tend to change only slightly from iteration to iteration. In an extension to their NNLS algorithm that they characterized as being for "advanced users", they retained information about the previous iteration's solution and were able to extract further performance improvements in ALS applications that employ NNLS. These innovations led to a substantial performance improvement when analyzing large multivariate, multiway data sets.

Algorithm fnnls:
Input: $A \in \mathbb{R}^{m \times n}$, $b \in \mathbb{R}^m$
Output: $x^* \ge 0$ such that $x^* = \arg\min \|Ax - b\|^2$.
Initialization: $P = \emptyset$, $R = \{1, 2, \cdots, n\}$, $x = 0$, $w = A^Tb - (A^TA)x$
repeat
  1. Proceed if $R \ne \emptyset \wedge [\max_{i \in R}(w_i) > \text{tolerance}]$
  2. $j = \arg\max_{i \in R}(w_i)$
  3. Include the index $j$ in $P$ and remove it from $R$
  4. $s^P = [(A^TA)^P]^{-1}(A^Tb)^P$
    4.1. Proceed if $\min(s^P) \le 0$
    4.2. $\alpha = \min_{i \in P,\, s_i \le 0}[x_i/(x_i - s_i)]$
    4.3. $x := x + \alpha(s - x)$
    4.4. Update $R$ and $P$
    4.5. $s^P = [(A^TA)^P]^{-1}(A^Tb)^P$
    4.6. $s^R = 0$
  5. $x = s$
  6. $w = A^Tb - (A^TA)x$

While Bro and de Jong's algorithm precomputes parts of the pseudoinverse, the algorithm still requires work to complete the pseudoinverse calculation once for each observation vector. A recent variant of fnnls, called fast combinatorial NNLS [4], appropriately rearranges calculations to achieve further speedups in the presence of multiple observation vectors $b_i$, $i = 1, 2, \cdots, l$. This new method rigorously solves the constrained least squares problem while exacting essentially no performance penalty as compared with Bro and de Jong's algorithm. The new algorithm employs combinatorial reasoning to identify and group together all observations $b_i$ that share a common pseudoinverse at each stage in the NNLS iteration. The complete pseudoinverse is then computed just once per group and, subsequently, is applied individually to each observation in the group. As a result, the computational burden is significantly reduced and the time required to perform ALS operations is likewise reduced. Essentially, if there is only one observation, this new algorithm is no different from Bro and de Jong's algorithm.

In the paper [24], Dax concentrates on two problems that arise in the implementation of an active set method. One problem is the choice of a good starting point. The second problem is how to move away from a "dead point". The results of his experiments indicate that the use of Gauss-Seidel iterations to obtain a starting point is likely to provide large gains in efficiency.
Also, dropping one constraint at a time is preferable to dropping several constraints at a time.

However, all these active set methods still depend on the normal equations, rendering them infeasible for ill-conditioned problems. In contrast to an active set method, iterative methods, for instance gradient projection, enable one to incorporate multiple active constraints at each iteration.

3.2.2 Algorithms Based On Iterative Methods

The main advantage of this class of algorithms is that by using information from a projected gradient step along with a good guess of the active set, one can handle multiple active constraints per iteration. In contrast, the active set method typically deals with only one active constraint at each iteration. Some of the iterative methods are based on the solution of the corresponding LCP (8).

Projective Quasi-Newton NNLS (PQN-NNLS)

In the paper [46], Kim et al. proposed a projection method with non-diagonal gradient scaling to solve the NNLS problem (6). In contrast to an active set approach, gradient projection avoids the pre-computation of $A^TA$ and $A^Tb$, which is required for the use of the active set method fnnls. It also enables their method to incorporate multiple active constraints at each iteration. By employing non-diagonal gradient scaling, PQN-NNLS overcomes some of the deficiencies of a projected gradient method such as slow convergence and zigzagging. An important characteristic of the PQN-NNLS algorithm is that, despite its efficiency, it remains relatively simple in comparison with other optimization-oriented algorithms. Also in this paper, Kim et al. gave experiments to show that their method outperforms other standard approaches to solving the NNLS problem, especially for large-scale problems.

Algorithm PQN-NNLS:
Input: $A \in \mathbb{R}^{m \times n}$, $b \in \mathbb{R}^m$
Output: $x^* \ge 0$ such that $x^* = \arg\min \|Ax - b\|^2$.
Initialization: $x^0 \in \mathbb{R}^n_+$, $S^0 \leftarrow I$ and $k \leftarrow 0$
repeat
  1. Compute fixed variable set $I_k = \{i : x_i^k = 0, [\nabla f(x^k)]_i > 0\}$
  2. Partition $x^k = [y^k; z^k]$, where $y_i^k \notin I_k$ and $z_i^k \in I_k$
  3. Solve equality-constrained subproblem:
    3.1. Find appropriate values for $\alpha^k$ and $\beta^k$
    3.2. $\gamma^k(\beta^k; y^k) \leftarrow P(y^k - \beta^k \bar{S}^k \nabla f(y^k))$
    3.3. $\tilde{y} \leftarrow y^k + \alpha(\gamma^k(\beta^k; y^k) - y^k)$
  4. Update gradient scaling matrix $S^k$ to obtain $S^{k+1}$
  5. Update $x^{k+1} \leftarrow [\tilde{y}; z^k]$
  6. $k \leftarrow k + 1$
until Stopping criteria are met.

Sequential Coordinate-wise Algorithm for NNLS

In [29], the authors propose a novel sequential coordinate-wise (SCA) algorithm which is easy to implement and able to cope with large scale problems. They also derive stopping conditions which allow control of the distance of the solution found to the optimal one in terms of the optimized objective function. The algorithm produces a sequence of vectors $x^0, x^1, \ldots, x^t$ which converges to the optimal $x^*$. The idea is to optimize in each iteration with respect to a single coordinate while the remaining coordinates are fixed. The optimization with respect to a single coordinate has an analytical solution, thus it can be computed efficiently.
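To make the single-coordinate step concrete: writing $H = A^TA$ and keeping the gradient $\mu = Hx - A^Tb$ up to date, minimizing over coordinate $k$ alone gives $x_k \leftarrow \max(0, x_k - \mu_k / H_{k,k})$. The dependency-free Python sketch below cycles through the coordinates with this rule. The function name `sca_nnls` and the fixed number of sweeps are assumptions for illustration; the stopping conditions derived in [29] are not reproduced here.

```python
def sca_nnls(A, b, sweeps=200):
    """Coordinate-wise NNLS sketch: minimize 0.5 * ||Ax - b||^2 subject to x >= 0."""
    m, n = len(A), len(A[0])
    H = [[sum(A[i][p] * A[i][q] for i in range(m)) for q in range(n)] for p in range(n)]
    # mu holds the gradient H x - A^T b; at x = 0 it equals -A^T b.
    mu = [-sum(A[i][p] * b[i] for i in range(m)) for p in range(n)]
    x = [0.0] * n
    for _ in range(sweeps):
        for k in range(n):
            if H[k][k] == 0.0:
                continue  # a zero column of A cannot change the objective
            new_value = max(0.0, x[k] - mu[k] / H[k][k])
            delta = new_value - x[k]
            if delta != 0.0:
                # Only column k of H is needed to refresh the gradient.
                mu = [mu[i] + delta * H[i][k] for i in range(n)]
                x[k] = new_value
    return x


# Hypothetical 2 x 2 example; the iterates approach the NNLS solution (0, 1).
print(sca_nnls([[3.0, 1.0], [1.0, 0.0]], [1.0, -1.0]))
```

Each coordinate update costs one division and one column update of the gradient, which is why the method scales to large problems once $H$ is available.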

Notation: $I = \{1, 2, \cdots, n\}$, $I_k = I \setminus \{k\}$, $H = A^TA$, which is positive semidefinite, and $h_k$ denotes the $k$th column of $H$.

Algorithm SCA-NNLS:
Input: $A \in \mathbb{R}^{m \times n}$, $b \in \mathbb{R}^m$
Output: $x^* \ge 0$ such that $x^* = \arg\min \|Ax - b\|^2$.
Initialization: $x^0 = 0$ and $\mu^0 = f = -A^Tb$
repeat
  For $k = 1$ to $n$
    1. $x_k^{t+1} = \max\left(0,\; x_k^t - \dfrac{\mu_k^t}{H_{k,k}}\right)$, and $x_i^{t+1} = x_i^t, \ \forall i \in I_k$
    2. $\mu^{t+1} = \mu^t + (x_k^{t+1} - x_k^t)h_k$
until Stopping criteria are met.

3.2.3 Other Methods

Principal Block Pivoting method

In the paper [17], the authors gave a block principal pivoting algorithm for large and sparse NNLS. They considered the linear complementarity problem (8). The $n$ indices of the variables in $x$ are divided into complementary sets $F$ and $G$, and $x_F$ and $y_G$ denote pairs of vectors with the indices of their nonzero entries in these sets. Then the pair $(x_F, y_G)$ is a complementary basic solution of Equation (8) if $x_F$ is a solution of the unconstrained least squares problem

$$\min_{x_F \in \mathbb{R}^{|F|}} \|A_Fx_F - b\|_2^2, \tag{10}$$

where $A_F$ is formed from $A$ by selecting the columns indexed by $F$, and $y_G$ is obtained by

$$y_G = A_G^T(A_Fx_F - b). \tag{11}$$

If $x_F \ge 0$ and $y_G \ge 0$, then the solution is feasible. Otherwise it is infeasible, and we refer to the negative entries of $x_F$ and $y_G$ as infeasible variables. The idea of the algorithm is to proceed through infeasible complementary basic solutions of (8) to the unique feasible solution by exchanging infeasible variables between $F$ and $G$ and updating $x_F$ and $y_G$ by (10) and (11). To minimize the number of solutions of the least squares problem in (10), it is desirable to exchange variables in large groups if possible. The implementation is reported to be several times faster than Matstoms' Matlab implementation [58] of the same algorithm. Further, it matches the accuracy of Matlab's built-in lsqnonneg function. (The program is available online at http://plato.asu.edu/sub/nonlsq.html).
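The complementary basic solution of (10) and (11) and the exchange of infeasible variables can be illustrated with a short, dependency-free Python sketch. It is a simplified variant under stated assumptions: it always exchanges all infeasible variables and omits the safeguard that falls back to single exchanges, so it may cycle and is therefore capped by `max_iter`. It also solves (10) through the normal equations, which is not how a sparse production code would do it. The names `basic_solution` and `block_pivot_nnls` are hypothetical.

```python
def solve(M, v):
    """Solve the square system M z = v by Gaussian elimination with partial pivoting."""
    n = len(v)
    aug = [list(row) + [vi] for row, vi in zip(M, v)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[piv] = aug[piv], aug[c]
        for r in range(c + 1, n):
            factor = aug[r][c] / aug[c][c]
            for k in range(c, n + 1):
                aug[r][k] -= factor * aug[c][k]
    z = [0.0] * n
    for r in reversed(range(n)):
        tail = sum(aug[r][k] * z[k] for k in range(r + 1, n))
        z[r] = (aug[r][n] - tail) / aug[r][r]
    return z


def basic_solution(A, b, F):
    """Return full-length (x, y) with x_F from (10), y_G from (11), zeros elsewhere."""
    m, n = len(A), len(A[0])
    F = sorted(F)
    M = [[sum(A[i][p] * A[i][q] for i in range(m)) for q in F] for p in F]
    v = [sum(A[i][p] * b[i] for i in range(m)) for p in F]
    x = [0.0] * n
    for p, value in zip(F, solve(M, v)):
        x[p] = value
    residual = [sum(A[i][j] * x[j] for j in range(n)) - b[i] for i in range(m)]
    y = [0.0] * n
    for g in range(n):
        if g not in F:
            y[g] = sum(A[i][g] * residual[i] for i in range(m))
    return x, y


def block_pivot_nnls(A, b, max_iter=50):
    """Exchange all infeasible variables between F and G until none remain."""
    n = len(A[0])
    F = set()  # G is the complement of F in {0, ..., n-1}
    for _ in range(max_iter):
        x, y = basic_solution(A, b, F)
        infeasible = {j for j in range(n)
                      if (j in F and x[j] < 0) or (j not in F and y[j] < 0)}
        if not infeasible:
            return x, y
        F ^= infeasible  # symmetric difference moves each index to the other set
    raise RuntimeError("no feasible complementary basic solution within max_iter")


# Hypothetical 2 x 2 example: F goes {} -> {0, 1} -> {1}.
print(block_pivot_nnls([[3.0, 1.0], [1.0, 0.0]], [1.0, -1.0]))
```

Exchanging whole groups of indices is what keeps the number of least squares solves small; the full algorithm adds the counters $p$ and $N$ precisely to guard against the cycling that this simplified version can exhibit.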

Block principal pivoting algorithm:
Input: $A \in \mathbb{R}^{m \times n}$, $b \in \mathbb{R}^m$
Output: $x^* \ge 0$ such that $x^* = \arg\min \|Ax - b\|^2$.
Initialization: $F = \emptyset$ and $G = \{1, \ldots, n\}$, $x = 0$, $y = -A^Tb$, and $p = 3$, $N = \infty$.
repeat:
  1. Proceed if $(x_F, y_G)$ is an infeasible solution.
  2. Set $n$ to the number of negative entries in $x_F$ and $y_G$.
    2.1 Proceed if $n < N$,
      2.1.1 Set $N = n$, $p = 3$,
      2.1.2 Exchange all infeasible variables between $F$ and $G$.
    2.2 Proceed if $n \ge N$
      2.2.1 Proceed if $p > 0$,
        2.2.1.1 Set $p = p - 1$
        2.2.1.2 Exchange all infeasible variables between $F$ and $G$.
      2.2.2 Proceed if $p \le 0$,
        2.2.2.1 Exchange only the infeasible variable with largest index.
  3. Update $x_F$ and $y_G$ by Equations (10) and (11).
  4. Set variables in $x_F < 10^{-12}$ and $y_G < 10^{-12}$ to zero.

Interior Point Newton-like Method:

In addition to the methods above, interior point methods can be used to solve NNLS problems. They generate an infinite sequence of strictly feasible points converging to the solution and are known to be competitive with active set methods for medium and large problems. In the paper [1], the authors present an interior-point approach suited for NNLS problems. Global and locally fast convergence is guaranteed even if a degenerate solution is approached, and the structure of the given problem is exploited both in the linear algebra phase and in the globalization strategy. Viable approaches for implementation are discussed and numerical results are provided. Here we give an interior point algorithm for NNLS; a more detailed discussion can be found in the paper [1].

Notation: $g(x)$ is the gradient of the objective function (6), i.e. $g(x) = \nabla f(x) = A^T(Ax - b)$. Therefore, by the KKT conditions, $x^*$ can be found by searching for the positive solution of the system of nonlinear equations

$$D(x)g(x) = 0, \tag{12}$$

where $D(x) = \mathrm{diag}(d_1(x), \ldots, d_n(x))$ has entries

$$d_i(x) = \begin{cases} x_i & \text{if } g_i(x) \ge 0, \\ 1 & \text{otherwise.} \end{cases} \tag{13}$$

The matrix $W(x)$ is defined by $W(x) = \mathrm{diag}(w_1(x), \ldots, w_n(x))$, where $w_i(x) = \dfrac{1}{d_i(x) + e_i(x)}$ and, for $1 < s \le 2$,

$$e_i(x) = \begin{cases} g_i(x) & \text{if } 0 \le g_i(x) < x_i^s \text{ or } g_i(x)^s > x_i, \\ 1 & \text{otherwise.} \end{cases} \tag{14}$$

Newton-like method for NNLS:
Input: $A \in \mathbb{R}^{m \times n}$, $b \in \mathbb{R}^m$
Output: $x^* \ge 0$ such that $x^* = \arg\min \|Ax - b\|^2$.
Initialization: $x_0 > 0$ and $\sigma < 1$
repeat
  1. Choose $\eta_k \in [0, 1)$
  2. Solve $Z_k\tilde{p} = -W_k^{1/2}D_k^{1/2}g_k + \tilde{r}_k$, $\ \|\tilde{r}_k\|_2 \le \eta_k\|W_kD_kg_k\|_2$
  3. Set $p = W_k^{1/2}D_k^{1/2}\tilde{p}$
  4. Set $p_k = \max\{\sigma,\ 1 - \|P(x_k + p) - x_k\|_2\}\,(P(x_k + p) - x_k)$
  5. Set $x_{k+1} = x_k + p_k$
until Stopping criteria are met.

We next move to the extension of Problem NNLS to approximate low-rank nonnegative matrix factorization and later extend that concept to approximate low-rank nonnegative tensor (multiway array) factorization.

4 Nonnegative Matrix and Tensor Factorizations

As indicated earlier, NNLS leads in a natural way to the topics of approximate nonnegative matrix and tensor factorizations, NMF and NTF. We begin by discussing algorithms for approximating an $m \times n$ nonnegative matrix $X$ by a low-rank matrix, say $Y$, that is factored into $Y = WH$, where $W$ has $k \le \min\{m, n\}$ columns, and $H$ has $k$ rows.

4.1 Nonnegative Matrix Factorization

In Nonnegative Matrix Factorization (NMF), an $m \times n$ (nonnegative) mixed data matrix $X$ is approximately factored into a product of two nonnegative rank-$k$ matrices, with $k$ small compared to $m$ and $n$, $X \approx WH$. This factorization has the advantage that $W$ and $H$ can provide a physically realizable representation of the mixed data. NMF is widely used in a variety of applications, including air emission control, image and spectral data processing, text mining, chemometric analysis, neural learning processes, sound recognition, remote sensing, and object characterization, see, e.g. [9].

NMF problem: Given a nonnegative matrix $X \in \mathbb{R}^{m \times n}$ and a positive integer $k \le \min\{m, n\}$, find nonnegative matrices $W \in \mathbb{R}^{m \times k}$ and $H \in \mathbb{R}^{k \times n}$ to minimize the function $f(W, H) = \tfrac{1}{2}\|X - WH\|_F^2$, i.e.

$$\min_{W,H} f(W, H) = \tfrac{1}{2}\Big\|X - \sum_{i=1}^{k} W^{(i)} \circ H^{(i)}\Big\|_F^2 \quad \text{subject to } W, H \ge 0, \tag{15}$$

where $\circ$ denotes the outer product, $W^{(i)}$ is the $i$th column of $W$, and $H^{(i)}$ is the $i$th column of $H^T$.

Figure 1: An illustration of nonnegative matrix factorization.

See Figure 1, which provides an illustration of matrix approximation by a sum of rank one matrices determined by $W$ and $H$. The sum is truncated after $k$ terms.

Quite a few numerical algorithms have been developed for solving the NMF problem. The methodologies adopted more or less follow the principles of alternating direction iterations, the projected Newton method, the reduced quadratic approximation, and the descent search. Specific implementations generally can be categorized into alternating least squares algorithms [64], multiplicative update algorithms [40, 51, 52], gradient descent algorithms, and hybrid algorithms [67, 69]. Some general assessments of these methods can be found in [20, 56]. It appears that there is much room for improvement of numerical methods. Although schemes and approaches are different, any numerical method is essentially centered around satisfying the first order optimality conditions derived from the Kuhn-Tucker theory. Note that the computed factors $W$ and $H$ may only be local minimizers of (15).
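The two ways of writing the approximation in (15), as the product $WH$ and as a sum of $k$ rank one outer products, can be checked directly on a small example. The Python sketch below is illustrative only; the helper names `matmul`, `rank_one_sum` and `nmf_objective` and the integer test matrices are assumptions introduced here.

```python
def matmul(W, H):
    """Dense product W H for matrices stored as lists of rows."""
    return [[sum(W[i][l] * H[l][j] for l in range(len(H)))
             for j in range(len(H[0]))] for i in range(len(W))]


def rank_one_sum(W, H):
    """Sum over i of the outer product of column i of W with row i of H."""
    m, n, k = len(W), len(H[0]), len(H)
    total = [[0] * n for _ in range(m)]
    for i in range(k):
        for r in range(m):
            for c in range(n):
                total[r][c] += W[r][i] * H[i][c]
    return total


def nmf_objective(X, W, H):
    """f(W, H) = 0.5 * ||X - W H||_F^2."""
    Y = matmul(W, H)
    return 0.5 * sum((X[r][c] - Y[r][c]) ** 2
                     for r in range(len(X)) for c in range(len(X[0])))


W = [[1, 0], [2, 1]]        # m = 2, k = 2
H = [[1, 2, 0], [0, 1, 3]]  # k = 2, n = 3
print(matmul(W, H) == rank_one_sum(W, H))
print(nmf_objective([[1, 2, 0], [2, 5, 4]], W, H))
```

Row $i$ of $H$ is the same vector as column $i$ of $H^T$, which is why the sketch indexes `H[i]` where (15) writes $H^{(i)}$.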

Theorem 1. Necessary conditions for $(W, H) \in \mathbb{R}_+^{m \times k} \times \mathbb{R}_+^{k \times n}$ to solve the nonnegative matrix factorization problem (15) are

$$\begin{aligned} W \mathbin{.\!*} ((X - WH)H^T) &= 0 \in \mathbb{R}^{m \times k}, \\ H \mathbin{.\!*} (W^T(X - WH)) &= 0 \in \mathbb{R}^{k \times n}, \\ (X - WH)H^T &\le 0, \\ W^T(X - WH) &\le 0, \end{aligned} \tag{16}$$

where $.\!*$ denotes the Hadamard product.

Alternating Least Squares (ALS) algorithms for NMF

Since the squared Frobenius norm of a matrix is just the sum of the squared Euclidean norms of its columns (or rows), minimization or descent over either $W$ or $H$ boils down to solving a sequence of nonnegative least squares (NNLS) problems. In the class of ALS algorithms for NMF, a least squares step is followed by another least squares step in an alternating fashion, thus giving rise to the ALS name. ALS algorithms were first used by Paatero [64], exploiting the fact that, while the optimization problem of (15) is not convex in both $W$ and $H$, it is convex in either $W$ or $H$. Thus, given one matrix, the other matrix can be found with NNLS computations. An elementary ALS algorithm in matrix notation follows.

ALS Algorithm for NMF:
Initialization: Let $W$ be a random matrix $W = \mathrm{rand}(m, k)$ or use another initialization from [50]
repeat: for $i = 1 : \text{maxiter}$
  1. (NNLS) Solve for $H$ in the matrix equation $W^TWH = W^TX$ by solving, with $W$ fixed,

$$\min_H f(H) = \tfrac{1}{2}\|X - WH\|_F^2 \quad \text{subject to } H \ge 0. \tag{17}$$

  2. (NNLS) Solve for $W$ in the matrix equation $HH^TW^T = HX^T$ by solving, with $H$ fixed,

$$\min_W f(W) = \tfrac{1}{2}\|X^T - H^TW^T\|_F^2 \quad \text{subject to } W \ge 0. \tag{18}$$

end

Compared to other methods for NMF, the ALS algorithms are more flexible, allowing the iterative process to escape from a poor path. Depending on the implementation, ALS algorithms can be very fast. The implementation shown above requires significantly less work than other NMF algorithms and slightly less work than an SVD implementation. Improvements to the basic ALS algorithm appear in [50, 65].

We conclude this section with a discussion of the convergence of ALS algorithms. Algorithms following an alternating process, approximating $W$, then $H$, and so on, are actually variants of a simple optimization technique that has been used for decades, and are known under various names such as alternating variables, coordinate search, or the method of local variation [62]. While statements about global convergence in the most general cases have not been proven for the method of alternating variables, a bit has been said about certain special cases. For instance, [73] proved that every limit point of a sequence of alternating variable iterates is a stationary point. Others [71, 72, 90] proved convergence for special classes of objective functions, such as convex quadratic functions. Furthermore, it is known that an ALS algorithm that properly enforces nonnegativity, for example, through the nonnegative least squares (NNLS) algorithm of Lawson and Hanson [49], will converge to a local minimum [11, 32, 54].

4.2 Nonnegative Tensor Decomposition

Nonnegative Tensor Factorization (NTF) is a natural extension of NMF to higher dimensional data. In NTF, high-dimensional data, such as hyperspectral or other image cubes, is factored directly: it is approximated by a sum of rank 1 nonnegative tensors.
The ubiquitous tensor approach, originally suggested by Einstein to explain laws of physics without depending on inertial frames of reference, is now becoming the focus of extensive research. Here, we develop and apply NTF algorithms for the analysis of spectral and hyperspectral image data. The algorithm given here combines features from both NMF and NTF methods.

Notation: The symbol ∗ denotes the Hadamard (i.e., elementwise) matrix product,

\[ A * B = \begin{pmatrix} A_{11}B_{11} & \cdots & A_{1n}B_{1n} \\ \vdots & \ddots & \vdots \\ A_{m1}B_{m1} & \cdots & A_{mn}B_{mn} \end{pmatrix}. \tag{19} \]

The symbol ⊗ denotes the Kronecker product, i.e.,

\[ A \otimes B = \begin{pmatrix} A_{11}B & \cdots & A_{1n}B \\ \vdots & \ddots & \vdots \\ A_{m1}B & \cdots & A_{mn}B \end{pmatrix}. \tag{20} \]

And the symbol ⊙ denotes the Khatri-Rao product (columnwise Kronecker) [42],

\[ A \odot B = (A_1 \otimes B_1 \;\cdots\; A_n \otimes B_n), \tag{21} \]

where $A_i$, $B_i$ are the columns of A, B respectively.

The concept of matricizing or unfolding is simply a rearrangement of the entries of T into a matrix. For a three-dimensional array T of size m × n × p, the notation $T_{(m\times np)}$ represents a matrix of size m × np in which the n-index runs the fastest over columns and p the slowest. The norm of a tensor, $\|T\|$, is the same as the Frobenius norm of the matricized array, i.e., the square root of the sum of squares of all its elements.

Nonnegative Rank-k Tensor Decomposition Problem:

\[ \min_{x^{(i)},\, y^{(i)},\, z^{(i)}} \Bigl\| T - \sum_{i=1}^{r} x^{(i)} \circ y^{(i)} \circ z^{(i)} \Bigr\|, \tag{22} \]

subject to:

\[ x^{(i)} \ge 0, \quad y^{(i)} \ge 0, \quad z^{(i)} \ge 0, \]

where $T \in \mathbb{R}^{m\times n\times p}$, $x^{(i)} \in \mathbb{R}^m$, $y^{(i)} \in \mathbb{R}^n$, $z^{(i)} \in \mathbb{R}^p$.

Note that Equation (22) defines matrices X which is m × k, Y which is n × k, and Z which is p × k, where k = r is the number of rank-one terms. Also, see Figure 2, which provides an illustration of 3D tensor approximation by a sum of rank one tensors. When the sum is truncated after, say, k terms, it then provides a rank k approximation to the tensor T.

Figure 2: An illustration of 3-D tensor factorization.

Alternating least squares for NTF

A common approach to solving Equation (22) is an alternating least squares (ALS) algorithm [28, 36, 84], due to its simplicity and ability to handle constraints. At each inner iteration, we compute an entire factor matrix while holding all the others fixed.

Starting with random initializations for X, Y and Z, we update these quantities in an alternating fashion using the method of normal equations. The minimization problem involving X in Equation (22) can be rewritten in matrix form as a least squares problem:

\[ \min_X \| T_{(m\times np)} - XC \|^2, \tag{23} \]

where $T_{(m\times np)} = X(Z \odot Y)^T$ and $C = (Z \odot Y)^T$.

The least squares solution for Equation (23) involves the pseudo-inverse of C, which may be computed in a special way that avoids forming $CC^T$ from an explicit C, so the solution to Equation (23) is given by

\[ X = T_{(m\times np)} (Z \odot Y)(Y^T Y * Z^T Z)^{-1}. \tag{24} \]
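The identities behind Equations (23) and (24) can be checked numerically. The following NumPy sketch is an illustration only: the helper names `khatri_rao` and `unfold_mode1` and the random test factors are assumptions introduced here, not part of the survey. It builds an exact rank-k tensor, forms the m × np unfolding with the n-index running fastest, and recovers X from Equation (24). This step is plain (unconstrained) least squares; no nonnegativity is enforced.

```python
import numpy as np

def khatri_rao(A, B):
    """Columnwise Kronecker product: column c is kron(A[:, c], B[:, c])."""
    # Entry [i, j, c] = A[i, c] * B[j, c]; flattening makes B's row index fastest.
    return (A[:, None, :] * B[None, :, :]).reshape(-1, A.shape[1])

def unfold_mode1(T):
    """Matricize an m x n x p array into m x (n*p), n-index fastest over columns."""
    m, n, p = T.shape
    return T.transpose(0, 2, 1).reshape(m, n * p)

rng = np.random.default_rng(0)
m, n, p, k = 4, 5, 6, 3
X = rng.random((m, k))
Y = rng.random((n, k))
Z = rng.random((p, k))

# Exact rank-k tensor: T[i, j, l] = sum_c X[i, c] * Y[j, c] * Z[l, c]
T = np.einsum('ic,jc,lc->ijl', X, Y, Z)

T1 = unfold_mode1(T)              # T_(m x np)
KR = khatri_rao(Z, Y)             # Z (Khatri-Rao) Y, shape (n*p, k)
gram = (Y.T @ Y) * (Z.T @ Z)      # Hadamard product of the two k x k Gram matrices

# Equation (24): X = T1 KR gram^{-1}; gram is symmetric, so solve the transposed system.
X_ls = np.linalg.solve(gram, (T1 @ KR).T).T
```

Because the Gram matrix is obtained from two k × k products, the large matrix (Z ⊙ Y)ᵀ(Z ⊙ Y) is never computed from the Khatri-Rao product itself; the sketch forms `KR` explicitly only to make the check transparent.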

Furthermore, the product $T_{(m\times np)}(Z \odot Y)$ may be computed efficiently if T is sparse by not forming the Khatri-Rao product $(Z \odot Y)$. Thus, computing X essentially reduces to several matrix inner products, tensor-matrix multiplication of Y and Z into T, and inverting an r × r matrix.

Analogous least squares steps may be used to update Y and Z. Following is a summary of the complete NTF algorithm.

ALS algorithm for NTF:

1. Group the $x_i$'s, $y_i$'s and $z_i$'s as columns in $X \in \mathbb{R}^{m\times r}_+$, $Y \in \mathbb{R}^{n\times r}_+$ and $Z \in \mathbb{R}^{p\times r}_+$ respectively.

2. Initialize X, Y.

(a) Nonnegative Matrix Factorization of the mean slice,

\[ \min \| A - XY^T \|_F^2, \tag{25} \]

where A is the mean of T across the 3rd dimension.

3. Iterative Tri-Alternating Minimization

(a) Fix T, X, Y and fit Z by solving a NMF problem in an alternating fashion.

(b) Fix T, X, Z, fit for Y,

(c) Fix T, Y, Z, fit for X.

The corresponding multiplicative updates are

\[ X_{i\rho} \leftarrow X_{i\rho} \frac{(T_{(m\times np)} C)_{i\rho}}{(X C^T C)_{i\rho} + \epsilon}, \quad C = (Z \odot Y), \tag{26} \]

\[ Y_{j\rho} \leftarrow Y_{j\rho} \frac{(T_{(n\times mp)} C)_{j\rho}}{(Y C^T C)_{j\rho} + \epsilon}, \quad C = (Z \odot X), \tag{27} \]

\[ Z_{k\rho} \leftarrow Z_{k\rho} \frac{(T_{(p\times mn)} C)_{k\rho}}{(Z C^T C)_{k\rho} + \epsilon}, \quad C = (Y \odot X). \tag{28} \]

Here $\epsilon$ is a small number like $10^{-9}$ that adds stability to the calculation and guards against introducing a negative number from numerical underflow.

If T is sparse, a simpler computation in the procedure above can be obtained. Each matricized version of T is a sparse matrix. The matrix C from each step should not be formed explicitly because it would be a large, dense matrix.
Instead, the product of a matricized T with C should be computed specially, exploiting the inherent Kronecker product structure in C so that only the required elements in C need to be computed and multiplied with the nonzero elements of T.
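The multiplicative updates (26)–(28) can be sketched for a small dense tensor as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, the factors start from random positive values instead of an NMF of the mean slice, the Y and Z updates are assumed to use the n × mp and p × mn unfoldings, and C is formed explicitly, which the text advises against when T is large and sparse.

```python
import numpy as np

def khatri_rao(A, B):
    """Columnwise Kronecker product; the row index of B varies fastest."""
    return (A[:, None, :] * B[None, :, :]).reshape(-1, A.shape[1])

def unfold(T, mode):
    """Matricize a 3-way array along `mode`; of the two remaining
    indices, the earlier one runs fastest over the columns."""
    first, second = [ax for ax in range(3) if ax != mode]
    return T.transpose(mode, second, first).reshape(T.shape[mode], -1)

def ntf_multiplicative(T, k, iters=200, eps=1e-9, seed=0):
    """Return nonnegative factors [X, Y, Z] with T ~ sum of k rank-one terms."""
    rng = np.random.default_rng(seed)
    # Assumption: random positive start (the text initializes X, Y by NMF).
    factors = [rng.random((dim, k)) + 0.1 for dim in T.shape]
    for _ in range(iters):
        for mode in (2, 1, 0):                      # fit Z, then Y, then X
            first, second = [factors[ax] for ax in range(3) if ax != mode]
            C = khatri_rao(second, first)           # e.g. Z (Khatri-Rao) Y for X
            numer = unfold(T, mode) @ C
            gram = (first.T @ first) * (second.T @ second)   # equals C^T C
            factors[mode] = factors[mode] * numer / (factors[mode] @ gram + eps)
    return factors

def rel_error(T, factors):
    """Relative Frobenius error of the rank-k reconstruction."""
    X, Y, Z = factors
    approx = np.einsum('ic,jc,lc->ijl', X, Y, Z)
    return np.linalg.norm(T - approx) / np.linalg.norm(T)

# Synthetic nonnegative rank-2 tensor of size 6 x 5 x 4.
rng = np.random.default_rng(1)
T = np.einsum('ic,jc,lc->ijl',
              rng.random((6, 2)), rng.random((5, 2)), rng.random((4, 2)))
err_start = rel_error(T, ntf_multiplicative(T, k=2, iters=1))
err_end = rel_error(T, ntf_multiplicative(T, k=2, iters=200))
```

Since every update multiplies a nonnegative entry by a nonnegative ratio, the factors stay nonnegative without any projection step, which is the practical appeal of this scheme.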

5 Some Applications of Nonnegativity Constraints

5.1 Support Vector Machines

Support vector machines were introduced by Vapnik and co-workers [13, 23], theoretically motivated by Vapnik-Chervonenkis theory (also known as VC theory [87, 88]). Support vector machines (SVMs) are a set of related supervised learning methods used for classification and regression. They belong to a family of generalized linear classifiers. They are based on the following idea: input points are mapped to a high dimensional feature space, where a separating hyperplane can be found. The algorithm is chosen in such a way as to maximize the distance from the closest patterns, a quantity that is called the margin. This is achieved by reducing the problem to a quadratic programming problem: minimize

\[ F(v) = \tfrac{1}{2} v^T A v + b^T v, \quad \text{subject to } v \ge 0. \tag{29} \]

Here we assume that the matrix A is symmetric and positive semidefinite. The problem (29) is then usually solved with optimization routines from numerical libraries. SVMs have demonstrated impressive performance on a number of real world problems such as optical character recognition and face detection.

We briefly review the problem of computing the maximum margin hyperplane in SVMs [87]. Let $\{(x_i, y_i)\}_{i=1}^{N}$ denote labeled examples with binary class labels $y_i = \pm 1$, and let $K(x_i, x_j)$ denote the kernel dot product between inputs. For brevity, we consider only the simple case where, in the high dimensional feature space, the classes are linearly separable and the hyperplane is required to pass through the origin.
In this case, the maximum margin hyperplane is obtained by minimizing the loss function:

\[ L(\alpha) = -\sum_i \alpha_i + \frac{1}{2} \sum_{ij} \alpha_i \alpha_j y_i y_j K(x_i, x_j), \tag{30} \]

subject to the nonnegativity constraints $\alpha_i \ge 0$. Let $\alpha^*$ denote the minimum of equation (30). The maximal margin hyperplane has normal vector $w = \sum_i \alpha_i^* y_i x_i$ and satisfies the margin constraints $y_i K(w, x_i) \ge 1$ for all examples in the training set.

The loss function in equation (30) is a special case of the nonnegative quadratic programming problem (29) with $A_{ij} = y_i y_j K(x_i, x_j)$ and $b_i = -1$. Thus, the multiplicative updates in the paper [79] are easily adapted to SVMs. This algorithm for training SVMs is known as Multiplicative Margin Maximization ($M^3$). The algorithm can be generalized to data that is not linearly separable and to separating hyperplanes that do not pass through the origin.

Many iterative algorithms have been developed for nonnegative quadratic programming in general and for SVMs as a special case. Benchmarking experiments have shown that $M^3$ is a feasible algorithm for small to moderately sized data sets. On the other hand, it does not converge as fast as leading subset methods for large data sets. Nevertheless, the extreme simplicity and convergence guarantees of $M^3$ make it a useful starting point for experimenting with SVMs.
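To make the reduction from (30) to (29) concrete, here is a small NumPy sketch on a toy data set. The update rule is not reproduced in this section; the code assumes the multiplicative rule commonly attributed to Sha, Saul and Lee, in which A is split into its positive and negative parts, $A = A^+ - A^-$, and each entry is rescaled by $\bigl(-b_i + \sqrt{b_i^2 + 4(A^+v)_i (A^-v)_i}\bigr) / \bigl(2(A^+v)_i\bigr)$. The function name, the four training points and the linear kernel are illustrative assumptions.

```python
import numpy as np

def nqp_multiplicative(A, b, iters=500, eps=1e-12):
    """Multiplicative updates for min 0.5 v^T A v + b^T v subject to v >= 0.

    Assumed update rule (see lead-in); eps only guards against 0/0.
    """
    A_pos = np.maximum(A, 0.0)     # positive part of A
    A_neg = np.maximum(-A, 0.0)    # magnitude of the negative part, A = A_pos - A_neg
    v = np.ones(len(b))            # any strictly positive start
    for _ in range(iters):
        a = A_pos @ v
        c = A_neg @ v
        v = v * (-b + np.sqrt(b * b + 4.0 * a * c)) / (2.0 * a + eps)
    return v

# Toy separable data, hyperplane through the origin, linear kernel K = X X^T.
X = np.array([[2.0, 0.0], [0.0, 2.0], [-2.0, 0.0], [0.0, -2.0]])
y = np.array([1.0, 1.0, -1.0, -1.0])
K = X @ X.T
A = np.outer(y, y) * K             # A_ij = y_i y_j K(x_i, x_j)
b = -np.ones(len(y))               # b_i = -1

alpha = nqp_multiplicative(A, b)
w = (alpha * y) @ X                # normal vector w = sum_i alpha_i y_i x_i
margins = y * (X @ w)              # y_i <w, x_i>, should be at least 1
```

For this symmetric toy problem every training point ends up on the margin. The same function also handles matrices with negative off-diagonal entries, where the $A^-$ term becomes active.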

5.2 Image Processing and Computer Vision

Digital images are represented as nonnegative matrix arrays, since pixel intensity values are nonnegative. It is sometimes desirable to process data sets of images represented by column vectors as composite objects in many articulations and poses, and sometimes as separated parts, for example in biometric identification applications such as face or iris recognition. It is suggested that the factorization in the linear model would enable the identification and classification of intrinsic “parts” that make up the object being imaged by multiple observations [19, 41, 51, 53]. More specifically, each column $x_j$ of a nonnegative matrix X now represents m pixel values of one image. The columns $w_i$ of W are basis elements in $\mathbb{R}^m$. The columns of H, belonging to $\mathbb{R}^k$, can be thought of as coefficient sequences representing the n images in the basis elements. In other words, the relationship

\[ x_j = \sum_{i=1}^{k} w_i h_{ij}, \tag{31} \]

can be interpreted as follows: there are standard parts $w_i$ in a variety of positions, and each image, represented as a vector $x_j$, is made by superposing these parts, which make up the factor W of basis elements, in specific ways determined by the mixing matrix H. Those parts, being images themselves, are necessarily nonnegative. The superposition coefficients, each part being present or absent, are also necessarily nonnegative.
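The parts-based model (31) can be illustrated with a short NumPy sketch. It is a hedged example rather than the method of any cited paper: the function `nmf` uses the matrix analogue of the multiplicative updates (26)–(28), which is an assumption here, and the 12-pixel "images" built from three non-overlapping parts are synthetic.

```python
import numpy as np

def nmf(X, k, iters=300, eps=1e-9, seed=0):
    """Approximate a nonnegative m x n matrix X by W @ H with W, H >= 0."""
    rng = np.random.default_rng(seed)
    m, n = X.shape
    W = rng.random((m, k)) + 0.1     # columns w_i: candidate parts
    H = rng.random((k, n)) + 0.1     # columns h_j: mixing coefficients per image
    for _ in range(iters):
        H = H * (W.T @ X) / (W.T @ W @ H + eps)
        W = W * (X @ H.T) / (W @ (H @ H.T) + eps)
    return W, H

# Three non-overlapping "parts" of a 12-pixel image, stored as columns.
parts = np.zeros((12, 3))
parts[0:4, 0] = 1.0
parts[4:8, 1] = 1.0
parts[8:12, 2] = 1.0

# Twenty images, each a nonnegative superposition of the parts, as in (31).
rng = np.random.default_rng(2)
mix = rng.random((3, 20))
X = parts @ mix

W, H = nmf(X, k=3)
rel_err = np.linalg.norm(X - W @ H) / np.linalg.norm(X)
```

Each column of `X` plays the role of one image $x_j$, and the recovered columns of `W` can be inspected to see whether they concentrate on the three pixel blocks.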
A related application to the identification of object materials from spectral reflectance data at different optical wavelengths has been investigated in [68, 69, 70].

As one of the most successful applications of image analysis and understanding, face recognition has received significant attention, especially during the past few years. Many papers, such as [9, 41, 51, 55, 64], have shown that Nonnegative Matrix Factorization (NMF) is a good method to obtain a representation of data using nonnegativity constraints. These constraints lead to a part-based representation because they allow only additive, not subtractive, combinations of the original data. Given an initial database expressed by an n × m matrix X, where each column is an n-dimensional nonnegative vector of the original database (m vectors), it is possible to find two new matrices W and H in order to approximate the original matrix

\[ X_{i\mu} \approx (WH)_{i\mu} = \sum_{a=1}^{k} W_{ia} H_{a\mu}. \tag{32} \]

The dimensions of the factorized matrices W and H are n × k and k × m, respectively. Usually, k is chosen so that (n + m)k < nm. Each column of matrix W contains a basis vector while each column of H contains the weights needed to approximate the corresponding column in X using the bases from W.

Other image processing work that uses nonnegativity constraints includes image restoration. Image restoration is the process of approximating an original image from an observed blurred and noisy image.
In image restoration, image formation is modeled as an integral equation of the first kind which, after discretization, results in a large scale linear system of the form

\[ Ax + \eta = b. \tag{33} \]

The vector x represents the true image, b is the blurred noisy copy of x, η models additive noise, and A is a large ill-conditioned matrix representing the blurring phenomena.

In the absence of information about the noise, we can model the image restoration problem as an NNLS problem,

\[ \min_x \tfrac{1}{2} \|Ax - b\|^2, \quad \text{subject to } x \ge 0. \tag{34} \]

Thus, we can use NNLS to solve this problem. Experiments show that enforcing a nonnegativity constraint can produce a much more accurate approximate solution, see e.g., [35, 43, 60, 77].

5.3 Text Mining

Assume that the textual documents are collected in an indexing matrix $Y = [y_{ij}] \in \mathbb{R}^{m\times n}$. Each document is represented by one column in Y. The entry $y_{ij}$ represents the weight of one particular term i in document j, where each term could be defined by just one single word or a string of phrases. To enhance discrimination between various documents and to improve retrieval effectiveness, a term-weighting scheme of the form

\[ y_{ij} = t_{ij}\, g_i\, d_j, \tag{35} \]

is usually used to define Y [10], where $t_{ij}$ captures the relative importance of term i in document j, $g_i$ weights the overall importance of term i in the entire set of documents, and $d_j = \bigl(\sum_{i=1}^{m} (t_{ij} g_i)^2\bigr)^{-1/2}$ is the scaling factor for normalization. The normalization by $d_j$ per document is necessary; otherwise one could artificially inflate the prominence of document j by padding it with repeated pages or volumes. After the normalization, the columns of Y are of unit length and usually nonnegative.

The indexing matrix contains a lot of information for retrieval.
In the context of latent semantic indexing (LSI) application [10, 37], for example, suppose a query represented by a row vector $q^T = [q_1, \ldots, q_m] \in \mathbb{R}^m$, where $q_i$ denotes the weight of term i in the query q, is submitted. One way to measure how the query q matches the documents is to calculate the row vector $s^T = q^T Y$ and rank the relevance of documents to q according to the scores in s.

The computation in the LSI application seems to be merely a vector-matrix multiplication. This is so only if Y is a "reasonable" representation of the relationship between documents and terms. In practice, however, the matrix Y is never exact. A major challenge in the field has been to represent the indexing matrix and the queries in a more compact form so as to facilitate the computation of the scores [25, 66]. The idea of representing Y by its nonnegative matrix factorization approximation seems plausible. In this context, the standard parts $w_i$ indicated in (31) may be interpreted as subcollections of some "general concepts" contained in these documents. Like images, each document can be thought of as a linear composition of these general concepts. The column-normalized factor matrix W itself is a term-concept indexing matrix.
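The term weighting (35) and the query scoring $s^T = q^T Y$ can be sketched in a few lines of NumPy. Everything specific in this example is an assumption made for illustration: the four-term vocabulary, the raw counts used for $t_{ij}$, the logarithmic global weight used for $g_i$, and the Euclidean normalization used for $d_j$ so that the columns of Y have unit length.

```python
import numpy as np

# Rows are terms, columns are documents; entries are raw term counts t_ij.
terms = ["matrix", "tensor", "image", "speech"]
t = np.array([[2.0, 0.0, 1.0],
              [1.0, 0.0, 0.0],
              [0.0, 3.0, 1.0],
              [0.0, 0.0, 2.0]])
n_docs = t.shape[1]

# Assumed global weight g_i: rarer terms count more (an idf-like choice).
doc_freq = np.count_nonzero(t, axis=1)
g = np.log(1.0 + n_docs / doc_freq)

# Per-document scaling d_j so that every column of Y has unit Euclidean length.
weighted = t * g[:, None]
d = 1.0 / np.linalg.norm(weighted, axis=0)
Y = weighted * d[None, :]          # y_ij = t_ij * g_i * d_j, as in (35)

# Query containing only the term "image"; scores s^T = q^T Y.
q = np.array([0.0, 0.0, 1.0, 0.0])
s = q @ Y
ranking = np.argsort(-s)           # document indices, most relevant first
```

Here the document that contains only the query term ranks first, the mixed document second, and the document without the term last. With a factorization Y ≈ WH, the same scores could be approximated as $(q^T W) H$, which is cheaper when k is small.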

5.4 Environmetrics and Chemometrics

In the air pollution research community, one observational technique makes use of the ambient data and source profile data to apportion sources or source categories [39, 44, 75]. The fundamental principle in this model is that mass conservation can be assumed and a mass balance analysis can be used to identify and apportion sources of airborne particulate matter in the atmosphere. For example, it might be desirable to determine a large number of chemical constituents such as elemental concentrations in a number of samples. The relationships between p sources which contribute m chemical species to n samples lead to a mass balance equation

\[ y_{ij} = \sum_{k=1}^{p} a_{ik} f_{kj}, \tag{36} \]

where $y_{ij}$ is the elemental concentration of the ith chemical measured in the jth sample, $a_{ik}$ is the gravimetric concentration of the ith chemical in the kth source, and $f_{kj}$ is the airborne mass concentration that the kth source has contributed to the jth sample. In a typical scenario, only values of $y_{ij}$ are observable, whereas neither the sources are known nor the compositions of the local particulate emissions are measured. Thus, a critical question is to estimate the number p, the compositions $a_{ik}$, and the contributions $f_{kj}$ of the sources. Tools that have been employed to analyze the linear model include principal component analysis, factor analysis, cluster analysis, and other multivariate statistical techniques. In this receptor model, however, there is a physical constraint imposed upon the data. That is, the source compositions $a_{ik}$ and the source contributions $f_{kj}$ must all be nonnegative.
Theidentification and apportionment problems thus become a nonnegative matrix factorizationproblem for the matrix Y.5.5 Speech RecognitionStochastic language model<strong>in</strong>g plays a central role <strong>in</strong> large vocabulary speech recognition,where it is usually implemented us<strong>in</strong>g the n-gram paradigm. In a typical application, thepurpose of an n-gram language model may be to constra<strong>in</strong> the acoustic analysis, guide thesearch through various (partial) text hypotheses, and/or contribute to the determ<strong>in</strong>ation ofthe f<strong>in</strong>al transcription.In language model<strong>in</strong>g one has to model the probability of occurrence of a predicted wordgiven its history P r(w n |H). N-gram based Language Models have been used successfully <strong>in</strong>Large Vocabulary Automatic Speech Recognition Systems. In this model, the word historyconsists of the N − 1 immediately preced<strong>in</strong>g words. Particularly, tri-gram language models(P r(w n |w n−1 ; w n−2 )) offer a good compromise between model<strong>in</strong>g power and complexity. Amajor weakness of these models is the <strong>in</strong>ability to model word dependencies beyond the spanof the n-grams. As such, n-gram models have limited semantic model<strong>in</strong>g ability. Alternatemodels have been proposed with the aim of <strong>in</strong>corporat<strong>in</strong>g long term dependencies <strong>in</strong>to themodel<strong>in</strong>g process. Methods such as word trigger models, high-order n-grams, cache models,etc., have been used <strong>in</strong> comb<strong>in</strong>ation with standard n-gram models.One such method, a Latent Semantic <strong>Analysis</strong> based model has been proposed [2]. Aword-document occurrence matrix X m×n is formed (m = size of the vocabulary, n = number22

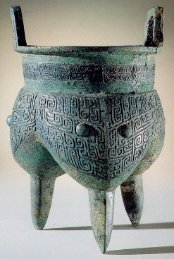

of documents), us<strong>in</strong>g a tra<strong>in</strong><strong>in</strong>g corpus explicitly segmented <strong>in</strong>to a collection of documents.A S<strong>in</strong>gular Value Decomposition X = USV T is performed to obta<strong>in</strong> a low dimensional l<strong>in</strong>earspace S, which is more convenient to perform tasks such as word and document cluster<strong>in</strong>g,us<strong>in</strong>g an appropriate metric. Bellegarda [2] gave the detail<strong>in</strong>g explanation about this method.In the paper [63], Novak and Mammone <strong>in</strong>troduce a new method with NMF. In additionto the non-negativity, another property of this factorization is that the columns of W tend torepresent groups of associated words. This property suggests that the columns of W can be<strong>in</strong>terpreted as conditional word probability distributions, s<strong>in</strong>ce they satisfy the conditionsof a probability distribution by the def<strong>in</strong>ition. Thus the matrix W describes a hiddendocument space D = {d j } by provid<strong>in</strong>g conditional distributions W = P(w i |d j ). The taskis to f<strong>in</strong>d a matrix W, given the word document count matrix X. The second term of thefactorization, matrix H, reflects the properties of the explicit segmentation of the tra<strong>in</strong><strong>in</strong>gcorpus <strong>in</strong>to <strong>in</strong>dividual documents. This <strong>in</strong>formation is not of <strong>in</strong>terest <strong>in</strong> the context ofLanguage Model<strong>in</strong>g. They provide an experimental result where the NMF method results<strong>in</strong> a perplexity reduction of 16% on a database of biology lecture transcriptions.5.6 Spectral Unmix<strong>in</strong>g by NMF and NTFHere we discuss applications of NMF and NTF to numerical methods for the classification ofremotely sensed objects. 
We consider the identification of space satellites from non-imaging data such as spectra of visible and NIR range, with different spectral resolutions and in the presence of noise and atmospheric turbulence (see, e.g., [68] or [69, 70]). This is the research area of space object identification (SOI).

A primary goal of using remote sensing image data is to identify materials present in the object or scene being imaged and quantify their abundances, i.e., to determine concentrations of different signature spectra present in pixels. Also, due to the large quantity of data usually encountered in hyperspectral datasets, compressing the data is becoming increasingly important. In this section we discuss the use of NMF and NTF to reach these major goals: material identification, material abundance estimation, and data compression.

For safety and other considerations in space, non-resolved space object characterization is an important component of Space Situational Awareness. The key problem in non-resolved space object characterization is to use spectral reflectance data to gain knowledge regarding the physical properties (e.g., function, size, type, status change) of space objects that cannot be spatially resolved with telescope technology. Such objects may include geosynchronous satellites, rocket bodies, platforms, space debris, or nano-satellites. Figure 3 shows an artist's rendition of a JSAT type satellite in a 36,000 kilometer high synchronous orbit around the Earth.
Even with adaptive optics capabilities, this object is generally not resolvable using ground-based telescope technology.

Spectral reflectance data of a space object can be gathered using ground-based spectrometers and contains essential information regarding the make up or types of materials comprising the object. Different materials such as aluminum, mylar, paint, etc. possess characteristic wavelength-dependent absorption features, or spectral signatures, that mix together in the spectral reflectance measurement of an object. Figure 4 shows spectral signatures of four materials typically used in satellites, namely, aluminum, mylar, white paint, and solar cell.

Figure 3: Artist rendition of a JSAT satellite. Image obtained from the Boeing Satellite Development Center.

[Figure 4 plots: four panels titled Aluminum, Mylar, Solar Cell and White Paint; horizontal axis: Wavelength, microns (0 to 2).]

Figure 4: Laboratory spectral signatures for aluminum, mylar, solar cell, and white paint. For details see [70].

The objective is then, given a set of spectral measurements or traces of an object, to determine i) the type of constituent materials and ii) the proportional amount in which these materials appear. The first problem involves the detection of material spectral signatures or endmembers from the spectral data. The second problem involves the computation of corresponding proportional amounts or fractional abundances. This is known as the spectral unmixing problem in the hyperspectral imaging community.

Recall that in Nonnegative Matrix Factorization (NMF), an m × n (nonnegative) mixed data matrix X is approximately factored into a product of two nonnegative rank-k matrices, with k small compared to m and n, X ≈ WH. This factorization has the advantage that W and H can provide a physically realizable representation of the mixed data, see e.g. [68]. Two sets of factors, one as endmembers and the other as fractional abundances, are optimally fitted simultaneously. And due to the reduced sizes of the factors, data compression, spectral signature identification of constituent materials, and determination of their corresponding fractional abundances can be fulfilled at the same time.

Figure 5: A blurred and noisy simulated hyperspectral image above the original simulated image of the Hubble Space Telescope representative of the data collected by the Maui ASIS system.

Spectral reflectance data of a space object can be gathered using ground-based spectrometers, such as the SPICA system located on the 1.6 meter Gemini telescope and the ASIS system located on the 3.67 meter telescope at the Maui Space Surveillance Complex (MSSC), and contains essential information regarding the make up or types of materials comprising the object. Different materials, such as aluminum, mylar, paint, plastics and solar cell, possess characteristic wavelength-dependent absorption features, or spectral signatures, that mix together in the spectral reflectance measurement of an object. A new spectral imaging sensor, capable of collecting hyperspectral images of space objects, has been installed on the 3.67 meter Advanced Electro-Optical System (AEOS) at the MSSC. The AEOS Spectral Imaging Sensor (ASIS) is used to collect adaptive optics compensated spectral images of astronomical objects and satellites. See Figure 5 for a simulated hyperspectral image of the Hubble Space Telescope similar to that collected by ASIS.

In [92] and [93] Zhang, et al. develop NTF methods for identifying space objects using hyperspectral data.
Illustrations of material identification, material abundance estimation, and data compression are demonstrated for data similar to that shown in Figure 5.

6 Summary

We have outlined some of what we consider the more important and interesting problems for enforcing nonnegativity constraints in numerical analysis. Special emphasis has been placed on nonnegativity constraints in least squares computations in numerical linear algebra and in nonlinear optimization. Techniques involving nonnegative low-rank matrix and tensor factorizations and their many applications were also given. This report also includes an effort toward a literature survey of the various algorithms and applications of nonnegativity constraints in numerical analysis. As always, such an overview is certainly incomplete, and we apologize for omissions. Hopefully, this work will inform the reader about the importance of nonnegativity constraints in many problems in numerical analysis, while pointing toward the many advantages of enforcing nonnegativity in practical applications.

References[1] S. Bellavia, M. Macconi, and B. Mor<strong>in</strong>i, An <strong>in</strong>terior po<strong>in</strong>t newton-like method for nonnegativeleast squares problems with degenerate solution, <strong>Numerical</strong> L<strong>in</strong>ear Algebra withApplications, 13, pp. 825–846, 2006.[2] J. R. Bellegarda, A multispan language modell<strong>in</strong>g framework for large vocabulary speechrecognition, IEEE Transactions on Speech and Audio Process<strong>in</strong>g, September 1998, Vol.6, No. 5, pp. 456–467.[3] P. Belhumeur, J. Hespanha, and D. Kriegman, Eigenfaces vs. Fisherfaces: RecognitionUs<strong>in</strong>g Class Specific L<strong>in</strong>ear Projection, IEEE PAMI, Vol. 19, No. 7, 1997.[4] M. H. van Benthem, M. R. Keenan, Fast algorithm for the solution of large-scale nonnegativityconstra<strong>in</strong>ed least squares problems, Journal of Chemometrics, Vol. 18, pp.441-450, 2004.[5] M. S. Bartlett, J. R. Movellan, and T. J. Sejnowski, Face recognition by <strong>in</strong>dependentcomponent analysis, IEEE Trans. Neural Networks, Vol. 13, No. 6, pp. 1450–1464, 2002.[6] R. B. Bapat, T. E. S. Raghavan, Nonnegative Matrices and Applications, CambridgeUniversity Press, UK, 1997.[7] A. Berman and R. Plemmons, Rank factorizations of nonnegative matrices, Problemsand Solutions, 73-14 (Problem), SIAM Rev., Vol. 15:655, 1973.[8] A. Berman, R. Plemmons, Nonnegative Matrices <strong>in</strong> the Mathematical Sciences, AcademicPress, NY, 1979. Revised version <strong>in</strong> SIAM Classics <strong>in</strong> Applied Mathematics,Philadelphia, 1994.[9] M. Berry, M Browne, A. Langville, P Pauca, and R. Plemmons, Algorithmsand applications for approximate nonnegative matrix factorization, ComputationalStatistics and Data <strong>Analysis</strong>, Vol. 52, pp. 155-173, 2007. Prepr<strong>in</strong>t available athttp://www.wfu.edu/∼plemmons[10] M. W. Berry, Computational Information Retrieval, SIAM, Philadelphia, 2000.[11] D. 
Bertsekas, Nonl<strong>in</strong>ear Programm<strong>in</strong>g, Athena Scientific, Belmont, MA.[12] M. Bierlaire, Ph. L. To<strong>in</strong>t, and D. Tuyttens, On iterative algorithms for l<strong>in</strong>ear leastsquares problems with bound constra<strong>in</strong>ts, L<strong>in</strong>ear Algebra and its Applications, Vol. 143,pp. 111-143, 1991.[13] B. Boser, I. Guyon, and V. Vapnik, A tra<strong>in</strong><strong>in</strong>g algorithm for optimal marg<strong>in</strong> classifiers,Fifth Annual Workshop on Computational Learn<strong>in</strong>g Theory, ACM Press, 1992.[14] D. S. Briggs, High fidelity deconvolution of moderately resolved radio sources, Ph.D.thesis, New Mexico Inst. of M<strong>in</strong><strong>in</strong>g & Technology, 1995.26

[15] R. Bro, S. D. Jong, A fast non-negativity-constra<strong>in</strong>ed least squares algorithm, Journalof Chemometrics, Vol. 11, No. 5, pp. 393-401, 1997.[16] M. Catral, L. Han, M. Neumann, and R.Plemmons, On reduced rank nonnegative matrixfactorization for symmetric nonnegative matrices, L<strong>in</strong>. Alg. Appl., 393:107–126, 2004.[17] J. Cantarella, M. Piatek, Tsnnls: A solver for large sparse least squares problems withnon-negative variables, ArXiv Computer Science e-pr<strong>in</strong>ts, 2004.[18] R.Chellappa, C. Wilson, and S. Sirohey, Human and Mach<strong>in</strong>e Recognition of Faces: ASurvey, Proc, IEEE, Vol. 83, No. 5, pp. 705–740, 1995.[19] X. Chen, L. Gu, S. Z. Li, and H. J. Zhang, Learn<strong>in</strong>g representative local features forface detection, IEEE Conference on Computer Vision and Pattern Recognition, Vol.1,pp. 1126–1131, 2001.[20] M. T. Chu, F. Diele, R. Plemmons, S. Ragni, Optimality, computation,and <strong>in</strong>terpretation of nonnegative matrix factorizations, prepr<strong>in</strong>t. Available at:http://www.wfu.edu/∼plemmons[21] M. Chu and R.J. Plemmons, Nonnegative matrix factorization and applications, Appeared<strong>in</strong> IMAGE, Bullet<strong>in</strong> of the International L<strong>in</strong>ear Algebra Society, Vol. 34, pp.2-7, July 2005. Available at: http://www.wfu.edu/∼plemmons[22] I. B. Ciocoiu, H. N. Cost<strong>in</strong>, Localized versus locality-preserv<strong>in</strong>g subspace projectionsfor face recognition, EURASIP Journal on Image and Video Process<strong>in</strong>g Volume 2007,Article ID 17173.[23] C. Cortes, V. Vapnik, Support Vector networks, Mach<strong>in</strong>e Learn<strong>in</strong>g, Vol. 20, pp. 273 -297, 1995.[24] A. Dax, On computational aspects of bounded l<strong>in</strong>ear least squares problems, ACM Trans.Math. Softw. Vol. 17, pp. 64-73, 1991.[25] I. S. Dhillon, D. M. 
Modha, Concept decompositions for large sparse text data us<strong>in</strong>gcluster<strong>in</strong>g, Mach<strong>in</strong>e Learn<strong>in</strong>g J., Vol. 42, pp. 143–175, 2001.[26] C. D<strong>in</strong>g and X. He, and H. Simon, On the equivalence of nonnegative matrix factorizationand spectral cluster<strong>in</strong>g, Proceed<strong>in</strong>gs of the Fifth SIAM International Conference onData M<strong>in</strong><strong>in</strong>g, Newport Beach, CA, 2005.[27] B. A. Draper, K. Baek, M. S. Bartlett, and J. R. Beveridge, Recogniz<strong>in</strong>g faces with PCAand ICA, Computer Vision and Image Understand<strong>in</strong>g, Vol. 91, No. 1, pp. 115–137, 2003.[28] N. K. M. Faber, R. Bro, and P. K. Hopke, Recent developments <strong>in</strong> CANDE-COMP/PARAFAC algorithms: a critical review, Chemometr. Intell. Lab., Vol. 65, No.1, pp. 119–137, 2003.27

[29] V. Franc, V. Hlaváč, and M. Navara, Sequential coordinate-wise algorithm for nonnegative least squares problem, Research report CTU-CMP-2005-06, Center for Machine Perception, Czech Technical University, Prague, Czech Republic, February 2005.
[30] P. E. Gill, W. Murray and M. H. Wright, Practical Optimization, Academic Press, London, 1981.
[31] A. A. Giordano, F. M. Hsu, Least Square Estimation with Applications to Digital Signal Processing, John Wiley & Sons, 1985.
[32] L. Grippo, M. Sciandrone, On the convergence of the block nonlinear Gauss-Seidel method under convex constraints, Oper. Res. Lett., Vol. 26, No. 3, pp. 127–136, 2000.
[33] D. Guillamet, J. Vitrià, Classifying faces with non-negative matrix factorization, accepted, CCIA 2002, Castell de la Plana, Spain.
[34] D. Guillamet, J. Vitrià, Non-negative matrix factorization for face recognition, Lecture Notes in Computer Science, Vol. 2504, pp. 336–344, 2002.
[35] M. Hanke, J. G. Nagy and C. R. Vogel, Quasi-Newton approach to nonnegative image restorations, Linear Algebra Appl., Vol. 316, pp. 223–236, 2000.
[36] R. A. Harshman, Foundations of the PARAFAC procedure: models and conditions for an "explanatory" multi-modal factor analysis, UCLA Working Papers in Phonetics, Vol. 16, pp. 1–84, 1970.
[37] T. Hastie, R. Tibshirani, and J. Friedman, The Elements of Statistical Learning: Data Mining, Inference, and Prediction, Springer-Verlag, New York, 2001.
[38] P. K. Hopke, Receptor Modeling in Environmental Chemistry, Wiley and Sons, New York, 1985.
[39] P. K. Hopke, Receptor Modeling for Air Quality Management, Elsevier, Amsterdam, Netherlands, 1991.
[40] P. O. Hoyer, Nonnegative sparse coding, in Neural Networks for Signal Processing XII, Proc. IEEE Workshop on Neural Networks for Signal Processing, Martigny, 2002.
[41] P. Hoyer, Nonnegative matrix factorization with sparseness constraints, J. of Mach. Learning Res., Vol. 5, pp. 1457–1469, 2004.
[42] C. G. Khatri, C. R. Rao, Solutions to some functional equations and their applications to characterization of probability distributions, Sankhya, Vol. 30, pp. 167–180, 1968.
[43] B. Kim, Numerical optimization methods for image restoration, Ph.D. thesis, Stanford University, 2002.
[44] E. Kim, P. K. Hopke, E. S. Edgerton, Source identification of Atlanta aerosol by positive matrix factorization, J. Air Waste Manage. Assoc., Vol. 53, pp. 731–739, 2003.

[45] H. Kim, H. Park, Sparse non-negative matrix factorizations via alternating non-negativity-constrained least squares for microarray data analysis, Bioinformatics, Vol. 23, No. 12, pp. 1495–1502, 2007.
[46] D. Kim, S. Sra, and I. S. Dhillon, A new projected quasi-Newton approach for the nonnegative least squares problem, Dept. of Computer Sciences, The Univ. of Texas at Austin, Technical Report # TR-06-54, Dec. 2006.
[47] D. Kim, S. Sra, and I. S. Dhillon, Fast Newton-type methods for the least squares nonnegative matrix approximation problem, to appear in Proceedings of SIAM Conference on Data Mining, 2007.
[48] S. Kullback and R. Leibler, On information and sufficiency, Annals of Mathematical Statistics, Vol. 22, pp. 79–86, 1951.
[49] C. L. Lawson and R. J. Hanson, Solving Least Squares Problems, Prentice-Hall, 1987.
[50] A. Langville, C. Meyer, R. Albright, J. Cox, D. Duling, Algorithms, initializations, and convergence for the nonnegative matrix factorization, preprint, 2006.
[51] D. Lee and H. Seung, Learning the parts of objects by non-negative matrix factorization, Nature, Vol. 401, pp. 788–791, 1999.
[52] D. Lee and H. Seung, Algorithms for nonnegative matrix factorization, Advances in Neural Information Processing Systems, Vol. 13, pp. 556–562, 2001.
[53] S. Z. Li, X. W. Hou and H. J. Zhang, Learning spatially localized, parts-based representation, IEEE Conference on Computer Vision and Pattern Recognition, pp. 1–6, 2001.
[54] C. J. Lin, Projected gradient methods for non-negative matrix factorization, Information and Support Services Technical Report ISSTECH-95-013, Department of Computer Science, National Taiwan University.
[55] C. J. Lin, Projected gradient methods for non-negative matrix factorization, to appear in Neural Computation, 2007.
[56] W. Liu, J. Yi, Existing and new algorithms for nonnegative matrix factorization, report, University of Texas at Austin, 2003.
[57] A. Mazer, M. Martin, M. Lee and J. Solomon, Image processing software for imaging spectrometry data analysis, Remote Sensing of Environment, Vol. 24, pp. 201–220, 1988.
[58] P. Matstoms, snnls: a Matlab toolbox for solving sparse linear least squares problems with nonnegativity constraints by an active set method, 2004. Available at: http://www.math.liu.se/~milun/sls/
[59] J. J. Moré, G. Toraldo, On the solution of large quadratic programming problems with bound constraints, SIAM Journal on Optimization, Vol. 1, No. 1, pp. 93–113, 1991.

[60] J. G. Nagy, Z. Strakoš, Enforcing nonnegativity in image reconstruction algorithms, in Mathematical Modeling, Estimation, and Imaging, 4121, David C. Wilson et al., eds., pp. 182–190, 2000.
[61] P. Niyogi, C. Burges, P. Ramesh, Distinctive feature detection using support vector machines, in Proceedings of ICASSP-99, pp. 425–428, 1999.
[62] J. Nocedal, S. Wright, Numerical Optimization, Springer, Berlin, 2006.
[63] M. Novak, R. Mammone, Use of non-negative matrix factorization for language model adaptation in a lecture transcription task, IEEE Workshop on ASRU 2001, pp. 190–193, 2001.
[64] P. Paatero and U. Tapper, Positive matrix factorization: a nonnegative factor model with optimal utilization of error estimates of data values, Environmetrics, Vol. 5, pp. 111–126, 1994.
[65] P. Paatero, The multilinear engine – a table driven least squares program for solving multilinear problems, including the n-way parallel factor analysis model, J. Comput. Graphical Statist., Vol. 8, No. 4, pp. 854–888, 1999.
[66] H. Park, M. Jeon, J. B. Rosen, Lower dimensional representation of text data in vector space based information retrieval, in Computational Information Retrieval, ed. M. Berry, Proc. Comput. Inform. Retrieval Conf., SIAM, pp. 3–23, 2001.
[67] V. P. Pauca, F. Shahnaz, M. W. Berry, R. J. Plemmons, Text mining using nonnegative matrix factorizations, in Proc. SIAM Inter. Conf. on Data Mining, Orlando, FL, April 2004.
[68] P. Pauca, J. Piper, and R. Plemmons, Nonnegative matrix factorization for spectral data analysis, Lin. Alg. Applic., Vol. 416, Issue 1, pp. 29–47, 2006.
[69] P. Pauca, J. Piper, R. Plemmons, M. Giffin, Object characterization from spectral data using nonnegative factorization and information theory, Proc. AMOS Technical Conf., Maui, HI, September 2004. Available at: http://www.wfu.edu/~plemmons
[70] P. Pauca, R. Plemmons, M. Giffin and K. Hamada, Unmixing spectral data using nonnegative matrix factorization, Proc. AMOS Technical Conference, Maui, HI, September 2004. Available at: http://www.wfu.edu/~plemmons
[71] M. Powell, An efficient method for finding the minimum of a function of several variables without calculating derivatives, Comput. J., Vol. 7, pp. 155–162, 1964.
[72] M. Powell, On search directions for minimization, Math. Programming, Vol. 4, pp. 193–201, 1973.
[73] E. Polak, Computational Methods in Optimization: A Unified Approach, Academic Press, New York.

[74] L. F. Portugal, J. J. Judice, and L. N. Vicente, A comparison of block pivoting and interior-point algorithms for linear least squares problems with nonnegative variables, Mathematics of Computation, Vol. 63, No. 208, pp. 625–643, 1994.
[75] Z. Ramadan, B. Eickhout, X. Song, L. M. C. Buydens, P. K. Hopke, Comparison of positive matrix factorization and multilinear engine for the source apportionment of particulate pollutants, Chemometrics and Intelligent Laboratory Systems, Vol. 66, pp. 15–28, 2003.
[76] R. Ramanath, W. Snyder, and H. Qi, Eigenviews for object recognition in multispectral imaging systems, 32nd Applied Imagery Pattern Recognition Workshop, Washington D.C., pp. 33–38, 2003.
[77] M. Rojas, T. Steihaug, Large-scale optimization techniques for nonnegative image restorations, Proceedings of SPIE, Vol. 4791, pp. 233–242, 2002.
[78] K. Schittkowski, The numerical solution of constrained linear least-squares problems, IMA Journal of Numerical Analysis, Vol. 3, pp. 11–36, 1983.
[79] F. Sha, L. K. Saul, D. D. Lee, Multiplicative updates for large margin classifiers, Technical Report MS-CIS-03-12, Department of Computer and Information Science, University of Pennsylvania, 2003.
[80] A. Shashua and T. Hazan, Non-negative tensor factorization with applications to statistics and computer vision, Proceedings of the 22nd International Conference on Machine Learning, Bonn, Germany, pp. 792–799, 2005.
[81] A. Shashua and A. Levin, Linear image coding for regression and classification using the tensor-rank principle, Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2001.
[82] N. Smith, M. Gales, Speech recognition using SVMs, in Advances in Neural Information Processing Systems, Vol. 14, MIT Press, Cambridge, MA, 2002.
[83] L. Shure, Brief History of Nonnegative Least Squares in MATLAB, blog available at: http://blogs.mathworks.com/loren/2006/
[84] G. Tomasi, R. Bro, PARAFAC and missing values, Chemometr. Intell. Lab., Vol. 75, No. 2, pp. 163–180, 2005.
[85] M. A. Turk, A. P. Pentland, Eigenfaces for recognition, J. Cognitive Neuroscience, Vol. 3, No. 1, pp. 71–86, 1991.
[86] M. A. Turk, A. P. Pentland, Face recognition using eigenfaces, Proc. IEEE Conference on Computer Vision and Pattern Recognition, Maui, Hawaii, 1991.
[87] V. Vapnik, Statistical Learning Theory, Wiley, 1998.
[88] V. Vapnik, The Nature of Statistical Learning Theory, Springer-Verlag, 1999.

[89] R. S. Varga, Matrix Iterative Analysis, Prentice-Hall, Englewood Cliffs, NJ, 1962.
[90] W. Zangwill, Minimizing a function without calculating derivatives, Comput. J., Vol. 10, pp. 293–296, 1967.
[91] P. Zhang, H. Wang, P. Pauca and R. Plemmons, Tensor methods for space object identification using hyperspectral data, preprint, 2007.
[92] P. Zhang, H. Wang, R. Plemmons, and P. Pauca, Spectral unmixing using nonnegative tensor factorization, Proc. ACM, March 2007.
[93] P. Zhang, H. Wang, P. Pauca and R. Plemmons, Tensor methods for space object identification using hyperspectral data, preprint, 2007.
[94] W. Zhao, R. Chellappa, P. J. Phillips and A. Rosenfeld, Face recognition: a literature survey, ACM Computing Surveys, Vol. 35, No. 4, pp. 399–458, 2003.