
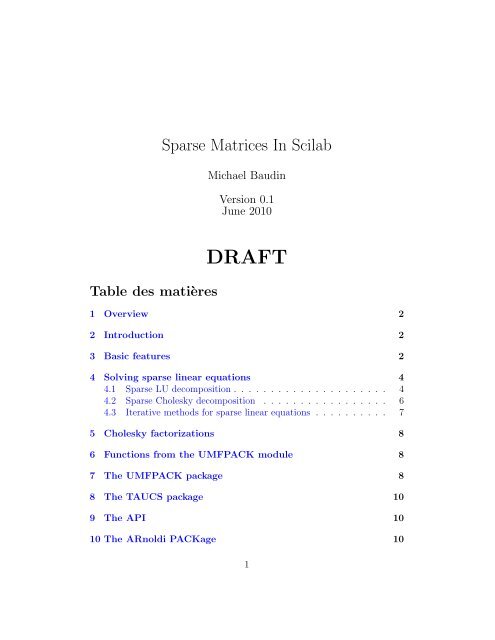

Sparse Matrices In Scilab
Michael Baudin
Version 0.1
June 2010
DRAFT

Contents
1 Overview
2 Introduction
3 Basic features
4 Solving sparse linear equations
4.1 Sparse LU decomposition
4.2 Sparse Cholesky decomposition
4.3 Iterative methods for sparse linear equations
5 Cholesky factorizations
6 Functions from the UMFPACK module
7 The UMFPACK package
8 The TAUCS package
9 The API
10 The ARnoldi PACKage
11 The Matrix Market module
Bibliography

1 Overview

The goal of this document is to present the management of sparse matrices in Scilab.

2 Introduction

In numerical analysis, a sparse matrix is a matrix populated primarily with zeros [5]. Huge sparse matrices often appear in science or engineering when solving partial differential equations.

When storing and manipulating sparse matrices on a computer, it is beneficial and often necessary to use specialized algorithms and data structures that take advantage of the sparse structure of the matrix. Operations using standard dense matrix structures and algorithms are slow and consume large amounts of memory when applied to large sparse matrices. Sparse data is by nature easily compressed, and this compression almost always results in significantly lower storage requirements. Indeed, some very large sparse matrices are infeasible to manipulate with the standard dense algorithms.

Scilab provides several features to manage sparse matrices and perform the usual linear algebra operations on them. These operations include the basic linear algebra operations such as addition, dot product, transpose and the matrix-vector product. For these basic operations, a script is written in the same way whether it manages a dense matrix or a sparse matrix.

Higher level operations include solving linear systems of equations, computing some of their eigenvalues or computing some decompositions (e.g. Cholesky). The usual functions, such as the lu or chol functions, do not work with sparse matrices and special sparse functions must be used.

3 Basic features

Figure 1 presents several functions to create and manage sparse matrices.

    sp2adj        converts sparse matrix into adjacency form
    speye         sparse identity matrix
    spones        sparse matrix
    sprand        sparse random matrix
    spzeros       sparse zero matrix
    full          sparse to full matrix conversion
    sparse        sparse matrix definition
    mtlb_sparse   convert sparse matrix
    nnz           number of non zero entries in a matrix
    spcompack     converts a compressed adjacency representation
    spget         retrieves entries of sparse matrix

Figure 1 – Basic features for sparse matrices.

In the following session, we use the sprand function to create a 100 × 1000 sparse matrix with density 0.001. The density parameter is such that the number of nonzero entries in the sparse matrix is approximately equal to 100 · 1000 · 0.001 = 100. Then we use the size function to compute the size of the matrix. Finally, we compute the number of nonzero entries with the nnz function.

    -->A = sprand(100,1000,0.001);
    -->size(A)
     ans  =
        100.    1000.
    -->nnz(A)
     ans  =
        100.

The sparse and full functions work as complementary functions. Indeed, the sparse function converts a full matrix into a sparse one, while the full function converts a sparse matrix into a full one.

In the following session, we create a 4 × 4 dense matrix with testmatrix("hilb",4). In Scilab, this call returns the inverse of the Hilbert matrix, which is why the entries displayed below are integers. Then we use the sparse function to convert it into a sparse matrix. Scilab then displays all the nonzero entries of the matrix one at a time. Finally, we use the full function to convert the sparse matrix into a dense one.

    -->A = testmatrix("hilb",4)
     A  =
        16.    - 120.     240.   - 140.
      - 120.    1200.  - 2700.    1680.

handle to the factors h and the rank rk of the matrix. The variable h has no direct use for the user. In fact, it represents a memory location where Scilab has stored the actual factors. The purpose of the variable h is to be an input argument of the lusolve, luget and ludel functions. In the script, we pass h and the right-hand side b to the lusolve function, which produces the solution x of the equation Ax = b. Finally, we delete the LU factors with the ludel function.

    non_zeros = [1,2,3,4];
    rows_cols = [1,1;2,2;3,3;4,4];
    spA = sparse(rows_cols, non_zeros)
    [h,rk] = lufact(spA);
    b = [1 1 1 1]';
    x = lusolve(h,b)
    ludel(h);

The previous script produces the following output.

    -->non_zeros = [1,2,3,4];
    -->rows_cols = [1,1;2,2;3,3;4,4];
    -->spA = sparse(rows_cols, non_zeros)
     spA  =
    (    4,    4) sparse matrix
    (    1,    1)        1.
    (    2,    2)        2.
    (    3,    3)        3.
    (    4,    4)        4.
    -->[h,rk] = lufact(spA);
    -->b = [1 1 1 1]';
    -->x = lusolve(h,b)
     x  =
        1.
        0.5
        0.3333333
        0.25
    -->ludel(h);

4.2 Iterative methods for sparse linear equations

The pcg function is a preconditioned conjugate gradient algorithm for symmetric positive definite matrices. It can manage dense or sparse matrices, but is mainly designed for large sparse systems of linear equations. It is based on a Scilab port of the Matlab scripts provided in [1].
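To give an idea of what a conjugate gradient iteration does, here is a minimal sketch of the unpreconditioned method. It is not the code of the pcg function: the function name cg_sketch, its arguments and the small test matrix are assumptions made for illustration, and the loop omits the preconditioner and the safeguards that a production solver would include.

```scilab
// Minimal sketch of the (unpreconditioned) conjugate gradient iteration
// for a symmetric positive definite matrix A.
// cg_sketch is a hypothetical helper, not a Scilab function.
function [x, relres, k] = cg_sketch(A, b, tol, maxIter)
    x = zeros(size(b, 1), 1);   // start from the zero vector
    r = b - A * x;              // initial residual
    p = r;                      // first search direction
    rho = r' * r;               // squared norm of the residual
    nb = norm(b);
    relres = sqrt(rho) / nb;    // relative residual norm
    k = 0;
    while relres > tol & k < maxIter
        q = A * p;              // only a matrix-vector product is needed
        alpha = rho / (p' * q); // step length along p
        x = x + alpha * p;
        r = r - alpha * q;
        rhoNew = r' * r;
        p = r + (rhoNew / rho) * p;  // next A-conjugate direction
        rho = rhoNew;
        relres = sqrt(rho) / nb;
        k = k + 1;
    end
endfunction

// Small symmetric positive definite test case (illustrative values)
A = sparse([4 1 0; 1 3 0; 0 0 2]);
b = [1; 2; 3];
[x, relres, k] = cg_sketch(A, b, 1d-10, 50);
disp(norm(A * x - b));
```

The matrix A enters the loop only through the product A * p, which is why the method suits large sparse matrices.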

In the following example, we define a well-conditioned 10 × 10 dense matrix A and a right-hand side b made of ones. We use the sparse function to convert the A matrix into the sparse matrix Asparse. We finally use the pcg function on the sparse matrix Asparse in order to solve the equations Ax = b. This produces the solution x, a status flag fail, the relative residual norm err, the number of iterations iter and the vector of the relative residual norms res.

    A = [ 94   0   0   0   0  28   0   0  32   0
           0  59  13   5   0   0   0  10   0   0
           0  13  72  34   2   0   0   0   0  65
           0   5  34 114   0   0   0   0   0  55
           0   0   2   0  70   0  28  32  12   0
          28   0   0   0   0  87  20   0  33   0
           0   0   0   0  28  20  71  39   0   0
           0  10   0   0  32   0  39  46   8   0
          32   0   0   0  12  33   0   8  82  11
           0   0  65  55   0   0   0   0  11 100];
    b = ones(10,1);
    Asparse = sparse(A);
    [x, fail, err, iter, res] = pcg(Asparse, b, maxIter=30, tol=1d-12)

The previous script produces the following output.

    -->[x, fail, err, iter, res] = pcg(Asparse, b, maxIter=30, tol=1d-12)
     res  =
        1.
        0.2302743
        0.1102172
        0.0223463
        0.0096446
        0.0052038
        0.0037525
        0.0006959
        0.0000207
        0.0000042
        1.697D-13
     iter  =
        10.
     err  =
        1.697D-13
     fail  =
        0.
     x  =
        0.0071751
        0.0134492
        0.0067610
        0.0050339
        0.0073735
        0.0065248
        0.0042064
        0.0093434
        0.0044640
        0.0023456

We see that 10 iterations were required to get a residual close to 10^(-13).

5 Cholesky factorizations

Figure 3 presents the functions that compute the Cholesky decomposition of sparse matrices.

    chfact    sparse Cholesky factorization
    chsolve   sparse Cholesky solver
    spchol    sparse Cholesky factorization

Figure 3 – Cholesky factorizations for sparse matrices.

According to [4], the spchol function uses two Fortran packages: a sparse Cholesky factorization package developed by Esmond Ng and Barry Peyton at ORNL and a multiple minimum-degree ordering package by Joseph Liu at the University of Waterloo.

6 Functions from the UMFPACK module

The UMFPACK module provides several functions related to sparse matrices.

7 The UMFPACK package

The UMFPACK package provides several direct algorithms to compute LU decompositions of sparse matrices.

    PlotSparse      plot the pattern of non nul elements of a sparse matrix
    ReadHBSparse    read a Harwell-Boeing sparse format file
    cond2sp         computes an approximation of the 2-norm condition number of a s.p.d. sparse matrix
    condestsp       estimate the condition number of a sparse matrix
    rafiter         (obsolete) iterative refinement for a s.p.d. linear system
    res_with_prec   computes the residual r = Ax-b with precision

Figure 4 – Functions from the umfpack module.

    umf_license   display the umfpack license
    umf_ludel     utility function used with umf_lufact
    umf_lufact    lu factorisation of a sparse matrix
    umf_luget     retrieve lu factors at the scilab level
    umf_luinfo    get information on LU factors
    umf_lusolve   solve a linear sparse system given the LU factors
    umfpack       solve sparse linear system

Figure 5 – Functions from the umfpack module.
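The functions of Figure 5 can be combined as in the following sketch, which solves the same small system in two ways: in one call with umfpack, and by factoring first with umf_lufact and then solving with umf_lusolve. The calling sequences are those I understand from the Scilab help pages of these functions; the matrix, the right-hand side and the variable names are assumptions chosen for illustration.

```scilab
// Sketch: solve A*x = b with the UMFPACK functions.
// The 3 x 3 matrix below is an arbitrary nonsingular example (determinant 35).
A = sparse([2 3 0; 3 0 4; 0 -1 -3]);
b = [1; 2; 3];

// One-shot solve: factorization and solution in a single call
x1 = umfpack(A, "\", b);

// Two-step solve: factor once, then reuse the factors
LUp = umf_lufact(A);         // pointer to the LU factors
x2 = umf_lusolve(LUp, b);    // solve with the stored factors
umf_ludel(LUp);              // release the memory used by the factors

// Both residuals should be close to machine precision
disp(norm(A * x1 - b));
disp(norm(A * x2 - b));
```

The two-step form is the one to prefer when several right-hand sides share the same matrix, since the factorization is computed only once.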

8 The TAUCS package

The TAUCS package provides several direct algorithms to compute Cholesky decompositions of sparse matrices. This package is available in the UMFPACK module.

    taucs_chdel     utility function used with taucs_chfact
    taucs_chfact    cholesky factorisation of a sparse s.p.d. matrix
    taucs_chget     retrieve the Cholesky factorization at the scilab level
    taucs_chinfo    get information on Cholesky factors
    taucs_chsolve   solve a linear sparse (s.p.d.) system given the Cholesky factors
    taucs_license   display the taucs license

Figure 6 – Functions from the TAUCS module.

9 The API

    Boolean sparse reading   How to read boolean sparse in a list.
    Boolean sparse writing   How to add boolean sparse matrix in a list.
    Sparse reading           How to read sparse in a list.
    Sparse writing           How to add sparse matrix in a list.
    Boolean sparse reading   How to read boolean sparse in a gateway.
    Boolean sparse writing   How to add boolean sparse matrix in a gateway.
    Sparse matrix reading    How to read sparse matrix in a gateway.
    Sparse writing           How to write sparse matrix in a gateway.

Figure 7 – The sparse API, to be used in gateways.

10 The ARnoldi PACKage

ARPACK is a collection of Fortran77 subroutines designed to solve large scale eigenvalue problems. The functions available in Scilab are presented in figure 8.

    dnaupd   compute approximations to a few eigenpairs of a real linear operator
    dneupd   compute the converged approximations to eigenvalues of Az = λBz, approximations to a few eigenpairs of a real linear operator
    dsaupd   compute approximations to a few eigenpairs of a real and symmetric linear operator
    dseupd   compute approximations to the converged approximations to eigenvalues of Az = λBz
    znaupd   compute a few eigenpairs of a complex linear operator with respect to a semi-inner product defined by a hermitian positive semi-definite real matrix B.
    zneupd   compute approximations to the converged approximations to eigenvalues of Az = λBz

Figure 8 – Functions from the Arnoldi Package.

The package is designed to compute a few eigenvalues and corresponding eigenvectors of a general n by n matrix A. It is most appropriate for large sparse or structured matrices A, where structured means that a matrix-vector product w = Av requires order n rather than the usual order n^2 floating point operations. This software is based upon an algorithmic variant of the Arnoldi process called the Implicitly Restarted Arnoldi Method (IRAM). When the matrix A is symmetric it reduces to a variant of the Lanczos process called the Implicitly Restarted Lanczos Method (IRLM). These variants may be viewed as a synthesis of the Arnoldi/Lanczos process with the Implicitly Shifted QR technique that is suitable for large scale problems. For many standard problems, a matrix factorization is not required. Only the action of the matrix on a vector is needed.

ARPACK software is capable of solving large scale symmetric, nonsymmetric, and generalized eigenproblems from significant application areas. The software is designed to compute a few (k) eigenvalues with user specified features such as those of largest real part or largest magnitude. Storage requirements are on the order of nk locations. No auxiliary storage is required. A set of Schur basis vectors for the desired k-dimensional eigen-space is computed which is numerically orthogonal to working precision. Numerically accurate eigenvectors are available on request.

The following is a list of the main features of the library:
– Reverse Communication Interface.
– Single and Double Precision Real Arithmetic Versions for Symmetric, Non-symmetric, Standard or Generalized Problems.
– Single and Double Precision Complex Arithmetic Versions for Standard or Generalized Problems.
– Routines for Banded Matrices - Standard or Generalized Problems.
– Routines for The Singular Value Decomposition.
– Example driver routines that may be used as templates to implement numerous Shift-Invert strategies for all problem types, data types and precision.

11 The Matrix Market module

The Matrix Market (MM) exchange formats provide a simple mechanism to facilitate the exchange of matrix data. In particular, the objective has been to define a minimal base ASCII file format which can be very easily explained and parsed, but can easily be adapted to applications with a more rigid structure, or extended to related data objects. The MM exchange format for matrices is really a collection of affiliated formats which share design elements.

In the specification, two matrix formats are defined.
– Coordinate Format. A file format suitable for representing general sparse matrices. Only nonzero entries are provided, and the coordinates of each nonzero entry are given explicitly. This is illustrated in the example below.
– Array Format. A file format suitable for representing general dense matrices. All entries are provided in a pre-defined (column-oriented) order.

The Matrix Market file format can be used to manage dense or sparse matrices. MM coordinate format is suitable for representing sparse matrices. Only nonzero entries need be encoded, and the coordinates of each are given explicitly.

http://atoms.scilab.org/toolboxes/MatrixMarket

In order to install the Matrix Market module, we use the atoms system, as in the following script.

    atomsInstall("MatrixMarket")
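To make the coordinate format described in this section concrete, the following sketch builds by hand the text of a coordinate file for a small real sparse matrix: a header line, a size line giving the number of rows, columns and stored entries, then one line per nonzero entry with its row index, column index and value. It uses only base Scilab functions (sparse, spget, msprintf, mprintf) and does not require the Matrix Market module. The matrix, the variable names and the choice of the "real general" header are assumptions made for illustration; a file written by mmwrite may differ in its comment lines and in the formatting of the numbers.

```scilab
// Sketch: text of a Matrix Market coordinate file, built by hand.
// The 3 x 3 matrix below is an arbitrary example with 4 nonzero entries.
A = sparse([1,1;2,2;3,1;3,3], [9;16;27;145], [3,3]);

// ij: (row, column) index pairs, v: nonzero values, mn: matrix size
[ij, v, mn] = spget(A);

// Header line, then the size line "rows cols entries"
txt = ["%%MatrixMarket matrix coordinate real general"
       msprintf("%d %d %d", mn(1), mn(2), size(v, "*"))];

// One line per nonzero entry: "row column value"
for k = 1:size(v, "*")
    txt($+1) = msprintf("%d %d %g", ij(k,1), ij(k,2), v(k));
end

// Print the lines; txt is passed as data, so "%%" is printed as is
mprintf("%s\n", txt);
```

Only the four nonzero entries appear after the size line, which is the point of the coordinate format: the zeros are never stored.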

    mminfo    Extracts size and storage information out of a Matrix Market file
    mmread    Reads a Matrix Market file
    mmwrite   Writes a sparse or dense matrix to a Matrix Market file

Figure 9 – Functions from the Matrix Market module.

Figure 9 presents the functions provided by the Matrix Market module.

In the following script, we create a sparse matrix with the sparse function and save it into a file with the mmwrite function.

    A = sparse([1,1;1,3;2,2;3,1;3,3;3,4;4,3;4,4],..
        [9;27+%i;16;27-%i;145;88;88;121],[4,4]);
    filename = TMPDIR+"/A.mtx";
    mmwrite(filename,A);

In the following session, we use the mminfo function to extract information from this file.

    -->mminfo(filename);
    ==============================================================
    Information about MatrixMarket file :
    C:\Users\myname\AppData\Local\Temp\SCI_TMP_8216_/A.mtx
    %%% Generated by Scilab 11-Jun-2010
    storage : coordinate
    entry type : complex
    symmetry : hermitian
    ==============================================================

In the following session, we use another calling sequence of the mminfo function to extract data from the file. This returns the number of rows rows, the number of columns cols, the number of entries entries and other information as well.

    -->[rows,cols,entries,rep,field,symm,comm] = mminfo(filename)
     comm  =
     %%% Generated by Scilab 11-Jun-2010
     symm  =
     hermitian
     field  =
     complex
     rep  =
     coordinate
     entries  =
        6.
     cols  =
        4.
     rows  =
        4.

In the following session, we use the mmread function to read the content of the file and create the matrix A. We use the nnz function to compute the number of nonzero entries in the matrix.

    -->A = mmread(filename)
     A  =
    (    4,    4) sparse matrix
    (    1,    1)        9.
    (    1,    3)        27. + 1.i
    (    2,    2)        16.
    (    3,    1)        27. - 1.i
    (    3,    3)        145.
    (    3,    4)        88.
    (    4,    3)        88.
    (    4,    4)        121.
    -->nnz(A)
     ans  =
        8.

Bibliography

[1] R. Barrett, M. Berry, T. F. Chan, J. Demmel, J. Donato, J. Dongarra, V. Eijkhout, R. Pozo, C. Romine, and H. Van der Vorst. Templates for the Solution of Linear Systems: Building Blocks for Iterative Methods, 2nd Edition. SIAM, Philadelphia, PA, 1994.
[2] Kenneth Kundert and Alberto Sangiovanni-Vincentelli. Sparse 1.3. http://www.netlib.org/sparse/.
[3] Kenneth S. Kundert. Sparse matrix techniques. In Albert E. Ruehli, editor, Circuit Analysis, Simulation and Design, volume 3 of pt. 1. North-Holland, 1986.
[4] Héctor E. Rubio Scola. Implementation of lipsol in scilab. 1997. http://hal.inria.fr/inria-00069956/en.
[5] Wikipedia. Sparse matrix - Wikipedia, the free encyclopedia, 2010. [Online; accessed 11-June-2010].