Hash Probability - BYU Computer Science Students Homepage Index

Hash Probability - BYU Computer Science Students Homepage Index

Hash Probability - BYU Computer Science Students Homepage Index

- No tags were found...

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

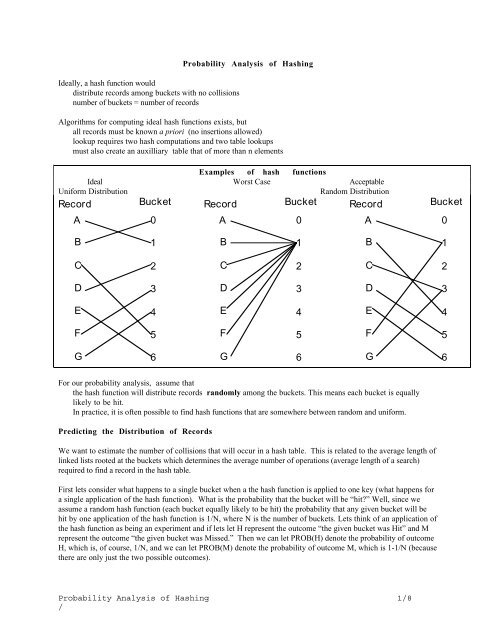

Now suppose we apply the hash function twice. For a given bucket there are now 4 possible outcomes of thisexperiment. MM-the bucket was missed both times, MH-the bucket was missed the first time and hit the second,HM-the bucket was hit the first time and missed the second, and HH-the bucket was hit both times. Theprobabilities of each of these outcomes, since the experiments are independent, is the product of the two probabilitiesof two outcomes in each pair. PROB(HH)=PROB(H)XPROB(H)=(1/N)X(1/N)=1/N 2 .PROB(MH)=PROB(M)XPROB(H)=(1-1/N)X(1/N)etc.For any sequence of applications of the hash function we can calculate the probability of any sequence of outcomeswith respect to a given bucket. Suppose we apply the hash function four times. For a given bucket the possibleoutcomes are:Outcome PROB(Outcome) Assuming N=10MMMM Miss,Miss,Miss,Miss (1-1/N) 4 .6561MMMH Miss,Miss,Miss,Hit (1-1/N) 3 (1/N) .0729MMHM Miss,Miss,Hit,Miss (1-1/N) 3 (1/N) .0729MMHH Miss,Miss,Hit,Hit (1-1/N) 2 (1/N) 2 .0081MHMM Miss,Hit,Miss,Miss (1-1/N) 3 (1/N) .0729MHMH Miss,Hit,Miss,Hit (1-1/N) 2 (1/N) 2 .0081MHHM Miss,Hit,Hit,Miss (1-1/N) 2 (1/N) 2 .0081MHHH Miss,Hit,Hit,Hit (1-1/N)(1/N) 3 .0009HMMM Hit,Miss,Miss,Miss (1-1/N) 3 (1/N) .0729HMMH Hit,Miss,Miss,Hit (1-1/N) 2 (1/N) 2 .0081HMHM Hit,Miss,Hit,Miss (1-1/N) 2 (1/N) 2 .0081HMHH Hit,Miss,Hit,Hit (1-1/N)(1/N) 3 .0009HHMM Hit,Hit,Miss,Miss (1-1/N) 2 (1/N) 2 .0081HHMH Hit,Hit,Miss,Hit (1-1/N)(1/N) 3 .0009HHHM Hit,Hit,Hit,Miss (1-1/N)(1/N) 3 .0009HHHH Hit,Hit,Hit,Hit (1/N) 4 .0001_____1.0000Suppose we want to calculate from this the probability of a given bucket getting hit exactly once. We just add upthe probabilities for those events that have one hit.Outcome PROB(Outcome) Assuming N=10MMMM Miss,Miss,Miss,Miss (1-1/N) 4 .6561MMMH Miss,Miss,Miss,Hit (1-1/N) 3 (1/N) .0729MMHM Miss,Miss,Hit,Miss (1-1/N) 3 (1/N) .0729MMHH Miss,Miss,Hit,Hit (1-1/N) 2 (1/N) 2 .0081MHMM Miss,Hit,Miss,Miss (1-1/N) 3 (1/N) .0729MHMH Miss,Hit,Miss,Hit (1-1/N) 2 (1/N) 2 .0081MHHM Miss,Hit,Hit,Miss (1-1/N) 2 (1/N) 2 .0081MHHH Miss,Hit,Hit,Hit (1-1/N)(1/N) 3 .0009HMMM Hit,Miss,Miss,Miss (1-1/N) 3 (1/N) .0729HMMH Hit,Miss,Miss,Hit (1-1/N) 2 (1/N) 2 .0081HMHM Hit,Miss,Hit,Miss (1-1/N) 2 (1/N) 2 .0081HMHH Hit,Miss,Hit,Hit (1-1/N)(1/N) 3 .0009HHMM Hit,Hit,Miss,Miss (1-1/N) 2 (1/N) 2 .0081HHMH Hit,Hit,Miss,Hit (1-1/N)(1/N) 3 .0009HHHM Hit,Hit,Hit,Miss (1-1/N)(1/N) 3 .0009HHHH Hit,Hit,Hit,Hit (1/N) 4 .0001<strong>Probability</strong> Analysis of <strong>Hash</strong>ing 2/8

4 X .0729 = .2916Notice that if we apply the hash function k times then the probability if j hits and k-j misses for a given bucket isWX(1/N)jX(1-1/N) where W is the number of ways of getting j hits and k-j misses. W=4 for the precedingexample.Also notice that the number of ways of getting j hits and k-j misses is just the number of ways of placing j Hs in kplaces. For the preceding example j=1 and k=4 so it is the number of ways of placing 1 H in 4 places.H_ _ _ _ H _ _ _ _ H _ _ _ _ H 4 waysHow about 2 hits in 5 hashes?HH_ _ _H _ H _ _ H _ _ H _ H _ _ _ H _ HH _ _ _ H _ H _ _ H _ _ H_ _ HH _ _ _ H _ H _ _ _ HHThere are 10 ways to realize 2 hits in 5 hashes.In general, the number of ways of placing j Hs in k places is given by the formulak !W =( k − j )! j !So, if we want to compute the probability p(j,k) of j hits in k hashes we can use the formulak ! 1 j 1 k − jp ( j , k ) = ( ) ( 1 − )(A)( k − j )! j ! N NUnfortunately, this is a very nasty function to evaluate. The factorials get gigantic. However, there is a much moreeasily computed function that is a very close approximation to (A) for large N and k. It is the Poisson functionP ( j , k ) =j( k / N ) e − ( k / N )j !or, for a given k (the number of records hashed) and a given N (the hash table size)P ( j ) =j( k / N ) e − ( k / N )j !this function can be used to compute the probability that a given hash table bucket will have j records assigned to itafter k records have been hashed. P(j) is a good approximation to p(j) as shown in this table:j k p(j) P(j)1 50 0.11976 0.119451 100 0.20881 0.2083161 125 0.24371 0.2431571 150 0.27307 0.2724722 50 0.00806 0.0081812 100 0.0284 0.0285362 150 0.05589 0.0559873 50 0.00035 0.0003743 1 00 0.00255 0.0026063 1 50 0.00757 0.007669For example, suppose we are hashing 100 records into 100 buckets and we want to know the probability of a givenbucket being hit zero times.0 − ( 100/ 100)( 100 / 100) e 1 0 e − 1P ( 0 ) == = 0 . 3680 !0 !<strong>Probability</strong> Analysis of <strong>Hash</strong>ing 3/8/

Also, the probability that a given bucket will be hit once is1 1 e − 1P ( 1 ) = = 0 . 3681 !and, the probability that a given bucket will be hit twice is1 2 e − 1P ( 2 ) = = 0 . 1842 !and, the probability that a given bucket will be hit three times is1 31 e − 1P ( 3 ) = = 0 . 0613 !and, the probability that a given bucket will be hit four times is1 4 e − 1P ( 4 ) = = 0 . 0154 !We can also use the Poisson function to estimate, for any j, the number of buckets that will be hit j times.Because, if p is the probability of a given bucket being hit j times then kp is the expected number of buckets thatwill be hit j times. For our preceding example, 100 records hashed into 100 buckets, we would expect (sinceP(0)=0.368) that 100X0.368=36.8 or about 37 buckets would not be hit at all and also (since P(1)=0.368) that about37 buckets would be hit exactly once, and that 100X0.184=18 buckets would be hit twice. We can build a tableshowing the expected number of buckets that will be hit j times for various values of j.j <strong>Probability</strong> Expected numberthat a given bucketof buckets beingis hit j timeshit j times____________________________________________0 .368 371 .368 372 .184 183 .061 64 .015 25 .003 06 .000 0So, we have another way of viewing P(j). Rather than thinking of it as giving a probability, we can think of it asgiving the proportion of the buckets being hit by j records.Predicting CollisionsWe can expand the above table with a column for collisions. Each of the 37 buckets hit zero times account for zeroscollisions. Each of the 37 buckets hit once account for zero collisions. But each of the 18 buckets hit twice accountfor one collision each, and each of the 6 buckets hit 3 times account for 2 collisions each.j <strong>Probability</strong> Expected numberthat a given bucketof buckets beingis hit j times hit j times Collisions________________________________________________________0 .368 37 01 .368 37 02 .184 18 183 .061 6 124 .015 2 65 .003 0 06 .000 0 0<strong>Probability</strong> Analysis of <strong>Hash</strong>ing 4/8

So, the expected number of collisions when 100 records are hashed into 100 buckets with a random hashing functionis 18+12+6=36. 36% of the records are collisions!Reducing Collisions by Lowering Packing DensityOur intuition tells us that we can reduce the number of collisions by having more buckets than records. Packingdensity is the ratio k/N of the number of records k to the number of buckets N. Notice that packing densityappears twice in the Poisson function.Packing DensityP(j ) = (k/N) j e -k/ Nj!Let’s rebuild our table showing collisions for a packing density of .50. Again, we’ll assume we are hashing 100records but this time we’ll assume 200 buckets. k/N = 100/200 = 0.50.P ( 0 ) =0 − ( 100/ 100)( 100 / 200) e0 !=. 5 0 e − . 50 != 0 . 607P ( 1 ) =. 5 1 e − . 51 != 0 . 304P ( 2 ) =. 5 2 e − . 52 != 0 . 076P ( 3 ) =. 5 3 e − . 53 != 0 . 013j <strong>Probability</strong> Expected numberthat a given bucketof buckets beingis hit j times hit j times Collisions________________________________________________________0 .607 121 (.607X200) 01 .304 61 02 .076 15 153 .013 3 64 .000 0 0We still have 21 collisions, which is 21% of the 100 records. It seems like a lot of collisions, but what we arereally interested in is the average retrieval time - the time to find a record given its key. This is just the averagelength of a synonym list. But the length of a synonym list rooted at a bucket is just the number of records that hitthe bucket - j in our table - the row index. So we can compute the average length of a synonym list by taking theaverage of the synonym list lengths weighted by the number of buckets with that list length. For the packingdensity of 1.0j <strong>Probability</strong> Expected numberthat a given bucketof buckets beingis hit j times hit j times Collisions________________________________________________________0 .368 37 01 .368 37 02 .184 18 183 .061 6 124 .015 2 65 .003 0 06 .000 0 0<strong>Probability</strong> Analysis of <strong>Hash</strong>ing 5/8/

we have 37 buckets with list length 1, 18 with length 2, 6 with length 3 and 2 with length 4. So, our weightedaverage is (37X1 + 18X2 + 6X3 + 2X4)/63=1.57. So, the average number of records that have to be examined tofinish an unsuccessful search is 1.57. The average number to finish a successful search is slightly less because wewouldn’t always look at every record on a list longer than 1 record.(37 + 18X1.5 + 6X2 + 2X3)/63 = 1.3We can use this same analysis to predict birthday coincidences in a room of n people. This table indicates, onaverage, how many days will be the birthday for j different people if there are n people in the room. In a group of100 people, you could expect about 10 pairs of identical birthdays, and one triple birthday.n\j 0 1.000 2.0 3 4 520 345.538 18.934 0.519 0.009 0.000 0.00023 342.710 21.595 0.680 0.014 0.000 0.00040 327.114 35.848 1.964 0.072 0.002 0.00060 309.672 50.905 4.184 0.229 0.009 0.00080 293.160 64.254 7.042 0.514 0.028 0.001100 277.529 76.035 10.416 0.951 0.065 0.004200 211.020 115.627 31.679 5.786 0.793 0.087365 134.276 134.276 67.138 22.379 5.595 1.119Collision Resolution by Open AddressingStoring records in linked lists rooted at the buckets is not the only way to handle collisions. It may be that the linkfields take a significant amount of space because the actual records are small. Another way to handle collisions is tosearch for an unused bucket and put the colliding record there. The search can be as simple as a sequential searchforward (through buckets with higher indexes). Open addressing with a simple forward search is called progressiveoverflow. For example, suppose we are going to store the following words in a hash table with 10 buckets. Also,suppose out hash function assigns the words to the given bucket address.WordBucket AddressAssigned bythe <strong>Hash</strong> Function___________________________and 5any 8bet 0big 5do 7go 8car 8As we put the words in the hash table one at a time everything is great until we try to put “big” in and find that itcollides. So we search forward and find that bucket 6 is empty so we put it there.<strong>Probability</strong> Analysis of <strong>Hash</strong>ing 6/8

54AverageSear chLengt h32110 20 30 40 50 60 70 80 90Packing Densit y100Deletions Under Progressive OverflowLet’s consider the algorithm for searching in a hash table which uses progressive overflow.When we are searching we want to stop when we find the record or when we reach an empty bucket (we continuesearching while Table[i] is not empty).So, when we delete a record we have to make sure we don’t cause a search to be terminated prematurely. Forexample, suppose we delete “bet” from our example table.0123456789betcarandbigdoanygodelete " bet "0123456789carandbigdoanygoNow what happens when we search for “car?”0123456789carandbigdoanygohome addresscarsearch 1Terminate search becausebucket is empty.search 2When have to distinguish between “never used” buckets and buckets that were used but have become empty bydeletion. We do so by using a tombstone which is just a marker that distinguishes buckets that were used buthave become empty by deletion from buckets that have never been used. A bucket with a tombstone is notconsidered empty by the LookUp search algorithm. But when we search to resolve a collision we can put thecolliding record in place of a tombstone.<strong>Probability</strong> Analysis of <strong>Hash</strong>ing 8/8