The GPU Computing Revolution - London Mathematical Society

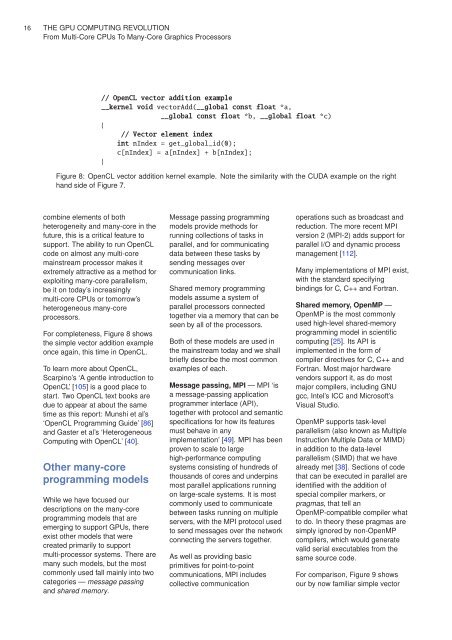

The GPU Computing Revolution: From Multi-Core CPUs To Many-Core Graphics Processors

combine elements of both heterogeneity and many-core in the future, this is a critical feature to support. The ability to run OpenCL code on almost any multi-core mainstream processor makes it extremely attractive as a method for exploiting many-core parallelism, be it on today’s increasingly multi-core CPUs or tomorrow’s heterogeneous many-core processors.

For completeness, Figure 8 shows the simple vector addition example once again, this time in OpenCL.

    // OpenCL vector addition example
    __kernel void vectorAdd(__global const float *a,
                            __global const float *b,
                            __global float *c)
    {
        // Vector element index
        int nIndex = get_global_id(0);
        c[nIndex] = a[nIndex] + b[nIndex];
    }

Figure 8: OpenCL vector addition kernel example. Note the similarity with the CUDA example on the right-hand side of Figure 7.

To learn more about OpenCL, Scarpino’s ‘A gentle introduction to OpenCL’ [105] is a good place to start. Two OpenCL textbooks are due to appear at about the same time as this report: Munshi et al.’s ‘OpenCL Programming Guide’ [86] and Gaster et al.’s ‘Heterogeneous Computing with OpenCL’ [40].

Other many-core programming models

While we have focused our descriptions on the many-core programming models that are emerging to support GPUs, there exist other models that were created primarily to support multi-processor systems.
There are many such models, but the most commonly used fall mainly into two categories: message passing and shared memory.

Message passing programming models provide methods for running collections of tasks in parallel, and for communicating data between these tasks by sending messages over communication links.

Shared memory programming models assume a system of parallel processors connected together via a memory that can be seen by all of the processors.

Both of these models are used in the mainstream today and we shall briefly describe the most common examples of each.

Message passing, MPI: MPI ‘is a message-passing application programmer interface (API), together with protocol and semantic specifications for how its features must behave in any implementation’ [49]. MPI has been proven to scale to large high-performance computing systems consisting of hundreds of thousands of cores and underpins most parallel applications running on large-scale systems. It is most commonly used to communicate between tasks running on multiple servers, with the MPI protocol used to send messages over the network connecting the servers together.

As well as providing basic primitives for point-to-point communications, MPI includes collective communication operations such as broadcast and reduction. The more recent MPI version 2 (MPI-2) adds support for parallel I/O and dynamic process management [112].

Many implementations of MPI exist, with the standard specifying bindings for C, C++ and Fortran.

Shared memory, OpenMP: OpenMP is the most commonly used high-level shared-memory programming model in scientific computing [25]. Its API is implemented in the form of compiler directives for C, C++ and Fortran. Most major hardware vendors support it, as do most major compilers, including GNU gcc, Intel’s ICC and Microsoft’s Visual Studio.

OpenMP supports task-level parallelism (also known as Multiple Instruction Multiple Data or MIMD) in addition to the data-level parallelism (SIMD) that we have already met [38].
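As a rough sketch of the two styles of parallelism named above, the C fragment below uses OpenMP directives (the lines beginning `#pragma omp`). The function names `dot_product` and `min_and_max` are illustrative assumptions and do not come from the report. The first function is data-parallel: every iteration applies the same operation to a different element. The second is task-parallel: two different computations may run at the same time.

```c
#include <assert.h>
#include <stddef.h>

/* Data-level parallelism: each iteration does the same work on a different
   element. With OpenMP enabled, iterations are divided among threads and the
   per-thread partial sums are combined by the reduction clause. */
double dot_product(const double *a, const double *b, size_t n)
{
    assert(a != NULL && b != NULL);
    double sum = 0.0;
    long i;
    #pragma omp parallel for reduction(+:sum)
    for (i = 0; i < (long)n; i++) {
        sum += a[i] * b[i];
    }
    return sum;
}

/* Task-level parallelism: two different computations, each in its own
   section. With OpenMP enabled, the sections may run on different threads.
   Each section writes only its own variable, so no locking is needed. */
void min_and_max(const double *x, size_t n, double *min_out, double *max_out)
{
    assert(x != NULL && n > 0);
    double lo = x[0];
    double hi = x[0];
    #pragma omp parallel sections
    {
        #pragma omp section
        {
            for (size_t i = 1; i < n; i++) {
                if (x[i] < lo) lo = x[i];
            }
        }
        #pragma omp section
        {
            for (size_t i = 1; i < n; i++) {
                if (x[i] > hi) hi = x[i];
            }
        }
    }
    *min_out = lo;
    *max_out = hi;
}
```

With GNU gcc, OpenMP is enabled by the `-fopenmp` flag. Built without it, the directives are skipped and both functions run serially. Because a parallel reduction may add the terms in a different order, floating-point results can differ in the last digits between serial and parallel runs.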
Sections of code that can be executed in parallel are identified with the addition of special compiler markers, or pragmas, that tell an OpenMP-compatible compiler what to do. In theory these pragmas are simply ignored by non-OpenMP compilers, which would generate valid serial executables from the same source code.

For comparison, Figure 9 shows our by now familiar simple vector