Design and development of a concept-based multi ... - Citeseer

Design and development of a concept-based multi ... - Citeseer

Design and development of a concept-based multi ... - Citeseer

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

Shiyan Ou, Christopher S.G. Khoo <strong>and</strong> Dion H. Goh<br />

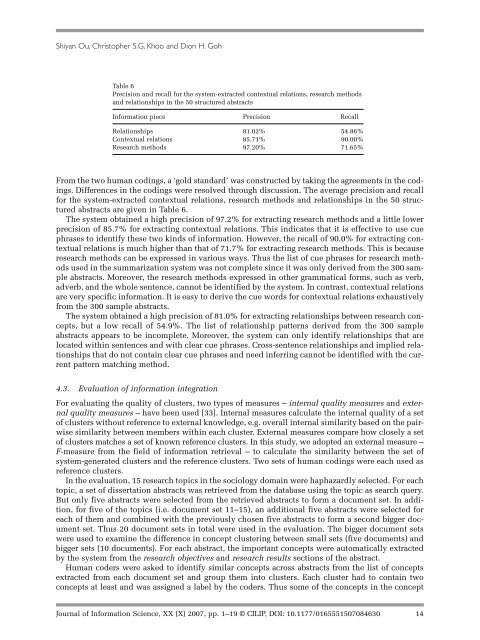

Table 6<br />

Precision <strong>and</strong> recall for the system-extracted contextual relations, research methods<br />

<strong>and</strong> relationships in the 50 structured abstracts<br />

Information piece Precision Recall<br />

Relationships 81.02% 54.86%<br />

Contextual relations 85.71% 90.00%<br />

Research methods 97.20% 71.65%<br />

From the two human codings, a ‘gold st<strong>and</strong>ard’ was constructed by taking the agreements in the codings.<br />

Differences in the codings were resolved through discussion. The average precision <strong>and</strong> recall<br />

for the system-extracted contextual relations, research methods <strong>and</strong> relationships in the 50 structured<br />

abstracts are given in Table 6.<br />

The system obtained a high precision <strong>of</strong> 97.2% for extracting research methods <strong>and</strong> a little lower<br />

precision <strong>of</strong> 85.7% for extracting contextual relations. This indicates that it is effective to use cue<br />

phrases to identify these two kinds <strong>of</strong> information. However, the recall <strong>of</strong> 90.0% for extracting contextual<br />

relations is much higher than that <strong>of</strong> 71.7% for extracting research methods. This is because<br />

research methods can be expressed in various ways. Thus the list <strong>of</strong> cue phrases for research methods<br />

used in the summarization system was not complete since it was only derived from the 300 sample<br />

abstracts. Moreover, the research methods expressed in other grammatical forms, such as verb,<br />

adverb, <strong>and</strong> the whole sentence, cannot be identified by the system. In contrast, contextual relations<br />

are very specific information. It is easy to derive the cue words for contextual relations exhaustively<br />

from the 300 sample abstracts.<br />

The system obtained a high precision <strong>of</strong> 81.0% for extracting relationships between research <strong>concept</strong>s,<br />

but a low recall <strong>of</strong> 54.9%. The list <strong>of</strong> relationship patterns derived from the 300 sample<br />

abstracts appears to be incomplete. Moreover, the system can only identify relationships that are<br />

located within sentences <strong>and</strong> with clear cue phrases. Cross-sentence relationships <strong>and</strong> implied relationships<br />

that do not contain clear cue phrases <strong>and</strong> need inferring cannot be identified with the current<br />

pattern matching method.<br />

4.3. Evaluation <strong>of</strong> information integration<br />

For evaluating the quality <strong>of</strong> clusters, two types <strong>of</strong> measures – internal quality measures <strong>and</strong> external<br />

quality measures – have been used [33]. Internal measures calculate the internal quality <strong>of</strong> a set<br />

<strong>of</strong> clusters without reference to external knowledge, e.g. overall internal similarity <strong>based</strong> on the pairwise<br />

similarity between members within each cluster. External measures compare how closely a set<br />

<strong>of</strong> clusters matches a set <strong>of</strong> known reference clusters. In this study, we adopted an external measure –<br />

F-measure from the field <strong>of</strong> information retrieval – to calculate the similarity between the set <strong>of</strong><br />

system-generated clusters <strong>and</strong> the reference clusters. Two sets <strong>of</strong> human codings were each used as<br />

reference clusters.<br />

In the evaluation, 15 research topics in the sociology domain were haphazardly selected. For each<br />

topic, a set <strong>of</strong> dissertation abstracts was retrieved from the database using the topic as search query.<br />

But only five abstracts were selected from the retrieved abstracts to form a document set. In addition,<br />

for five <strong>of</strong> the topics (i.e. document set 11–15), an additional five abstracts were selected for<br />

each <strong>of</strong> them <strong>and</strong> combined with the previously chosen five abstracts to form a second bigger document<br />

set. Thus 20 document sets in total were used in the evaluation. The bigger document sets<br />

were used to examine the difference in <strong>concept</strong> clustering between small sets (five documents) <strong>and</strong><br />

bigger sets (10 documents). For each abstract, the important <strong>concept</strong>s were automatically extracted<br />

by the system from the research objectives <strong>and</strong> research results sections <strong>of</strong> the abstract.<br />

Human coders were asked to identify similar <strong>concept</strong>s across abstracts from the list <strong>of</strong> <strong>concept</strong>s<br />

extracted from each document set <strong>and</strong> group them into clusters. Each cluster had to contain two<br />

<strong>concept</strong>s at least <strong>and</strong> was assigned a label by the coders. Thus some <strong>of</strong> the <strong>concept</strong>s in the <strong>concept</strong><br />

Journal <strong>of</strong> Information Science, XX (X) 2007, pp. 1–19 © CILIP, DOI: 10.1177/0165551507084630 14