bullx B700 DLC blade system

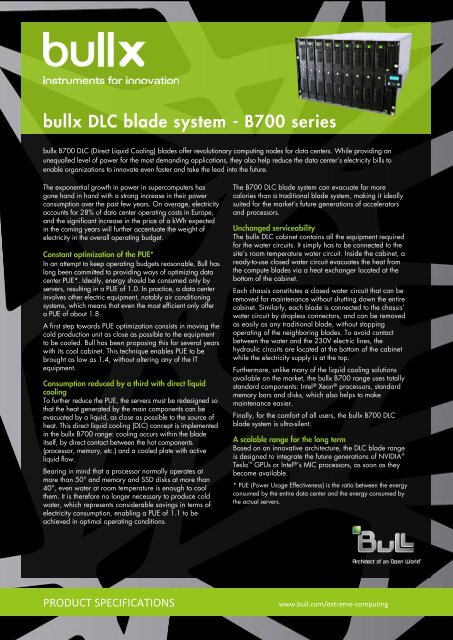

bullx DLC blade system - B700 series

bullx B700 DLC (Direct Liquid Cooling) blades offer revolutionary computing nodes for data centers. While delivering an unequalled level of power for the most demanding applications, they also help reduce the data center's electricity bill, enabling organizations to innovate even faster and take the lead into the future.

The exponential growth in the computing power of supercomputers has gone hand in hand with a strong increase in their power consumption over the past few years. On average, electricity accounts for 28% of data center operating costs in Europe, and the significant rise in the price of a kWh expected in the coming years will further increase the weight of electricity in the overall operating budget.

Constant optimization of the PUE*

To keep operating budgets reasonable, Bull has long been committed to providing ways of optimizing data center PUE*. Ideally, energy would be consumed only by the servers, giving a PUE of 1.0. In practice, a data center also runs other electrical equipment, notably air conditioning systems, so even the most efficient data centers only achieve a PUE of about 1.8.

A first step towards PUE optimization is to move the cold production unit as close as possible to the equipment to be cooled. Bull has offered this for several years with its cool cabinet. This technique brings the PUE down to as low as 1.4, without altering any of the IT equipment.

Consumption reduced by a third with direct liquid cooling

To reduce the PUE further, the servers must be redesigned so that the heat generated by the main components can be evacuated by a liquid, as close as possible to the source of heat.
This direct liquid cooling (DLC) concept is implemented in the bullx B700 range: cooling takes place within the blade itself, through direct contact between the hot components (processor, memory, etc.) and a cold plate with an active liquid flow.

Since a processor normally operates at more than 50°C, and memory and SSD disks at more than 40°C, even water at room temperature is enough to cool them. It is therefore no longer necessary to produce chilled water, which means considerable savings in electricity consumption and makes it possible to achieve a PUE of 1.1 under optimal operating conditions.

The B700 DLC blade system can evacuate far more heat than a traditional blade system, making it ideally suited to future generations of accelerators and processors.

Unchanged serviceability

The bullx DLC cabinet contains all the equipment required for the water circuits; it simply has to be connected to the site's room-temperature water circuit. Inside the cabinet, a ready-to-use closed water circuit evacuates the heat from the compute blades via a heat exchanger located at the bottom of the cabinet.

Each chassis constitutes a closed water circuit that can be removed for maintenance without shutting down the entire cabinet. Similarly, each blade is connected to the chassis water circuit by dripless connectors and can be removed as easily as any traditional blade, without stopping the neighboring blades.
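
Because the closed water circuit has to carry away the heat the blades dissipate, a steady-state energy balance, Q = m_dot x c_p x delta_T, gives a rough idea of the water flow involved. The Python sketch below is a back-of-the-envelope estimate only: it assumes the 15 kW maximum chassis consumption from the specification sheet is removed entirely by the liquid (in practice the fans and disk blowers move part of it to the air), uses approximate textbook properties of liquid water near room temperature, and picks the temperature rises arbitrarily. The function and constant names are hypothetical, not part of any Bull tooling.

```python
# Back-of-the-envelope water flow for liquid cooling: Q = m_dot * c_p * delta_T.

WATER_CP_J_PER_KG_K = 4186.0  # specific heat of liquid water near room temperature (approx.)
WATER_DENSITY_KG_PER_L = 1.0  # density of water, rounded


def required_flow_l_per_min(heat_w: float, delta_t_k: float) -> float:
    """Water flow (L/min) needed to remove heat_w watts with a temperature rise of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    # Solve the energy balance for the mass flow in kg/s.
    mass_flow_kg_s = heat_w / (WATER_CP_J_PER_KG_K * delta_t_k)
    # Convert kg/s to litres per minute.
    return mass_flow_kg_s / WATER_DENSITY_KG_PER_L * 60.0


if __name__ == "__main__":
    # Assumption: the spec-sheet maximum chassis consumption all ends up in the liquid.
    chassis_heat_w = 15_000.0
    for delta_t in (5.0, 10.0, 15.0):
        flow = required_flow_l_per_min(chassis_heat_w, delta_t)
        print(f"delta T = {delta_t:4.1f} K -> {flow:5.1f} L/min")
```

For a 10 K rise this gives roughly 21.5 L/min per fully loaded chassis; halving the allowed temperature rise doubles the required flow.
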
To avoid any contact between the water and the 230V electric lines, the hydraulic circuits are located at the bottom of the cabinet while the electricity supply is at the top.

Furthermore, unlike many of the liquid cooling solutions available on the market, the bullx B700 range uses entirely standard components: Intel® Xeon® processors, standard memory DIMMs and disks, which also makes maintenance easier.

Finally, for the comfort of all users, the bullx B700 DLC blade system is ultra-silent.

A scalable range for the long term

Based on an innovative architecture, the DLC blade range is designed to integrate future generations of NVIDIA® Tesla GPUs and Intel® MIC processors as soon as they become available.

* PUE (Power Usage Effectiveness) is the ratio between the energy consumed by the entire data center and the energy consumed by the servers themselves.

PRODUCT SPECIFICATIONS
www.bull.com/extreme-computing
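
The footnote defines PUE as total facility energy divided by the energy consumed by the servers, so for a fixed IT load the facility draws PUE times that load. The minimal Python sketch below, with hypothetical function names and an arbitrary 1,000,000 kWh IT load chosen only for illustration, compares the three PUE levels quoted earlier (about 1.8 with conventional air conditioning, 1.4 with the cool cabinet, 1.1 with DLC) and the fraction of total facility energy each improvement saves. It assumes the IT load itself stays unchanged.

```python
# Minimal sketch: total facility energy and savings implied by PUE.
# PUE = total data center energy / energy consumed by the servers (IT load).


def facility_energy(it_energy_kwh: float, pue: float) -> float:
    """Return the total facility energy for a given IT energy and PUE."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_energy_kwh * pue


def relative_saving(pue_before: float, pue_after: float) -> float:
    """Fraction of total facility energy saved when PUE improves, IT load unchanged."""
    if min(pue_before, pue_after) < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return 1.0 - pue_after / pue_before


if __name__ == "__main__":
    it_load_kwh = 1_000_000.0  # illustrative annual IT consumption, not from the flyer
    scenarios = [("air-conditioned", 1.8), ("cool cabinet", 1.4), ("DLC", 1.1)]
    for label, pue in scenarios:
        total = facility_energy(it_load_kwh, pue)
        print(f"{label:<15} PUE {pue:.1f}: {total:,.0f} kWh total")
    print(f"1.8 -> 1.1 saves {relative_saving(1.8, 1.1):.1%}")
    print(f"1.4 -> 1.1 saves {relative_saving(1.4, 1.1):.1%}")
```

With these inputs, moving from a PUE of 1.8 to 1.1 cuts total facility energy by about 39%, and moving from 1.4 to 1.1 by about 21%. Only the non-IT overhead, (PUE - 1) times the IT load, shrinks; the servers' own consumption is unchanged.
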

S-bullxB700-en2

DLC blade system technical specifications

bullx DLC B700 cabinet
- Mount capacity: 42U
- Dimensions (HxWxD) of the bare cabinet: 2020 x 600 x 1200 mm
- Weight (empty): 173 kg; maximum weight with 4 chassis: 1144.42 kg, with 5 chassis: 1331.78 kg
- PDU: 1 as standard, 1 optional extension
- Electric bus bar: 4 x 54V
- Expansion tank
- Regulatory compliance: CE, IEC 60297, EIA-310-E, EN 60950

Cooperative Power Chassis (CPC): 7U drawer mounted in the bullx DLC cabinet or on the cabinet roof
- Electricity supply:
  - Number of shelves for 54V PSUs: up to 7, in 1U format
  - PSUs on the first shelf: 3 x 3kW 54V DC; this shelf also contains the RMM
  - PSUs on each of the other shelves: 4 x 3kW 54V DC
  - Three-phase 32A AC lines: up to 5 (3 lines for the first 4 shelves)
  - PSU redundancy: N+1
  - Power supplied with the first 15 PSUs: up to 42kW; maximum power with 27 PSUs: up to 78kW

Hydraulic chassis (HYC): 3.5U chassis mounted in the bullx DLC cabinet (7U with 2 units)
- Number of units: 1 as standard, 1 optional for redundancy
- Components:
  - 1 heat exchanger for the cooling liquid
  - 1 multistage centrifugal pump
  - 2 x 2-way motorized valves to regulate the temperature and flow of the private loop
  - Temperature sensors for the cooling liquid
  - Static pressure sensors
  - Regulation module
- Power supplies: 220V AC and 54V DC

bullx DLC B700 chassis
- Form factor: 7U rack-mount drawer for up to 9 double blades, i.e. 18 compute nodes
- Management:
  - Chassis management module (CMM) comprising a 1Gb Ethernet switch with 24 ports, 3 of which are external
  - LEDs indicating module operating status
  - Display: screen and control panel on the front of the chassis
- Ventilation and cooling:
  - 4 fans/blowers to cool the CMM and ESM/TSM
  - 6 Disk Blower Boxes (DBB) to cool the disks (3 at the top and 3 at the bottom of the chassis)
  - Cooling by cooling liquid via CHMA-type tubes
- Interconnect: InfiniBand switch (ISM): up to 2 x 36-port FDR switches with 18 internal ports and 18 external QSFP+ ports (optional)
- Ethernet:
  - 1Gb/s switch (ESM): 21 1Gb ports, including 18 internal ports and 3 external RJ45 ports for backbone access (optional)
  - 10Gb/s switch (TSM): 22 ports, including 18 internal 1Gb ports and 4 external 10GBASE-SR ports (SFP+ connectors) (optional)
- Midplane: passive board with connectors for 18 servers, 2 DBBs, ISM, CMM, ESM, PDM, and all CHMA tubes
- Power Distribution Modules (PDM): up to 2 hot-swap modules; maximum consumption 15kW; input voltage 54V DC, output voltages 12V DC and 3.3V DC
- Physical specifications: dimensions (HxWxD) 31.1 cm (7U) x 48.3 cm (19") x 74.5 cm; maximum weight 175 kg (with all options)

bullx B710 DLC compute blade
- Form factor: double-height blade containing 2 compute nodes
- Processors:
  - 2 x 2 Intel® Xeon® E5 family processors (Sandy Bridge EP)
  - Cores: up to 8; frequency: up to 2.7 GHz; consumption: up to 130W
  - L3 cache: up to 20MB shared, depending on model
- Architecture:
  - 2 x Intel® C600 series (Patsburg PCH)
  - 2 x 2 QPI links (6.4 GT/s, 7.2 GT/s or 8.0 GT/s)
- Memory:
  - 2 x 8 DDR3 DIMM sockets
  - Supported data rates: 1333 MT/s, 1600 MT/s
  - Supported voltages: 1.35V and 1.5V
  - Up to 2 x 256GB registered ECC DDR3 (with 32GB DIMMs, subject to availability)
- Storage: up to 2 x 2 SATA disks (2.5") or 2 x 2 SSD disks (2.5"), maximum height 9.5 mm
- Ethernet: 2 x 1 dual-port 1Gb Ethernet controller for links to the CMM and the ESM or TSM
- InfiniBand: 2 x 1 ConnectX-3 adapter supplying two IB FDR ports
- Management: 2 x integrated baseboard management controller (IBMC)
- Cooling: cold plate + heat spreaders for memory DIMMs
- OS and cluster software: Red Hat Enterprise Linux & bullx supercomputer suite
- Regulatory compliance: CE (UL, FCC, RoHS)
- Warranty: 1 year, optional warranty extension

© Bull SAS – November 2011 – Versailles trade and companies register, no. B642 058739 – All brands mentioned in this document belong to their respective owners. Bull reserves the right to modify this document at any time and without prior notification. Some offers or offer components described in this document may not be available locally. Intel and Intel Xeon are brands or registered trademarks of Intel Corp. or its subsidiaries in the USA or other countries. Please contact your local Bull correspondent to find out about the offers available in your country. This document may not be considered contractually binding.

Bull – Rue Jean Jaurès – 78340 Les Clayes sous Bois – France
UK: Bull, Maxted Road, Hemel Hempstead, Hertfordshire HP2 7DZ
USA: Bull, 300 Concord Road, Billerica, MA 01821

This flyer is printed on paper containing 40% eco-certified fibers originating from sustainably managed forests and 60% recycled fibers, in conformity with environmental regulations (ISO 14001).
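
The CPC power figures in the specifications are consistent with the stated N+1 redundancy applied across the whole power chassis: 4 shelves hold 3 + 3 x 4 = 15 PSUs, and keeping one 3 kW unit in reserve leaves 14 x 3 = 42 kW; 7 shelves hold 27 PSUs, leaving 26 x 3 = 78 kW. This reading is an inference from the numbers rather than something the flyer states, and the Python helper names below are hypothetical.

```python
# Hypothetical helpers reproducing the CPC sizing arithmetic from the spec sheet.
# Assumption: N+1 means one PSU in reserve for the whole CPC, not one per shelf.

PSU_RATING_KW = 3.0   # each 54V DC PSU is rated 3 kW
FIRST_SHELF_PSUS = 3  # the first shelf holds 3 PSUs plus the RMM
OTHER_SHELF_PSUS = 4  # every other 1U shelf holds 4 PSUs
MAX_SHELVES = 7


def installed_psus(shelves: int) -> int:
    """Number of PSUs in a CPC populated with the given number of 1U shelves."""
    if not 1 <= shelves <= MAX_SHELVES:
        raise ValueError(f"shelves must be between 1 and {MAX_SHELVES}")
    return FIRST_SHELF_PSUS + (shelves - 1) * OTHER_SHELF_PSUS


def usable_power_kw(psus: int) -> float:
    """Power available with N+1 redundancy: one PSU is held in reserve."""
    if psus < 2:
        raise ValueError("N+1 redundancy needs at least two PSUs")
    return (psus - 1) * PSU_RATING_KW


if __name__ == "__main__":
    for shelves in (4, 7):
        n = installed_psus(shelves)
        print(f"{shelves} shelves: {n} PSUs, {usable_power_kw(n):.0f} kW usable (N+1)")
```

Running it reports 15 PSUs and 42 kW for 4 shelves, and 27 PSUs and 78 kW for 7 shelves, matching the spec sheet.
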