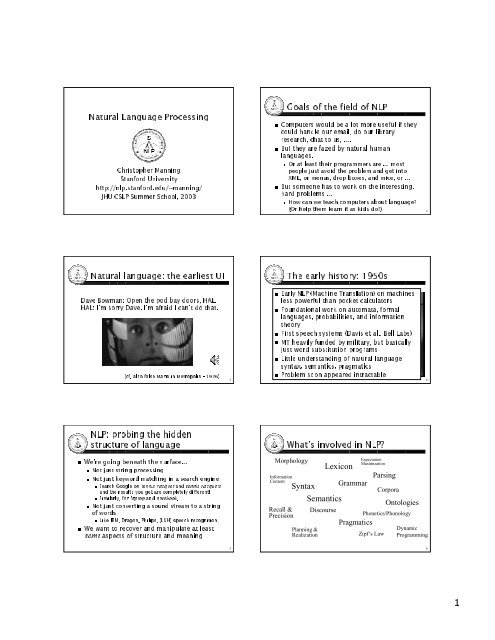

1 Natural Language Processing Goals of the field of NLP Natural ...


The need for NLP: “Databases” in 2003
- Web search, Peer-to-Peer, Agents, Collaborative Filtering, XML/Metadata, Data mining
- There is more of everything, it’s more distributed, and it’s less structured.
- Most of the information in most companies is material in human languages (reports, customer email, discussion papers, text) – not stuff in traditional databases
- Large textbases and information retrieval now have a big impact on everyday people (web search, portals, email)

Today: a need for Information → Knowledge
- A lot of new things: Lots of unstructured text/web information that we’d like to turn into usable knowledge
- employs(stanfordUniversity, chrisManning)
- ∃e ∃x1 ∃x2 ∃t (employing(e) & employer(e, x1) & employed(e, x2) & name(x2, “Christopher Manning”) & name(x1, “Stanford University”) & at(e, t) & t ⊃ [1999, 2003])

Making progress on this problem…
- The task is difficult! What tools do we need?
  - Knowledge about language
  - Knowledge about the world
  - A way to combine knowledge sources
- The answer that’s been getting traction: probabilistic models built from language data
  - P(“maison” → “house”) high
  - P(“L’avocat general” → “the general avocado”) low
- Some computer scientists think this is a new “A.I.” idea
- But really it’s an old idea that was stolen from the electrical engineers….

The Noisy Channel Model
- We started out with words, they were encoded as a signal, and we now wish to decode.
- Find most likely sequence w of “words” given the sequence of observations a
- Use Bayes’ law to create a generative model:

$$\operatorname*{argmax}_w P(w \mid a) = \operatorname*{argmax}_w \frac{P(a \mid w)\,P(w)}{P(a)} = \operatorname*{argmax}_w P(a \mid w)\,P(w)$$

- P(a|w) is the Channel Model; P(w) is the Language Model
- Labels from the slide's diagram: Speech production; English words; Translating to English; French words; OCR/spelling with errors

Language models
- Language models say how likely a sentence is, or how likely one word is to appear after another
- Traditional models just $P(w_i \mid w_{i-1}, w_{i-2})$
- Useful for many things:
  - Speech, OCR, language identification
  - Context sensitive spelling correction
  - “Fluent” natural language generation, MT
  - Grammar checking
  - Lately, the hottest area in Information Retrieval
- Recently, great results with richer parsing models

Embedding NLP
- Now that our computers are so fast and have so much disk, we should be embedding simple practical NLP into systems programs!
- Trivial example: The file command
  - `> file ~/current/NLP-notes`
  - `/user/manning/current/NLP-notes: ASCII English text`
  - `> file proposal.txt`
  - `proposal.txt: data`
- Uh oh! This is also English text. Just has a couple of funny characters (“smart quotes”) in it somewhere….

Are these “documents” English text to file?
1. I am going to go visit Sonoma county this weekend.
2. THE QUICK BROWN FOX JUMPS OVER THE LAZY DOG.
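The noisy channel decoding rule above (pick the w that maximizes P(a|w) P(w)) can be sketched in a few lines. This is only a toy illustration: the candidate list, the translation "the attorney general", and every probability below are made-up assumptions, not values from the talk. The function name `decode` is likewise hypothetical.

```python
from math import log

def decode(candidates, language_model, channel_model):
    """Return argmax_w P(a|w) * P(w).

    P(a) is the same for every candidate, so it is dropped.
    Log probabilities are added to avoid underflow on long sequences.
    """
    return max(
        candidates,
        key=lambda w: log(channel_model[w]) + log(language_model[w]),
    )

# Observation a (the French phrase from the slide).
observation = "L'avocat general"

# Hypothetical English candidates w for this observation.
candidates = ["the attorney general", "the general avocado"]

# Made-up language model P(w): how plausible each English string is.
language_model = {
    "the attorney general": 1e-6,
    "the general avocado": 1e-10,
}

# Made-up channel model P(a|w): how well each candidate explains a.
channel_model = {
    "the attorney general": 0.4,
    "the general avocado": 0.5,
}

best = decode(candidates, language_model, channel_model)
print(observation, "->", best)
```

Even though the word-for-word rendering has the slightly higher channel score in this toy setup, the language model dominates, which is the point of combining the two knowledge sources.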

Embedding NLP
- Are those “documents” English text to file?
  - I am going to go visit Sonoma county this weekend.
  - THE QUICK BROWN FOX JUMPS OVER THE LAZY DOG.
- No! Pure ASCII with the word ‘the’ or ‘The’
- We can do better than this with language models!
- Character sequence level language models are very effective language recognizers.
- Far more robust: file is an overextended set of hacks….
- Even Java vs. C++ can confuse it

Why hasn’t this happened?
- [It has at Microsoft!]
- Systems people divorced from NLP people?
- Is the problem just cycles?

Why NLP is difficult: Newspaper headlines
- Ban on Nude Dancing on Governor's Desk
- Iraqi Head Seeks Arms
- Juvenile Court to Try Shooting Defendant
- Teacher Strikes Idle Kids
- Stolen Painting Found by Tree
- Local High School Dropouts Cut in Half
- Red Tape Holds Up New Bridges
- Clinton Wins on Budget, but More Lies Ahead
- Hospitals Are Sued by 7 Foot Doctors
- Kids Make Nutritious Snacks

(The former) GRE Analytic Section Questions
- Six sculptures – C, D, E, F, G, H – are to be exhibited in rooms 1, 2, and 3 of an art gallery.
  - Sculptures C and E may not be exhibited in the same room.
  - Sculptures D and G must be exhibited in the same room.
  - If sculptures E and F are exhibited in the same room, no other sculpture may be exhibited in that room.
  - At least one sculpture must be exhibited in each room, and no more than three sculptures may be exhibited in any room.
- If sculpture D is exhibited in room 3 and sculptures E and F are exhibited in room 1, which of the following may be true?
  - A. Sculpture C is exhibited in room 1
  - B. Sculpture H is exhibited in room 1
  - C. Sculpture G is exhibited in room 2
  - D. Sculptures C and H are exhibited in the same room
  - E. Sculptures G and F are exhibited in the same room

Bill Gates, Remarks to Gartner Symposium, October 6, 1997:
“Applications always become more demanding. Until the computer can speak to you in perfect English and understand everything you say to it and learn in the same way that an assistant would learn – until it has the power to do that – we need all the cycles. We need to be optimized to do the best we can. Right now linguistics are right on the edge of what the processor can do. As we get another factor of two, then speech will start to be on the edge of what it can do.”
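Once the GRE sculpture puzzle above has been turned into formal constraints, the reasoning itself is easy to mechanize. The hard part is the language understanding. The following brute-force sketch assumes one particular reading of each rule (in particular, "no more than three sculptures may be exhibited in any room" is read as a cap that applies to every room). All function and variable names are illustrative.

```python
from itertools import product

SCULPTURES = "CDEFGH"
ROOMS = (1, 2, 3)

def valid(a):
    """a maps sculpture -> room; checks the four general rules."""
    if a["C"] == a["E"]:          # C and E may not share a room
        return False
    if a["D"] != a["G"]:          # D and G must share a room
        return False
    # If E and F share a room, nothing else may be in that room.
    if a["E"] == a["F"] and sum(r == a["E"] for r in a.values()) > 2:
        return False
    # Every room holds at least one and at most three sculptures.
    counts = [sum(r == room for r in a.values()) for room in ROOMS]
    return all(1 <= n <= 3 for n in counts)

def solutions():
    """Enumerate all assignments consistent with the rules and the question."""
    for rooms in product(ROOMS, repeat=len(SCULPTURES)):
        a = dict(zip(SCULPTURES, rooms))
        # Extra conditions stated in the question itself.
        if a["D"] == 3 and a["E"] == 1 and a["F"] == 1 and valid(a):
            yield a

# The five answer options, each as a predicate over an assignment.
OPTIONS = {
    "A": lambda a: a["C"] == 1,
    "B": lambda a: a["H"] == 1,
    "C": lambda a: a["G"] == 2,
    "D": lambda a: a["C"] == a["H"],
    "E": lambda a: a["G"] == a["F"],
}

sols = list(solutions())
# An option "may be true" if at least one consistent assignment satisfies it.
may_be_true = sorted(k for k, test in OPTIONS.items() if any(test(a) for a in sols))
print(may_be_true)
```

Under this reading only three assignments survive, and option D is the only one that holds in any of them.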

Word sense disambiguation
- The many meanings of interest [n.]
  1. Readiness to give attention to or to learn about something
  2. Quality of causing attention to be given
  3. Activity, subject, etc., which one gives time and attention to
  4. The advantage, advancement or favor of an individual or group
  5. A stake or share (in a company, business, etc.)
  6. Money paid regularly for the use of money
- Converse: words that mean (almost) the same: image, likeness, portrait, facsimile, picture

Naive-Bayes Models for WSD
- Model (a sense s generating the context words w1, w2, w3, …):

$$P(s \mid w_1, \ldots, w_n) \propto P(s)\,P(w_1 \mid s) \cdots P(w_n \mid s)$$

- Parameters: priors P(s) and the word distribution P(w|s).
- Parameters usually set using relative frequency estimators (RFEs) with some smoothing:

$$P(s) = \frac{\mathrm{count}(s)}{\mathrm{total}}, \qquad P(w \mid s) = \frac{\mathrm{count}(w, s)}{\mathrm{count}(s)}$$

Naive Bayes WSD performance
- Data - Leacock et al.
- [Figure: accuracy of Joint NB against training set size (0 to 4000) for the words “line” and “hard”]
- Moral: simple counts often work quite well as a substitute for AI-complete reasoning … though not perfectly
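The Naive Bayes model and its relative frequency estimates can be sketched as follows. The slides only say "some smoothing", so the add-alpha smoothing here is an assumption, as is normalizing P(w|s) by the number of word tokens seen with sense s. The tiny training set is invented purely for illustration.

```python
from collections import Counter, defaultdict
from math import log

def train(examples):
    """examples: list of (sense, context_words). Returns raw count tables."""
    sense_counts = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for sense, words in examples:
        sense_counts[sense] += 1
        for w in words:
            word_counts[sense][w] += 1
            vocab.add(w)
    return sense_counts, word_counts, vocab

def classify(words, sense_counts, word_counts, vocab, alpha=1.0):
    """Return argmax_s of log P(s) + sum_i log P(w_i|s), with add-alpha smoothing."""
    total = sum(sense_counts.values())
    best, best_score = None, float("-inf")
    for s, c in sense_counts.items():
        n_s = sum(word_counts[s].values())   # word tokens seen with sense s
        score = log(c / total)               # prior P(s) = count(s) / total
        for w in words:
            score += log((word_counts[s][w] + alpha) / (n_s + alpha * len(vocab)))
        if score > best_score:
            best, best_score = s, score
    return best

# Invented toy contexts for two senses of "interest".
examples = [
    ("money", ["bank", "pays", "interest", "rate"]),
    ("money", ["interest", "on", "loan"]),
    ("attention", ["shows", "interest", "in", "music"]),
    ("attention", ["great", "interest", "in", "art"]),
]
model = train(examples)
print(classify(["loan", "rate"], *model))
print(classify(["in", "music"], *model))
```

Working in log space keeps the product of many small probabilities from underflowing.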

Linear sequence models for POS
- You can model much of POS resolution as a flat sequence model
- Classic model: Hidden Markov Models
- [Diagram: hidden states x1 … x(t-1), x(t), x(t+1) … xT emitting observations o1 … oT, with example words “the”, “sat”, “on” and probabilities P(sat | x(t-1)), P(x(t) | x(t-1))]
- Widely used in many language processing tasks, e.g., speech recognition, POS tagging, topic detection.

HMM formalism: as a FSA
- HMM = probabilistic FSA
- HMM = states s1, s2, … (special start state s1, special end state sn)
- token alphabet a1, a2, …
- state transition probs $P(s_i \mid s_j)$
- token emission probs $P(w_k \mid s_j)$

Local and sequence classifiers
- [Diagram: Sequence Level: Sequence Data, Sequence Model, Inference. Local Level: Local Data, Feature Extraction, Features, Label, Classifier Type, Optimization, Smoothing]

Feature-Based Models
- The decision about a data point is based only on the features active at that point.
- Text Categorization
  - Data: BUSINESS: Stocks hit a yearly low …
  - Label: BUSINESS
  - Features: {…, stocks, hit, a, yearly, low, …}
- Word-Sense Disambiguation
  - Data: … to restructure bank:MONEY debt.
  - Label: MONEY
  - Features: {…, P=restructure, N=debt, L=12, …}
- POS Tagging
  - Data: DT JJ NN … / The previous fall …
  - Label: NN
  - Features: {W=fall, PT=JJ, PW=previous}

Features
- Features are elementary pieces of evidence that link aspects of what we observe d with a category c that we want to predict.
- A feature has a real value: f: C × D → R
- Usually features are indicator functions of properties of the input and a particular class.
  - $f_i(c, d) \equiv [\Phi(d) \wedge c = c_i]$ [Value is 0 or 1]
- We also say that Φ(d) is a feature of the data d, when, for each $c_i$, the conjunction $\Phi(d) \wedge c = c_i$ is a feature of the data-class pair (c, d).

Features
- For example:
  - $f_1(c, d) \equiv [c = \text{“NN”} \wedge \mathrm{islower}(w_0) \wedge \mathrm{ends}(w_0, \text{“d”})]$
  - $f_2(c, d) \equiv [c = \text{“NN”} \wedge w_{-1} = \text{“to”} \wedge t_{-1} = \text{“TO”}]$
  - $f_3(c, d) \equiv [c = \text{“VB”} \wedge \mathrm{islower}(w_0)]$
- Example data points: TO NN / to aid; TO VB / to aid; IN JJ / in blue; IN NN / in bed
- They pick out a subset.
- Models will assign each feature a weight
- These are the parameters of a probability model

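The three example features f1, f2, f3 defined above are plain indicator functions, so they translate directly into code. In this sketch the datum d is represented as a small dict with keys `w0`, `w-1` and `t-1`. That representation is an assumption made for illustration, not something the slides prescribe.

```python
def f1(c, d):
    """[c = "NN" and islower(w0) and ends(w0, "d")]"""
    return int(c == "NN" and d["w0"].islower() and d["w0"].endswith("d"))

def f2(c, d):
    """[c = "NN" and w-1 = "to" and t-1 = "TO"]"""
    return int(c == "NN" and d["w-1"] == "to" and d["t-1"] == "TO")

def f3(c, d):
    """[c = "VB" and islower(w0)]"""
    return int(c == "VB" and d["w0"].islower())

# The "to aid" example: current word "aid", previous word "to" tagged TO.
d = {"w0": "aid", "w-1": "to", "t-1": "TO"}

# Feature vector for each candidate class of the current word.
active = {c: [f(c, d) for f in (f1, f2, f3)] for c in ("NN", "VB")}
print(active)
```

Each feature fires only for the class it names, which is how a single observed property Φ(d) becomes a separate feature for every class.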
Discriminative models
- In the last few years there has been extensive use of conditional or discriminative probabilistic models in NLP, IR, and Speech
- Because:
  - They give high accuracy performance
  - They make it easy to incorporate lots of linguistically important features
  - They allow automatic building of language independent, retargetable NLP modules

Joint vs. Conditional models
- Joint (generative) models place probabilities over both observed data and the hidden stuff (generate the observed data from hidden stuff): P(c,d)
  - All the best known StatNLP models: n-gram models, Naïve Bayes classifiers, hidden Markov models, probabilistic context-free grammars
- Discriminative (conditional) models take the data as given, and put a probability over hidden structure given the data: P(c|d)
  - Logistic regression, conditional loglinear models, maximum entropy markov models, (SVMs, perceptrons)

Bayes Net/Graphical models
- Bayes net diagrams draw circles for random variables, and lines for direct dependencies
- Some variables are observed; some are hidden
- Each node is a little classifier (conditional probability table) based on incoming arcs
- [Diagrams: Naïve Bayes (c over d1 d2 d3) and HMM (c1 c2 c3 over d1 d2 d3), labeled Generative; Logistic Regression (c over d1 d2 d3), labeled Discriminative]

Conditional models work well: Word Sense Disambiguation
- Even with exactly the same features, changing from joint to conditional estimation increases performance
- That is, we use the same smoothing, and the same word-class features, we just change the numbers (parameters of the model)

| Objective | Training set accuracy | Test set accuracy |
|---|---|---|
| Joint Like. | 86.8 | 73.6 |
| Cond. Like. | 98.5 | 76.1 |

(Klein and Manning 2002, using Senseval-1 Data)

Feature-Based Classifiers
- “Linear” classifiers:
  - Classify from features sets {f_i} to classes {c}.
  - Assign a weight λ_i to each feature f_i.
  - For a pair (c,d), features vote with their weights: $\mathrm{vote}(c) = \sum_i \lambda_i f_i(c, d)$
  - Example: TO NN / to aid has weights 1.2 and –1.8; TO VB / to aid has weight 0.3
  - Choose the class c which maximizes $\sum_i \lambda_i f_i(c, d)$ = VB
- There are many ways to choose weights
  - Perceptron: find a currently misclassified example, and nudge weights in the direction of a correct classification

Feature-Based Classifiers
- Exponential (log-linear, maxent, logistic, Gibbs) models:
  - Use the linear combination $\sum_i \lambda_i f_i(c, d)$ to produce a probabilistic model:

$$P(c \mid d, \lambda) = \frac{\exp \sum_i \lambda_i f_i(c, d)}{\sum_{c'} \exp \sum_i \lambda_i f_i(c', d)}$$

  - The exponential makes votes positive; the denominator normalizes votes.
  - $P(\mathrm{NN} \mid \text{to, aid, TO}) = e^{1.2} e^{-1.8} / (e^{1.2} e^{-1.8} + e^{0.3}) = 0.29$
  - $P(\mathrm{VB} \mid \text{to, aid, TO}) = e^{0.3} / (e^{1.2} e^{-1.8} + e^{0.3}) = 0.71$
- The weights are the parameters of the probability model, combined via a “soft max” function
- Given this model form, we will choose parameters {λ_i} that maximize the conditional likelihood of the data according to this model.
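The "to aid" arithmetic above can be reproduced directly. In this sketch the weights 1.2, –1.8 and 0.3 come from the slide. Pairing them with three features, where the first two fire for NN and the third for VB, is inferred from the example and should be treated as an assumption.

```python
from math import exp

# Weights lambda_1..lambda_3 from the slide's example.
weights = [1.2, -1.8, 0.3]

# Which features fire for each class on the datum (to, aid, TO).
# Assumed pairing: two features fire for NN, one for VB.
active = {"NN": [1, 1, 0], "VB": [0, 0, 1]}

def vote(c):
    """Linear score: sum_i lambda_i * f_i(c, d)."""
    return sum(lam * f for lam, f in zip(weights, active[c]))

def softmax_probs():
    """Exponentiate the votes (makes them positive) and normalize."""
    scores = {c: exp(vote(c)) for c in active}
    z = sum(scores.values())
    return {c: s / z for c, s in scores.items()}

p = softmax_probs()
best = max(active, key=vote)
print({c: round(v, 2) for c, v in p.items()}, best)
```

The class with the largest vote is also the class with the largest probability, since the softmax is monotonic in the vote.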

Example: POS Tagging
- Features can include:
  - Current, previous, next words in isolation or together.
  - Previous (or next) one, two, three tags.
  - Word-internal features: word types, suffixes, dashes, etc.
- (Ratnaparkhi 1996; Toutanova et al. 2003, etc.)

Local Context (the Decision Point is position 0)

| Position | -3 | -2 | -1 | 0 | +1 |
|---|---|---|---|---|---|
| Tag | DT | NNP | VBD | ??? | ??? |
| Word | The | Dow | fell | 22.6 | % |

Features

| Feature | Value |
|---|---|
| W0 | 22.6 |
| W+1 | % |
| W-1 | fell |
| T-1 | VBD |
| T-1-T-2 | NNP-VBD |
| hasDigit? | true |
| … | … |

CMM POS Tagging Models
- Local discriminative decisions are chained together to give a conditional markov model
- The bad independence assumptions of directional models can lead to label bias (Bottou 91, Lafferty 01) or observation bias (Klein & Manning 02)
- will {MD, NN} to {TO} fight {NN, VB, VBP}
- P(t1=MD, t2=TO | will, to) = P(MD | will,) * P(TO | to, MD) = P(MD | will,) * 1
- “will” will be mis-tagged as MD, because MD is the most common tagging

Dependency Networks
- Conditioning on both left and right tags fixes the problem
- [Diagram: tag nodes t1, t2, t3 over the words will, to, fight]

Centered Context is Better
- [Diagrams: models L+L2, R+R2 and L+R, each predicting t0 from w0 and neighbouring tags among t-2, t-1, t1, t2]

| Model | Features | Token | Sentence | Unknown |
|---|---|---|---|---|
| L+L2 | 32,935 | 96.05% | 44.04% | 85.92% |
| R+R2 | 33,423 | 95.25% | 37.20% | 84.49% |
| L+R | 32,610 | 96.57% | 49.50% | 87.15% |

- Model L+R has 13.2% error reduction from Model L+L2

Final POS Tagging Test Results

| Model | Features | Token | Sentence | Unknown |
|---|---|---|---|---|
| Best (test set) | 460,552 | 97.24% | 56.34% | 89.04% |

- Comparison to best published results – Collins 02
- 4.4% error reduction (Statistically significant)
- [Figure: token error rate, Collins vs. Us; values shown: 2.90%, 2.71%, 2.51%]

Named Entity Recognition task
- Task: Predict semantic label of each word in text

| Word | POS | Chunk | NER |
|---|---|---|---|
| Foreign | NNP | I-NP | ORG |
| Ministry | NNP | I-NP | ORG |
| spokesman | NN | I-NP | O |
| Shen | NNP | I-NP | PER |
| Guofang | NNP | I-NP | PER |
| told | VBD | I-VP | O |
| Reuters | NNP | I-NP | ORG |
| : | : | O | O |
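The "13.2% error reduction" figure above is a relative reduction in token error rate, not a difference in accuracy. A short worked check, using the token accuracies reported for models L+L2 (96.05%) and L+R (96.57%); the function name is illustrative:

```python
def error_reduction(acc_base, acc_new):
    """Relative error reduction in percent, given two accuracies in percent."""
    err_base = 100.0 - acc_base   # error rate of the baseline model
    err_new = 100.0 - acc_new     # error rate of the improved model
    return 100.0 * (err_base - err_new) / err_base

# Token accuracy: L+L2 = 96.05%, L+R = 96.57%.
reduction = error_reduction(96.05, 96.57)
print(f"{reduction:.1f}% error reduction")
```

The error rate falls from 3.95% to 3.43%, and 0.52 / 3.95 is about 13.2%. A half-point accuracy gain near the ceiling therefore corresponds to a sizeable share of the remaining errors.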

Example: NER
- (Klein et al. 2003; also, Borthwick 1999, etc.)
- Sequence model across words
- Each word classified by local model
- Features include the word, previous and next words, previous classes, previous, next, and current POS tag, character n-gram features and signature of word
- High (> 92% on English devtest set) performance comes from combining many informative features.
- With smoothing / regularization, more features never hurt!

Decision Point: State for Grace

| | Prev | Cur | Next |
|---|---|---|---|
| Class | Other | ??? | ??? |
| Word | at | Grace | Road |
| Tag | IN | NNP | NNP |
| Sig | x | Xx | Xx |

NER conditional models: Feature Weights (Klein et al. 2003)
- 800K features

| Feature Type | Feature | PERS | LOC |
|---|---|---|---|
| Previous word | at | -0.73 | 0.94 |
| Current word | Grace | 0.03 | 0.00 |
| Beginning bigram | (missing) | (missing) | -0.04 |
| Current signature | Xx | 0.80 | 0.46 |
| Prev state, cur sig | O-Xx | 0.68 | 0.37 |
| Prev-cur-next sig | x-Xx-Xx | -0.69 | 0.37 |
| P. state - p-cur sig | O-x-Xx | -0.20 | 0.82 |
| … | | | |
| Total: | | -0.58 | 2.68 |

Character-level information
- Traditional linguistics idea (Saussure, etc.): Form is usually uncorrelated with meaning
  - “The arbitrariness of the linguistic sign”
- Also inherent in common NLP word-level models
- But for names, this misses valuable source of information
- People often classify PNPs by how they look
  - Cotrimoxazole
  - Wethersfield
  - Alien Fury: Countdown to Invasion

Common Words and Letter Sequences
- [Figure: counts of the words “John” and “Inc” and the letter sequences “oxa”, “:” and “field” across the classes drug, company, movie, place, person]

NER in web search?
- Solving false name matches

Final NER Results: English
- [Figure: Precision, Recall and F1 (axis 75 to 100) for LOC, MISC, ORG, PER and Overall]
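Two of the feature types mentioned above, the word signature (for example Xx for "Grace", x for "at") and character n-grams, are simple string functions. The exact signature scheme used in the cited work is not given in the slides. The version below maps character classes and collapses repeats, and is an assumption chosen to reproduce the Sig row shown above. The `<` and `>` boundary markers on n-grams are likewise an illustrative convention.

```python
import re

def signature(word):
    """Coarse word shape: uppercase -> X, lowercase -> x, digit -> d, runs collapsed."""
    shape = re.sub(r"[A-Z]", "X", word)
    shape = re.sub(r"[a-z]", "x", shape)
    shape = re.sub(r"[0-9]", "d", shape)
    # Collapse any run of the same symbol into a single symbol.
    return re.sub(r"(.)\1+", r"\1", shape)

def char_ngrams(word, n=3):
    """All character n-grams of the word, with boundary markers added."""
    padded = f"<{word}>"
    return [padded[i:i + n] for i in range(len(padded) - n + 1)]

for w in ("at", "Grace", "Road"):
    print(w, signature(w))

# Substrings such as "oxa" hint at a drug name, "field" at a place.
print("oxa" in char_ngrams("Cotrimoxazole"))
print(char_ngrams("Grace", 2)[0])
```

Features like these let the classifier generalize to names it has never seen as whole words.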

Modern statistical parsing
- A hugely increased ability to do accurate, robust, broad coverage parsing of sentences
- Achieved by converting parsing into a classification task and using probabilistic machine learning methods
- Statistical methods quite accurately resolve most structural and real world ambiguities
- Quickly: find a good parse in a few seconds
- Provide probabilistic models which can be integrated from speech recognition systems through to Knowledge Representation

Marked-up data
- Key resource for parsing: the Penn Treebank
  - 1 million words of hand-parsed WSJ newswire
  - And other stuff: Switchboard, partial Brown
- More recently, growing number of treebanks:
  - Prague (Czech) dependency treebank
  - (Penn) Chinese Treebank
- (It shows that grammar induction is a hard problem … but one I’m interested in.)

Sparseness: 1 million words is nothing
- Like 965,000 constituents, but only 66 WHADJP
- Only 6 aren't how much or how many, but: how clever/original/incompetent (at risk assessment and evaluation)
- Most of the probabilities that you would like to compute, you can't compute
- Parsers use complex models with a lot of parameters estimated from very sparse data
  - E.g., Gildea 01 Collins 97 reimplementation: 735,850 parameters [for Model 1]

Lexicalized PCFG parsing: Charniak (1997)

Charniak 1997 smoothing
- Uses an interpolation of estimates of different specificities
- Aim is to use richly conditioned estimates when available, but to back off to coarser estimates when they’re not available
- A lot of smoothing/backoff (“regularization”)

Factored models for lexicalized parsing
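The idea of interpolating estimates of different specificities can be illustrated with plain linear interpolation. This is a generic sketch, not Charniak's actual formula: the fixed weights and the example probabilities are invented, and real parsers typically make the weights depend on how much data supports each estimate.

```python
def interpolate(estimates, lambdas):
    """Linear interpolation: sum_k lambda_k * P_k, with the lambdas summing to 1.

    estimates are ordered from most specific (richly conditioned)
    to least specific (coarse back-off).
    """
    if abs(sum(lambdas) - 1.0) > 1e-9:
        raise ValueError("interpolation weights must sum to 1")
    return sum(lam * p for lam, p in zip(lambdas, estimates))

# Invented example: the most specific context was never observed (estimate 0.0),
# while coarser contexts still give non-zero estimates.
estimates = [0.0, 0.2, 0.1]
lambdas = [0.5, 0.3, 0.2]
p = interpolate(estimates, lambdas)
print(p)
```

The point is that a zero count at the most specific level no longer forces a zero probability, because the coarser estimates still contribute.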

Sparseness
- Much work uses bilexical statistics: likelihoods of relationships between pairs of words
- Very sparse, even on topics central to the WSJ:
  - stocks plummeted: 2 occurrences
  - stocks stabilized: 1 occurrence
  - stocks skyrocketed: 0 occurrences
  - stocks discussed: 0 occurrences
- So far very little success in augmenting the treebank with extra unannotated materials or using semantic classes or clusters
- Gildea 01: You only lose 0.5% by eliminating bilexical statistics on WSJ; nothing cross-domain

Parsing results
- Labeled precision/recall F measure: 86–90%
- Unlabeled dependency accuracy: 90+%

Question answering from text
- TREC 8+ QA competition (1999–)
- An idea originating from the IR community
- With massive collections of on-line documents, manual translation of knowledge is impractical: we want answers from textbases [cf. bioinformatics]
- (Early on) evaluated output was 5 answers of 50 byte snippets of text drawn from a 3 Gb text collection. (IR think.) Get reciprocal points for highest correct answer.
- Mainly factoid ‘Trivial Pursuit’ questions

Pasca and Harabagiu (2001) – value from sophisticated NLP
- Good IR is needed: SMART paragraph retrieval
- Large taxonomy of question types and expected answer types is crucial
- Statistical parser used to parse questions and relevant text for answers, and to build KB
- Query expansion loops (morphological, lexical synonyms, and semantic relations) important
- Answer ranking by simple ML method
- Usable for web access
- But still a little too slow….

Question Answering Example
- How hot does the inside of an active volcano get?
  - get(TEMPERATURE, inside(volcano(active)))
- “lava fragments belched out of the mountain were as hot as 300 degrees Fahrenheit”
  - fragments(lava, TEMPERATURE(degrees(300)), belched(out, mountain))
  - volcano ISA mountain
  - lava ISPARTOF volcano ■ lava inside volcano
  - fragments of lava HAVEPROPERTIESOF lava
- The needed semantic information is in WordNet definitions, and was successfully translated into a form that can be used for rough ‘proofs’

Semantics: (the former) GRE Analytic Section Questions
- Six sculptures – C, D, E, F, G, H – are to be exhibited in rooms 1, 2, and 3 of an art gallery.
  - Sculptures C and E may not be exhibited in the same room.
  - Sculptures D and G must be exhibited in the same room.
  - If sculptures E and F are exhibited in the same room, no other sculpture may be exhibited in that room.
  - At least one sculpture must be exhibited in each room, and no more than three sculptures may be exhibited in any room.
- If sculpture D is exhibited in room 3 and sculptures E and F are exhibited in room 1, which of the following may be true?
  - A. Sculpture C is exhibited in room 1
  - B. Sculpture H is exhibited in room 1
  - C. Sculpture G is exhibited in room 2
  - D. Sculptures C and H are exhibited in the same room
  - E. Sculptures G and F are exhibited in the same room

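The early TREC QA scoring described above ("reciprocal points for highest correct answer" among up to 5 returned snippets) amounts to a reciprocal rank per question, averaged over questions. A minimal sketch follows. The helper names and the toy answer lists are illustrative assumptions, and real evaluations used human judgments rather than a string test.

```python
def reciprocal_rank(ranked_answers, is_correct, max_answers=5):
    """1/rank of the highest-ranked correct answer among the top answers, else 0."""
    for rank, answer in enumerate(ranked_answers[:max_answers], start=1):
        if is_correct(answer):
            return 1.0 / rank
    return 0.0

def mean_reciprocal_rank(runs):
    """runs: list of (ranked_answers, is_correct) pairs, one per question."""
    return sum(reciprocal_rank(answers, ok) for answers, ok in runs) / len(runs)

# Toy judgment for the volcano question: accept snippets naming the temperature.
def is_temperature(answer):
    return "300 degrees" in answer

rr = reciprocal_rank(["very hot", "300 degrees Fahrenheit", "lava"], is_temperature)
mrr = mean_reciprocal_rank([
    (["very hot", "300 degrees Fahrenheit", "lava"], is_temperature),
    (["no idea", "unknown"], lambda a: False),
])
print(rr, mrr)
```

A system that ranks the right snippet second scores 0.5 for that question, and a question with no correct snippet in the top five scores 0.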
(The former) GRE Analytic Section Questions
- At least one sculpture must be exhibited in each room, and no more than three sculptures may be exhibited in any room.
- Has at least 2x4=8 readings; taking second conjunct:
- No more than three sculptures may be exhibited in any room.
  1. ¬(∃4 x (sculpture(x) ∧ ∃y (room(y) ∧ exhibit(x, y))))
  2. ¬(∃y (room(y) ∧ ∃4 x (sculpture(x) ∧ exhibit(x, y))))
  3. ¬ (∃4 x (sculpture(x) ∧ ∃y (room(y) ∧ exhibit(x, y))))
  4. ¬ (∃y (room(y) ∧ ∃4 x (sculpture(x) ∧ exhibit(x, y))))

Conclusion
- Human-level natural language interaction is still a distant goal
- But there are now practical and usable NLU systems applicable to many problems
- Statistical NLP methods have opened up new possibilities for robust high performance text understanding systems.
- People should be looking more for opportunities to embed NLP into systems!

The End

Thank you!