The Effectiveness of Problem Based Learning 1 - Teaching and ...



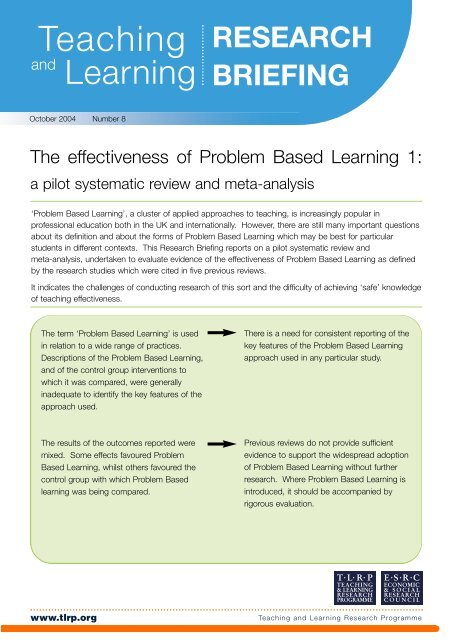

Teaching and Learning Research Briefing
October 2004, Number 8

The effectiveness of Problem Based Learning 1: a pilot systematic review and meta-analysis

'Problem Based Learning', a cluster of applied approaches to teaching, is increasingly popular in professional education both in the UK and internationally. However, there are still many important questions about its definition and about the forms of Problem Based Learning which may be best for particular students in different contexts. This Research Briefing reports on a pilot systematic review and meta-analysis, undertaken to evaluate evidence of the effectiveness of Problem Based Learning as defined by the research studies which were cited in five previous reviews. It indicates the challenges of conducting research of this sort and the difficulty of achieving 'safe' knowledge of teaching effectiveness.

The term 'Problem Based Learning' is used in relation to a wide range of practices. Descriptions of the Problem Based Learning, and of the control group interventions to which it was compared, were generally inadequate to identify the key features of the approach used.

There is a need for consistent reporting of the key features of the Problem Based Learning approach used in any particular study.

The results of the outcomes reported were mixed. Some effects favoured Problem Based Learning, whilst others favoured the control group with which Problem Based Learning was being compared.

Previous reviews do not provide sufficient evidence to support the widespread adoption of Problem Based Learning without further research. Where Problem Based Learning is introduced, it should be accompanied by rigorous evaluation.

www.tlrp.org
Teaching and Learning Research Programme

The research

Background

Problem Based Learning is a major development in educational practice that has a large impact across subjects and disciplines worldwide. It is not always clear what exactly has been done in the name of Problem Based Learning. There is also a growing number of references in the literature to adapted or 'Hybrid' Problem Based Learning courses and to courses described as 'Enquiry' or 'Inquiry' Based Learning. Problem Based Learning has arguably been one of the most scrutinised innovations in professional education. However, despite the volume of literature on Problem Based Learning, it is not at all clear that we have safe knowledge about the effects of Problem Based Learning in different learning contexts and in different modes of operation.

Whilst the principle of research reviews is well established in education, the appropriate process and purpose for them are not.
Systematic reviews can be a valid and reliable means of avoiding the bias that comes from the fact that single studies are specific to a time, sample and context and may be of questionable methodological quality. They attempt to discover the consistencies and account for the variability in similar appearing studies. There is a consensus emerging about the need for systematic reviews covering selected topics in education. Such reviews will identify the existing evidence, provide at least some answers to the review questions and provide directions for future primary research.

Objectives of the pilot review

• To establish the level and quality of existing 'safe' knowledge about the effectiveness of Problem Based Learning based on previously published reviews
• To confirm the need for a full systematic review of the effectiveness of Problem Based Learning
• To establish the value of the method of systematic review used
• To identify and clarify any problems with the review protocol, process and instruments

Review questions

The initial review questions were: when compared to other approaches, does Problem Based Learning result in increased participant performance at:
• adapting to and participating in change;
• dealing with problems and making reasoned decisions in unfamiliar situations;
• reasoning critically and creatively;
• adopting a more universal or holistic approach;
• practising empathy, appreciating the other person's point of view;
• collaborating productively in groups or teams;
• identifying own strengths and weaknesses and undertaking appropriate remediation (self-directed learning)?

Methods

Review design

The review followed the approach used by The Cochrane Collaboration, an international effort to identify evidence of effectiveness in the field of health care. The design of the review protocol, data extraction tools and overall review process is based on the guidance for reviewers produced by The Cochrane Effective Practice and Organisation of Care Review Group and the NHS Centre for Reviews and Dissemination. This approach has been developed from the emerging 'science' of systematic reviewing in order to minimise bias and errors. The review protocol was registered with the Campbell Collaboration. Copies of the review protocol and instrumentation are included in the full project report.

Inclusion criteria

• The review included participants in post-school education programmes.
• Study designs included were Randomised Controlled Trials (RCT), Controlled Clinical Trials (CCT), Interrupted Time Series (ITS), and Controlled Before & After studies (CBA). Qualitative data collected within such studies, e.g. researchers' observations of events, were incorporated in reporting.
Studies that used solely qualitative approaches were not included in the review.
• The minimum methodological inclusion criteria across all study designs were the objective measurement of student performance/behaviour or other outcome(s) and relevant and interpretable data presented or obtainable.

Minimum inclusion criteria for interventions (i.e. what is Problem Based Learning) were set out in the review protocol. However, reporting was insufficient to establish whether these criteria were met in virtually all the primary studies considered.

Review process

The review coordinator examined the reviews and identified the relevant citations. For each citation either an abstract or full text copy was obtained. Two members of the review team then screened the citations to eliminate those that obviously did not meet the minimum inclusion criteria. The included papers were then distributed amongst the reviewers for quality appraisal and data extraction. Each paper was reviewed independently by two reviewers. Where there were differences of opinion between reviewers, the review coordinator also reviewed the paper. At all stages, the process used and outcomes are explicit. A full list of included and excluded studies is provided in the full report. The completed quality assessment and data extraction tools were returned to the review coordinator, who led the process of producing a report of the review, synthesising and analysing the results where appropriate.

Results

91 citations were identified from the five reviews. Of these, 15 were judged to meet the inclusion criteria. Of the 15, only 12 reported extractable data.
The discussion below refers only to these 12 studies. The studies all reported on Problem Based Learning used in higher education programmes for health professional education at both pre- and post-registration levels. The majority of students were in medicine and the majority of these studies reported on pre-registration medical education. The majority of reported effects were for what appeared to be tests of 'knowledge' using multiple choice formats. There were also a small number of effects reported on 'impact on practice' and on 'approaches to learning'. Very little information was given in the papers from which data were extracted about the design, preparation or delivery processes of either the Problem Based Learning intervention or the control to which Problem Based Learning was being compared. Four of the studies used a randomised experimental design, two a quasi-randomised experimental design and the remainder were controlled before and after studies. Only one study reported standardised effect sizes. Effect sizes were calculated for all the other extracted effects, with the exception of three studies where insufficient data were provided in the study report.

The studies that reported 'effects on practice' all used different outcomes and measurement instruments. In only one of the studies that reported 'effects on practice' were sufficient data provided to calculate effect sizes.
This makes it difficult to synthesise the study results. One study reported 'attitudes to practice' and found effect sizes that favoured Problem Based Learning. Another measured 'nursing process skills' and, of the seven effects reported, five favoured the control group. The third study reported 'consultation skills' and on all the effects reported the results favoured the control group. However, in this particular study, which used a quasi-experimental design, the nature of the control group intervention and the outcome measures used would appear to have put the control group at a distinct advantage.

Two studies reported effects on 'approaches to learning'. The two studies used different instruments and reported five effects. In both studies, the results favoured Problem Based Learning on all the scales. The Problem Based Learning groups had fewer of the undesirable and more of the desirable 'approaches to learning' after the intervention. However, the overall picture was of deterioration in the approaches to learning of both the Problem Based Learning and control groups, which Problem Based Learning appears to have mitigated.

Figure 1: Effect size for reported study outcomes focussing on 'knowledge'

Figure 1 illustrates the effect sizes for reported outcomes that could usefully be grouped under a heading 'knowledge'. Effect sizes ranged from d = -4.9 to 2.0. There were sufficient effects reported to justify an attempted meta-analysis. The meta-analysis of the outcome 'knowledge' included 14 effects reported in 8 different studies. Concerning the review questions, the results of the meta-analysis are largely inconclusive. The mean effect size was d = -0.3 but the 95% confidence interval did not exclude an effect size of d = 0. Sensitivity analysis suggested that study design, randomisation, level of education and assessment format are all potential moderating variables but the results were not conclusive. Importantly, the 95% confidence intervals do not exclude potentially 'large' effect sizes of d = +1.0 or -1.0. An effect size of d = 1.0 would mean that 84% of students in the control group were below the level of the average person in the Problem Based Learning group. An effect of this size would appear to have important practical significance.

Only one study that met the review inclusion criteria reported effects on 'satisfaction with the learning environment'. The study, in an undergraduate medical education programme, required students to rate their experience on a series of scales. On all except two of the nine effects reported, the effect size favoured the Problem Based Learning group. The largest effect size in favour of Problem Based Learning was the students' rating of innovation (d = 1.6). The largest effect size in favour of the control group was students' rating of clarity (d = -1.0).

Major implications

The review found comparatively few studies cited in the five sample review papers whose design and methodology were good enough to eliminate bias and provide precise estimates of effect. Studies that achieved this were concentrated largely in medical education in North America and Europe and focussed mainly on measuring 'accumulation of knowledge' using multiple choice assessments.

However, an outcome of 'accumulation of knowledge', and in particular its assessment through multiple choice instruments, is not regarded by many advocates of Problem Based Learning as congruent with the approach itself or as a valid indicator of success. The second issue is how generalisable these results are to other disciplines and to other cultural contexts.
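The effect-size arithmetic referred to in the Results (standardised mean differences, a mean effect with a 95% confidence interval, and the reading of d = 1.0 as "84% of the control group") can be illustrated with a minimal Python sketch. It is not the review team's procedure: the briefing does not state which pooling model was used, so a fixed-effect, inverse-variance average is assumed here, and scores are assumed to be normally distributed with equal spread in both groups. All function names and all numbers in the usage section are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def cohens_d(mean_pbl, mean_control, sd_pbl, sd_control, n_pbl, n_control):
    """Standardised mean difference using a pooled standard deviation.

    Positive values favour the Problem Based Learning group.
    """
    pooled_var = (
        (n_pbl - 1) * sd_pbl ** 2 + (n_control - 1) * sd_control ** 2
    ) / (n_pbl + n_control - 2)
    return (mean_pbl - mean_control) / sqrt(pooled_var)


def share_of_control_below_pbl_mean(d):
    """Fraction of the control group scoring below the PBL group's mean.

    Assumption: both groups are normally distributed with the same spread.
    """
    return NormalDist().cdf(d)


def pooled_effect(effects, variances, confidence=0.95):
    """Fixed-effect (inverse-variance) mean effect and confidence interval.

    Assumption: the briefing does not say which model the review used.
    """
    weights = [1.0 / v for v in variances]
    mean = sum(w * d for w, d in zip(weights, effects)) / sum(weights)
    std_error = sqrt(1.0 / sum(weights))
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return mean, (mean - z * std_error, mean + z * std_error)


def excludes_zero(interval):
    """True when a confidence interval lies entirely on one side of zero."""
    low, high = interval
    return low > 0 or high < 0


if __name__ == "__main__":
    # d = 1.0 corresponds to the 84% figure quoted in the text.
    print(f"{share_of_control_below_pbl_mean(1.0):.0%}")  # prints 84%

    # Hypothetical effects and variances, NOT data from the review.
    mean, interval = pooled_effect([0.4, -0.9, -0.2], [0.05, 0.08, 0.04])
    print(round(mean, 2), [round(x, 2) for x in interval], excludes_zero(interval))
```

When the interval returned by `pooled_effect` contains zero, as the review reports for the 'knowledge' outcome, the data are compatible with no difference between the groups, even though the same interval may still contain effects as large as d = +1.0 or -1.0.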

Further information

Further information about the project can be downloaded from the project website (address below). A detailed summary of the two empirical studies can be downloaded from the ESRC Regard website (www.regard.ac.uk). A full report of the Pilot Systematic Review and Meta-analysis was published by the Learning & Teaching Subject Network Centre for Medicine, Dentistry and Veterinary Medicine and can be downloaded from its website www.ltsn-01.ac.uk. A full report on the evaluation of Problem Based Learning in Continuing Nursing Education is available from the project website.

Systematic Review team

Piet Van den Bossche (University of Maastricht, The Netherlands); Charles Engel (Centre for Higher Education Studies, Institute of Education, University of London); David Gijbels (University of Antwerp, Belgium); Jean McKendree (Learning and Teaching Support Network for Medicine, Dentistry and Veterinary Medicine, University of Newcastle); Mark Newman (Middlesex University); Anthony Roberts (South Tees Hospital Trust, North Tees Primary Care Trust and University of Durham); Isobel Rolfe (Faculty of Health, University of Newcastle, Australia); John Smucny (State University of New York Upstate Medical University, USA); Giovanni De Virgilio (Istituto Superiore di Sanità, Italy).

The warrant

The research question in this study was specifically concerned with establishing whether the use of activities termed Problem Based Learning results in different 'outcomes' to the use of other teaching and learning approaches. The review used a systematic and transparent process that has been demonstrated to provide a more valid and reliable appraisal of existing research evidence that allows for the development and accumulation of 'knowledge', specifically by:
• Defining transparent inclusion criteria that limit consideration to studies that used research designs which have been empirically established as optimal for providing evidence about effectiveness
• Using these criteria to make decisions about the inclusion of studies, the results of studies and the interpretation of these results
• Having two reviewers independently appraise the quality of these studies and carry out data extraction
• Using existing frameworks for the reporting of Problem Based Learning as a basis for providing descriptive summaries of the included studies
• Using an explicit framework for the analysis of the results from the individual studies, with both systematic narrative synthesis and exploratory meta-analysis

The limitations of the study design and its conduct and their consequences have been reported and explored.
The relationship between the results and conclusions is therefore explicit and the limited conclusions drawn do not go beyond what is justified by the results of the study.

Teaching and Learning Research Programme

TLRP is the largest education research programme in the UK, and benefits from research teams and funding contributions from England, Northern Ireland, Scotland and Wales. Projects began in 2000 and will continue with dissemination and impact work extending through 2008/9.

Learning: TLRP's overarching aim is to improve outcomes for learners of all ages in teaching and learning contexts within the UK.
Outcomes: TLRP studies a broad range of learning outcomes. These include both the acquisition of skill, understanding, knowledge and qualifications and the development of attitudes, values and identities relevant to a learning society.
Lifecourse: TLRP supports research projects and related activities at many ages and stages in education, training and lifelong learning.
Enrichment: TLRP commits to user engagement at all stages of research. The Programme promotes research across disciplines, methodologies and sectors, and supports various forms of national and international co-operation and comparison.
Expertise: TLRP works to enhance capacity for all forms of research on teaching and learning, and for research-informed policy and practice.
Improvement: TLRP develops the knowledge base on teaching and learning and collaborates with users to transform this into effective policy and practice in the UK.

TLRP is managed by the Economic and Social Research Council (ESRC), whose research mission is to advance knowledge and to promote its use to enhance the quality of life, develop policy and practice and strengthen economic competitiveness. ESRC is guided by principles of quality, relevance and independence.

PEPBL website: http://www.hebes.mdx.ac.uk/teaching/Research/PEPBL/index.htm
PEPBL Grant holder and Principal Investigator: Mark Newman
PEPBL contact: Mark Newman
E-mail: m.newman@ioe.ac.uk Tel: +44 (0)20 7612 6575
Social Science Research Unit, Institute of Education, University of London, 18 Woburn Square, London WC1H 0NR

TLRP Directors' Team
• Professor Andrew Pollard, Cambridge
• Dr Mary James, Cambridge
• Dr Kathryn Ecclestone, Newcastle
• Dr Alan Brown, Warwick
• John Siraj-Blatchford, Cambridge

TLRP Programme Office
• Dr Lynne Blanchfield, Lsb32@cam.ac.uk
• Suzanne Fletcher, sf207@cam.ac.uk

TLRP, University of Cambridge, Faculty of Education, Shaftesbury Road, Cambridge CB2 2BX, UK
Tel: +44 (0)1223 369631
Fax: +44 (0)1223 324421
www.tlrp.org