Why use multiple-choice questions on accounting - Bryant


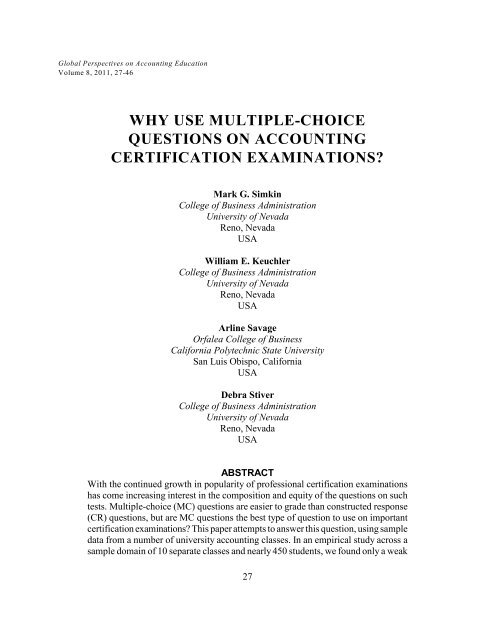

Global Perspectives on Accounting Education
Volume 8, 2011, 27-46

WHY USE MULTIPLE-CHOICE QUESTIONS ON ACCOUNTING CERTIFICATION EXAMINATIONS?

Mark G. Simkin, College of Business Administration, University of Nevada, Reno, Nevada, USA

William E. Keuchler, College of Business Administration, University of Nevada, Reno, Nevada, USA

Arline Savage, Orfalea College of Business, California Polytechnic State University, San Luis Obispo, California, USA

Debra Stiver, College of Business Administration, University of Nevada, Reno, Nevada, USA

ABSTRACT

With the continued growth in popularity of professional certification examinations has come increasing interest in the composition and equity of the questions on such tests. Multiple-choice (MC) questions are easier to grade than constructed response (CR) questions, but are MC questions the best type of question to use on important certification examinations? This paper attempts to answer this question, using sample data from a number of university accounting classes. In an empirical study across a sample domain of 10 separate classes and nearly 450 students, we found only a weak relationship between performance on the MC and CR portions of the tests. Consequently, the findings from this study suggest that certification examinations based solely on MC questions may not be testing applicants at the same level as CR tests might. Gender bias did not appear to be an issue in this study.

Key words: Multiple choice questions, constructed response questions, test formats, certification examinations, gender bias

Data availability: Data are available from the third author

INTRODUCTION

The accounting literature suggests that professional certification is important to, and desired by, employees, employers, and professional organizations. Potential personal benefits to individuals who earn certification include (1) pride in accomplishment, (2) official recognition of the recipient’s knowledge, (3) enhanced career advancement opportunities, (4) impetus to improve work and communication skills, (5) increased job security, (6) ability to qualify for work in sensitive governmental or corporate areas, and (7) financial remuneration (Grigsby, 2000; Summerfield, 2008).

Employers also gain when they encourage their employees and job applicants to obtain professional certifications. Benefits include (1) an independent metric with which to screen job applicants, (2) a knowledgeable work force, (3) assurance of continuing education of employees, (4) greater currency in the field, (5) enhanced employee understanding of matters and concepts beyond their immediate job descriptions, (6) availability of objective criteria with which to award raises and promotions, and (7) an objective rationale for dismissing employees who repeatedly fail certification tests (Christensen, 1998).

Finally, benefits often accrue to the professional organizations that offer certifications, including (1) enhanced recognition and stature of the sanctioning organization, (2) ability to tailor certification tests to specific technical areas, (3) greater understanding of the strengths and weaknesses of test takers, (4) enhanced ability to provide feedback to relevant parties, and (5) revenues from examination fees (Grigsby, 2000; Summerfield, 2008).

Like many other professions, the field of accounting has a wide range of professional societies and concomitant certifications. Table 1 provides examples, listed alphabetically by certification name.
One of the oldest and most respected–the certified public accountant (CPA) credential–has such stature that many university accounting programs “teach to” this exam and accounting programs are sometimes judged on the basis of the pass rates of their students on it (Jackson, 2006).

If certification examinations are important, then so are the types of questions used on them. Test takers, employers, and the test developers themselves have at least one objective in common: assurance that the questions on these assessments are fair and unbiased, and that the tests themselves accurately and equitably measure the applicants’ knowledge in the subject area.

In most cases, the questions on certification examinations can be classified as either: (1) machine-gradable, multiple-choice (MC) questions that offer several possible alternative answers to each question, or (2) constructed response (CR) questions such as essays, simulations of real-world problems, or computational problems. In the discussion that follows, the term “examination format” refers to the ratio of these two types of questions on a given certification examination.

TABLE 1
Selected Certification Examinations Related to Accounting

| Examination | Acronym | Sponsoring Organization | Cost | Duration (Hrs) | Composition |
| --- | --- | --- | --- | --- | --- |
| Certified Information Security Manager | CISM | Information Systems Audit and Control Association (ISACA) | $375 (members), $505 (non-members) | 4 | 200 Multiple Choice (MC) |
| Certification in Control Self-Assessment® | CCSA | Institute of Internal Auditors (IIA) | $250 (members), $300 (non-members) | 3.25 | 125 MC |
| Certified Financial Services Auditor® | CFSA | Institute of Internal Auditors (IIA) | $250 (members), $300 (non-members) | 3.5 | 125 MC |
| Certified Fraud Examiner | CFE | Association of Certified Fraud Examiners | $990 and up | 10 | 600 MC |
| Certified Government Auditing Professional® | CGAP | Institute of Internal Auditors (IIA) | $300 | 3.5 | 125 MC |
| Certified in the Governance of Enterprise IT | CGEIT | Information Systems Audit and Control Association (ISACA) | $325 (members), $455 (non-members) | 4 | 120 MC |
| Certified Information Systems Auditor | CISA | Institute of Internal Auditors (IIA) | $375 (members), $505 (non-members) | 4 | 200 MC |
| Certified Internal Auditor | CIA | Institute of Internal Auditors (IIA) | $575 (members) | 2.45 (each of 4 parts) | 100 MC per part (400 total) |
| Certified Management Accountant | CMA | Institute of Management Accountants (IMA) | $700 (members) | 4 (each of 2 parts) | 100 MC and two 30-minute essays per part |
| Certified Public Accountant | CPA | American Institute of Certified Public Accountants (AICPA) | varies by state | 14 (all parts) | 70% MC, 30% simulations |

Table 1 makes clear that the majority of accounting certification examinations rely heavily–or in most cases, solely–on multiple choice questions. The purpose of this article is to discuss the efficacy of using such questions to assess a test taker’s understanding of a given body of knowledge.

The next section of this paper discusses the theoretical merits and drawbacks of using such questions and reviews the empirical evidence. The third section reports the results of a new study that the authors conducted to empirically test the usefulness of MC questions as assessments of test taker knowledge. The fourth section provides further discussion of our results and also some caveats that limit our findings.
The final section provides a summary and a set of conclusions for our work.

THE MC-CR CONTROVERSY

As with tests in academia, the objective of most certification examinations is to assess a candidate’s knowledge in sufficient depth to assign a fair grade. Given the importance of many certifications to both applicants and employers, a great deal rides on the efficiency and equity of the process. This, in turn, has led both scholars and certification-exam developers to ask “what type of test format best performs this assessment task?”

Assessment Choices

MC examinations enjoy a number of important advantages. For example, they can be drawn randomly from computerized test banks and administered frequently to test takers; they can be graded easily, quickly, consistently, and accurately; they are usually viewed as objective; they can cover a wide range of subjects; and they can be returned to their test takers in relatively short periods of time (Zeidner, 1987; Snyder, 2003). These advantages probably account for the fact that, today, a host of professional certification examinations in such diverse disciplines as architecture, dentistry, engineering, law, medicine, optometry, pharmacology, computer programming, and veterinary sciences use MC formats exclusively as assessment tools (Hamblen, 2006; Puliyenthuruthel, 2005).
Historically, the one exception to this trend has been the AICPA exam, but 24 years ago, Mitchell Rothkopf, then director of the examination division, argued strongly for “no more essay questions on the uniform CPA exam” (Rothkopf, 1987).

On the other hand, the most common argument favoring CR questions is the widespread belief that they test a deeper understanding of the subject material (Hwang et al., 2008; Ingram and Howard, 1998; Bridgeman, 1992; Lukhele et al., 1994). A related perception is that CR test questions are better at evaluating a respondent’s integrative skills–for example, because they demand a more thorough grasp of the subject matter and its context–and therefore better probe both the breadth and depth of the test taker’s knowledge (Hancock, 1994; Rogers and Hartley, 1999; Bacon, 2003). The fact that CR questions also test the respondent’s ability to organize thoughts into a cohesive answer adds to this preference (Shaftel and Shaftel, 2007). It is probably for these reasons that some certification examinations in such areas as engineering or information technology now have extensive, performance-based components.
Similarly, essay questions have been re-introduced into the SAT examinations because the test’s administrators felt that such questions better assess the accuracy of language and reasoning skills (Katz et al., 2000).

Professional accountants do not answer test questions for a living. Rather, they perform accounting tasks using the skills they have learned in school or acquired at work. Thus, a final reason favoring CR tests is the greater likelihood of structural fidelity–i.e., the degree to which examination questions require the same problem-solving skills encountered in the work venues of a given field (Messick, 1993). This is particularly important in the accounting area, where employers are more interested in hiring competent accountants and auditors than good test takers.

Despite their advantages, CR questions have significant drawbacks, even for those who believe they are superior assessment tools. Perhaps the most important of them is that grading takes longer than for MC tests, tends to be more subjective, and often requires substantial prerequisite knowledge. This process is also more onerous for the evaluators themselves, who are more likely to be subject to both critical and litigious challenges. Finally, if the CR questions require writing samples or essays, some scholars (as well as many students) believe that CR questions naturally favor those individuals with superior writing skills, even if poorly-written answers have superior knowledge content (Zimmerman and Williams, 2003).

The MC-CR controversy includes one final component: the question of “gender bias” (Walstad and Robson, 1997; Hirschfeld et al., 1995).
Certification test developers have a particularly strong interest in this matter because they have both a natural and a legal incentive to ensure that their examinations are “gender neutral”–i.e., that their tests do not favor males over females or vice versa. But are MC questions really gender neutral?

Given these concerns, the choice between using MC or CR questions on a given professional certification examination creates a natural dichotomy. Multiple-choice questions are more convenient to grade, but are considered by many researchers to be less effective at measuring deep conceptual understanding (Becker and Johnston, 1999), while CR questions are just the opposite. The issues of “test equity” and “test efficacy” therefore come down to the extent to which the two types of questions are related. If we can find a strong relationship between them, then certification test developers can use MC questions almost exclusively on such examinations, knowing that whatever is measured by CR tests is also measured by MC tests, and saving thousands of hours of grading time in the process.
Conversely, if only a weak relationship exists–or none at all–then examiners would appear to be remiss in relying exclusively on MC questions in certification examinations. What empirical evidence exists to answer this question?

Empirical Evidence

An extensive body of research has addressed the question of how well MC versus CR questions test understanding of content material. Much of the theoretical work comes from the areas of educational psychology and educational assessment (Martinez, 1999; Hancock, 1994; Nunnally and Bernstein, 1994; Simkin and Kuechler, 2005). While these works suggest that it is theoretically possible to construct MC items that measure many of the same cognitive abilities as CR items, the question remains of how well this theory holds up empirically.

Early studies of this hypothesis have led some scholars to conclude that MC tests and CR tests measure the same thing (Traub, 1993; Wainer and Thissen, 1993; Bennett et al., 1991; Bridgeman, 1991). After examining sample tests from seven different disciplines, for example, Wainer and Thissen (1993) concluded that this relationship was so strong that “whatever is … measured by the constructed response section is measured better by the multiple choice section… We have never found any test that is composed of an objectively and a subjectively scored section for which this is not true” (p. 116).
Subsequent studies by Walstad and Becker (1994) and Kennedy and Walstad (1997) echoed these sentiments.

A number of recent studies dispute these findings. For example, using a two-stage least squares estimation procedure, Becker and Johnston (1999) found no relationship between student performance on MC and essay questions on economics examinations, and therefore concluded that “these testing forms measure different dimensions of knowledge” (p. 348). A similar study in physics instruction by Dufresne et al. (2002, p. 175) led the authors to conclude that student answers on MC questions “…more often than not [give] a false indicator of deep conceptual understanding.” Finally, in the areas of accounting and information systems, works by Kuechler and Simkin (2003) and Bible et al. (2008) found only moderate relationships between the MC and CR portions of the study examinations.

A potential explanation for why earlier empirical work has not yielded more consistent findings is that the type of CR questions used in the analysis may vary by domain.
For example, if the CR portions of most English literature examinations involve essay questions, the higher-level organizational and expository skills required to answer them are less likely to correlate with student performance on MC questions, ostensibly over the same material. But this may not be a problem in the accounting or engineering areas, where CR questions are often computational, usually require students to adhere to more rigid standards of logic, and leave less room for variance in correct answers. As a result, it may be reasonable to expect a closer relationship between student performance on the MC and CR portions of student examinations in these areas. Again, a closer relationship bodes well for professional certification examinations that rely solely on MC questions.

As noted earlier, there is also the issue of gender bias. Here, too, the empirical evidence is inconsistent. Some studies have shown a possible advantage of males relative to females on MC tests (Lumsden et al., 1987; Bolger and Kellaghan, 1990; Lumsden and Scott, 1995). Bridgeman and Lewis (1994) estimated this advantage to be about one-third of one standard deviation.
However, other studies have shown no significant difference between males and females when both are evaluated using an MC test rather than a CR test (Chan and Kennedy, 2002; Greene, 1997; Walstad and Becker, 1994).

It is possible that “gender bias” may depend upon a variety of factors, including the particular sample used to address this question as well as the discipline from which the sample is taken (Hamilton, 1999; Gallagher and De Lisi, 1994). The relevant question here is whether MC questions are gender-neutral on accounting certification examinations. To date, several studies have explored the relationship between “test format” and “gender bias” in the accounting area. One study by Lumsden and Scott (1995) found that males scored higher than females on MC questions when taking the economics section of the Chartered Association of Certified Accountants examination, while another study by Gul et al. (1992) found no gender differences in students taking MC examinations in an auditing class. Tsui et al. (1995) replicated the Gul et al. (1992) study, but did not report gender differences.

Finally, Bible et al. (2008) identified “gender” as a small, but statistically significant determinant of student performance on MC questions on intermediate accounting tests.
However, these researchers also found that the statistical significance varied by class section, leading them to conclude that gender differentials were at best “small.” They also noted that the language-processing skills often required on the essay portions of tests in other disciplines were not at play in the tests used in their study.

A NEW STUDY

In the authors’ opinion, the empirical evidence on the relationship between performance on MC and CR examinations is notable for what it does not provide: a definitive answer to the question of how close these assessments are to one another in measuring a test taker’s understanding of a given body of knowledge, and also whether or not MC questions have a gender bias. If a close relationship can be found, then certification examiners can find assurance that their MC tests are as useful a predictor as CR tests of the professional competencies of those who take them. Conversely, if a close relationship cannot be found, it is easy to ask “of what use are such tests?” Consequently, given the discussions above, the authors were interested in investigating two specific research questions: (1) can multiple-choice examinations capture a substantial amount of the variability of constructed response scores (i.e., performance on MC questions correlates significantly and directly with performance on CR questions), and (2) are there significant differences in test performance that can be attributed to gender differences in the test takers?

The relative strength of the relationship between student performance on MC and CR tests is important. What we would like is a means to predict student performance on CR tests using more tractable MC questions. It is this possibility that the present study sought to confirm. If the relationship is strong enough (a subjective matter), then test developers can have the best of both worlds–MC exams that are easy to grade and comfort in the knowledge that such tests evaluate as much mastery of professional materials as CR tests.

CR questions such as word problems can create a richer “prompting environment” that is known to enhance recall under some conditions. This and other factors have led researchers to conclude that multiple-choice and constructed response questions tap into different learning constructs (Becker and Johnston, 1999).
However, the intent of this research was to determine the degree to which whatever is measured by one type of question captures a meaningful amount of the variation in whatever is measured by the other type.

Methodology

To answer our research questions, the authors conducted a study over four semesters to investigate the relationship between the two types of performance measures. The sample data consisted of the test scores of 448 students who had enrolled in accounting classes at two different state universities. Each student was required to complete two parts of the same test: one part consisting of MC questions and one part consisting of CR questions. Although the enrollees in the classes obviously differed from semester to semester, the two types of questions on each test covered the same class materials and attempted to measure student understanding of the subject matters.

All the students in the study were taking the courses for credit. In this sample, 41 percent of the students were female and 59 percent were male. Because the influence of “gender” has been identified in prior research as potentially important, we also included this variable in our investigations to see if it affected student test performance.
Following prior experimental designs, CR scores acted as the dependent variable and a multiple linear regression analysis was used to determine the degree to which MC questions and certain demographic variables (described in greater detail below) were useful predictors of performance on the CR portion of each examination.

In the student tests used in this study, each MC question referred to a separate aspect of the associated accounting material and had four possible answers, labeled A through D. Figure 1 illustrates a typical MC question. Students answered this section of the exam by blackening a square on a Scantron scoring sheet for a particular question. The number of correct responses for the different types of questions on each exam was scaled to a percentage to permit us to test student performance consistently across semesters.

A company purchased office supplies costing $3,000 and debited Office Supplies for the full amount. At the end of the accounting period, a physical count of office supplies revealed $1,600 still on hand. The appropriate adjusting journal entry to be made at the end of the period would be
A) Debit Office Supplies, $1,600; Credit Office Supplies Expense, $1,600.
B) Debit Office Supplies, $3,400; Credit Office Supplies Expense, $3,400.
C) Debit Office Supplies Expense, $3,400; Credit Office Supplies, $3,400.
D) Debit Office Supplies Expense, $1,400; Credit Office Supplies, $1,400.

Figure 1. A typical multiple-choice question

Prepare the journal entries to record the following transactions on Paula Worth Company’s books using a perpetual inventory system. On February 6, Paula Worth Company sold $60,000 of merchandise to the Jones Company, terms 2/10, net/30. The cost of the merchandise sold was $30,000. On February 8, the Jones Company returned $10,000 of the merchandise purchased on February 6 because it was defective. The cost of the merchandise returned was $5,000. On February 16, Paula Worth Company received the balance due from the Jones Company.

Figure 2. A typical constructed-response (CR) question

In contrast to the MC questions in the sample tests, each CR question required a student to perform computations for some specific accounting event. Figures 2 and 3 illustrate typical questions. Each CR problem was worth several points, typically in the range of 5 to 25. All of these exam questions were graded using an answer template, limiting grading variations and restricting the subjectivity involved in the process. The instructor in each class used the same answer key to grade each CR question, awarding partial credit consistently and in the same proportion for partially-correct answers.

The MC questions appeared first in each examination, followed by the CR (problem-solving) questions. The cover page of the exam contained test instructions, information about how much each question was worth, and the maximum time available for the exam.
In practice, most students began by answering the MC questions, but test takers could also “work backwards” if they wished and begin with the CR questions. This alternate test-taking strategy is common among students who prefer CR questions as well as among some international students for whom English is a second language (Kuechler and Simkin, 2003). Due to the higher point weighting, it is also possible that subjects focused more attention on the multiple-choice questions than the problem solving/essay (CR) questions.

Some prior studies of examination effectiveness have adjusted the scores of MC tests in an attempt to correct for the effects of guessing. One of the most recent investigations of the influence of guessing on MC tests by Zimmerman and Williams (2003) concurs with most prior research on that topic in stating that “guessing contributes to error variance and diminishes the reliability of tests.” These authors also note that the variance depends on multiple factors, including the ability of the examinee, in ways that are not fully understood. For this reason, rather than adjust the raw scores, we preferred to perform our regressions on unadjusted scores and inform the reader of the likely increase in variance in our data sets and its effect on regression analyses. Again, our objective was not to explain the variability of MC or CR tests, but rather to investigate whether performance on MC tests and performance on CR tests are related.

Bank Reconciliation
Part 1 - Multiple Choice Questions
1. Which journal(s) are normally associated with the General Checking GL Account (and were used as such in your project)?
a. General Journal
b. Cash Receipts Journal
c. Cash Disbursements Journal
d. All of the above
2. Last month’s bank reconciliation is used for this month’s bank reconciliation to identify:
a. Deposits in transit that have finally cleared the bank
b. Outstanding checks that have finally cleared the bank
c. Both A & B
d. None of the above. Last month’s bank reconciliation is NOT used except as a template.
3. Which of the following reconciling items on the bank reconciliation require an adjustment to the balance of the cash general ledger account?
a. A monthly service charge fee
b. Outstanding checks
c. Deposits-in-transit
d. errors by the bank
e. All of the above require adjustment
Part 2 - Constructive Response Question
See attached bookkeeping and accounting information for Your Company (General Ledger, General Journal, Cash Receipts Journal, Cash Payments Journal, Bank Statement, last month’s reconciling items for the bank reconciliation).
Complete the accounting task below. Include complete and proper headings:
Start with the Cash Operating account and do a bank reconciliation for the General Checking bank account. Record and post any necessary adjustments in the books.

Figure 3. A multiple-choice and related constructed-response (CR) question

Data

To address these questions, the authors gathered data from a wide range of accounting courses and students. Table 2 describes the 10 accounting classes used in our study and the number of students enrolled in each of them. To ensure independent sample observations, we excluded secondary test scores from the few students who were simultaneously or consecutively enrolled in two of these classes.

TABLE 2
Sources of Sample Data

| Class | Class Title | Number of Students |
| --- | --- | --- |
| Class 1 | Introductory Financial Accounting | 46 |
| Class 2 | Introductory Financial Accounting | 46 |
| Class 3 | Introductory Financial Accounting | 45 |
| Class 4 | Introductory Financial Accounting | 45 |
| Class 5 | Introductory Financial Accounting | 46 |
| Class 6 | Accounting Information Systems | 54 |
| Class 7 | Accounting Information Systems | 39 |
| Class 8 | Accounting Information Systems | 37 |
| Class 9 | Intermediate Financial Accounting | 45 |
| Class 10 | Intermediate Financial Accounting | 34 |

Dependent and Independent Variables

The dependent variable for the study was the (percentage) score on the CR portion of each class examination. From the standpoint of this investigation, the most important independent variable was the student’s score on the MC portion of the examination. As illustrated in Figure 2, the CR questions required students to create journal entries or perform similar tasks.
To ensure consistency in grading CR answers, the same instructor manually graded all the CR questions on all examinations for a given class, indicating the correct answer(s) to each CR question as well as a list of numerical penalties to assess for common errors.

Another important independent variable used in this study was “gender.” As noted above, a number of prior studies have detected a relationship between “gender” and “computer-related outcomes” (Hamilton, 1999; Gutek and Bikson, 1985). Accordingly, “gender” was included in the linear regression model as a dummy variable, using “1” for females and “0” for males. Finally, the examinations in each class had different, and differently-worded, questions, and the tests were taken by different students. Accordingly, we added dummy (0-1) variables to the regression equation for each class to account for these differences.

In summary, the regression equation tested in this study used student performance on the CR portion of a final or midterm examination as the dependent variable, and student performance on the MC portion of the exam, gender, and class dummy variables as independent variables. Table 3 lists these independent variables and provides brief descriptions of them.

Results

Table 4 displays the results from the regression analysis described above.
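The regression specification summarized above (CR score regressed on MC score, a gender dummy, and class dummies with one class omitted as the baseline) could be sketched roughly as follows. This is an illustrative sketch only, not the authors' actual procedure or software: the function names `design_row` and `fit_ols`, the choice of the last class as baseline, and the nine sample records are all hypothetical, and ordinary least squares is solved here through the normal equations purely to keep the example dependency-free.

```python
from typing import List, Sequence, Tuple


def solve_linear_system(a: Sequence[Sequence[float]], b: Sequence[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Augmented matrix [a | b]; copied so the caller's data is untouched.
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("design matrix is rank deficient")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def design_row(mc: float, female: int, class_id: int, n_classes: int) -> List[float]:
    """Intercept, MC score, gender dummy (1 = female), then class dummies.

    Assumption: the last class is the omitted baseline, so only
    n_classes - 1 dummies appear (avoiding perfect collinearity).
    """
    dummies = [1.0 if class_id == k else 0.0 for k in range(1, n_classes)]
    return [1.0, mc, float(female)] + dummies


def fit_ols(rows: Sequence[Sequence[float]], y: Sequence[float]) -> Tuple[List[float], float, float]:
    """Return (coefficients, R^2, adjusted R^2) for y regressed on rows."""
    n, p = len(y), len(rows[0])  # p counts the intercept column
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    beta = solve_linear_system(xtx, xty)
    fitted = [sum(b * v for b, v in zip(beta, r)) for r in rows]
    mean_y = sum(y) / n
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    r2 = 1.0 - ss_res / ss_tot
    adj_r2 = 1.0 - (1.0 - r2) * (n - 1) / (n - p)
    return beta, r2, adj_r2


# Hypothetical records: (MC %, female dummy, class id, CR %). Not data from the study.
SAMPLE = [
    (70.0, 1, 1, 78.0), (85.0, 0, 1, 88.0), (60.0, 0, 1, 71.0),
    (90.0, 1, 2, 86.0), (55.0, 0, 2, 60.0), (75.0, 1, 2, 74.0),
    (80.0, 0, 3, 79.0), (65.0, 1, 3, 70.0), (95.0, 0, 3, 91.0),
]
rows = [design_row(mc, f, c, n_classes=3) for mc, f, c, _ in SAMPLE]
beta, r2, adj_r2 = fit_ols(rows, [cr for *_, cr in SAMPLE])
print("intercept, MC, gender, class dummies:", [round(b, 3) for b in beta])
print("R^2 =", round(r2, 3), "adjusted R^2 =", round(adj_r2, 3))
```

With ten classes, the same construction yields nine class dummies, one for every class except the baseline.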
Coefficient values, t-statistics, and probabilities are only shown for nine of the ten classes because we used 0-1 dummy

TABLE 3
Independent Variables for the Regression Model

| Independent Variable | Description |
| --- | --- |
| MC | The (percentage) score on the multiple-choice portion of an examination |
| Gender | A dummy variable: 0 = male, 1 = female |
| Class (1, 2, etc.) | A dummy variable (0-1) to account for the different examination questions given to each class |

TABLE 4
Linear Regression Results

| | Coefficient (β) | t-statistic | Probability |
| --- | --- | --- | --- |
| Intercept | 47.11 | 13.89 | |

TABLE 5
Correlation Matrix for All Variables

| | CR | MC | Gender | Class 1 | Class 2 | Class 3 | Class 4 | Class 5 | Class 6 | Class 7 | Class 8 | Class 9 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| MC | 0.499** | | | | | | | | | | | |
| Gender | 0.053 | -0.009 | | | | | | | | | | |
| Class 1 | 0.035 | 0.156** | 0.014 | | | | | | | | | |
| Class 2 | -0.053 | 0.007 | -0.047 | -0.157** | | | | | | | | |
| Class 3 | -0.064 | 0.017 | -0.033 | -0.153** | -0.093* | | | | | | | |
| Class 4 | 0.065 | 0.148** | -0.031 | -0.188** | -0.114* | -0.111* | | | | | | |
| Class 5 | 0.033 | 0.063 | 0.143** | -0.084 | -0.051 | -0.050 | -0.061 | | | | | |
| Class 6 | 0.011 | 0.191** | 0.001 | -0.170** | -0.103* | -0.100* | -0.124** | -0.055 | | | | |
| Class 7 | -0.100* | -0.100* | 0.016 | -0.170** | -0.103* | -0.100* | -0.124** | -0.055 | -0.112* | | | |
| Class 8 | 0.012 | -0.022 | 0.016 | -0.170** | -0.103* | -0.100* | -0.124** | -0.055 | -0.112* | -0.112* | | |
| Class 9 | 0.054 | -0.236** | 0.001 | -0.170** | -0.103* | -0.100* | -0.124** | -0.055 | -0.112* | -0.112* | -0.112* | |
| Class 10 | -0.004 | -0.215 | -0.026 | -0.146 | -0.088 | -0.086 | -0.106 | -0.048 | -0.096 | -0.906 | -0.096 | -0.096 |

Note: for significant correlation coefficients, * indicates .05 and ** indicates .01

The results for the gender variable were inconclusive. Because the underlying dummy variable was coded as “0” for males and “1” for females, the regression coefficient of “1.3,” if significant, would imply that females have a strong advantage over males on CR questions. However, the p value of .229 indicates that gender is not statistically significant. Because the conclusion of a strong positive bias for females on MC tests is inconsistent with earlier studies (Bible et al., 2008; Kuechler and Simkin, 2003; Gutek and Bikson, 1985), all of which found small gender differentials favoring males on MC tests, we consider the non-significant positive result for females an artifact of this particular data set. The lack of gender significance in fact reflects the meta-level inconsistency found for gender effects in prior studies.

Finally, we found that seven out of nine of the coefficients for our class (dummy) variables were statistically significant.
As noted earlier, this finding merely confirms our assertions that a myriad of factors differed from test to test, and therefore, between classes and semesters (for example, how hard each test was relative to the others).

Perhaps the most important statistic in our linear regression was the adjusted R² value of “.301.” This finding is consistent with similar models tested by Kuechler and Simkin (2003), who found a comparable value of “0.45” in a similar study of information systems students. The ultimate assessment of whether such a result is “good” or “bad” is subjective, but (for the reasons explained above) we expected a higher value.

Individual Class Regressions

To isolate the possible interactive effects of the differentiating factors caused by a different set of tests and students, the authors also performed regressions for each class separately, using only an intercept, MC, and gender as explanatory variables. Table 6 reports our findings.

As with the results in Table 4, most of the regression coefficients for these latter models were statistically significant. However, the actual estimation values differed from semester to semester, reflecting the differences in test questions, variations in teaching effectiveness, and different student compositions. Of particular interest were the coefficient estimates for the MC variable, which ranged in value from “.20” (for class 10) to “0.83” (for class 2).
Again, the positive signs of these estimates reinforce the intuitive notion that student performances on MC and CR questions are related to one another, and again, none of these estimates were statistically differentiable from a desirable coefficient value of “1.0,” the ideal value indicating that performances on MC and CR tests are perfectly synchronized.

We note that the regressions for five of the ten classes taken individually have adjusted R² values less than the summary regression containing all data points. We also note that “gender” was significant for only one class (Class 2). Both of these observations lend credibility to the results of the all-class regression (Table 4), which also reports a small R² value and a non-statistically significant gender coefficient (p = .229).

Two Additional Statistical Tests

To further examine the statistical properties of our sample data, we performed both a matched-pair test for the mean difference in scores and a Wilcoxon signed rank test for each of the 10 different classes in our sample. Again, we tested our class data separately in order to reduce the confounding effects potentially inherent in combining the data from different class subjects, varying amounts of course support, alternate instructors, and similar artifacts. Because we could not

TABLE 6
Estimates of Regression Coefficients for Individual Classes

| | Class 1 | Class 2 | Class 3 | Class 4 | Class 5 |
| --- | --- | --- | --- | --- | --- |
| Intercept | 33.17* | 15.39* | 27.34 | 70.48* | 51.25* |
| MC (%) | 0.77* | 0.83* | 0.69* | 0.21* | 0.53* |
| Gender | -1.08 | 6.65* | 2.30 | 2.30 | all female |
| Adjusted R² | 0.56 | 0.45 | 0.17 | 0.31 | 0.26 |
| df, F, p | 2, 59.77, | | | | |

scores is zero). The analyses in Table 7 suggest that only the data from classes 1, 5 and 8 show no significant differences. In all other classes, the t-test statistic was significant at an alpha level of .05.

Parametric tests require several assumptions regarding the normality of the distribution for the test statistic of interest, and are also sensitive to outliers whose magnitudes can overwhelm the conforming behavior of the remaining data. Accordingly, we also performed a series of Wilcoxon signed rank tests to overcome this potential problem in our ten sets of class data. Like a matched-pair test, a Wilcoxon test also computes the difference in each student’s CR and MC scores, but then assigns a rank order to each absolute difference. The test statistic is the mean of these ranks (after reassigning the positive or negative sign to each rank from the original difference). Differences of zero are removed from further consideration, explaining why the number of observations, n, differs for the same class in some rows of Table 7.

Under the null hypothesis that the MC and CR scores come from the same population, the average of these terms should cluster around zero. For each class tested, the results for the Wilcoxon signed rank test were consistent with the results of the paired t-test. Again, only classes 1, 5 and 8 were statistically insignificant at an alpha level of .05.

Our finding of statistical significance for the majority of our data somewhat contrasts with some of our regression results in terms of students performing consistently between the two test formats.
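The two procedures described above can be illustrated with a short, hedged sketch. It is not the authors' code: the function names and the five score pairs are hypothetical, tied absolute differences are assumed to share their average rank, and the Wilcoxon p-value relies on a normal approximation without tie correction (an assumption that is crude for very small samples). The sketch reports the sum of the signed ranks; dividing that sum by n gives the mean of the signed ranks mentioned in the text.

```python
import math
from statistics import NormalDist, mean, stdev
from typing import List, Sequence, Tuple


def paired_t_statistic(cr: Sequence[float], mc: Sequence[float]) -> Tuple[float, int]:
    """Matched-pair t statistic for the mean CR - MC difference, with its df."""
    diffs = [c - m for c, m in zip(cr, mc)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


def signed_ranks(cr: Sequence[float], mc: Sequence[float]) -> List[float]:
    """Rank the absolute non-zero differences, then restore each sign."""
    diffs = [c - m for c, m in zip(cr, mc) if c != m]  # zero differences dropped
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of tied absolute differences.
        while j + 1 < len(order) and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # ranks are 1-based; ties share the average
        for k in range(i, j + 1):
            ranks[order[k]] = math.copysign(avg_rank, diffs[order[k]])
        i = j + 1
    return ranks


def wilcoxon_signed_rank(cr: Sequence[float], mc: Sequence[float]) -> Tuple[float, float, int]:
    """Return (signed-rank sum W, two-sided p via normal approximation, n)."""
    ranks = signed_ranks(cr, mc)
    n = len(ranks)
    w = sum(ranks)
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 6)  # std. dev. of W under the null
    p = 2 * (1 - NormalDist().cdf(abs(w) / sd))
    return w, p, n


# Hypothetical percentage scores for five students; not data from the study.
cr_scores = [80, 75, 90, 60, 85]
mc_scores = [70, 75, 80, 65, 70]
t_stat, df = paired_t_statistic(cr_scores, mc_scores)
w_stat, p_value, n_used = wilcoxon_signed_rank(cr_scores, mc_scores)
print(f"paired t = {t_stat:.3f} on {df} df")
print(f"Wilcoxon W = {w_stat}, n = {n_used}, approx. p = {p_value:.3f}")
```

Note that the paired t statistic uses all five pairs, whereas the Wilcoxon computation drops the one pair with a zero difference, which is why n can differ between the two tests for the same class.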
The null hypothesis for both statistical tests reported in Table 7 is that the scores from the MC and CR portions of our student exams came from the same population. The statistical outcomes for seven out of ten tests performed here were that they did not; i.e., student performance on the separate assessment measures was meaningfully different. In fact, in our sample classes, students consistently performed better on the CR portions of their exams, with mean differences ranging from 3.9 percentage points to 19.7 points. We speculate that a student’s ability to earn partial credit on the CR portions of these tests may explain some of this.

DISCUSSION AND CAVEATS

Our statistical analysis suggests that a positive relationship exists between the MC and CR scores. This is to say that, while there may be differences in the magnitude of scores, the scores at least vary in a similar direction. The good news in this finding is the implication that using MC tests to measure understanding of a given subject area at least points in the right direction; i.e., those individuals who do well on MC tests are also likely to do well on CR tests, and vice versa. This is heartening news to certification test developers who seek exactly this relationship.

The results of both our matched-pair tests and our non-parametric tests are somewhat less encouraging, however, indicating that the MC and CR portions of each exam were not equivalent tests.
The MC portions of all these student tests, even after adjusting for “gender” and other potential differences in our sample classes, explained about 30 percent of the variation in the scores of the CR portions of those same tests. Certification test developers are likely to be disappointed that this statistic was not higher. It indicates that the independent variables of our model (particularly student performance on the MC portions of the sample examinations) explained a little less than one third of the variability of the dependent (CR) variable. To us, it also suggests that the MC and CR portions of these tests are testing different types of understanding, or perhaps testing the same understanding at different depths.

The reasons for using MC tests instead of CR tests are well documented, and the authors acknowledge the convenience of employing them on certification examinations. We would also be comfortable with such formats if we could show that there is a clear and consistent positive relationship between the two test formats. Our analysis, using data that were drawn from ten different accounting classes, suggests that this relationship, while positive, appears to be relatively weak. We are not alone in this finding: a number of scholars performing parallel tests on other student subjects and at other universities have reached the same conclusion in other disciplines (Becker and Johnston, 1999; Dufresne et al., 2002; Kuechler and Simkin, 2003).

This leads us back to the initial question posed in the title of this paper: “Why Use Multiple Choice Questions on Accounting Certification Examinations?” If the purpose of such examinations is to assess the professional qualifications of their test takers, we cannot defend their use and therefore have no answer to this question.

Caveats

In our analyses, we recognize that a host of factors may have led us astray. For example, any study is likely to have some sampling error. Here, we discuss some additional possibilities. One problem with any study using student participants is the possibility that its researchers have studied the wrong population. We recognize this possibility, but are not sure it applies here. After all, most certification exams require their test takers to complete a four-year degree in accounting or a related field at an accredited college; i.e., the test takers in the population from which our sample was drawn.
Nonetheless, sampling bias is still possible.

Another possible explanation for our lack of stronger results is the likelihood that the different test formats are not equivalent; i.e., the possibility that the MC questions and CR questions test different levels of knowledge and that such questions therefore require different skill sets of their test takers. We readily admit this possibility, and in fact embrace it. We do not want to prove that these test formats are different. We already believe that they are different. Rather, what we sought to find was a relationship between student performance on these two, very different types of tests. If we had found a strong connection, we could then take comfort in the fact that certification tests, if carefully constructed, can indeed rely solely on MC test formats to perform the assessment tasks desired of them. Empirically, however, we were disappointed that we did not find a closer relationship.

We also note that we are all individuals, and that most people are more comfortable taking one type of test than the other. Some students complain, for example, that a forthcoming test will only be multiple choice (a test format they feel they “don’t do well with”), while others express the opposite preference.
We acknowledge the possibility that our sample contained a preponderance of individuals with one disposition rather than the other; e.g., we happened to pick students who, say, liked MC tests. In such circumstances, the connection between performance on MC and CR tests would be tenuous, leading to the results we did, in fact, observe here. Given our large sample and ten classes, however, this possibility seems remote.

A similar comment applies to the potential for an ordering bias in our study, caused by the fact that the student examinations used in this study consistently placed the MC sections of the tests before the CR portions. At least two items mitigate this possibility. One is the fact that students were free to answer the questions in any order, and all students were given enough time to finish all parts of their examination. The other, more important one, is the fact that no students were asked to leave the exam room before they had finished the entire test.

We also recognize that certification examiners invest substantial resources in creating and validating their tests, and that the MC questions they contain are not necessarily equivalent to the ones we used in our analyses. We certainly believe that carefully-worded MC questions are better than ambiguous or poorly-worded ones. On the other hand, we have no reason to believe that the MC questions used here were different enough to invalidate our study results. Similarly, for test takers, we acknowledge that the motivation for studying and passing certification examinations may be greater than for passing college examinations, and that other differences in testing environments are also likely to be at work here.

Finally, we note that the theoretical advantages of CR examination questions in assessing deep understanding of subject matter can be diminished significantly by improper item composition or hurried or inadequate grading. However, proper item composition is a factor with MC examinations as well, and increases in impact at the higher levels of knowledge that might be targeted by such questions.
Moreover, we would hope that for those certification examinations composed by public certification groups and intended to be administered to multiple audiences over an extended period of time, both the composition of examination items and the grading of examinations would be at the highest levels.

SUMMARY AND CONCLUSIONS

There are many reasons why employers, employees, and the accounting profession itself all value professional certification, an esteem reflected by the large number of certification examinations available to individuals. Our review of such certification examinations found that almost all of them rely solely on multiple-choice questions for testing purposes, a format that has many grading advantages but little structural fidelity. For a wide variety of reasons, MC tests are themselves controversial and not universally accepted as the best assessment tools for measuring professional accounting competencies; constructed-response tests such as computational questions are usually preferred as theoretically superior metrics.

The question we sought to answer was whether there was any relationship between performance on these two types of tests.
In our empirical study of this relationship across a sample domain of 10 separate classes and nearly 450 students, we found a positive relationship between students' performance on the MC and CR portions of their tests, but not a particularly strong one. At best, what this means is that the two types of questions measure different, but perhaps useful, things. At worst, it means that MC questions only examine rudimentary levels of knowledge, or perhaps nothing other than the MC test-taking ability of the applicants.

Our inability to find a stronger relationship between CR and MC test formats does not mean that MC tests do not assess some form of knowledge. They almost certainly do. The issue is whether the knowledge tested by the less tractable, but perhaps more probing and structurally viable, CR tests can also be tested efficiently by MC tests.

What does all this mean to certification examinations? The findings from this study suggest that certification examinations based solely on MC questions may not be testing applicants at the same level as CR tests might. Of greater concern is the possibility that the final scores on such certification tests may actually be giving false signals about what applicants do or do not know. It is possible such tests may be denying some applicants the opportunity to demonstrate what they do know.
For these reasons, we encourage certification examiners to follow the lead of the CPA exam developers and include at least some CR questions on their tests.

