E-LETTER - IEEE Communications Society

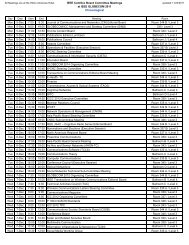

IEEE COMSOC MMTC E-Letter

IEEE MULTIMEDIA COMMUNICATIONS TECHNICAL COMMITTEE
E-LETTER
Vol. 4, No. 7, August 2009

CONTENTS

Message from Editor-in-Chief

HIGHLIGHT NEWS & INFORMATION
• IEEE ICC 2010 @ Cape Town, South Africa
• Message from the ICC 2010 TPC Co-Chairs (Chengshan Xiao, Missouri University of S&T, USA; Jan C. Olivier, University of Pretoria, South Africa)
• How to Submit Papers to ICC 2010

TECHNOLOGY ADVANCES

Distinguished Position Paper Series
• A Framework on Multimodal Telecommunications from a Human Perspective (Zhengyou Zhang (IEEE Fellow), Microsoft Research, USA)
• Trends in Mobile Graphics (Kari Pulli, Nokia Research Center Palo Alto, USA)
• Assessing 3D Displays: Naturalness as a Visual Quality Metric (Wijnand A. IJsselsteijn, Eindhoven University of Technology, The Netherlands)
• Three-Dimensional Video Capture and Analysis (Cha Zhang, Microsoft Research, USA)
• 3D Visual Content Compression for Communications (Karsten Müller, Fraunhofer Heinrich Hertz Institute, Germany)
• Interactive 3D Online Applications (Irene Cheng, University of Alberta, Canada)
• Editor’s Selected Paper Recommendation

Focused Technology Advances Series
• Distributed Algorithm Design for Network Optimization Problems with Coupled Objectives (Jianwei Huang, the Chinese University of Hong Kong, China)

MMTC COMMUNICATIONS & EVENTS
• Call for Papers of Selected Journal Special Issues
• Call for Papers of Selected Conferences

E-Letter Editorial Board

http://www.comsoc.org/~mmc/ Vol. 4, No. 7, August 2009

HIGHLIGHT NEWS & INFORMATION

IEEE ICC 2010 @ Cape Town, South Africa

IEEE ICC 2010 will be held on 23-27 May 2010 in Cape Town, South Africa. The city of Cape Town, voted one of the most beautiful cities in the world, welcomes conference attendees from all over the world. The theme of IEEE ICC 2010, "Communications: Accelerating Growth and Development," is specifically matched to the conference location, emphasizing the role that communications is playing in the rapid development of Africa.

As shown in the map above, Cape Town is located at the northern end of the Cape Peninsula. Table Mountain forms a dramatic backdrop to the City Bowl, with its plateau over 1,000 m (3,300 ft) high; it is surrounded by near-vertical cliffs, Devil's Peak and Lion's Head. Sometimes a thin strip of cloud forms over the mountain, and owing to its appearance, it is colloquially known as the "tablecloth". The peninsula consists of a dramatic mountainous spine jutting southwards into the Atlantic Ocean, ending at Cape Point. There are over 70 peaks above 300 m within Cape Town's official city limits.

At the tip of the Cape Peninsula you will find Cape Point within the Table Mountain National Park. The expansive Table Mountain National Park stretches from Signal Hill and Table Mountain in the north to Cape Point in the south and encompasses the seas and coastline of the peninsula. Within Cape Point, the treacherous cliffs forming the most southwestern tip of Africa are some of the highest in the world and mark the spot where the cold Benguela current on the West coast and the warm Agulhas current on the East coast merge.

Cape Town is also famous for its beaches, thanks to the city’s unique geography. It is possible to visit several different beaches in the same day, each with a different setting and atmosphere. Beaches located on the Atlantic Coast, for example Clifton beach, tend to have very cold water from the Benguela current, which originates from the Southern Ocean.

Cape Town is not only the most popular international tourist destination in South Africa; it is Africa's main tourist destination, overtaking even Cairo. This is due to its good climate, natural setting, and relatively well-developed infrastructure. Table Mountain is one of the most notable attractions. The top of the mountain can be reached either by hiking up or by taking the Table Mountain Cableway. Chapman’s Peak Drive, a narrow road that links Noordhoek with Hout Bay, also attracts many tourists for its views of the Atlantic Ocean and nearby mountains. It is possible to either drive or hike up Signal Hill for closer views of the City Bowl and Table Mountain.

Cape Town is noted for its architectural heritage, with the highest density of Cape Dutch style buildings in the world. Cape Dutch style combines the architectural traditions of the Netherlands, Germany and France.

Cape Town is also a host city for the 2010 FIFA World Cup Soccer tournament, which starts just after the conference ends.

If you have not yet started to plan your family’s vacation for 2010, please do consider Cape Town. Remember to have your papers (or proposals) ready as soon as possible for ICC 2010, following the deadlines below:

• Paper Submission: September 10, 2009
• Tutorial Proposal: September 10, 2009
• Panel Proposal: August 28, 2009

See you in Cape Town next May!

IEEE ICC 2010 @ Cape Town, South Africa
Communications: Accelerating Growth and Development!!!

Message from the ICC 2010 TPC Co-Chairs

Chengshan Xiao, Missouri University of S&T, USA
Jan C. Olivier, University of Pretoria, South Africa

The flagship conference in communications, the IEEE International Conference on Communications (IEEE ICC 2010), will be held in Cape Town, South Africa, from 23 to 27 May 2010, just before the FIFA World Cup Soccer tournament hosted by South Africa in 2010. IEEE ICC 2010 will feature a comprehensive technical program including 11 symposia and a number of tutorials and workshops. IEEE ICC 2010 will also feature an attractive program including keynote speakers, business and technology panels, and industry forums.

Prospective authors are invited to submit original papers by the deadline of 10 September 2009 for publication in the IEEE ICC 2010 Conference Proceedings and presentation at the symposia. For detailed information about each symposium and the submission guidelines, please visit our website at http://www.ieee-icc.org/2010/.

The technical program committee (TPC), including the symposium co-chairs and their invited TPC members, is committed to providing a comprehensive peer review and selection process to further raise the quality of the conference. All papers accepted and presented at the conference will be published online via IEEE Xplore.

We encourage everyone in this community to participate in the conference by submitting papers and proposals for symposia, tutorials and workshops, and most importantly by attending the conference in beautiful and exotic Cape Town, where you will have a wide range of exciting options to add to your conference tour: hikes up the famous Table Mountain; beautiful white sandy beaches; whale watching; tours of the Cape Winelands; historic Robben Island, where Nelson Mandela was held for 27 years; game reserves with safaris and the Big Five; and even Cape Point, with its penguin inhabitants, where the Atlantic and Indian Oceans meet.

IEEE ICC 2010 will be the first Communications Society flagship conference to be held on the African continent. The organizing committee has been working diligently to make sure that IEEE ICC 2010 will be a great conference. See you in Cape Town.

Thank you and best regards,
Chengshan Xiao and Jan C. Olivier
IEEE ICC 2010 Technical Program Co-Chairs

How to Submit Papers to ICC 2010

For ICC 2010, the Multimedia Services, Communication Software and Service Symposium (MCS) is the ONLY symposium that is fully sponsored by MMTC. We therefore encourage all our members to submit their multimedia-related papers to the MCS symposium, where a Co-Chair and many TPC members recommended by MMTC will handle the entire review process for multimedia-related paper submissions.

Here are the steps to submit your papers to ICC 2010:

(1) Go to the EDAS website, http://edas.info/index.php, and sign in to your account;
(2) Click the “Submit paper” tab at the top of the webpage;
(3) In the list of conferences, find “ICC’10” and click the icon in the rightmost column;
(4) In the list of ICC’10 symposia, select “ICC’10 MCS” and click the icon in the rightmost column;
(5) Start the normal paper submission process.

TECHNOLOGY ADVANCES

Distinguished Position Paper Series

A Framework on Multimodal Telecommunications from a Human Perspective

Zhengyou Zhang (IEEE Fellow), Microsoft Research, USA
zhang@microsoft.com

In this position paper, I argue for researching and developing multimodal telecommunications systems from the perspective of the users, who are communication creatures. Humans' innate communication skills are the natural consequence of hundreds of thousands of years of evolution. We should follow and leverage our own skills rather than trying to change our behaviors.

One of the major goals of communications is to get messages across to people on the other side. There are a variety of messages in any face-to-face communication. However, one can basically find three elements, known as the “3 Vs”, behind each message:

• Verbal: Words, what you say;
• Vocal: Tone of voice, how you say the words;
• Visual: Facial expression, gaze, body language.

The second and third elements are sometimes simply referred to as non-verbal elements. Prof. Albert Mehrabian conducted experiments dealing with communications of feelings and attitudes (i.e., like-dislike) in the 1960s and found that the non-verbal elements are particularly important for communicating feelings and attitudes, especially when the elements are incongruent: if words and body language disagree, one tends to believe the body language (Mehrabian, 1981). More concretely, according to Mehrabian, when a person talks about their feelings or attitudes, the three elements account for 7%, 38% and 55%, respectively. The exact numbers might be arguable depending on experimental settings, but clearly, non-verbal messages are extremely important, as confirmed by other researchers (Argyle, Salter, Nicholson, Williams, & Burgess, 1970).

Communications across distances are much harder, and in our human history, many tools have been invented to overcome this difficulty:

• Letters: Verbal
• Telephony: Verbal + Vocal
• Videoconferencing: Verbal + Vocal + Visual

Videoconferencing leverages all 3 Vs. So, are people satisfied with the videoconferencing systems we have? Obviously, the answer is no.

Let’s take a look at the visual aspect of some non-verbal behaviors in face-to-face communications:

• Facial expressions
• Eye gaze
• Eye gestures
• Head gaze
• Head gestures
• Arm gestures
• Body postures

How much can a current videoconferencing system convey? Not much: mostly facial expressions and head gestures. Other behaviors are lost. Even if facial expressions and head gestures are displayed on the video, without other elements, say eye gaze, one may get an imprecise or even wrong perception (who is she really smiling at?).

In the area of audio, there is also much to be desired. Current conferencing systems (audio only or audio-visual) are essentially monaural. When multiple people are involved in conferencing, one major problem is that a participant at one end has difficulty identifying who is talking at the other end and comprehending what is being discussed. The reason is that the voices of multiple participants are intermixed into a single audio stream. This is in sharp contrast to face-to-face communications, where sounds come from all directions and humans can perceive the direction and distance of a sound source using two ears. Human auditory systems exploit cues such as the interaural time difference (ITD) and the interaural level difference (ILD), which arise from the distance between the two ears and the shadowing by the head (Blauert, 1983).
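To make the ITD cue concrete, here is a minimal sketch that estimates it with Woodworth's spherical-head approximation, ITD ≈ (a/c)(θ + sin θ). The choice of this formula, the head radius of 8.75 cm, and the speed of sound of 343 m/s are assumptions made for illustration and are not taken from this article; the function name is hypothetical.

```python
import math

HEAD_RADIUS_M = 0.0875       # assumed average head radius (not from the article)
SPEED_OF_SOUND_M_S = 343.0   # assumed speed of sound in air at about 20 °C


def interaural_time_difference(azimuth_deg):
    """Approximate ITD in seconds for a distant source at the given azimuth.

    0 degrees is straight ahead, 90 degrees is directly to one side.
    Uses Woodworth's spherical-head formula, applied here for 0..90 degrees.
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth must be within 0..90 degrees")
    theta = math.radians(azimuth_deg)
    # Extra path to the far ear: a * theta around the head plus a * sin(theta).
    return (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))
```

Under these assumptions the ITD is zero for a source straight ahead and grows to roughly 0.65 ms for a source directly to one side. A monaural stream carries no such difference between the ears, which is one way to see why talkers mixed into a single channel are hard to tell apart.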

Another important aspect to consider is attention, which is the cognitive process of selectively concentrating on one aspect of the environment while ignoring other things. For example, humans have the ability to focus their listening attention on the voice of a particular talker in an environment in which many others are speaking at the same time, known as the cocktail party effect (Bregman, 1990). For effective communication, the listener must pay attention and the speaker must react to where the listener’s attention is. In face-to-face communication, the bandwidth between people can be considered boundless, but a person can only consume a certain amount of information because attention is limited. In telecommunication, bandwidth is limited, and it is even more crucial to be aware of each participant’s attention and deliver only the information important to the participants. Decades ago, Herbert Simon articulated this very nicely: “...in an information-rich world, the wealth of information means a dearth of something else: a scarcity of whatever it is that information consumes. What information consumes is rather obvious: it consumes the attention of its recipients. Hence a wealth of information creates a poverty of attention and a need to allocate that attention efficiently among the overabundance of information sources that might consume it” (Simon, 1971). When we build telecommunications systems, we should consider attention scarcity as a design requisite, and filter out unimportant or irrelevant information. With the rapid growth of bandwidth capacity and the decrease in cost and increase in quality of audio-visual capture devices, it is all too easy to cause information overload.

Below is a diagram illustrating the working of current telecommunication systems. The information flows from the source (here Site 1) to the destination (here Site 2). Filter H(R) represents a person’s attention and sensory limitation. The person at Site 2 has his/her own expectation of what he/she wants. However, capture devices, processing algorithms and rendering equipment are pre-designed to meet what the designer assumes that expectation to be. The information the person at Site 2 actually obtains usually does not meet his/her expectation.

Below is a diagram illustrating a new framework I am proposing. The capture devices, processing algorithms and rendering equipment should be designed to reflect the receiver’s expectation and attention in real time.

There are a number of challenges in this framework:

• How to model users’ expectation?
• How to determine users’ attention?

• How to accommodate the deficiency of human perception?
• How to incorporate users’ expectation and attention in each component?
• Real-Time! Real-Time!! Real-Time!!!

There have been several efforts in this direction. One example is boardroom high-end videoconferencing systems such as HP Halo and Cisco TelePresence. My research group has been working on a number of projects. One is audio spatialization to leverage the cocktail party effect. We have done audio spatialization over headphones (Chen & Zhang, 2009) as well as over loudspeakers (Zhang, Cai, & Stokes, 2008). For the latter, we have to address the issue of multichannel acoustic echo cancellation. Another is the Personal Telepresence Station (Knies, 2009), which provides the correct gaze cues in multiparty conferencing through video spatialization. We also work on 3D videoconferencing to make conferencing visually more immersive. A lot more, however, needs to be done; this is an exciting area, and now is the right time to work on it.

Acknowledgment

Many ideas in this paper were presented in an ICME 2006 panel and in an ImmersCom 2009 panel.

Bibliography

[1] Argyle, M., Salter, V., Nicholson, H., Williams, M., & Burgess, P. (1970). The communication of inferior and superior attitudes by verbal and non-verbal signals. British Journal of Social and Clinical Psychology, 9, 222-231.
[2] Baldis, J. (2001). Effects of spatial audio on memory, comprehension, and preference during desktop conferences. Proceedings of the CHI Conference on Human Factors in Computing Systems (pp. 166-173). Seattle, USA: ACM Press.
[3] Blauert, J. (1983). Spatial Hearing. MIT Press.
[4] Bregman, A. (1990). Auditory Scene Analysis. MIT Press.
[5] Chen, W.-g., & Zhang, Z. (2009). Highly realistic audio spatialization for multiparty conferencing using headphones. IEEE International Workshop on Multimedia Signal Processing (MMSP'09).
[6] Knies, R. (2009, March 9). Making Virtual Meetings Feel Real. Retrieved from Microsoft Research: http://research.microsoft.com/en-us/news/features/personaltelepresencestation-030909.aspx
[7] Mehrabian, A. (1981). Silent messages: Implicit communication of emotions and attitudes (2nd ed.). Belmont, California: Wadsworth.
[8] Moore, B. C. (2003). An Introduction to the Psychology of Hearing (5th ed.). Academic Press.
[9] Simon, H. A. (1971). Designing Organizations for an Information-Rich World. In M. Greenberger, Computers, Communication, and the Public Interest (pp. 40-41). The Johns Hopkins Press.
[10] Zhang, Z., Cai, Q., & Stokes, J. (2008). Multichannel Acoustic Echo Cancelation in Multiparty Spatial Audio Conferencing With Constrained Kalman Filtering. Proc. International Workshop on Acoustic Echo and Noise Control (IWAENC'08).

Zhengyou Zhang (SM’97–F’05) received the B.S. degree in electronic engineering from the University of Zhejiang, Hangzhou, China, in 1985, the M.S. degree in computer science from the University of Nancy, Nancy, France, in 1987, and the Ph.D. degree in computer science and the Doctor of Science (Habilitation à diriger des recherches) diploma from the University of Paris XI, Paris, France, in 1990 and 1994, respectively.

He is a Principal Researcher with Microsoft Research, Redmond, WA, USA, and manages the multimodal collaboration research team. Before joining Microsoft Research in March 1998, he was with INRIA (French National Institute for Research in Computer Science and Control), France, for 11 years and was a Senior Research Scientist from 1991. In 1996-1997, he spent a one-year sabbatical as an Invited Researcher with the Advanced Telecommunications Research Institute International (ATR), Kyoto, Japan. He has published over 200 papers in refereed international journals and conferences, and has coauthored the following books: 3-D Dynamic Scene Analysis: A Stereo Based Approach (Springer-Verlag, 1992); Epipolar Geometry in Stereo, Motion and Object Recognition (Kluwer, 1996); and Computer Vision (Science Publisher, 1998, 2003, in Chinese). He has given a number of keynotes at international conferences.

Dr. Zhang is an IEEE Fellow, the Founding Editor-in-Chief of the IEEE Transactions on Autonomous Mental Development, an Associate Editor of the International Journal of Computer Vision, an Associate Editor of the International Journal of Pattern Recognition and Artificial Intelligence, and an Associate Editor of Machine Vision and Applications. He served as an Associate Editor of the IEEE Transactions on Pattern Analysis and Machine Intelligence from 2000 to 2004 and as an Associate Editor of the IEEE Transactions on Multimedia from 2004 to 2009, among others. He has organized or participated in organizing numerous international conferences. More details are available at http://research.microsoft.com/~zhang/.

Trends in Mobile Graphics

Kari Pulli, Nokia Research Center Palo Alto, USA
kari.pulli@nokia.com

Mobile handheld devices such as mobile or cellular phones, PDAs, and PNDs (personal navigation devices) are the new frontier of computer graphics, bringing graphics to everyone and everywhere. The mobile phone is the most widely used electronic device of any kind, and the current high-end phones have as much processing power as a typical laptop had perhaps 7 years ago. It is the latest incarnation in the progression toward more compact computing, starting from mainframes and moving on to minicomputers, PCs, laptops, and finally smartphones: phones where users can install new applications and that are almost general-purpose computers.

Around the turn of the millennium, when the first mobile graphics systems were being created, there were in practice only two target applications: games, and so-called screen savers showing simple animations. Games still remain an important application area, but there are others that are currently even more important [2]. The most important of them is graphical user interfaces (GUIs), followed by navigation applications. The demand from these applications drives development of new APIs and mobile graphics hardware, which in turn enable interesting new areas such as 3D web browsers and augmented reality (AR) applications.

User interfaces

The early user interfaces on mobile devices were simple and text-based. As the displays got better, more eye candy was added in the form of application icons and simple animations. Now that touch displays allow most of the device’s front surface to be a display, so that less real estate needs to be reserved for buttons, people have come to expect beautiful graphics and fast transitions, as first demonstrated by the iPhone. Such large displays increasingly require dedicated graphics hardware, as software-based graphics engines are not fast enough, or are too power-hungry, to drive real-time graphics on large displays.

There are two main APIs for mobile GUIs, OpenVG [4] and OpenGL ES [11, 8]. OpenVG was created both for presentation graphics, such as SVG (scalable vector graphics), PDF, Flash, and PowerPoint, and for user interfaces. Even though it is a 2D API, it can be used for 3D-like graphics, as long as the 3D surfaces consist of a relatively small number of flat polygons. Although all 2D APIs allow the application to project the polygon corners from 3D to 2D, most 2D APIs do not support perspective transformations of the images used to texture map the polygons; OpenVG does provide that support. The other possibility is to use OpenGL ES to draw the UI graphics, even if the UI is not really 3D. Currently most UIs are really 2D; although they may have some 3D appearance and show some 3D objects, the graphics hardware is mainly needed to provide smooth animated transitions. In fact, even though researchers have created several 3D UIs, none of them have caught on, since 2D UIs are easier to use and navigate. OpenVG can be emulated on OpenGL ES 2.0 hardware, but not as power-efficiently as when the hardware design is originally targeted for OpenVG.

Maps and navigation

PNDs (personal navigation devices) have become ubiquitous as they have become cheaper. Even though it may be fun to view maps and plan trips on a desktop computer, one has the greatest need for navigation instructions while traveling, be that by car, by bicycle, or on foot. Most new smartphones also come with built-in GPS (global positioning system) receivers, maps, and navigation routing services, some even with a compass to facilitate orienting the maps correctly.

The first maps consisted of simple bitmap images. The current trend, however, is to move to using vector graphics such as SVG to render the maps.
Maps are naturally viewed at different levels of resolution, especially on small displays, and with vector graphics every scale can be rendered neatly without scaling artifacts, and without having to store or transmit new pixel data at every different resolution level.

The next trend is to make the maps 3D [9]. The easiest 3D feature to add is topography, rendering geographic structures such as mountains and hills. The next step is to add 3D models of visible landmarks, such as the Eiffel Tower in Paris or Big Ben in London. As one continues to increase the realism of the displays, many buildings can be cleanly and compactly represented in a procedural form, such that only the ground extent, building height, and some style and color parameters have to be stored, and a 3D representation is created from the description on the fly. Full realism may not even be a desirable target in a navigation system, as it may distract the user more than aid her. Symbolic representations with simple, clear lines, together with clear audio instructions, may be better choices, especially when one is driving a car and needs to make quick decisions about where to drive.

Games

The first mobile games had very simple graphics. Snake was a very popular mobile phone game, in which the snake grows in length as one captures more points, and becomes more difficult to maneuver without the head hitting a wall or the snake itself and thus ending the game. With color displays came various sports and rally games, and today games of almost any genre are available on mobile devices.

Before standard mobile 3D APIs, each game came with its own proprietary software rendering engine. Even the launch of OpenGL ES 1.0 and 1.1 didn’t change the situation much, as a special engine is always faster than a generic one. As graphics hardware began to appear, the games began to use standard APIs, and soon proprietary engines will disappear as OpenGL ES 2.0, which allows a new level of visual richness, becomes widely available.

While native games (written in C or C++) are very closely tied to a particular device family, and even to an individual model, mobile Java aims for application portability across different device manufacturers. M3G (JSR-184) was defined to be compatible with OpenGL ES so that graphics hardware developed for OpenGL ES could be leveraged for M3G [11]. In addition to basic rendering, the API supports features useful in game engines such as scene graphs, sophisticated animation, and a compact file format for 3D content. While fast-paced graphics-rich action games tend to be native and use OpenGL ES, far more games have been written with the more portable M3G [1]. Just as OpenGL ES 2.0 allows shaders for native applications, M3G 2.0 (JSR-297) allows the use of shaders from mobile Java applications.

Web and browsers

The mobile web used to be, and had to be, different from the desktop web experience, as the mobile data bandwidth and the screen sizes were both vastly smaller than on a desktop or laptop PC. This is now rapidly changing, and new smartphones have very complete web browsers providing a “one-web” experience, where the web is experienced in essentially the same way on any device. This is one of the new drivers for graphics performance on mobile phones.

Another recent development is the rebirth of the idea of 3D graphics on the web. VRML, and later X3D, attempted to be standard 3D descriptions for web content, but they never really took off. There were also proprietary 3D engines running in browsers (e.g., Macromedia’s Shockwave), but they didn’t succeed either. The Khronos Group has a new working group for 3D Web, where they are creating essentially OpenGL ES 2.0 bindings to JavaScript. Most major browsers are represented in the working group, and the new standard may finally bring hardware-accelerated 3D graphics to both mobile and desktop browsers.

Augmented Reality

Augmented Reality (AR) enhances or augments the user’s sensations of the real world around her, typically by rendering additional objects, icons, or text that relate to the real objects. Whereas traditional AR systems tended to have wearable displays (data goggles), mobile AR systems capture the video stream from the camera on the rear side of the handheld device and show the augmented image on the display, providing a “magic lens”. A use case for an AR system could be a tourist guide helping the user to navigate to a destination and labeling objects of interest, or an educational system pointing to some device’s controls in the correct sequence, together with operating instructions. AR is a class of graphics applications that makes much more sense in a mobile setting than at one’s desk with a desktop PC.

A key enabler for AR is image recognition capability, so that the system knows what is visible, and also where in the camera's field of view the object is located, so that it can be annotated or augmented. A secondary tool is efficient tracking, so that the labels, arrows, icons, etc., remain attached to the correct objects even when the user moves the camera around [5, 12]. Recent advances in computer vision algorithms, together with the increased capabilities of camera phones equipped with other sensors such as GPS and compass, allow simple mobile AR applications to run in real time. There are demos and startups that provide information overlay using only GPS and compass [6, 7], and others that also use cameras to recognize targets [10]. Some simple AR games only require simple motion tracking: in Mosquito Hunt from Siemens, virtual mosquitos drawn over the background of a camera image seem to attack the user, who tries to get them into a cross hair and zap them first.

GPUs and GPGPU

The driving force behind desktop graphics processing units (GPUs) has been increasing performance in terms of speed. While speed is important also for mobile GPUs, even more important is low power consumption. Their design differs from that of desktop GPUs; for example, mobile GPUs can’t afford to gain performance by simply adding more parallel processing. The first generation of mobile GPUs was designed for the fixed functionality of OpenGL ES 1.x; the second generation allows the use of the vertex and fragment shaders of OpenGL ES 2.0.

As desktop GPUs got more powerful, programmers wanted to use them also for other calculations. General-purpose processing on GPUs, also known as GPGPU, uses graphics hardware for generalized number crunching. GPGPU is now also becoming attractive on mobile devices. Many image processing and computer vision algorithms can be mapped to OpenGL ES 2.0 shaders, benefiting from the speed and power advantages of GPUs. Unfortunately, the second generation of mobile GPUs was designed only for graphics processing and does not support efficient transfer of data from the GPU back to the CPU. Careful scheduling of processing may allow data readback from a previous image to proceed while the next image is being processed, reducing the transfer overhead.
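The benefit of overlapping readback with processing can be estimated with simple arithmetic. The sketch below is a timing model only: it assumes a fixed per-image GPU processing time and a fixed per-image readback time, and it assumes that the readback of one image can fully overlap the processing of the next. The function names and the millisecond figures in the note that follows are hypothetical and are not measurements from any device.

```python
def serial_time_ms(frames, process_ms, readback_ms):
    """Total time when each image is processed and then read back
    before work on the next image starts."""
    return frames * (process_ms + readback_ms)


def pipelined_time_ms(frames, process_ms, readback_ms):
    """Total time when the readback of image i overlaps the processing
    of image i + 1 (two buffers in flight)."""
    if frames == 0:
        return 0.0
    # The first image must be processed before any readback can start,
    # and the last readback has nothing left to overlap with. In between,
    # each step takes as long as the slower of the two stages.
    return process_ms + (frames - 1) * max(process_ms, readback_ms) + readback_ms
```

For 100 images at 10 ms of processing and 6 ms of readback each, this model gives 1600 ms in the serial case and 1006 ms in the pipelined case, so under these assumptions the readback cost is almost entirely hidden behind processing. When readback is slower than processing, the transfer becomes the bottleneck and overlapping helps less.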
With the advent of OpenCL (Open Computing Language) [3], a GPGPU API similar to NVIDIA's CUDA except that it is vendor-independent and can run on CPUs and DSPs in addition to GPUs, the third generation of mobile GPUs will have better support for efficient data transfer. OpenCL will allow better portability for many image processing algorithms both on desktop and on mobile devices.

REFERENCES

[1] M. Callow, P. Beardow, and D. Brittain, “Big Games, small screens”, ACM Queue, Nov./Dec., pp. 2-12, 2007.
[2] T. Capin, K. Pulli, and T. Akenine-Möller, “The State of the Art in Mobile Graphics Research”, IEEE Computer Graphics and Applications, vol. 28, no. 4, pp. 74-84, 2008.
[3] Khronos OpenCL Working Group, A. Munshi Ed., “The OpenCL Specification, Version 1.0, Rev. 43”, Khronos Group, USA, May 2009. http://www.khronos.org/opencl/
[4] Khronos OpenVG Working Group, D. Rice and R. Simpson Eds., “OpenVG Specification, Version 1.1”, Khronos Group, USA, Dec. 2008. http://www.khronos.org/openvg/
[5] G. Klein, “PTAM + AR on an iPhone”, http://www.youtube.com/watch?v=pBI5HwitBX4
[6] Layar: http://layar.eu/
[7] MARA: http://www.technologyreview.com/Biztech/17807/
[8] A. Munshi and D. Ginsburg, “OpenGL ES 2.0 Programming Guide”, Addison-Wesley Professional, 2008.
[9] A. Nurminen, “Mobile 3D City Maps”, IEEE Computer Graphics and Applications, vol. 28, no. 4, pp. 20-31, 2008.
[10] Point&Find: http://pointandfind.nokia.com
[11] K. Pulli, T. Aarnio, V. Miettinen, K. Roimela, and J. Vaarala, “Mobile 3D Graphics with OpenGL ES and M3G”, Morgan Kaufmann, 2006.
[12] D. Wagner, “High-speed natural feature tracking”, http://studierstube.icg.tugraz.ac.at/handheld_ar/highspeed_nft.php

Kari Pulli received his Ph.D. in Computer Science from the University of Washington in Seattle, WA, USA, and an MBA from the University of Oulu, Finland. He is currently a Research Fellow at Nokia Research Center in Palo Alto, CA (http://research.nokia.com/people/kari_pulli/). He has been an active contributor to several Khronos standards, a Research Associate at Stanford University, an Adjunct Professor (Docent) at the University of Oulu, and a Visiting Scientist at MIT, and has briefly worked at Microsoft, SGI, and Alias|Wavefront.

Assessing 3D Displays: Naturalness as a Visual Quality Metric
Wijnand A. IJsselsteijn, Eindhoven University of Technology, The Netherlands
w.a.ijsselsteijn@tue.nl

Binocular vision is based on the fact that objects and environments are seen from two separate vantage points, created by the interocular distance between the two eyes. This horizontal separation causes an interocular difference in the relative projections of monocular images onto the left and right retinas. When points from one eye's view are matched to corresponding points in the other eye's view, the retinal disparity variation across the image provides information about the relative distances and depth structure of objects. Stereopsis thus acts as a strong depth cue, particularly at short distances [1].

Stereoscopic display techniques are based on the principle of taking two images and displaying them in such a way that the left view is seen only by the left eye, and the right view only by the right eye. There are a number of ways of achieving this [2, 3, 4]. Stereoscopic displays can be categorized based on the technique used to channel the right and left images to the appropriate eyes. A distinguishing feature in this regard is whether the display method requires a viewing aid (e.g., polarized glasses) to separate the right and left eye images. Stereoscopic displays that do not require such a viewing aid are known as autostereoscopic displays. They have the eye-addressing techniques completely integrated into the display itself.
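The relation between depth and retinal disparity described at the start of this article can be made concrete with a little geometry. The sketch below is illustrative only: it considers points straight ahead of the viewer, and the interocular distance of 0.065 m and the function names are assumptions, not values from the article.

```python
import math


def vergence_angle(distance_m, interocular_m=0.065):
    """Angle (radians) between the two lines of sight to a point
    straight ahead at the given distance."""
    return 2.0 * math.atan(interocular_m / (2.0 * distance_m))


def relative_disparity(near_m, far_m, interocular_m=0.065):
    """Angular disparity (radians) between two points on the midline
    at different depths: the difference of their vergence angles."""
    return (vergence_angle(near_m, interocular_m)
            - vergence_angle(far_m, interocular_m))


if __name__ == "__main__":
    # The same 10% step in depth, seen at increasing viewing distances.
    for near in (0.5, 2.0, 10.0):
        disparity = relative_disparity(near, 1.1 * near)
        arcmin = math.degrees(disparity) * 60.0
        print(f"{near:5.1f} m -> {arcmin:.2f} arcmin")
```

Under these assumptions the disparity produced by the same relative depth step shrinks as the viewing distance grows, which is consistent with stereopsis being most useful at short distances.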
Other distinguishing features are whether the display is suitable for more than one viewer (i.e., allows for more than one geometrically correct viewpoint), and whether look-around capabilities are supported, a feature inherent to a number of autostereoscopic displays (e.g., holographic or volumetric displays), but which requires some kind of head-tracking when implemented in most other stereoscopic and autostereoscopic displays.

Potential benefits of stereoscopic displays include an improvement of subjective image quality, improved separation of an object of interest from its visual surrounding, and better relative depth judgment and surface detection. Such characteristics make the use of stereoscopic displays advantageous to a variety of fields, including camouflage detection (e.g., stereoscopic aerial photography), precision manipulation in teleoperation or reduced vision environments (e.g., minimally invasive telesurgery, undersea operations), as well as communication and entertainment environments through creating a greater sense of social or spatial presence [5].

The effectiveness of stereoscopic displays in supporting certain tasks can be assessed in a relatively straightforward manner, by taking certain quantitative outcome measures (e.g., number of errors, time taken to successfully complete a task) and comparing monoscopic and stereoscopic modes of operation in terms of such measures. Such a performance-oriented approach will not be applicable, however, when stereoscopic displays are being developed and deployed for entertainment purposes, such as television or digital games.
In an appreciation-oriented context, the standard assessment criterion that is applied to assess the fidelity of image creation, transmission and display systems is image quality [6].

Engeldrum [7] has defined image quality as “the integrated set of perceptions of the overall degree of the excellence of the image” (p. 1). Image quality research has traditionally focused on determining the subjective impact of image distortions and artifacts that may occur as a consequence of errors in image capture, coding, transmission, rendering, or display. In stereoscopic imaging, image quality research has focused on effects of (i) stereoscopic image compression, (ii) commonly encountered optical errors (image shifts, magnification errors, rotation errors, keystone distortions), (iii) imperfect filter characteristics (luminance asymmetry, color asymmetry, crosstalk), and (iv) stereoscopic disparities (in particular vertical disparities as a consequence of toed-in camera configurations). More recently, with the advent of the RGB+Z format [8], new image artifacts have been introduced that require more extensive quality evaluations.

The International Telecommunication Union (ITU) offers a series of recommendations (standards) for the optimal assessment of picture quality of television images. Two recommendations are of specific importance to us here. The first, ITU Recommendation BT.500-11, Methodology for the Subjective Assessment of the Quality of Television Pictures, describes the proper application of several scaling techniques for assessing the quality of television images (e.g., double-stimulus continuous quality-scale (DSCQS) method, double-stimulus impairment scale (DSIS) method). The recommendation includes descriptions of the general setting, presentation of test materials, viewing parameters, observer characteristics, grading scales, and analysis of results.

The second recommendation of interest in this context is BT.1438, Subjective Assessment of Stereoscopic Television Pictures. This recommendation describes the assessment factors that are peculiar to stereoscopic television systems, including depth resolution, depth motion, puppet theatre effect, and cardboard effect. The same quality assessment method as described in BT.500-11 is recommended for use in the case of evaluating stereoscopic television pictures. These recommendations enable engineers to optimize and enhance their systems and to evaluate competitive systems.

Many studies report a clear preference for stereoscopic images over their 2D counterparts [5, 9-11]. So, the introduction of the third dimension clearly contains an added value. However, over recent years, several experiments have pointed in the direction that image quality may not be the most appropriate term to capture the evaluation processes associated with 3D images [5, 9, 12]. Tam et al. [9] reported a low correlation between image quality and depth, indicating that when observers are asked to assess perceived image quality of MPEG-2 compressed images, the scores are mainly determined by the introduced impairments and not by depth. More recently, Seuntiëns et al. [12] showed that JPEG coding artifacts degrade the image quality, but that different camera base distances (CBD) do not influence the image quality.
As IJsselsteijn [5] has argued, it appears that the added value of depth in 3D images compared to 2D images can clearly be identified by image quality evaluations as long as the pictures are not impaired with image artifacts such as noise, blockiness or blur. When the image is impaired, the quality assessment becomes more dependent on the grade of the impairment and much less on the depth dimension. Taken together, these results point towards the need for additional assessment criteria or terms that allow researchers to measure the added value of depth, even when images contain artifacts or distortions due to compression, conversion, rendering, or display.

At the 3D Interaction, Communication and Experience (3D/ice) Lab of Eindhoven University of Technology, we are running an extensive research programme investigating a broad set of human factors issues associated with 3D displays, including work on assessing and minimizing visual (dis)comfort associated with (auto)stereoscopic displays, quantifying performance benefits of the use of 3D displays in various medical domains, and assessing the effectiveness of stereoscopic displays in supporting (non-verbal) communication in immersive teleconferencing. One of the longstanding lines of research at our lab has been the assessment of stereoscopic displays from an appreciation-oriented perspective, which includes work on image quality and other candidate metrics that are potentially able to more fully characterize the visual experience associated with 3D displays (see, e.g., [13]). In the recent past, two specific evaluation terms, viewing experience and naturalness, have shown promising results [14].

We have recently completed a series of three experiments where we systematically investigated the sensitivity of image quality, naturalness and viewing experience in relation to the introduction of stereoscopic disparity (i.e., measuring the added value of depth) as well as the introduction of image artifacts.
The experiments cover multiple display types (stereoscopic and autostereoscopic), multiple image distortions (blur and noise), and a variation in image content and stereoscopic disparity settings. The figure below shows some representative results from the series of experiments. Participants were asked to provide an assessment of a number of stereoscopic stills, which varied in disparity and image distortion. In the figure below, noise (4 levels of Gaussian white noise) is plotted along the x-axis, and the various assessment scores (Depth, Image Quality, Naturalness and Viewing Experience) are plotted along the y-axes in separate figures. Disparity is varied as a function of the camera-base distance (CBD) and plotted as three separate graphs in each figure (0, 4, and 8 cm). Note that a CBD of 0 cm equals the monoscopic viewing condition.

The results demonstrate that, first, Image Quality, Naturalness and Viewing Experience appear to be equally (and predictably) sensitive to the introduction of an image artifact, in this case noise level. However, only Naturalness and Viewing Experience also show a differentiation between the monoscopic condition on the one hand and the two stereoscopic conditions on the other. Image Quality, although consistently sensitive to image artifacts, does not account for the added value that stereoscopic modes of viewing bring to the total visual experience. Whereas the results for Viewing Experience are a little more erratic, Naturalness consistently shows a stereo-advantage, in addition to sensitivity to image artifacts.

Although traditionally image quality has been suggested to be a sufficient overall fidelity metric, published literature as well as the findings from our experiments suggest that this concept does not adequately address the added value of depth introduced by the stereoscopic 3D effect. Our experimental work has been aimed at investigating the sensitivity of potential alternatives to image quality as an indication of “overall degree of the excellence of the image” [7]. The robust finding emerging throughout our work, including the experiment discussed in this paper, is that the naturalness concept is more likely to be sensitive to the 3D effect than image quality.

Our findings are crucial for a fair evaluation of 3D displays against 2D benchmarks, and to improve our understanding of 2D versus 3D tradeoffs. Stereoscopic display technology frequently employs techniques for image separation (e.g., temporal multiplexing, spatial multiplexing) which come at a cost of monoscopic image quality. In order to make a convincing case for stereoscopic displays, one needs to be able to quantify what the added value of a 3D stereoscopic image is, over and above the monoscopic rendition of the same image, even when the image quality of each monoscopic image has been compromised in order to render the image in stereo.

In order to make a fair comparison, we need a quality assessment metric that is sensitive to both ‘traditional’ image distortions and artifacts, as well as the perceptual cost and/or benefit associated with stereoscopic, rather than monoscopic, image presentation.
We believe that the naturalness concept offers significant promise in this respect.

ACKNOWLEDGEMENT

Wijnand IJsselsteijn gratefully acknowledges support from Philips Research as well as the European Commission through the MUTED (http://www.muted3d.eu/) and HELIUM3D (http://www.helium3d.eu/) projects. Work reported here has been performed in collaboration with Marc Lambooij, André Kuijsters, Frans van Vugt, and Ingrid Heynderickx.

REFERENCES

[1] J. Cutting and P. Vishton, “Perceiving layout and knowing distances: The integration, relative potency, and contextual use of different information about depth,” in W. Epstein & S. Rogers (eds), Perception of Space and Motion, Academic Press, San Diego, CA, pp. 69-117, 1995.
[2] T. Okoshi, “Three dimensional displays,” Proceedings of the IEEE, vol. 68, pp. 548-564, 1980.
[3] S. Pastoor, “3-D displays: A review of current technologies,” Displays, vol. 17, no. 2, pp. 100-110, 1997.
[4] I. Sexton and P. Surman, “Stereoscopic and autostereoscopic display systems,” IEEE Signal Processing Magazine, vol. 16, no. 3, pp. 85-99, 1999.
[5] W.A. IJsselsteijn, “Presence in Depth,” Ph.D. thesis, Eindhoven University of Technology, The Netherlands.
[6] J.A.J. Roufs, “Perceptual image quality: Concept and measurement,” Philips Journal of Research 47, pp. 35-62, 1992.
[7] P.G. Engeldrum, “Psychometric Scaling: a Toolkit for Imaging Systems Development,” Imcotek Press, Winchester, USA, 2002.
[8] C. Fehn, “Depth-image-based rendering (DIBR), compression and transmission for a new approach on 3D-TV,” Proceedings of the SPIE, 5291, pp. 93-104, 2004.
[9] W. Tam, L. Stelmach, and P. Corriveau, “Psychovisual aspects of viewing stereoscopic video sequences,” Proceedings of the SPIE, 3295, pp. 226-235, 1998.
[10] A. Berthold, “The influence of blur on the perceived quality and sensation of depth of 2D and stereo images,” Technical report, ATR Human Information Processing Research Laboratories, 1997.
[11] J. Freeman and S. Avons, “Focus group exploration of presence through advanced broadcast services,” Proceedings of the SPIE, 3959, pp. 530-539, 2000.
[12] P.J.H. Seuntiëns, L.M.J. Meesters and W.A. IJsselsteijn, “Perceived quality of compressed stereoscopic images: Effects of symmetric and asymmetric JPEG coding and camera separation,” ACM Transactions on Applied Perception, 3(2), pp. 95-109, 2006.
[13] W.A. IJsselsteijn, D.G. Bouwhuis, J. Freeman, and H. de Ridder, “Presence as an experiential metric for 3-D display evaluation,” SID Digest 2002, pp. 252-255, 2002.
[14] P. Seuntiëns, I. Heynderickx, and W.A. IJsselsteijn, “Capturing the added value of 3D-TV: Viewing experience and naturalness of stereoscopic images,” The Journal of Imaging Science and Technology, 52(2), 2008.

Wijnand A. IJsselsteijn is Associate Professor at the Human-Technology Interaction group of Eindhoven University of Technology in The Netherlands. He has a background in psychology and artificial intelligence, with an MSc in cognitive neuropsychology from Utrecht University, and a PhD in media psychology/HCI from Eindhoven University of Technology on the topic of telepresence. His current research interests include social digital media, immersive and stereoscopic media technology, and digital gaming. His focus is on conceptualizing and measuring human experiences, including social perception and self-perception, in relation to these advanced media. Wijnand is significantly involved in various industry and government-sponsored projects on awareness systems, enriched communication media, digital games, and autostereoscopic display technologies. He is associate director of the Media, Interface, and Network Design labs, co-founder and co-director of the Game Experience Lab (http://www.gamexplab.nl), and director of the 3D/ice Lab at Eindhoven University of Technology. He has published over 120 peer-reviewed journal and conference papers, and co-edited five books.

Three-Dimensional Video Capture and Analysis
Cha Zhang, Microsoft Research, USA
chazhang@microsoft.com

Three-dimensional movies have regained a lot of interest recently, with companies like Walt Disney and DreamWorks Animation all investing heavily in making 3D films. While it is relatively easy to render stereoscopic images from graphical models, for many other applications, such as 3D TV broadcasting, free viewpoint 3D video and 3D teleconferencing, it is necessary to capture 3D video content using camera arrays. In this short article, we briefly review techniques required for 3D video capture and analysis, which serve as the front end for many 3D video related applications.

Considering that most 3D displays today (including 3D films) need only two slightly different views to be sent to the left and right eyes of the user, the simplest 3D video capturing front end is a stereo camera pair. To create satisfactory 3D images, one needs to be careful about a few things. First, the cameras need to be synchronized. Camera synchronization used to require a common external trigger. Nowadays, when the number of cameras is small, one can simply daisy-chain a few 1394 FireWire cameras on a common bus, and these cameras will be automatically synchronized. The second important thing is camera calibration and image rectification [1]. For human eyes to perceive 3D content comfortably, it is necessary that the two video streams are rectified. Rectification is a transformation process that projects images from multiple cameras onto a common imaging plane, such that the epipolar lines are aligned horizontally. In other words, when a point in the 3D scene is projected to the two cameras, the projected pixels must lie on the same horizontal line. Image rectification requires careful calibration of the cameras. The most popular camera calibration algorithm today was invented by Zhang [2].
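The "same horizontal line" property of a rectified pair can be checked with an idealized pinhole model. The sketch below is not a calibration or rectification routine; it assumes two identical, already rectified cameras displaced along the x axis, and the focal length, principal point and baseline are hypothetical values.

```python
def project(point, focal_px, cx, cy, camera_x=0.0):
    """Project a 3D point (x, y, z), in metres, with an ideal pinhole
    camera that looks along +z and sits at (camera_x, 0, 0).

    Returns pixel coordinates (u, v).
    """
    x, y, z = point
    u = focal_px * (x - camera_x) / z + cx
    v = focal_px * y / z + cy
    return u, v


def stereo_project(point, focal_px=800.0, cx=320.0, cy=240.0,
                   baseline_m=0.1):
    """Project a point into an ideal rectified left/right camera pair."""
    left = project(point, focal_px, cx, cy, camera_x=0.0)
    right = project(point, focal_px, cx, cy, camera_x=baseline_m)
    return left, right


if __name__ == "__main__":
    (ul, vl), (ur, vr) = stereo_project((0.2, -0.1, 2.0))
    print("rows:", vl, vr)           # identical image rows
    print("disparity:", ul - ur)     # equals focal_px * baseline_m / z
```

In this setup the two projections differ only in their column, and the horizontal offset equals focal length times baseline divided by depth. Real cameras need calibration and a rectifying warp before this holds.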
Bouguet provides a nice MATLAB implementation of Zhang's algorithm [3], which also includes a routine to rectify an image pair.

The data format for stereoscopic displays is not limited to image pairs. As part of the European Information Society Technologies (IST) project “Advanced Three-Dimensional Television System Technologies” (ATTEST), Fehn et al. [4] proposed to use image plus depth as the capture and transmission format for stereoscopic videos, which is now the default 3D video format for some 3D display manufacturers such as Philips. Image plus depth is an evolutionary format that has low overhead in terms of bitrate (because the depth map can be compressed as a grayscale image) and is backward compatible with 2D video standards. The depth map can be manually created for legacy videos, computed from a stereo camera pair, or directly captured by depth sensors. The main challenge in using the image plus depth format is that the left and right views to be displayed still need to be synthesized. For scenes with a lot of occlusions, such a task is non-trivial, since some regions needed in the synthesized views are occluded, and hence not visible, in the 2D image. Various algorithms have been proposed in the literature for this hole-filling challenge [5]. Fortunately, for most users, this is not a concern, as it is dealt with internally by the 3D display manufacturers.

It is worth spending a few additional words on depth sensors. Triangulation and time-of-flight are two of the most popular mechanisms for depth sensing. In triangulation, a stripe pattern is projected onto the scene, which is captured by a camera positioned at a distance from the projector. To avoid contaminating the color and texture of the scene, the projector can periodically switch among a few stripe patterns in order to create a uniform illumination by temporal averaging. Another possibility is to use invisible-spectrum light, such as infrared light sources, for the stripe pattern.
Time-of-flight depth sensors can be roughly divided into two main categories: pulsed wave and continuous modulated wave. Pulsed wave sensors measure the time of delay directly, while continuous modulated wave sensors measure the phase shift between the emitted and received laser beams to determine the scene depth. One example of pulsed wave sensors is the 3D terrestrial laser scanner systems manufactured by Riegl (http://www.riegl.com/). Continuous modulated wave sensors include SwissRanger (http://www.swissranger.ch/), ZCam Depth Camera from 3DV Systems (http://www.3dvsystems.com/), etc.
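The two time-of-flight principles reduce to short formulas, sketched below. This is the textbook relation between delay, phase and distance rather than the processing of any particular sensor; the function names and the 20 MHz modulation frequency are assumptions made for illustration.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def depth_from_pulse(round_trip_s):
    """Pulsed wave: light travels to the scene and back, so the depth
    is half the distance covered during the measured delay."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def depth_from_phase(phase_shift_rad, modulation_hz):
    """Continuous modulated wave: the phase shift between emitted and
    received signal encodes the round-trip delay."""
    return SPEED_OF_LIGHT * phase_shift_rad / (4.0 * math.pi * modulation_hz)


def unambiguous_range(modulation_hz):
    """Depth at which the phase wraps around (a shift of 2*pi)."""
    return SPEED_OF_LIGHT / (2.0 * modulation_hz)


if __name__ == "__main__":
    print(depth_from_pulse(20e-9))           # about 3.0 m for a 20 ns delay
    print(depth_from_phase(math.pi, 20e6))   # about 3.75 m at 20 MHz
    print(unambiguous_range(20e6))           # about 7.5 m at 20 MHz
```

The phase-based measurement is only unique up to one modulation period, which is why the unambiguous range depends on the modulation frequency.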

One limitation of many stereoscopic 3D displays today is the lack of motion parallax. Indeed, in the real world the relative position of objects changes as we move our head. To replicate that experience, we need not only to provide different views to each eye, but also to change the views as the viewer's position changes. Alternatively, one may like to have some interactive control over the virtual viewpoint where the images are synthesized. Another possibility is when one has a multi-view autostereoscopic 3D display, which displays multiple views in order to increase the range of the sweet spot for 3D perception. These multiple views need to be synthesized from a given camera array (often with a smaller number of cameras than the number of views). To fulfill these tasks, one needs to synthesize novel views from a wide range of possible viewing positions, which creates a set of new challenges for 3D video capture and analysis.

The state-of-the-art approach to creating free viewpoint 3D video is to use large scale camera arrays [6][7], or to use a small scale camera array with prior knowledge of the objects in the scene [8], or to use hybrid depth camera and regular camera arrays [9]. The cameras still need to be synchronized by external triggers. Large camera arrays typically involve huge amounts of data, which are typically handled by many computers or dedicated video capture/compression cards. Calibration of large camera arrays is a very tedious task, but in principle it can still be handled by Zhang's method mentioned above. Another interesting issue is color calibration. Color inconsistency across cameras may cause incorrect view-dependent color variation during rendering. The problem is very interesting but rarely explored in the literature, except in the recent work by Joshi et al. [10].

The main challenge in free viewpoint 3D video is view synthesis, namely, how to render the virtual views specified by the user from the array of images captured.
This is far from a solved problem, although there has been an extensive literature on this topic, commonly referred to as image-based rendering. Since a detailed review of image-based rendering is out of the scope of this article, we refer the readers to [11] and [12] for two detailed survey papers on the topic.

In summary, although 3D video capture requires careful consideration of issues such as geometry/color calibration and data storage, it is largely an engineering problem, and many existing systems, ranging from 2 cameras to over a hundred cameras, have been reported in the literature. On the other hand, analysis of the captured content, such as hole filling, depth reconstruction and novel view synthesis, is still far from mature. For Multimedia Communication researchers, in addition to the traditional issues of 3D video compression and network delivery, the interplay between these traditional topics and 3D video analysis could be a very interesting topic to work on [12].

Reference

[1] R. Hartley and A. Zisserman, Multiple View Geometry in Computer Vision, Cambridge University Press, 2000.
[2] Z. Zhang, “A flexible new technique for camera calibration”, IEEE Trans. on PAMI, 22(11):1330-1334, 2000.
[3] J.-Y. Bouguet, Camera Calibration Toolbox for Matlab, http://www.vision.caltech.edu/bouguetj/calib_doc/.
[4] C. Fehn, P. Kauff, M. Op De Beeck, F. Ernst, W. IJsselsteijn, M. Pollefeys, L. Van Gool, E. Ofek and I. Sexton, “An Evolutionary and Optimised Approach on 3D-TV”, Proc. of International Broadcast Conference, 2002.
[5] C. Fehn, “A 3D-TV approach using depth-image-based rendering (DIBR)”, Proc. of VIIP 2003.
[6] B. Wilburn, M. Smulski, H.-H. K. Lee, M. Horowitz, “The light field video camera”, Proc. Media Processors 2002, SPIE Electronic Imaging, 2002.
[7] M. Tanimoto, “Overview of free viewpoint television”, EURASIP Signal Process. Image Commun., 21(6), 454-461, 2006.
[8] J. Carranza, C. Theobalt, M. Magnor and H.-P. Seidel, “Free-viewpoint video of human actors”, Proc. SIGGRAPH, 2003.
[9] E. Tola, C. Zhang, Q. Cai and Z. Zhang, “Virtual view generation with a hybrid camera array”, Tech. report, CVLAB-REPORT-2009-001, EPFL.
[10] N. Joshi, B. Wilburn, V. Vaish, M. Levoy and M. Horowitz, “Automatic color calibration for large camera arrays”, Tech. report, CS2005-0821, UCSD CSE.
[11] H.-Y. Shum, S. B. Kang and S.-C. Chan, “Survey of image-based representations and compression techniques”, IEEE Trans. CSVT, 13(11), 1020-1037, 2003.
[12] C. Zhang and T. Chen, “A survey on image-based rendering – representation, sampling and compression”, EURASIP Signal Process. Image Commun., 19(1), 1-28, 2004.
[13] D. Florencio and C. Zhang, “Multiview video Compression and Streaming Based on Predicted Viewer Position”, Proc. ICASSP 2009.

Cha Zhang received his B.S. and M.S. degrees from Tsinghua University, Beijing, China in 1998 and 2000, respectively, both in Electronic Engineering, and the Ph.D. degree in Electrical and Computer Engineering from Carnegie Mellon University in 2004. He then joined Microsoft Research as a researcher in the Communication and Collaboration Systems Group.

Dr. Zhang was the Publicity Chair for the International Packet Video Workshop in 2002, the Program Co-Chair for the first Immersive Telecommunication Conference in 2007, the Steering Committee Co-Chair for the second Immersive Telecommunication Conference in 2009, and the Program Co-Chair for the ACM Workshop on Media Data Integration (in conjunction with ACM Multimedia) 2009. He is an Associate Editor for the Journal of Distance Education Technologies and on the Editorial Board of the ICST Trans. on Immersive Telecommunications. He served as a Technical Program Committee member for numerous conferences such as ACM Multimedia, CVPR, ICCV, ECCV, ICME, ICPR, ICWL, etc. He won the Best Paper Award at ICME 2007.

Dr. Zhang's current research interests include immersive telecommunication, audio/video processing for multimedia collaboration, pattern recognition and machine learning, etc. Previously, he has also worked on image-based rendering (compression / sampling / rendering), 3D model retrieval, active learning, peer-to-peer networking, automated lecture rooms, etc.

3D Visual Content Compression for Communications
Karsten Müller, Fraunhofer Heinrich Hertz Institute, Germany
Karsten.mueller@hhi.fraunhofer.de

The recent popularity of 3D media is driven by research and development projects worldwide, targeting all aspects from 3D content creation and capturing, format representation, coding and transmission to new 3D display technologies and extensive user studies. Here, efficient compression technologies are developed that also consider these aspects: 3D production generates different types of content, like natural content, recorded by multiple cameras, or synthetic computer-generated content. Transmission networks have different conditions and requirements for stationary as well as mobile devices. Finally, different end user devices and displays are entering the market, like stereoscopic and auto-stereoscopic displays, as well as multi-view displays. From the compression point of view, visual data requires most of the data rate, and different formats with appropriate compression methods are currently being investigated. These visual methods can be clustered into vision-based approaches for natural content, and (computer) graphics-based methods for synthetic content.

A straightforward representation for natural content is the use of N camera views as N color videos. For this, compression methods derive technology from 2D video coding, where spatial correlations in each frame, as well as temporal correlations within the video sequence, are exploited. In multi-view video coding (MVC) [1], correlations between neighboring cameras are also considered, and thus multi-view video can be coded more efficiently than by coding each view individually.

The first types of 3D displays currently hitting the market are stereoscopic displays. Therefore, special emphasis has been given to 2-view or stereoscopic video. Here, three main coding approaches are investigated.
The first approach considers conventional stereo video and applies individual coding of both views as well as multi-view video coding. Although the latter provides better coding efficiency, some mobile devices require reduced decoding complexity, such that individual coding might be considered. The second approach considers data reduction prior to coding. This method is called mixed resolution coding and is based on the binocular suppression theorem. This states that the overall visual perception is close to that of the original high image resolution of both views if one original view and one low-pass filtered view (i.e., the upsampled smaller view) are presented to the viewer. The third coding approach is based on one original view and associated per-pixel depth data. The second view is generated from this data after decoding. Usually, depth data can be compressed better than color data; therefore, coding gains are expected in comparison to the 2-view color-only approaches. This video+depth method has another advantage: the baseline or virtual eye distance can be varied and thus adapted to the display and/or adjusted by the user to change the depth impression. However, this method also has disadvantages, namely the initial creation/provision of depth data by limited-capability range cameras or error-prone depth estimation. Also, areas only visible in the second view are not covered. All this may lead to a disturbed synthesized second view. Currently, studies are being carried out on the suitability of each method, considering all aspects of the 3D content delivery chain.

Both approaches, multi-view video coding and stereo video coding, still have limitations, such that newer 3D video coding approaches focus on advanced multi-view formats. Here, the most general format is multi-view video + depth (MVD) [2].
MVD combines sparse multi-view color data with depth information and allows rendering infinitely dense intermediate views for any kind of autostereoscopic N-view display. The methods described above are a subset of this format. For potentially better data compression, variants of the MVD format are also considered, such as layered depth video (LDV). Here, one full view and its depth are considered together with additional residual color and depth information from neighboring views. The latter covers the disoccluded areas in these views. For these advanced formats, coding approaches will be considered with respect to best compression capability, but also with respect to down-scalability and backward compatibility with existing approaches, such as stereo video coding. The new approaches are more than simple extensions of existing methods: classical video coding is based on rate-distortion optimization, where the best trade-off between compression and quality is sought. This objective quality is measured by pixel-wise mean squared error (MSE), comparing the lossy decoded and

original picture. In MVD or LDV, new intermediate views are synthesized for which no original views are available. Therefore, classical MSE is not suited for synthesized images, and new objective quality measures are required. Also, depth data compression has to be evaluated by the quality of intermediate color views, not by comparison with the uncompressed depth data. Further research is required to find the optimal bit rate distribution between color and depth data, so a number of open questions remain to be addressed.

A completely different branch of 3D coding approaches was developed for synthetic content. This content is represented by color and scene geometry data in the most general case and may contain a number of further attributes, such as reflection models. The most important, color and geometry data, have their analogies in natural scene content representation, i.e. color and depth data. In graphics-based approaches, 3D scene color data is treated as multi-texture or multi-view video data, similar to the methods described above. In its most general form, 3D scene geometry is treated as dynamic 3D wireframes with 3D points or vertices and associated connectivity. The latter specifies which points are connected; the most widely used is triangular connectivity, where the wireframe consists of planar triangles. For temporal changes, the wireframe is “animated”, i.e. the spatial positions of the vertices may change over time. Coding of such scene geometry is carried out by coding the vertex positions and connectivity of the first frame or static mesh (e.g. by 3D Mesh Compression), followed by coding of the dynamic part, which consists of the vertex position changes or 3D motion vectors of the following frames [3]. Here, the connectivity is assumed constant and thus only needs to be coded for the first frame. For this, methods like dynamic 3D or frame-animated mesh compression have been introduced.
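The first-frame-plus-motion-vectors scheme described above can be sketched in a few lines of Python. This is a deliberately simplified, lossless illustration with integer vertex coordinates. It is not the MPEG-4 AFX algorithm or the method of [3], and it omits prediction, quantization and entropy coding. The function names and the dictionary layout are hypothetical.

```python
def encode_animation(frames, triangles):
    """Code an animated mesh as a static first frame plus motion vectors.

    frames:    list of frames, each a list of (x, y, z) integer vertices
    triangles: connectivity as vertex-index triples, assumed constant over time
    """
    motion = []
    prev = frames[0]
    for cur in frames[1:]:
        # One 3D motion vector per vertex: position change since the last frame.
        motion.append([tuple(c - p for c, p in zip(vc, vp))
                       for vc, vp in zip(cur, prev)])
        prev = cur
    return {"vertices": list(frames[0]),   # static mesh geometry
            "triangles": list(triangles),  # stored once, for the first frame only
            "motion": motion}              # dynamic part


def decode_animation(stream):
    """Rebuild all frames by accumulating the motion vectors."""
    frames = [list(stream["vertices"])]
    for vectors in stream["motion"]:
        prev = frames[-1]
        frames.append([tuple(p + d for p, d in zip(vp, vd))
                       for vp, vd in zip(prev, vectors)])
    return frames


# A single triangle that moves along the z axis over three frames.
frames = [
    [(0, 0, 0), (1, 0, 0), (0, 1, 0)],
    [(0, 0, 1), (1, 0, 1), (0, 1, 1)],
    [(0, 0, 2), (1, 0, 2), (0, 1, 3)],
]
stream = encode_animation(frames, triangles=[(0, 1, 2)])
print(stream["motion"][0])
print(decode_animation(stream) == frames)
```

Connectivity is stored once, and each later frame costs one motion vector per vertex.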
Spatial correlation between motion vectors of neighboring vertices as well as temporal correlation between subsequent meshes are exploited. Further special formats for 3D synthetic content exist, where the scene geometry takes a special form due to prior knowledge of scene objects, such as terrain data and human faces/bodies. Examples of this are mesh grid and facial/bone-based animation, respectively.

Both approaches, Computer Vision and Computer Graphics based coding, exist in parallel. In future work, more emphasis will be put on interchangeability, to provide a continuum between Computer Vision and Computer Graphics for coding methods as well, and to allow easy format conversion between both coded representations.

References
[1] ISO/IEC JTC1/SC29/WG11, “Text of ISO/IEC 14496-10:200X/FDAM 1 Multiview Video Coding”, Doc. N9978, Hannover, Germany, July 2008.
[2] P. Merkle, A. Smolic, K. Müller, and T. Wiegand, “Multi-view Video plus Depth Representation and Coding”, Proc. IEEE International Conference on Image Processing (ICIP'07), San Antonio, TX, USA, pp. 201-204, Sept. 2007.
[3] K. Müller, P. Merkle, and T. Wiegand, “Compressing 3D Visual Content”, IEEE Signal Processing Magazine, vol. 24, no. 6, pp. 58-65, November 2007.

Karsten Müller received the Dipl.-Ing. degree in Electrical Engineering and the Dr.-Ing. degree from the Technical University of Berlin, Germany, in 1997 and 2006 respectively.

In 1993 he spent one year studying Electronics and Communication Engineering at Napier University of Edinburgh/Scotland, including a half-year working period at Integrated Communication Systems Inc. in Westwick/England.

In 1996 he joined the Heinrich-Hertz-Institute (HHI) Berlin, Image Processing Department, where he is working on projects focused on motion and disparity estimation, incomplete 3D surface representation and coding, combined 2D and 3D object description, viewpoint synthesis and 3D scene reconstruction.

He has been actively involved in MPEG activities, standardizing the multi-view description for MPEG-7 and view-dependent multi-texturing methods and dynamic mesh compression for the 3D scene representation in MPEG-4 AFX (Animation Framework eXtension). He contributed to the multi-view coding in MPEG-4 MVC (Multi-View Video Coding) and co-chairs the MPEG 3D Video activities.

In recent projects he was involved in research and development of traffic surveillance systems and visualization of multiple-view video, object segmentation and tracking, and interactive user navigation in 3D environments. Currently, he is a Project Manager for European projects in the field of 3D video technology and multimedia content description.

He is a Senior Member of the IEEE (M’98-SM’07).

Interactive 3D Online Applications
Irene Cheng, University of Alberta, Canada
lin@cs.ualberta.ca

Abstract
Interactive 3D content has become an integral part of online applications. Examples can be found in Second Life, Google 3D City scene and Microsoft 3D reconstruction using multiple 2D photos. One advantage attributable to 3D content is the extra dimension, which provides better visual communication than 2D for representing structures and explaining concepts. More importantly, human-computer interaction makes the user feel engaged and in control, and thus obtain higher satisfaction. The increasing popularity of Blackberry, iPhone and cell-phones makes us constantly anticipate which mainstream interactive 3D online applications will be available next on mobile devices. These promises also lead us to think about what new strategies should be employed to overcome obstacles such as competing bandwidth, signal interference and limited processing power on mobile devices. Despite the advancements in the last two decades, providing interactive 3D online content in high quality without jeopardizing interactivity is still a challenge. This article reviews current and potential trends, the issues involved, and the compression and transmission strategies designed to address these challenges.

Keywords: Interactive 3D, Visual Communication, Online Applications, 3D compression and transmission, Level-of-detail, Perceptual Quality

1. Introduction
Spontaneous system response and high quality display are two main user expectations from interactive 3D online applications. In the old days, due to slow rendering, insufficient bandwidth and low processing power, using interactive 3D content was not possible in online applications. In order to support fast real-time rendering, display quality was compromised by using coarse 3D models mapped with low quality texture.
With the advancement in technology, the quality of 3D graphics and animations has improved significantly in the last ten years, particularly in the online entertainment industry. 3D content provides a vibrant and realistic sensation, which is more appealing than still images. Augmented reality incorporating 3D objects in video streams enhances human perception by strengthening the depth perspective. A virtual 3D scene presented in a panoramic setting brings viewers into a totally immersive environment. Moreover, the emerging 3D television and display (3DTV) technology has changed the landscape of how digital content is delivered, presented and appreciated by human observers. While some 3DTV technology still uses eye-wear to simulate immersion, more advanced stereoscopic displays have been launched, providing multiple and free viewpoint entertainment without eye-wear. This trend will have great impact on home entertainment.

Visual communication has no doubt evolved from traditional 2D media to a 3D virtual world and beyond. Although there is a general acceptance of interactive 3D content and, to a certain extent, an addiction to online games, the capacities and benefits of interactive 3D technology are still not as fully explored as they should be. The goal of this article is to inspire thoughts on developing more effective systems and robust algorithms to support interactive 3D online applications.

The rest of this paper is organized as follows: Section 2 reviews some interactive 3D online applications; Section 3 looks at some 3D compression and transmission strategies; Section 4 highlights the emerging development in 3DTV; and Section 5 gives the summary.

2. Evolution of Visual Communication towards Interactive 3D

2.1 Interactive 3D Online Learning, Testing and Authoring
Online education, which includes K-12, university degrees and diplomas, has been used to complement classroom teaching.
Other than making education more accessible, the adoption of interactive 3D content in online education aims to provide more semantically and visually appealing information that cannot be provided by using traditional media. For example, instead of using text and image in a multiple choice format to ask a question, interactive 3D can be embedded in Math and Chemistry questions:

students drag and drop the numbered 3D objects into different baskets in order to make the sum equal in each basket (Figure 1 & 2). Students pick the appropriate atoms from the Periodic table and put bonds between them to construct molecules (Figure 3). By manipulating and visualizing the molecules in 3D space, students can understand the molecular structures better.

Figure 1: A 3D Math item: before distributing the numbered objects
Figure 2: A 3D Math item: after distributing the numbered objects
Figure 3: Construct an interactive 3D molecule
Figure 4: An interactive 3D education game to test a Physics topic – Moment.
Figure 5: 3D video – Augmented reality combining real and virtual characters.
Figure 6: Interactive 3D content on a cell phone.
Figure 7: Stereoscopic view of a lung implemented for visualization using red-blue glasses.
Figure 8: Animated 3D character driven by music.
Figure 9: 3D content manipulated on a multi-touch display.

Research studies find that interactive 3D content can induce student engagement and improve learning performance [12]. Interactive 3D content can also be used to test different cognitive skills, such as music [14]. Moreover, 3D online content can support adaptive testing and effective student modeling, enabling students to work at their own pace and obtain personal guidance depending on their learning abilities. A student’s skill level can be evaluated using Item Response Theory (IRT) [19]. Other benefits associated with interactive 3D content include automatic estimation of the difficulty level of a question item using a Parameters Based Model [15], and scoring partial marks using a Graph Edit Algorithm [40].

There is a major concern relating to providing interactive 3D online content in education. While multiple choice questions can be easily created using a standard template, the