Stable parallel algorithms for solving the inverse gravimetry and ...

Stable parallel algorithms for solving the inverse gravimetry and ...

Stable parallel algorithms for solving the inverse gravimetry and ...

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

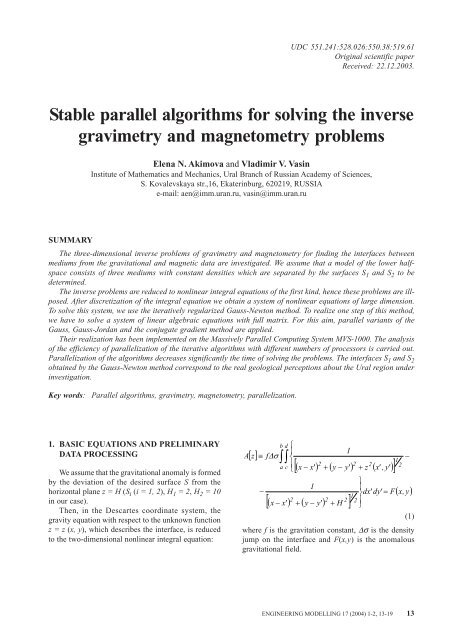

E.N. Akimova, V.V. Vasin: <strong>Stable</strong> <strong>parallel</strong> <strong>algorithms</strong> <strong>for</strong> <strong>solving</strong> <strong>the</strong> <strong>inverse</strong> <strong>gravimetry</strong> <strong>and</strong> magnetometry problemsThe magnetometry equation has <strong>the</strong> following<strong>for</strong>m:B[] z≡ ∆ J−b d∫∫⎧⎪⎨z( x' ,y' )22 2( x − x' ) + ( y − y' ) + z ( x' ,y' )[ ]3a c ⎪2⎩H22 2[( x − x' ) + ( y − y' ) + H ]32⎫⎪⎬dx'dy' = G⎪⎭−( x, y)(2)where G is <strong>the</strong> anomalous magnetic field <strong>and</strong> ∆J is <strong>the</strong>averaged jump of <strong>the</strong> component z of <strong>the</strong> magnetizationvector.To select <strong>the</strong> anomalous gravity <strong>and</strong> magnetic field,which serve <strong>for</strong> <strong>the</strong> right-h<strong>and</strong> sides of Eqs. (1) <strong>and</strong>(2), we imply <strong>the</strong> following technique (see Ref. [1]).It is commonly accepted that <strong>the</strong> field recalculationupward to a level z=+H practically eliminates <strong>the</strong>effect of anomaly-<strong>for</strong>ming objects, located up to <strong>the</strong>depth z=−H. By geological evidence, <strong>the</strong> field sources<strong>for</strong>eign to our analysis are located more deeply thanz=−H (H=2 km or H=10 km). There<strong>for</strong>e, <strong>the</strong> measuredfield was continued upward to <strong>the</strong> level z=H.The stronger distortions in this procedure occurnear <strong>the</strong> boundary of <strong>the</strong> domain, hence <strong>the</strong> integrationis done over a finite area. To diminish <strong>the</strong>se distortions,<strong>the</strong> values of a function that is <strong>the</strong> solution of <strong>the</strong> planeDirichlet problem were preliminary subtracted from<strong>the</strong> measured field. In <strong>the</strong> investigated domain thisfunction satisfies <strong>the</strong> two-dimensional Laplaceequation <strong>and</strong> coincides with <strong>the</strong> given field on <strong>the</strong>boundary of <strong>the</strong> domain.In our opinion, this function can be used as <strong>the</strong> fieldof <strong>the</strong> lateral sources. For recalculation upward, <strong>the</strong>Poisson <strong>for</strong>mula <strong>for</strong> a subspace was used:U( x, y,H )≡12π+∞ +∞∫∫HU ( x' , y' ,0)2( x − x' ) + ( y − y' )dx' dy'2 2[ H ]−∞ − ∞+To get rid of <strong>the</strong> sources in <strong>the</strong> horizontal layer from<strong>the</strong> ground surface to <strong>the</strong> level z=−H, <strong>the</strong> fieldrecalculated upward was <strong>the</strong>n continued downward to<strong>the</strong> depth z=−H. In this case we solve <strong>the</strong> integralequation:1Kω≡2π= U+∞ +∞2Hω( x' , y' ) dx' dy'∫∫22−∞ −∞ ( x − x' ) + ( y − y' ) + ( 2H )( x, y,H )2[ ]to find <strong>the</strong> unknown function ω(x,y)=U(x,y,−H). Here<strong>the</strong> function U(x,y,H) is given. In order to stably solvethis equation we used <strong>the</strong> Lavrentiev regularizationmethod of <strong>the</strong> <strong>for</strong>m:(K+αI)ω = U<strong>for</strong> a suitable regularization parameter α>0.3232=Since <strong>the</strong> singularity of <strong>the</strong> function obtained liesbelow <strong>the</strong> plane z=−H, this function can be interpretedas <strong>the</strong> field of deep sources.The sum of this field recalculated on <strong>the</strong> groundsurface <strong>and</strong> <strong>the</strong> solution of <strong>the</strong> Dirichlet problem wasused as a field of lateral <strong>and</strong> deep sources (extrinsicsources). The difference of <strong>the</strong> measured field <strong>and</strong> <strong>the</strong>field of <strong>the</strong> extrinsic sources was used as <strong>the</strong> gravityeffect (<strong>the</strong> function F(x,y) in Eq. (1)) of sources locatedin <strong>the</strong> horizontal layer from <strong>the</strong> ground surface to <strong>the</strong>depth z=−H.The similar procedure was used <strong>for</strong> selecting <strong>the</strong>anomalous magnetic field (<strong>the</strong> function G(x,y) in Eq. (2)).2. ITERATIVE METHOD FOR SOLVINGTHE NONLINEAR SYSTEMAfter discretizing <strong>the</strong> Eqs. (1) <strong>and</strong> (2) on <strong>the</strong> gridn = M × N <strong>and</strong> approximating <strong>the</strong> integral operatorsA <strong>and</strong> B by <strong>the</strong> quadrature <strong>for</strong>mulas, we obtain asystem of nonlinear equations of <strong>the</strong> <strong>for</strong>m:A n[z] = F n(3)For <strong>solving</strong> this system <strong>the</strong> iteratively regularizedNewton method [2] is used:z k+1 = z k − [A' n(z k ) + α kI] -1 (A n(z k ) + α kz k − F n) (4)where A' n (z k ) is <strong>the</strong> Jacobi matrix calculated at <strong>the</strong>point z k , I is <strong>the</strong> identity operator, α k is a sequence of<strong>the</strong> positive parameters <strong>and</strong> F n is a vectorapproximation of <strong>the</strong> function F(x,y) (or G(x,y)).The iterative method of <strong>the</strong> Eq. (3) can be writtenin <strong>the</strong> following <strong>for</strong>m:A k z k+1 = F k (5)where A k = A' n (z k ) + α k I is an n×n matrix, F k = A k z k −(A n (z k ) + α k z k − F) is an n-dimensional vector.So, <strong>for</strong> finding <strong>the</strong> next approximation z k+1 inNewton method, Eq. (4), it is necessary to solve <strong>the</strong>system of linear algebraic equations, Eq. (5), with fulln × n matrix.The careful analysis showed that, <strong>for</strong> aacceptable starting point z 0 <strong>and</strong> parameters α k , <strong>the</strong>matrix A k has n different eigenvalues <strong>for</strong> all realizediterations (k = 1,2,...,5).This implies that <strong>the</strong> corresponding eigenvectors arelinearly independent <strong>and</strong> <strong>the</strong> matrix S k whose columnsare eigenvectors has <strong>the</strong> <strong>inverse</strong> (S k ) -1 . Hence, <strong>the</strong> matrixA k can be represented in <strong>the</strong> <strong>for</strong>m [3]:A k = S k Λ k (S k ) -1where Λ k is <strong>the</strong> diagonal matrix. From this <strong>for</strong>mula itfollows that <strong>the</strong> matrix A k (k = 1, 2,..., 5) is invertible.Moreover, <strong>for</strong> problem described by Eq. (1) <strong>the</strong>condition number cond(A k ) of <strong>the</strong> matrix A k varieswithin <strong>the</strong> intervals 2.8≤cond(A k )≤727 <strong>for</strong> <strong>the</strong> interfaceS 1 <strong>and</strong> 1.8≤cond(A k )≤2642 <strong>for</strong> <strong>the</strong> interface S 2 . Forproblem described by Eq. (2) <strong>the</strong> condition numbervaries within <strong>the</strong> intervals 1.1≤cond(A k )≤405 <strong>for</strong> <strong>the</strong>interface S 1 <strong>and</strong> 1.2≤cond(A k )≤328 <strong>for</strong> <strong>the</strong> interface S 2 .14 ENGINEERING MODELLING 17 (2004) 1-2, 13-19

E.N. Akimova, V.V. Vasin: <strong>Stable</strong> <strong>parallel</strong> <strong>algorithms</strong> <strong>for</strong> <strong>solving</strong> <strong>the</strong> <strong>inverse</strong> <strong>gravimetry</strong> <strong>and</strong> magnetometry problemsThis guarantees <strong>the</strong> stability of calculations under<strong>the</strong> realization of <strong>the</strong> iterative process given by Eq. (4)<strong>and</strong> <strong>the</strong> monotone decrease of <strong>the</strong> Newton methoderrors ∆ k =⏐z k − z⏐.The fur<strong>the</strong>r analysis showed that, in fact, <strong>for</strong>method given by Eq. (4) <strong>the</strong> conditions of <strong>the</strong> Newton-Kantorovich <strong>the</strong>orem [4] at <strong>the</strong> iteration points z k werefulfilled, which implies practical convergence of thismethod.It should be noted that if an operator A is monotone,<strong>the</strong>n <strong>for</strong> any α k >0 <strong>the</strong> operator [A'(z)+α k I] -1 exists <strong>and</strong>is bounded, <strong>and</strong> under some conditions method givenby Eq. (4) converges to a solution of <strong>the</strong> equationA(z) = F <strong>for</strong> α k →0, k→∞ (see Ref. [2]). It is known[5] that <strong>the</strong> <strong>gravimetry</strong> operator is not monotone, so<strong>the</strong> convergence <strong>the</strong>orem has not been proved.3. GAUSS, GAUSS-JORDAN ANDCONJUGATE GRADIENT METHODS.PARALLEL REALIZATIONSo, <strong>for</strong> finding <strong>the</strong> next approximation z k+1 <strong>for</strong> eachiteration of Newton method given by Eq. (4) it isnecessary to solve Eq. (5). The problem given by Eq. (5)can be solved by <strong>the</strong> Gauss or Gauss-Jordan elimination<strong>algorithms</strong> or by <strong>the</strong> conjugate gradient method.The main idea of <strong>the</strong> Gaussian elimination methodis reducing <strong>the</strong> full matrix A of system described byEq. (5) to <strong>the</strong> upper triangular <strong>for</strong>m, that is, to obtain<strong>the</strong> system of equations in <strong>the</strong> following <strong>for</strong>m:⎧ x1+ c12x2+ c13x3+ ... + c1nxn= c1n+1⎪x2+ c23x3+ ... + c2nxn= c2n+1⎨⎪..............................................................⎪⎩xn= cnn+1(6)From system given by Eq. (6) we find <strong>the</strong>unknowns by <strong>the</strong> <strong>for</strong>mulas:x k= c kn+1−c kk+1x k+1−...− c knx n, k=n, n-1,...,1 (7)The Gauss-Jordan algorithm is one of <strong>the</strong> variants of<strong>the</strong> Gaussian elimination algorithm. In this case <strong>the</strong> matrixA of system given by Eq. (5) is reduced to <strong>the</strong> diagonal<strong>for</strong>m, but not an upper triangular <strong>for</strong>m. In <strong>the</strong> (k+1)-thstep <strong>the</strong> current matrix A k has <strong>the</strong> following <strong>for</strong>m:⎡( k) ( k)1 0 ... 0 a1,k 1... a ⎤⎢+1n ⎥⎢... ... ... ... ... ... ... ⎥⎢⎥⎢( k) ( k)0 0 ... 1 akk,k 1... a ⎥+knA = ⎢⎥ (8)⎢ ( k) ( k)⎥⎢0 0 ... 0 ak+ 1,k+ 1... ak+1,n ⎥⎢... ... ... ... ... ... ... ⎥⎢⎥⎢( k ) ( k0 0 ... 0 a)n,k+1... a ⎥⎣nn ⎦We divide <strong>the</strong> (k+1)-th row by <strong>the</strong> coefficienta (k) k+1 , k+1 <strong>and</strong> eliminate all off-diagonal elements of<strong>the</strong> (k+1)-th column. We make this elimination by <strong>the</strong>subtraction of <strong>the</strong> obtained (k+1)-th row multiplied bya (k) j , k+l from <strong>the</strong> j-th row (j=1, 2,..., n; j≠k+1).To guarantee <strong>the</strong> numerical stability of <strong>the</strong> Gauss<strong>and</strong> Gauss-Jordan <strong>algorithms</strong> in <strong>the</strong> general case, apartial choice of <strong>the</strong> pivot element is necessary. If wetake <strong>the</strong> maximum (with respect to modulus) elementin <strong>the</strong> k-th row as <strong>the</strong> pivot element at <strong>the</strong> k-th step of<strong>the</strong> elimination, <strong>the</strong>n, be<strong>for</strong>e realization of <strong>the</strong> step, itis necessary to rearrange <strong>the</strong> k-th column <strong>and</strong> <strong>the</strong>column with <strong>the</strong> pivot element.The <strong>parallel</strong> realization of <strong>the</strong> Gauss or Gauss-Jordan method <strong>for</strong> m processors is <strong>the</strong> following.Conditionally, we divide <strong>the</strong> vectors z <strong>and</strong> F into mparts so that n=m⋅L. The matrix A is divided by <strong>the</strong>horizontal lines into m blocks, respectively (Figure 1).¹¹¹¹ÀÓ×عÔÖÓ××ÓÖ½¹ÔÖÓ××ÓÖ¾¹ÔÖÓ××ÓÖºººÑ¹ÔÖÓ××ÓÖFig. 1 The diagram of <strong>the</strong> data distribution over <strong>the</strong> processorsAssume that <strong>the</strong> rows of <strong>the</strong> matrix A with numbers1, 2,..., L are stored in <strong>the</strong> memory of <strong>the</strong> first processor(<strong>the</strong> Host), <strong>the</strong> rows with numbers (L+1), (L+2),..., 2Lare stored in <strong>the</strong> memory of <strong>the</strong> second processor, <strong>and</strong>so on. The rows with numbers (m-1)L+1, ..., Lm arestored in <strong>the</strong> memory of <strong>the</strong> m-th processor. The mainidea of <strong>the</strong> <strong>parallel</strong> elimination is <strong>the</strong> following. At <strong>the</strong>every step each processor eliminates <strong>the</strong> unknownsfrom its own part of <strong>the</strong> L equations. The Hostprocessor chooses <strong>the</strong> pivot element among <strong>the</strong>elements of <strong>the</strong> current row, modifies this row <strong>and</strong>sends it to each of <strong>the</strong> o<strong>the</strong>r processors [6].During <strong>the</strong> Gauss elimination process, more <strong>and</strong>more processors become idle at every step, since <strong>the</strong>number of <strong>the</strong> equations is diminished by one. Thisinfluences <strong>the</strong> efficiency of <strong>the</strong> algorithm. In <strong>the</strong> Gauss-Jordan method all <strong>the</strong> processors make calculationswith <strong>the</strong>ir own parts until <strong>the</strong> end. The waiting timedecreases <strong>and</strong> <strong>the</strong> efficiency of <strong>the</strong> algorithm increases.The conjugate gradient method is used <strong>for</strong> <strong>solving</strong> <strong>the</strong>problem with a symmetric matrix. For this we trans<strong>for</strong>m<strong>the</strong> system given by Eq. (5) to <strong>the</strong> following <strong>for</strong>m:(A k ) T A k z k+1 = (A k ) T F k (9)where (A k ) T is <strong>the</strong> conjugated matrix.For an arbitrary initial approximation z 0 , <strong>the</strong> firstapproximation z 1 is found from it by <strong>the</strong> steepestdescent method:020⋅ ( Az − F)0 0( Ar ,r )r1 00 0z = z −, r = Az − F (10)ENGINEERING MODELLING 17 (2004) 1-2, 13-19 15

E.N. Akimova, V.V. Vasin: <strong>Stable</strong> <strong>parallel</strong> <strong>algorithms</strong> <strong>for</strong> <strong>solving</strong> <strong>the</strong> <strong>inverse</strong> <strong>gravimetry</strong> <strong>and</strong> magnetometry problemsFur<strong>the</strong>r from z 0 <strong>and</strong> z 1 we find all successiveapproximations of <strong>the</strong> conjugate gradient method:zk+1α =kβ =kk = 0,1,2,...k kk k−1z − αk( Az − F ) + βk( z − z )2k k k k k k kr ( Ap , p ) − ( r , p )( Ar , p ),k k k k k k 2( Ar ,r )( Ap , p ) − ( Ar , p )2k k k k k k kr ( Ar , p ) − ( r , p )( Ar ,r ),k k k k k k 2( Ar ,r )( Ap , p ) − ( Ar , p )=rpkk= Az= zkk− F− zk−1(11)The stopping condition of <strong>the</strong> iterative processgiven by Eqs. (10) <strong>and</strong> (11) is:Az k − FF< εParallelization of <strong>the</strong> iterative process given by Eqs.(10) <strong>and</strong> (11) <strong>for</strong> <strong>solving</strong> <strong>the</strong> problem given by Eq. (9)is based on <strong>the</strong> dividing of <strong>the</strong> matrices (A k ) T <strong>and</strong> A kby <strong>the</strong> horizontal lines into m blocks. The datadistribution over <strong>the</strong> processors is similar to <strong>the</strong> datadistribution in <strong>the</strong> Gauss method (Figure 1).At every step of <strong>the</strong> conjugate gradient method,each processor calculates its own part of <strong>the</strong> solutionvector z k . In <strong>the</strong> case of <strong>the</strong> multiplication of <strong>the</strong> matrixby <strong>the</strong> vector, each processor multiplies its own part ofrows of <strong>the</strong> matrix by <strong>the</strong> whole vector. In <strong>the</strong> case of<strong>the</strong> matrix product (A k ) T A k each processor multipliesits own part of rows of <strong>the</strong> conjugated matrix (A k ) T by<strong>the</strong> whole matrix A k .4. EFFICIENCY OF THE METHODSParallelization of <strong>the</strong> basic <strong>algorithms</strong> <strong>and</strong> <strong>the</strong>irrealization on <strong>the</strong> Massively Parallel ComputingSystem MVS-1000 [7] is implemented. The analysisof <strong>the</strong> efficiency of <strong>parallel</strong>ization of <strong>the</strong> iterative<strong>algorithms</strong> with different numbers of processors iscarried out.MVS-1000/16 of <strong>the</strong> Research Institute KVANTproduction consists of 16 Intel Pentium III-800, 256MByte, 10 GByte disk, two 100 Mbit networkcontrollers (Digital DS21143 Tulip <strong>and</strong> Intel PRO/100). Educational computing cluster consists of 8 IntelPentium III700, 128 MByte, 14 GByte disk, 100 Mbitnetwork controller 3Com 3c905B Cyclone.For comparison of <strong>the</strong> executing times of <strong>the</strong>sequential <strong>and</strong> <strong>parallel</strong> <strong>algorithms</strong>, we will consider <strong>the</strong>coefficients of <strong>the</strong> speed up <strong>and</strong> efficiency:S m= T 1/T m, E m= S m/mwhere T m is <strong>the</strong> execution time of <strong>the</strong> <strong>parallel</strong> algorithmon MVS-1000 with m (m>1) processors, T 1 is <strong>the</strong>execution time of <strong>the</strong> sequential algorithm on oneprocessor:T m= T c+ T e+ T iwhere T c is <strong>the</strong> computing time, T e is <strong>the</strong> exchange time<strong>and</strong> T i is <strong>the</strong> idle time. The number m of processorscorresponds to <strong>the</strong> mentioned division of <strong>the</strong> vectors z<strong>and</strong> F into m parts so that n=m⋅L.On <strong>the</strong> o<strong>the</strong>r h<strong>and</strong>, <strong>the</strong> efficiency can be calculatedusing only <strong>the</strong> <strong>parallel</strong> version of a program on a<strong>parallel</strong> computer without using <strong>the</strong> execution time of<strong>the</strong> sequential algorithm T 1 . The efficiency can bedefined as:E = G/(G + 1)where G is <strong>the</strong> granularity of a <strong>parallel</strong> algorithm [8].The granularity of a <strong>parallel</strong> algorithm is <strong>the</strong> ratioof <strong>the</strong> amount of computations to <strong>the</strong> amount ofcommunications within a <strong>parallel</strong> algorithmimplementation. Taking into account <strong>the</strong> possibilitythat <strong>the</strong> processors may be not equally balanced <strong>and</strong><strong>the</strong> processor idle time can occur, <strong>the</strong>n <strong>the</strong> granularityis calculated using <strong>the</strong> following expression:G = T c/ (T e+ T i)The granularity may be estimated as:max TG ≤min( )comp⋅ m( T ) ⋅ mcommwhere max(T comp ) is <strong>the</strong> maximum computation time<strong>for</strong> one processor <strong>and</strong> min(T comm ) is <strong>the</strong> minimumcommunication time <strong>for</strong> one processor.Table 1 <strong>and</strong> Table 2 show <strong>the</strong> execution times T m<strong>and</strong> <strong>the</strong> coefficients of <strong>the</strong> speed up S m <strong>and</strong> <strong>the</strong>efficiencies E m <strong>and</strong> E obtained by using <strong>the</strong> granularityG of <strong>the</strong> iteratively regularized Newton method after 5iterations using <strong>the</strong> <strong>parallel</strong> <strong>and</strong> sequential (m=1) Gauss<strong>and</strong> Gauss-Jordan <strong>algorithms</strong> <strong>for</strong> problems given by Eqs.(1) to (4) <strong>for</strong> 111×35 points of <strong>the</strong> grid domain.Table 1Table 2Gauss Method <strong>for</strong> <strong>the</strong> 111×35 gridm T m , min. S m E m E1 57.48 - - -2 46.85 1.23 0.61 0.663 36.18 1.59 0.53 0.594 29.38 1.96 0.49 0.565 25.78 2.23 0.45 0.536 21.83 2.63 0.44 0.528 17.25 3.33 0.42 0.4910 14.17 4.06 0.41 0.4812 12.35 4.65 0.39 0.44Gauss-Jordan Method <strong>for</strong> <strong>the</strong> 111×35 gridm T m , min. S m E m E1 114.1 - - -2 60.50 1.89 0.94 0.973 42.38 2.69 0.90 0.914 33.53 3.40 0.85 0.885 28.48 4.01 0.80 0.856 23.88 4.78 0.79 0.838 19.88 5.74 0.72 0.7810 16.45 6.93 0.69 0.7212 15.35 7.42 0.62 0.6616 ENGINEERING MODELLING 17 (2004) 1-2, 13-19

E.N. Akimova, V.V. Vasin: <strong>Stable</strong> <strong>parallel</strong> <strong>algorithms</strong> <strong>for</strong> <strong>solving</strong> <strong>the</strong> <strong>inverse</strong> <strong>gravimetry</strong> <strong>and</strong> magnetometry problemsTable 3Conjugate Gradient Method <strong>for</strong> <strong>the</strong> 111×35 gridm T m , min. S m E m E1 84.38 - - -2 43.20 1.95 0.98 0.994 22.75 3.71 0.93 0.955 18.63 4.52 0.90 0.9310 10.37 8.14 0.81 0.8411 9.67 8.79 0.80 0.8217 7.03 12.0 0.71 0.75Table 3 shows <strong>the</strong> execution times T m <strong>and</strong> <strong>the</strong>coefficients of <strong>the</strong> speed up S m <strong>and</strong> <strong>the</strong> efficiencies E m<strong>and</strong> E obtained by using <strong>the</strong> granularity G of <strong>the</strong>iteratively regularized Newton method after 5iterations using <strong>the</strong> <strong>parallel</strong> <strong>and</strong> sequential (m=1)conjugate gradient method <strong>for</strong> problems given by Eqs.(1) to (4) <strong>for</strong> 111×35 points of <strong>the</strong> grid domain.The results of calculations show that <strong>the</strong> <strong>parallel</strong>Gauss <strong>and</strong> Gauss-Jordan <strong>algorithms</strong> have efficiencyof <strong>parallel</strong>ization high enough, <strong>and</strong> <strong>the</strong> Gauss-Jordanalgorithm efficiency is higher. But <strong>the</strong> conjugategradient method efficiency is higher than <strong>the</strong> efficiencyof <strong>the</strong> Gauss <strong>and</strong> Gauss-Jordan <strong>algorithms</strong>. This factcan be explained by <strong>the</strong> small exchange time. Theelements of <strong>the</strong> matrix (A k ) T A k are <strong>for</strong>medindependently in m processors. At every step of <strong>the</strong>conjugate gradient method, each processor calculatesits own part of <strong>the</strong> solution vector z k .In <strong>the</strong> case of <strong>the</strong> <strong>parallel</strong> Gauss algorithm with <strong>the</strong>number of processors m

E.N. Akimova, V.V. Vasin: <strong>Stable</strong> <strong>parallel</strong> <strong>algorithms</strong> <strong>for</strong> <strong>solving</strong> <strong>the</strong> <strong>inverse</strong> <strong>gravimetry</strong> <strong>and</strong> magnetometry problemsThey are reconstructed by <strong>the</strong> iteratively regularizedNewton method given by Eq. (4) with <strong>the</strong> help of <strong>the</strong><strong>parallel</strong> Gauss or Gauss-Jordan <strong>algorithms</strong> or <strong>the</strong>conjugate gradient method.Fig. 8 The reconstructed interface S 2 <strong>for</strong><strong>the</strong> magnetometry problem6. CONCLUDING REMARKSFig. 5 The reconstructed interface S 1 <strong>for</strong><strong>the</strong> <strong>gravimetry</strong> problemThe main conclusion is <strong>the</strong> following. Theinterfaces S 1 <strong>and</strong> S 2 obtained as solutions of <strong>the</strong><strong>gravimetry</strong> <strong>and</strong> magnetometry <strong>inverse</strong> problem, Eqs.(1) <strong>and</strong> (2), by <strong>the</strong> iteratively regularized Gauss-Newton method, Eq. (4), correspond to <strong>the</strong> realgeological perceptions about <strong>the</strong> investigated regionof <strong>the</strong> Urals.The nearest gravity <strong>and</strong> magnetic interfaces (seeFigure 3 <strong>and</strong> Figures 5 <strong>and</strong> 6) are ra<strong>the</strong>r different, but<strong>the</strong> deeper interfaces (see Figure 4 <strong>and</strong> Figures 7 <strong>and</strong>8) are similar. We believe that, probably in <strong>the</strong> firstcase, <strong>the</strong> sources of <strong>the</strong> gravity <strong>and</strong> magnetic fields aredifferent, <strong>and</strong> in <strong>the</strong> second case <strong>the</strong>se sources are <strong>the</strong>same (or very close) <strong>and</strong> so we have <strong>the</strong> gravity <strong>and</strong>magnetic solutions very close to each o<strong>the</strong>r.Fig. 6 The reconstructed interface S 1 <strong>for</strong><strong>the</strong> magnetometry problem7. ACKNOWLEDGEMENTSThe authors are deeply grateful to <strong>the</strong> RussianFoundation <strong>for</strong> Basic Research (project No. 03-01-00099) <strong>for</strong> <strong>the</strong> financial support.8. REFERENCESFig. 7 The reconstructed interface S 2 <strong>for</strong><strong>the</strong> <strong>gravimetry</strong> problem[1] V.V. Vasin, G.Ya. Perestoronina, I.L. Prutkin <strong>and</strong>L.Yu. Timerkhanova, Reconstruction of <strong>the</strong> reliefof geological boundaries in <strong>the</strong> three-layeredmedium using <strong>the</strong> gravitational <strong>and</strong> magneticdata, Proc. of <strong>the</strong> Conference on Geophysics <strong>and</strong>Ma<strong>the</strong>matics, Institute of Mines, UrB RAS,Perm, pp. 35-41, 2001.[2] A.B. Bakushinsky, A regularizing algorithm on<strong>the</strong> basis of <strong>the</strong> Newton- Kantorovich method <strong>for</strong><strong>the</strong> solution of variational inequalities, Zh.Vychisl. Mat. Mat. Fiz., Vol. 16, No. 6, pp. 1397-1404, 1976.18 ENGINEERING MODELLING 17 (2004) 1-2, 13-19

E.N. Akimova, V.V. Vasin: <strong>Stable</strong> <strong>parallel</strong> <strong>algorithms</strong> <strong>for</strong> <strong>solving</strong> <strong>the</strong> <strong>inverse</strong> <strong>gravimetry</strong> <strong>and</strong> magnetometry problems[3] G. Streng, Linear Agebra <strong>and</strong> Its Applications,Mir, Moskva, 1980.[4] N.S. Bakhvalov, N.P. Zhidkov <strong>and</strong> G.M.Kobelkov, Numerical Methods, Nauka, Moskva,1987.[5] V.V. Vasin <strong>and</strong> A.L. Ageev, Ill-posed Problemswith a Priori In<strong>for</strong>mation, Nauka, Ekaterinburg,1993.[6] E.N. Akimova, Parallelization of an algorithm <strong>for</strong><strong>solving</strong> <strong>the</strong> gravity <strong>inverse</strong> problem, Journal ofComputational <strong>and</strong> Applied Mechanics, MiskolcUniversity Press, Vol. 4, No. 1, pp. 5-12, 2003.[7] A.V. Baranov, A.O. Latsis, C.V. Sazhin <strong>and</strong>M.Yu. Khramtsov, The MVS-1000 SystemUser’s Guide, http://<strong>parallel</strong>.ru/mvs/user.html.[8] J. Kwiatkowski, Evaluation of <strong>parallel</strong> programsby measurement of its granularity, Proc. of <strong>the</strong>Conference on Parallel Processing <strong>and</strong> AppliedMa<strong>the</strong>matics, Lecture Notes in ComputerScience, Vol. 2328, pp. 145-153, 2001.STABILNI PARALELNI ALGORITMI ZA RJE[AVANJE INVERZNIH GRAVIMETRIJSKIH IMAGNETOMETRIJSKIH PROBLEMASA@ETAKU ovom radu ispituju se trodimenzionalni inverzni problemi gravimetrije i magnetometrije da bi se prona{liodnosi izme|u medija pomo}u gravitacijskih i magnetskih podataka. Pretpostavljamo da model donjeg poluprostorasadr`i tri medija koji imaju stalne gusto}e, a odvojeni su pomo}u povr{ina S 1 i S 2 koje treba odrediti.Ovi inverzni problemi svedeni su na nelinearne integralne jednad`be prve vrste, prema tome ovi problemi sulo{e postavljeni. Nakon diskretizacije integralne jednad`be dobivamo sustav nelinearnih jednad`bi velike dimenzije.Koristimo iterativno reguliranu Gauss-Newton metodu da bi rije{ili ovaj problem. Moramo rije{iti sustav linearnihalgebarskih jednad`bi s punom matricom da bi realizirali jedan korak u ovoj metodi. Da se postigne ovaj cilj,primjenjuju se paralelne varijante Gaussove i Gauss-Jordan metode konjugiranog gradijenta.Njihova realizacija iskoristila se u Massively Parallel Computing System MVS-1000. Izvr{ena je analizaefikasnosti paralelizacije iterativnih algoritama pomo}u razli~itog broja procesora. Paralelizacija algoritamazna~ajno skra}uje vrijeme rje{avanja problema. Odnosi S 1 i S 2 koji su postignuti Gauss-Newton-ovom metodomodgovaraju stvarnoj geolo{koj predod`bi o podru~ju Urala koje se istra`uje.Klju~ne rije~i: Paralelni algoritmi, gravimetrija, magnetometrija, paralelizacija.ENGINEERING MODELLING 17 (2004) 1-2, 13-19 19