Ensuring Data Survival on Solid-State Storage Devices - CiteSeerX

Ensuring Data Survival on Solid-State Storage Devices - CiteSeerX

Ensuring Data Survival on Solid-State Storage Devices - CiteSeerX

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

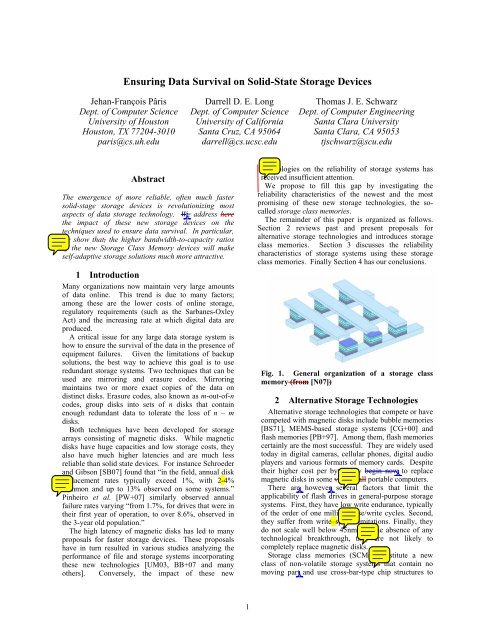

<str<strong>on</strong>g>Ensuring</str<strong>on</strong>g> <str<strong>on</strong>g>Data</str<strong>on</strong>g> <str<strong>on</strong>g>Survival</str<strong>on</strong>g> <strong>on</strong> <strong>Solid</strong>-<strong>State</strong> <strong>Storage</strong> <strong>Devices</strong>Jehan-François PârisDept. of Computer ScienceUniversity of Houst<strong>on</strong>Houst<strong>on</strong>, TX 77204-3010paris@cs.uh.eduDarrell D. E. L<strong>on</strong>gDept. of Computer ScienceUniversity of CaliforniaSanta Cruz, CA 95064darrell@cs.ucsc.eduThomas J. E. SchwarzDept. of Computer EngineeringSanta Clara UniversitySanta Clara, CA 95053tjschwarz@scu.eduAbstractThe emergence of more reliable, often much fastersolid-stage storage devices is revoluti<strong>on</strong>izing mostaspects of data storage technology. We address herethe impact of these new storage devices <strong>on</strong> thetechniques used to ensure data survival. In particular,we show that, the higher bandwidth-to-capacity ratiosof the new <strong>Storage</strong> Class Memory devices will makeself-adaptive storage soluti<strong>on</strong>s much more attractive.1 Introducti<strong>on</strong>Many organizati<strong>on</strong>s now maintain very large amountsof data <strong>on</strong>line. This trend is due to many factors;am<strong>on</strong>g these are the lower costs of <strong>on</strong>line storage,regulatory requirements (such as the Sarbanes-OxleyAct) and the increasing rate at which digital data areproduced.A critical issue for any large data storage system ishow to ensure the survival of the data in the presence ofequipment failures. Given the limitati<strong>on</strong>s of backupsoluti<strong>on</strong>s, the best way to achieve this goal is to useredundant storage systems. Two techniques that can beused are mirroring and erasure codes. Mirroringmaintains two or more exact copies of the data <strong>on</strong>distinct disks. Erasure codes, also known as m-out-of-ncodes, group disks into sets of n disks that c<strong>on</strong>tainenough redundant data to tolerate the loss of n – mdisks.Both techniques have been developed for storagearrays c<strong>on</strong>sisting of magnetic disks. While magneticdisks have huge capacities and low storage costs, theyalso have much higher latencies and are much lessreliable than solid state devices. For instance Schroederand Gibs<strong>on</strong> [SB07] found that “in the field, annual diskreplacement rates typically exceed 1%, with 2-4%comm<strong>on</strong> and up to 13% observed <strong>on</strong> some systems.”Pinheiro et al. [PW+07] similarly observed annualfailure rates varying “from 1.7%, for drives that were intheir first year of operati<strong>on</strong>, to over 8.6%, observed inthe 3-year old populati<strong>on</strong>.”The high latency of magnetic disks has led to manyproposals for faster storage devices. These proposalshave in turn resulted in various studies analyzing theperformance of file and storage systems incorporatingthese new technologies [UM03, BB+07 and manyothers]. C<strong>on</strong>versely, the impact of these newtechnologies <strong>on</strong> the reliability of storage systems hasreceived insufficient attenti<strong>on</strong>.We propose to fill this gap by investigating thereliability characteristics of the newest and the mostpromising of these new storage technologies, the socalledstorage class memories.The remainder of this paper is organized as follows.Secti<strong>on</strong> 2 reviews past and present proposals foralternative storage technologies and introduces storageclass memories. Secti<strong>on</strong> 3 discusses the reliabilitycharacteristics of storage systems using these storageclass memories. Finally Secti<strong>on</strong> 4 has our c<strong>on</strong>clusi<strong>on</strong>s.Fig. 1. General organizati<strong>on</strong> of a storage classmemory (from [N07])2 Alternative <strong>Storage</strong> TechnologiesAlternative storage technologies that compete or havecompeted with magnetic disks include bubble memories[BS71], MEMS-based storage systems [CG+00] andflash memories [PB+97]. Am<strong>on</strong>g them, flash memoriescertainly are the most successful. They are widely usedtoday in digital cameras, cellular ph<strong>on</strong>es, digital audioplayers and various formats of memory cards. Despitetheir higher cost per byte, they begin now to replacemagnetic disks in some very small portable computers.There are however several factors that limit theapplicability of flash drives in general-purpose storagesystems. First, they have low write endurance, typicallyof the order of <strong>on</strong>e milli<strong>on</strong> erase/write cycles. Sec<strong>on</strong>d,they suffer from write–speed limitati<strong>on</strong>s. Finally, theydo not scale well below 45nm. In the absence of anytechnological breakthrough, they are not likely tocompletely replace magnetic disks.<strong>Storage</strong> class memories (SCMs) c<strong>on</strong>stitute a newclass of n<strong>on</strong>-volatile storage systems that c<strong>on</strong>tain nomoving part and use cross-bar-type chip structures to1

with initial c<strong>on</strong>diti<strong>on</strong>sp2(0)= 1p1(0)= 0whose soluti<strong>on</strong> is−2λtp2( t)= e ,−2λtλtp1= 2e( e −1).The data survival probability for our mirrored deviceisS t)= p ( t)+ p ( ) .2(2 1tTable 2. Ec<strong>on</strong>omic life span for a single device and amirrored device.Number of 9s Single Mirrored1 (90%) 0.10536 0.380132 (99%) 0.01005 0.105363 (99.9%) 0.00100 0.032134 (99.99%) 1.00E-04 0.010055 (99.999%) 1.00E-05 0.00317To obtain the ec<strong>on</strong>omic life span L(r) of eachorganizati<strong>on</strong>, we solve observe that L=(r) is thesoluti<strong>on</strong> of the equati<strong>on</strong> S n (t) = r. After setting λ = 1,the ec<strong>on</strong>omic life span is expressed in multiples of thedevice MTTF.These results are summarized in Table 1 forreliability level r equal to 0.9, 0.99, 0.999, 0.9999, and0.99999, that is, 1, 2, 3, 4, and 5 nines. As we can see,a single device achieves a life span of 1% of its MTTFwith probability 99%. An SCM module with an MTTFof ten milli<strong>on</strong> hours would thus have an ec<strong>on</strong>omic lifespan of <strong>on</strong>e hundred thousand hours or slightly morethan eleven years. More demanding applicati<strong>on</strong>s coulduse a mirrored organizati<strong>on</strong> to achieve the sameec<strong>on</strong>omic life span with a reliability level of 99.99%.This is an excellent result as we c<strong>on</strong>sidered that thedevices could not be repaired during their useful life.SCMs thus appear to become the technology of choiceto store a few gigabytes of data at locati<strong>on</strong>s makingrepairs impossible (satellites) or unec<strong>on</strong>omical (remotelocati<strong>on</strong>s).3.2 <strong>Storage</strong> capacitiesThe relatively low storage capacity of SCMs preventsus from extending these c<strong>on</strong>clusi<strong>on</strong>s to larger storagesystems. C<strong>on</strong>sider for instance a storage system with acapacity of fifty terabytes. By the expected arrival timeof the first generati<strong>on</strong> of SCMs in 2012, we are verylikely to have magnetic disks with capacities wellexceeding two terabytes. We would thus use at mostfifty of them. To achieve the same total capacity, wewould need 6,250 SCM modules. In other words, eachmagnetic disk would be replaced by 125 SCM modules.Let λ m and λ s respectively denote the failure rates ofmagnetic disks and SCM. To achieve comparabledevice failure rates in our hypothetical storage systems,we would need to have λ s < λ m /125, which is not likelyto be the case.Our sec<strong>on</strong>d c<strong>on</strong>clusi<strong>on</strong> is that storage systems builtusing first-generati<strong>on</strong> SCMs will be inherently lessreliable than comparable storage systems usingmagnetic disks. This situati<strong>on</strong> will remain true as l<strong>on</strong>gas the capacity of a SCM module remains less thanλ s /λ m times that of a magnetic disk.D 11 D 12D 13 D 14 P 1D 21 D 22D 23 D 24P 2Fig. 3. A storage system c<strong>on</strong>sisting of two RAIDarrays. (In reality, the functi<strong>on</strong>s of the two paritydisks will be distributed am<strong>on</strong>g the five disks of eacharray.)D 11 D 21 D 12 D 22D 13 D 23 P QD 14D 24Fig. 4. The same storage system organized as asingle 8-out-of-10 array. (In reality, the functi<strong>on</strong>s ofthe two check disks will be distributed am<strong>on</strong>g the allten disks).D 11 D 12D 13 D 14 P 1D 21 D 23 D DX2422Fig. 5. How the original pair of RAID arrays couldbe reorganized after the failure of data disk D 22 .3.3 Bandwidth c<strong>on</strong>siderati<strong>on</strong>sM-out-of-n codes are widely used to increase thereliability of storage systems because they have a muchlower space overhead than mirroring. The mostpopular of these codes are n–1-out-of-n codes, orRAIDs, which protect a disk array with n disks againstany single disk failure [CL+94, PG+88, SG+99].Despite their higher reliability, n–2-out-of-n codes[BM93, SB92] are much less widely used due to theirhigher update overhead.C<strong>on</strong>sider for instance a small storage systemc<strong>on</strong>sisting of ten disk drives and assume that we arewilling to accept a 20% space overhead. Figure 3shows the most likely organizati<strong>on</strong> for our array: the tendisks are grouped into two RAID level 5 arrays, eachhaving five disks. This organizati<strong>on</strong> will protect thedata against any single disk failure and thesimultaneous failures of <strong>on</strong>e disk in each array.Grouping the 10 disks into a single 8-out-of-10 arraywould protect the data against any simultaneous failure3

of two disks without increasing the space overhead.This is the organizati<strong>on</strong> that Fig. 4 displays. As wementi<strong>on</strong>ed earlier, this soluti<strong>on</strong> is less likely to beadopted due to its high update overhead.A very attractive opti<strong>on</strong> would then be to use twoRAID level 5 arrays and let the two arrays reorganizethemselves self in a transparent fashi<strong>on</strong> as so<strong>on</strong> as <strong>on</strong>eof them has detected the failure of <strong>on</strong>e of its disks. Fig.5 shows the outcome of such a process: parity disk P 2now c<strong>on</strong>tains the data that were stored <strong>on</strong> the disk thatfailed and the other parity disks while parity disk P 1now c<strong>on</strong>tains the parity of the eight other disks.A key issue for self-reorganizing disk arrays is thedurati<strong>on</strong> of the reorganizati<strong>on</strong> process [PS+06]. In ourexample, a quasi-instantaneous reorganizati<strong>on</strong> wouldachieve the same reliability as an 8-out-of-10 code butwith a lower update overhead. Two factors dominatethis reorganizati<strong>on</strong> process, namely, the time it takes todetect disk failure and the time it perform the arrayreorganizati<strong>on</strong> itself. This latter time is proporti<strong>on</strong>al tothe time it takes to overwrite a full disk. Assuming afuture disk capacity of 2 terabytes and a future diskbandwidth of 100MB/s, this operati<strong>on</strong> would requireslightly more than five hours and half. The situati<strong>on</strong> isnot likely to improve as disk bandwidths have alwaysgrown at a lower rate than disk capacities.With data rates in excess of 200 MB/s, SCMs makethis approach much more attractive as the c<strong>on</strong>tents of a16 GB module could be saved in at most 80 sec<strong>on</strong>ds.By the time larger SCM modules become available, wecan expect their bandwidths to be closer to 1 GB/s thanto 10 MB/s. As a result, we can expect selfreorganizingsets of RAID arrays built with SCMmodules to be almost as reliable as single m-out-of-narrays with the same space overhead and much higherupdate overheads.4 C<strong>on</strong>clusi<strong>on</strong>sSCMs are a new class of more reliable and much fastersolid-stage storage devices that are likely torevoluti<strong>on</strong>ize most aspects of data storage technology.We have investigated how these new devices willimpact the techniques used to ensure data survival andhave come with three major c<strong>on</strong>clusi<strong>on</strong>s. First, SCMstare likely to become the technology of choice to store afew gigabytes of data at locati<strong>on</strong>s making repairsimpossible (satellites) or unec<strong>on</strong>omical (remotelocati<strong>on</strong>s). Sec<strong>on</strong>d, the low capacities of firstgenerati<strong>on</strong>SCM modules will negate the benefits oftheir lower failure rate in most other data storageapplicati<strong>on</strong>s. Finally, the higher bandwidth-to-capacityratios of SCM devices will make self-adaptive storagesoluti<strong>on</strong>s much more attractive.More work is still needed to estimate how the highershock and vibrati<strong>on</strong> resistance of SCMs will affect theirfield reliability and find the most cost-effective selfreorganizingarray soluti<strong>on</strong>s for SCMs.Proc. Internati<strong>on</strong>al Performance C<strong>on</strong>ference <strong>on</strong>Computers and Communicati<strong>on</strong> (IPCCC '07), NewOrleans. LA, Apr. 2007[BS71] A. H. Bobeck and H. E. D. Scovil, Magnetic Bubbles,Scientific American, 224(6):78-91, June 1971[BM93] W. A. Burkhard and J. Men<strong>on</strong>. Disk array storage systemreliability. In Proc. 23 rd Internati<strong>on</strong>al Symposium. <strong>on</strong>Fault-Tolerant Computing, Toulouse, France, pp. 432-441,June 1993.[CG+00] L. R. Carley, G. R. Ganger, and D. F. Nagle, MEMS-basedintegrated-circuit mass-storage systems. Communicati<strong>on</strong>sof the ACM, 43(11):73-80, Nov. 2000.[CL+94] P. M. Chen, E. K. Lee, G. A. Gibs<strong>on</strong>, R. Katz and D. A.Patters<strong>on</strong>. RAID, High-performance, reliable sec<strong>on</strong>darystorage, ACM Computing Surveys 26(2):145–185, 1994.[E07[ E-week, Intel previews potential replacement for flashmemory, www.eweek.com/article2/0,1895,2021815,00.asp[N07] S. Narayan, <strong>Storage</strong> Class Memory A DisruptiveTechnology, Presentati<strong>on</strong> at Disruptive TechnologiesPanel: Memory Systems of SC '07, Reno, NV, Nov. 2007[PS+06] J.-F. Pâris, T. J. E. Schwarz and D. D. E. L<strong>on</strong>g. Selfadaptivedisk arrays. In Proc. 8 th Internati<strong>on</strong>al Symposium<strong>on</strong> Stabilizati<strong>on</strong>, Safety, and Security of DistributedSystems, Dallas, TX, pp. 469–483, Nov. 2006.[PS08] J.-F. Pâris and T. J. Schwarz. On the Possibility of Small,Service-Free Disk Based <strong>Storage</strong> Systems, In Proc. 3 rdInternati<strong>on</strong>al C<strong>on</strong>ference <strong>on</strong> Availability, Reliability andSecurity (ARES 2008), Barcel<strong>on</strong>a, Spain, Mar. 2008, toappear.[PG+88] D. A. Patters<strong>on</strong>, G. A. Gibs<strong>on</strong> and R. Katz. A case forredundant arrays of inexpensive disks (RAID). In Proc.SIGMOD 1988 Internati<strong>on</strong>al C<strong>on</strong>ference <strong>on</strong> <str<strong>on</strong>g>Data</str<strong>on</strong>g>Management, Chicago, IL, pp. 109–116, June 1988.[PB+97] P. Pavan, R. Bez, P. Olivo, E. Zan<strong>on</strong>i,. Flash memorycells-an overview, Proceedings of the IEEE, 85(8):1248-1271, Aug 1997.[PW07] E. Pinheiro, W.-D. Weber and L. A. Barroso, FailureTrends in a Large Disk Drive Populati<strong>on</strong>, In Proc. 5thUSENIX C<strong>on</strong>ference <strong>on</strong> File and <strong>Storage</strong> Technologies,San Jose, CA, pp. 17–28, Feb. 2007.[SG07] B. Schroeder and G. A. Gibs<strong>on</strong>, Disk Failures in the RealWorld: What Does an MTTF of 1,000,000 Hours Mean toYou? In Proc. 5th USENIX C<strong>on</strong>ference <strong>on</strong> File and<strong>Storage</strong> Technologies, San Jose, CA, pp. 1–16, Feb. 2007.[SG99] M. Schulze, G. A. Gibs<strong>on</strong>, R. Katz, R. and D. A. Patters<strong>on</strong>.How reliable is a RAID? In Proc. Spring COMPCON 89C<strong>on</strong>ference, pp. 118–123, Mar. 1989.[SB92] T. J. E. Schwarz and W. A. Burkhard. RAID organizati<strong>on</strong>and performance. In Proc. 12 th Internati<strong>on</strong>al C<strong>on</strong>ference<strong>on</strong> Distributed Computing Systems, pp. 318–325 June1992.[UM+03] Mustafa Uysal, Arif Merchant, Guillermo A. Alvarez.Using MEMS-based storage in disk arrays, In Proc. 2 ndUSENIX C<strong>on</strong>ference <strong>on</strong> File and <strong>Storage</strong> Technologies(FAST '03), San Francisco, CA, pp. 89–101, Mar.-Apr.2003.[XB99] L. Xu and J. Bruck: X-code: MDS array codes withoptimal encoding. IEEE Trans. <strong>on</strong> Informati<strong>on</strong> Theory,45(1):272–276, Jan. 1999.References[BB+07] T. Biss<strong>on</strong>, S. Brandt, and D. D E. L<strong>on</strong>g, A Hybrid diskawarespin-down algorithm with I/O subsystem support, In4