Part Of Speech Tagging and Chunking with HMM and CRF

Part Of Speech Tagging and Chunking with HMM and CRF

Part Of Speech Tagging and Chunking with HMM and CRF

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

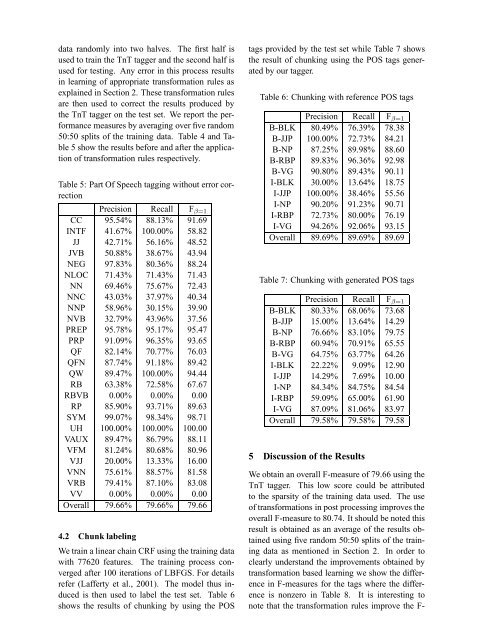

data r<strong>and</strong>omly into two halves. The first half isused to train the TnT tagger <strong>and</strong> the second half isused for testing. Any error in this process resultsin learning of appropriate transformation rules asexplained in Section 2. These transformation rulesare then used to correct the results produced bythe TnT tagger on the test set. We report the performancemeasures by averaging over five r<strong>and</strong>om50:50 splits of the training data. Table 4 <strong>and</strong> Table5 show the results before <strong>and</strong> after the applicationof transformation rules respectively.Table 5: <strong>Part</strong> <strong>Of</strong> <strong>Speech</strong> tagging <strong>with</strong>out error correctionPrecision Recall F β=1CC 95.54% 88.13% 91.69INTF 41.67% 100.00% 58.82JJ 42.71% 56.16% 48.52JVB 50.88% 38.67% 43.94NEG 97.83% 80.36% 88.24NLOC 71.43% 71.43% 71.43NN 69.46% 75.67% 72.43NNC 43.03% 37.97% 40.34NNP 58.96% 30.15% 39.90NVB 32.79% 43.96% 37.56PREP 95.78% 95.17% 95.47PRP 91.09% 96.35% 93.65QF 82.14% 70.77% 76.03QFN 87.74% 91.18% 89.42QW 89.47% 100.00% 94.44RB 63.38% 72.58% 67.67RBVB 0.00% 0.00% 0.00RP 85.90% 93.71% 89.63SYM 99.07% 98.34% 98.71UH 100.00% 100.00% 100.00VAUX 89.47% 86.79% 88.11VFM 81.24% 80.68% 80.96VJJ 20.00% 13.33% 16.00VNN 75.61% 88.57% 81.58VRB 79.41% 87.10% 83.08VV 0.00% 0.00% 0.00Overall 79.66% 79.66% 79.664.2 Chunk labelingWe train a linear chain <strong>CRF</strong> using the training data<strong>with</strong> 77620 features. The training process convergedafter 100 iterations of LBFGS. For detailsrefer (Lafferty et al., 2001). The model thus inducedis then used to label the test set. Table 6shows the results of chunking by using the POStags provided by the test set while Table 7 showsthe result of chunking using the POS tags generatedby our tagger.Table 6: <strong>Chunking</strong> <strong>with</strong> reference POS tagsPrecision Recall F β=1B-BLK 80.49% 76.39% 78.38B-JJP 100.00% 72.73% 84.21B-NP 87.25% 89.98% 88.60B-RBP 89.83% 96.36% 92.98B-VG 90.80% 89.43% 90.11I-BLK 30.00% 13.64% 18.75I-JJP 100.00% 38.46% 55.56I-NP 90.20% 91.23% 90.71I-RBP 72.73% 80.00% 76.19I-VG 94.26% 92.06% 93.15Overall 89.69% 89.69% 89.69Table 7: <strong>Chunking</strong> <strong>with</strong> generated POS tagsPrecision Recall F β=1B-BLK 80.33% 68.06% 73.68B-JJP 15.00% 13.64% 14.29B-NP 76.66% 83.10% 79.75B-RBP 60.94% 70.91% 65.55B-VG 64.75% 63.77% 64.26I-BLK 22.22% 9.09% 12.90I-JJP 14.29% 7.69% 10.00I-NP 84.34% 84.75% 84.54I-RBP 59.09% 65.00% 61.90I-VG 87.09% 81.06% 83.97Overall 79.58% 79.58% 79.585 Discussion of the ResultsWe obtain an overall F-measure of 79.66 using theTnT tagger. This low score could be attributedto the sparsity of the training data used. The useof transformations in post processing improves theoverall F-measure to 80.74. It should be noted thisresult is obtained as an average of the results obtainedusing five r<strong>and</strong>om 50:50 splits of the trainingdata as mentioned in Section 2. In order toclearly underst<strong>and</strong> the improvements obtained bytransformation based learning we show the differencein F-measures for the tags where the differenceis nonzero in Table 8. It is interesting tonote that the transformation rules improve the F-