Ch 9 Interval Estimation


Intro<br />

Chapter 9 outline: Intro, Finding Interval Estimator, Optimal theory for CI<br />

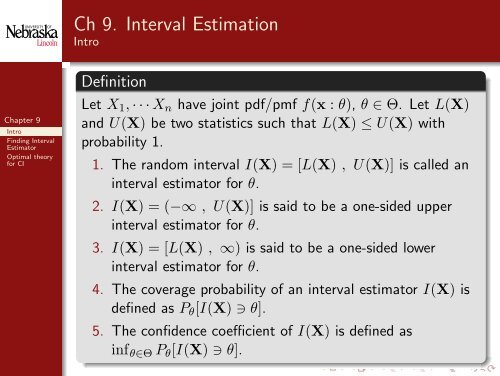

Definition<br />

Let $X_1, \dots, X_n$ have joint pdf/pmf $f(x : \theta)$, $\theta \in \Theta$. Let $L(X)$ and $U(X)$ be two statistics such that $L(X) \le U(X)$ with probability 1.<br />

1. The random interval $I(X) = [L(X), U(X)]$ is called an interval estimator for $\theta$.<br />

2. $I(X) = (-\infty, U(X)]$ is said to be a one-sided upper interval estimator for $\theta$.<br />

3. $I(X) = [L(X), \infty)$ is said to be a one-sided lower interval estimator for $\theta$.<br />

4. The coverage probability of an interval estimator $I(X)$ is defined as $P_\theta[I(X) \ni \theta]$.<br />

5. The confidence coefficient of $I(X)$ is defined as $\inf_{\theta \in \Theta} P_\theta[I(X) \ni \theta]$.


⊲ Example: $X_1, \dots, X_n \overset{iid}{\sim} \mathrm{Uniform}(0, \theta)$. Consider the following two interval estimators of $\theta$, where $X_{(n)} = \max_{1 \le i \le n} X_i$.<br />

$I_1(X) = [aX_{(n)}, bX_{(n)}]$, $1 \le a < b$<br />

$I_2(X) = [X_{(n)} + c, \infty)$
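The coverage probabilities of these two estimators follow from the cdf of $X_{(n)}$, since $P_\theta(X_{(n)} \le t) = (t/\theta)^n$ for $0 \le t \le \theta$. The Python sketch below is only an illustration: the function names are hypothetical, and it assumes $c \ge 0$, which the example does not state.

```python
import random


def coverage_I1(a, b, n):
    """Exact coverage of [a*X_(n), b*X_(n)] for 1 <= a < b.

    P(theta/b <= X_(n) <= theta/a) = (1/a)^n - (1/b)^n, free of theta.
    """
    return (1.0 / a) ** n - (1.0 / b) ** n


def coverage_I2(c, theta, n):
    """Exact coverage of [X_(n) + c, inf), assuming c >= 0 (an assumption).

    P(X_(n) <= theta - c) = (1 - c/theta)^n if theta >= c, and 0 otherwise.
    """
    return max(0.0, 1.0 - c / theta) ** n


def simulate_I1(a, b, theta, n, reps=20000, seed=0):
    """Monte Carlo estimate of the coverage of I_1, as a sanity check."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(reps):
        x_max = max(rng.uniform(0.0, theta) for _ in range(n))
        hits += a * x_max <= theta <= b * x_max
    return hits / reps
```

Under these assumptions the coverage of $I_1$ does not depend on $\theta$, so its confidence coefficient is $(1/a)^n - (1/b)^n$. For $c > 0$ the coverage of $I_2$ is 0 whenever $\theta \le c$, so its confidence coefficient is 0.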


Finding Interval Estimator - Inverting test<br />

⊲ Example: $X_1, \dots, X_n \overset{iid}{\sim} N(\theta, 1)$<br />

$H_0 : \theta = \theta_0$ vs $H_1 : \theta \ne \theta_0$<br />

The LRT of size $\alpha$ is<br />

$$\phi(x) = \begin{cases} 1, & |\sqrt{n}(\bar{x} - \theta_0)| \ge z_{1-\alpha/2}, \\ 0, & \text{elsewhere.} \end{cases}$$

$$\Longrightarrow P_{\theta_0}\{\bar{X} - z_{1-\alpha/2}/\sqrt{n} \le \theta_0 \le \bar{X} + z_{1-\alpha/2}/\sqrt{n}\} = 1 - \alpha$$
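A value $\theta_0$ lies in the interval exactly when the size $\alpha$ test of $H_0 : \theta = \theta_0$ does not reject. The following Python sketch shows this using only the standard library; the function names and the sample values are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, fmean


def lrt_rejects(xs, theta0, alpha=0.05):
    """Size-alpha LRT of H0: theta = theta0 for iid N(theta, 1) data."""
    z = NormalDist().inv_cdf(1 - alpha / 2)
    return abs(sqrt(len(xs)) * (fmean(xs) - theta0)) >= z


def z_interval(xs, alpha=0.05):
    """Interval obtained by inverting the acceptance region of the LRT."""
    z = NormalDist().inv_cdf(1 - alpha / 2)
    half_width = z / sqrt(len(xs))
    center = fmean(xs)
    return center - half_width, center + half_width


xs = [0.3, -1.2, 0.8, 1.5, 0.1]  # illustrative data only
lo, hi = z_interval(xs)
# Away from the endpoints, membership in the interval matches acceptance.
agree = all((lo <= t <= hi) == (not lrt_rejects(xs, t))
            for t in (-2.0, -0.5, 0.0, 0.3, 1.0, 2.5))
```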


Theorem<br />

Let $X_1, \dots, X_n$ have joint pdf/pmf $f(x : \theta)$, $\theta \in \Theta$. For each $\theta_0 \in \Theta$, let $A(\theta_0)$ denote the acceptance region of a size $\alpha$ simple test for testing<br />

$H_0 : \theta = \theta_0$ vs $H_1 : \theta \ne \theta_0$<br />

Define a set $C(x) = \{\theta_0 \in \Theta : x \in A(\theta_0)\}$. Then $C(X)$ is a confidence set with confidence coefficient $1 - \alpha$.<br />

⊳ Note:<br />

1. $C(X)$ is not necessarily an interval.<br />

2. One may need to consider a one-sided test to obtain a one-sided confidence interval.
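The definition of $C(x)$ can be applied literally by scanning candidate values $\theta_0$ and keeping those whose test accepts. The sketch below does this on a finite grid. It assumes iid $N(\theta, 1)$ data and the one-sided z-test of $H_0 : \theta = \theta_0$ vs $H_1 : \theta > \theta_0$. These choices, the grid, and all names are illustrative and not part of the theorem.

```python
from math import sqrt
from statistics import NormalDist, fmean


def accepts_one_sided(xs, theta0, alpha=0.05):
    """Acceptance region of the size-alpha z-test against H1: theta > theta0."""
    z = NormalDist().inv_cdf(1 - alpha)
    return sqrt(len(xs)) * (fmean(xs) - theta0) < z


def confidence_set(xs, grid, accepts, alpha=0.05):
    """C(x) = {theta0 in grid : x in A(theta0)}, evaluated on a finite grid."""
    return [t for t in grid if accepts(xs, t, alpha)]


xs = [0.3, -1.2, 0.8, 1.5, 0.1]             # illustrative data only
grid = [i / 100 for i in range(-300, 301)]  # candidate values of theta0
cs = confidence_set(xs, grid, accepts_one_sided)
lower_bound = fmean(xs) - NormalDist().inv_cdf(0.95) / sqrt(len(xs))
```

In this setting the accepted values are exactly the grid points above `lower_bound`, so inverting the one-sided test gives a one-sided lower interval, as note 2 suggests.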


⊲ Example: $X_1, \dots, X_n \overset{iid}{\sim} N(\mu, \sigma^2)$. $\mu$ and $\sigma^2$ are unknown.<br />

Find a $1 - \alpha$ two-sided CI and a one-sided lower CI for $\mu$.
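One possible route, sketched here on the assumption that the size $\alpha$ t-test is the test being inverted. The symbols $\bar{x}$, $s$, and $t_{n-1,p}$ (the $p$-quantile of the t distribution with $n-1$ degrees of freedom) are notation introduced for this sketch.

```latex
\begin{align*}
A(\mu_0) &= \left\{ x : \left| \frac{\sqrt{n}(\bar{x} - \mu_0)}{s} \right| \le t_{n-1,\,1-\alpha/2} \right\},
  \qquad s^2 = \frac{1}{n-1} \sum_{i=1}^{n} (x_i - \bar{x})^2, \\
C(x) &= \{\mu_0 : x \in A(\mu_0)\}
  = \left[ \bar{x} - t_{n-1,\,1-\alpha/2} \frac{s}{\sqrt{n}},\;
           \bar{x} + t_{n-1,\,1-\alpha/2} \frac{s}{\sqrt{n}} \right], \\
\text{one-sided lower: } & H_0 : \mu = \mu_0 \text{ vs } H_1 : \mu > \mu_0
  \;\Longrightarrow\;
  C(x) = \left[ \bar{x} - t_{n-1,\,1-\alpha} \frac{s}{\sqrt{n}},\; \infty \right).
\end{align*}
```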


⊲ Example: $X_1, \dots, X_n \overset{iid}{\sim} f(x \mid \theta) = \theta^2 x e^{-\theta x}$, $x > 0$. Find an approximate $1 - \alpha$ confidence set of $\theta$.
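One way to get an approximate set, which may not be the method the slide intends, is to invert the LRT using the large-sample approximation $-2\log\lambda(\theta_0) \approx \chi^2_1$. For this density the MLE is $\hat\theta = 2n/\sum x_i$ and $-2\log\lambda(\theta_0) = 4n\,(r - 1 - \log r)$ with $r = \theta_0/\hat\theta$. The sketch below finds the two endpoints by bisection. All function names are hypothetical, and the $\chi^2_1$ quantile is computed as $z_{1-\alpha/2}^2$.

```python
from math import log
from statistics import NormalDist


def neg2_log_lambda(theta0, xs):
    """-2 log LRT statistic for f(x|theta) = theta^2 x exp(-theta x)."""
    n = len(xs)
    theta_hat = 2.0 * n / sum(xs)  # MLE
    r = theta0 / theta_hat
    return 4.0 * n * (r - 1.0 - log(r))


def _root(xs, crit, lo, hi, iters=200):
    """Bisection for neg2_log_lambda(theta) = crit on a bracket [lo, hi]."""
    lo_above = neg2_log_lambda(lo, xs) > crit
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if (neg2_log_lambda(mid, xs) > crit) == lo_above:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def approx_lrt_interval(xs, alpha=0.05):
    """Approximate 1 - alpha set {theta0 : -2 log lambda(theta0) <= chi2_{1,1-alpha}}."""
    theta_hat = 2.0 * len(xs) / sum(xs)
    crit = NormalDist().inv_cdf(1 - alpha / 2) ** 2  # chi-square(1) quantile
    upper_bracket = 2.0 * theta_hat
    while neg2_log_lambda(upper_bracket, xs) < crit:
        upper_bracket *= 2.0
    lower = _root(xs, crit, theta_hat * 1e-12, theta_hat)
    upper = _root(xs, crit, theta_hat, upper_bracket)
    return lower, upper
```

Because $r - 1 - \log r$ is convex in $r$ with its minimum at $r = 1$, the accepted values form an interval around $\hat\theta$ in this example.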


Finding Interval Estimator - Using PQ<br />


Definition<br />

Let $X_1, \dots, X_n$ have joint pdf/pmf $f(x : \theta)$, $\theta \in \Theta$. A random variable $Y = Q(X : \theta)$ is called a pivotal quantity (PQ) if the distribution of $Y = Q(X : \theta)$ does not depend on $\theta$.<br />

⊲ Example: $X_1, \dots, X_n \overset{iid}{\sim} f(x : \theta)$. Consider the following families of distributions and statistics.<br />

1. $f(x : \theta) = f_0(x - \theta)$<br />

2. $f(x : \theta) = \frac{1}{\theta} f_0(x/\theta)$<br />

3. $f(x : \theta) = \frac{1}{\theta_2} f_0[(x - \theta_1)/\theta_2]$<br />

$\bar{X}_n - \theta$, $\bar{X}_n/\theta$, $(\bar{X}_n - \theta_1)/\theta_2$
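To see why the first two quantities are pivotal, write $X_i = \theta + Z_i$ in the location family and $X_i = \theta E_i$ in the scale family, where $Z_i$ and $E_i$ have density $f_0$. The sketch below uses $f_0 = N(0, 1)$ and $f_0 = \mathrm{Exponential}(1)$ as illustrative choices that are not in the example, and all names are hypothetical. Drawing the same underlying noise for different $\theta$ gives the same pivot values up to rounding.

```python
import random
from statistics import fmean


def location_pivots(theta, n=5, reps=1000, seed=0):
    """Values of Xbar - theta when X_i = theta + Z_i, Z_i ~ N(0, 1)."""
    rng = random.Random(seed)
    out = []
    for _ in range(reps):
        xs = [theta + rng.gauss(0.0, 1.0) for _ in range(n)]
        out.append(fmean(xs) - theta)
    return out


def scale_pivots(theta, n=5, reps=1000, seed=0):
    """Values of Xbar / theta when X_i = theta * E_i, E_i ~ Exponential(1)."""
    rng = random.Random(seed)
    out = []
    for _ in range(reps):
        xs = [theta * rng.expovariate(1.0) for _ in range(n)]
        out.append(fmean(xs) / theta)
    return out


def max_gap(u, v):
    """Largest absolute difference between two equally long samples."""
    return max(abs(a - b) for a, b in zip(u, v))
```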


⊲ Example: $X_1, \dots, X_n \overset{iid}{\sim} \exp(\lambda)$.<br />

$T = \sum X_i \sim \mathrm{Gamma}(\ ,\ )$<br />

Find a $(1 - \alpha)100\%$ confidence interval of $\lambda$.
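The slide does not say whether $\lambda$ is a rate or a mean. As a sketch only, assume the rate parameterization $f(x : \lambda) = \lambda e^{-\lambda x}$, $x > 0$, and write $\chi^2_{2n,p}$ for the $p$-quantile of the chi-square distribution with $2n$ degrees of freedom (notation introduced here). Then $2\lambda T$ is a pivotal quantity:

```latex
\begin{align*}
2\lambda T &\sim \chi^2_{2n}, \\
P_\lambda\!\left\{ \chi^2_{2n,\,\alpha/2} \le 2\lambda T \le \chi^2_{2n,\,1-\alpha/2} \right\} &= 1 - \alpha, \\
\Longrightarrow\quad
\left[ \frac{\chi^2_{2n,\,\alpha/2}}{2T},\; \frac{\chi^2_{2n,\,1-\alpha/2}}{2T} \right]
&\text{ is a } (1-\alpha)100\% \text{ confidence interval for } \lambda .
\end{align*}
```

If $\lambda$ denotes the mean instead, the pivot becomes $2T/\lambda \sim \chi^2_{2n}$ and the endpoints invert accordingly.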


Theorem (see Theorem 2.1.10 for reference)<br />

Suppose $T = T(X)$ is a statistic calculated from $X_1, \dots, X_n$. Assume $T$ has a continuous distribution with cdf<br />

$F(t : \theta) = P_\theta(T \le t)$.<br />

Then<br />

$Q(T : \theta) = F(T : \theta)$<br />

is a PQ.<br />

⊳ Note: In order for $Q(T : \theta) = F(T : \theta)$ to result in a confidence interval, we want $F(t : \theta)$ to be monotone in $\theta$. A family of cdfs $F(t : \theta)$ that is decreasing in $\theta$ for all $t$ is said to be stochastically increasing; one that is increasing in $\theta$ for all $t$ is said to be stochastically decreasing.
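The reason $F(T : \theta)$ is pivotal is the probability integral transform: for continuous $T$, $F(T : \theta) \sim \mathrm{Uniform}(0, 1)$ whatever the value of $\theta$. The simulation below checks this for one illustrative case, $T \sim \mathrm{Exponential}$ with rate $\theta$. This model and the function names are assumptions and are not taken from the slide.

```python
import random
from math import exp


def pit_values(theta, reps=20000, seed=0):
    """Draw T ~ Exponential(rate theta) and return F(T : theta) = 1 - exp(-theta*T)."""
    rng = random.Random(seed)
    return [1.0 - exp(-theta * rng.expovariate(theta)) for _ in range(reps)]


def fraction_below(values, u):
    """Empirical cdf of the transformed values at u; about u if they are Uniform(0, 1)."""
    return sum(v <= u for v in values) / len(values)
```

For each $\theta$ tried, `fraction_below(pit_values(theta), u)` should be close to `u`, which is what a $\mathrm{Uniform}(0, 1)$ pivot predicts.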


⊲ Example: $X_1, \dots, X_n \overset{iid}{\sim} N(\mu, \sigma^2)$, $\sigma^2$ is known. Let $T(X) = \bar{X}$. Then<br />

$$Q(T : \mu) = F(T : \mu) = \Phi\!\left( \frac{T - \mu}{\sigma/\sqrt{n}} \right).$$
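A short worked step from this pivot to an interval, taking equal tail probabilities $\alpha/2$ (a choice made here for illustration) and writing $z_p$ for the $p$-quantile of $N(0, 1)$:

```latex
\begin{align*}
1 - \alpha
  &= P_\mu\!\left\{ \frac{\alpha}{2} \le \Phi\!\left( \frac{T - \mu}{\sigma/\sqrt{n}} \right) \le 1 - \frac{\alpha}{2} \right\}
   = P_\mu\!\left\{ z_{\alpha/2} \le \frac{T - \mu}{\sigma/\sqrt{n}} \le z_{1-\alpha/2} \right\} \\
  &= P_\mu\!\left\{ T - z_{1-\alpha/2} \frac{\sigma}{\sqrt{n}} \le \mu \le T + z_{1-\alpha/2} \frac{\sigma}{\sqrt{n}} \right\},
  \qquad \text{using } z_{\alpha/2} = -z_{1-\alpha/2}.
\end{align*}
```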


⊲ Example: $X_1, \dots, X_n \overset{iid}{\sim} f(x : \mu) = e^{-(x-\mu)}$, $x \ge \mu$. Let $T(X) = X_{(1)} = \min_{1 \le i \le n} X_i$. Derive a $(1 - \alpha)100\%$ confidence interval using the cdf of $T$.
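Here $F(t : \mu) = 1 - e^{-n(t - \mu)}$ for $t \ge \mu$, which is decreasing in $\mu$. Solving $\alpha/2 \le F(T : \mu) \le 1 - \alpha/2$ for $\mu$ gives $[\,T + \log(\alpha/2)/n,\; T + \log(1 - \alpha/2)/n\,]$. The equal-tailed split is one choice among several and is assumed here. The Python sketch below computes that interval and checks its coverage by simulation; the function names are hypothetical.

```python
import random
from math import log


def shifted_exp_interval(xs, alpha=0.05):
    """Equal-tailed interval for mu from the cdf pivot 1 - exp(-n (T - mu)), T = min(xs)."""
    n = len(xs)
    t = min(xs)
    return t + log(alpha / 2) / n, t + log(1 - alpha / 2) / n


def coverage(mu, n, alpha=0.05, reps=20000, seed=1):
    """Monte Carlo estimate of P_mu[lower <= mu <= upper]."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(reps):
        xs = [mu + rng.expovariate(1.0) for _ in range(n)]
        lo, hi = shifted_exp_interval(xs, alpha)
        hits += lo <= mu <= hi
    return hits / reps
```

Both endpoints lie below $T$, as they must, since $\mu \le X_{(1)}$ with probability 1.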


Finding Interval Estimator - Bayesian Interval<br />


Definition<br />

$[L(x), U(x)]$ is called a $(1 - \alpha)100\%$ credible set (or Bayesian interval) if<br />

$$1 - \alpha = P[L(x) < \theta < U(x) \mid X = x] = \begin{cases} \sum_{L(x) < \theta < U(x)} \pi(\theta \mid x), & \text{discrete} \\ \int_{L(x)}^{U(x)} \pi(\theta \mid x)\, d\theta, & \text{continuous} \end{cases}$$

⊲ Example: $X_1, \dots, X_n \overset{iid}{\sim} N(\mu, 1)$. $\mu \sim N(0, 1)$. Find a $(1 - \alpha)$ credible set.
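For this conjugate pair the posterior is $\mu \mid x \sim N\!\left( \frac{\sum x_i}{n + 1}, \frac{1}{n + 1} \right)$, so one credible set is the posterior mean plus or minus $z_{1-\alpha/2}/\sqrt{n + 1}$. The sketch below computes this equal-tailed interval, which is one of many sets with posterior probability $1 - \alpha$; the function name and sample values are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def normal_credible_interval(xs, alpha=0.05):
    """Equal-tailed credible interval for mu with N(mu, 1) data and a N(0, 1) prior."""
    n = len(xs)
    post_mean = sum(xs) / (n + 1)    # posterior mean
    post_sd = 1.0 / sqrt(n + 1)      # posterior standard deviation
    z = NormalDist().inv_cdf(1 - alpha / 2)
    return post_mean - z * post_sd, post_mean + z * post_sd


xs = [0.3, -1.2, 0.8, 1.5, 0.1]  # illustrative data only
lo, hi = normal_credible_interval(xs)
```

Compared with the frequentist interval $\bar{x} \pm z_{1-\alpha/2}/\sqrt{n}$, this interval is centred at a value shrunk toward the prior mean 0 and is slightly narrower.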


Optimal theory for CI<br />

CI: Length of CI vs Coverage probability<br />


Definition<br />

$f(x)$ is a unimodal pdf if $f(x)$ is nondecreasing for $x \le x^*$ and nonincreasing for $x \ge x^*$, in which case $x^*$ is the mode of the distribution.<br />

Theorem (Shortest CI based on unimodal distn.)<br />

Let $f(x)$ be a unimodal pdf. If the interval $[a, b]$ satisfies<br />

i. $\int_a^b f(x)\,dx = 1 - \alpha$<br />

ii. $f(a) = f(b) > 0$<br />

iii. $a \le x^* \le b$, where $x^*$ is a mode of $f(x)$<br />

then no other interval satisfying (i) is shorter than $[a, b]$.
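A numerical illustration of the theorem, using the Gamma(shape 2, rate 1) density $f(x) = x e^{-x}$ as an example of a unimodal, asymmetric pdf with mode $x^* = 1$. This density and all function names are assumptions chosen for the sketch. The code solves conditions (i) and (ii) by bisection and compares the result with the equal-tailed interval.

```python
from math import exp


def pdf(x):
    """Gamma(shape 2, rate 1) density; unimodal with mode at x = 1."""
    return x * exp(-x)


def cdf(x):
    return 1.0 - (1.0 + x) * exp(-x)


def bisect(func, lo, hi, iters=200):
    """Root of func on [lo, hi], assuming func(lo) < 0 < func(hi)."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if func(mid) < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def quantile(p):
    return bisect(lambda x: cdf(x) - p, 0.0, 100.0)


def upper_end(a, alpha):
    """The b with integral of f over [a, b] equal to 1 - alpha (condition i)."""
    return quantile(cdf(a) + 1.0 - alpha)


def shortest_interval(alpha=0.05):
    """Solve f(a) = f(b) (condition ii) subject to condition (i)."""
    a_max = quantile(alpha)  # for larger a, no b gives mass 1 - alpha
    a = bisect(lambda t: pdf(t) - pdf(upper_end(t, alpha)),
               1e-9, a_max * (1.0 - 1e-9))
    return a, upper_end(a, alpha)


def equal_tailed_interval(alpha=0.05):
    return quantile(alpha / 2), quantile(1.0 - alpha / 2)
```

For this skewed density the interval from `shortest_interval` is strictly shorter than the equal-tailed one, which does not satisfy condition (ii).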


⊲ Example: $X_1, \dots, X_n \overset{iid}{\sim} N(\mu, \sigma^2)$. $\sigma^2$ is known.<br />

⊲ Example: $X_1, \dots, X_n \overset{iid}{\sim} N(\mu, \sigma^2)$. $\sigma^2$ is unknown.


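Definition (Probability of false coverage)<br />

For $\theta' \ne \theta$, $P_\theta[L(X) \le \theta' \le U(X)]$ (two-sided interval $[L(X), U(X)]$)<br />

For $\theta' < \theta$, $P_\theta[L(X) \le \theta']$ (one-sided lower interval $[L(X), \infty)$)<br />

For $\theta' > \theta$, $P_\theta[\theta' \le U(X)]$ (one-sided upper interval $(-\infty, U(X)]$)<br />

Definition<br />

A $1 - \alpha$ confidence interval that minimizes the probability of false coverage, uniformly in $\theta'$, among $1 - \alpha$ confidence intervals is called a Uniformly Most Accurate (UMA) $1 - \alpha$ confidence interval.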
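As a concrete case, take iid $N(\theta, 1)$ data and the one-sided lower interval $[\bar{X} - z_{1-\alpha}/\sqrt{n}, \infty)$. This model is an assumption borrowed for illustration. Its probability of false coverage at $\theta' < \theta$ is $P_\theta[L(X) \le \theta'] = \Phi(\sqrt{n}(\theta' - \theta) + z_{1-\alpha})$. The function name below is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def false_coverage_lower(theta_prime, theta, n, alpha=0.05):
    """P_theta[L(X) <= theta'] for L(X) = Xbar - z_{1-alpha}/sqrt(n), X_i iid N(theta, 1)."""
    z = NormalDist().inv_cdf(1 - alpha)
    return NormalDist().cdf(sqrt(n) * (theta_prime - theta) + z)


# False coverage at values below the true theta = 0, with n = 10.
probs = [false_coverage_lower(tp, 0.0, 10) for tp in (-1.0, -0.5, -0.1)]
```

The probability equals $1 - \alpha$ at $\theta' = \theta$ and shrinks as $\theta'$ moves further below $\theta$, so false values far from the truth are rarely covered.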


Theorem (UMA CI based on UMP test)<br />

Let $X_1, \dots, X_n$ have a joint pdf/pmf $f(x : \theta)$. Suppose that a UMP test of size $\alpha$ for testing $H_0 : \theta \le \theta_0$ vs $H_1 : \theta > \theta_0$ exists and is given by<br />

$$\phi(x) = \begin{cases} 1, & x \notin A^*(\theta_0), \\ 0, & x \in A^*(\theta_0). \end{cases}$$

Let $C^*(X)$ be the confidence interval obtained by inverting the UMP acceptance region. Then, for any other $1 - \alpha$ confidence region (set, interval) $C(X)$,<br />

$$P_\theta[\theta' \in C^*(X)] \le P_\theta[\theta' \in C(X)],$$

for all $\theta' < \theta$. That is, inverting a UMP test yields a UMA confidence region (set, interval).


⊳ Note: A UMP unbiased test can be inverted to obtain a UMA unbiased confidence region (set, interval).<br />

⊲ Example: $X_1, \dots, X_n \overset{iid}{\sim} N(\mu, \sigma^2)$. $\sigma^2$ is known.