Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

and Local-Disk Failover. Failover will only happen if the host taking over is part of a<br />

quorum of greater than 50% of the hosts in the cluster. 15 (For administrative details see<br />

the Scalability, Availability, and Failover Guide.)<br />

SHARED-DISK FAILOVER<br />

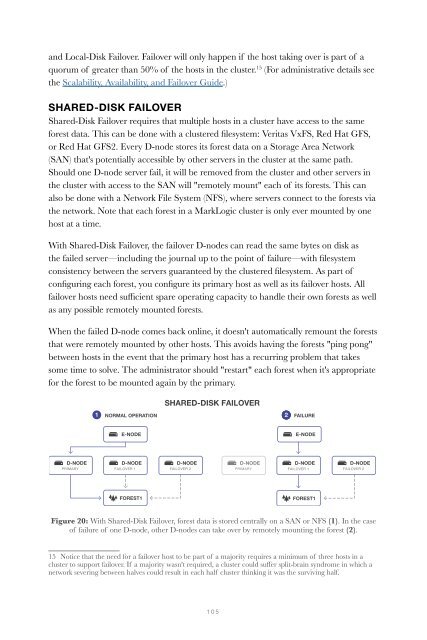

Shared-Disk Failover requires that multiple hosts in a cluster have access to the same<br />

forest data. This can be done with a clustered filesystem: Veritas VxFS, Red Hat GFS,<br />

or Red Hat GFS2. Every D-node stores its forest data on a Storage Area Network<br />

(SAN) that's potentially accessible by other servers in the cluster at the same path.<br />

Should one D-node server fail, it will be removed from the cluster and other servers in<br />

the cluster with access to the SAN will "remotely mount" each of its forests. This can<br />

also be done with a Network File System (NFS), where servers connect to the forests via<br />

the network. Note that each forest in a MarkLogic cluster is only ever mounted by one<br />

host at a time.<br />

With Shared-Disk Failover, the failover D-nodes can read the same bytes on disk as<br />

the failed server—including the journal up to the point of failure—with filesystem<br />

consistency between the servers guaranteed by the clustered filesystem. As part of<br />

configuring each forest, you configure its primary host as well as its failover hosts. All<br />

failover hosts need sufficient spare operating capacity to handle their own forests as well<br />

as any possible remotely mounted forests.<br />

When the failed D-node comes back online, it doesn't automatically remount the forests<br />

that were remotely mounted by other hosts. This avoids having the forests "ping pong"<br />

between hosts in the event that the primary host has a recurring problem that takes<br />

some time to solve. The administrator should "restart" each forest when it's appropriate<br />

for the forest to be mounted again by the primary.<br />

SHARED-DISK FAILOVER<br />

1<br />

NORMAL OPERATION<br />

2<br />

FAILURE<br />

Figure 20: With Shared-Disk Failover, forest data is stored centrally on a SAN or NFS (1). In the case<br />

of failure of one D-node, other D-nodes can take over by remotely mounting the forest (2).<br />

15 Notice that the need for a failover host to be part of a majority requires a minimum of three hosts in a<br />

cluster to support failover. If a majority wasn't required, a cluster could suffer split-brain syndrome in which a<br />

network severing between halves could result in each half cluster thinking it was the surviving half.<br />

105