
01
April 2012

Augmented Reality, Art and Technology

Introducing Added Worlds
Yolande Kolstee

The Technology Behind Augmented Reality
Pieter Jonker

Re-introducing Mosquitos
Maarten Lamers

How Did We Do It
Wim van Eck


AR[t]

Magazine about Augmented Reality, art and technology

April 2012


COLOPHON

ISSN Number
2213-2481

Contact
The Augmented Reality Lab (AR Lab)
Royal Academy of Art, The Hague
(Koninklijke Academie van Beeldende Kunsten)
Prinsessegracht 4
2514 AN The Hague
The Netherlands
+31 (0)70 3154795
www.arlab.nl
info@arlab.nl

Editorial Team
Yolande Kolstee, Hanna Schraffenberger, Esmé Vahrmeijer (graphic design) and Jouke Verlinden.

Contributors
Wim van Eck, Jeroen van Erp, Pieter Jonker, Maarten Lamers, Stephan Lukosch, Ferenc Molnár (photography) and Robert Prevel.

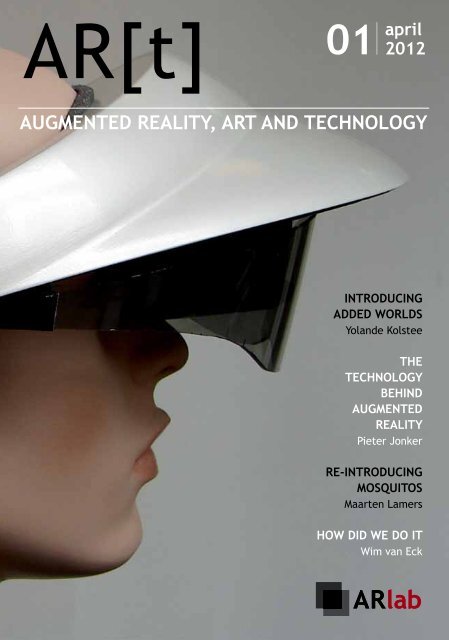

Cover
‘George’, an augmented reality headset designed by Niels Mulder during his Post Graduate Course Industrial Design (KABK), 2008


TABLE OF CONTENTS

Welcome to AR[t]

Introducing Added Worlds
Yolande Kolstee

Interview with Helen Papagiannis
Hanna Schraffenberger

The Technology Behind AR
Pieter Jonker

Re-introducing Mosquitos
Maarten Lamers

Lieven van Velthoven: The Racing Star
Hanna Schraffenberger

How Did We Do It
Wim van Eck

Pixels Want to Be Freed! Introducing Augmented Reality Enabling Hardware Technologies
Jouke Verlinden

Artist in Residence Portrait: Marina de Haas
Hanna Schraffenberger

A Magical Leverage: In Search of the Killer Application
Jeroen van Erp

The Positioning of Virtual Objects
Robert Prevel

Mediated Reality for Crime Scene Investigation
Stephan Lukosch

Die Walküre
Wim van Eck, AR Lab Student Project


WELCOME...

to the first issue of AR[t], the magazine about Augmented Reality, art and technology!

Starting with this issue, AR[t] is an aspiring magazine series for the emerging AR community inside and outside the Netherlands. The magazine is run by a small and dedicated team of researchers, artists and lecturers of the AR Lab (based at the Royal Academy of Art, The Hague), Delft University of Technology (TU Delft), Leiden University and SME. In AR[t], we share our interest in Augmented Reality (AR), discuss its applications in the arts and provide insight into the underlying technology.

At the AR Lab, we aim to understand, develop, refine and improve the amalgamation of the physical world with the virtual. We do this through a project-based approach and with the help of research funding from RAAK-Pro. In the magazine series, we invite writers from the industry, interview artists working with Augmented Reality and discuss the latest technological developments.

It is our belief that AR and its associated technologies are important to the field of new media: media artists experiment with the intersection of the physical and the virtual and probe the limits of our sensory perception in order to create new experiences. Managers of cultural heritage are seeking new possibilities for worldwide access to their collections. Designers, developers, architects and urban planners are looking for new ways to better communicate their designs to clients. Designers of games and theme parks want to create immersive experiences that integrate both the physical and the virtual world. Marketing specialists are working with new interactive forms of communication. For all of them, AR can serve as a powerful tool to realize their visions.

Media artists and designers who want to acquire an interesting position within the domain of new media have to gain knowledge about and experience with AR. This magazine series is intended to provide both theoretical knowledge and a guide towards first practical experiences with AR.

Our special focus is on the diversity of contributions. Consequently, everybody who wants to know more about AR should be able to find something of interest in this magazine, whether they are art and design students, students from technical backgrounds, engineers, developers, inventors, philosophers or readers who just happened to hear about AR and got curious.

We hope you enjoy the first issue and invite you to check out the website www.arlab.nl to learn more about Augmented Reality in the arts and the work of the AR Lab.


Introducing Added Worlds: Augmented Reality Is Here!

By Yolande Kolstee

Augmented Reality is a relatively recent computer-based technology that differs from the earlier known concept of Virtual Reality. Virtual Reality is a computer-based reality of which the actual, outer world is not directly a part, whereas Augmented Reality can be characterized by a combination of the real and the virtual.

Augmented Reality is part of the broader concept of Mixed Reality: environments that consist of both the real and the virtual. To make these differences and relations clearer, industrial engineer Paul Milgram and Fumio Kishino introduced the Mixed Reality Continuum diagram in 1994, in which the real world is placed at one end and the virtual world at the other.

[Figure: Virtuality continuum by Paul Milgram and Fumio Kishino (1994). From one end to the other: Real environment, Augmented Reality (AR), Augmented Virtuality (AV), Virtual environment; the range between the two extremes is labelled Mixed Reality (MR).]

A Short Overview of AR

We define Augmented Reality as integrating 3-D virtual objects or scenes into a 3-D environment in real time (cf. Azuma, 1997).

WHERE 3D VIRTUAL OBJECTS OR SCENES COME FROM

What is shown in the virtual world is created first. There are three ways of creating virtual objects:

1. By hand: using 3D computer graphics

Designers create 3D drawings of objects, game developers create 3D drawings of (human) figures, and (urban) architects create 3D drawings of buildings and cities. This 3D modeling by (product) designers, architects and visual artists is done using specific software, and numerous programs have been developed for it. While some software packages can be downloaded for free, others are fairly expensive. Well-known examples are Maya, Cinema 4D, 3D Studio Max, Blender, SketchUp, Rhinoceros, SolidWorks, Revit, ZBrush, AutoCAD and Autodesk. By now at least 170 different software programs are available.

2. By computer-controlled imaging equipment/3D scanners

We can distinguish different types of three-dimensional scanners: the ones used in the bio-medical world and the ones used for other purposes, although there is some overlap. Inspecting a piece of medieval art and inspecting a living human being are different, but in some ways also alike. In recent years we have seen a vigorous expansion in the use of image-producing bio-medical equipment. We owe these developments to the work of, among others, engineer Sir Godfrey Hounsfield and physicist Allan Cormack, who were jointly awarded the Nobel Prize in 1979 for their pioneering work on X-ray computed tomography (CT). Another pair of Nobel Prize winners are Paul C. Lauterbur and Peter Mansfield, who won the prize in 2003 for their discoveries concerning magnetic resonance imaging (MRI). Although their original goals were different, in the field of Augmented Reality one might use the 3D virtual models produced by such systems. However, these models have to be processed before use in AR because they might be too heavy, that is, contain too much data to be rendered in real time.

A 3D laser scanner is a device that analyses a real-world object or environment to collect data on its shape and its appearance (i.e. colour). The collected data can then be used to construct digital, three-dimensional models. These scanners are sometimes called 3D digitizers. The difference is that the medical scanners described above look inside an object to create a 3D model, while laser scanners create a virtual image from the light reflected off the outside of an object.

3. Photo and/or film images

It is possible to use a (moving) 2D image, such as a photograph, as a skin on a virtual 3D model. In this way the flat image takes on a three-dimensional appearance.
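As a rough illustration of the ‘skin’ idea, the sketch below looks up the colour that a 2D image contributes at a point of a 3D model. It assumes a common convention not described in this article: every vertex of the model carries a texture coordinate (u, v) between 0 and 1, and the simplest nearest-neighbour lookup is used. The function name `sample_texture` and the tiny example image are hypothetical.

```python
from typing import List, Tuple

Color = Tuple[int, int, int]  # (red, green, blue), 0-255


def sample_texture(image: List[List[Color]], u: float, v: float) -> Color:
    """Return the texel nearest to texture coordinate (u, v).

    u runs across the image width and v down its height, both from 0.0 to 1.0.
    Values outside that range are clamped to the image border.
    """
    height, width = len(image), len(image[0])
    u = min(max(u, 0.0), 1.0)
    v = min(max(v, 0.0), 1.0)
    col = round(u * (width - 1))
    row = round(v * (height - 1))
    return image[row][col]


# A tiny 2x2 "photo" used as the skin of one square face of a 3D model.
photo = [
    [(255, 0, 0), (0, 255, 0)],      # top row: red, green
    [(0, 0, 255), (255, 255, 255)],  # bottom row: blue, white
]

# Hypothetical (u, v) coordinates assigned to the four corners of the face.
corner_uvs = {
    "top-left": (0.0, 0.0),
    "top-right": (1.0, 0.0),
    "bottom-left": (0.0, 1.0),
    "bottom-right": (1.0, 1.0),
}

corner_colors = {name: sample_texture(photo, u, v) for name, (u, v) in corner_uvs.items()}
```

For a moving image, the same lookup would simply be repeated on each new video frame; production renderers do this on the graphics card and usually blend neighbouring texels instead of picking the nearest one.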

INTEGRATING 3-D VIRTUAL OBJECTS IN THE REAL WORLD IN REAL TIME

There are different ways of integrating virtual objects or scenes into the real world. All of them need a display. This might be a screen or monitor, small screens in AR glasses, or an object onto which the 3D images are projected. We distinguish three types of (visual) Augmented Reality:

Display type I: screen based

AR on a monitor, for example on a flatscreen or on a smartphone (using e.g. Layar). With this technology we see the real world and, at the same time, the virtual object added to it on a computer screen, monitor, smartphone or tablet computer. In that way we can, for example, add information to a book by looking at the book and the screen at the same time.

Artist: Karolina Sobecka | http://www.gravitytrap.com

Display type II: AR glasses (off-screen)

A far more sophisticated, but not yet consumer-friendly, method uses AR glasses or a head-mounted display (HMD), also called a head-up display. With this device the extra information is mixed with one’s own perception of the world. The virtual images appear in the air, in the real world, around you, and are not projected on a screen. In type II there are two ways of mixing the real world with the virtual world:

Video see-through: a camera captures the real world. The virtual images are mixed with the captured video images of the real world, and this mix creates an Augmented Reality.

Optical see-through: the real world is perceived directly with one’s own eyes in real time. Via small translucent mirrors in goggles, virtual images are displayed on top of the perceived reality.
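The mixing step of video see-through can be pictured as per-pixel blending of the camera frame with the rendered virtual image. The sketch below assumes that the renderer also outputs an opacity (alpha) value per pixel, 0 where nothing virtual was drawn and 1 where a virtual object fully covers the camera image. This is standard alpha compositing, not a description of any particular headset, and the function name is hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # RGB, each channel 0.0-1.0
Image = List[List[Pixel]]


def composite_video_see_through(camera: Image, virtual: Image,
                                alpha: List[List[float]]) -> Image:
    """Blend each pixel as alpha * virtual + (1 - alpha) * camera.

    alpha is 0.0 where the camera image should show through unchanged
    and 1.0 where the virtual object should completely cover it.
    """
    out: Image = []
    for cam_row, virt_row, alpha_row in zip(camera, virtual, alpha):
        out_row = []
        for cam_px, virt_px, a in zip(cam_row, virt_row, alpha_row):
            out_row.append(tuple(a * v + (1.0 - a) * c
                                 for v, c in zip(virt_px, cam_px)))
        out.append(out_row)
    return out


# One row of three pixels: background, semi-transparent edge, solid virtual object.
camera_frame = [[(0.2, 0.2, 0.2), (0.2, 0.2, 0.2), (0.8, 0.8, 0.8)]]
rendered = [[(1.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 0.0, 0.0)]]
coverage = [[0.0, 0.5, 1.0]]
mixed = composite_video_see_through(camera_frame, rendered, coverage)
```

Here the first pixel keeps the camera’s grey, the last one shows only the red virtual object, and the middle one is an even mix of both.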

Display type III: Projection based Augmented Reality

With projection based AR we project virtual 3D scenes or objects onto the surface of a building or an object (or a person). To do this, we need to know the exact dimensions of the object we project AR information onto. The projection is then seen on the object or building with remarkable precision. This can generate very sophisticated or wild projections on buildings. The Augmented Matter in Context group, led by Jouke Verlinden at the Faculty of Industrial Design Engineering, TU Delft, uses projection-based AR for manipulating the appearance of products.
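Why the exact dimensions matter can be sketched with a simple geometric model. Assuming the projector behaves like an ideal pinhole camera run in reverse (no lens distortion, placed at the origin and aimed along the z-axis), each known 3D point on the building or object maps to exactly one projector pixel; if the assumed geometry is off, the virtual content lands in the wrong place. The focal length in pixels and the image centre below are hypothetical calibration values, and `project_to_projector` is an illustrative name.

```python
from typing import Tuple


def project_to_projector(point: Tuple[float, float, float],
                         focal_px: float, cx: float, cy: float) -> Tuple[float, float]:
    """Map a 3D point (metres, in projector coordinates) to a projector pixel (u, v).

    Ideal pinhole model: u = f * x / z + cx, v = f * y / z + cy.
    """
    x, y, z = point
    if z <= 0:
        raise ValueError("point must lie in front of the projector (z > 0)")
    return (focal_px * x / z + cx, focal_px * y / z + cy)


# Hypothetical calibration for a 1280x720 projector.
FOCAL_PX, CX, CY = 1000.0, 640.0, 360.0

# Two points on a known panel 2 m in front of the projector.
centre_px = project_to_projector((0.0, 0.0, 2.0), FOCAL_PX, CX, CY)
corner_px = project_to_projector((0.5, 0.25, 2.0), FOCAL_PX, CX, CY)
```

Real projection-mapping setups also have to estimate the projector’s position, rotation and lens distortion, usually through a separate calibration step.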

CONNECTING ART AND TECHNOLOGY

The 2011 IEEE International Symposium on Mixed and Augmented Reality (ISMAR) was held in Basel, Switzerland. In the track Arts, Media, and Humanities, 40 articles were offered discussing the connection of ‘hard’ physics and ‘soft’ art. There are several ways in which art and Augmented Reality technology can be connected: we can, for example, make art with Augmented Reality technology, create Augmented Reality artworks, or use Augmented Reality technology to show and explain existing art (such as a monument like the Greek Parthenon or the paintings in the caves of Lascaux). Most of the contributions to the conference concerned Augmented Reality as a tool to present, explain or augment existing art. However, some visual artists use AR as a medium to create art.

The role of the artist in working with the emerging technology of Augmented Reality has been discussed by Helen Papagiannis in her ISMAR paper The Role of the Artist in Evolving AR as a New Medium (2011). In her paper, Helen Papagiannis reviews how the use of technology as a creative medium has been discussed in recent years. She points out that in 1988 John Pearson wrote about how the computer offers artists “new means for expressing their ideas” (p. 73, cited in Papagiannis, 2011, p. 61). According to Pearson, “Technology has always been, the handmaiden of the visual arts, as is obvious, a technical means is always necessary for the visual communication of ideas, of expression or the development of works of art—tools and materials are required.” (p. 73) However, he points out that new technologies “were not developed by the artistic community for artistic purposes, but by science and industry to serve the pragmatic or utilitarian needs of society.” (p. 73, cited in Papagiannis, 2011, p. 61)

As Helen Papagiannis concludes, it is then up to the artist “to act as a pioneer, pushing forward a new aesthetic that exploits the unique materials of the novel technology” (2011, p. 61). Like Helen, we believe this also holds for the emerging field of AR technologies, and we hope artists will set out to create exciting new Augmented Reality art and thereby contribute to the interplay between art and technology. An interview with Helen Papagiannis can be found on page 12 of this magazine. A portrait of the artist Marina de Haas, who did a residency at the AR Lab, can be found on page 60.

References

■ Milgram, P. and Kishino, F., “A Taxonomy of Mixed Reality Visual Displays,” IEICE Transactions on Information and Systems, vol. E77-D, no. 12, 1994, pp. 1321-1329.

■ Azuma, R. T., “A Survey of Augmented Reality,” Presence: Teleoperators and Virtual Environments, vol. 6, no. 4, August 1997, pp. 355-385.

■ Papagiannis, H., “The Role of the Artist in Evolving AR as a New Medium,” 2011 IEEE International Symposium on Mixed and Augmented Reality (ISMAR) – Arts, Media, and Humanities (ISMAR-AMH), Basel, Switzerland, pp. 61-65.

■ Pearson, J., “The Computer: Liberator or Jailer of the Creative Spirit,” Leonardo, Supplemental Issue, Electronic Art, vol. 1, 1988, pp. 73-80.


BIOGRAPHY - HELEN PAPAGIANNIS

Helen Papagiannis is a designer, artist, and PhD researcher specializing in Augmented Reality (AR) in Toronto, Canada. Helen has been working with AR since 2005, exploring the creative possibilities for AR with a focus on content development and storytelling. She is a Senior Research Associate at the Augmented Reality Lab at York University, in the Department of Film, Faculty of Fine Arts. Helen has presented her interactive artwork and research at global juried conferences and events including TEDx (Technology, Entertainment, Design), ISMAR (International Symposium on Mixed and Augmented Reality) and ISEA (International Symposium on Electronic Art). Prior to her Augmented life, Helen was a member of the internationally renowned Bruce Mau Design studio, where she was project lead on “Massive Change: The Future of Global Design.” Read more about Helen’s work on her blog and follow her on Twitter: @ARstories.

www.augmentedstories.com

INTERVIEW WITH HELEN PAPAGIANNIS

BY HANNA SCHRAFFENBERGER

What is Augmented Reality?

Augmented Reality (AR) is a real-time layering of virtual digital elements including text, images, video and 3D animations on top of our existing reality, made visible through AR-enabled devices such as smartphones or tablets equipped with a camera. I often compare AR to cinema when it was first new, for we are at a similar moment in AR’s evolution: there are currently no conventions or set aesthetics, and this is a time ripe with possibilities for AR’s creative advancement. Like cinema when it first emerged, AR has commenced with a focus on the technology, with little consideration given to content. AR content needs to catch up with AR technology. As a community of designers, artists, researchers and commercial industry, we need to advance content in AR and not stop with the technology, but look at what unique stories and utility AR can present.

So far, AR technologies are still new to many people, and AR works often create a magical experience. Do you think AR will lose its magic once people get used to the technology and have developed an understanding of how AR works? How have you worked with this ‘magical element’ in your work ‘The Amazing Cinemagician’?

I wholeheartedly agree that AR can create a magical experience. In my TEDx 2010 talk, “How Does Wonderment Guide the Creative Process” (http://youtu.be/ScLgtkVTHDc), I discuss how AR enables a sense of wonder, allowing us to see our environments anew. I often feel like a magician when presenting demos of my AR work live; astonishment fills the eyes of the beholder, who asks, “How did you do that?” So what happens when the magic trick is revealed, as you ask, when the illusion loses its novelty and becomes habitual? In Virtual Art: From Illusion to Immersion (2004), new media art historian Oliver Grau discusses how audiences are first overwhelmed by new and unaccustomed visual experiences, but later, once “habituation chips away at the illusion”, the new medium no longer possesses “the power to captivate” (p. 152). Grau writes that at this stage the medium becomes “stale and the audience is hardened to its attempts at illusion”; however, he notes that it is at this stage that “the observers are receptive to content and media competence” (p. 152).

When the initial wonder and novelty of the technology wear off, will it be then that AR is explored as a possible media format for various content and receives a wider public reception as a mass medium? Or is there an element of wonder that needs to exist in the technology for it to be effective and flourish?


Picture: Pippin Lee


I believe AR is currently entering the stage of content development and storytelling. However, I don’t feel AR has lost its “power to captivate” or “become stale”, and I believe that as artists, designers, researchers and storytellers, we continue to maintain wonderment in AR and allow it to guide and inspire story and content. Let’s not forget the enchantment and magic of the medium. I often reference the work of French filmmaker and magician Georges Méliès (1861-1938) as a great inspiration and recently named him the Patron Saint of AR in an article for The Creators Project (http://www.thecreatorsproject.com/blog/celebrating-georges-méliès-patron-saint-of-augmented-reality) on what would have been Méliès’ 150th birthday. Méliès was first a stage magician before being introduced to cinema at a preview of the Lumière brothers’ invention, where he is said to have exclaimed, “That’s for me, what a great trick”. Méliès became famous for the “trick-film”, which employed a stop-motion and substitution technique. Méliès applied the newfound medium of cinema to extend magic into novel, seemingly impossible visualities on the screen.

I consider AR, too, to be very much about creating impossible visualities. We can think of AR as a real-time stop-substitution, which layers content dynamically atop the physical environment and creates virtual actualities with shapeshifting objects, magically appearing and disappearing, as Méliès first did in cinema.

In tribute to Méliès, my Mixed Reality exhibit, The Amazing Cinemagician, integrates Radio Frequency Identification (RFID) technology with the FogScreen, a translucent projection screen consisting of a thin curtain of dry fog. The Amazing Cinemagician speaks to technology as magic, linking the emerging technology of the FogScreen with the pre-cinematic magic lantern and phantasmagoria spectacles of the Victorian era. The installation is based on a card trick, using physical playing cards as an interface to interact with the FogScreen. RFID tags are hidden within each physical playing card. Part of the magic and illusion of this project was to disguise the RFID tag as a normal object, out of the viewer’s sight. Each of these tags corresponds to a short film clip by Méliès, which is projected onto the FogScreen once a selected card is placed atop the RFID tag reader. The RFID card reader is hidden within an antique wooden podium (adding to the aura of the magic performance and historical time period).

The following instructions were provided to the participant: “Pick a card. Place it here. Prepare to be amazed and entertained.” Once the participant placed a selected card atop the designated area on the podium (atop the concealed RFID reader), an image of the corresponding card was revealed on the FogScreen, which was then followed by one of Méliès’ films. The decision was made to provide visual feedback of the participant’s selected card to add to the magic of the experience and to generate a sense of wonder, similar to the witnessing and questioning of a magic trick, with participants asking, “How did you know that was my card? How did you do that?” This curiosity inspired further exploration of each of the cards (and in turn, Méliès’ films) to determine if each of the participant’s cards could be properly identified.
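The interaction described above comes down to a lookup from tag to content, followed by a fixed playback order: first the card, then the film. A minimal sketch, in which the tag IDs, file names and the function `on_tag_read` are all hypothetical (the interview does not describe the software behind the installation):

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class CardContent:
    card_image: str  # picture of the playing card, shown first as feedback
    film: str        # the Méliès film clip that follows


# Hypothetical mapping from the RFID tag hidden in each card to its content.
CARDS: Dict[str, CardContent] = {
    "TAG-0001": CardContent("ace_of_spades.png", "melies_clip_01.mp4"),
    "TAG-0002": CardContent("queen_of_hearts.png", "melies_clip_02.mp4"),
}


def on_tag_read(tag_id: str, cards: Dict[str, CardContent] = CARDS) -> List[str]:
    """Return what the FogScreen should show for a tag read by the podium's reader.

    The card image comes first (the "How did you know that was my card?" moment),
    then the film. An unknown tag produces an empty playlist.
    """
    content = cards.get(tag_id)
    if content is None:
        return []
    return [content.card_image, content.film]
```

For example, `on_tag_read("TAG-0002")` returns `["queen_of_hearts.png", "melies_clip_02.mp4"]`, while an unregistered tag shows nothing.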

You are an artist and researcher. Your scientific work as well as your artistic work explores how AR can be used as a creative medium. What’s the difference between your work as an artist/designer and your work as a researcher?

Excellent question! I believe that artists and designers are researchers. They propose novel paths for innovation, introducing detours into the usual processes. In my most recent TEDx 2011 talk in Dubai, “Augmented Reality and the Power of Imagination” (http://youtu.be/7QrB4cYxjmk), I discuss how as a designer/artist/PhD researcher I am both a practitioner and a researcher, a maker and a believer. As a practitioner, I do, create, design; as a researcher I dream, aspire, hope. I am a make-believer working with a technology that is about make-believe, about imagining possibilities atop actualities. Now, more than ever, we need more creative adventurers and make-believers to help AR continue to evolve and become a wondrous new medium, unlike anything we’ve ever seen before! I spoke to the importance and power of imagination and make-believe, and how they pertain to AR at this critical juncture in the medium’s evolution. When we make-believe and when we imagine, we are in two places simultaneously; make-believe is about projecting or layering our imagination on top of a current situation or circumstance. In many ways, this is what AR is too: layering imagined worlds on top of our existing reality.

Picture: Helen Papagiannis

You’ve had quite a success with your AR pop-up book ‘Who’s Afraid of Bugs?’ In your blog you talk about your inspiration for the story behind the book: it was inspired by AR psychotherapy studies for the treatment of phobias such as arachnophobia. Can you tell us more?

Who’s Afraid of Bugs? was the world’s first Augmented Reality (AR) Pop-up designed for iPad 2 and iPhone 4. The book combines hand-crafted paper-engineering and AR on mobile devices to create a tactile and hands-on storybook that explores the fear of bugs through narrative and play. Because the design integrates image tracking, as opposed to the black and white glyphs commonly seen in AR, the book can be enjoyed alone as a regular pop-up book, or supplemented with augmented digital content when viewed through a mobile device equipped with a camera. The book is a playful exploration of fears using AR in a meaningful and fun way. Rhyming text takes the reader through the storybook, where various ‘creepy crawlies’ (spider, ant, and butterfly) are waiting to be discovered, appearing virtually as 3D models you can interact with. A tarantula attacks when you touch it, an ant hyperlinks to educational content with images and diagrams, and a butterfly appears flapping its wings atop a flower in a meadow. Hands are integrated throughout the book design, whether it’s placing one’s hand down to have the tarantula crawl over you virtually, the hand holding the magnifying lens that sees the ant, or the hands that pop up holding the flower upon which the butterfly appears. It’s a method to involve the reader in the narrative, but it also comments on the unique tactility AR presents, bridging the digital with the physical. Further, the story for the AR Pop-up Book was inspired by AR psychotherapy studies for the treatment of phobias such as arachnophobia. AR provides a safe, controlled environment to conduct exposure therapy within a patient’s physical surroundings, creating a more believable scenario with heightened presence (defined as the sense of really being in an imagined or perceived place or scenario), and provides greater immediacy than Virtual Reality (VR). A video of the book may be watched at http://vimeo.com/25608606.

In your work, technology serves as an inspiration. For example, rather than starting with a story which is then adapted to a certain technology, you start out with AR technology, investigate its strengths and weaknesses, and so the story evolves. However, this does not limit you to using only the strengths of a medium. On the contrary, weaknesses such as accidents and glitches have, for example, influenced your work ‘Hallucinatory AR’. Can you tell us a bit more about this work?

Hallucinatory Augmented Reality (AR), 2007, was an experiment which investigated whether images that were not glyphs/AR trackables could generate AR imagery. The project evolved out of accidents: incidents in earlier experiments in which the AR software mistook non-marker imagery for AR glyphs and attempted to generate AR imagery. This confusion by the software resulted in unexpected and random flickering AR imagery. I decided to explore the creative and artistic possibilities of this effect further and conduct experiments with non-traditional marker-based tracking. The process entailed a study of what types of non-marker images might generate such ‘hallucinations’ and a search for imagery that would evoke or call upon multiple AR imagery/videos from a single image/non-marker.
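One way to picture how such ‘hallucinations’ can arise: many marker-based trackers compare a patch of the camera image against stored marker templates and accept any template whose similarity score passes a threshold. The sketch below assumes that scheme, with normalised cross-correlation as the score. It is not necessarily how the software used for Hallucinatory AR worked, and the patch values, template names and threshold are hypothetical. With a permissive threshold, a single ambiguous patch can be accepted as several markers at once.

```python
import math
from typing import Dict, List, Sequence


def ncc(a: Sequence[float], b: Sequence[float]) -> float:
    """Normalised cross-correlation of two equal-length grey-value patches (-1 to 1)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0.0:
        return 0.0  # a flat patch carries no pattern to match
    return sum(x * y for x, y in zip(da, db)) / denom


def match_markers(patch: Sequence[float], templates: Dict[str, Sequence[float]],
                  threshold: float = 0.7) -> List[str]:
    """Return the name of every template whose score reaches the threshold."""
    return [name for name, tpl in templates.items() if ncc(patch, tpl) >= threshold]


# Two hypothetical 2x2 marker templates, flattened row by row.
TEMPLATES = {"marker_a": [0, 0, 1, 1], "marker_b": [1, 0, 1, 0]}

# A patch of ordinary (non-marker) imagery that partly resembles both templates.
stray_patch = [0, 0, 2, 1]

strict = match_markers(stray_patch, TEMPLATES)        # accepted as marker_a only
lenient = match_markers(stray_patch, TEMPLATES, 0.3)  # accepted as both markers
```

The stray patch scores about 0.90 against `marker_a` and about 0.30 against `marker_b`, so lowering the threshold is enough to make one piece of imagery trigger two markers.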

Upon multiple image searches, one image emerged which proved to be quite extraordinary. A cathedral stained glass window was able to evoke four different AR videos, the only instance, among many other images, in which multiple AR imagery appeared. Upon close examination of the image, focusing in and out with a web camera, a face began to emerge in the black and white pattern. A fantastical image of a man was encountered. Interestingly, it was when the image was blurred into this face using the web camera that the AR hallucinatory imagery worked best, rapidly multiplying and appearing more prominently. Although numerous attempts were made with similar images, no other such instances occurred; this image appeared to be unique.

The challenge now lay in the choice of what types of imagery to curate into this hallucinatory viewing: what imagery would be best suited to this phantasmagoric and dream-like form?

My criteria for the imagery/videos were similarity of form and shape, in an attempt to create a collage-like set of visuals. As the sequence or duration of the imagery in Hallucinatory AR could not be predetermined, the goal was to identify imagery that possessed similarities, through which the possibility for visual synchronicities existed.

Themes of intrusions and chance encounters are at play in Hallucinatory AR, inspired in part by the Surrealist artist Max Ernst. In What is the Mechanism of Collage? (1936), Ernst writes:

One rainy day in 1919, finding myself in a village on the Rhine, I was struck by the obsession which held under my gaze the pages of an illustrated catalogue showing objects designed for anthropologic, microscopic, psychologic, mineralogic, and paleontologic demonstration. There I found brought together elements of figuration so remote that the sheer absurdity of that collection provoked a sudden intensification of the visionary faculties in me and brought forth an illusive succession of contradictory images, double, triple, and multiple images, piling up on each other with the persistence and rapidity which are particular to love memories and visions of half-sleep (p. 427).

Of particular interest to my work in exploring and experimenting with Hallucinatory AR was Ernst’s description of an “illusive succession of contradictory images” that were “brought forth” (as though independent of the artist), rapidly multiplying and “piling up” in a state of “half-sleep”. Similarities can be drawn to the process of the seemingly disparate AR images jarringly coming in and out of view, layered atop one another.

One wonders if these visual accidents are what the future of AR might hold: unwelcome glitches in software systems, as Bruce Sterling described on his blog Beyond the Beyond in 2009; or perhaps we might come to delight in the visual poetry of these augmented hallucinations that are “As beautiful as the chance encounter of a sewing machine and an umbrella on an operating table.” [1]

To a computer scientist, these ‘glitches’, as applied in Hallucinatory AR, could potentially be viewed as a disaster, an example of the technology failing. To the artist, however, there is poetry in these glitches, with new possibilities of expression and new visual forms emerging.

On the topic of glitches and accidents, I’d like to return to Méliès. Méliès became famous for the stop trick, or double exposure special effect, a technique which evolved from an accident: Méliès’ camera jammed while filming the streets of Paris; upon playing back the film, he observed an omnibus transforming into a hearse. Rather than discounting this as a technical failure, or glitch, he utilized it as a technique in his films. Hallucinatory AR also evolved from an accident, which was embraced and applied in an attempt to develop a potentially new visual mode in the medium of AR. Méliès introduced new formal styles, conventions and techniques that were specific to the medium of film; novel styles and new conventions will also emerge from AR artists and creative adventurers who fully embrace the medium.

[1] Comte de Lautréamont’s often-quoted allegory, famous for inspiring both Max Ernst and André Breton, qtd. in: Williams, Robert. Art Theory: An Historical Introduction. Malden, MA: Blackwell Publishing, 2004: 197.


Picture: Pippin Lee


THE TECHNOLOGY BEHIND AUGMENTED REALITY

Augmented Reality (AR) is a field that is primarily concerned with realistically adding computer-generated images to the image one perceives from the real world.

AR comes in several flavors. Best known is the practice of using flat screens or projectors, but nowadays AR can even be experienced on smartphones and tablet PCs. The crux is that 3D digital data from another source is added to the ordinary physical world, which is, for example, seen through a camera. We can create this additional data ourselves, e.g. using 3D drawing programs such as 3D Studio Max, but we can also add CT and MRI data or even live TV images to the real world. Likewise, animated three-dimensional objects (avatars), which can then be displayed in the real world, can be made using a visualization program like Cinema 4D.

Instead of displaying information on conventional monitors, the data can also be added to the vision of the user by means of a head-mounted display (HMD) or head-up display. This is a second, less well-known form of Augmented Reality, already familiar to fighter pilots, among others. We distinguish two types of HMDs: Optical See-Through (OST) headsets and Video See-Through (VST) headsets. OST headsets use semi-transparent mirrors or prisms, through which one keeps seeing the real world. At the same time, virtual objects can be added to this view using small displays that are placed on top of the prisms. VSTs are in essence Virtual Reality goggles, so the displays are placed directly in front of your eyes. In order to see the real world, two cameras are attached to the other side of the little displays. You then see the Augmented Reality by mixing the video signal coming from the cameras with the video signal containing the virtual objects.
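For video see-through, the mixing step can be sketched as per-pixel alpha compositing: wherever the rendered virtual layer is opaque, its color replaces the camera pixel, and where it is transparent the camera image shows through. The sketch below assumes both frames have the same resolution and that the renderer supplies an alpha value (0-255) per pixel; the function name and the tiny 1×2 frames are illustrative only. Real headsets do this in dedicated hardware or on the GPU, per frame and per eye.

```python
"""Per-pixel alpha compositing of a virtual layer over a camera frame (illustrative sketch)."""

RGB = tuple[int, int, int]
RGBA = tuple[int, int, int, int]


def composite(camera: list[list[RGB]], virtual: list[list[RGBA]]) -> list[list[RGB]]:
    """Blend an RGBA virtual layer over an RGB camera frame of the same size."""
    if len(camera) != len(virtual) or any(
        len(c_row) != len(v_row) for c_row, v_row in zip(camera, virtual)
    ):
        raise ValueError("camera and virtual frames must have the same size")

    mixed = []
    for c_row, v_row in zip(camera, virtual):
        out_row = []
        for (cr, cg, cb), (vr, vg, vb, va) in zip(c_row, v_row):
            a = va / 255.0  # 0.0 = fully transparent, 1.0 = fully opaque
            out_row.append(tuple(
                round(a * v + (1.0 - a) * c)
                for v, c in ((vr, cr), (vg, cg), (vb, cb))
            ))
        mixed.append(out_row)
    return mixed


# One camera row of two grey pixels; the virtual layer draws an opaque red
# pixel on the left and leaves the right pixel transparent.
camera_frame = [[(100, 100, 100), (100, 100, 100)]]
virtual_layer = [[(255, 0, 0, 255), (0, 0, 0, 0)]]
print(composite(camera_frame, virtual_layer))  # [[(255, 0, 0), (100, 100, 100)]]
```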


Underlying technology

Screens and glasses

Unlike screen-based AR, HMDs provide depth perception, as each eye receives its own image. When objects are projected on a 2D screen, one can convey an experience of depth by letting the objects move. Recent 3D screens allow you to view stationary objects in depth. 3D televisions that work with glasses quickly alternate the left and right images; in sync with this, the glasses use active shutters which let the image reach the left or the right eye in turn. This happens so fast that it looks as if you view both the left and right images simultaneously. 3D television displays that work without glasses make use of little lenses which are placed directly on the screen. These refract the left and right images so that each eye can only see the corresponding image (see for example www.dimenco.eu/display-technology). This is essentially the same method as used on the well-known 3D postcards on which a beautiful lady winks when the card is slightly turned. 3D film makes use of two projectors that show the left and right images simultaneously; however, each of them is polarized in a different way. The left and right lenses of the glasses have matching polarizations and only let through the light of the corresponding projector. The important point with screens is that you are always bound to the physical location of the display, while headset-based techniques allow you to roam freely. This is called immersive visualization: you are immersed in a virtual world. You can walk around in the 3D world, move around and even enter virtual 3D objects.
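The alternation used by shutter-glasses 3D televisions can be sketched as a simple schedule. The 120 Hz refresh rate below is an assumed example value, not a figure from the text; the point is that the display shows left and right images on alternate frames, the glasses open only the matching shutter, and each eye therefore receives half the display's refresh rate.

```python
"""Time-multiplexed stereo with active shutter glasses (illustrative sketch)."""


def shutter_schedule(refresh_hz: float, n_frames: int) -> list[tuple[float, str, bool, bool]]:
    """For each display frame, return (start time in ms, image shown,
    left shutter open, right shutter open).

    Frames alternate left, right, left, ...; the glasses open only the
    shutter of the eye whose image is currently on screen.
    """
    period_ms = 1000.0 / refresh_hz
    schedule = []
    for i in range(n_frames):
        image = "left" if i % 2 == 0 else "right"
        schedule.append((i * period_ms, image, image == "left", image == "right"))
    return schedule


def per_eye_rate(refresh_hz: float) -> float:
    """Each eye sees only every other frame, so it gets half the refresh rate."""
    return refresh_hz / 2


for start, image, left_open, right_open in shutter_schedule(120, 4):
    print(f"{start:6.2f} ms  image={image:<5}  left_open={left_open}  right_open={right_open}")
print(per_eye_rate(120))  # 60.0
```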

Video-see-through AR will become popular within a very short time and will ultimately become an extension of the smartphone. This is because both display technology and camera technology have made great strides with the advent of smartphones. What might still stand in the way of smartphone models is computing power and energy consumption. Companies such as Microsoft, Google, Sony and Zeiss will soon enter the consumer market with AR technology.

Tracking technology

A current obstacle for major applications, which will soon be resolved, is tracking technology. The problem with AR is embedding the virtual objects in the real world. You can compare this with color printing: the colors (e.g. cyan, magenta, yellow and black) have to be printed properly aligned to each other. What you often see in prints which have not yet been cut are so-called fiducial markers on the edge of the printing plates, which serve as a reference for aligning the colors. These are also necessary in AR. Often, you see that markers are used onto which a 3D virtual object is projected. Moving and rotating the marker lets you move and rotate the virtual object. Such a marker is comparable to the fiducial marker in color printing. With the help of computer vision technology, the camera of the headset can identify the marker and, based on its size, shape and position, deduce the relative position of the camera. If you move your head relative to the marker (with the virtual object), the computer knows how the image on the display must be transformed so that the virtual object remains stationary. Conversely, if your head is stationary and you rotate the marker, it knows how the virtual object should rotate so that it remains on top of the marker.
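The step from a marker's size, shape and position to the camera's relative position can be sketched with a pinhole camera model. The simplified sketch below assumes that the marker faces the camera roughly head-on (no perspective tilt), that its four corners have already been found in the image in a known order, and that the camera's focal length in pixels is known; all names and numbers are illustrative. It estimates distance from the marker's apparent size and in-plane rotation from the direction of its top edge, then maps a point defined on the marker into the image, so that moving or rotating the marker moves or rotates the virtual object with it. A full system would instead recover the complete 3D pose from the four corners, for example with a perspective-n-point solver.

```python
"""Rough marker pose under a pinhole camera model (simplified sketch).

Assumptions: the marker faces the camera nearly head-on, its corners are given
in image pixels in the order top-left, top-right, bottom-right, bottom-left,
and the image y axis points down.
"""
import math

Point = tuple[float, float]


def marker_pose(corners: list[Point], marker_side_m: float, focal_px: float) -> dict:
    """Estimate distance (m), in-plane rotation (deg), centre (px) and scale (px per m)."""
    if len(corners) != 4:
        raise ValueError("expected exactly four corners")
    edges = [math.dist(corners[i], corners[(i + 1) % 4]) for i in range(4)]
    side_px = sum(edges) / 4  # average apparent side length in pixels
    (x0, y0), (x1, y1) = corners[0], corners[1]
    return {
        # Pinhole model: apparent size shrinks in proportion to distance.
        "distance_m": focal_px * marker_side_m / side_px,
        # Direction of the marker's top edge gives its rotation in the image.
        "angle_deg": math.degrees(math.atan2(y1 - y0, x1 - x0)),
        "centre_px": (sum(x for x, _ in corners) / 4, sum(y for _, y in corners) / 4),
        "px_per_m": side_px / marker_side_m,
    }


def anchor_to_marker(point_m: Point, pose: dict) -> Point:
    """Map a point in marker coordinates (metres, origin at the marker centre)
    to image pixels, so a virtual object follows the marker."""
    a = math.radians(pose["angle_deg"])
    sx, sy = point_m[0] * pose["px_per_m"], point_m[1] * pose["px_per_m"]
    cx, cy = pose["centre_px"]
    return (cx + sx * math.cos(a) - sy * math.sin(a),
            cy + sx * math.sin(a) + sy * math.cos(a))


# A 10 cm marker seen as a 100-pixel square centred at (320, 240), f = 800 px.
upright = [(270, 190), (370, 190), (370, 290), (270, 290)]
pose = marker_pose(upright, marker_side_m=0.10, focal_px=800)
print(round(pose["distance_m"], 3), pose["angle_deg"])  # 0.8 0.0
print(tuple(round(v, 1) for v in anchor_to_marker((0.05, 0.0), pose)))  # (370.0, 240.0)

# The same marker turned by 90 degrees: the virtual point turns with it.
turned = [(370, 190), (370, 290), (270, 290), (270, 190)]
print(tuple(round(v, 1) for v in anchor_to_marker((0.05, 0.0), marker_pose(turned, 0.10, 800))))
```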

AR smartphone applications such as Layar use the built-in GPS and compass for tracking. This has an accuracy of meters and measures angles to within 5-10 degrees. Camera-based tracking, however, is accurate to the centimetre and can measure angles of several degrees.

Nowadays, using markers for tracking is already outdated, and we use so-called “natural feature tracking”, also called “keypoint tracking”. Here, the computer searches for conspicuous (salient) keypoints in the left and right camera images. If, for example, you twist your head, this shift is determined on the basis of those keypoints at more than 30 frames per second. This way, a 3D map of these keypoints can be built, and the computer knows the relationship (distance and angle) between the keypoints and the stereo camera. This method is more robust than marker-based tracking because you have many keypoints, widely spread in the scene, and not just the four corners of the marker close together in the scene. If someone walks in front of the camera and blocks some of the keypoints, there will still be enough keypoints left and the tracking is not lost. Moreover, you do not have to stick markers all over the world.
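The accuracy figures quoted for GPS-and-compass tracking can be made concrete with a little arithmetic. The sketch below assumes a simple linear mapping from heading difference to horizontal screen position, with an assumed 60-degree horizontal field of view and a 1080-pixel-wide screen (real phones differ, and a true perspective projection uses the tangent of the angle). It shows how a compass error of 5-10 degrees displaces an overlay by meters at street distances and by a noticeable fraction of the screen, which is one reason why camera-based tracking is preferred when virtual objects must line up precisely.

```python
"""How compass error moves a GPS/compass-placed overlay (illustrative sketch)."""
import math


def lateral_error_m(distance_m: float, angle_error_deg: float) -> float:
    """Sideways misplacement of an object at a given distance for a heading error."""
    return distance_m * math.tan(math.radians(angle_error_deg))


def screen_x(bearing_deg: float, heading_deg: float, fov_deg: float, width_px: int) -> float:
    """Horizontal screen position of a point of interest.

    bearing_deg: direction from the user to the point of interest (0 = north).
    heading_deg: direction the phone camera faces, as reported by the compass.
    Uses a linear angle-to-pixel mapping, a common simplification.
    """
    diff = (bearing_deg - heading_deg + 180.0) % 360.0 - 180.0  # in [-180, 180)
    return width_px / 2 + diff / fov_deg * width_px


for err in (5, 10):
    print(f"{err:>2} deg error -> {lateral_error_m(50, err):.1f} m off at 50 m")
# 5 deg -> 4.4 m, 10 deg -> 8.8 m

true_x = screen_x(bearing_deg=10, heading_deg=0, fov_deg=60, width_px=1080)
off_x = screen_x(bearing_deg=10, heading_deg=5, fov_deg=60, width_px=1080)
print(round(true_x), round(off_x))  # 720 630: a 5-degree error shifts it 90 px
```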

Collaboration with the Royal Academy of Art (KABK) in The Hague in the AR Lab (Royal Academy, TU Delft, Leiden University, various SMEs) in the realization of applications

TU Delft has done research on AR since 1999. Since 2006, the university has worked with the art academy in The Hague. The idea is that AR is a new technology with its own merits. Artists are very good at finding out what is possible with the new technology. Here are some pictures of realized projects.

Fig 1. The current technology that replaces markers with natural feature tracking, or so-called keypoint tracking. Instead of the four corners of the marker, the computer itself determines which points in the left and right images can be used as anchor points for calculating the 3D pose of the camera in 3D space. From top:
1: you can use all points in the left and right images to slowly build a complete 3D map. Such a map can, for example, be used to relive a past experience, because you can walk again in the now virtual space.
2: the 3D keypoint space and the trace of the camera position within it.
3: keypoints (the color indicates their suitability).
4: you can place virtual objects (eyes) on an existing surface.
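Two ingredients of keypoint tracking can be sketched in a few lines. First, for a calibrated and rectified stereo pair (an assumption: the two camera images are aligned so that a keypoint appears on the same image row in both), the depth of a keypoint follows from its horizontal shift (disparity) between the left and right image, Z = f * B / d, with focal length f in pixels and camera baseline B in meters. Second, frame-to-frame camera motion can be estimated robustly as the median displacement of the keypoints that are still visible, so occluded keypoints simply drop out and a few bad matches do not dominate. The numbers and keypoint IDs below are invented, and real trackers estimate a full 3D rotation and translation rather than a 2D image shift.

```python
"""Stereo depth from disparity and a robust keypoint shift (illustrative sketch)."""
import statistics

Point = tuple[float, float]


def triangulate(left: Point, right: Point, focal_px: float, baseline_m: float,
                cx: float, cy: float) -> tuple[float, float, float]:
    """3D position (X, Y, Z in metres, left-camera frame) of a keypoint seen in a
    rectified stereo pair; (cx, cy) is the principal point in pixels."""
    disparity = left[0] - right[0]
    if disparity <= 0:
        raise ValueError("keypoint must have positive disparity")
    z = focal_px * baseline_m / disparity  # Z = f * B / d
    return ((left[0] - cx) * z / focal_px, (left[1] - cy) * z / focal_px, z)


def median_shift(prev: dict[int, Point], curr: dict[int, Point]) -> Point:
    """Median image displacement of keypoints (matched by ID) visible in both frames."""
    common = sorted(prev.keys() & curr.keys())
    if len(common) < 3:
        raise ValueError("too few keypoints left to track")
    dx = statistics.median([curr[k][0] - prev[k][0] for k in common])
    dy = statistics.median([curr[k][1] - prev[k][1] for k in common])
    return (dx, dy)


# A keypoint 70 px apart in the two images (f = 700 px, 12 cm baseline).
print(triangulate((390, 240), (320, 240), 700, 0.12, 320, 240))

# Keypoint 3 is occluded in the new frame and keypoint 5 is a bad match,
# yet the estimated shift is still 10 px to the left.
prev = {1: (100, 100), 2: (200, 150), 3: (300, 120), 4: (50, 60), 5: (250, 40)}
curr = {1: (90, 100), 2: (190, 150), 4: (40, 60), 5: (400, 300)}
print(median_shift(prev, curr))  # (-10.0, 0.0)
```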


Fig 2. Virtual furniture exhibition at the Salone del Mobile in Milan (2008); students of the Royal Academy of Art, The Hague show their furniture by means of AR headsets. This saves transportation costs.

Fig 3. Virtual sculpture exhibition in the Kröller-Müller Museum (2009). From left: 1) visitors on an adventure with laptops on walkers, 2) inside with an optical see-through headset, 3) large pivotable screen on a field of grass, 4) virtual image.


Fig 4. Exhibition in Museum Boijmans Van Beuningen (2008-2009). From left: 1) Sgraffito in 3D; 2) the 3D-printed version may be picked up by the spectator; 3) animated shards: the table covered in ancient pottery can be seen via the headset; 4) scanning antique pottery with the CT scanner delivers a 3D digital image.

Fig 5. TU Delft, partially in collaboration with the Royal Academy (which has the oldest industrial design course in the Netherlands), has designed a number of headsets. This design of headsets is an ongoing activity. From left: 1) first optical see-through headset with Sony headset and self-made inertial tracker (2000), 2) on a construction helmet (2006), 3) SmartCam and tracker taped on a Cybermind Visette headset (2007), 4) headset design with engines by Niels Mulder, a student at the Royal Academy of Art, The Hague (2007), based on Cybermind technology, 5) low-cost prototype based on the Carl Zeiss Cinemizer headset, 6) future AR visor?, 7) future AR lens?


There are many applications that can be realized using AR; they will find their way into practice in the coming decades:

1. Head-up displays have already been used for many years in the Air Force for fighter pilots; this can be extended to other vehicles and civil applications.
2. The billboards during the broadcast of a football game are essentially also AR; more can be done by also involving the game itself and allowing interaction by the user, such as off-side line projection.
3. In the professional sphere, you can, for example, visualize where pipes under the street lie or should lie. Ditto for designing ships, houses, planes, trucks and cars. What is outlined in a CAD drawing could be drawn in the real world, allowing you to see in 3D if and where there is a mismatch.
4. You can easily find the books you are looking for in the library.
5. You can find out where restaurants are in a city.
6. You can pimp theater, musical, opera and pop concert productions with (immersive) AR decor.
7. You can arrange virtual furniture or curtains from the IKEA catalog and see how they look in your home.
8. Maintenance of complex devices will become easier; e.g. you can virtually see where the paper in the copier is jammed.
9. If you enter a restaurant or the hardware store, a virtual avatar can show you where to find that special bolt or table.

Showing the Serra Room in Museum Boijmans Van Beuningen during the exhibition Sgraffito in 3D

Picture: Joachim Rotteveel


RE-INTRODUCING MOSQUITOS

MAARTEN LAMERS

AROUND 2004, MY YOUNGER BROTHER VALENTIJN INTRODUCED ME TO THE FASCINATING WORLD OF AUGMENTED REALITY. HE WAS A MOBILE PHONE SALESMAN AT THE TIME, AND SIEMENS HAD JUST LAUNCHED THEIR FIRST “SMARTPHONE”, THE BULKY SIEMENS SX1. THIS PHONE WAS QUITE MARVELOUS, WE THOUGHT: IT RAN THE SYMBIAN OPERATING SYSTEM, HAD A BUILT-IN CAMERA, AND CAME WITH… THREE GAMES.

One of these games was Mozzies, a.k.a. Virtual Mosquito Hunt, which apparently won some 2003 Best Mobile Game Award, and my brother was eager to show it to me in the store where he worked at that time. I was immediately hooked… Mozzies let you kill virtual mosquitos that flew around superimposed over the live camera feed. By physically moving the phone you could chase after the mosquitos when they attempted to fly off the phone’s display. Those are all the ingredients for Augmented Reality, in my personal opinion: something that interacts with my perception and manipulation of the world around me, at that location, at that time. And Mozzies did exactly that.

Now, almost eight years later, not much has changed. Whenever people around me speak of AR, because they got tired of saying “Augmented Reality”, they still refer to bulky equipment (even bulkier than the Siemens SX1!) that projects stuff over a live camera feed and lets you interact with whatever that “stuff” is. In Mozzies it was pesky little mosquitos; nowadays it is anything from restaurant information to crime scene data. But nothing really changed, right?

Right! Technology became more advanced, so we no longer need to hold the phone in our hand, but get to wear it strapped to our skull in the form of goggles. But the idea is unchanged: you look at fake stuff in the real world and physically move around to deal with it. You still don’t get the tactile sensation of swatting a mosquito or collecting “virtually heavy” information. You still don’t even hear the mosquito flying around you… It’s time to focus on those matters too, in my opinion. Let’s take up the challenge and make AR more than visual, exploring interaction models for other senses. Let’s enjoy the full experience of seeing, hearing, and particularly swatting mosquitos, but without the itchy bites.


LIEVEN VAN VELTHOVEN: THE RACING STAR

“IT AIN’T FUN IF IT AIN’T REAL TIME”

BY HANNA SCHRAFFENBERGER

WHEN I ENTER LIEVEN VAN VELTHOVEN’S ROOM, THE PEOPLE FROM THE EFTELING HAVE JUST LEFT. THEY ARE INTERESTED IN HIS ‘VIRTUAL GROWTH’ INSTALLATION. AND THEY ARE NOT THE ONLY ONES INTERESTED IN LIEVEN’S WORK. IN THE PAST YEAR, HE HAS WON THE JURY AWARD FOR BEST NEW MEDIA PRODUCTION 2011 OF THE INTERNATIONAL CINEKID YOUTH MEDIA FESTIVAL AS WELL AS THE DUTCH GAME AWARD 2011 FOR THE BEST STUDENT GAME. THE WINNING MIXED REALITY GAME ‘ROOM RACERS’ HAS BEEN SHOWN AT THE DISCOVERY FESTIVAL, MEDIAMATIC, THE STRP FESTIVAL AND THE ZKM IN KARLSRUHE. HIS VIRTUAL GROWTH INSTALLATION HAS EMBELLISHED THE STREETS OF AMSTERDAM AT NIGHT. NOW HE IS GOING TO SHOW ROOM RACERS TO ME IN HIS LIVING ROOM, WHERE IT ALL STARTED.

The room is packed with stuff and at first sight it seems rather chaotic, with a lot of random things lying on the floor. There are a few plants, which probably don’t get enough light, because Lieven likes the dark (that’s when his projections look best). It is only when he turns on the beamer that I realize that his room is actually not chaotic at all. The shoe, magnifying glass, video games, tape and stapler which cover the floor are all part of the game.

“You create your own race game tracks by placing real stuff on the floor,”

Lieven tells me. He hands me a controller and soon we are racing the little projected cars around the chocolate spread, marbles, a remote control and a flashlight. Trying not to crash the car into a belt, I tell him what I remember about when I first met him a few years ago in a Media Technology course at Leiden University. Back then, he was programming a virtual bird, which would fly from one room to another, preferring the room in which it was quiet. Loud and sudden sounds would scare the bird away into another room. The course for which he developed it was called Sound Space Interaction, and his installation was based solely on sound. I ask him whether the virtual bird was his first contact with Augmented Reality. Lieven laughs.

“It’s interesting that you call it AR, as it only uses sound!”

Indeed, most of Lieven’s work is based on interactive projections and plays with visual augmentations of our real environment. But like the bird, all of them are interactive and work in real time. Looking back, the bird was not his first AR work.

“My first encounter with AR was during our first Media Technology course, a visit to the Ars Electronica festival in 2007, where I saw Pablo Valbuena’s Augmented Sculpture. It was amazing. I was asking myself: can I do something like this, but interactive instead?”

Armed with a bachelor’s degree in technical computer science from TU Delft and the newfound possibility to bring in his own curiosity and ideas at the Media Technology Master’s program at Leiden University, he set out to build his own interactive, projection-based works.


Room Racers

Up to four players race their virtual cars around real objects which are lying on the floor. Players can drop in or out of the game at any time. Anything you can find can be placed on the floor to change the route.

Room Racers makes use of projection-based mixed reality. The structure of the floor is analysed in real time using a modified camera and self-written software. Virtual cars are projected onto the real environment and interact with the detected objects that are lying on the floor.
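Lieven’s own software is written in C# and its details are not described here, so the following is only a hypothetical Python sketch of the general idea, not Room Racers itself: turn a camera view of the floor into a coarse occupancy grid, then let a projected car bounce off occupied cells. It assumes a grey-scale camera image already aligned with the projection, in which objects on the floor appear darker than the empty floor; the threshold and the tiny one-row "floor" are invented.

```python
"""Hypothetical sketch: a projected car bouncing off objects detected on the floor."""

Grid = list[list[bool]]


def occupancy_grid(gray: list[list[int]], threshold: int) -> Grid:
    """Mark a cell as occupied when it is darker than the threshold
    (assumes objects look darker than the empty floor)."""
    return [[value < threshold for value in row] for row in gray]


def blocked(grid: Grid, x: int, y: int) -> bool:
    """A cell blocks the car if it lies outside the floor area or is occupied."""
    return not (0 <= y < len(grid) and 0 <= x < len(grid[0])) or grid[y][x]


def step(grid: Grid, pos: tuple[int, int],
         vel: tuple[int, int]) -> tuple[tuple[int, int], tuple[int, int]]:
    """Advance the car one cell, reversing each velocity component that would hit something."""
    (x, y), (vx, vy) = pos, vel
    if blocked(grid, x + vx, y):
        vx = -vx
    if blocked(grid, x, y + vy):
        vy = -vy
    if blocked(grid, x + vx, y + vy):
        return (x, y), (vx, vy)  # still blocked (e.g. a corner): wait this frame
    return (x + vx, y + vy), (vx, vy)


# One row of floor; the dark value at x = 3 stands for an object such as a shoe.
floor = occupancy_grid([[200, 200, 200, 40, 200]], threshold=100)
pos, vel = (1, 0), (1, 0)
for _ in range(4):
    pos, vel = step(floor, pos, vel)
    print(pos, vel)
# (2, 0) (1, 0) / (1, 0) (-1, 0) / (0, 0) (-1, 0) / (1, 0) (1, 0)
```

Re-running `occupancy_grid` on every camera frame is what would let players reshape the track simply by moving objects on the floor.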

The game has won the Jury Award for Best New Media Production 2011 of the international Cinekid Youth Media Festival, and the Dutch Game Award 2011 for Best Student Game. Room Racers has been shown at several international media festivals. You can play Room Racers at the ‘Car Culture’ exposition at the Lentos Kunstmuseum in Linz, Austria until 4 July 2012.

Picture: Lieven van Velthoven, Room Racers at ZKM | Center for Art and Media in Karlsruhe, Germany, on June 19th, 2011


“The first time I experimented myself with the combination of the real and the virtual was in a piece called Shadow Creatures, which I made with Lisa Dalhuijsen during our first semester in 2007.”

More interactive projections followed in the next semester, and in 2008 the idea for Room Racers was born. A first prototype was built in a week: a projected car bumping into real-world things. After that followed months and months of optimizations. Everything is done by Lieven himself, mostly at night in front of the computer.

“My projects are never really finished; they are always work in progress. But if something works fine in my room, it’s time to take it out into the world.”

After having friends over and playing with the cars until six o’clock in the morning, Lieven knows it’s time to steer the cars out of his room and show them to the outside world.

“I wanted to present Room Racers, but I didn’t know anyone, and no one knew me. There was no network I was part of.”

Undeterred by this, Lieven took the initiative and asked the Discovery Festival if they were interested in his work. Luckily, they were, and they showed two of his interactive games at the Discovery Festival 2010. After the festival, requests started coming in and the cars kept rolling. When I ask him about this continuing success, he is divided:

“It’s fun, but it takes a lot of time. I have not been able to program as much as I used to.”

His success does surprise him, and he especially did not expect the attention it gets in an art context.

“I knew it was fun. That became clear when I had friends over and we played with it all night. But I did not expect the awards. And I did not expect it to be relevant in the art scene. I do not think it’s art, it’s just a game. I don’t consider myself an artist. I am a developer and I like to do interactive projections. Room Racers is my least arty project; nevertheless, it got a lot of response in the art context.”

A piece which he actually considers more of an artwork is Virtual Growth: a mobile installation which projects autonomously growing structures onto any environment you place it in, be it buildings, people or nature.

“For me AR has to take place in the real world. I don’t like screens. I want to get away from them. I have always been interested in other ways of interacting with computers, without mice, without screens. There is a lot of screen-based AR, but for me AR is really about projecting into the real world. Put it in the real world, identify real-world objects, do it in real time: that’s my philosophy. It ain’t fun if it ain’t real time. One day, I want to go through a city with a van and do projections on buildings, trees, people and whatever else I pass.”

For now, he is bound to a bike, but that does not stop him. Virtual Growth works fast and stably, even on a bike. That has been witnessed in Amsterdam, where the audiovisual bicycle project ‘Volle Band’ put beamers on bikes and invited Lieven to augment the city with his mobile installation. People who experienced Virtual Growth on his journeys around Amsterdam, at festivals and parties, are enthusiastic about his (‘smashing!’) entertainment-art. As the virtual structure grows, the audience members start to interact not only with the piece but also with each other.

“They put themselves in front of the projector, have it project onto themselves and pass the projection on to other people by touching them. I don’t explain anything. I believe in simple ideas, not complicated concepts. The piece has to speak for itself. If people try it, immediately get it, enjoy it and tell other people about it, it works!”

Virtual Growth works; that becomes clear from the many happy, smiling faces the projection grows upon. And that is also what counts for Lieven.

“At first it was hard; I didn’t get paid for doing these projects. But when people see them and are enthusiastic, that makes me happy. If I see people enjoying my work and playing with it, that’s what really counts.”

I wonder where he gets the energy to work that much alongside being a student. He tells me that what drives him is that he enjoys it. He likes to spend his evenings with the programming language C#. But the fact that he enjoys working on his ideas not only keeps him motivated, but has also caused him to postpone a few courses at university. While talking, he smokes his cigarette and takes the ashtray from the floor. With the road no longer blocked by it, the cars now take a different route. Lieven might take a different route soon as well. I ask him if he will still be working from his living room, realizing his own ideas, once he has graduated.

“It’s actually funny. It all started as a way to fill my portfolio in order to get a cool job. I wanted to have some things to show besides a diploma. That’s why I started realizing my ideas. It got out of control and soon I was realizing one idea after the other. And maybe I’ll just continue doing it. But there are also quite a few companies and jobs I’d enjoy working for. First I have to graduate anyway.”

From what I have learned about Lieven and his work, I am sure his graduation project will be placed in the real world and work in real time. More than that, it will be fun. It ain’t Lieven if it ain’t fun.

Name: Lieven van Velthoven
Born: 1984
Study: Media Technology MSc, Leiden University
Background: Computer Science, TU Delft
Selected AR works: Room Racers, Virtual Growth
Watch: http://www.youtube.com/user/lievenvv


HOW DID WE DO IT: ADDING VIRTUAL SCULPTURES AT THE KRÖLLER-MÜLLER MUSEUM

By Wim van Eck

ALWAYS WANTED TO CREATE YOUR OWN AUGMENTED REALITY PROJECTS BUT NEVER KNEW HOW? DON’T WORRY, AR[T] IS GOING TO HELP YOU! HOWEVER, THERE ARE MANY HURDLES TO TAKE WHEN REALIZING AN AUGMENTED REALITY PROJECT. IDEALLY YOU SHOULD BE A SKILLFUL 3D ANIMATOR TO CREATE YOUR OWN VIRTUAL OBJECTS, AND A GREAT PROGRAMMER TO MAKE THE PROJECT TECHNICALLY WORK. PROVIDED YOU DON’T JUST WANT TO MAKE A FANCY TECH DEMO, YOU ALSO NEED TO COME UP WITH A GREAT CONCEPT!

My name is Wim van Eck and I work at the <strong>AR</strong><br />

<strong>Lab</strong>, based at the Royal Academy of Art. One of<br />

my tasks is to help art students realize their Augmented<br />

Reality projects. These students have<br />

great concepts, but often lack experience in 3d<br />

animation and programming. Logically, I should<br />

tell them to take animation and programming<br />

courses, but since the deadlines for their<br />

projects are usually counted in weeks instead of months<br />

or years, there is seldom time for that. In the<br />

coming issues of <strong>AR</strong>[t] I will explain how the <strong>AR</strong><br />

<strong>Lab</strong> helps students realize their projects and<br />

how we try to overcome technical hurdles,<br />

using actual projects we worked on as examples.<br />

Since this is the first issue of our magazine,<br />

I will give a short overview of recommended<br />

programs for Augmented Reality development.<br />

We will start with 3d animation programs, which<br />

we need to create our 3d models. There are<br />

many 3d animation packages. The better-known<br />

ones include 3ds Max, Maya, Cinema 4D,<br />

Softimage, LightWave, Modo and the open source<br />

Blender (www.blender.org). These are all great<br />

programs, but at the <strong>AR</strong> <strong>Lab</strong> we mostly use<br />

Cinema 4D (image 1) because it is very user-friendly<br />

and therefore easier to learn. It is a shame<br />

that the free Blender still has a steep learning<br />

curve, since it is otherwise an excellent program.<br />

You can download a demo of Cinema 4D at<br />

http://www.maxon.net/downloads/demo-version.html.<br />

These are some good tutorial sites to<br />

get you started:<br />

http://www.cineversity.com<br />

http://www.c4dcafe.com<br />

http://greyscalegorilla.com<br />

image 1<br />

image 2<br />

image 3 | Picture by Klaas A. Mulder<br />

image 4<br />

If you don’t want to create your own 3d<br />

models, you can also download them from various<br />

websites. TurboSquid (http://www.turbosquid.com),<br />

for example, offers good quality, but<br />

often at a high price, while free sites such as<br />

Artist-3d (http://artist-3d.com) vary more in<br />

quality. A 3d model that is not constructed<br />

properly can cause problems when you import<br />

or display it. In coming issues of <strong>AR</strong>[t] we<br />

will talk more about optimizing 3d models for<br />

Augmented Reality. To actually add these<br />

3d models to the real world, you need Augmented<br />

Reality software. Again, there are many<br />

options, with new software being released continuously.<br />

Probably the easiest software to use is<br />

BuildAR (http://www.buildar.co.nz), which is<br />

available for Windows and OS X. It is easy to<br />

import 3d models, video and sound, and there is<br />

a demo available. There are excellent tutorials<br />

on their site to get you started. If you want<br />

to develop for iOS or Android, the free Junaio<br />

(http://www.junaio.com) is a good option. Its<br />

online GLUE application is easy to use, though<br />

its preferred .md2 format for 3d models is<br />

not the most common. In my opinion, the most<br />

powerful Augmented Reality software right now<br />

is Vuforia (https://developer.qualcomm.com/develop/mobile-technologies/Augmented-reality)<br />

in combination with the excellent game engine<br />

Unity (www.unity3d.com). This combination<br />

offers high-quality visuals with easy-to-script<br />

interaction on iOS and Android devices.<br />

Sweet summer nights at the Kröller-Müller Museum.<br />

As mentioned in the introduction, we<br />

will use these ‘How did we do it’ articles to show<br />

the workflow of <strong>AR</strong> <strong>Lab</strong> projects. In 2009 the <strong>AR</strong><br />

<strong>Lab</strong> was invited by the Kröller-Müller Museum to<br />

present during the ‘Sweet Summer Nights’, an<br />

evening full of cultural activities in the museum’s<br />

famous sculpture garden. We were asked<br />

to develop an Augmented Reality installation<br />

aimed at the whole family, and we found a diverse<br />

group of students to work on the project. Then<br />

the most important part of the project started:<br />

brainstorming!<br />

Our location in the sculpture garden was<br />

between two sculptures: ‘Man and woman’, a<br />

stone sculpture of a couple by Eugène Dodeigne<br />

(image 2), and ‘Igloo di pietra’, a dome-shaped<br />

sculpture by Mario Merz (image 3). We decided<br />

to read more about these works, and learned<br />

that Dodeigne had originally intended to create<br />

two couples instead of one, placed together in a<br />

wild, natural environment. We decided to virtually<br />

add the second couple and a wilder<br />

environment, just as Dodeigne had initially<br />

intended. To make these additions visible, we<br />

placed a screen that can rotate 360 degrees<br />

between the two sculptures (image 4).<br />


A webcam was placed on top of the screen,<br />

and a laptop running ARToolKit (http://www.hitl.washington.edu/artoolkit)<br />

was mounted on the back of the screen. A large marker was<br />

placed near the sculpture as a reference point<br />

for ARToolKit.<br />
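The marker gives the tracking software a fixed reference frame: once the marker's pose relative to the webcam has been estimated, anything positioned relative to the marker, such as the virtual second couple, can be drawn in the right place in the camera image. The Python sketch below illustrates that idea with plain math, assuming a pinhole camera looking along +z. It is not ARToolKit's API: the pose matrix is built by hand (a real tracker estimates it from the marker's corners every frame), and the camera intrinsics (focal length 800 px, 640x480 image) and all function names are hypothetical.

```python
import math

# A 4x4 homogeneous transform, stored row by row.
Matrix4 = list[list[float]]
Vec3 = tuple[float, float, float]


def marker_pose(yaw_deg: float, tx: float, ty: float, tz: float) -> Matrix4:
    """Hypothetical marker pose: a rotation about the camera's vertical (y)
    axis plus a translation, mapping marker coordinates to camera
    coordinates. A tracker estimates such a matrix; here we make one up."""
    a = math.radians(yaw_deg)
    c, s = math.cos(a), math.sin(a)
    return [
        [c, 0.0, s, tx],
        [0.0, 1.0, 0.0, ty],
        [-s, 0.0, c, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def to_camera(pose: Matrix4, p: Vec3) -> Vec3:
    """Transform a point from marker space to camera space (w = 1)."""
    x, y, z = p
    return tuple(
        pose[r][0] * x + pose[r][1] * y + pose[r][2] * z + pose[r][3]
        for r in range(3)
    )


def project(p: Vec3, f: float = 800.0, cx: float = 320.0,
            cy: float = 240.0) -> tuple[float, float]:
    """Pinhole projection of a camera-space point to pixel coordinates.
    The intrinsics are illustrative values, not a real calibration."""
    x, y, z = p
    if z <= 0:
        raise ValueError("point is behind the camera")
    return (f * x / z + cx, f * y / z + cy)


if __name__ == "__main__":
    # Marker 5 m in front of the webcam, seen head-on.
    pose = marker_pose(0.0, 0.0, 0.0, 5.0)
    # A virtual object anchored 2 m to the right of the marker.
    anchor = (2.0, 0.0, 0.0)
    print(project(to_camera(pose, anchor)))  # prints (640.0, 240.0)
```

When the screen rotates, the marker appears differently in the webcam image and the tracker produces a new pose matrix, but the anchor stays fixed relative to the marker; that is what keeps the virtual addition next to the real sculpture.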

Now it was time to create the 3d models of the<br />

extra couple and the environment. The students<br />

working on this part of the project didn’t have<br />

much experience with 3d animation, and there<br />

wasn’t much time to teach them, so manually<br />

modeling the sculptures would be a difficult task.<br />

Options such as 3d scanning the sculpture were<br />

soon proposed, but it still takes considerable skill<br />

to prepare a 3d scan for Augmented<br />

Reality use. We will talk more about that in<br />

a coming issue of this magazine.<br />

image 5<br />

But when we look carefully at our setup (image<br />

5), we can draw some interesting conclusions.<br />

Our screen is fixed in place, so we will always see our<br />

added 3d model from the same angle. Since<br />

we will never be able to see the back of the 3d<br />

model, there is no need to actually model that<br />

part. This is common practice when making 3d<br />

models; you can compare it with set construction<br />

for Hollywood movies, where they also only<br />

build what the camera will see. This<br />

already saves us a good deal of work.<br />

We can also see that the screen is positioned quite far away from<br />

the sculpture, and an object viewed<br />

from a distance visually loses its depth.<br />

When you are one meter away from an object<br />

and take one step aside, you will see the side of<br />

the object, but if the same object is a hundred<br />

meters away, you will hardly see a change in perspective<br />

when changing your position (see image<br />

6). From that distance, people will hardly notice the<br />

difference between an actual 3d model and a<br />

plain 2d image. This means we could actually use<br />

photographs or drawings instead of a complex 3d<br />

model, making the whole process easier still.<br />

We decided to follow this route.<br />
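The distance argument can be made concrete with a little trigonometry. If a viewer steps sideways by d while looking at a point a distance D straight ahead, the direction to that point turns by θ = arctan(d / D). What separates a real 3d object from a flat picture is how much its front and back shift relative to each other, which for an object of depth t is arctan(d / D) − arctan(d / (D + t)). The Python sketch below evaluates both; the step length (0.75 m), the sculpture depth (0.5 m) and the function names are assumptions for illustration, not measurements from the museum setup.

```python
import math


def view_angle_change(step_m: float, distance_m: float) -> float:
    """Angle in degrees by which the direction to a point turns when the
    viewer steps sideways by step_m, the point being distance_m ahead."""
    return math.degrees(math.atan2(step_m, distance_m))


def relative_parallax(step_m: float, distance_m: float, depth_m: float) -> float:
    """Difference between the angular shift of an object's front and that
    of its back, depth_m further away. A flat 2d image has none, so this
    is the cue that could give away a photograph used instead of a model."""
    front = view_angle_change(step_m, distance_m)
    back = view_angle_change(step_m, distance_m + depth_m)
    return front - back


if __name__ == "__main__":
    STEP = 0.75   # assumed length of one sideways step, in metres
    DEPTH = 0.5   # assumed front-to-back depth of the sculpture, in metres
    for distance in (1.0, 10.0, 100.0):
        shift = view_angle_change(STEP, distance)
        cue = relative_parallax(STEP, distance, DEPTH)
        print(f"{distance:5.0f} m: view turns {shift:5.2f} deg, "
              f"front/back difference {cue:.3f} deg")
```

Under these assumptions the view turns by roughly 37 degrees at one meter but by less than half a degree at a hundred meters, and the front/back difference that would betray a flat picture drops from about 10 degrees to about 0.002 degrees.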

image 6<br />

image 7<br />

image 8<br />

image 9<br />

image 10<br />

image 11<br />


Original photograph by Klaas A. Mulder<br />

image 12<br />

To be able to place the photograph of the<br />

sculpture in our 3d scene we have to assign<br />