Agile Performance Testing - Testing Experience

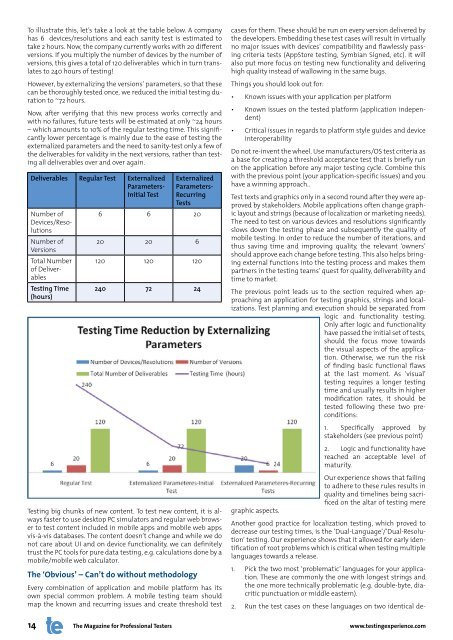

To illustrate this, let’s take a look at the table below. A company has 6 devices/resolutions, and each sanity test is estimated to take 2 hours. The company currently works with 20 different versions. Multiplying the number of devices by the number of versions gives a total of 120 deliverables, which at 2 hours each translates to 240 hours of testing!

However, by externalizing the versions’ parameters, so that these can be thoroughly tested once, we reduced the initial testing duration to ~72 hours.

Once this new process has been verified to work correctly and without failures, future tests are estimated at only ~24 hours, which amounts to 10% of the regular testing time. This significantly lower percentage is mainly due to the ease of testing the externalized parameters and the need to sanity-test only a few of the deliverables for validity in the next versions, rather than testing all deliverables over and over again.
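One way to picture externalized parameters is as per-version data records consumed by a single shared code path. The sketch below is a hypothetical illustration only: the article does not say what a version's parameters contain, so the fields (`brand_name`, `server_url`, `default_language`), the validation rules and the `validate_parameters` helper are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class VersionParameters:
    """Hypothetical per-version settings kept outside the application code."""
    brand_name: str
    server_url: str
    default_language: str


def validate_parameters(params: VersionParameters) -> List[str]:
    """Return the problems found in one version's parameters (empty if none)."""
    problems = []
    if not params.brand_name.strip():
        problems.append("brand_name is empty")
    if not params.server_url.startswith("https://"):
        problems.append("server_url must use https")
    if len(params.default_language) != 2:
        problems.append("default_language must be a two-letter code")
    return problems


# Assumed example data: two versions that share the same application logic.
versions: Dict[str, VersionParameters] = {
    "version_a": VersionParameters("Brand A", "https://a.example.com", "en"),
    "version_b": VersionParameters("Brand B", "http://b.example.com", "fr"),
}

# Checking the data is cheap compared with a full sanity test per deliverable.
report = {name: validate_parameters(p) for name, p in versions.items()}
print(report)
```

With the parameters held as data, the shared logic needs thorough testing only once, and each further version mainly needs its parameter record checked plus a sanity test on a few deliverables.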

| Deliverables | Regular Test | Externalized Parameters: Initial Test | Externalized Parameters: Recurring Tests |
|---|---|---|---|
| Number of Devices/Resolutions | 6 | 6 | 20 |
| Number of Versions | 20 | 20 | 6 |
| Total Number of Deliverables | 120 | 120 | 120 |
| Testing Time (hours) | 240 | 72 | 24 |
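The arithmetic behind these figures can be checked with a few lines of Python. This is only a sketch: the device, version and hour values come from the article, the ~72 and ~24 hour figures are the article's own estimates rather than derived values, and the function and variable names are illustrative.

```python
# Figures quoted in the article; names are illustrative.
DEVICES = 6                 # devices/resolutions
VERSIONS = 20               # versions currently maintained
HOURS_PER_SANITY_TEST = 2   # estimated hours per deliverable


def regular_test_hours(devices: int, versions: int, hours_per_test: int):
    """Return (deliverables, hours) when every deliverable is sanity-tested."""
    deliverables = devices * versions
    return deliverables, deliverables * hours_per_test


def share_of_regular(hours: float, regular_hours: float) -> float:
    """Return the given effort as a fraction of the regular testing time."""
    return hours / regular_hours


deliverables, regular_hours = regular_test_hours(
    DEVICES, VERSIONS, HOURS_PER_SANITY_TEST
)

# The article's estimates for the externalized-parameters approach.
INITIAL_EXTERNALIZED_HOURS = 72
RECURRING_EXTERNALIZED_HOURS = 24

print(deliverables, regular_hours)
print(f"{share_of_regular(INITIAL_EXTERNALIZED_HOURS, regular_hours):.0%}")
print(f"{share_of_regular(RECURRING_EXTERNALIZED_HOURS, regular_hours):.0%}")
```

The recurring effort works out to 24/240 of the regular effort, which matches the 10% quoted in the text.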

Testing big chunks of new content. To test new content included in mobile apps and mobile web apps against databases, it is always faster to use desktop PC simulators and a regular web browser. The content doesn’t change, and as long as we do not care about UI and on-device functionality, we can definitely trust the PC tools for pure data testing, e.g. calculations done by a mobile/mobile web calculator.

The ‘Obvious’ – Can’t do without methodology<br />

Every combination of application and mobile platform has its own set of common problems. A mobile testing team should map the known and recurring issues and create threshold test cases for them. These should be run on every version delivered by the developers. Embedding these test cases results in virtually no major device-compatibility issues and in passing criteria tests (AppStore testing, Symbian Signed, etc.) flawlessly. It also frees up more focus for testing new functionality and delivering high quality instead of wallowing in the same bugs.

Things you should look out for:

• Known issues with your application per platform

• Known issues on the tested platform (application independent)

• Critical issues with regard to platform style guides and device interoperability

Do not re-invent the wheel. Use manufacturers’/OS test criteria as a base for creating a threshold acceptance test that is briefly run on the application before any major testing cycle. Combine this with the previous point (your application-specific issues) and you have a winning approach.
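A threshold acceptance test of this kind can be pictured as a small registry of checks that runs against every delivered build. The following is a minimal sketch under stated assumptions: the check names, the `platform_x` key and the dictionary-based build description are hypothetical and do not reflect any real manufacturer's or store's criteria.

```python
from typing import Callable, Dict, List

Build = Dict[str, object]
Check = Callable[[Build], bool]


# Hypothetical checks; real ones would come from the manufacturer/OS
# criteria and from the team's own list of known, recurring issues.
def launches_without_crash(build: Build) -> bool:
    return build.get("launch_ok") is True


def resumes_after_incoming_call(build: Build) -> bool:
    return build.get("resume_after_call_ok") is True


# Checks that apply everywhere, plus checks for one assumed platform.
THRESHOLD_CHECKS: Dict[str, List[Check]] = {
    "all_platforms": [launches_without_crash],
    "platform_x": [resumes_after_incoming_call],
}


def run_threshold_tests(build: Build, platform: str) -> List[str]:
    """Return the names of the threshold checks that the build fails."""
    checks = THRESHOLD_CHECKS["all_platforms"] + THRESHOLD_CHECKS.get(platform, [])
    return [check.__name__ for check in checks if not check(build)]


# Assumed build description, e.g. filled in by a short manual or automated run.
build = {"launch_ok": True, "resume_after_call_ok": False}
failed = run_threshold_tests(build, "platform_x")
print(failed)
```

An empty result would mean the build can enter the major testing cycle; any failed check sends it back to the developers first.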

Test texts and graphics only in a second round, after they have been approved by stakeholders. Mobile applications often change graphic layout and strings (because of localization or marketing needs). The need to test on various devices and resolutions significantly slows down the testing phase and subsequently lowers the quality of mobile testing. To reduce the number of iterations, and thus save time and improve quality, the relevant ‘owners’ should approve each change before testing. This also helps bring external functions into the testing process and makes them partners in the testing team’s quest for quality, deliverability and time to market.

The previous point leads us to how an application should be approached when testing graphics, strings and localizations. Planning and execution of these tests should be separated from logic and functionality testing.

Only after logic and functionality have passed the initial set of tests should the focus move towards the visual aspects of the application. Otherwise, we run the risk of finding basic functional flaws at the last moment. As ‘visual’ testing requires a longer testing time and usually results in higher modification rates, it should be performed only once these two preconditions are met:

1. The visual changes have been specifically approved by stakeholders (see previous point).

2. Logic and functionality have reached an acceptable level of maturity.

Our experience shows that failing to adhere to these rules results in quality and timelines being sacrificed on the altar of testing mere graphic aspects.

Another good practice for localization testing, which has proved to decrease our testing times, is ‘Dual-Language’/‘Dual-Resolution’ testing. Our experience shows that it allows early identification of root problems, which is critical when testing multiple languages towards a release.

1. Pick the two most ‘problematic’ languages for your application. These are commonly the one with the longest strings and the one that is most technically problematic (e.g. double-byte, diacritic punctuation or Middle Eastern).

2. Run the test cases on these languages on two identical de-<br />

14 The Magazine for Professional Testers www.testingexperience.com