Digital Photogrammetry - TEC-Digital

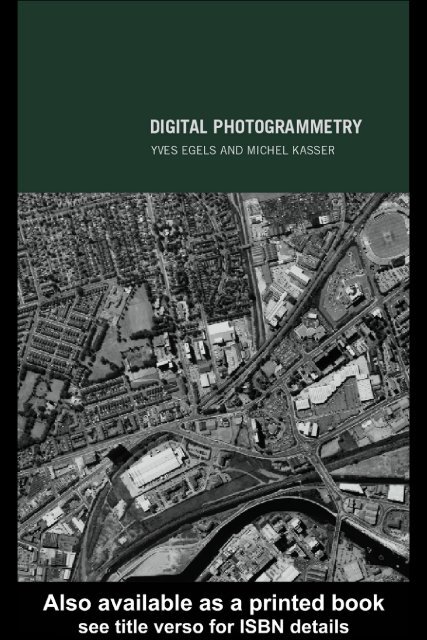

Digital Photogrammetry<br />

Photogrammetry, the use of photography for surveying, primarily facilitates the<br />

production of maps and geographic databases from aerial photographs. Along with<br />

remote sensing, it represents the principal means of generating data for geographic<br />

information systems.<br />

Photogrammetry has undergone a remarkable evolution in recent years with its<br />

transformation into ‘digital photogrammetry’. First, the distinctions between<br />

photogrammetry, remote sensing, geodesy and GIS are fast disappearing, as data<br />

can now be carried digitally from the plane to the GIS end-user. And second, the<br />

benefits of digital photogrammetric workstations have increased dramatically. The<br />

comprehensive use of digital tools, and the automation of the processes, have significantly<br />

cut costs and reduced processing time. The first digital aerial cameras have<br />

become available, and the introduction of more and more new digital tools allows<br />

the work of operators to be simplified, without the same need for stereoscopic<br />

skills. Engineers and technicians in other fields are now able to carry out photogrammetric<br />

work without reliance on specialist photogrammetrists.<br />

This book shows non-experts what digital photogrammetry is and what the software<br />

does, and provides them with sufficient expertise to use it. It also gives<br />

specialists an overview of these totally digital processes from A to Z. It serves as<br />

a textbook for graduate students, young engineers and university lecturers to<br />

complement a modern lecture course on photogrammetry.<br />

Michel Kasser and Yves Egels have synthesized the contributions of 21 top-ranking<br />

specialists in digital photogrammetry, lecturers, researchers, and production<br />

engineers, and produced a very up-to-date text and guide.<br />

Michel Kasser, who graduated from the École Polytechnique de Paris and from<br />

the École Nationale des Sciences Géographiques (ENSG), was formerly Head of<br />

ESGT, the main technical university for surveyors in France. A specialist in instrumentation<br />

and space imagery, he is now University Professor and Head of the<br />

Geodetic Department at IGN-France.<br />

Yves Egels, who graduated from the École Polytechnique de Paris and from ENSG,<br />

is senior photogrammetrist at IGN-France, where he pioneered analytical plotter<br />

software in the 1980s and digital workstations on PCs in the 1990s. He is now<br />

Head of the Photogrammetric Department at ENSG and lectures in photogrammetry<br />

at various French technical universities.

Digital Photogrammetry<br />

Michel Kasser and Yves Egels<br />

London and New York<br />

First published 2002<br />

by Taylor & Francis<br />

11 New Fetter Lane, London EC4P 4EE<br />

Simultaneously published in the USA and Canada<br />

by Taylor & Francis Inc,<br />

29 West 35th Street, New York, NY 10001<br />

Taylor & Francis is an imprint of the Taylor & Francis Group<br />

This edition published in the Taylor & Francis e-Library, 2004.<br />

© 2002 Michel Kasser and Yves Egels<br />

All rights reserved. No part of this book may be reprinted or<br />

reproduced or utilised in any form or by any electronic, mechanical,<br />

or other means, now known or hereafter invented, including<br />

photocopying and recording, or in any information storage or<br />

retrieval system, without permission in writing from the publishers.<br />

Every effort has been made to ensure that the advice and<br />

information in this book is true and accurate at the time of going to<br />

press. However, neither the publisher nor the authors can accept<br />

any legal responsibility or liability for any errors or omissions that<br />

may be made. In the case of drug administration, any medical<br />

procedure or the use of technical equipment mentioned within this<br />

book, you are strongly advised to consult the manufacturer’s<br />

guidelines.<br />

British Library Cataloguing in Publication Data<br />

A catalogue record for this book is available from the<br />

British Library<br />

Library of Congress Cataloging in Publication Data<br />

Kasser, Michel<br />

Digital photogrammetry/Michel Kasser and Yves Egels.<br />

p. cm<br />

Includes bibliographical references and index.<br />

1. Aerial photogrammetry. 2. Image processing – Digital techniques.<br />

I. Kasser, Michel. II. Title.<br />

TA 593.E34 2001<br />

526.9′82 – dc21 2001027205<br />

ISBN 0-203-30595-7 Master e-book ISBN<br />

ISBN 0-203-34383-2 (Adobe eReader Format)<br />

ISBN 0-748-40945-9 (pbk)<br />

ISBN 0-748-40944-0 (hbk)

Contents<br />

List of colour plates<br />

List of contributors<br />

Introduction<br />

1 Image acquisition. Physical aspects. Instruments<br />

Introduction<br />

1.1 Mathematical model of the geometry of the aerial image<br />

YVES EGELS<br />

1.2 Radiometric effects of the atmosphere and the optics<br />

MICHEL KASSER<br />

1.3 Colorimetry<br />

YANNICK BERGIA, MICHEL KASSER<br />

1.4 Geometry of aerial and spatial pictures<br />

MICHEL KASSER<br />

1.5 Digital image acquisition with airborne CCD cameras<br />

MICHEL KASSER<br />

1.6 Radar images in photogrammetry<br />

LAURENT POLIDORI<br />

1.7 Use of airborne laser ranging systems for the determination of DSM<br />

MICHEL KASSER<br />

1.8 Use of scanners for the digitization of aerial pictures<br />

MICHEL KASSER<br />

1.9 Relations between radiometric and geometric precision in digital imagery<br />

CHRISTIAN THOM<br />

2 Techniques for plotting digital images<br />

Introduction<br />

2.1 Image improvements<br />

ALAIN DUPÉRET<br />

2.2 Compression of digital images<br />

GILLES MOURY<br />

2.3 Use of GPS in photogrammetry<br />

THIERRY DUQUESNOY, YVES EGELS, MICHEL KASSER<br />

2.4 Automatization of aerotriangulation<br />

FRANCK JUNG, FRANK FUCHS, DIDIER BOLDO<br />

2.5 Digital photogrammetric workstations<br />

RAPHAËLE HENO, YVES EGELS<br />

3 Generation of digital terrain and surface models<br />

Introduction<br />

3.1 Overview of digital surface models<br />

NICOLAS PAPARODITIS, LAURENT POLIDORI<br />

3.2 DSM quality: internal and external validation<br />

LAURENT POLIDORI<br />

3.3 3D data acquisition from visible images<br />

NICOLAS PAPARODITIS, OLIVIER DISSARD<br />

3.4 From the digital surface model (DSM) to the digital terrain model (DTM)<br />

OLIVIER JAMET<br />

3.5 DSM reconstruction<br />

GRÉGOIRE MAILLET, PATRICK JULIEN, NICOLAS PAPARODITIS<br />

3.6 Extraction of characteristic lines of the relief<br />

ALAIN DUPÉRET, OLIVIER JAMET<br />

3.7 From the aerial image to orthophotography: different levels of rectification<br />

MICHEL KASSER, LAURENT POLIDORI<br />

3.8 Production of digital orthophotographies<br />

DIDIER BOLDO<br />

3.9 Problems relating to orthophotography production<br />

DIDIER MOISSET

4 Metrologic applications of digital photogrammetry<br />

Introduction<br />

4.1 Architectural photogrammetry<br />

PIERRE GRUSSENMEYER, KLAUS HANKE, ANDRÉ STREILEIN<br />

4.2 Photogrammetric metrology<br />

MICHEL KASSER<br />

Index

Plates<br />

There is a colour plate section between pages 192 and 193<br />

Figure 3.3.20 Left and right images of a 1 m ground pixel size satellite<br />

across track stereopair; DSM result with a classical cross-correlation<br />

technique; DSM using adaptive shape windows<br />

Figure 3.3.24 An example of the management of hidden parts<br />

Figure 3.3.27 Correlation matrix corresponding to the matching of the<br />

two epipolar lines appearing in yellow in the image extracts<br />

Figure 3.3.28 Results of the global matching strategy on a complete<br />

stereopair overlap in a dense urban area<br />

Figure 3.4.1 Images from the digital camera of IGN-F (1997) on Le<br />

Mans (France)<br />

Figure 3.8.3 Scanned images before balancing<br />

Figure 3.8.4 Scanned images after balancing<br />

Figure 3.9.2 Example from a real production problem: the radiometric<br />

balancing on digitized pictures<br />

Figure 3.9.4 Examples from the Ariège, France: problems posed by cliffs<br />

Figure 3.9.5 Generation of stretched pixels<br />

Figure 3.9.6 Example of radiometric difficulties (Ariège, France)<br />

Figure 3.9.7 Example of typical problems on water surfaces<br />

Figure 3.9.8 Two examples from the Isère department, France<br />

Figure 3.9.9 Two examples, using the digital aerial camera of IGN-F

Contributors<br />

Yannick Bergia, born in 1977, graduated as an informatics engineer from<br />

ISIMA (Institut Supérieur d’Informatique, de Modélisation et de leurs<br />

Applications, Clermont-Ferrand, France) in 1999. He carried out his<br />

diploma work at MATIS in 1999, and is a specialist in image processing.<br />

y.bergia@infonie.fr<br />

Didier Boldo, born in 1973, graduated from the École Polytechnique de<br />

Paris and from ENSG. He is now pursuing a Ph.D. in pattern recognition<br />

and image analysis at MATIS. His main research interests are<br />

photogrammetry, 3D reconstruction and radiometric modelling and calibration.<br />

didier.boldo@ign.fr<br />

Olivier Dissard, born in 1967, graduated from the École Polytechnique de<br />

Paris and from ENSG. Previously in charge of research concerning 3D<br />

urban topics at MATIS laboratory, he is now in charge of digital<br />

orthophotography at IGN-F. His studies at MATIS have concerned<br />

urban DEM and DSM, focusing on raised structures and classification<br />

in buildings and vegetation, and true orthophotographies.<br />

olivier.dissard@ign.fr<br />

Alain Dupéret graduated as ingénieur géographe from ENSG. He started<br />

working as a surveyor in IGN-F in 1979 and undertook topographic<br />

missions in France and Africa before specialising in image processing<br />

and DTM production. After managing technical projects and giving<br />

lectures in photogrammetry and image processing in ENSG, he became<br />

Head of Studies Management at ENSG.<br />

duperet@ensg.ign.fr<br />

Thierry Duquesnoy (born in 1963) graduated from ENSG in 1989. He<br />

gained his Ph.D. in earth sciences in 1997 at the University of Paris.<br />

He has worked since 1989 with the LOEMI, where he is a specialist in<br />

GPS trajectography for photogrammetry.<br />

thierry.duquesnoy@ign.fr

Yves Egels, born in 1947, graduated from the École Polytechnique de Paris<br />

and from ENSG, and is senior photogrammetrist at IGN-F, where he<br />

pioneered analytical plotter software in the 1980s and digital workstations<br />

on PCs in the 1990s. He is now Head of the Photogrammetric<br />

Department at ENSG and gives lectures in photogrammetry at various<br />

French technical universities.<br />

egels@ensg.ign.fr<br />

Frank Fuchs, born in 1971, graduated from the École Polytechnique de<br />

Paris and from ENSG. Since 1996 he has been working at MATIS on<br />

his Ph.D. concerning the automatic reconstruction of buildings in aerial<br />

imagery through a structural approach.<br />

frank.fuchs@ign.fr<br />

Pierre Grussenmeyer, born in 1961, graduated from ENSAIS, and gained a<br />

Ph.D. in photogrammetry in 1994 at the University of Strasbourg in<br />

collaboration with IGN-F. He has been on the academic staff of the<br />

Department of Surveying at ENSAIS, where he teaches photogrammetry,<br />

since 1989. Since 1996 he has been Professor and the Head of the<br />

Photogrammetry and Geomatics Group at ENSAIS-LERGEC.<br />

pierre.grussenmeyer@ensais.u-strasbg.fr<br />

Klaus Hanke, born 1954, studied geodesy and photogrammetry at the<br />

University of Innsbruck and the Technical University of Graz. In 1984<br />

he became Dr. techn., and since 1994 he has been a Professor teaching<br />

Photogrammetry and Architectural Photogrammetry at the University<br />

of Innsbruck.<br />

Raphaële Heno, born 1970, graduated in 1993 from ENSG and from ITC<br />

(Enschede – The Netherlands). She spent four years developing digital<br />

photogrammetric tools to improve IGN-France topographic database<br />

plotting. Since 1998 she has been senior consultant for the IGN-France<br />

advisory department.<br />

raphaele_heno@hotmail.com<br />

Olivier Jamet, born in 1963, graduated from the École Polytechnique de<br />

Paris and from ENSG. He gained a Ph.D. in signal and image processing<br />

at the École Nationale Supérieure des Télécommunications de Paris<br />

(1998). Formerly Head of the MATIS laboratory, he is currently in<br />

charge of research in physical geodesy at LAREG, ENSG.<br />

olivier.jamet@ign.fr<br />

Patrick Julien graduated from the University of Paris (in mathematics) and<br />

from ENSG, and joined IGN-F in 1975. He has developed various software packages<br />

for orthophotography, digital terrain models and digital image<br />

matching. He is presently a researcher at MATIS and gives lectures at<br />

ENSG in geographic information sciences.<br />

patrick.julien@ign.fr

Franck Jung, born in 1970, graduated from the École Polytechnique de<br />

Paris and from ENSG, and is currently a researcher at the MATIS laboratory.<br />

He is preparing a Ph.D. dissertation on automatic change<br />

detection using aerial stereo pairs at two different dates. His main<br />

research interests are automatic digital photogrammetry, change detection<br />

and machine learning.<br />

franck.jung@ign.fr<br />

Michel Kasser, born in 1953, graduated from the École Polytechnique de<br />

Paris and from ENSG. He created the LOEMI in 1984 and was from<br />

1991 to 1999 head of the ESGT. As a specialist in instrumentation, in<br />

geodesy and in space imagery, he is University Professor and Head of<br />

the Geodetic Department and of LAREG at IGN-F.<br />

michel.kasser@ign.fr<br />

Grégoire Maillet, born in 1973, graduated from ENSG in 1997. He is now<br />

a research engineer at MATIS, where he works on dynamic programming<br />

and automatic correlation for image matching.<br />

gregoire.maillet@ign.fr<br />

Didier Moisset, born in 1958, obtained the ENSG engineering degree in<br />

1983. He was in charge of the aerotriangulation team at IGN in 1989,<br />

and was Professor in photogrammetry and Head of the Photogrammetric<br />

Department at the ENSG during 1993–8. He was a project manager in<br />

1998 and developed new automatic orthophoto software for IGN. He<br />

was recently appointed as a consultant in photogrammetry at the MATIS<br />

laboratory.<br />

didier.moisset@ign.fr<br />

Gilles Moury, born in 1960, graduated from the École Polytechnique de<br />

Paris and from ENSAE (École Nationale Supérieure de l’Aéronautique<br />

et de l’Espace). He has been working in the field of satellite on-board<br />

data processing since 1985, within CNES. In particular, he was responsible<br />

for the development of image compression algorithms for various<br />

space missions (Spot 5, Clementine, etc.). He is now Head of the on-board<br />

data processing section and lectures in data compression at various<br />

French technical universities.<br />

gilles.moury@cnes.fr<br />

Nicolas Paparoditis, born in 1969, obtained a Ph.D. in computer vision<br />

from the University of Nice-Sophia Antipolis. He worked for five years<br />

for the Aérospatiale Company on the specification of HRV digital<br />

mapping satellite instruments (SPOT satellites). He is a specialist in<br />

image processing, in computer vision, and in digital aerial and satellite<br />

imagery, and is currently a Professor at ESGT where he leads the research<br />

activities in digital photogrammetry. He is also a research member of<br />

MATIS at IGN-F.<br />

nicolas.paparoditis@ign.fr

Laurent Polidori, born in 1965, graduated from ENSG in 1987. He gained<br />

a Ph.D. in 1991 on ‘DTM quality assessment for earth sciences’ at Paris<br />

University. A researcher at the Aérospatiale Company (Cannes, France)<br />

on satellite imagery, he is the author of Cartographie Radar (Gordon<br />

and Breach, 1997). He is Head of the Remote Sensing Laboratory of<br />

IRD (Cayenne, Guyane) and Associate Professor at ESGT.<br />

polidori@caiena.cayenne.ird.fr<br />

André Streilein obtained a Dipl.-Ing. in 1990 at the Rheinische Friedrich-<br />

Wilhelms-Universität, Bonn. In 1998 he obtained a Dr. sc. tech. at the<br />

Swiss Federal Institute of Technology, Zurich. His dissertation was on<br />

‘Digitale Photogrammetrie und CAAD’. Since September 2000 he has<br />

been at the Swiss Federal Office of Topography (Bern), Section<br />

Photogrammetry and Remote Sensing. (Postal address: Bundesamt für<br />

Landestopographie, Seftigenstrasse 2, 3084 Wabern, Bern, Switzerland.)<br />

Christian Thom, born in 1959, graduated from the École Polytechnique<br />

de Paris and from the ENSG. With a Ph.D. (1986) in robotics and signal<br />

processing, he has specialized in instrumentation. He is Head of LOEMI<br />

where he pioneered and developed the IGN program of digital aerial<br />

cameras from 1989.<br />

christian.thom@ign.fr<br />

Institutions<br />

IGN-F is the Institut Géographique National, France, the administration in<br />

charge of national geodesy, cartography and geographic databases. Its main<br />

site is in Saint-Mandé, close to Paris. The MATIS is the laboratory<br />

for research in photogrammetry of IGN-F; the LOEMI is its laboratory<br />

for new instrument development; and the LAREG its laboratory for<br />

geodesy. The ENSG (École Nationale des Sciences Géographiques) is the<br />

technical university owned by IGN-F.<br />

(Postal address: IGN, 2 Avenue Pasteur, F-94 165 Saint-Mandé Cedex,<br />

France.)<br />

The CNES is the French Space Agency.<br />

(Postal address: CNES, 2 Place Maurice Quentin, F-75 039 Paris Cedex<br />

01, France.)<br />

The ENSAIS is a technical university in Strasbourg with a section of engineers<br />

in surveying.<br />

(Postal address: ENSAIS, 24 Boulevard de la Victoire, F-67 000, Strasbourg,<br />

France.)<br />

The ESGT is the École Supérieure des Géomètres et Topographes<br />

(Le Mans), the technical university with the most important section of<br />

engineers in surveying in France.<br />

(Postal address: ESGT, 1 Boulevard Pythagore, F-72 000, Le Mans, France.)

Introduction<br />

In 1998, when Taylor & Francis pushed me into the adventure of writing<br />

this book with my colleague Yves Egels, most specialists in photogrammetry<br />

around me were aware of the lack of communication between their<br />

technical community and other specialists. Indeed, this situation was not<br />

new, as 150 years after its invention by Laussédat photogrammetry was<br />

really reserved for photogrammetrists (considering for example the<br />

extremely high costs of photogrammetric plotters, but also the necessary<br />

visual ability of the required technicians, a skill that required a long and<br />

costly training, etc.). Photogrammetry was thus the speciality of a very<br />

small club, mostly European, and the related industrial activity was heavily<br />

based on the national cartographic institutions.<br />

As we saw it in the early 1990s, the situation has changed very rapidly<br />

with the improvement of the computational power available on cheap<br />

machines. First, one could see software developments trying to avoid the<br />

mechanical part of the photogrammetric plotters, but more or less based<br />

on the same algorithms as analytical plotters. These machines, still devoted<br />

to professional practitioners, appeared a little cheaper, but with a comparatively<br />

poor visual quality. They were often considered as good workstations<br />

for orthophotographic productions with, as a side quality, an additional<br />

production capacity of photogrammetric plotting in case of emergency<br />

work. The main residual difficulty was linked to the huge volume of data,<br />

and also to the digitization of traditional silver halide aerial images, as the<br />

necessary scanners were extremely expensive. The high cost of the very precise<br />

mechanical parts had already been reduced in the 1980s from the old<br />

opto-mechanical plotters to the analytical plotters, as the mechanics had<br />

been downsized from 3D to 2D. But in this final revolution the costs had merely<br />

shifted from the analytical plotter to the scanner, although a scanner could<br />

be used for many plotting workstations, and thus the total production cost<br />

was already lower. But this necessity to use a high-cost scanner was the<br />

main limitation of any new development of photogrammetric activity.<br />

During this period, industry developed very interesting products for the<br />

consumer market, following the explosive growth of micro-informatics<br />

and providing a set of extremely cheap tools: video

cards allowing fast, nearly real-time image management, stereoscopic<br />

glasses for video games, A4 scanners, digital colour cameras, etc. These<br />

developments were quickly taken into account by photogrammetrists, so<br />

that Digital Photogrammetric Workstations (DPW) became cheaper<br />

and cheaper, most of the cost today being the software cost. This has<br />

completely changed the situation of photogrammetry: it is now possible<br />

to own a DPW and to use it only occasionally, as the acquisition costs<br />

are low. And if the user lacks good stereoscopic vision, the<br />

automatic matching will help him to put the floating mark in good contact<br />

with the surveyed zone. Of course, only trained people can quickly survey<br />

a large amount of data in a cost-effective way, but a real opportunity to<br />

work with it is now open to non-specialists.<br />

If the situation changed a lot for non-photogrammetrists, in fact it<br />

changed a lot for the photogrammetrists themselves, too: the main revolution<br />

we experienced in the 1990s was the availability of aerial digital cameras,<br />

providing images whose quality is far superior to classic silver halide ones,<br />

at least in terms of radiometric fidelity, noise level, and geometric perfection.<br />

Thus the last obstacle to the widespread adoption of digital photogrammetry<br />

is disappearing. We are on the verge of a massive transfer of such techniques<br />

toward purely digital processes, and the improvement in quality of<br />

the products, hopefully, should be very significant in the next few years.<br />

Also, the use of digital images allows us to automate more and more tasks,<br />

even if a large part of the automation is still at a research level (most automatic<br />

cue extractions).<br />

We thus have a completely new situation, where (for a basic activity at<br />

least) the equipment no longer limits the wide adoption of photogrammetric<br />

methodology. But while its use is now spreading rapidly, the theoretical<br />

aspects are not yet sufficiently well known, which could lead to disappointing<br />

results for newcomers.<br />

Thus this book has been oriented towards engineers and technicians,<br />

including technicians who are basically external to the field of photogrammetry<br />

and who would like to understand at least part of this field, for<br />

example to use it from time to time, without the help of professionals of<br />

photogrammetry. The goal here is to bring this speciality much closer to the<br />

end user, and to help him to be autonomous in most circumstances, much<br />

as word processors allowed us, twenty years ago, to type what we wanted<br />

when we wanted it. But this end user must already have a scientific and<br />

technical culture, as the theoretical concepts would not be accessible<br />

without such a background. So I have assumed in this publication that<br />

the reader has such an initial understanding. Of course, professors and<br />

students, young ones as well as former specialists going through Continuing<br />

Professional Development, will also find great benefit from this up-to-date<br />

material.<br />

A final very important point: the goal here being to be extremely close<br />

to the practical applications and to the production of data, we have looked

for co-authors who are each excellent specialists in given aspects<br />

of digital photogrammetry, and most of whom are practitioners or work<br />

directly for practitioners. This is a guarantee that the considerations discussed<br />

here are really close to the application world. But the unavoidable drawback<br />

is that we have here 21 different authors for a single book, and that<br />

despite our efforts it is obvious that the homogeneity is not perfect. Some<br />

aspects are presented twice, sometimes with significant differences of point<br />

of view: this will remind each of us that the reality is complex, even in<br />

technical matters, and that controversy is the essence of our societies. In<br />

any case we have included an abundant bibliography that may be useful<br />

for further reading. Of course, as many authors are from France, most of<br />

the illustrations come from studies performed in this country, especially<br />

as IGN-F has been one of the few pioneers of digital CCD cameras.<br />

But I think that this material is more or less equivalent to what everybody<br />

may find in his own country, especially now that large companies offer<br />

digital cameras commercially.<br />

Michel Kasser

1 Image acquisition. Physical aspects. Instruments<br />

INTRODUCTION<br />

We may define photogrammetry as ‘any measuring technique allowing the<br />

modelling of a 3D space using 2D images’. Of course, this is perfectly suitable<br />

for the case where one uses photographic pictures, but this is still the<br />

case when any other type of 2D acquisition device is used, for example a<br />

radar, or a scanning device: the photogrammetric process is basically independent<br />

of the image type. For that reason, we will present in this chapter<br />

the general physical aspects of the data acquisition in digital photogrammetry.<br />

It starts with §1.1, a presentation of the geometric aspects of<br />

photogrammetry, which is a classic problem but whose presentation here is<br />

slightly different from that in books dealing with traditional photogrammetry. In<br />

§1.1 there are also some considerations about atmospheric refraction, the<br />

distortion due to the optics, and a few rules of thumb in planning aerial<br />

missions where digital images are used. In §1.2, we present some radiometric<br />

impacts of the atmosphere and of the optics on the image, which<br />

helps in understanding the physical nature of aerial images. In §1.3,<br />

a few lines about colorimetry explain why the digital tools for managing<br />

black and white panchromatic images need not be the same as the tools<br />

for managing colour images. We will then present the instruments used<br />

to obtain the images, digital of course. In<br />

§1.4 we will consider the geometric constraints on optical image acquisitions<br />

(aerial, and satellite as well). From §1.5 to §1.8, the main digital<br />

acquisition devices will be presented, CCD cameras (§1.5), radars (§1.6),<br />

airborne laser scanners (§1.7), and photographic image scanners (§1.8).<br />

And we will close this chapter with an analysis of the key problem of the<br />

size of the pixel, and the signal-to-noise ratio in the image.


1.1 MATHEMATICAL MODEL OF THE GEOMETRY OF<br />

THE AERIAL IMAGE<br />

Yves Egels<br />

The geometry of images is the basis of all photogrammetric processes,<br />

analogue, analytical or digital. Of course, the detailed geometry of an image<br />

essentially depends on the physical features of the sensor used. In analytical<br />

photogrammetry (which differs from digital photogrammetry only in the<br />

digital nature of the image), the use of a given image acquisition technology<br />

therefore requires a complete study of its geometric features, and of<br />

the mathematical tools expressing the relation between the coordinates on<br />

the image and those of ground points (and, if the software is correct, this<br />

will be the only thing to do).<br />

We shall explain here, just as an example, the case of traditional<br />

conical photography (the image being silver halide or digital), which is still<br />

the most widely used today and which can serve as a guide for the analysis<br />

of a different sensor. The leading principle, here as in any other<br />

geometrical situation, is to approximate the quite complex real geometry<br />

by a simple mathematical formula (here the perspective) and to treat<br />

the differences between the physical reality and this formula as corrections<br />

(‘corrections of systematic errors’) presented independently. The imaging<br />

devices whose geometry is different are presented later in this chapter<br />

(§§1.4 to 1.7).<br />

1.1.1 Mathematical perspective of a point of the space<br />

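The perspective of a point M of the space, with centre S, on the plane P is<br />

the intersection m of the line SM with the plane P. The coordinates of M will<br />

be expressed in the reference system (O, X, Y, Z), and those of m in<br />

the reference system of the image (c, x, y, z). The rotation matrix R corresponds<br />

to the change of system (O, X, Y, Z) → (c, x, y, z). We denote by<br />

F the coordinates of the perspective centre S in the reference system of the image.<br />

$$M = \begin{pmatrix} X_M \\ Y_M \\ Z_M \end{pmatrix}, \quad S = \begin{pmatrix} X_S \\ Y_S \\ Z_S \end{pmatrix}, \quad F = \begin{pmatrix} x_c \\ y_c \\ p \end{pmatrix}, \quad m = \begin{pmatrix} x \\ y \\ 0 \end{pmatrix}$$<br />

$$m \text{ image of } M \iff \overrightarrow{Fm} = \lambda\, R\, \overrightarrow{SM}.$$<br />

If one calls K the unit vector orthogonal to the plane of the image, one<br />

can write:<br />

$$K = \begin{pmatrix} 0 \\ 0 \\ 1 \end{pmatrix}, \qquad m \in \text{picture} \iff K^t m = 0, \quad \text{which implies} \quad \lambda = -\frac{K^t F}{K^t R\,(M - S)},$$<br />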


whence the basic equation of photogrammetry, the so-called collinearity<br />

equation:<br />

$$m = F - \frac{K^t F \; R\,(M - S)}{K^t R\,(M - S)}. \tag{1.1}$$<br />

This equation can be developed and reordered according to the coordinates<br />

of M, in the form of the so-called ‘11 parameters equation’, which appears simpler<br />

to use, but should be handled with great care:<br />

$$x = \frac{a_1 X_M + b_1 Y_M + c_1 Z_M + d_1}{a_3 X_M + b_3 Y_M + c_3 Z_M + 1}, \qquad y = \frac{a_2 X_M + b_2 Y_M + c_2 Z_M + d_2}{a_3 X_M + b_3 Y_M + c_3 Z_M + 1}. \tag{1.2}$$<br />

Figure 1.1.1 Systems of coordinates for the photogrammetry.<br />
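As an illustration only, the collinearity equation (1.1) can be sketched in a few lines of Python.<br />

The function names and the numerical values (a vertical photograph at the 1/10,000 scale) are assumptions<br />

made for this example and do not come from the text; K is taken as (0, 0, 1).<br />

```python
def mat_vec(R, v):
    """Multiply a 3x3 matrix (list of rows) by a 3-vector."""
    return [sum(R[i][j] * v[j] for j in range(3)) for i in range(3)]


def project(M, S, R, F):
    """Collinearity equation (1.1): m = F - (K^t F / K^t U) U, with U = R (M - S).

    K = (0, 0, 1) is the unit normal to the image plane, so K^t F is the
    third component p of F and K^t U is the third component of U.
    Returns the image coordinates (x, y); the third component of m is 0.
    """
    U = mat_vec(R, [M[i] - S[i] for i in range(3)])
    if U[2] == 0.0:
        raise ValueError("M lies in the plane through S parallel to the image")
    k = F[2] / U[2]
    return (F[0] - k * U[0], F[1] - k * U[1])


# Hypothetical vertical photograph: image axes parallel to the ground axes.
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
S = [1000.0, 2000.0, 1520.0]   # perspective centre (m)
F = [0.0, 0.0, 0.152]          # (xc, yc, p) in metres
M = [1100.0, 2050.0, 0.0]      # ground point (m)

x, y = project(M, S, R, F)
print(f"x = {x * 1000:.3f} mm, y = {y * 1000:.3f} mm")
```

With these values the ground offsets of 100 m and 50 m are reduced by the image scale p/H = 1/10,000.<br />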


1.1.2 Some mathematical tools<br />

1.1.2.1 Representation of the rotation matrices, exponential and axiator<br />

In computations of analytical photogrammetry, rotation matrices appear<br />

quite often. On a vector space of n dimensions, they depend on n(n − 1)/2<br />

parameters, but do not form a vector subspace. There is therefore no<br />

linear formula between a rotation matrix and its ‘components’. It is,<br />

however, vital to be able to express such a matrix judiciously according<br />

to the chosen parameters. In the case of R³, it is usual to analyse the rotation<br />

as the composition of three rotations around the three coordinate<br />

axes (angles ω, φ and κ, familiar to photogrammetrists). But the<br />

resulting formulas are rather laborious and lack symmetry, which leads<br />

to unnecessarily long developments. A rotation can also be<br />

defined physically in the following manner: rotation by an angle θ<br />

around a unit vector<br />

$$\vec{\Omega} = \begin{pmatrix} a \\ b \\ c \end{pmatrix}, \quad \text{with } \sqrt{a^2 + b^2 + c^2} = 1.$$<br />

We will call here the axiator of a vector the matrix equivalent to the vector<br />

product:<br />

$$\vec{\Omega} \wedge \vec{V} = \tilde{\Omega} \cdot V;$$<br />

one will check that<br />

$$\tilde{\Omega} = \begin{pmatrix} 0 & -c & b \\ c & 0 & -a \\ -b & a & 0 \end{pmatrix}. \tag{1.3}$$<br />

It is easy to check that the previous rotation can be written $R = e^{\theta\tilde{\Omega}}$.<br />

Indeed,<br />

$$e^{\theta\tilde{\Omega}} = I + \theta\tilde{\Omega} + \dots + \frac{\theta^n \tilde{\Omega}^n}{n!} + \dots, \tag{1.4}$$<br />

therefore, since $\tilde{\Omega} \cdot \vec{\Omega} = \vec{\Omega} \wedge \vec{\Omega} = 0$ (a well-known property of the vector<br />

product), $e^{\theta\tilde{\Omega}} \cdot \vec{\Omega} = \vec{\Omega}$: $\vec{\Omega}$ is the rotation axis, the only invariant direction. One will<br />

check that $\tilde{\Omega}^3 = -\tilde{\Omega}$, $\tilde{\Omega}^4 = -\tilde{\Omega}^2$, $\tilde{\Omega}^t = -\tilde{\Omega}$, etc.; on the other hand,<br />

$$\left(e^{\theta\tilde{\Omega}}\right)^t = I + \theta\tilde{\Omega}^t + \dots + \frac{\theta^n (\tilde{\Omega}^t)^n}{n!} + \dots = e^{-\theta\tilde{\Omega}} = \left(e^{\theta\tilde{\Omega}}\right)^{-1}. \tag{1.5}$$<br />


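Therefore this matrix is certainly an orthogonal matrix, and it is a rotation<br />

matrix since det R = +1 (as $\det e^{\theta\tilde{\Omega}} = e^{\operatorname{tr}(\theta\tilde{\Omega})}$ and $\operatorname{tr}\tilde{\Omega} = 0$). One will also verify that the rotation<br />

angle is equal to θ, for example by computing the scalar product of a<br />

vector normal to $\vec{\Omega}$ with its transform.<br />

Regrouping the even terms and the odd terms<br />

of the series, one therefore sees that:<br />

$$R = e^{\theta\tilde{\Omega}} = I + \tilde{\Omega}\left(\theta - \frac{\theta^3}{3!} + \dots\right) + \tilde{\Omega}^2\left(\frac{\theta^2}{2!} - \frac{\theta^4}{4!} + \dots\right),$$<br />

$$R = I + \tilde{\Omega} \sin\theta + \tilde{\Omega}^2 (1 - \cos\theta) \quad \text{(Euler formula)}. \tag{1.6}$$<br />

This formula permits a very convenient calculation of rotations. Indeed<br />

one can choose, as parameters of the rotation, the three values a, b and<br />

c (which are then no longer normalized). One can then write:<br />

$$\vec{\Theta} = \theta\,\vec{\Omega} = \begin{pmatrix} a \\ b \\ c \end{pmatrix}, \quad \text{and thus} \quad \theta = \|\vec{\Theta}\|, \quad \vec{\Omega} = \frac{\vec{\Theta}}{\theta}. \tag{1.7}$$<br />

If one expresses sin θ and cos θ using tan θ/2 with the help of the classic<br />

trigonometric formulas, it becomes:<br />

$$R = \left(I - \tilde{\Omega} \tan(\theta/2)\right)^{-1} \left(I + \tilde{\Omega} \tan(\theta/2)\right) \quad \text{(Thomson formula)}. \tag{1.8}$$<br />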
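The following sketch, given only as an illustration, builds a rotation matrix from a rotation vector with the<br />

Euler formula (1.6) and checks it numerically against the series (1.4) and the Thomson formula (1.8).<br />

The function names and the test vector are assumptions of this example. The Thomson formula is checked<br />

in the equivalent form (I − S) R = I + S, with S the axiator multiplied by tan(θ/2), which avoids a matrix inversion.<br />

```python
import math

IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]


def axiator(v):
    """Matrix of the vector product, eq. (1.3): axiator(v) . w = v ^ w."""
    a, b, c = v
    return [[0.0, -c, b], [c, 0.0, -a], [-b, a, 0.0]]


def mat_mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def combine(*terms):
    """Linear combination of 3x3 matrices given as (coefficient, matrix) pairs."""
    return [[sum(c * M[i][j] for c, M in terms) for j in range(3)]
            for i in range(3)]


def rotation_euler(theta_vec):
    """R = I + sin(theta) W + (1 - cos(theta)) W^2, eq. (1.6)."""
    theta = math.sqrt(sum(t * t for t in theta_vec))
    if theta == 0.0:
        return [row[:] for row in IDENTITY]
    W = axiator([t / theta for t in theta_vec])   # axiator of the unit axis
    return combine((1.0, IDENTITY), (math.sin(theta), W),
                   (1.0 - math.cos(theta), mat_mul(W, W)))


def rotation_series(theta_vec, terms=25):
    """Truncated exponential series (1.4), used here only as a check."""
    T = axiator(theta_vec)                        # theta times the unit axiator
    R, term = IDENTITY, IDENTITY
    for n in range(1, terms):
        term = combine((1.0 / n, mat_mul(term, T)))   # T^n / n!
        R = combine((1.0, R), (1.0, term))
    return R


def max_abs_diff(A, B):
    return max(abs(A[i][j] - B[i][j]) for i in range(3) for j in range(3))


theta_vec = [0.3, -0.2, 0.5]                      # arbitrary rotation vector
R = rotation_euler(theta_vec)

series_gap = max_abs_diff(R, rotation_series(theta_vec))

theta = math.sqrt(sum(t * t for t in theta_vec))
S = axiator([t / theta * math.tan(theta / 2.0) for t in theta_vec])
thomson_gap = max_abs_diff(mat_mul(combine((1.0, IDENTITY), (-1.0, S)), R),
                           combine((1.0, IDENTITY), (1.0, S)))

print(series_gap, thomson_gap)
```

Both gaps should be at the level of floating-point rounding, which is one way of checking the signs of (1.3), (1.6) and (1.8).<br />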

1.1.2.2 Differential of a rotation matrix<br />

Expressed in this exponential form, the rotation matrices<br />

can be differentiated. One finds:<br />

$$dR \approx e^{\tilde{\Theta}}\, d\tilde{\Theta} = R\, d\tilde{\Theta}. \tag{1.9}$$<br />

In this expression, the differential makes the parameters of the rotation<br />

appear in matrix form, which is not very practical. Applied to a vector A,<br />

it can be written (using the anticommutativity of the vector product):<br />

$$dR \cdot A = R \cdot d\tilde{\Theta} \cdot A = -R \cdot \tilde{A} \cdot d\Theta. \tag{1.10}$$<br />
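A quick numerical check of relation (1.10) can be sketched as follows. It assumes that the small rotation dΘ<br />

is composed on the right, R′ = R·exp(dΘ̃), which is the reading of (1.9) adopted here; the names and<br />

values are illustrative only.<br />

```python
import math


def axiator(v):
    a, b, c = v
    return [[0.0, -c, b], [c, 0.0, -a], [-b, a, 0.0]]


def mat_vec(M, v):
    return [sum(M[i][k] * v[k] for k in range(3)) for i in range(3)]


def rotation(theta_vec):
    """Euler formula (1.6) for the rotation vector theta_vec."""
    theta = math.sqrt(sum(t * t for t in theta_vec))
    if theta == 0.0:
        return [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
    W = axiator([t / theta for t in theta_vec])
    W2 = [[sum(W[i][k] * W[k][j] for k in range(3)) for j in range(3)]
          for i in range(3)]
    s, c = math.sin(theta), 1.0 - math.cos(theta)
    return [[(1.0 if i == j else 0.0) + s * W[i][j] + c * W2[i][j]
             for j in range(3)] for i in range(3)]


R = rotation([0.1, 0.2, -0.3])          # arbitrary initial rotation
A = [100.0, -50.0, 20.0]                # arbitrary vector
d_theta = [1e-6, -2e-6, 3e-6]           # small rotation increment (rad)

# Exact variation of R.A when R becomes R.exp(axiator(d_theta)).
RA = mat_vec(R, A)
RA_new = mat_vec(R, mat_vec(rotation(d_theta), A))
exact = [RA_new[i] - RA[i] for i in range(3)]

# First-order prediction of eq. (1.10): dR.A = -R.axiator(A).d_theta.
linear = [-c for c in mat_vec(R, mat_vec(axiator(A), d_theta))]

gap = max(abs(exact[i] - linear[i]) for i in range(3))
print(gap)
```

The gap is of second order in dΘ, far smaller than the first-order term itself.<br />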


1.1.2.3 Differential relation binding m to the parameters of the<br />

perspective<br />

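The collinearity equation is not linear, and to solve the systems arising<br />

in analytical photogrammetry (orientation of images, aerotriangulation),<br />

it is necessary to linearize it. The variables that will be taken into account<br />

are F, M, S and R.<br />

If one puts A = M − S and U = RA, equation (1.1) reads $m = F - (K^t F / K^t U)\,U$, and<br />

$$dm = dF - \frac{K^t dF}{K^t U}\,U - \frac{K^t F}{K^t U}\,dU + \frac{K^t F\; U K^t}{(K^t U)^2}\,dU, \tag{1.11}$$<br />

$$dU = R(dM - dS) + dR\,A = R\left(dM - dS - \tilde{A}\,d\Theta\right). \tag{1.12}$$<br />

On the other hand, $K^t F$ being a scalar, $K^t F\,U = U K^t F$ and $K^t dF\,U = U K^t dF$.<br />

One then gets:<br />

$$dm = \frac{K^t U\, I - U K^t}{(K^t U)^2}\left[K^t U\, dF - K^t F\, R\left(dM - dS - \tilde{A}\,d\Theta\right)\right]. \tag{1.13}$$<br />

If one writes<br />

$$p = K^t F, \quad U = \begin{pmatrix} u_1 \\ u_2 \\ u_3 \end{pmatrix} \quad \text{and} \quad V = \begin{pmatrix} u_3 & 0 & -u_1 \\ 0 & u_3 & -u_2 \end{pmatrix},$$<br />

one gets after manipulation:<br />

$$\begin{pmatrix} dx \\ dy \end{pmatrix} = \frac{V}{u_3}\,dF + \frac{p}{u_3^2}\,V R\,(dS - dM) + \frac{p}{u_3^2}\,V R\,\tilde{A}\,d\Theta. \tag{1.14}$$<br />

In this matrix form, the computer implementation of this equation<br />

requires only a few lines of code.<br />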
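To illustrate this remark, here is one possible sketch of equation (1.14), compared with a finite difference of the<br />

collinearity equation (1.1). It is not the author's implementation: the function names and the numerical values are<br />

assumptions, K is taken as (0, 0, 1), and the rotation increment is composed as R′ = R·exp(dΘ̃), consistently with (1.9).<br />

```python
import math


def axiator(v):
    a, b, c = v
    return [[0.0, -c, b], [c, 0.0, -a], [-b, a, 0.0]]


def mat_vec(M, v):
    return [sum(M[i][k] * v[k] for k in range(3)) for i in range(len(M))]


def mat_mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def rotation(theta_vec):
    """Euler formula (1.6) for the rotation vector theta_vec."""
    theta = math.sqrt(sum(t * t for t in theta_vec))
    if theta == 0.0:
        return [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
    W = axiator([t / theta for t in theta_vec])
    W2 = mat_mul(W, W)
    s, c = math.sin(theta), 1.0 - math.cos(theta)
    return [[(1.0 if i == j else 0.0) + s * W[i][j] + c * W2[i][j]
             for j in range(3)] for i in range(3)]


def project(M, S, R, F):
    """Collinearity equation (1.1) with K = (0, 0, 1); returns [x, y]."""
    u1, u2, u3 = mat_vec(R, [M[i] - S[i] for i in range(3)])
    return [F[0] - F[2] * u1 / u3, F[1] - F[2] * u2 / u3]


def linearised_dm(M, S, R, F, dM, dS, dF, dTheta):
    """First-order image displacement [dx, dy] of eq. (1.14)."""
    A = [M[i] - S[i] for i in range(3)]
    u1, u2, u3 = mat_vec(R, A)
    p = F[2]
    V = [[u3, 0.0, -u1], [0.0, u3, -u2]]
    from_F = mat_vec(V, dF)
    from_MS = mat_vec(V, mat_vec(R, [dS[i] - dM[i] for i in range(3)]))
    from_rot = mat_vec(V, mat_vec(R, mat_vec(axiator(A), dTheta)))
    k = p / (u3 * u3)
    return [from_F[r] / u3 + k * (from_MS[r] + from_rot[r]) for r in range(2)]


def finite_difference(M, S, R, F, dM, dS, dF, dTheta):
    """Exact change of [x, y] when all parameters are perturbed."""
    add = lambda a, b: [a[i] + b[i] for i in range(3)]
    m0 = project(M, S, R, F)
    m1 = project(add(M, dM), add(S, dS), mat_mul(R, rotation(dTheta)), add(F, dF))
    return [m1[0] - m0[0], m1[1] - m0[1]]


# Hypothetical configuration (metres and radians).
R = rotation([0.02, -0.01, 0.3])
S = [1000.0, 2000.0, 1520.0]
M = [1100.0, 2050.0, 20.0]
F = [0.001, -0.002, 0.152]
dM, dS = [0.005, -0.003, 0.002], [0.001, 0.002, -0.001]
dF, dTheta = [1e-7, -2e-7, 1e-7], [1e-6, -2e-6, 1.5e-6]

lin = linearised_dm(M, S, R, F, dM, dS, dF, dTheta)
fd = finite_difference(M, S, R, F, dM, dS, dF, dTheta)
gap = max(abs(lin[r] - fd[r]) for r in range(2))
print(lin, fd, gap)
```

The agreement between the two results, to second order in the perturbations, is a convenient test of the signs used in (1.12) to (1.14).<br />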

1.1.3 True geometry of images<br />


In physical reality, the geometry of images only reproduces in an approximate<br />

way the mathematical perspective whose formulas have just been<br />

established. If one follows the light ray from its starting point on the<br />

ground until the formation of the image on the sensor, one will find a<br />

certain number of differences between the perspective model and the reality.<br />

It is difficult to be comprehensive in this area, and one will mention here<br />

only the main reasons for distortion whose consequences are appreciable<br />

in the photogrammetric process.


1.1.3.1 Correction of Earth curvature<br />

The first reason why photogrammetrists use the so-called correction<br />

of Earth curvature is that the space in which cartographers<br />

operate is not the Euclidean space in which the collinearity<br />

equation is set up. It is a cartographic system, in which the<br />

planimetry is measured in a plane representation (often conformal) of the<br />

ellipsoid, and the altimetry in a system of altitudes.<br />

The best solution for dealing with this is to make rigorous transformations<br />

from cartographic to three-dimensional frames (it is usually most<br />

convenient to choose a local tangent three-dimensional reference frame, if only<br />

to write the relations of planimetric or altimetric conversion in a<br />

simple way). This solution, although better in theory, is somewhat difficult<br />

to put into industrial operation, because it requires having a<br />

database of the geodetic systems and projection algorithms (sometimes bizarre)<br />

used in the client countries, and having a model of the zero level surface<br />

(close to the geoid) of the altimetric system concerned.<br />

An approximate solution to this problem can nevertheless be found, whose<br />

precision is, as experience proves, comparable with the precision of<br />

this measurement technique. It relies on the fact that all conformal projections<br />

are locally equivalent, up to a changing scale factor. One can therefore<br />

attempt to replace the real projection, in which the coordinates<br />

of the ground are expressed, by a single conformal projection (and why<br />

not choose a simple one!), for example an oblique stereographic projection of<br />

the mean curvature sphere on the plane tangent at the centre of the work.<br />

The mathematical formulation then becomes as shown in Figure 1.1.2<br />

(the figure is drawn in the diametrical plane of the sphere containing the<br />

centre of the work A and the point I to transform).<br />

I is the point to transform (cartographic), J the result (three-dimensional),<br />

I′ the projection of I on the plane and J′ the projection of J on the terrestrial<br />

sphere of radius R. I′ and J′ are the inverse of each other in an<br />

inversion of pole B and power 4R².<br />

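Let us put:<br />

$$I = \begin{pmatrix} x \\ R + h \end{pmatrix}, \quad I' = \begin{pmatrix} x \\ R \end{pmatrix}, \quad J' = \begin{pmatrix} x' \\ y' \end{pmatrix}, \quad J = (1 + \eta) \begin{pmatrix} x' \\ y' \end{pmatrix}, \quad \text{with } \varepsilon = \frac{x}{2R}, \; \eta = \frac{h}{R}.$$<br />

The calculation of the inversion gives:<br />

$$\|BI'\| \cdot \|BJ'\| = 4R^2 \;\Rightarrow\; \overrightarrow{BJ'} = \overrightarrow{BI'}\,\frac{4R^2}{\|BI'\|^2} = \overrightarrow{BI'}\,\frac{1}{1 + \varepsilon^2} \approx \overrightarrow{BI'}\,(1 - \varepsilon^2),$$<br />

$$J' = B + \overrightarrow{BJ'} \approx \begin{pmatrix} x(1 - \varepsilon^2) \\ R(1 - 2\varepsilon^2) \end{pmatrix}, \qquad J \approx \begin{pmatrix} (1 - \varepsilon^2)(1 + \eta)\,x \\ (1 - 2\varepsilon^2)(1 + \eta)\,R \end{pmatrix}, \tag{1.15}$$<br />

$$J - I \approx \begin{pmatrix} (\eta - \varepsilon^2)\,x \\ -2\varepsilon^2 R \end{pmatrix}. \tag{1.16}$$<br />

One can consider that J − I corresponds to a correction to apply to the<br />

coordinates of I:<br />

$$\delta I_{\text{curvature}} = \begin{pmatrix} xh/R - x^3/4R^2 \\ -x^2/2R \end{pmatrix}. \tag{1.17}$$<br />

Figure 1.1.2 Figure made in the diametrical plane of the sphere containing the<br />

centre of the work A and the point I to transform.<br />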

Under usual conditions, the altimetric correction term is by far the<br />

most significant. But for aerotriangulations of large extent, planimetric<br />

corrections can become important, and must be considered. If the aerotriangulation<br />

relies on measurements from an airborne GPS (global positioning<br />

system), one must not forget to apply this correction also to the coordinates<br />

of the perspective centres, which also have to be corrected for the geoid–ellipsoid<br />

discrepancy.<br />
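The curvature correction (1.17) is simple enough to be sketched directly. In the example below, the function names,<br />

the mean Earth radius of 6,371 km and the test point are assumptions made for illustration; the second function<br />

evaluates J − I without the small-angle approximations, to show how little is lost by using (1.17).<br />

```python
def curvature_correction(x, h, radius=6_371_000.0):
    """Approximate correction of eq. (1.17), in metres.

    x: distance from the centre of the work, measured in the projection;
    h: altitude of the point; radius: assumed radius of the sphere.
    Returns (planimetric correction, altimetric correction).
    """
    dx = x * h / radius - x ** 3 / (4.0 * radius ** 2)
    dh = -x * x / (2.0 * radius)
    return dx, dh


def curvature_correction_exact(x, h, radius=6_371_000.0):
    """J - I computed from the inversion itself, without approximation."""
    eps2 = (x / (2.0 * radius)) ** 2
    eta = h / radius
    jx = (1.0 + eta) * x / (1.0 + eps2)
    jy = (1.0 + eta) * radius * (2.0 / (1.0 + eps2) - 1.0)
    return jx - x, jy - (radius + h)


# Hypothetical point 10 km from the centre of the work, at 500 m altitude.
dx, dh = curvature_correction(10_000.0, 500.0)
ex, eh = curvature_correction_exact(10_000.0, 500.0)
print(f"approximate: dx = {dx:.3f} m, dh = {dh:.3f} m")
print(f"exact:       dx = {ex:.3f} m, dh = {eh:.3f} m")
```

At this distance the altimetric term amounts to several metres while the planimetric term remains below one metre, in line with the remark above.<br />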

1.1.3.2 Atmospheric refraction<br />

Before entering the camera, the light rays cross the<br />

atmosphere, whose density, and therefore refractive index, decreases with<br />

the altitude (see Table 1.1.3).<br />


This variation of the index causes a curvature of the light rays<br />

(oriented downwards) whose amplitude depends on the atmospheric conditions<br />

(pressure and temperature at the time of the image acquisition), which<br />

are not uniform in the field of the camera, and are generally unknown.<br />

Nevertheless, this deviation is small with regard to the precision of photogrammetric<br />

measurements (with the exception of very small scales). It is<br />

thus acceptable to correct the refraction using a standard reference<br />

atmosphere: in order to evaluate the influence of the refraction, one<br />

needs a model providing the refractive index at various altitudes.<br />

Numerous formulas exist for evaluating the variation of the<br />

air density with altitude. None is clearly better than any other,<br />

because of the strong turbulence of the low atmospheric layers. In the tropospheric<br />

domain (these formulas would not be valid if the sensor were situated<br />

in the stratosphere), one can use, for example, the formula<br />

published by the ICAO (International Civil Aviation Organization):<br />

$$n = n_0\,(1 - ah + bh^2), \tag{1.18}$$<br />

where a = 2.560 × 10⁻⁸, b = 7.5 × 10⁻¹³, n₀ = 1.000278, h in metres,<br />

or this one, whose integration is simpler:<br />

$$n = 1 + a\,e^{-bh}, \tag{1.19}$$<br />

where a = 278 × 10⁻⁶, b = 105 × 10⁻⁶.<br />

Table 1.1.3 Atmospheric co-index of refraction: variations with the altitude<br />

| H (km) | −1 | 0 | 1 | 3 | 5 | 7 | 9 | 11 |
|---|---|---|---|---|---|---|---|---|
| N = (n − 1)·10⁶ | 306 | 278 | 252 | 206 | 167 | 134 | 106 | 83 |
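As a simple check, the two models (1.18) and (1.19) can be evaluated at the altitudes of Table 1.1.3. The sketch<br />

below is illustrative only; the function names are assumptions, and the table values are copied from the text.<br />

```python
import math


def co_index_polynomial(h):
    """N = (n - 1) * 1e6 from the polynomial model (1.18), h in metres."""
    n = 1.000278 * (1.0 - 2.560e-8 * h + 7.5e-13 * h * h)
    return (n - 1.0) * 1e6


def co_index_exponential(h):
    """N = (n - 1) * 1e6 from the exponential model (1.19), h in metres."""
    return 278.0 * math.exp(-105e-6 * h)


# Table 1.1.3: altitude in metres -> co-index N.
TABLE = {-1000: 306, 0: 278, 1000: 252, 3000: 206,
         5000: 167, 7000: 134, 9000: 106, 11000: 83}

for h, n_table in TABLE.items():
    print(f"{h:6d} m  table {n_table:4d}  "
          f"polynomial {co_index_polynomial(h):6.1f}  "
          f"exponential {co_index_exponential(h):6.1f}")

worst_polynomial = max(abs(co_index_polynomial(h) - n) for h, n in TABLE.items())
worst_exponential = max(abs(co_index_exponential(h) - n) for h, n in TABLE.items())
```

Both models stay within a few units of N of the tabulated values over the whole range, which supports the remark that none of these formulas is clearly better than the others.<br />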

The rays then appear to come from a point situated on the extension of<br />

the tangent to the ray at its entrance into the optics, thus introducing a<br />

displacement of the image.<br />

The calculation of the refraction correction can be performed in the<br />

following way: the light ray coming from M seems to originate from<br />

the point M′ situated on the same vertical, so that MM′ = δy. One will<br />

modify the altitude of the object point M by the correction δy, moving<br />

it to M′. This method of calculation has the advantage of not supposing<br />

that the axis of the acquired image is vertical, and thus also works in<br />

oblique terrestrial photogrammetry (see Figure 1.1.4). If v is the angle between the ray<br />

and the vertical, an elementary deviation dv at the altitude h displaces the apparent point by<br />

$$dy = \frac{h - h_M}{\sin v \cdot \cos v}\,dv,$$<br />

from where<br />

$$\delta y = MM' = \int_M^S \frac{h - h_M}{\sin v \cdot \cos v}\,dv. \tag{1.20}$$<br />

The formula of Descartes gives us the variation of the refraction angle:<br />

$$n \cdot \sin v = \text{constant},$$<br />

or $dn\,\sin v + n\,\cos v\,dv = 0$,<br />

or again<br />

$$dv = -\tan v\,\frac{dn}{n}. \tag{1.21}$$<br />

Figure 1.1.4 Optical ray in the atmosphere (with a strong exaggeration of the<br />

curvature).<br />

In the case of photography of a zone of small extent, one will suppose<br />

the Earth to be flat; the verticals (and the refractive index gradients) are<br />


therefore all parallel to each other (this approximation is not valid in<br />

space imagery). The radius of curvature of the ray being very large, one can identify<br />

the arc with the chord; the angle v and the refractive index can then be<br />

considered constant for the integration:<br />

$$\delta y = MM' = -\int_M^S \frac{(h - h_M)\,\sin v}{\sin v \cdot \cos v \cdot \cos v}\,\frac{dn}{n} = -\int_M^S \frac{h - h_M}{\cos^2 v}\,\frac{dn}{n} \approx -\frac{1}{\cos^2 v}\int_M^S (h - h_M)\,\frac{dn}{dh}\,dh. \tag{1.22}$$<br />

With the second formula for refraction, the calculation for the complete<br />

ray gives:<br />

$$\frac{dn}{dh} = -ab\,e^{-bh}, \qquad \int \frac{dn}{dh}\,dh = a\,e^{-bh} = n - 1$$<br />

and<br />

$$\int h\,\frac{dn}{dh}\,dh = \left(h + \frac{1}{b}\right)(n - 1). \tag{1.23}$$<br />

If one puts D = ||SM|| and H = h_S − h_M, so that cos v = H/D, one gets the following refraction<br />

correction:<br />

$$\delta y = -\frac{D^2}{H^2}\left(\frac{n_S - n_M}{b} + H\,(n_S - 1)\right). \tag{1.24}$$<br />

For aerial pictures at the 1/50,000th scale with a focal length of 152 mm,<br />

this correction is of about 0.20 m to the centre, and reaches 0.50 m at<br />

the edge of the image. It is appreciably less important at larger scales for<br />

the same focal length.<br />

This formula also permits the correction of refraction with oblique<br />

or horizontal optical axes (terrestrial photogrammetry), cases that are<br />

obviously outside the domain of validity of the commonly used formulas,<br />

which apply a radial correction to the image coordinates. One will be able to<br />

verify that when h_M → h_S, the limit of δy is:<br />

$$\delta y_{H \to 0} = (n_S - 1)\,\frac{b}{2}\,D^2,$$<br />

the classical refraction formula for a horizontal optical axis.
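The closed form (1.24) can be cross-checked against a direct numerical evaluation of the integral (1.22). The sketch<br />

below uses the exponential model (1.19); the function names and the flight configuration are assumptions chosen<br />

for illustration, and the quantity n − 1 is handled directly to limit rounding errors.<br />

```python
import math

A_COEF = 278e-6   # coefficient a of eq. (1.19)
B_COEF = 105e-6   # coefficient b of eq. (1.19), per metre


def excess_index(h):
    """n - 1 from the exponential model (1.19), h in metres."""
    return A_COEF * math.exp(-B_COEF * h)


def refraction_correction(h_s, h_m, ground_dist):
    """Altitude correction of eq. (1.24), in metres.

    h_s: altitude of the perspective centre S; h_m: altitude of the point M;
    ground_dist: horizontal distance between S and M.
    """
    H = h_s - h_m
    D2 = H * H + ground_dist * ground_dist
    ns1, nm1 = excess_index(h_s), excess_index(h_m)
    if H == 0.0:
        return ns1 * B_COEF / 2.0 * D2        # limit for a horizontal axis
    return -(D2 / (H * H)) * ((ns1 - nm1) / B_COEF + H * ns1)


def refraction_correction_numeric(h_s, h_m, ground_dist, steps=20000):
    """Midpoint-rule evaluation of the integral (1.22), used as a check."""
    H = h_s - h_m
    D2 = H * H + ground_dist * ground_dist
    dh = H / steps
    total = 0.0
    for i in range(steps):
        h = h_m + (i + 0.5) * dh
        dn_dh = -A_COEF * B_COEF * math.exp(-B_COEF * h)
        total += (h - h_m) * dn_dh * dh
    return -(D2 / (H * H)) * total


# Hypothetical flight at 5,000 m above a point at sea level, 3,000 m off nadir.
closed = refraction_correction(5000.0, 0.0, 3000.0)
numeric = refraction_correction_numeric(5000.0, 0.0, 3000.0)
print(closed, numeric)
```

The same function handles oblique and horizontal lines of sight, since only H and D enter the formula.<br />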


1.1.3.3 At the entrance in the plane<br />

In a pressurized plane two phenomena may occur. First, the light ray<br />

crosses the glass plate placed in front of the objective. This glass plate, being<br />

of low thermal conductivity, is subject to significant temperature<br />

differences and bends under the effect of differential expansion (in the opposite<br />

direction to the bending due to the pressure difference, which induces<br />

a much smaller effect). It takes a more or less spherical shape, and then acts<br />

like a lens that introduces an optical distortion. Unfortunately, although<br />

the phenomenon can be demonstrated experimentally (and calculation shows<br />

that its influence is not negligible), it is practically impossible to quantify<br />

it, since the real shape of the porthole glass plate depends on the history of the<br />

flight (temperatures, pressures inside and outside). There are, however,<br />

computational techniques, described in §1.1.3.6 (a posteriori<br />

unknowns), that make it possible to model and to eliminate its influence<br />

in the aerotriangulation.<br />

Second, when the plane is pressurized the light ray is refracted a second<br />

time at the entrance to the cabin, where the pressure is higher than that<br />

outside at the flight altitude (the difference in pressure depends<br />

on the airplane used). One can model this refraction in the same way as<br />

the atmospheric refraction (the sign is opposite to the previous one,<br />

because the ray enters a medium of higher index).<br />

Denoting by n_c the refractive index inside the cabin, and supposing<br />

that the porthole is horizontal (R being here the horizontal distance between S and M),<br />

$$dv = -(n_c - n_S)\,\tan v = -(n_c - n_S)\,\frac{R}{H}, \tag{1.25}$$<br />

$$dy = -\frac{D^2}{H^2}\,(n_c - n_S)\,H. \tag{1.26}$$<br />

Combining this equation with that of atmospheric refraction, one<br />

sees that it is sufficient to replace the index at the altitude of the perspective centre by the index<br />

of the cabin in the second term:<br />

$$\delta y = -\frac{D^2}{H^2}\left(\frac{n_S - n_M}{b} + H\,(n_c - 1)\right). \tag{1.27}$$<br />

Thus we have to compute the cabin index of refraction. Often in pressurized<br />

planes, the pressure difference between inside and outside is limited to a maximal value ΔP_max (for<br />

example 6 psi = 41,370 Pa for the Beechcraft King Air of IGN-F).<br />

Below a given altitude limit (around 4,300 m in the<br />

previous example), the cabin pressure is kept at the ground value. The refractive<br />

index n and co-index N are linked to the temperature and pressure<br />

by an approximate law:<br />


$$N = (n - 1)\,10^6 = 790\,\frac{P}{T}, \quad P \text{ in kPa and } T \text{ in K}.$$<br />

The temperature decreases with the altitude Z according to the empirical relation<br />

T = T₀ − 6.5 × 10⁻³ Z. If one takes T_C = T₀ = 298 K, the cabin refractive<br />

co-index will be:<br />

$$N_C = N_S\,(1 - 2.22 \times 10^{-5}\,Z_S) + 2.696\,\Delta P, \quad \Delta P \text{ in kPa}.$$<br />

This co-index will be limited to its ground value (N = 278 in this model)<br />

if the altitude is below the pressurization limit. One may note that, in<br />

current flight conditions (flight altitude 5,000 m, pressurization limit<br />

4,300 m), this total correction is nearly the opposite of the correction<br />

computed without cabin pressurization.<br />
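The last remark can be illustrated numerically. The sketch below combines the cabin co-index formula with<br />

equation (1.27), using the exponential atmosphere (1.19) and the coefficients 2.22 × 10⁻⁵ and 2.696 as printed above.<br />

The function names, and the choice of a point at sea level seen at the nadir, are assumptions of this example.<br />

```python
import math

B_COEF = 105e-6          # coefficient b of eq. (1.19), per metre
DP_MAX_KPA = 41.37       # 6 psi, maximal pressure difference quoted in the text


def co_index(h):
    """Outside co-index N = (n - 1) * 1e6 from eq. (1.19), h in metres."""
    return 278.0 * math.exp(-B_COEF * h)


def cabin_co_index(z_s, dp_kpa=DP_MAX_KPA, ground_value=278.0):
    """Cabin co-index N_C, limited to its ground value."""
    n_c = co_index(z_s) * (1.0 - 2.22e-5 * z_s) + 2.696 * dp_kpa
    return min(n_c, ground_value)


def correction(h_s, h_m, ground_dist, pressurised):
    """Eq. (1.27) if pressurised, eq. (1.24) otherwise; result in metres."""
    H = h_s - h_m
    D2 = H * H + ground_dist * ground_dist
    ns1 = co_index(h_s) * 1e-6                     # n_S - 1
    nm1 = co_index(h_m) * 1e-6                     # n_M - 1
    last = cabin_co_index(h_s) * 1e-6 if pressurised else ns1
    return -(D2 / (H * H)) * ((ns1 - nm1) / B_COEF + H * last)


# Flight altitude of 5,000 m, point at sea level seen at the nadir.
without_cabin = correction(5000.0, 0.0, 0.0, pressurised=False)
with_cabin = correction(5000.0, 0.0, 0.0, pressurised=True)
print(f"without pressurization: {without_cabin:+.3f} m")
print(f"with pressurization:    {with_cabin:+.3f} m")
```

Under these assumptions the two corrections have opposite signs and similar magnitudes.<br />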

1.1.3.4 Distortion of the optics<br />

In elementary optics (conditions of Gauss: thin lenses, rays with a low tilt<br />

angle with respect to the optical axis, low aperture optics), no ray passing<br />

through the centre of the objective is deviated. This replicates the<br />

mathematical perspective. Unfortunately, the real optics used in photogrammetric<br />

image acquisitions do not fulfil these conditions.<br />

In the case of perfect optics (centred spherical dioptres) the entrance<br />

ray, the exit ray, and the optical axis are coplanar. But the incident and<br />

emergent rays are not parallel. This lack of parallelism constitutes the<br />

distortion of the objective. It is a permanent and stable characteristic of<br />

the optics, which can be measured prior to the image acquisitions, and<br />

corrected at the time of photogrammetric calculations.<br />

The measurement of this distortion can be achieved on an optical bench,<br />

using collimators, or by observation of a dense network of targets through<br />

the objective. Manufacturers use these methods, which are quite complicated<br />

and costly, to provide certification of the aerial cameras<br />

that they produce. It is also possible to determine the distortion (as well as the centring<br />

and the principal distance) by taking images of a three-dimensional polygon<br />

of points whose coordinates are very well known, and by solving the<br />

collinearity equations, to which one adds distortion unknowns according<br />

to the chosen model.<br />

In the case of perfect optics, the distortion is very well modelled<br />

by a radially symmetric correction polynomial around the principal point of<br />

symmetry (intersection of the optical axis with the plane of the sensor).<br />

Besides, one can easily demonstrate that this polynomial contains only<br />

terms of odd degree. The first-degree term can be chosen arbitrarily,<br />

because it is linked to the choice of the principal distance: one often sets it<br />

to zero (principal distance at the centre of the image) or chooses it so that the maximal<br />

distortion in the field is as small as possible.
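A radial polynomial of this kind can be sketched as follows. The first-degree term is taken as zero, so the radial<br />

correction is k₁r³ + k₂r⁵ + … . The function name, the sign convention (the correction is added to the measured<br />

coordinates) and the coefficient value are assumptions of this example, not calibration data.<br />

```python
def correct_radial_distortion(x, y, coeffs, centre=(0.0, 0.0)):
    """Apply a radial correction dr = k1*r^3 + k2*r^5 + ... around `centre`.

    x, y: measured image coordinates; coeffs: (k1, k2, ...) in units
    consistent with x and y; centre: principal point of symmetry.
    Returns the corrected coordinates.
    """
    dx, dy = x - centre[0], y - centre[1]
    r2 = dx * dx + dy * dy
    # dr / r = k1*r^2 + k2*r^4 + ... (only even powers of r appear here,
    # because the polynomial in r itself contains only odd powers).
    factor, power = 0.0, r2
    for k in coeffs:
        factor += k * power
        power *= r2
    return x + dx * factor, y + dy * factor


# Hypothetical coefficient: 10 micrometres of correction at r = 100 mm.
K = (1e-8,)                      # k1 in mm^-2, coordinates in mm
xc, yc = correct_radial_distortion(100.0, 0.0, K)
print(xc, yc)
```

Because the correction depends only on r, two points symmetric about the principal point receive opposite corrections.<br />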


If the optics cannot be considered as perfect (this is sometimes the case if<br />

one attempts to use consumer cameras for photogrammetric<br />

operations), the distortion can lose its radial and symmetrical<br />

character, and requires a more complex calibration and modelling.<br />

Besides, in the case of zoom lenses (which one should avoid), this distortion<br />

does not remain constant in time because of variations in centring caused<br />

by the displacement of the zoom carriage (the mobile part that moves<br />

the lenses). Any modelling then becomes very uncertain.<br />

1.1.3.5 Distortions of the sensor<br />

The equation of the perspective supposes that the sensor is a plane, and<br />

that one can define on it a fixed reference system for measurement. Here it is<br />

necessary to distinguish the photochemical sensors (photographic emulsion)<br />

from the electronic sensors (CCD matrices). The latter are practically undeformable,<br />

and the image that they acquire is definitely stable (at least<br />

geometrically). The following remarks will therefore apply to the<br />

photochemical sensors only.<br />

The planarity of the emulsion was formerly obtained by the use of photographic<br />

glass plates, but this solution is no longer used, except maybe in<br />

certain systems of terrestrial photogrammetry. It was, besides, not very<br />

satisfactory. Today one practically always uses vacuum flattening of the<br />

film against the plate at the bottom of the camera. This method is very convenient,<br />

provided that no dust is trapped between the film and<br />

the plate, which is unfortunately quite difficult to achieve. Dust causes<br />

a local distortion of the image, which is difficult to detect (except in radiometrically<br />

uniform zones, where a shading effect appears) and<br />

impossible to model.<br />

The dimensional stability of the image over time, although improved<br />

by the use of so-called ‘stable’ supports, is quite insufficient for the needs<br />

of photogrammetrists. Distortions ten times larger than the required<br />

measurement precision are frequent. The only efficient counter-measure is<br />

to measure the image coordinates of well-known points (reference<br />

marks of the bottom plate of the camera) and to model the<br />

distortion by a two-dimensional transformation. One usually chooses an<br />

affine transformation (general linear transformation), which correctly represents the main<br />

part of the distortion. But this distortion is thus measured only at the edges<br />

of the image, at a limited number of points (eight in general). The interpolation<br />

inside the image is therefore of low reliability.<br />
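The affine modelling of film deformation can be sketched as a small least-squares problem: the six parameters are<br />

estimated from the measured and calibrated coordinates of the reference marks. Everything numerical below is<br />

hypothetical (eight marks at ±110 mm, a simulated deformation), and the function names are assumptions of this example.<br />

```python
def solve3(A, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, 3):
            f = M[r][col] / M[col][col]
            for c in range(col, 4):
                M[r][c] -= f * M[col][c]
    x = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):
        x[i] = (M[i][3] - sum(M[i][j] * x[j] for j in range(i + 1, 3))) / M[i][i]
    return x


def fit_affine(measured, calibrated):
    """Least-squares affine transformation measured -> calibrated.

    Returns ((a0, a1, a2), (b0, b1, b2)) such that
    X = a0 + a1*x + a2*y and Y = b0 + b1*x + b2*y.
    """
    N = [[0.0] * 3 for _ in range(3)]       # normal matrix, shared by X and Y
    tx, ty = [0.0] * 3, [0.0] * 3
    for (x, y), (X, Y) in zip(measured, calibrated):
        row = (1.0, x, y)
        for i in range(3):
            for j in range(3):
                N[i][j] += row[i] * row[j]
            tx[i] += row[i] * X
            ty[i] += row[i] * Y
    return solve3(N, tx), solve3(N, ty)


def apply_affine(params, point):
    (a0, a1, a2), (b0, b1, b2) = params
    x, y = point
    return (a0 + a1 * x + a2 * y, b0 + b1 * x + b2 * y)


# Hypothetical calibrated positions of eight reference marks (mm).
calibrated = [(-110.0, -110.0), (0.0, -110.0), (110.0, -110.0), (110.0, 0.0),
              (110.0, 110.0), (0.0, 110.0), (-110.0, 110.0), (-110.0, 0.0)]


def deform(p):
    """Simulated film deformation, used only to generate test measurements."""
    x, y = p
    return (0.02 + 1.0002 * x + 0.00005 * y, -0.01 - 0.00003 * x + 0.9999 * y)


measured = [deform(p) for p in calibrated]
params = fit_affine(measured, calibrated)
residual = max(max(abs(u - U), abs(v - V))
               for (u, v), (U, V) in
               zip((apply_affine(params, m) for m in measured), calibrated))
print(params, residual)
```

With real measurements the residuals at the marks would not vanish, and, as noted above, they say little about the deformation inside the image.<br />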

1.1.3.6 Modelling of non-quantifiable defects<br />

The main corrections to apply to the simplistic model of the central perspective<br />

have been reviewed. Several of them can be considered as perfectly<br />

known – the curvature of the Earth for example; others can be calculated


roughly (refraction, distortion of the film), and others are completely<br />

unknown, the distortion of the porthole glass plate, for example.<br />

When a precise geometric reconstruction is indispensable, as is notably<br />

the case in aerotriangulation, where the accumulation of systematic errors can<br />

generate intolerable imprecision, a counter-measure consists in adding to<br />

the collinearity equation and its corrective terms a parametric model<br />

of distortion (often polynomial). This model contains a small number of<br />

parameters, chosen to best represent the influence of the defects that<br />

cannot be modelled directly. These supplementary a posteriori unknowns for systematic<br />

errors are solved simultaneously with the main unknowns of the<br />

photogrammetric system, which are the coordinates of the ground points, the perspective<br />

centres of the images, and the rotations of the images. This technique usually yields a gain<br />

of 30 to 40 per cent in the altimetric precision of the aerotriangulation.<br />

1.1.4 Some convenient rules<br />


Rules for the correct use of aerial photogrammetry have been known for<br />

several decades, and we will therefore only briefly recall the main features.<br />

Besides, the variables on which one can act are not numerous. The choice<br />

derives essentially from the required precision, which can be specified in<br />

the requirements. It can also be deduced from the scale of the survey<br />

(even if the survey is digital, one can assign it a scale corresponding to<br />

its precision, which is conventionally 0.1 mm at the scale). Practice<br />

shows that the errors that accumulate during the photogrammetric process<br />

limit its precision, in current work, to about 15 to 20 microns on<br />

the image (on very well-defined points) for each of the two planimetric<br />

coordinates. This number can be improved appreciably (down to 2 to<br />

3 μm) by choosing more constraining (and correspondingly<br />

costlier) operating procedures. For the altimetry, this number must be roughly<br />

divided by the ratio between the base (distance between the points of<br />

view) and the distance (from these points of view to the photographed object).<br />

This ratio depends directly on the field angle chosen for the camera: the<br />

wider the field, the larger this ratio, and the closer the altimetric<br />

precision will be to the planimetric precision. As an example, Table<br />

1.1.5 sums up the characteristics of the most common aerial cameras<br />

(in format 24 × 24 cm) with values for the standard base length (overlap of<br />

55 per cent).<br />

Knowing the precision required for the survey, it is then very simple<br />

to determine the scale of the images. The choice of focal length is<br />

guided by the following considerations: the longer the focal length,<br />

the higher the flight altitude and the smaller the hidden areas<br />

(essentially the feet of buildings in cities, not to mention entire<br />

streets), but at the same time the more the altimetric precision is degraded<br />

and the more marked the radiometric influence of the atmosphere.

Table 1.1.5 Characteristics of the most common aerial cameras (24 × 24 cm format) with standard base length (overlap of 55 per cent)<br />

| Focal length | 88 mm | 152 mm | 210 mm | 300 mm |
| --- | --- | --- | --- | --- |
| Field of view | 120° | 90° | 73° | 55° |
| B/H | 1.3 | 0.66 | 0.48 | 0.33 |
| Flight height (at 1/10,000) | 880 m | 1520 m | 2100 m | 3000 m |
| Planimetric precision (at 1/10,000) | 20 cm | 20 cm | 20 cm | 20 cm |
| Altimetric precision (at 1/10,000) | 15 cm | 30 cm | 40 cm | 60 cm |
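The rules above can be sketched as a short calculation. The following Python fragment is only an illustration, assuming a precision of 20 microns on the image (the upper figure quoted in the text, which matches the 20 cm at 1/10,000 of Table 1.1.5) and the B/H ratios listed in that table. The names `Camera`, `scale_denominator`, `flight_height_m`, `planimetric_precision_m` and `altimetric_precision_m` are hypothetical and chosen for this example.<br />

```python
from dataclasses import dataclass

# Assumption: 20 microns on the image for well-defined points (the text quotes
# 15 to 20 microns in routine work; 20 microns reproduces Table 1.1.5).
IMAGE_PRECISION_M = 20e-6


@dataclass(frozen=True)
class Camera:
    """Hypothetical container for one column of Table 1.1.5."""
    focal_length_m: float   # focal length, in metres
    base_to_height: float   # B/H ratio for an overlap of 55 per cent


# Focal lengths and B/H values copied from Table 1.1.5.
CAMERAS = [
    Camera(0.088, 1.3),
    Camera(0.152, 0.66),
    Camera(0.210, 0.48),
    Camera(0.300, 0.33),
]


def scale_denominator(required_planimetric_m, image_precision_m=IMAGE_PRECISION_M):
    """Image scale denominator needed to reach a given planimetric precision."""
    return required_planimetric_m / image_precision_m


def flight_height_m(camera, scale_den):
    """Flight height above ground: focal length times the scale denominator."""
    return camera.focal_length_m * scale_den


def planimetric_precision_m(scale_den, image_precision_m=IMAGE_PRECISION_M):
    """Ground precision of each planimetric coordinate."""
    return image_precision_m * scale_den


def altimetric_precision_m(camera, scale_den, image_precision_m=IMAGE_PRECISION_M):
    """Planimetric precision divided by the base-to-height ratio."""
    return planimetric_precision_m(scale_den, image_precision_m) / camera.base_to_height


if __name__ == "__main__":
    # A required planimetric precision of 20 cm leads to a scale close to 1/10,000.
    den = scale_denominator(0.20)
    for cam in CAMERAS:
        print(
            f"f = {cam.focal_length_m * 1000:.0f} mm: "
            f"H = {flight_height_m(cam, den):.0f} m, "
            f"planimetry = {planimetric_precision_m(den) * 100:.0f} cm, "
            f"altimetry = {altimetric_precision_m(cam, den) * 100:.0f} cm"
        )
```

With these assumptions the computed altimetric values agree with the last row of Table 1.1.5 to within the rounding used in the table.<br />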

In practice, the focal length of 152 mm is generally preferred.<br />

Shorter focal lengths are reserved for very small scales, for which<br />

the flight altitude can be a limitation (the gain in altimetric precision<br />

is in fact largely theoretical, as the mediocre quality of the optics<br />

loses what was gained in geometry). A long focal length is usually<br />

preferred for orthophotographic surveys in urban<br />

areas, where the loss of altimetric quality is not a major handicap. For<br />

colour photography, however, one must take<br />

care with the increase in atmospheric diffusion fog (due to a thicker<br />

diffusing layer), the main consequence of which is to limit the<br />

number of days on which images can be acquired. Another (less<br />

economical) way to mitigate the hidden-area problem is to use a camera with a<br />

normal focal length while choosing larger overlaps: one can thus<br />

reconcile good altimetric precision, low atmospheric fog and acceptable<br />

distortions for objects on the ground.<br />

1.2 RADIOMETRIC EFFECTS OF THE ATMOSPHERE<br />

AND THE OPTICS<br />

Michel Kasser<br />

1.2.1 Atmospheric diffusion: Rayleigh and Mie diffusions<br />

The atmosphere is generally opaque to very short wavelengths, its<br />

transmission only starting at about 0.35 µm. The atmosphere then presents<br />

several ‘windows’ up to 14 µm, the absorption becoming practically<br />

total again between 14 µm and 1 mm. Finally, transmission reappears<br />

progressively between 1 mm and 5 cm, becoming practically perfect at<br />

longer wavelengths (radio waves).<br />

The lower atmosphere, in the visible band, is not perfectly transparent,<br />

and when it is illuminated by the Sun it redistributes a certain part of the

radiance that it receives: all of us can observe the milky white colour of<br />

the sky close to the horizon and, by contrast, its blue colour, which is very<br />

marked towards the zenith at high-altitude sites. These luminous emissions<br />

must be taken into account to understand correctly the images used in<br />

photogrammetry, in particular the diffuse lighting coming from the<br />

sky in shadowed areas, and the atmospheric fog (or white-out) and its<br />

variation with the altitude of image acquisition. We will start by<br />

presenting the diffusion mechanisms at work in the atmosphere, in order to<br />

assess the effects of this diffusion on pictures obtained from an airplane<br />

or a satellite.<br />

1.2.1.1 Rayleigh diffusion<br />

The diffusion by the atmospheric gases (often erroneously called absorption,<br />

since the luminous energy removed from the light rays is merely<br />

redistributed in all directions, and not transformed into heat) is due to the<br />

electronic transitions or vibrations of the atoms and molecules generally present<br />

in the atmosphere. On the whole, the electronic transitions of atoms<br />

cause a diffusion generally situated in the UV (these resonances are strongly<br />

damped because of the strong couplings between the atoms in each<br />

molecule, and the effect is thus still noticeable even at much lower frequencies),<br />

whereas the vibrational transitions of molecules cause diffusion<br />

at lower frequencies, and therefore in the IR (these are sharp<br />

resonances, because there is almost no coupling between the elementary<br />

resonators that the molecules constitute, and the band edges are therefore very<br />

steep). Rayleigh diffusion, which is particularly important at high optical<br />

frequencies, corresponds to these atomic resonances. We therefore have an<br />

attenuation factor for a light ray crossing the atmosphere, and<br />

at the same time a substantial source of parasitic light, since light removed<br />