CS224N Problem Set 1 Lukas Biewald April 7 ... - Stanford AI Lab

CS224N Problem Set 1 Lukas Biewald April 7 ... - Stanford AI Lab

CS224N Problem Set 1 Lukas Biewald April 7 ... - Stanford AI Lab

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

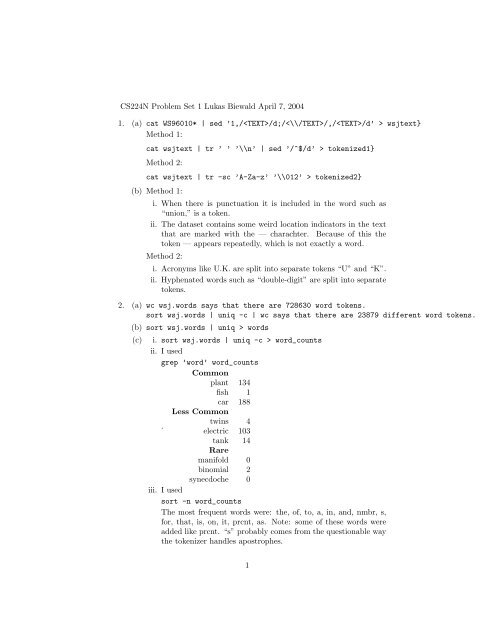

CS224N Problem Set 1, Lukas Biewald, April 7, 2004<br />

1. (a) cat WS96010* | sed '1,//d;//,//d' > wsjtext<br />

Method 1:<br />

cat wsjtext | tr ' ' '\n' | sed '/^$/d' > tokenized1<br />

Method 2:<br />

cat wsjtext | tr -sc 'A-Za-z' '\012' > tokenized2<br />

(b) Method 1:<br />

i. Punctuation attached to a word stays in the token; for example,<br />

“union,” is a single token.<br />

ii. The dataset contains location indicators in the text that are<br />

marked with the — character. As a result, the token — appears<br />

repeatedly even though it is not a word.<br />

Method 2:<br />

i. Acronyms like U.K. are split into separate tokens “U” and “K”.<br />

ii. Hyphenated words such as “double-digit” are split into separate<br />

tokens.<br />
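The differences listed above are easy to see on a small example. The following is a minimal sketch that applies both pipelines to a made-up sentence; the sample text and the function names `tokenize_spaces` and `tokenize_letters` are illustrative and not part of the assignment.

```shell
#!/bin/sh
# Method 1: split on spaces only, then drop empty lines.
tokenize_spaces() {
    tr ' ' '\n' | sed '/^$/d'
}

# Method 2: turn every run of non-letter characters into one newline.
tokenize_letters() {
    tr -sc 'A-Za-z' '\012'
}

# Hypothetical sample sentence, chosen to trigger each problem case.
sample='The U.K. union, facing double-digit losses, voted.'

echo "Method 1:"
printf '%s\n' "$sample" | tokenize_spaces
echo "Method 2:"
printf '%s\n' "$sample" | tokenize_letters
```

On this input, Method 1 keeps "U.K." and "union," as tokens with their punctuation, while Method 2 produces the separate tokens "U", "K", "double", and "digit".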

2. (a) wc wsj.words reports 728630 word tokens.<br />

sort wsj.words | uniq -c | wc reports 23879 distinct word types.<br />

(b) sort wsj.words | uniq > words<br />

(c) i. sort wsj.words | uniq -c > word_counts<br />

ii. I used<br />

grep 'word' word_counts

Common: plant 134, fish 1, car 188<br />

Less Common: twins 4, electric 103, tank 14<br />

Rare: manifold 0, binomial 2, synecdoche 0<br />

iii. I used<br />

sort -n word_counts<br />

The most frequent words were: the, of, to, a, in, and, nmbr, s,<br />

for, that, is, on, it, prcnt, as. Note: some of these tokens, such as<br />

prcnt, were added to the text rather than being original words. The “s”<br />

probably comes from the questionable way the tokenizer handles apostrophes.<br />


iv. tail -n +2 wsj.words > wsj.nextwords<br />

tail -n +3 wsj.words > wsj.thirdwords<br />

paste wsj.words wsj.nextwords > bigrams<br />

paste wsj.words wsj.nextwords wsj.thirdwords > trigrams<br />

sort bigrams | uniq -c | sort -n<br />

sort trigrams | uniq -c | sort -n<br />

Most common bigrams:<br />

the company<br />

to the<br />

on the<br />

in nmbr<br />

u s<br />

nmbr nmbr<br />

for the<br />

dllr million<br />

in the<br />

of the<br />

Most common trigrams:<br />

of the year<br />

the dow jones<br />

in new york<br />

dllr billion in<br />

nmbr to nmbr<br />

the end of<br />

one of the<br />

dllr million in<br />

nmbr nmbr nmbr<br />

the u s<br />
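The counting and shift-and-paste steps used in this question can be tried end to end on a toy input. This is a minimal sketch, not the original run: the file `toy.words` and its contents are made up, and the other `toy.*` file names are placeholders that mirror the `wsj.*` names above.

```shell
#!/bin/sh
# Work in a scratch directory so no real files are touched.
dir=$(mktemp -d)
cd "$dir" || exit 1

# A tiny one-token-per-line file standing in for wsj.words.
printf '%s\n' the cat saw the dog and the cat ran > toy.words

# Tokens are lines; types are distinct lines.
tokens=$(wc -l < toy.words | tr -d ' ')
types=$(sort toy.words | uniq | wc -l | tr -d ' ')
echo "tokens=$tokens types=$types"

# Shift the token list by one and by two positions, then paste the
# columns side by side so each row holds consecutive tokens.
tail -n +2 toy.words > toy.nextwords
tail -n +3 toy.words > toy.thirdwords
paste toy.words toy.nextwords > toy.bigrams
paste toy.words toy.nextwords toy.thirdwords > toy.trigrams

# Count each distinct bigram, most frequent first.
sort toy.bigrams | uniq -c | sort -nr | head -3
```

The toy input has 9 tokens and 6 types, and "the cat" is the only bigram that occurs twice. Because the shifted files are shorter than the original, the last row of the bigram file and the last two rows of the trigram file are incomplete; on a corpus of this size those rows have no visible effect on the counts.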

3. (a) cut -f1 word_counts | sort -nr | nl > freq<br />

(b) It’s hard for me to find a Windows machine in my lab, so I did everything<br />

in R. I hope this is ok.<br />


(c) [Figure: scatter plot of logfreq (vertical axis) against logrank (horizontal axis); both axes run from 0 to 10.]<br />

The linear regression gave an intercept of 14.1 and a slope of -1.4.<br />

The data do show approximately the kind of curvature suggested<br />

by Mandelbrot’s law.
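For reference, the fitted line and the curvature can be written out as follows. This is a sketch under assumptions: the symbols $f$ (frequency), $r$ (rank), $C$, $P$, $\rho$, and $B$ follow the usual textbook statement of Zipf's and Mandelbrot's laws and do not appear in the original answer, and the logarithm base is whichever one was used to compute logfreq and logrank.

```latex
\begin{align*}
% Fitted regression line (intercept 14.1, slope -1.4)
\log f &\approx 14.1 - 1.4 \log r \\
% Zipf's law: a straight line of slope -1 in log-log space
f = \frac{C}{r} \quad &\Longrightarrow \quad \log f = \log C - \log r \\
% Mandelbrot's law: rank shift \rho and exponent B
f = P\,(r + \rho)^{-B} \quad &\Longrightarrow \quad \log f = \log P - B \log(r + \rho)
\end{align*}
```

With $\rho > 0$ the curve flattens at the lowest ranks and approaches a straight line of slope $-B$ once $r \gg \rho$, which is the kind of curvature referred to above.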
