CLASSIFICATION AND PREDICTION - Universität Wien

CLASSIFICATION AND PREDICTION - Universität Wien

CLASSIFICATION AND PREDICTION - Universität Wien

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

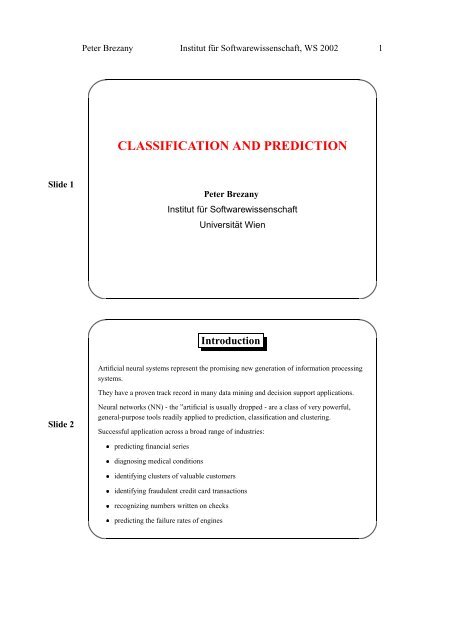

Peter Brezany, Institut für Softwarewissenschaft, WS 2002

CLASSIFICATION AND PREDICTION

Peter Brezany
Institut für Softwarewissenschaft
Universität Wien

Introduction

Artificial neural systems represent a promising new generation of information-processing systems. They have a proven track record in many data mining and decision support applications.

Neural networks (NN; the word "artificial" is usually dropped) are a class of very powerful, general-purpose tools that are readily applied to prediction, classification, and clustering. They have been applied successfully across a broad range of industries, for example:

- predicting financial series
- diagnosing medical conditions
- identifying clusters of valuable customers
- identifying fraudulent credit card transactions
- recognizing numbers written on checks
- predicting the failure rates of engines

Introduction (2)

People are good at generalizing from experience. Computers usually excel at following explicit instructions over and over. NNs bridge this gap by modeling, on a computer, the neural connections in human brains. Their ability to generalize and learn from data mimics our own ability to learn from experience, and this ability is useful for data mining.

Drawback: the result of training an NN is a set of internal weights distributed throughout the network. These weights provide no more insight into why the solution is valid than asking many human experts why a particular decision is the right one. They just know that it is.

A Bit of History

- 1940s (neurologist Warren McCulloch and logician Walter Pitts): the original work on how neurons work, at a time when no digital computers were available.
- 1950s: computer scientists implemented models called perceptrons, based on the work of McCulloch and Pitts. There were some limited successes with perceptrons in the laboratory, but the results were disappointing for general problem solving. One of the reasons was that no powerful computers were available.
- 1970s: the study of NN implementations on computers slowed down drastically.
- 1982: John Hopfield's work on neural networks revived interest in the field. Soon afterwards, backpropagation led to a renaissance in NN research. Backpropagation is a way of training multilayer NNs and was popularized in 1986 by Rumelhart, Hinton, and Williams.
- 1980s: research moved from the labs into the commercial world.

NNs have since been applied in virtually every industrial field.

Real Estate Appraisal

NNs can take over the job of an appraiser who estimates the market value of a home. The appraiser works from a set of features: location (town, country), garage, house style, and many other factors that figure into her mental calculation. She is not applying a formula. Instead, she balances her experience and her knowledge of the sale prices of similar homes. Her knowledge of housing prices is also not static. She is aware of recent sale prices for homes throughout the region and can recognize trends in prices over time, fine-tuning her calculation to fit the latest data.

In 1992, researchers at IBM recognized this as a good problem for NNs. The figure on the next slide illustrates why.

A Neural Network as an Opaque Block

[Figure: inputs (living space, size of garage, age of house, etc.) feed a Neural Network Model, whose output is the appraised value.]

A neural network model calculates the appraised value (the output) from the inputs. The calculation is a complex process that we do not need to understand in order to use the appraised values.

During the first phase, we need to train the network using examples of previous sales. An example from the training set is shown in Tab. 1.

A technical detail: NNs work best when all input and output values lie between 0 and 1. This requires massaging (transforming) all values, both continuous and categorical, into new values between 0 and 1. The training set example with massaged values is shown in Tab. 2.

Tab. 1: Training Set Example

| Feature | Description | Value |
|---|---|---|
| Sales_Price | Sales price of the house | $171,000 |
| Months_Ago | Months since the house was sold | 4 |
| Num_Apartments | Number of dwelling units | 1 |
| Year_Built | Year built | 1923 |
| Plumbing_Fixtures | Number of plumbing fixtures | 9 |
| Heating_Type | Heating system type | B |
| Basement_Garage | Basement garage (number of cars) | 0 |
| Attached_Garage | Attached frame garage area (square feet) | 120 |
| Living_Area | Total living area (square feet) | 1,614 |
| Deck_Area | Deck / open porch area (square feet) | 0 |
| Porch_Area | Enclosed porch area (square feet) | 210 |
| Recroom_Area | Recreation room area (square feet) | 0 |
| Basement_Area | Finished basement area (square feet) | 175 |

Tab. 2: Massaged Values for Training Set Example

| Feature | Range of Values | Original Value | Massaged Value |
|---|---|---|---|
| Sales_Price | $103,000-$250,000 | $171,000 | 0.4626 |
| Months_Ago | 0-23 | 4 | 0.1739 |
| Num_Apartments | 1-3 | 1 | 0.0000 |
| Year_Built | 1850-1986 | 1923 | 0.5328 |
| Plumbing_Fixtures | 5-17 | 9 | 0.3333 |
| Heating_Type | Coded as A or B | B | 1.0000 |
| Basement_Garage | 0-2 | 0 | 0.0000 |
| Attached_Garage | 0-228 | 120 | 0.5263 |
| Living_Area | 714-4185 | 1,614 | 0.2593 |
| Deck_Area | 0-738 | 0 | 0.0000 |
| Porch_Area | 0-452 | 210 | 0.4646 |
| Recroom_Area | 0-672 | 0 | 0.0000 |
| Basement_Area | 0-810 | 175 | 0.2160 |
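The massaged values in Tab. 2 match min-max scaling over the ranges in the second column: (value - minimum) / (maximum - minimum). The two heating types are coded as A = 0 and B = 1. The following Python sketch assumes exactly that. The function names `massage` and `massage_category` are illustrative and are not part of any package.

```python
def massage(value, low, high):
    """Min-max scale a continuous value from the range [low, high] into [0, 1]."""
    return (value - low) / (high - low)

def massage_category(value, categories):
    """Spread the category codes evenly over [0, 1]; with two codes, A -> 0.0, B -> 1.0."""
    return categories.index(value) / (len(categories) - 1)

# (range low, range high, original value) for some continuous features of Tab. 2
ranges = {
    "Sales_Price": (103_000, 250_000, 171_000),
    "Months_Ago": (0, 23, 4),
    "Plumbing_Fixtures": (5, 17, 9),
    "Attached_Garage": (0, 228, 120),
    "Living_Area": (714, 4185, 1614),
    "Porch_Area": (0, 452, 210),
    "Basement_Area": (0, 810, 175),
}

massaged = {name: massage(value, low, high) for name, (low, high, value) in ranges.items()}
massaged["Heating_Type"] = massage_category("B", ["A", "B"])

for name, m in massaged.items():
    print(f"{name:18s} {m:.4f}")
```

Applying the same formula to Year_Built gives (1923 - 1850) / 136 ≈ 0.5368 rather than the 0.5328 listed in Tab. 2. That entry may therefore be based on a slightly different range.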

How to use an NN?

1. Training

The NN is ready to be trained once all the training examples have been massaged. During the training phase, we repeatedly feed the examples in the training set through the NN. The NN compares its predicted output value with the actual sales price and adjusts all its internal weights to improve the prediction.

By going through all the training examples (sometimes many times), the NN calculates a good set of weights. Training is complete when the weights no longer change very much or when the network has gone through the training set a maximum number of times.

2. Test (Evaluation)

We run the network on a test set that it has never seen before. When the performance is satisfactory, we have a neural network model that is ready for use.
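The training loop can be sketched for the simplest possible network: a single sigmoid unit with no hidden layer. This minimal sketch uses both stopping rules from the text. Training ends when the largest weight change in a pass drops below a tolerance, or when a maximum number of passes is reached. The function `train`, its parameters, and the three training examples are hypothetical. The update is the usual gradient step for squared error, which is the same rule the backpropagation algorithm later applies to output units.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def train(examples, n_inputs, learning_rate=0.5, tolerance=1e-4, max_epochs=5000):
    """Train one sigmoid unit on (massaged inputs, massaged target) pairs.

    Stops when the largest weight change in a full pass is below `tolerance`,
    or after `max_epochs` passes through the training set.
    Returns the weights, the bias, and the number of passes made.
    """
    weights = [0.0] * n_inputs
    bias = 0.0
    epoch = 0
    for epoch in range(1, max_epochs + 1):
        largest_change = 0.0
        for inputs, target in examples:
            output = sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)
            # Compare the prediction with the actual value; the step grows with the error
            step = learning_rate * (target - output) * output * (1.0 - output)
            for i, x in enumerate(inputs):
                weights[i] += step * x
                largest_change = max(largest_change, abs(step * x))
            bias += step
            largest_change = max(largest_change, abs(step))
        if largest_change < tolerance:  # the weights no longer change very much
            break
    return weights, bias, epoch

# Hypothetical massaged examples: one input feature and a massaged sales price
examples = [([0.1], 0.2), ([0.5], 0.45), ([0.9], 0.7)]
weights, bias, epochs = train(examples, n_inputs=1)
print(weights, bias, epochs)
```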

How to use an NN? (2)

3. Production runs

The NN model takes descriptive information about a house, suitably massaged, and produces an output.

One problem: the output is a number between 0 and 1, so we need to unmassage the value to turn it back into a sales price. If we get a value like 0.75, we multiply it by the size of the range ($147,000) and then add the base of the range ($103,000) to get an appraised value of $213,250.
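Unmassaging is the inverse of min-max scaling: multiply by the size of the range and add its lower end. A minimal sketch follows. The function name `unmassage` is illustrative.

```python
def unmassage(output, low, high):
    """Map a network output in [0, 1] back to the original range [low, high]."""
    return low + output * (high - low)

# Sales_Price range from Tab. 2: $103,000 to $250,000
print(unmassage(0.75, 103_000, 250_000))  # 213250.0
```

The same conversion turns a network output of 0.49815 into roughly $176,228.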

WARNING

A neural network is only as good as the training set used to generate it. The model is static and must be explicitly updated by adding more recent examples into the training set and retraining the network (or training a new network) in order to keep it up to date and useful.

What Is a Neural Net?

An NN consists of basic units modeled on the principles of biological neurons. These units are connected together as shown in the next figures.

[Figure: four inputs (input 1 to input 4) connected through the network to three outputs (output 1 to output 3).] A neural network can produce multiple output values.

What Is a Neural Net? (2)

[Figure: four inputs connected directly to one output.] A very simple neural network takes four inputs and produces an output. With a sigmoid output unit, such a network computes the same kind of model as the statistical technique called logistic regression.

[Figure: four inputs, a hidden layer, and one output.] This network has a middle layer called the hidden layer. The hidden layer makes the network more powerful by enabling it to recognize more patterns.

[Figure: four inputs, a larger hidden layer, and one output.] Increasing the size of the hidden layer makes the network more powerful but introduces the risk of overfitting. Usually, only one hidden layer is needed.

What Is the Unit of a Neural Network?

Artificial NNs are composed of basic units that model the behavior of biological neurons (see the next figure). The unit combines its inputs into a single output value. This combination is called the unit's transfer function.

The output remains very low until the combined inputs reach a threshold value. Then the unit is activated and the output is high. Small changes in the inputs can have large effects on the output, and, conversely, large changes in the inputs of the unit may have little effect on the output. This property is called nonlinear behavior.

The most common combination function is the weighted sum. Other combination functions are sometimes useful, e.g., MAX, MIN, and the logical AND or OR of the weighted values.

The diagram after the next figure compares three typical activation functions. By far the most common activation function is the sigmoid (logistic) function:

$$f(x) = \frac{1}{1 + e^{-x}}$$
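A single unit can be sketched in a few lines of Python: a weighted-sum combination function followed by the sigmoid activation. The optional `bias` term is an assumption here, since biases are introduced formally only with the backpropagation algorithm. The function names are illustrative.

```python
import math

def sigmoid(x):
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def unit_output(inputs, weights, bias=0.0):
    """Transfer function of one unit: weighted-sum combination, then sigmoid."""
    combined = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(combined)

# Near zero, a small change in the combined input moves the output noticeably...
print(round(sigmoid(-0.5), 3), round(sigmoid(0.5), 3))   # 0.378 0.622
# ...while far from zero, even a large change hardly matters.
print(round(sigmoid(5.0), 4), round(sigmoid(10.0), 4))   # 0.9933 1.0
```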

The Unit of an Artificial Neural Network

[Figure: inputs with weights w1, w2, w3 enter the transfer function, which produces a single output.]

- Each input has its own weight.
- The combination function combines all the inputs into a single value, usually as a weighted summation.
- The activation function calculates the output value from the result of the combination function.
- The result is exactly one output value, usually between 0 and 1.

Three Common Activation Functions

[Figure: the sigmoid (logistic), linear, and hyperbolic tangent (tanh) activation functions, plotted for inputs from -10 to 10 with outputs shown between -1.5 and 1.5.]
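The three activation functions in the figure can be tabulated directly with Python's `math` module. The table shows that the logistic function stays within (0, 1), tanh stays within (-1, 1), and the linear function is unbounded. The two S-shaped curves are closely related: tanh(x) = 2 · logistic(2x) - 1.

```python
import math

def logistic(x):
    return 1.0 / (1.0 + math.exp(-x))

def linear(x):
    return x

def tanh(x):
    return math.tanh(x)

print(f"{'x':>5} {'logistic':>9} {'linear':>7} {'tanh':>8}")
for x in (-10, -5, -1, 0, 1, 5, 10):
    print(f"{x:>5} {logistic(x):9.4f} {linear(x):7.1f} {tanh(x):8.4f}")
```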

The real estate training example in an NN

[Figure: the massaged values of the training example (Num_Apartments, Year_Built, Plumbing_Fixtures, Heating_Type, Basement_Garage, Attached_Garage, Living_Area, Deck_Area, Porch_Area, Recroom_Area, Basement_Area) are fed into the network. Every connection carries a weight, and each unit also receives a constant input. The output unit produces 0.49815, which unmassages to an appraised value of $176,228.]

Multiple Output

Sometimes the output layer has more than one unit. For example, a department store chain wants to predict the likelihood that customers will purchase products from various departments, such as women's apparel, furniture, and entertainment. The stores want to use this information to plan promotions and direct targeted mailings.

[Figure: the inputs last purchase, age, gender, and avg balance feed a network with three output units: propensity to purchase women's apparel, propensity to purchase furniture, and propensity to purchase entertainment.]
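A forward pass through a network with several output units works like the single-output case, except that the output layer yields one value per department. In this sketch, all input values and weights are made up for illustration; a trained network would learn the weights. The hidden layer size of 2 is an arbitrary assumption.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def layer(inputs, weights, biases):
    """One fully connected layer: weights[j] holds the incoming weights of unit j."""
    return [sigmoid(sum(w * x for w, x in zip(w_row, inputs)) + b)
            for w_row, b in zip(weights, biases)]

# Hypothetical massaged inputs: last purchase, age, gender, avg balance
customer = [0.2, 0.45, 1.0, 0.3]

# Hypothetical, untrained weights: 2 hidden units and 3 output units
hidden_w = [[0.5, -0.2, 0.1, 0.8], [-0.3, 0.6, -0.4, 0.2]]
hidden_b = [0.0, 0.1]
output_w = [[1.2, -0.7], [-0.5, 0.9], [0.3, 0.3]]
output_b = [-0.2, 0.0, 0.1]

hidden = layer(customer, hidden_w, hidden_b)
propensities = layer(hidden, output_w, output_b)
for dept, p in zip(["women's apparel", "furniture", "entertainment"], propensities):
    print(f"{dept:16s} {p:.3f}")
```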

How Does the NN Learn Using Backpropagation?

The necessary theoretical background is explained in the next part.

Formal Introduction

A neural network is a set of connected input/output units in which each connection has a weight associated with it.

During the learning phase, the network learns by adjusting the weights so that it can predict the correct class label of the input samples. Neural network learning is also referred to as connectionist learning because of the connections between units.

Neural networks involve long training times and are therefore more suitable for applications where this is feasible. They require a number of parameters that are typically best determined empirically, such as the network topology.

Advantages of neural networks include their high tolerance to noisy data and their ability to classify patterns on which they have not been trained.

The most popular neural network algorithm is the backpropagation algorithm, which performs learning on a multilayer feed-forward neural network.

A Multilayer Feed-Forward Neural Network

An example of such a network is shown in Fig. 1.

The inputs correspond to the attributes measured for each training sample. The inputs are fed simultaneously into a layer of units making up the input layer.

The weighted outputs of the units of the input layer are fed simultaneously to a second layer of “neuronlike” units, known as a hidden layer. The hidden layer's weighted outputs can be input to another hidden layer, and so on. The number of hidden layers is arbitrary, although in practice usually only one is used. The weighted outputs of the last hidden layer are input to the units making up the output layer, which emits the network's prediction for given samples.

The units in the hidden layers and output layer are sometimes referred to as neurodes, due to their symbolic biological basis, or as output units. The network shown in Fig. 1 is a two-layer neural network. The input layer is not counted, since it only passes the inputs on. Similarly, a network containing two hidden layers is called a three-layer neural network, and so on.

A Multilayer Feed-Forward Neural Network (2)

The network is feed-forward in that none of the weights cycles back to an input unit or to an output unit of a previous layer.

It is fully connected in that each unit provides input to each unit in the next forward layer.

A Multilayer Feed-Forward Neural Network (3)

[Figure: an input layer receiving $x_1, x_2, \ldots, x_i$, a hidden layer whose units produce outputs $O_j$, and an output layer whose units produce outputs $O_k$. Weights $w_{ij}$ connect the input layer to the hidden layer, and weights $w_{jk}$ connect the hidden layer to the output layer.]

Figure 1: A multilayer feed-forward neural network. A training sample, $X = (x_1, x_2, \ldots, x_i)$, is fed to the input layer. Weighted connections exist between each pair of adjacent layers, where $w_{ij}$ denotes the weight of the connection between unit $i$ in one layer and unit $j$ in the next layer.

Backpropagation Algorithm

Algorithm: Backpropagation. Neural network learning for classification, using the backpropagation algorithm.

Input: The training samples, samples; the learning rate, $l$; a multilayer feed-forward network, network.

Output: A neural network trained to classify the samples.

Method:

(1) Initialize all weights and biases in network;

(2) while terminating condition is not satisfied {

(3) for each training sample $X$ in samples {

(4) // Propagate the inputs forward:

(5) for each hidden or output layer unit $j$ {

(6) $I_j = \sum_i w_{ij} O_i + \theta_j$; // compute the net input of unit $j$ with respect to the previous layer, $i$

(7) $O_j = \frac{1}{1 + e^{-I_j}}$; } // compute the output of each unit $j$

Backpropagation Algorithm (2)

(8) // Backpropagate the errors:

(9) for each unit $j$ in the output layer

(10) $Err_j = O_j (1 - O_j)(T_j - O_j)$; // compute the error

(11) for each unit $j$ in the hidden layers, from the last to the first hidden layer

(12) $Err_j = O_j (1 - O_j) \sum_k Err_k \, w_{jk}$; // compute the error with respect to the next higher layer, $k$

(13) for each weight $w_{ij}$ in network {

(14) $\Delta w_{ij} = (l) \, Err_j \, O_i$; // weight increment

(15) $w_{ij} = w_{ij} + \Delta w_{ij}$; } // weight update

(16) for each bias $\theta_j$ in network {

(17) $\Delta \theta_j = (l) \, Err_j$; // bias increment

(18) $\theta_j = \theta_j + \Delta \theta_j$; } // bias update

(19) }}
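The algorithm translates almost line by line into Python. This minimal sketch relies on a few assumptions that the pseudocode does not fix:

- Weights and biases start uniformly in [-0.5, 0.5].
- The terminating condition is a fixed number of epochs.
- `w[i][j]` stores $w_{ij}$ for the connection from unit i of the previous layer to unit j.

All function names are hypothetical.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def init_network(layer_sizes, rng, limit=0.5):
    """Step (1): small random weights and biases in [-limit, limit].

    layer_sizes lists the units per layer, input layer first, e.g. [3, 2, 1].
    Each non-input layer is stored as (w, theta), where w[i][j] is the weight
    w_ij from unit i of the previous layer to unit j, and theta[j] is the bias.
    """
    network = []
    for n_prev, n_units in zip(layer_sizes, layer_sizes[1:]):
        w = [[rng.uniform(-limit, limit) for _ in range(n_units)] for _ in range(n_prev)]
        theta = [rng.uniform(-limit, limit) for _ in range(n_units)]
        network.append((w, theta))
    return network

def forward(network, sample):
    """Steps (4)-(7): return the outputs O of every layer, input layer first."""
    outputs = [list(sample)]
    for w, theta in network:
        prev = outputs[-1]
        net = [sum(w[i][j] * prev[i] for i in range(len(prev))) + theta[j]
               for j in range(len(theta))]                     # I_j
        outputs.append([sigmoid(i_j) for i_j in net])          # O_j
    return outputs

def backprop_step(network, sample, target, learning_rate):
    """Steps (4)-(18) for one training sample X with target vector T."""
    outputs = forward(network, sample)
    # Steps (9)-(10): Err_j = O_j (1 - O_j)(T_j - O_j) for each output unit
    o = outputs[-1]
    errors = [[o[j] * (1 - o[j]) * (target[j] - o[j]) for j in range(len(o))]]
    # Steps (11)-(12): hidden layers, from the last to the first
    for layer in range(len(network) - 1, 0, -1):
        w_next = network[layer][0]      # weights w_jk into the next higher layer
        o = outputs[layer]              # outputs O_j of this hidden layer
        err_next = errors[0]
        errors.insert(0, [o[j] * (1 - o[j]) *
                          sum(err_next[k] * w_next[j][k] for k in range(len(err_next)))
                          for j in range(len(o))])
    # Steps (13)-(18): w_ij += l * Err_j * O_i and theta_j += l * Err_j
    for layer, (w, theta) in enumerate(network):
        prev = outputs[layer]
        for j, err_j in enumerate(errors[layer]):
            for i, o_i in enumerate(prev):
                w[i][j] += learning_rate * err_j * o_i
            theta[j] += learning_rate * err_j

def train(network, samples, learning_rate, epochs):
    """Steps (2)-(3); the terminating condition here is a fixed number of epochs."""
    for _ in range(epochs):
        for sample, target in samples:
            backprop_step(network, sample, target, learning_rate)

# Usage: learn the logical OR of two inputs with a 2-2-1 network
net = init_network([2, 2, 1], random.Random(0))
or_samples = [([0, 0], [0]), ([0, 1], [1]), ([1, 0], [1]), ([1, 1], [1])]
train(net, or_samples, learning_rate=0.9, epochs=2000)
for x, t in or_samples:
    print(x, t, round(forward(net, x)[-1][0], 3))
```

The example on the following slides can serve as a check. Load its initial weights and biases into this structure and call `backprop_step` once with X = (1, 0, 1), T = 1, and a learning rate of 0.9. This reproduces the updated values listed there; for instance, $w_{46}$ becomes about -0.261.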

A hidden or output layer unit j

[Figure: the inputs $x_0, x_1, \ldots, x_n$ (outputs from the previous layer) are multiplied by the weights $w_{0j}, w_{1j}, \ldots, w_{nj}$. The weighted sum, together with the bias $\theta_j$, is passed through the activation function $f$ to produce the output.]

Figure 2: A hidden or output layer unit $j$. The inputs to unit $j$ are outputs from the previous layer. These are multiplied by their corresponding weights to form a weighted sum, which is added to the bias associated with unit $j$. A nonlinear activation function is applied to the resulting net input.

Backpropagation Algorithm - Additional Explanation

The weights are initialized to small random numbers (e.g., ranging from -1.0 to 1.0, or -0.5 to 0.5).

Each unit has a bias associated with it. The bias acts as a threshold in that it serves to vary the activity of the unit.

Error of an output unit $j$: $O_j$ is the actual output of unit $j$, and $T_j$ is the true output, based on the known class label of the given training sample.

To compute the error of a hidden layer unit $j$, the weighted sum of the errors of the units connected to unit $j$ in the next layer is considered.

$l$ is the learning rate, a constant typically having a value between 0.0 and 1.0. A rule of thumb is to set $l$ to $1/t$, where $t$ is the number of iterations through the training set so far.

Example Calculations for Backpropagation Learning

The figure below shows an NN. Let the learning rate be 0.9. The initial weight and bias values of the network are given in the table on the next slide, along with the first training sample, $X = (1, 0, 1)$, whose class label is 1.

[Figure: an example of a multilayer feed-forward neural network. Input units 1, 2, and 3 receive $x_1$, $x_2$, and $x_3$. They are connected to hidden units 4 and 5 by the weights $w_{14}$, $w_{15}$, $w_{24}$, $w_{25}$, $w_{34}$, $w_{35}$. Hidden units 4 and 5 are connected to output unit 6 by $w_{46}$ and $w_{56}$.]

Example (2)

Initial input, weight, and bias values:

| $x_1$ | $x_2$ | $x_3$ | $w_{14}$ | $w_{15}$ | $w_{24}$ | $w_{25}$ | $w_{34}$ | $w_{35}$ | $w_{46}$ | $w_{56}$ | $\theta_4$ | $\theta_5$ | $\theta_6$ |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0 | 1 | 0.2 | -0.3 | 0.4 | 0.1 | -0.5 | 0.2 | -0.3 | -0.2 | -0.4 | 0.2 | 0.1 |

The first training sample $X$ is fed into the network, and the net input and output of each unit are computed. These values are shown in the table below.

| Unit $j$ | Net input, $I_j$ | Output, $O_j$ |
|---|---|---|
| 4 | $0.2 + 0 - 0.5 - 0.4 = -0.7$ | $1/(1 + e^{0.7}) = 0.332$ |
| 5 | $-0.3 + 0 + 0.2 + 0.2 = 0.1$ | $1/(1 + e^{-0.1}) = 0.525$ |
| 6 | $(-0.3)(0.332) - (0.2)(0.525) + 0.1 = -0.105$ | $1/(1 + e^{0.105}) = 0.474$ |

Example (2)

The error of each unit is computed and propagated backwards. The error values are shown in the table below.

| Unit $j$ | $Err_j$ |
|---|---|
| 6 | $(0.474)(1 - 0.474)(1 - 0.474) = 0.1311$ |
| 5 | $(0.525)(1 - 0.525)(0.1311)(-0.2) = -0.0065$ |
| 4 | $(0.332)(1 - 0.332)(0.1311)(-0.3) = -0.0087$ |

The weight and bias updates are shown in the table on the next slide.

Example (3)

Calculations of weight and bias updating:

| Weight or bias | New value |
|---|---|
| $w_{46}$ | $-0.3 + (0.9)(0.1311)(0.332) = -0.261$ |
| $w_{56}$ | $-0.2 + (0.9)(0.1311)(0.525) = -0.138$ |
| $w_{14}$ | $0.2 + (0.9)(-0.0087)(1) = 0.192$ |
| $w_{15}$ | $-0.3 + (0.9)(-0.0065)(1) = -0.306$ |
| $w_{24}$ | $0.4 + (0.9)(-0.0087)(0) = 0.4$ |
| $w_{25}$ | $0.1 + (0.9)(-0.0065)(0) = 0.1$ |
| $w_{34}$ | $-0.5 + (0.9)(-0.0087)(1) = -0.508$ |
| $w_{35}$ | $0.2 + (0.9)(-0.0065)(1) = 0.194$ |
| $\theta_6$ | $0.1 + (0.9)(0.1311) = 0.218$ |
| $\theta_5$ | $0.2 + (0.9)(-0.0065) = 0.194$ |
| $\theta_4$ | $-0.4 + (0.9)(-0.0087) = -0.408$ |
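The whole worked example can be replayed in a few lines of Python. This sketch hard-codes the initial values from the example and rounds nothing until the final print. As a result, $Err_6$ comes out as 0.1312 instead of 0.1311. The updated weights and biases still agree with the table to three decimals. The variable names simply mirror the symbols on the slides.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

rate = 0.9                          # learning rate l
x1, x2, x3, T = 1, 0, 1, 1          # training sample X and its class label
w14, w15, w24, w25, w34, w35 = 0.2, -0.3, 0.4, 0.1, -0.5, 0.2
w46, w56 = -0.3, -0.2
theta4, theta5, theta6 = -0.4, 0.2, 0.1

# Forward pass: net input and output of units 4, 5, 6
O4 = sigmoid(w14 * x1 + w24 * x2 + w34 * x3 + theta4)
O5 = sigmoid(w15 * x1 + w25 * x2 + w35 * x3 + theta5)
O6 = sigmoid(w46 * O4 + w56 * O5 + theta6)

# Backpropagated errors, computed with the old weights w46 and w56
err6 = O6 * (1 - O6) * (T - O6)
err5 = O5 * (1 - O5) * err6 * w56
err4 = O4 * (1 - O4) * err6 * w46

# Weight and bias updates: w_ij += l * Err_j * O_i, theta_j += l * Err_j
w46 += rate * err6 * O4
w56 += rate * err6 * O5
w14 += rate * err4 * x1
w15 += rate * err5 * x1
w24 += rate * err4 * x2
w25 += rate * err5 * x2
w34 += rate * err4 * x3
w35 += rate * err5 * x3
theta6 += rate * err6
theta5 += rate * err5
theta4 += rate * err4

print(f"O4={O4:.3f} O5={O5:.3f} O6={O6:.3f}")
print(f"Err6={err6:.4f} Err5={err5:.4f} Err4={err4:.4f}")
print(f"w46={w46:.3f} w56={w56:.3f} theta6={theta6:.3f}")
```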

Estimating Classifier Accuracy

[Figure 3: the data are split into a training set, used to derive the classifier, and a test set, used to estimate the classifier's accuracy.]
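The holdout method in Figure 3 can be sketched as follows. The slide does not fix the proportions, so the sketch assumes a common choice: two thirds of the data for training and one third for testing. The data set and the stand-in classifier are hypothetical. In practice, `classify` would be a network trained on `train_set`.

```python
import random

def holdout_split(data, train_fraction, rng):
    """Randomly partition the data into a training set and a test set."""
    shuffled = list(data)
    rng.shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]

def accuracy(classify, test_set):
    """Fraction of test samples whose predicted label equals the true label."""
    correct = sum(1 for sample, label in test_set if classify(sample) == label)
    return correct / len(test_set)

# Hypothetical labelled data: the label is 1 when the single feature exceeds 0.5
data = [([i / 30], int(i / 30 > 0.5)) for i in range(30)]
train_set, test_set = holdout_split(data, train_fraction=2 / 3, rng=random.Random(42))

def classify(sample):
    """Stand-in for a classifier derived from train_set."""
    return int(sample[0] > 0.5)

print(len(train_set), len(test_set), accuracy(classify, test_set))
```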

Neural Networks for Time Series

In many data mining problems, the data naturally fall into a time series, e.g., the price of IBM stock or the daily value of the Swiss Franc to U.S. Dollar exchange rate.

Someone who is able to predict the next value, or even whether the series is heading up or down, has a tremendous advantage over other investors.

NNs are easily adapted for time-series analysis; the next figure illustrates how this is done. The network is trained on the time-series data, starting at the oldest point in the data. The training then moves to the second-oldest point, and the oldest point moves to the next set of units in the input layer (the historical units), and so on. The network trains like a feed-forward backpropagation network, trying to predict the next value in the series at each step.

Neural Networks for Time Series (2)

[Figure: the input layer holds value 1 and value 2 at times t, t-1, and t-2; the older values sit in historical units, one time lag apart. These inputs feed a hidden layer, whose output is the prediction of value 1 at time t+1.]

Neural Networks for Time Series (3)

Notice that the time-series network is not limited to data from just a single time series; it can take multiple inputs. For instance, suppose we were trying to predict the Swiss Franc to U.S. Dollar exchange rate. We might then include other time-series information, such as the U.S. Dollar to Deutsche Mark exchange rate, the closing value of the stock exchange, etc.

The number of historical units controls the length of the patterns that the network can recognize. For instance, suppose a network predicts the daily closing price of a favorite stock and keeps 10 historical units. It can then recognize patterns that occur within two-week periods (10 trading days).

Neural Networks for Time Series (4)

Actually, we can get the same effect as a time-series NN using a regular feed-forward backpropagation network by modifying the input data.

Say that we have the time series shown in the table below, with 10 data elements, and we are interested in two features: the day of the week and the closing price.

| Data Element | Day-of-Week | Closing Price |
|---|---|---|
| 1 | 1 | $40.25 |
| 2 | 2 | $41.00 |
| 3 | 3 | $39.25 |
| 4 | 4 | $39.75 |
| 5 | 5 | $40.50 |
| 6 | 1 | $40.50 |
| 7 | 2 | $40.75 |
| 8 | 3 | $41.25 |
| 9 | 4 | $42.00 |
| 10 | 5 | $41.50 |

Neural Networks for Time Series (5)

To create a time series with a time lag of three, we just add new features for the previous values, as in the table below. This data can now be input into a feed-forward backpropagation network without any special support for time series.

| Data Element | Day-of-Week | Closing Price | Previous Closing Price | Previous-1 Closing Price |
|---|---|---|---|---|
| 1 | 1 | $40.25 | | |
| 2 | 2 | $41.00 | $40.25 | |
| 3 | 3 | $39.25 | $41.00 | $40.25 |
| 4 | 4 | $39.75 | $39.25 | $41.00 |
| 5 | 5 | $40.50 | $39.75 | $39.25 |
| 6 | 1 | $40.50 | $40.50 | $39.75 |
| 7 | 2 | $40.75 | $40.50 | $40.50 |
| 8 | 3 | $41.25 | $40.75 | $40.50 |
| 9 | 4 | $42.00 | $41.25 | $40.75 |
| 10 | 5 | $41.50 | $42.00 | $41.25 |
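Building the lagged columns is a simple shift of the closing-price series. A minimal sketch follows; the function name is illustrative, and missing earlier values are marked with `None`. In practice, rows with missing values would be dropped or filled before training. All values would also be massaged into [0, 1] first.

```python
def add_lag_features(prices, lags=2):
    """Turn a price series into rows [current, previous, previous-1, ...].

    Rows at the start of the series, where earlier values are not
    available, get None for the missing lags.
    """
    rows = []
    for t, price in enumerate(prices):
        lagged = [prices[t - k] if t - k >= 0 else None for k in range(1, lags + 1)]
        rows.append([price] + lagged)
    return rows

closing = [40.25, 41.00, 39.25, 39.75, 40.50, 40.50, 40.75, 41.25, 42.00, 41.50]
day_of_week = [1, 2, 3, 4, 5, 1, 2, 3, 4, 5]

for element, (day, row) in enumerate(zip(day_of_week, add_lag_features(closing)), start=1):
    print(element, day, row)
```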

Heuristics for Using Neural Networks

Even with sophisticated NN packages, getting the best results from an NN takes some effort.

1. The number of units in the hidden layer: probably the biggest decision

The more units, the more patterns the network can recognize. However, a very large hidden layer might end up memorizing the training set instead of generalizing from it. In this case, more is not better.

Fortunately, we can detect when a network is overtrained. If the network performs very well on the training set but much worse on the test set, this indicates that it has memorized the training set.

How large should the hidden layer be? It should never be more than twice as large as the input layer. A good place to start is to make the hidden layer the same size as the input layer. If the network is overtraining, reduce the size of the layer; if it is not sufficiently accurate, increase its size. When using a network for classification, the network should start with one hidden unit for each class.

Heuristics for Using Neural Networks (2)

2. The size of the training set

The training set must be sufficiently large to cover the ranges of inputs available for each feature. In addition, we want several training examples for each weight in the network. For a network with $n$ input units, $h$ hidden units, and 1 output, there are $h(n+1) + h + 1$ weights in the network. Each hidden unit has $n$ input weights plus a bias, and the output unit has $h$ weights plus a bias. We want at least 5 to 10 examples in the training set for each weight.
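The weight count and the rule of 5 to 10 examples per weight are easy to turn into a quick sizing check. A minimal sketch follows, with illustrative function names. The usage line assumes the 12 features of Tab. 2 other than Sales_Price as inputs. Following the first heuristic, it also assumes a hidden layer of the same size.

```python
def count_weights(n_inputs, n_hidden):
    """Weights (including biases) of a network with one hidden layer and one output unit."""
    hidden = n_hidden * (n_inputs + 1)   # n input weights + 1 bias per hidden unit
    output = n_hidden + 1                # h weights + 1 bias for the output unit
    return hidden + output

def training_set_size(n_inputs, n_hidden, per_weight=(5, 10)):
    """Range of training-set sizes suggested by the 5-10 examples per weight rule."""
    w = count_weights(n_inputs, n_hidden)
    return w * per_weight[0], w * per_weight[1]

print(count_weights(12, 12), training_set_size(12, 12))  # 169 (845, 1690)
```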

3. The learning rate

Initially, the learning rate should be set high to make large adjustments to the weights. As training proceeds, the learning rate should decrease in order to fine-tune the network.
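One simple schedule that starts high and then decreases is the rule of thumb $l = 1/t$ given with the backpropagation algorithm, where t counts passes through the training set. A minimal sketch follows. The `initial` scaling factor is an assumption added for flexibility; with `initial=1.0` it is exactly the $1/t$ rule.

```python
def learning_rate(t, initial=0.9):
    """Decaying learning rate: starts at `initial` and shrinks like 1/t.

    t counts passes through the training set, starting at 1.
    """
    return initial / t

print([round(learning_rate(t, 1.0), 3) for t in range(1, 6)])  # [1.0, 0.5, 0.333, 0.25, 0.2]
```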