The Next Big Idea 10 concepts that could - University of Toronto ...

Life on Campus

A Neural Network for a New Millennium

Canada’s first Google fellow, Ilya Sutskever, is making breakthroughs in computer science

Photo: courtesy of Ilya Sutskever

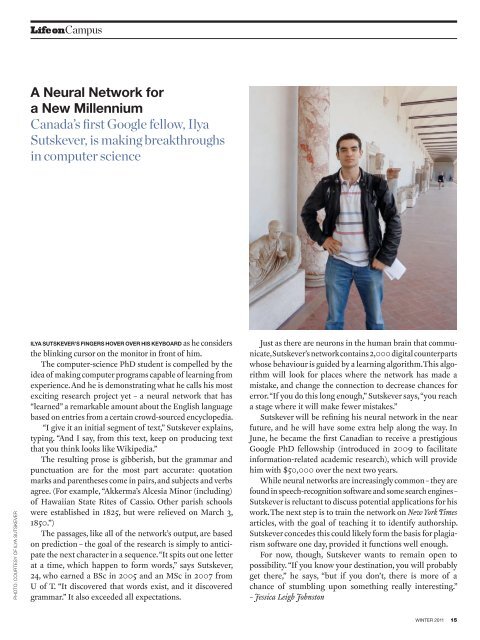

Ilya Sutskever’s fingers hover over his keyboard as he considers the blinking cursor on the monitor in front of him. The computer-science PhD student is compelled by the idea of making computer programs capable of learning from experience. And he is demonstrating what he calls his most exciting research project yet – a neural network that has “learned” a remarkable amount about the English language based on entries from a certain crowd-sourced encyclopedia.

“I give it an initial segment of text,” Sutskever explains, typing. “And I say, from this text, keep on producing text that you think looks like Wikipedia.”

The resulting prose is gibberish, but the grammar and punctuation are for the most part accurate: quotation marks and parentheses come in pairs, and subjects and verbs agree. (For example, “Akkerma’s Alcesia Minor (including) of Hawaiian State Rites of Cassio. Other parish schools were established in 1825, but were relieved on March 3, 1850.”)

The passages, like all of the network’s output, are based on prediction – the goal of the research is simply to anticipate the next character in a sequence. “It spits out one letter at a time, which happen to form words,” says Sutskever, 24, who earned a BSc in 2005 and an MSc in 2007 from U of T. “It discovered that words exist, and it discovered grammar.” It also exceeded all expectations.
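
The one-character-at-a-time idea can be illustrated with something far simpler than a neural network. The sketch below is not Sutskever’s model: it swaps in a plain character-pair (bigram) counter, and the toy corpus, function names and seed text are all hypothetical, chosen only to show how text can be extended by repeatedly predicting the next character.

```python
import random
from collections import Counter, defaultdict


def train_bigram_counts(text):
    """Count how often each character follows each other character."""
    counts = defaultdict(Counter)
    for current, following in zip(text, text[1:]):
        counts[current][following] += 1
    return counts


def generate(counts, seed, length, rng):
    """Extend a non-empty `seed` one character at a time by sampling from the counts."""
    out = list(seed)
    for _ in range(length):
        options = counts.get(out[-1])
        if not options:  # the last character was never followed by anything
            break
        chars = list(options.keys())
        weights = list(options.values())
        out.append(rng.choices(chars, weights=weights, k=1)[0])
    return "".join(out)


# Hypothetical toy corpus; the article's network was trained on Wikipedia text.
corpus = "the cat sat on the mat. the dog sat on the log. "
counts = train_bigram_counts(corpus)
sample = generate(counts, seed="th", length=40, rng=random.Random(0))
print(sample)
```

A counter like this only ever looks one character back, so it cannot pair up parentheses or make subjects and verbs agree; a model needs to carry much more context to produce the kind of output described above.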

Just as there are neurons in the human brain that communicate, Sutskever’s network contains 2,000 digital counterparts whose behaviour is guided by a learning algorithm. The algorithm looks for places where the network has made a mistake and adjusts the connections to reduce the chance of error. “If you do this long enough,” Sutskever says, “you reach a stage where it will make fewer mistakes.”
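
The “find the mistake, adjust the connections” loop can be sketched in a few lines. This is an assumption-laden illustration, not the algorithm or the 2,000-unit network from the article: it uses ordinary gradient descent on a single table of weights that scores which character follows which, and the corpus, learning rate and number of passes are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def train(text, epochs=30, learning_rate=0.5):
    """Learn next-character weights by repeatedly correcting prediction errors."""
    vocab = sorted(set(text))
    index = {ch: i for i, ch in enumerate(vocab)}
    n = len(vocab)
    # weights[i][j]: score for character j following character i (the "connections").
    weights = [[0.0] * n for _ in range(n)]
    pairs = [(index[a], index[b]) for a, b in zip(text, text[1:])]
    losses = []
    for _ in range(epochs):
        total_loss = 0.0
        for cur, nxt in pairs:
            probs = softmax(weights[cur])
            total_loss += -math.log(probs[nxt])
            # Error signal: predicted probability minus the right answer
            # (1 for the character that actually came next, 0 for the rest).
            for j in range(n):
                error = probs[j] - (1.0 if j == nxt else 0.0)
                weights[cur][j] -= learning_rate * error
        losses.append(total_loss / len(pairs))
    return losses


# Hypothetical toy corpus, used only to watch the error shrink.
corpus = "the cat sat on the mat. the dog sat on the log. "
losses = train(corpus)
print(f"average loss, first pass: {losses[0]:.3f}")
print(f"average loss, last pass:  {losses[-1]:.3f}")
```

Each pass over the text nudges the weights away from wrong guesses, so the average loss on the last pass should be lower than on the first, which is the “fewer mistakes” effect in miniature.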

Sutskever will be refining his neural network in the near future, and he will have some extra help along the way. In June, he became the first Canadian to receive a prestigious Google PhD fellowship (introduced in 2009 to facilitate information-related academic research), which will provide him with $50,000 over the next two years.

While neural networks are increasingly common – they are found in speech-recognition software and some search engines – Sutskever is reluctant to discuss potential applications for his work. The next step is to train the network on New York Times articles, with the goal of teaching it to identify authorship. Sutskever concedes this could one day form the basis for plagiarism-detection software, provided it functions well enough.

For now, though, Sutskever wants to remain open to possibility. “If you know your destination, you will probably get there,” he says, “but if you don’t, there is more of a chance of stumbling upon something really interesting.”

– Jessica Leigh Johnston<br />

Winter 2011